- Re: Retrieval of routing information and interfaces

02-10-2022 02:27 AM

Hello,

I currently have 3 APICs and 70 leafs in my infrastructure. I need to export routing information and interfaces to a text file.

To put it simply, how do I run the equivalent of the old "show ip route" and "show ip interface" on ACI?

On which equipment should I perform this request (APIC, Tenant, Leaf)?

Is it possible to do this through Ansible and the cisco.aci collection?

Do you have a code example?

Thanks in advance

Valentino


Accepted Solutions


02-21-2022 08:40 PM - edited 02-21-2022 08:40 PM

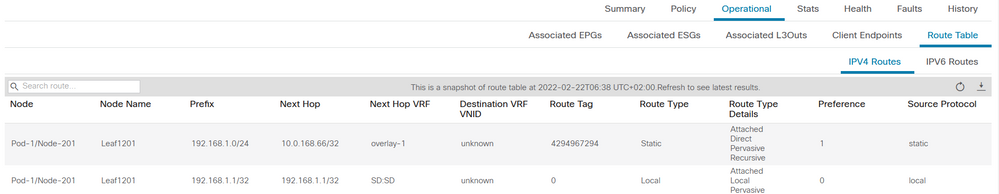

in the newer version of ACI (I have 5.2.2f) it looks like you can see the routing table, per VRF, in the GUI, and it also shows the nodes where the route is present: Tenant-name -> VRF-name -> Operational -> Route table:

Cheers,

Sergiu


02-10-2022 05:53 AM

Hi @valentik

Remember that behind the "ACI" cloud, the Nexus 9000 switches are still L3 switches, running an operating system fairly similar to the original NX-OS.

In other words, you can attach to the leaf you are interested in and run the following commands to check the routing info:

show vrf

show ip route vrf <VRF-NAME>

Note: you will see the VRF name listed in the following format: tenant-name:vrf-name. This is the format you need to use in the show ip route command.

Stay safe,

Sergiu


02-12-2022 01:08 AM

Hi @valentik ,

My friend @Sergiu.Daniluk is absolutely on the right track, but he didn't mention that you don't need to log into each of the 70 leaf switches to get the output of show ip route vrf <VRF-NAME>.

Instead, you can issue the same command from the APIC using the fabric <id> <command> construct.

So if your leaves had IDs of, say, 2101-2170, you could get ALL the routes with a single command issued from any APIC:

fabric 2101-2170 show ip route vrf all

BUT be ready for a lot of output. I issued the command on our lab with just two switches (see below) and it's over 900 lines long! You'd then probably have to reorganise it by VRF. If you wanted just EXTERNAL routes (learned from, say, OSPF), the result would be a lot smaller. So to show just OSPF-learned routes, use:

fabric 2101-2170 show ip route ospf vrf all

So TBH, I think you'd be better off either writing a Python script or (as you suggested) learning a little Ansible to achieve your result.

I thought about using BASH - but it didn't work. The following command (on the APIC) collects a list of the VRFs in the correct format:

apic1# vrfList=$(moquery -c fvCtx | grep ^dn | cut -c 31- | sed 's#/ctx-#:#')

apic1# echo $vrfList

mgmt:oob

common:SharedServices_VRF

infra:ave-ctrl

common:default

common:copy

infra:overlay-1

mgmt:inb

Tenant06:Production_VRF

Lab:Lab

<...snip...>
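For anyone who would rather do the same DN-to-name conversion in a script, here is a minimal Python sketch of what the cut/sed pipeline above does. It assumes the moquery output contains lines of the form "dn : uni/tn-<tenant>/ctx-<vrf>", which is the usual fvCtx DN layout; the function names are made up for illustration.

```python
import re
from typing import List

# Assumed fvCtx DN layout: uni/tn-<tenant>/ctx-<vrf>
_DN_PATTERN = re.compile(r"^uni/tn-(?P<tenant>[^/]+)/ctx-(?P<vrf>[^/]+)$")


def vrf_name_from_dn(dn: str) -> str:
    """Convert an fvCtx DN into the tenant:vrf form used on the leaf."""
    match = _DN_PATTERN.match(dn.strip())
    if match is None:
        raise ValueError(f"not an fvCtx DN: {dn!r}")
    return f"{match.group('tenant')}:{match.group('vrf')}"


def vrf_names(moquery_output: str) -> List[str]:
    """Collect tenant:vrf names from the 'dn' lines of moquery text output."""
    names = []
    for line in moquery_output.splitlines():
        # Split on the first colon only: "dn   : uni/tn-x/ctx-y"
        key, _, value = line.partition(":")
        if key.strip() == "dn":
            names.append(vrf_name_from_dn(value))
    return names
```

Calling vrf_names() on the saved text of moquery -c fvCtx should give the same kind of list as the echo $vrfList output above, without depending on a fixed column offset.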

But my attempt to feed this into the fabric <id> <command> format failed:

apic1# for v in $vrfList; do fabric 2201-2202 show ip route vrf $v; done

Invalid return character or leading space in header: APIC-Client

FYI, here's a trimmed version of the output of fabric 2201-2202 show ip route vrf all on our lab:

apic1# fabric 2201-2202 show ip route vrf all

----------------------------------------------------------------

Node 2201 (Leaf2201)

----------------------------------------------------------------

IP Route Table for VRF "overlay-1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%' in via output denotes VRF

0.0.0.0/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/12], 08:11:14, isis-isis_infra, isis-l1-ext

10.2.0.0/27, ubest/mbest: 1/0, attached, direct

*via 10.2.0.30, vlan8, [0/0], 1d16h, direct

10.2.0.30/32, ubest/mbest: 1/0, attached

*via 10.2.0.30, vlan8, [0/0], 1d16h, local, local

10.2.0.32/32, ubest/mbest: 2/0, attached, direct

*via 10.2.0.32, lo1023, [0/0], 1d16h, local, local

*via 10.2.0.32, lo1023, [0/0], 1d16h, direct

10.2.0.33/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.0.34/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.0.35/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.0.64/32, ubest/mbest: 2/0, attached, direct

*via 10.2.0.64, lo1, [0/0], 1d16h, local, local

*via 10.2.0.64, lo1, [0/0], 1d16h, direct

10.2.0.65/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.0.66/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.0.67/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.16.64/32, ubest/mbest: 2/0, attached, direct

*via 10.2.16.64, lo0, [0/0], 1d16h, local, local

*via 10.2.16.64, lo0, [0/0], 1d16h, direct

10.2.16.65/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.16.66/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/3], 08:11:14, isis-isis_infra, isis-l1-int

10.2.184.65/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.184.66/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.2.184.67/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.3.2.0/24, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/11], 1d16h, isis-isis_infra, isis-l1-ext

10.3.8.11/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/64], 1d16h, isis-isis_infra, isis-l1-ext

10.3.8.12/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/64], 1d16h, isis-isis_infra, isis-l1-ext

10.3.9.2/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.3.12.225/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

10.3.12.226/32, ubest/mbest: 1/0

*via 10.2.16.65, eth1/49.86, [115/2], 1d16h, isis-isis_infra, isis-l1-int

<snip about 200 lines>

IP Route Table for VRF "Tenant07:Production_VRF"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%' in via output denotes VRF

10.200.0.5/32, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.2.0.65%overlay-1, [1/0], 16:56:38, static, rwVnid: vxlan-2523136

10.207.11.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.2.0.65%overlay-1, [1/0], 16:56:38, static, tag 4294967294

10.207.11.1/32, ubest/mbest: 1/0, attached, pervasive

*via 10.207.11.1, vlan37, [0/0], 16:56:38, local, local

10.207.12.0/24, ubest/mbest: 1/0, attached, direct, pervasive

*via 10.2.0.65%overlay-1, [1/0], 15:49:01, static, tag 4294967294

10.207.12.1/32, ubest/mbest: 1/0, attached, pervasive

*via 10.207.12.1, vlan97, [0/0], 15:49:01, local, local

<snip about 200 lines >

----------------------------------------------------------------

Node 2202 (Leaf2202)

----------------------------------------------------------------

IP Route Table for VRF "overlay-1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%' in via output denotes VRF

0.0.0.0/32, ubest/mbest: 1/0

*via 10.2.0.1, vlan8, [1/0], 08:24:48, static

<snip about 400 lines>
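To give an idea of the "reorganise it by VRF" step, here is a rough Python sketch that splits saved output like the above into one table per node and VRF. It only relies on the line shapes visible in this output ("Node <id> (<name>)", "IP Route Table for VRF "<name>"", a prefix line, then "*via" lines) and handles IPv4 prefixes only; the function name and the returned structure are my own choices, not anything ACI provides.

```python
import re
from collections import defaultdict

NODE_RE = re.compile(r"^\s*Node\s+(\d+)\s+\((.+)\)\s*$")
VRF_RE = re.compile(r'^\s*IP Route Table for VRF "(.+)"\s*$')
PREFIX_RE = re.compile(r"^\s*(\d+\.\d+\.\d+\.\d+/\d+),")
VIA_RE = re.compile(r"^\s*\*?via\s+(.+)$")


def parse_fabric_routes(text):
    """Return {(node_id, vrf): {prefix: [next-hop descriptions]}}."""
    tables = defaultdict(dict)
    node = vrf = prefix = None
    for line in text.splitlines():
        m = NODE_RE.match(line)
        if m:
            # A new node banner resets the VRF and prefix context
            node, vrf, prefix = m.group(1), None, None
            continue
        m = VRF_RE.match(line)
        if m:
            vrf, prefix = m.group(1), None
            continue
        if node is None or vrf is None:
            continue  # legend lines, separators, snips
        m = PREFIX_RE.match(line)
        if m:
            prefix = m.group(1)
            tables[(node, vrf)][prefix] = []
            continue
        m = VIA_RE.match(line)
        if m and prefix is not None:
            tables[(node, vrf)][prefix].append(m.group(1).strip())
    return dict(tables)
```

With the result keyed by (node, VRF), writing one text file per VRF or filtering to the border leafs becomes a simple loop over the dictionary.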


02-13-2022 08:38 PM

I think the reason I didn't put "show ip route vrf X" in the context of 70 leafs is that you will almost never need to check the routing table on all 70 leafs. 99% of the time you check the routing table on the Border Leafs (where the L3Out is configured) and, if the config is correct, the prefixes should propagate to the appropriate compute leafs via MP-BGP.

On the other hand, yes, you can check the routing table on multiple leafs at once if necessary (during troubleshooting, for example) using the commands described so clearly by Chris.

Cheers,

Sergiu


03-14-2022 07:43 AM

Hello,

I did some research on interfaces.

The information I need to collect (export) for each interface is in this format:

L3OUT_COMMON_TO_MAN_L3/topology/pod-2/node-2003/eth1/1/vlan-212, 10.56.239.1, 255.255.255.0, common/VRF_ONE

L3OUT_COMMON_TO_MAN_L3/topology/pod-2/node-2003/eth1/1/vlan-212 : Tenant, L3Outs, Logical Node Profile, Logical Interface Profile, Routed Sub-Interface

10.56.239.1 : IP Address

255.255.255.0 : Netmask (calculated)

common/VRF_ONE : Tenant/VRF
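The "calculated" netmask in the record above could be derived along these lines. This is only a sketch: it assumes the interface address is available in prefix-length form (for example 10.56.239.1/24), and the function name and argument layout are hypothetical.

```python
import ipaddress


def interface_record(path: str, addr: str, tenant: str, vrf: str) -> str:
    """Build one export line: path, IP address, netmask, tenant/VRF.

    addr is assumed to be in prefix-length form, e.g. "10.56.239.1/24".
    """
    iface = ipaddress.ip_interface(addr)
    # iface.ip is the host address, iface.netmask the dotted-quad mask
    return f"{path}, {iface.ip}, {iface.netmask}, {tenant}/{vrf}"


line = interface_record(
    "L3OUT_COMMON_TO_MAN_L3/topology/pod-2/node-2003/eth1/1/vlan-212",
    "10.56.239.1/24",
    "common",
    "VRF_ONE",
)
print(line)
```

Using the standard ipaddress module avoids hand-rolled mask arithmetic and would also reject malformed addresses with a ValueError.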

How can I export this information for all tenants and VRFs?

Thanks in advance

Valentino


03-25-2022 09:03 AM

Hello,

The information shown in Tenant-name -> VRF-name -> Operational -> Route table seems to be what I need.

I used moquery to retrieve all routes, but there are a little more than 300K of them.

How can I export moquery results to a text file?

I searched for an option to send the results to a file, without success.

Do I need to use the REST API? Another tool?
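If the REST API route is taken, one possible approach is to save the JSON returned by a class query and flatten it to text afterwards. The sketch below covers only the flattening step and makes no API calls. It assumes the usual APIC response layout (an "imdata" list of single-key objects, each with an "attributes" dictionary); the class name uribv4Route and the attribute names in the sample are assumptions to check against your own fabric, and the helper names are invented.

```python
from typing import Iterable, List


def imdata_to_lines(response: dict, fields: Iterable[str]) -> List[str]:
    """Flatten an APIC-style JSON response into comma-separated text lines."""
    fields = list(fields)
    lines = []
    for item in response.get("imdata", []):
        # Each item is assumed to look like {"<className>": {"attributes": {...}}}
        for body in item.values():
            attrs = body.get("attributes", {})
            lines.append(", ".join(attrs.get(name, "") for name in fields))
    return lines


def write_lines(path: str, lines: Iterable[str]) -> None:
    """Write one record per line to a plain text file."""
    with open(path, "w", encoding="utf-8") as handle:
        for line in lines:
            handle.write(line + "\n")


# Illustrative sample only; real attribute names may differ.
sample = {
    "totalCount": "1",
    "imdata": [
        {"uribv4Route": {"attributes": {"dn": "example-dn", "prefix": "10.207.11.0/24"}}}
    ],
}
records = imdata_to_lines(sample, ["dn", "prefix"])
```

With around 300K routes it is probably worth fetching and writing the data in batches rather than holding everything in memory, but that depends on how the query is issued.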

Thanks

Valentino Kusmic
