- Re: Aci changing vlan pool

07-03-2020 08:59 AM

Hi,

I'm looking for some guidance.

We have ACI in network-centric mode and have begun migrating services into ACI using a physical domain and a VMM domain. The VLANs are statically configured because we are trunking them into ACI while we migrate. However, at the moment we are seeing errors for overlapping VLANs across the physical and VMM domains. We need the overlap because the VLANs were spread across multiple physical and virtual hosts in the old DC. We started out using separate VLAN pools, which looks like where it is going wrong, so I would like to move them all to one VLAN pool. Would it cause an outage for the VMM domain if I pointed it at the same VLAN pool that the physical domain is using, provided that pool contained all the relevant VLANs?

Labels: Cisco ACI

Accepted Solutions

07-03-2020 11:35 PM

Hi @alwynaz84,

I'll comment on a few things, and ask a few questions

I have trunked in vlan 100 into aci and mapped it to my l2out on vlan100.

Firstly, I'd suggest NOT using L2Outs. They are harder to manage and less flexible than an ordinary Application EPG because you are locked to a single VLAN per L2Out. I don't believe there is anything an L2Out can do that an Application EPG can't, and an Application EPG gives you much more flexibility.

I have 2 leaf switches in a vpc configuration, with both physical and virtual servers connected on vlan100.

This suggests to me that they currently share the same policy. I'm going to assume that you want the same policy for these VLAN 100 servers in ACI too. (For the most part). That's going to make that L2Out a very difficult beast.

I have a physical domain relating to a vlan pool with a static vlan 100 set and my physical server attached to a vpc that has a AEP pointing to that physical domain.

Hmm. This sounds OK, but if you have an L2Out, you must also have an External L2 Domain. I'll assume it uses the same VLAN Pool and attaches to the same AAEP.

On that same pair of switches we have a vpc connected to a vmm domain that relates to a different vlan pool that is dynamic but has the static vlan blocks set for vlan100.

I am using the same EPG_A and bridge domain for them.

Same EPG_A as what else?

The error I can see at the moment on the vpc to the vmm domain and on the vpc to the phy server is:

Code F3274

Fabric-encap-mismatch

Vnid mismatch between peers for encap vlan 100

Hope that makes it clearer in what I'm trying to achieve?

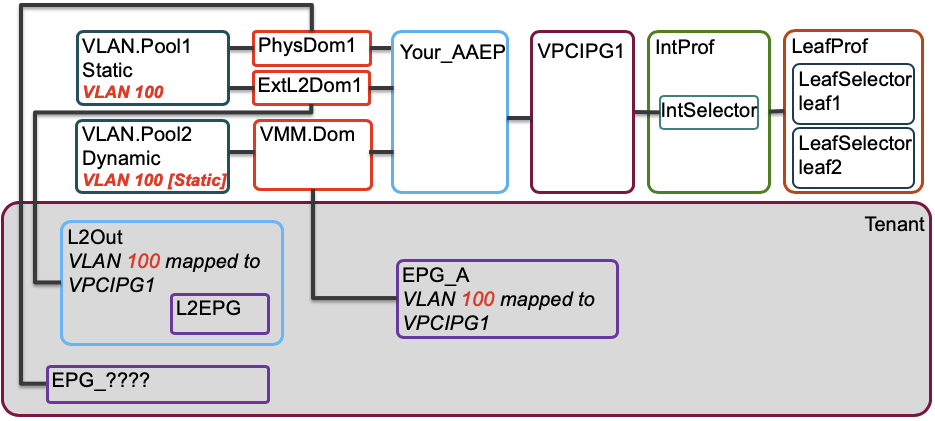

Somewhat clearer. But I still don't have the full picture. This is how I've interpreted it so far

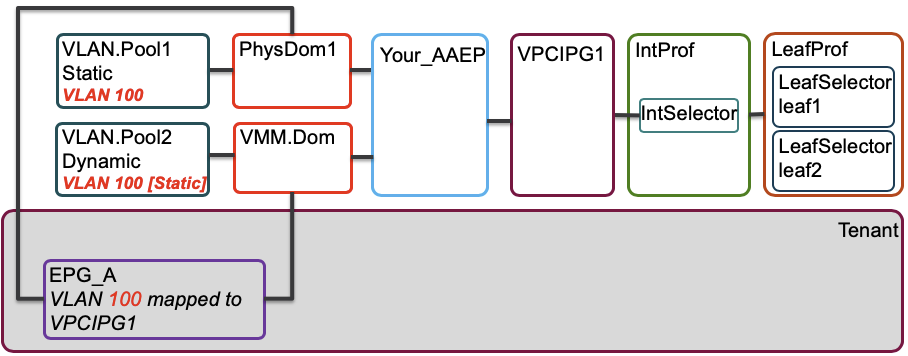

But maybe the L2Out is a red herring. Maybe you don't have an L2Out at all! Maybe you have:

Which is more likely to explain error Code F3274!

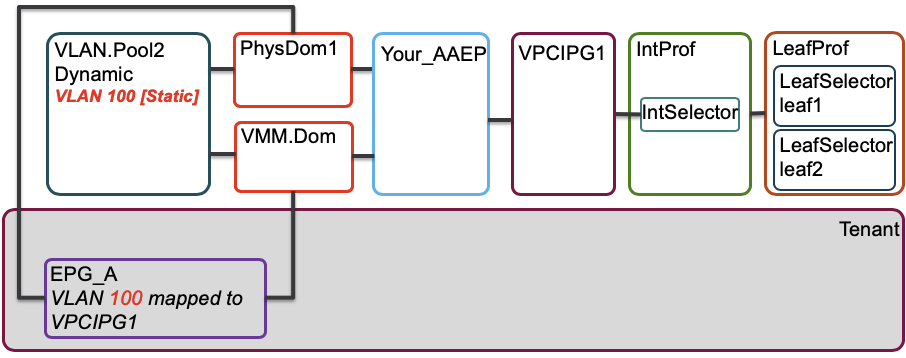

Sooooo. This is why you were looking at moving to:

And your original question:

Would this cause an outage for the vmm domain if I moved it to point at same vlan pool that the physical domain is using if they contained all relevant vlans?

Well, you'd probably have to move the Physical Domain to the dynamic VLAN Pool that the VMMs were linked to, rather than the VMM Domain to the Pool that the Physical Domain is using (Unless it happens to be a Dynamic Pool).

And that would be a quick action. I suspect it would cause some disruption, but if you are getting a mismatched VNID for the BD (sorry - didn't put the BD in my pics - should have), you probably have some disruption anyway.

So my advice has to be to try this during a maintenance window, but my limited experience makes me think the disruption would be in the order of a few seconds.
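One way to picture why pointing both domains at a single pool clears the fault is the toy model below. It is not ACI's actual allocation logic: it simply assumes that the fabric encapsulation (VNID) chosen for an access VLAN depends on the VLAN pool as well as on the VLAN ID, which is consistent with how this thread was resolved. The pool names, the base values and the `fabric_encap` function are all made up for illustration.

```python
from itertools import count

# Toy model only: real VNID values and allocation are internal to the fabric.
_next_base = count(start=8000, step=1000)  # hypothetical VNID base per pool
_pool_base = {}


def fabric_encap(pool: str, vlan: int) -> int:
    """Return a made-up VNID that depends on both the pool and the VLAN ID."""
    if pool not in _pool_base:
        _pool_base[pool] = next(_next_base)
    return _pool_base[pool] + vlan


def vnid_mismatch(pool_a: str, pool_b: str, vlan: int) -> bool:
    """True when the same access VLAN would map to two different VNIDs."""
    return fabric_encap(pool_a, vlan) != fabric_encap(pool_b, vlan)


# VLAN 100 taken from two different pools: the VNIDs differ in this model.
print(vnid_mismatch("phys_static_pool", "vmm_dynamic_pool", 100))
# VLAN 100 taken from one shared pool: the VNIDs agree in this model.
print(vnid_mismatch("vmm_dynamic_pool", "vmm_dynamic_pool", 100))
```

In this picture, the change discussed above amounts to making both domains resolve VLAN 100 through the same pool.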

Forum Tips: 1. Paste images inline - don't attach. 2. Always mark helpful and correct answers, it helps others find what they need.

07-03-2020 01:55 PM

Hi @alwynaz84,

We have aci in network centric mode,

To me this says you have a plan for every VLAN, so it makes me think your "overlapping VLAN" problem already exists in your pre-ACI network

and have begun migration of services into aci using a physical and a vmm domain,

Typically a VMM Domain has a dynamic VLAN pool, but some people don't like (or don't understand) that a VM that has been dynamically allocated VLAN xx for EPG_A can still communicate happily with legacy server on static VLAN yy also mapped to EPG_A ...

these vlans are statically configured as we are trunking the vlans into aci while we migrate,

... and that sounds like what you are doing - statically mapping your VM port groups to VLANs, which is perfectly fine if you have a plan for every VLAN

however at moment we have seen errors for overlapping vlans across the physical and vmm domain

If you are statically mapping VLAN xx to EPG_A in the VMM Domain and then statically mapping VLAN xx to EPG_A in the Physical Domain, there should be no problem. So I don't understand why you are getting errors.

What sort of errors?

What's the error message?

When does the error occur?

You haven't given us much to go on

which we need as the vlans were spread across multiple physical and virtual hosts in the old DC,

I still don't get this bit. Do you mean that your VLAN numbering was not consistent across multiple physical and virtual hosts in the old DC? If so, moving to ACI and allowing the VMM Domain to dynamically allocate VLANs sounds like a great way to avoid this. But if you were using VLAN xx for one purpose in the old physical environment and VLAN xx for a different purpose on your hypervisors, you'll NEVER be able to map both purposes into ACI while they use the same VLAN ID.

we started using separate vlan pools, which looks like where it is going wrong, so I would like to move them to all use one vlan pool.

I would strongly advise using separate pools for VMM Domain VLAN allocation and static Physical Domain allocation. And further, I'd recommend that the VLAN IDs in each pool NOT overlap.

In short. Don't do that.

VMM Domains need to be linked to Dynamic Pools, and you can add static allocation blocks to Dynamic Pools. But at the end of the day, that is no different to using two different pools, so even if you move your Physical Domain to a Dynamic VLAN Pool that is shared with your VMM Domain, it is NOT going to solve your problem.
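For reference, a dynamic pool carrying a static allocation block, and a Physical Domain pointed at that pool, can be sketched as APIC REST payloads. This is only a sketch under assumptions: the class and attribute names (`fvnsVlanInstP`, `fvnsEncapBlk`, `physDomP`, `infraRsVlanNs`, `allocMode`, `tDn`) are given as I understand the APIC object model and should be checked against your APIC version (for example with the API Inspector) before use. The pool and domain names are hypothetical, and the code only builds and prints JSON; it does not contact an APIC.

```python
import json


def dynamic_pool_with_static_block(pool_name: str, vlan_from: int, vlan_to: int) -> dict:
    """Build a payload for a dynamic VLAN pool holding one static encap block."""
    return {
        "fvnsVlanInstP": {
            "attributes": {"name": pool_name, "allocMode": "dynamic"},
            "children": [
                {
                    "fvnsEncapBlk": {
                        "attributes": {
                            "from": f"vlan-{vlan_from}",
                            "to": f"vlan-{vlan_to}",
                            # Static block inside a dynamic pool
                            "allocMode": "static",
                        }
                    }
                }
            ],
        }
    }


def physical_domain_using_pool(domain_name: str, pool_name: str) -> dict:
    """Build a payload that points a Physical Domain at a dynamic VLAN pool."""
    return {
        "physDomP": {
            "attributes": {"name": domain_name},
            "children": [
                {
                    "infraRsVlanNs": {
                        "attributes": {"tDn": f"uni/infra/vlanns-[{pool_name}]-dynamic"}
                    }
                }
            ],
        }
    }


# Hypothetical names, for illustration only.
pool = dynamic_pool_with_static_block("shared_pool", 100, 100)
domain = physical_domain_using_pool("phys_dom", "shared_pool")
print(json.dumps(pool, indent=2))
print(json.dumps(domain, indent=2))
```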

Would this cause an outage for the vmm domain if I moved it to point at same vlan pool that the physical domain is using if they contained all relevant vlans?

Well - as I said above, VMM Domains have to be linked to Dynamic Pools, so if your Physical Domain is using a static pool this idea is not going to sail anyway. And it is not going to solve your problem.

So after all that, I really don't know what your problem is, so I am unable to offer a solution. I thought that if I pulled your question apart and analysed it, I might spot the actual problem, so let me finish with this:

- Don't try and use the same pool for the VMM Domain and the Physical Domain

- Don't overlap VLANs in the VLAN Pools used for the Domains. I suspect this advice may be too late, but at the end of the day, if you are statically mapping both VMM Domain VLANs and Physical Domain VLANs and are consistent with your VLAN usage, there should be no problem.

- IF the traffic from your legacy VMs is reaching ACI on different trunks to the legacy Physical servers, then assigning an L2 Interface Policy with the VLAN Scope set to Port Local scope may assist you, but that has its own limitations and caveats. (Limitations: VMs and Physical Servers will need to be in different BDs, and therefore different EPGs. Caveats: Consumes more internal VLAN mappings)
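A quick offline check for the "don't overlap" advice might look like the sketch below. It assumes you have written out each pool's encap blocks by hand as inclusive `(from, to)` ranges; the function name and the example ranges are illustrative and are not taken from the thread.

```python
def overlapping_vlans(pool_a, pool_b):
    """Return the sorted VLAN IDs that appear in both pools.

    Each pool is a list of inclusive (from, to) VLAN ranges.
    """
    def expand(blocks):
        ids = set()
        for start, end in blocks:
            ids.update(range(start, end + 1))
        return ids

    return sorted(expand(pool_a) & expand(pool_b))


# Hypothetical pools: a static pool for the Physical Domain and a dynamic
# pool for the VMM Domain that also contains a block for VLAN 100.
physical_pool = [(100, 100), (200, 210)]
vmm_pool = [(100, 100), (1000, 1100)]
print(overlapping_vlans(physical_pool, vmm_pool))
```

Any VLAN ID this reports is one that the two pools both claim.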

07-03-2020 09:11 PM

Hi @RedNectar

Thanks for the reply.

To try and give you some detail to help.

So say we have vlan 100 in our current DC, with physical servers and virtual servers that sit in it. The gateway still sits on the old datacentre switches.

I have trunked in vlan 100 into aci and mapped it to my l2out on vlan100.

I have 2 leaf switches in a vpc configuration, with both physical and virtual servers connected on vlan100.

I have a physical domain relating to a vlan pool with a static vlan 100 set and my physical server attached to a vpc that has an AEP pointing to that physical domain.

On that same pair of switches we have a vpc connected to a vmm domain that relates to a different vlan pool that is dynamic but has the static vlan blocks set for vlan100.

I am using the same EPG_A and bridge domain for them.

The error I can see at the moment on the vpc to the vmm domain and on the vpc to the phy server is:

Code F3274

Fabric-encap-mismatch

Vnid mismatch between peers for encap vlan 100

Hope that makes it clearer in what I'm trying to achieve?

07-04-2020 08:21 AM

Hi @RedNectar

Thanks again for the info. I appreciate the advice and guidance.

Yes, the diagram with EPG_A connecting to the VMM Domain and the Physical Domain, each using a separate VLAN Pool, is what I currently have configured. The Physical VLAN pool is static and the VMM Domain pool is dynamic, so I will try moving the Physical Domain over to the dynamic VLAN Pool configured for the VMM Domain (obviously making sure the correct VLANs are in it first, in static blocks).

I think what I haven't been clear on is that these domains are all using different physical interfaces.

So the L2out is connecting back to the existing network via a different pair of switches and VPC.

The second pair of switches has 2 separate VPCs configured: one for a physical server connection and a different one for the VMware environment. The physical server connection has VLAN100 mapped to that VPC interface, and I have mapped VLAN100 statically to the VMM domain for the virtual distributed switch configured/controlled via APIC.

When I was talking about using the same EPG_A and bridge domain, I just meant I had attached EPG_A to both the VPC/interface policy I configured for the physical server connection and to the VMM Domain (via the static vlan mapping configuration). I have attached a current policy view of what I'm trying to explain, and also a to-be diagram of all the domains calling the single vlan pool once I make the change.

But to confirm from your original reply you stated:

"if you are statically mapping VLAN XX (Vlan100) in the VMM Domain and then statically mapping VLAN xx (Vlan100) to EPG_A in the Physical Domain there should be no problem."

So is the change we are talking about, moving the VLAN pools, a done thing?

Also, just to give you some more info: we have 2 other Leaf switches that only connect to a host within the VMware environment (no physical domain is used). They don't have any reported VLAN overlap errors and we don't see any issues on Vlan100. On the 2 Leafs in question, which also have a separate physical server connected, we have the reported VLAN overlap error, and if we spin up a VM there its connectivity is very intermittent.

We do have the L2out. As you say, we have trunked a lot of VLANs into ACI from the old network and it was a bit time consuming to configure each VLAN. However, it's mainly done now, so it shouldn't be a problem (although I will not use L2outs in future). These trunked vlans will eventually be removed from the L2out as we migrate the gateways into ACI, but that's for another day. :-)

07-09-2020 03:44 AM

Hi @RedNectar ,

I just wanted to advise that I made the change yesterday during an outage window, and it worked, with end devices only dropping a single packet. The overlapping faults have cleared and I can now see the VXLAN is consistent across the switches.

Thanks for the help.

07-09-2020 04:11 AM

Thanks so much for letting me (and the community) know!
