ACI Multi-Pod - Traffic black-holing to leaf switch in maintenance mode (GIR)

12-08-2020 10:14 PM

Dear community,

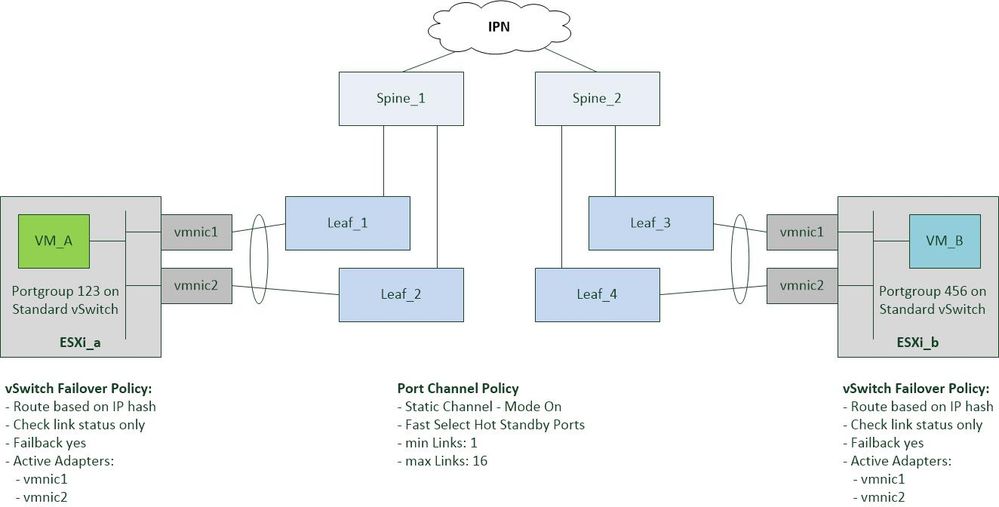

we have an ACI Multi-Pod environment with two Pods and one ESXi host connected to each of them. A static vPC is configured from the host to two leaf switches to provide failover redundancy:

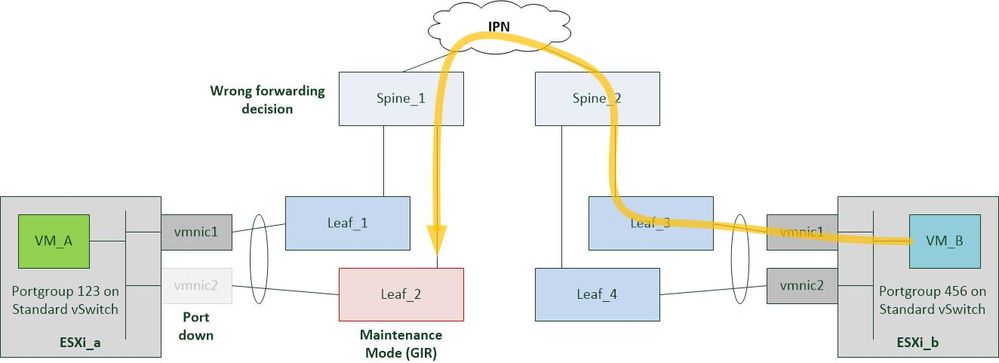

So far so good. If a leaf switch fails or a cable is detached we lose a packet or two and everything looks fine. However, if we put one leaf into maintenance mode, commission it, wait for it to fully recover (access ports come up, switch is active in fabric) and then put the second leaf of the same vPC domain into maintenance mode, traffic gets dropped for 6 to 7 minutes.

ELAM shows us that traffic is forwarded correctly up to the spine connecting to the vPC pair which for some reason decides to forward traffic to the switch in maintenance mode:

COOP says the endpoint's IP is known via the vPC TEP, which is fine. But the spine still decides to load-balance traffic even though one of the potential destinations is (or should be) out of service. This is - at least for us - unexpected behavior, and now we would like to know if there is potentially something wrong with our configuration or if this is indeed expected.

Actually, maintenance mode is not the real problem here; we only use it to reproduce the issue, which was originally discovered during a firmware upgrade. The same thing happened when we upgraded the even leaf switches right after finishing the odd ones.

"Just wait 10 minutes between upgrades" would apparently be a workaround, but it would not explain why this is happening in the first place. There might be other related issues that we just haven't come across yet. It's also strange that the situation recovers after a more or less deterministic period of time, which makes you think that there is some cache and a timer involved.

One more thing: ISIS policy was set to a metric of 32, so the default value of 63 is not a problem here.

Any hints would be much appreciated.

Thank you and kind regards

Nik

- Labels: Cisco ACI

12-09-2020 04:39 AM

Hello,

I had a similar problem. It turned out I was missing the PIM configuration on one of my IPN node interfaces. There's an excellent Multi-Pod Troubleshooting white paper too.

HtH

Terry

12-09-2020 06:55 AM

Hello Terry,

thanks for your reply. While I agree that the IPN can be a tricky part, I don't think it matters much in this case. Following the packets via ELAM, we see that the ICMP request from VM_A to VM_B reaches its destination and that the reply comes all the way back to the local spine. The spine then decides to forward it to the wrong leaf, but the IPN has already been passed at this stage. Also, we had other issues with the IPN before, so it has been double-, triple- and quadruple-checked already.

I will give the troubleshooting guide a try, anyway. As I see it, it's not only related to the IPN so it might reveal some new information.

Kind regards,

Nik

12-18-2020 07:46 AM

Just a guess:

Did you check the path metric for the vPC TEP address from the spine before reloading the second switch?

You should see 2 equal cost routes, otherwise there is still the "set-overload-bit on start-up"-timer active, preventing the spine from using the remaining path.
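The check suggested above can be written down as a small pre-flight test before draining the second leaf. This is only a sketch under assumptions: the `NextHop` type and the function name are hypothetical, the next-hop list has to be read manually from the spine's routing table for the vPC TEP, and nothing here talks to the fabric or to any Cisco API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class NextHop:
    """One next hop toward the vPC TEP, as read from the spine (hypothetical type)."""
    address: str  # TEP address of the leaf used as next hop
    metric: int   # route metric via this next hop


def safe_to_drain_second_leaf(next_hops: list[NextHop], expected_paths: int = 2) -> bool:
    """Return True only if the spine holds the expected number of equal-cost paths.

    If the recently recovered leaf still advertises a worse metric (for example
    because an overload-on-startup timer is still running), its path does not
    count, and draining the other leaf would leave no usable path.
    """
    if len(next_hops) < expected_paths:
        return False
    best = min(hop.metric for hop in next_hops)
    equal_cost = [hop for hop in next_hops if hop.metric == best]
    return len(equal_cost) >= expected_paths
```

With two next hops at the same metric the function returns `True`; with one next hop, or with two next hops at different metrics, it returns `False`, which would be the signal to wait before putting the second leaf into maintenance mode.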
