Badly behaved endpoint on ACI


03-16-2022 12:20 PM

Hi,

We have a strange situation with a host, and while this is clearly an application issue, I was hoping the considerable ACI brain trust here might help me wrap my head around how the fabric will behave in this situation.

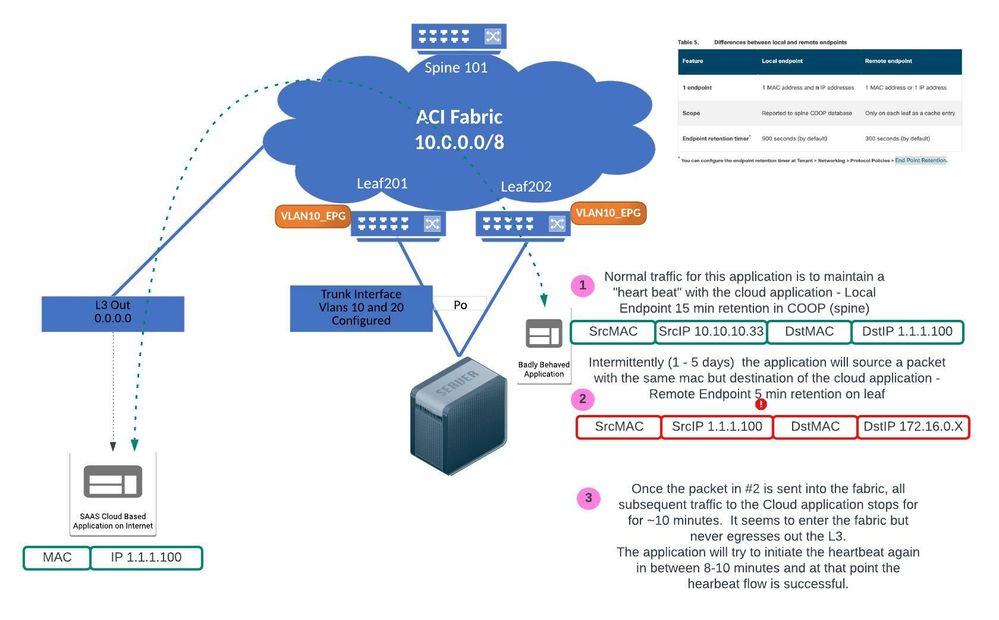

So this badly behaved application on the fabric instantiates a heartbeat to a cloud service out on the internet. It works just fine, but periodically (no discernible pattern) a packet from the host leaks out with a source address of the cloud service and a destination address of 172.16.0.x, which is not on the fabric (thought to be a tunnel address from the application that leaked out).

The L3Out uses 0.0.0.0/0.

So one minute this is a local host on the fabric that COOP knows about, and the next minute it's a remote IP. Once this "bad" packet leaks out, we see the host generating more valid traffic, but it never goes out the L3Out. After about 10 minutes, the application tries again to establish that heartbeat and then it works... until the next bad packet.

Any insight into how ACI would behave in this situation?


Accepted Solutions


03-16-2022 01:25 PM

Hi @Claudia de Luna ,

What a great picture!

Firstly, I'm going to have to ask the obvious question trail:

- Is Enforce Subnet Check set globally?
  - If so, then the scenario you describe is a bug.
  - If not, then ...
    - Is the Limit IP Learning To BD/EPG Subnet(s) option enabled on the relevant BD?
      - If so, then the scenario you describe is a bug.
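The question trail above can be sketched as a small decision function. This is a simplified model, not the leaf's actual EPM logic: the function name `would_learn_source_ip`, its parameters, and the 10.10.10.0/24 BD subnet are hypothetical, and it only captures the two subnet checks discussed here.

```python
from ipaddress import ip_address, ip_network


def would_learn_source_ip(
    src_ip: str,
    bd_subnets: list[str],
    vrf_subnets: list[str],
    enforce_subnet_check: bool,
    limit_ip_learning: bool,
) -> bool:
    """Simplified model of the subnet checks discussed above (not actual EPM code).

    - enforce_subnet_check: source IP must fall inside some BD subnet in the VRF.
    - limit_ip_learning: source IP must fall inside the ingress BD's own subnets.
    """
    ip = ip_address(src_ip)
    in_vrf = any(ip in ip_network(s) for s in vrf_subnets)
    in_bd = any(ip in ip_network(s) for s in bd_subnets)
    if enforce_subnet_check and not in_vrf:
        return False  # learning blocked VRF-wide
    if limit_ip_learning and not in_bd:
        return False  # learning blocked on this BD
    return True  # neither knob stops the learn


# Hypothetical BD subnet for the server; 1.1.1.100 is outside it.
SUBNETS = ["10.10.10.0/24"]
print(would_learn_source_ip("1.1.1.100", SUBNETS, SUBNETS, False, False))  # True
print(would_learn_source_ip("1.1.1.100", SUBNETS, SUBNETS, True, False))   # False
```

In this model either knob alone is enough to keep 1.1.1.100 out of the endpoint table, which is why a learn with either one enabled would point to a bug.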

And that pretty much exhausts my troubleshooting logic for the situation, but to answer your (paraphrased) exam question:

How would ACI behave in this situation?

When packet 2 with source IP 1.1.1.100 reaches Leaf201 or Leaf202, and neither is Enforce Subnet Check set globally nor is the Limit IP Learning To BD/EPG Subnet(s) option enabled on the relevant BD, the leaf learns this IP address as a local endpoint and reports it to the spine proxy (COOP).

Since Leaf201 and Leaf202 are vPC peers, the peer's IP address table will also be updated.

Now any other traffic destined to the 1.1.1.100 IP address in the cloud that arrives on either Leaf201 or Leaf202 will be seen as locally attached (need to research that a bit more; will edit later).

More interesting, though, would be traffic that arrives at another leaf (if there are any). Those leaves would (should?) NOT send traffic destined to 1.1.1.100 to the proxy; they would send it to the border leaf advertising the 0.0.0.0/0 default route.
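The forwarding behavior described above can be sketched as a toy lookup: endpoint tables first, then a longest-prefix match where a pervasive (BD) route sends traffic to the spine proxy and an L3Out route sends it to the border leaf. This is a simplified model, not ACI's actual pipeline; the `Leaf`/`Route` classes and the leaf203 name are hypothetical, and vPC synchronization, aging and contract enforcement are ignored.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network


@dataclass
class Route:
    prefix: str
    pervasive: bool  # True for BD subnet routes, False for L3Out-learned routes


@dataclass
class Leaf:
    name: str
    local_eps: set[str] = field(default_factory=set)
    remote_eps: dict[str, str] = field(default_factory=dict)  # endpoint IP -> remote leaf
    routes: list[Route] = field(default_factory=list)

    def forward(self, dst: str) -> str:
        """Simplified lookup order: endpoint tables first, then longest-prefix match."""
        if dst in self.local_eps:
            return "local port"
        if dst in self.remote_eps:
            return f"remote leaf {self.remote_eps[dst]}"
        addr = ip_address(dst)
        matches = [r for r in self.routes if addr in ip_network(r.prefix)]
        if not matches:
            return "drop"
        best = max(matches, key=lambda r: ip_network(r.prefix).prefixlen)
        # Pervasive (BD) routes go to the spine proxy; L3Out routes to the border leaf.
        return "spine proxy" if best.pervasive else "border leaf (L3Out)"


ROUTES = [Route("10.10.10.0/24", pervasive=True), Route("0.0.0.0/0", pervasive=False)]
leaf201 = Leaf("leaf201", local_eps={"1.1.1.100"}, routes=ROUTES)  # learned the bad source IP
leaf203 = Leaf("leaf203", routes=ROUTES)  # hypothetical leaf with no entry for 1.1.1.100
print(leaf201.forward("1.1.1.100"))  # local port
print(leaf203.forward("1.1.1.100"))  # border leaf (L3Out)
```

In this model, once Leaf201/202 hold 1.1.1.100 as a local endpoint, the host's heartbeat never reaches the route lookup, which would be one way to read the "never goes out the L3Out" symptom.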

I'm sure I'm only reinforcing what you already know. I hope others jump in and add their thoughts too.

Forum Tips: 1. Paste images inline - don't attach. 2. Always mark helpful and correct answers, it helps others find what they need.


03-16-2022 02:55 PM

Hi,

Thank you for helping me work through this!

And apologies for not including those settings.

- Both Enforce Subnet Check and Limit IP Learning are enabled.

What I'm not sure of is "Disable Remote EP Learning", which should only come into play with the #2 "bad" packet.

There is a timing component at play that I can't wrap my brain around. If it's a remote endpoint, it takes 5 minutes to age out, but it was also a local endpoint in the COOP table, so that would be 15 minutes to age out. And we know the heartbeat takes about 10 minutes to re-establish after one of these "bad" packets enters the fabric.
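One hypothetical way to frame the timing question: assume the stale entry is never refreshed after the bad packet (t=0) and the heartbeat succeeds on the first retry after the entry ages out. The 5- and 15-minute timers are the ones quoted above; the 10-minute retry interval is an assumption drawn from the original post, and `first_successful_retry` is an illustrative helper, not anything in ACI.

```python
import math

# Aging intervals quoted in the thread (seconds).
LOCAL_EP_AGING_S = 15 * 60
REMOTE_EP_AGING_S = 5 * 60
# Assumption: the heartbeat is retried roughly every 10 minutes.
HEARTBEAT_RETRY_S = 10 * 60


def first_successful_retry(aging_s: int, retry_s: int) -> int:
    """Seconds after the bad packet (t=0) until the first retry at or after age-out.

    Hypothetical model: the stale entry is never refreshed once learned, and the
    heartbeat succeeds on the first retry after the entry has aged out.
    """
    return math.ceil(aging_s / retry_s) * retry_s


for label, aging in (("remote", REMOTE_EP_AGING_S), ("local", LOCAL_EP_AGING_S)):
    print(label, first_successful_retry(aging, HEARTBEAT_RETRY_S) // 60, "min")
# remote 10 min
# local 20 min
```

Under these assumptions only the 5-minute timer lines up with the ~10-minute recovery. A different retry interval changes the conclusion, so this frames the question rather than answering it.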


03-17-2022 03:37 AM

Hi,

I kind of thought you probably had set those. Have you tried posting in the Cisco ACI Facebook group? There are a few people who monitor that group but don't monitor this forum. With some luck you might get @tkishida to have a look at this one.

Of course the real problem is why the App sends packets with the 1.1.1.100 Source IP.

Now I'm guessing that you've used ELAM to capture the errant packet anyway, but have you managed to see if the 1.1.1.100 address gets installed in the endpoint table?

This is really a nightmare to troubleshoot: wait 5 days, then have a 10-minute troubleshooting window...

I'll keep my thinking hat on in case I think of anything else.


03-17-2022 07:16 AM

Hey Chris... No question, this is an application issue. We were just looking for some ACI "knobs" that might reduce the impact on users when this happens while the application issue is worked on. We're also looking to understand what is happening with ACI under the hood, in a mostly academic way.

We've not been able to catch the issue in time to do ELAM captures or to check what's in the endpoint table. Packet captures have been done at the application and on the other side of the L3Out. To your most excellent point, it's been very difficult to troubleshoot!


03-17-2022 06:24 AM

Claudia, which version are you running, and is the L3Out on the same two leafs as your server/application? I see two leafs in your awesome diagram, but it doesn't show where the L3Out is configured. This would be relevant to remote EP learning behavior.

Robert


03-17-2022 07:10 AM

Hi @Robert Burns,

Fabric is at 5.2(3g). L3Out is on another set of leafs. I didn't think about that!


05-15-2022 01:58 PM

As it turns out, Enforce Subnet Check was *not* set!!


05-16-2022 08:01 AM

Thanks for closing the loop. Always good to verify with your own eyes.

Robert


03-18-2022 11:39 AM - edited 03-18-2022 11:44 AM

Claudia,

Within the VRF of the misbehaving application, there's no BD subnet defined for this 1.1.1.0/x subnet, correct?

Is it possible the "bad packet" being sent by the misbehaving application is multicast? (Multicast would circumvent the Enforce Subnet Check, which would normally prevent this from happening.)

You should be able to set up the ELAM app trigger on the leaf the misbehaving app is connected to, with 1.1.1.100 as the source IP trigger, and leave it armed: it will hit when the app misbehaves. This would tell us a good deal.

Robert


03-19-2022 07:40 AM

Hi Robert,

No bridge domain for the 1.1.1.0/x subnet; it's 100% external to the fabric. The L3Out does use 0.0.0.0/0.

The packet is decidedly not multicast. It's actually a TCP/TLS packet.

Of course! I will work to get that set up. Thank you!

Claudia


03-19-2022 11:13 AM

Very interesting in that case. It is unexpected for endpoint learning to be affected while the subnet check is active. I'd suggest pursuing the ELAM capture so we can see what the switch is doing with the bad packets, and opening a TAC case so we can take a closer look. As soon as the ELAM hits (or as close to it as possible), gather a tech support bundle from the leaf and border leafs in question. We should be able to verify whether EPM and/or EPMC logged the endpoint change in hardware.

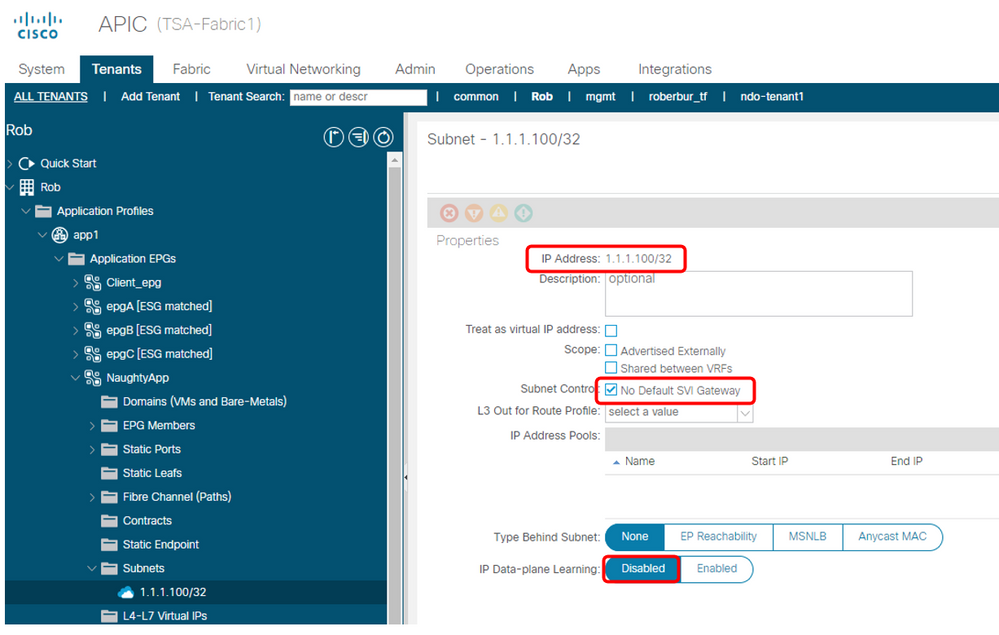

Obviously the application should be fixed, but the fabric also shouldn't allow this type of impact. One other long shot I was considering as a preventive measure (I have not tested this on a spoofed IP, by the way) might be to disable IP learning for that IP using a /32 entry within the heartbeat endpoint's EPG (under the EPG, add a subnet: use 1.1.1.100/32, No Default SVI Gateway, and check 'Disable IP DP Learning').

This should stop packets sourced from that unexpected IP from being learned as endpoints and affecting COOP. I would go this route after snagging the ELAM and tech support snapshot when it happens next, so we can RCA the issue.
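If you script this rather than using the GUI, a sketch of the request might look like the following. It assumes the EPG subnet is the `fvSubnet` object posted under the EPG's `uni/tn-.../ap-.../epg-...` DN, and that the GUI options map to `ctrl="no-default-gateway"` and `ipDPLearning="disabled"`; treat those attribute names as assumptions to verify against the APIC object model for your release. The tenant, application profile and EPG names are hypothetical, and nothing is sent to a controller.

```python
import json
from ipaddress import IPv4Network


def epg_subnet_path(tenant: str, app_profile: str, epg: str) -> str:
    """REST path for an EPG, using the uni/tn-/ap-/epg- naming convention."""
    return f"/api/mo/uni/tn-{tenant}/ap-{app_profile}/epg-{epg}.json"


def epg_host_subnet_payload(host_ip: str) -> dict:
    """Build an fvSubnet body for a /32 with no SVI gateway and DP learning off.

    Attribute names/values are assumptions to verify against your APIC release.
    """
    net = IPv4Network(f"{host_ip}/32")  # raises if host_ip is not a valid IPv4 address
    return {
        "fvSubnet": {
            "attributes": {
                "ip": str(net),
                "ctrl": "no-default-gateway",  # "No Default SVI Gateway"
                "ipDPLearning": "disabled",    # "Disable IP DP Learning"
            }
        }
    }


# Hypothetical tenant / application profile / EPG names.
print(epg_subnet_path("TenantA", "AppA", "Heartbeat"))
print(json.dumps(epg_host_subnet_payload("1.1.1.100"), indent=2))
```

Building the body as plain data keeps it easy to diff against an object exported from a lab APIC before pushing anything to production.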

Robert


03-19-2022 11:19 AM

Did you happen to get a packet capture? It sounds like you might have, if you know this is a TCP/TLS packet. If you have the capture, can you share it? Post here or DM me if you prefer.

Thanks,

Robert


03-21-2022 12:01 PM - edited 03-21-2022 12:03 PM

As Rob mentioned, if there are no bridge domains with a subnet matching 1.1.1.100 in the same VRF, "Enforce Subnet Check" should prevent any ACI leaf switch from learning 1.1.1.100 as either a local or a remote endpoint. If "Enforce Subnet Check" didn't prevent it, TAC would need to investigate the detailed logs of EPM/EPMC, the processes that manage endpoint learning on each switch.

The following is one idea you can check without diving into the log analysis:

- Identify which leaf each traffic flow is going through:
  - 10.10.10.33 -> 1.1.1.100: leaf 201-202 -> border leaf (let's say leaf X)
  - 1.1.1.100 -> 172.16.0.x: leaf 201-202 (as well?) -> which leaf? (same as border leaf?)
- Check the routing table on each leaf:

  ```
  show ip route vrf <your tenant>:<your VRF> 1.1.1.100
  ```

  It should hit a route from the L3Out (0.0.0.0/0 in your case). If it's hitting a BD subnet (could be 1.0.0.0/8 or anything else instead of 1.1.1.x), that's the configuration you need to fix. If it's a BD subnet, the command output should show the "pervasive" flag.

An example of the RIB correctly pointing to the L3Out 0.0.0.0/0:

```
f2-leaf1# show ip route vrf TK:VRFA 1.1.1.100
--- snip ---
0.0.0.0/0, ubest/mbest: 2/0
    *via 22.0.248.2%overlay-1, [200/1], 04w06d, bgp-65002, internal, tag 65002
         recursive next hop: 22.0.248.2/32%overlay-1
    *via 22.0.248.1%overlay-1, [200/1], 04w06d, bgp-65002, internal, tag 65002
         recursive next hop: 22.0.248.1/32%overlay-1
```
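If you are collecting this output from many leafs, a small parser can flag the bad case automatically. This is a minimal sketch that assumes the NX-OS header format shown above ("prefix, ubest/mbest: ...") and simply looks for the word "pervasive" in each prefix's block; `pervasive_flags` is a hypothetical helper, and the `BAD` sample is invented for illustration, not captured from a switch.

```python
import re

# Matches NX-OS route header lines such as "0.0.0.0/0, ubest/mbest: 2/0".
PREFIX_RE = re.compile(r"^\s*(\d{1,3}(?:\.\d{1,3}){3}/\d{1,2}), ubest/mbest:")


def pervasive_flags(output: str) -> dict[str, bool]:
    """Map each prefix in `show ip route` text to whether 'pervasive' appears in its block."""
    flags: dict[str, bool] = {}
    current = None
    for line in output.splitlines():
        match = PREFIX_RE.match(line)
        if match:
            current = match.group(1)
            flags[current] = "pervasive" in line
        elif current is not None and "pervasive" in line:
            flags[current] = True
    return flags


# Abridged from the output quoted above (L3Out default route, no pervasive flag).
GOOD = """\
0.0.0.0/0, ubest/mbest: 2/0
    *via 22.0.248.2%overlay-1, [200/1], 04w06d, bgp-65002, internal, tag 65002
         recursive next hop: 22.0.248.2/32%overlay-1
"""
# Hypothetical output for a BD subnet wrongly covering 1.1.1.100.
BAD = """\
1.0.0.0/8, ubest/mbest: 1/0, attached, direct, pervasive
    *via 10.0.0.30%overlay-1, [1/0], 00:05:43, static
"""
print(pervasive_flags(GOOD))  # {'0.0.0.0/0': False}
print(pervasive_flags(BAD))   # {'1.0.0.0/8': True}
```

Any `True` value for the prefix covering 1.1.1.100 would point to the BD subnet misconfiguration described above.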

If the routing table looks good and it's not realistic to capture the packet because the issue comes and goes randomly, here is the log that TAC would need to check on each leaf (especially the leaf that the "1.1.1.100 -> 172.16.0.x" traffic is going through):

```
f2-leaf1# nxos_binlog_decode /var/log/dme/log/binlog_uuid_472_epmc_vdc_1_sub_1_level_3 | less
```


03-21-2022 06:16 PM

@tkishida @Robert Burns Thank you for the most excellent suggestions. The focus right now is on fixing the application, but if there is an opportunity to execute your suggestions, I will report back!
