04-25-2016 12:58 PM - edited 03-01-2019 04:57 AM

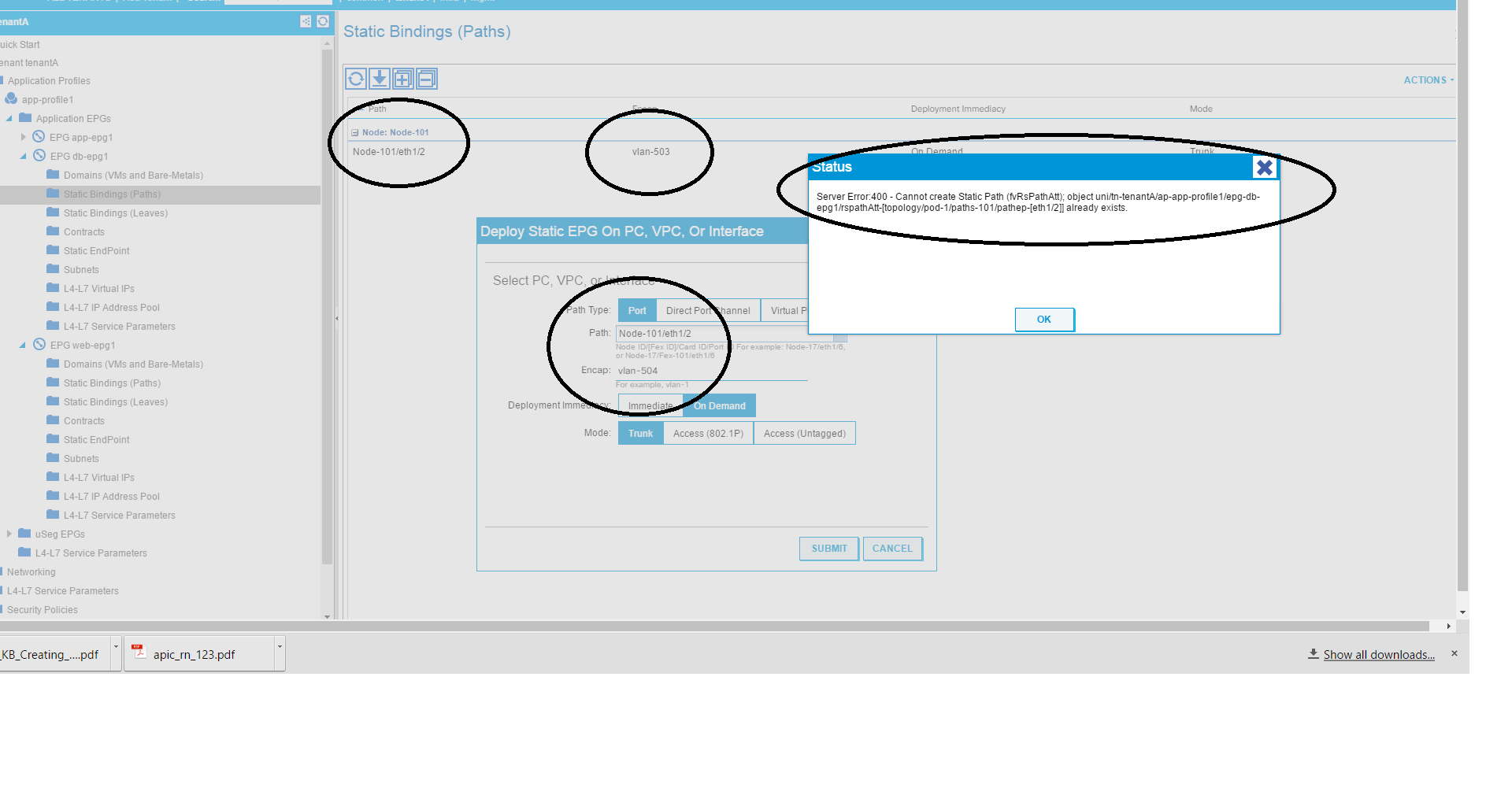

I am trying to put VLANs 503 and 504 on leaf 101 port 1/2 in the web-epg. Why won't it let me? It says "already exists", but I can put another port:VLAN combo in this EPG; I just can't put anything else on this port (Node-101/eth1/2). Also, if I go to another EPG, I can put another VLAN on port 1/2 just fine.

-Bryan

- Labels: Cisco ACI

Accepted Solutions

04-25-2016 01:14 PM

Hi dodgerfan78, This behavior is expected. Associating multiple encaps on the same link to the same EPG/L2Out is not supported. You will need to create a separate EPG and make the association there.
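To make the rule concrete, here is a toy Python model of the check described in this answer. It is an illustration under stated assumptions, not APIC code: the class and method names (`StaticBindings`, `add`, `StaticBindingError`) are hypothetical, and the only rule modeled is that one EPG may carry a single encap VLAN per leaf interface.

```python
class StaticBindingError(Exception):
    """Raised when an EPG already has a different encap on the same interface."""


class StaticBindings:
    """Toy model (not APIC code): one encap VLAN per (EPG, leaf, interface)."""

    def __init__(self) -> None:
        # (epg, node, interface) -> encap VLAN ID
        self._encap: dict[tuple[str, int, str], int] = {}

    def add(self, epg: str, node: int, interface: str, vlan: int) -> None:
        key = (epg, node, interface)
        existing = self._encap.get(key)
        if existing is not None and existing != vlan:
            # Same EPG + same interface + different VLAN: the case from the question
            raise StaticBindingError(
                f"already exists: {epg} on Node-{node}/{interface} uses vlan-{existing}"
            )
        self._encap[key] = vlan


if __name__ == "__main__":
    fabric = StaticBindings()
    fabric.add("web-epg", 101, "eth1/2", 503)  # first encap on this port: accepted
    fabric.add("web-epg", 101, "eth1/3", 504)  # same EPG, different port: accepted
    fabric.add("app-epg", 101, "eth1/2", 504)  # same port, different EPG: accepted
    try:
        fabric.add("web-epg", 101, "eth1/2", 504)  # same EPG and port: rejected
    except StaticBindingError as err:
        print(err)
```

The three accepted calls mirror what the original poster observed working; only the fourth combination is refused.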

04-26-2016 09:27 AM

Hello,

It's hard to tell exactly which restrictions you're trying to work around, as they are being given out piecemeal, but when working specifically with hypervisors and ACI, there is the option to perform "VMM Integration".

At a high level, this allows the APIC to provision a port group (plus its VLAN assignment) on the hypervisor environment being integrated with ACI, for example vCenter and ESXi hosts. The VMM domain also ties this association to the interfaces and leaf nodes that fall under the access policy tree, provisioning the matching VLAN on those interfaces so that it maps to the port group (pushed as either a VDS or an AVS).
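A rough Python sketch of the allocation side of VMM integration, assuming a VMM domain backed by a dynamic VLAN pool: each EPG attached to the domain gets one VLAN from the pool, and a port group for that EPG is pushed to the distributed switch. The class name `VmmDomain`, the pool range, and the `tenant|app|epg` port-group naming are assumptions for illustration; the leaf-side interface programming described above is not modeled.

```python
class VmmDomain:
    """Hypothetical model of dynamic VLAN allocation for EPGs in a VMM domain."""

    def __init__(self, name: str, pool_start: int, pool_end: int) -> None:
        self.name = name
        self._free = list(range(pool_start, pool_end + 1))  # unused VLAN IDs
        self.port_groups: dict[str, int] = {}              # port group -> VLAN

    def attach_epg(self, tenant: str, app: str, epg: str) -> int:
        """Return the EPG's VLAN, allocating one from the pool on first attach."""
        pg = f"{tenant}|{app}|{epg}"  # port-group naming is an assumption
        if pg not in self.port_groups:
            if not self._free:
                raise RuntimeError(f"VLAN pool of {self.name} is exhausted")
            self.port_groups[pg] = self._free.pop(0)
        return self.port_groups[pg]


if __name__ == "__main__":
    dom = VmmDomain("lab-vds", 1000, 1099)
    print(dom.attach_epg("lab", "web-app", "web"))  # first VLAN from the pool
    print(dom.attach_epg("lab", "web-app", "app"))  # next free VLAN
    print(dom.attach_epg("lab", "web-app", "web"))  # same EPG: same VLAN again
```

The design point is that nobody pins a VLAN to a leaf port by hand: the VLAN follows the EPG's port group, so a VM can move between hosts in the domain without any static-path changes.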

In your example above, you are using static paths to define your entryway into the fabric, which means you are essentially "hard coding" an interface with an expected VLAN assignment and removing some of the flexibility sometimes needed in a virtualized environment (for example, vSwitch vs. VDS).

Static bindings are typically used for bare metal types of appliances sans virtualization. That's not to say it won't work, but you are essentially forcing restrictions upon yourself, as Leigh mentioned earlier.

Slide 23 in this presentation begins talking about aspects of VMM integration that may be worth reviewing:

Integration of Multi-Hypervisors with ACI

Cheers,

-Gabriel

04-26-2016 01:36 PM

Hey jdanrich, I've done this VLAN-as-an-EPG migration a few times, and even for customers with plenty of VLANs, creating multiple EPGs and BDs (one EPG and one BD per VLAN) is trivial. Even when using contracts and applying the default contract to each (VLAN) EPG, it is not too difficult or time-consuming, and it doesn't use many resources (depending on the number of VLANs).

I can see the point that allowing this type of configuration would ease migration somewhat, but I believe that by following these constructs, customers will get a better understanding of ACI and make better use of its new capabilities down the road.
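The one-EPG-and-one-BD-per-VLAN approach lends itself to scripting. The Python sketch below assumes the APIC object-model class names `fvBD`, `fvAEPg`, `fvRsBd`, `fvRsPathAtt`, `fvRsProv`, and `fvRsCons`, and it only builds JSON-style dictionaries; it does not talk to an APIC. The object names, the `pod-1` path, and the `permit-any` contract (assumed to exist already) are hypothetical. Because each EPG gets exactly one encap on the trunk, the restriction from the original question never comes into play.

```python
import json


def vlan_as_epg_plan(vlans, node, port, contract="permit-any"):
    """Build one BD and one EPG per VLAN, all trunked on the same leaf port.

    Returns (bds, epgs) as lists of APIC-style JSON dictionaries.
    """
    bds, epgs = [], []
    for vlan in sorted(set(vlans)):
        bd_name, epg_name = f"bd-vlan{vlan}", f"epg-vlan{vlan}"
        bds.append({"fvBD": {"attributes": {"name": bd_name}}})
        epgs.append({"fvAEPg": {
            "attributes": {"name": epg_name},
            "children": [
                {"fvRsBd": {"attributes": {"tnFvBDName": bd_name}}},
                # One static path per EPG: a single encap on this interface
                {"fvRsPathAtt": {"attributes": {
                    "tDn": f"topology/pod-1/paths-{node}/pathep-[{port}]",
                    "encap": f"vlan-{vlan}",
                }}},
                # Every VLAN EPG provides and consumes the same permit-all contract
                {"fvRsProv": {"attributes": {"tnVzBrCPName": contract}}},
                {"fvRsCons": {"attributes": {"tnVzBrCPName": contract}}},
            ],
        }})
    return bds, epgs


if __name__ == "__main__":
    bds, epgs = vlan_as_epg_plan([503, 504], node=101, port="eth1/2")
    print(json.dumps(epgs, indent=2))
```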

04-25-2016 01:16 PM

Why on earth would this be the case? With separate EPGs, I need a contract between them. ACI should not dictate how and where I put my workloads. Why can VLANs 503 and 504 exist in the same EPG on different ports but not on the same port? Why?

04-26-2016 04:41 AM

Hello

Thanks for using SupportForums!

Leigh is correct, and here is the explanation.

The way ACI currently classifies traffic into EPGs is based on either the VLAN or the (port, VLAN) pair. Put simply, this means that an EPG = VLAN.

Precisely to your point, it's perfectly OK to use the same port (1/2, for example) to trunk different VLANs, but only if each VLAN belongs to a different EPG, which you have confirmed works. A contract is then used to allow communication between them.
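A simplified Python illustration of the classification described above: a frame is mapped to an EPG by an exact (port, VLAN) match first, then by VLAN alone. The table contents, the EPG names, and the match order are assumptions for illustration, not a description of the leaf hardware tables.

```python
from typing import Optional

# Hypothetical classification tables for one leaf.
PORT_VLAN_TO_EPG = {
    ("eth1/2", 503): "web-epg",
    ("eth1/2", 504): "web-504-epg",  # same trunk port, different VLAN -> different EPG
}
VLAN_TO_EPG = {
    600: "mgmt-epg",  # VLAN-only entry: matches on any port of this leaf
}


def classify(port: str, vlan: int) -> Optional[str]:
    """Return the EPG for a frame received on `port` with 802.1Q tag `vlan`."""
    epg = PORT_VLAN_TO_EPG.get((port, vlan))  # most specific match first
    if epg is None:
        epg = VLAN_TO_EPG.get(vlan)           # then fall back to VLAN-only
    return epg  # None means the frame is not classified into any EPG


if __name__ == "__main__":
    for port, vlan in [("eth1/2", 503), ("eth1/2", 504), ("eth1/7", 600), ("eth1/7", 503)]:
        print(port, vlan, "->", classify(port, vlan))
```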

I don't think it was anticipated that a single port would connect a single endpoint configured with two different subnets/VLANs that needed to communicate with each other or that provided a similar service.

In the case of an ESXi host, each port group needs a VLAN. Therefore, the same physical interface on the switch can trunk many VLANs, but they belong to different EPGs. The same goes for an external L2 device: the same port can trunk multiple VLANs to the external switch, but each External Bridged Network has its own EPG (or, if extending the EPG, each EPG has a static path to the port on a different VLAN). Bare-metal or physical servers don't really meet those conditions; they usually run a single OS with a single IP address. Even a Linux machine with bonds and such would be expected to have its individual interfaces in different VLANs, with different IP addresses in different subnets, which makes sense to classify into different EPGs.

What type of endpoint are you trying to connect that has two VLANs/subnets that should be classified as similar endpoints, if I may ask? We are more than happy to help find a solution for your configuration!

Looking forward to your response

04-26-2016 06:47 AM

So in essence, if we have an external Layer 2 switch with hosts on two separate VLANs (503 and 504, as Dodger is saying) and the fabric is the default gateway, then we can't put them in the same EPG and have to take the additional step of putting them into an any-any contract between the external VLANs.

Correct?

If so, when will this be corrected? I'll have several customers with this requirement as part of their migrations.

04-26-2016 07:01 AM

Hi jdanrich,

Contracts are placed between EPGs, not VLANs. So in your case, you could have one interface carrying VLANs 503 and 504. You would have two EPGs (one for each VLAN) in the same Bridge Domain, with the default gateway programmed on the Bridge Domain. Then you would apply contracts between the two EPGs to allow traffic to flow, or you could set the VRF to unenforced.
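The decision described in this reply can be sketched as a small Python function. It is deliberately simplified and the names (`Endpoint`, `is_allowed`) are hypothetical: intra-EPG traffic is allowed, inter-EPG traffic needs a contract between the two EPGs unless the VRF is unenforced, and contracts are treated as bidirectional permit-all rules rather than ACI's directional, filter-based contracts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    epg: str
    vrf: str


def is_allowed(src, dst, contracts, unenforced_vrfs=frozenset()):
    """Simplified policy check.

    contracts: set of (epg_a, epg_b) pairs that share a permit-all contract.
    """
    if src.vrf != dst.vrf:
        return False  # inter-VRF route leaking is out of scope here
    if src.epg == dst.epg:
        return True   # no enforcement within the same EPG
    if src.vrf in unenforced_vrfs:
        return True   # unenforced VRF: EPG-to-EPG traffic needs no contract
    return (src.epg, dst.epg) in contracts or (dst.epg, src.epg) in contracts


if __name__ == "__main__":
    h503 = Endpoint("host-a", "epg-vlan503", "lab-vrf")
    h504 = Endpoint("host-b", "epg-vlan504", "lab-vrf")
    print(is_allowed(h503, h504, contracts=set()))                             # no contract
    print(is_allowed(h503, h504, contracts={("epg-vlan503", "epg-vlan504")}))  # contract
    print(is_allowed(h503, h504, contracts=set(), unenforced_vrfs={"lab-vrf"}))
```

Note that two VLANs mapped into one EPG would hit the intra-EPG branch, so grouping them in a single EPG adds no segmentation between them.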

Is there a reason that you would need to place 2+ VLANs on the same interface in the same EPG or would the above scenario work?

04-26-2016 01:21 PM

Ipember,

The reason is fairly simple. Say I have a customer with two sets of switches that are spanned across one another, and both carry the same VLANs. Normally, the hosts in each VLAN talk to one another and to hosts in other VLANs. To make the migration to ACI smoother, I would extend that infrastructure into the fabric and have the fabric become the Layer 3 gateway for each inbound VLAN. Then a single EPG could be created to hold all of the hosts for the time being while they are migrated over. That way I don't need to use 4+ interfaces across the fabric to bring them into the network, and I don't need multiple EPGs up front for this migration activity.

This allows a quicker migration for customers who may have older, sketchy Cisco hardware that is going EoL and who want to get the initial migration steps started without making it complicated. ACI, based on what I've read, makes it complicated, and customers don't think it should be.

IMHO this should be a simple exercise that uses a limited number of interfaces and minimal configuration.

L8r

04-27-2016 10:19 AM

stcorry, it may be trivial to you, but customers who don't have the technical prowess that yours did (or do) will look at you and say, "I'd like this simpler so we can adapt over time", something your construct does not provide. As such, your view of trivial is not aligned with theirs when they are taking what they see as a "leap of faith": getting to a structure they already know first, and then migrating over to newer configurations and capabilities.

Now, if the answer from you and the other Cisco people in this thread is "Well, we can't do it that way because we're limited to doing it like this", then fine: we can explain that and tell them about this "limitation".

04-27-2016 10:49 AM

Hi jdanrich,

Currently it is not possible to map multiple encaps on the same interface to the same EPG. There are likely other ways to accomplish your goals as stcorry mentioned, but that specific functionality doesn't exist today.

If this is a functionality that you wish to have then I recommend that you reach out to your account team so that they can communicate your use case to the BU and drive this for you.

Hope that helps!

04-26-2016 06:53 AM

I am just in a lab, and I was thinking of a scenario where I had two web server VMs on the same subnet. They would typically be in the same EPG since they have the same policy. But suppose I want to "micro-segment" them using uEPG. The only way to do that would be to put them on separate VLANs to force traffic up to the leaf; otherwise, the vSwitch would allow traffic between them. If I had to actually put them in separate EPGs, then I would need to create a contract for each of those EPGs and the other EPGs they communicate with (External, APP), and it grows from there. These VMs may not always be on the same hypervisor, but they may be...

04-26-2016 06:58 AM

If you are trying to "micro-segment" the traffic by forcing it up to the leaf, placing both VLANs in the same EPG will not achieve that goal because there will be no traffic enforcement within the same EPG. So in that case the traffic would still be allowed between the two VLANs, but it would be done on the leaf and not the vSwitch. Are you referring to uSeg per this document? http://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/virtualization/b_ACI_Virtualization_Guide_1_2_1x/b_ACI_Virtualization_Guide_1_2_1x_chapter_01000.html

I ask because doing this won't require that you put two VLANs on the same interface in the same EPG. Just trying to get an understanding of your use case. Let me know if that makes sense!

04-26-2016 07:11 AM

It just seems like an arbitrary restriction. My VMs could move anywhere and be on any host in any port group. Except now they can't. If I have a VM on host 1 in a port group with VLAN 503 and a VM on host 2 in a port group with VLAN 504, they can be in the same EPG. But if the VM on host 2 gets vMotioned to host 1, it can no longer be in the same EPG, because that host is trunked to the same leaf port for both port groups.

04-26-2016 01:48 PM

I think it would be beneficial if we worked together on this issue one on one. I recommend you open a TAC case with the ACI team. I am in RTP (Eastern Time) and would be very happy to work with you to meet your requirements.

To answer your post: depending on how you do your configuration, your VMs will still be able to vMotion to any interface where there is a hypervisor in the VMM domain, as Gabe pointed out. It's actually a lot more dynamic and hands-off than you would expect!
