Re: Operation process in case of APIC cluster failure


04-09-2020 03:19 AM

In a Multi-Pod configuration with 4 pods, we have 1 APIC in pod1, 2 APICs in pod2, and 2 APICs in pod3: 5 clusters with 1 APIC each. I want to know the details of the operation process when one, two, or three APICs die. How many APICs must die before read/write is restricted? I am curious what the APICs will do as their number keeps decreasing by one. I know there is no service impact even when all APICs die. Also, if you have any data describing in detail how shards are distributed in a 5-APIC cluster, please share it. For reference, the number of leaves is 100 or fewer.


Accepted Solutions


04-09-2020 05:16 AM - edited 04-09-2020 05:57 AM

Hi,

I would like to start by making a small correction: if you are in a multi-pod environment, all APIC controllers are part of the same cluster. So in your scenario, you have 1 cluster with 5 controllers.

The general rule for APIC distribution in a Multi-Pod environment is to avoid placing more than two controllers in the same pod. As a guideline, you can refer to this:

The reason for this distribution is to avoid the total loss of the information in a shard. Now, what is a shard, you might ask? No worries, I got you:

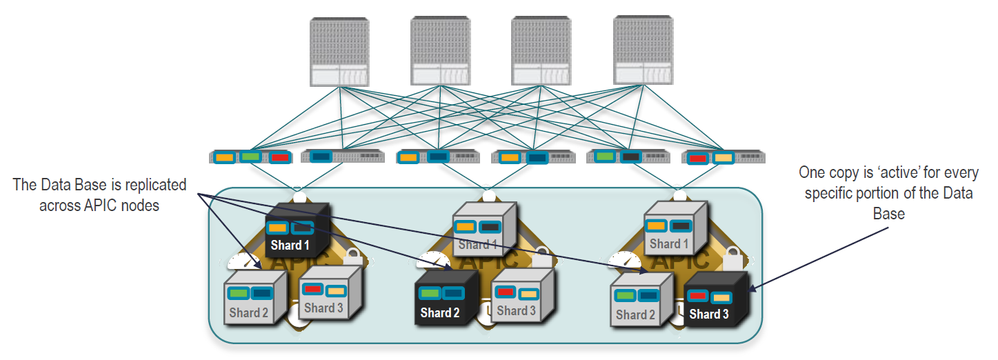

The APIC cluster uses a technique from large databases called sharding, a concept very similar to horizontal database partitioning, but better: it increases redundancy and performance because the database tables are split across servers, and smaller tables are replicated as complete units. Cisco APIC uses a replication factor of 3, meaning each shard has 3 replicas across the cluster.
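To make the distribution concrete, here is a hypothetical sketch that spreads 32 shards (the count `acidiag rvread` reports later in this thread) with 3 replicas each over a 3-node and a 5-node cluster. The round-robin placement in `place_replicas` is an assumption for illustration only; the real APIC placement logic is internal and may differ.

```python
"""Hypothetical sketch: spread 32 shards x 3 replicas over an APIC cluster.

Assumption: replicas of shard s go to APICs s, s+1, s+2 (mod cluster size).
This is NOT the documented APIC algorithm, just an illustration.
"""

NUM_SHARDS = 32          # shard count reported by `acidiag rvread` in this thread
REPLICATION_FACTOR = 3   # each shard has 3 replicas


def place_replicas(num_apics: int) -> dict[int, list[int]]:
    """Map each shard (1-based) to the 1-based APIC IDs holding its replicas."""
    placement = {}
    for shard in range(NUM_SHARDS):
        placement[shard + 1] = [
            (shard + r) % num_apics + 1 for r in range(REPLICATION_FACTOR)
        ]
    return placement


def shards_per_apic(placement: dict[int, list[int]]) -> dict[int, int]:
    """Count how many shard replicas each APIC holds."""
    counts: dict[int, int] = {}
    for apics in placement.values():
        for apic in apics:
            counts[apic] = counts.get(apic, 0) + 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    print(shards_per_apic(place_replicas(3)))  # 3 APICs: each holds all 32 shards
    print(shards_per_apic(place_replicas(5)))  # 5 APICs: each holds only a subset
```

Under this assumed placement, a 3-node cluster gives every APIC all 32 shards, while a 5-node cluster gives each APIC 18 to 20 of the 96 replicas. That is why a failure in a larger cluster can affect some shards and not others.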

To give you a visual idea of what sharding actually looks like, here is how it looks in a 3-node cluster:

In a cluster, only one replica of each shard is active, while the other two are standby. If one of the three replicas dies, the other two are still read-write. If two of the three die, the remaining replica becomes read-only. This is why, when 2 out of 3 controllers in an APIC cluster experience a hardware failure, the remaining APIC is in what is called a minority and becomes read-only.
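The per-shard rule above is a simple majority check. This minimal sketch encodes it as described in the post; the function name `shard_state` and the "lost" label for zero surviving replicas are illustrative, not APIC terminology.

```python
"""Minimal sketch of the per-shard majority rule described above.

Assumption: a shard stays writable only while a majority of its 3 replicas
(2 or more) is alive; 1 replica left means read-only, 0 means no copy left.
"""

REPLICATION_FACTOR = 3


def shard_state(live_replicas: int, replication_factor: int = REPLICATION_FACTOR) -> str:
    """Return the state of one shard given how many of its replicas survive."""
    if not 0 <= live_replicas <= replication_factor:
        raise ValueError("live_replicas must be between 0 and the replication factor")
    if live_replicas == 0:
        return "lost"          # no copy left anywhere in the cluster
    if live_replicas > replication_factor // 2:
        return "read-write"    # majority (2 of 3 or 3 of 3) still present
    return "read-only"         # minority: only 1 of 3 replicas left


for live in range(REPLICATION_FACTOR, -1, -1):
    print(f"{live} of {REPLICATION_FACTOR} replicas alive -> {shard_state(live)}")
```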

If you increase the number of APICs, the replication factor remains the same (3) and the shards are distributed across all APICs. Very importantly, some APICs might hold all shards, while others hold only a subset. Why is this important? Because in case of a failure, some shards might end up read-only while others remain read-write.

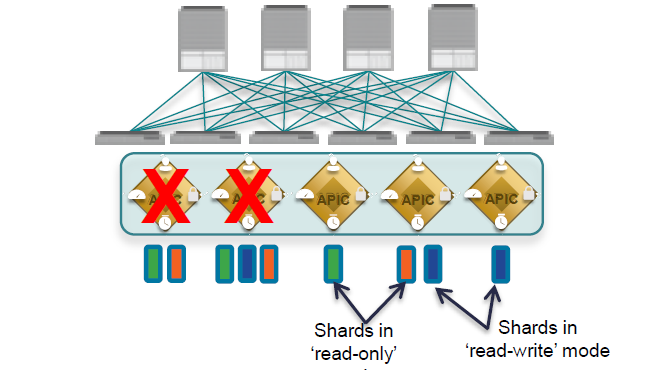
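The mixed read-only/read-write outcome can be reproduced with a small hypothetical model. It assumes 32 shards, 3 replicas each, a round-robin placement on APICs s, s+1, s+2 (an illustration, not the real APIC algorithm), and the 2-of-3 majority rule; `classify` is a made-up helper name.

```python
"""Hypothetical sketch: which shards go read-only when some APICs fail.

Assumptions (not from APIC documentation): 32 shards, 3 replicas each,
placed on APICs s, s+1, s+2 (mod cluster size); a shard is writable while
at least 2 of its 3 replicas are alive.
"""

NUM_SHARDS = 32
REPLICATION_FACTOR = 3


def place_replicas(num_apics):
    """Return {shard_id: set_of_apic_ids} using round-robin placement (1-based IDs)."""
    return {
        shard + 1: {(shard + r) % num_apics + 1 for r in range(REPLICATION_FACTOR)}
        for shard in range(NUM_SHARDS)
    }


def classify(num_apics, failed_apics):
    """Group shard IDs by state after the APICs in failed_apics go down."""
    states = {"read-write": [], "read-only": [], "lost": []}
    for shard, apics in place_replicas(num_apics).items():
        alive = len(apics - set(failed_apics))
        if alive == 0:
            states["lost"].append(shard)
        elif alive >= 2:
            states["read-write"].append(shard)
        else:
            states["read-only"].append(shard)
    return states


result = classify(5, failed_apics=[1, 2])
print({state: len(shards) for state, shards in result.items()})
# {'read-write': 19, 'read-only': 13, 'lost': 0}
```

With two neighbouring APICs down in this model, the cluster is split: 19 shards keep a majority and stay writable, while 13 drop to a single replica and become read-only.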

This is a bad scenario for a single-pod fabric, where some shards are read-only and others are read-write:

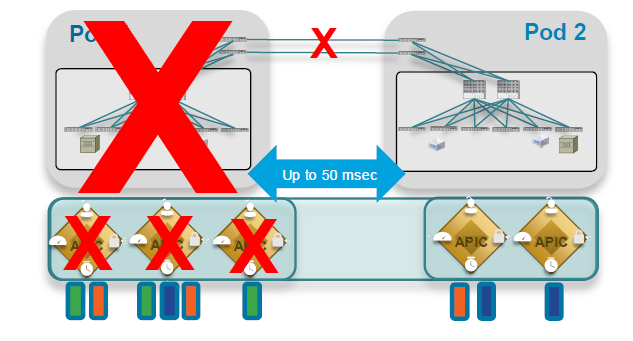

In your topology, the distribution is OK, as you have at most 2 APICs per pod.
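The "at most 2 APICs per pod" guideline follows from the replication factor: a pod with 2 APICs cannot hold all 3 replicas of a shard, so losing a whole pod cannot remove every copy. Here is a hypothetical check using the pod layout from the question; the helper names and the second layout are illustrative.

```python
"""Hypothetical check of the "at most 2 APICs per pod" guideline.

Reasoning: with 3 replicas per shard, a pod holding at most 2 APICs cannot
hold all 3 replicas of any shard, so losing a whole pod cannot lose a shard.
A pod with 3 or more APICs only makes such a loss possible; whether it
actually happens depends on where the replicas were placed.
"""

REPLICATION_FACTOR = 3


def max_apics_per_pod(layout: dict[str, int]) -> int:
    """Largest number of APICs found in any single pod."""
    return max(layout.values())


def pod_loss_could_lose_shard(layout: dict[str, int]) -> bool:
    """True if some pod hosts enough APICs to hold every replica of a shard."""
    return max_apics_per_pod(layout) >= REPLICATION_FACTOR


question_layout = {"pod1": 1, "pod2": 2, "pod3": 2}  # layout from the question
bad_layout = {"pod1": 3, "pod2": 2}                  # hypothetical: 3 APICs in one pod

print(pod_loss_could_lose_shard(question_layout))  # False
print(pod_loss_could_lose_shard(bad_layout))       # True
```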

Now that we have discussed sharding and shard distribution, we can talk about the potential loss of data in case of a hardware failure. How can that happen? Like this:

Here the "green" shard is lost. Why this happened is clear from the picture: all 3 APICs containing the green shard died.

If you have at most 2 APICs per pod, you eliminate this potential problem. However, if two APICs go down, there is still a chance that some shards go into read-only mode, but no data is lost.

If 3 out of 5 APICs fail, the chance of losing some shards is high. If you find yourself in a situation like this (or like the one illustrated above), you will need to contact TAC and the BU. The procedure is called ‘ID Recovery’ and is used to restore the whole fabric state to the latest configuration snapshot taken (if you have one).
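To see why two failures are survivable but three are risky, this hypothetical sketch enumerates every combination of failed APICs in a 5-node cluster and counts how many lose at least one shard. It assumes 32 shards with 3 replicas placed round-robin on APICs s, s+1, s+2; the real placement may differ, so the numbers are only illustrative.

```python
"""Hypothetical sketch: how often a 2- or 3-APIC failure loses a shard.

Assumptions: 5 APICs, 32 shards, 3 replicas each, round-robin placement on
APICs s, s+1, s+2 (mod 5). A shard is lost when all its replicas are down.
"""
from itertools import combinations

NUM_APICS = 5
NUM_SHARDS = 32
REPLICATION_FACTOR = 3


def replica_sets(num_apics=NUM_APICS):
    """Return one frozenset of APIC IDs (1-based) per shard, round-robin placed."""
    return [
        frozenset((shard + r) % num_apics + 1 for r in range(REPLICATION_FACTOR))
        for shard in range(NUM_SHARDS)
    ]


def lost_shards(failed):
    """Count shards whose replicas all sit on failed APICs."""
    failed = set(failed)
    return sum(1 for apics in replica_sets() if apics <= failed)


def losing_combinations(k):
    """Return (failure combinations that lose data, total combinations) for k failed APICs."""
    combos = list(combinations(range(1, NUM_APICS + 1), k))
    losing = [combo for combo in combos if lost_shards(combo) > 0]
    return losing, len(combos)


for k in (2, 3):
    losing, total = losing_combinations(k)
    print(f"{k} APICs down: {len(losing)} of {total} combinations lose at least one shard")
```

Under these assumptions no 2-APIC failure loses data, while half of the possible 3-APIC failures do.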

I hope I did not bore you with all these details, and that you find them useful.

Note: I took all these details and images from the following document:

Ciscolive presentation - BRKACI-2003 https://www.ciscolive.com/c/dam/r/ciscolive/emea/docs/2019/pdf/BRKACI-2003.pdf

Regards,

Sergiu


04-09-2020 06:13 AM

One thing I would like to add on top of what Sergiu already shared here:

The Cold Standby APIC feature was introduced in version 2.2x to cover exactly this type of scenario: if you lose 2 APICs at the same time due to lost connectivity to a pod that hosts 2 APICs, you can promote the standby APIC to an active APIC to bring the cluster back to a healthy state.
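A hypothetical sketch of why promoting the standby helps: it assumes a 3-node active cluster with two APICs in one pod and the third APIC plus the cold standby in another pod, and that the cluster is writable only while a majority of active APICs is reachable. The function names are illustrative and do not correspond to any APIC API.

```python
"""Hypothetical sketch: restoring a majority with a cold standby APIC.

Assumptions: 3 active APICs (2 in one pod, 1 plus a cold standby in another);
the cluster is writable only while a majority of active APICs is reachable;
promoting the standby replaces one unreachable active APIC.
"""

ACTIVE_CLUSTER_SIZE = 3


def cluster_state(reachable_active: int, cluster_size: int = ACTIVE_CLUSTER_SIZE) -> str:
    """Majority -> read-write, minority -> read-only."""
    return "read-write" if reachable_active > cluster_size // 2 else "read-only"


def after_pod_loss(apics_lost: int, standby_available: bool) -> tuple[str, str]:
    """State right after losing APICs, and after promoting the standby (if any)."""
    reachable = ACTIVE_CLUSTER_SIZE - apics_lost
    before = cluster_state(reachable)
    if standby_available and reachable < ACTIVE_CLUSTER_SIZE:
        reachable += 1  # standby takes over one of the unreachable active APIC roles
    return before, cluster_state(reachable)


print(after_pod_loss(apics_lost=2, standby_available=True))   # ('read-only', 'read-write')
print(after_pod_loss(apics_lost=2, standby_available=False))  # ('read-only', 'read-only')
```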


04-09-2020 10:58 AM

Thank you for your kind answer. I have some additional questions:

** Question 1

When a hardware failure of 3 or 4 out of 5 APICs causes some shards to be lost, are you saying that data traffic to the endpoints connected to the leaves could be lost and the service affected?

As far as we know, when all APICs are dead there is no service impact, because the control plane and data plane are separated in ACI.

** Question 2

I have seen in the documentation that one shard has three replicas. Then how many shards are in one APIC?

** Question 3

Up to 80 leaves can be managed with 3 APICs, and up to 300 leaves with 5 APICs.

We are currently running more than 112 leaves with 5 APICs.

If 1 or 2 APICs die, can we still manage more than 100 leaves?

I would like to know what is lost and how the cluster behaves when one, two, or three APICs die.


04-09-2020 01:04 PM

Hello,

** Question 1

When a hardware failure of 3 or 4 out of 5 APICs causes some shards to be lost, are you saying that data traffic to the endpoints connected to the leaves could be lost and the service affected?

As far as we know, when all APICs are dead there is no service impact, because the control plane and data plane are separated in ACI.

No. Data traffic will not be affected, regardless of how many APICs are down. What I mentioned is about the shards that can be affected.

** Question 2

I have seen in the documentation that one shard has three replicas. Then how many shards are in one APIC?

You can use the following command to see the number of shards (32), the replicas (3 for each shard), and their current state:

    apic1# acidiag rvread
    \ - unexpected state; / - unexpected mutator;
    s->   1   2   3   4   5   6   7   8   9  10  11  12  13  14  15  16  17  18  19  20  21  22  23  24  25  26  27  28  29  30  31  32  lcl
    r-> 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123 123  lcl
      1
      2
      3
      4
      5
      ...

The column on the left represents the services (policyelem, policymgr, eventmgr, etc.).

More details about the command can be found here: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/troubleshooting/Cisco-APIC-Troubleshooting-Guide-42x/Cisco-APIC-Troubleshooting-Guide-42x_appendix_010101.html

** Question 3

Up to 80 leaves can be managed with 3 APICs, and up to 300 leaves with 5 APICs.

We are currently running more than 112 leaves with 5 APICs.

If 1 or 2 APICs die, can we still manage more than 100 leaves?

I would like to know what is lost and how the cluster behaves when one, two, or three APICs die.

Once again, regardless of how many APICs are down, data plane / user data forwarding will not be affected. The problems discussed below relate to shards, the logical model (the config residing on the APICs), and APIC performance. When I say "down", I am referring to a hardware problem where the APIC needs to be replaced.

If 1 APIC goes down, I do not expect any problems.

If 2 APICs are down, again, no shards will be lost; the only thing that could happen is that the remaining APICs respond a bit slower, but as long as you do not make any config changes you should be fine. If you reach the stage of 2 APICs down, you will in any case need to recover the APICs that are down.

If 3 APICs are down, you will experience two things: the remaining APICs will be read-only, and there is a very high chance of losing some shards. Here you will need to contact TAC directly. Do not try to recover the APICs by yourself.

If 4 APICs are down, I am not quite sure if this is recoverable. However, TAC should still be involved.

If 5 APICs are down... well, this is a scenario that is hard to imagine. If you reach this stage, you will need to recover the hard way. I explained the recovery and the different scenarios here: https://community.cisco.com/t5/application-centric/all-apic-controllers-in-fabric-go-down/td-p/4053569
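To put the failure ladder above into numbers, here is a hypothetical worst-case model of a 5-APIC cluster losing controllers one at a time. It assumes 32 shards, 3 replicas each, round-robin placement on APICs s, s+1, s+2, and the 2-of-3 majority rule; "lost" here means no APIC still holds a replica of that shard. The data plane is not modelled, since per this thread forwarding is unaffected regardless of how many APICs are down.

```python
"""Hypothetical worst-case summary as APICs fail one by one in a 5-node cluster.

Assumptions: 32 shards, 3 replicas each, round-robin placement on APICs
s, s+1, s+2 (mod 5); a shard is read-write with >= 2 live replicas,
read-only with 1, and lost (no APIC replica left) with 0.
"""
from itertools import combinations

NUM_APICS = 5
NUM_SHARDS = 32
REPLICATION_FACTOR = 3

PLACEMENT = [
    {(s + r) % NUM_APICS + 1 for r in range(REPLICATION_FACTOR)}
    for s in range(NUM_SHARDS)
]


def worst_case(failed_count):
    """Return (max read-only shards, max lost shards) over all failure combinations.

    The two maxima may come from different combinations of failed APICs.
    """
    worst_ro = worst_lost = 0
    for failed in combinations(range(1, NUM_APICS + 1), failed_count):
        alive_counts = [len(apics - set(failed)) for apics in PLACEMENT]
        worst_ro = max(worst_ro, alive_counts.count(1))
        worst_lost = max(worst_lost, alive_counts.count(0))
    return worst_ro, worst_lost


for down in range(1, NUM_APICS + 1):
    ro, lost = worst_case(down)
    print(f"{down} down: up to {ro} read-only shards, up to {lost} lost shards")
```

In this model one failure changes nothing, two failures can leave some shards read-only without losing any (in line with the earlier remark about two APICs going down), and from three failures onward shards can be lost, which is where TAC involvement and recovery come in.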

Regards,

Sergiu
