- VMM integration fails after APIC crash

09-12-2019 09:50 AM

Hi,

In a lab environment, our only APIC crashed for no known reason (so far) and we had to restart it. After that, everything seems to work well except the VMM vCenter integration. The faults we get are:

F16438

[FSM:STAGE:RETRY:]: Connect stage for VM Controller: VCENTER1 VM Domain: VCENTER1 VM Provider: VMware Error: Timeout(FSM-STAGE:ifc:vmmmgr:CompCtrlrAdd:Connect)

F606262

Add-FSM for VM Controller: VCENTER1 VM Domain: VCENTER1 VM Provider: VMware Error: Failed to retrieve ServiceContent from the vCenter server

and also F0130:

Connection to VMM controller: 10.10.10.10 with name VCENTER1 in datacenter DC1 in domain: VCENTER1 is failing repeatedly with error: [Failed to find datacenter DC1 in vCenter]. Please verify network connectivity of VMM controller 10.10.10.10 and check VMM controller user credentials are valid.

However, the vCenter is reachable and the credentials are correct.
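To pull just these three faults instead of scrolling through the GUI, a class-level query on `faultInst` can be filtered by fault code. The sketch below only builds the URL; it assumes the APIC REST API's `query-target-filter` syntax, uses a hypothetical host name `apic.example`, and leaves out authentication (a session from `aaaLogin` would be needed before the GET).

```python
from typing import List
from urllib.parse import quote

# Fault codes quoted in this post.
FAULT_CODES = ["F16438", "F606262", "F0130"]


def fault_query_url(apic_host: str, codes: List[str]) -> str:
    """Build a faultInst class query URL that matches any of the given codes."""
    if not codes:
        raise ValueError("at least one fault code is required")
    clauses = ['eq(faultInst.code,"{}")'.format(code) for code in codes]
    # A single clause stands alone; several clauses are wrapped in or(...).
    flt = clauses[0] if len(clauses) == 1 else "or(" + ",".join(clauses) + ")"
    # Percent-encode the double quotes but keep the filter punctuation readable.
    encoded = quote(flt, safe="(),.")
    return "https://{}/api/class/faultInst.json?query-target-filter={}".format(
        apic_host, encoded
    )


if __name__ == "__main__":
    # apic.example is a placeholder, not a real controller.
    print(fault_query_url("apic.example", FAULT_CODES))
```

The returned URL can then be fetched with whatever authenticated HTTP client you already use against the APIC.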

In the APIC we can see some errors in the log file /var/log/dme/log/svc_ifc_vmmmgr.bin.log:

20174||19-09-12 18:28:56.110+02:00||ifm||DBG3||to=ifc_eventmgr:2:1:3:0,co=ifm||peer acknowledged the reception of msgid 0xa19154d40928 (envelope 0x8000000002126)||../common/src/ifm/./Protocol.cc||759

18212||19-09-12 18:28:56.110+02:00||vmmConfigUpdate__||DBG4||fr=ifc_vmmmgr:2:1:14:0:6:3,to=ifc_vmmmgr:2:1:14:0:6:3,co=doer:6:3:0x300000000040b8d5:1,si=0x10e063a17af884:0 ms||(envelope 0x8000000002127: RECEIVE-SINGLE:REQUEST[vmmConfigUpdate/]) CONTENT :

<vmmConfigUpdate rule="8196" config="0" result="0" cookie="" transactionId="4750318142945412" function="0" srcContext="" destContext="comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1" destContextType="1275" userName="" isAdminToken="false" isRemoteUser="false" unixUserId="0" errorCode="0" errorDescr="" senderTermId="0" reservedInt1="2636025409" reservedInt2="0" reservedInt3="0" reservedInt4="0">

<inCtrlrDn value="comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1"/>

<inErrCode value="ERR-connect"/>

<inAction value="0"/>

<inCtxt>

<vmmCtxt childAction="" ctrlrDn="comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1" descr="" dn="" guid="" id="0" issues="" lcOwn="local" modTs="never" name="" nameAlias="" oid="" replicaId="3" rn="" runId="5" shardId="6" status="" trigDn="action/vmmmgrsubj-[comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1]/compCtrlrFsm-Add" trigId="31" trigT="fsm" uuid=""/>

</inCtxt>

<inConfigs>

<compCtrlr accessMode="read-write" apiVer="" aveSwitchingActive="no" aveTimeOut="30" childAction="" ctrlKnob="epDpVerify" ctrlrPKey="" deployIssues="" descr="" dn="comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1" domName="" dvsVersion="unmanaged" enableAVE="no" enableTag="no" epRetTime="0" hostOrIp="" id="0" inventoryStartTS="never" inventoryTrigSt="untriggered" issues="" key="" lagPolicyName="" lastEventCollectorId="datacenter" lastEventId="0" lastEventTS="0" lastInventorySt="completed" lastInventoryTS="never" lcOwn="local" maxWorkerQSize="defaultQueueSize" modTs="never" mode="default" model="" monPolDn="" name="" nameAlias="" operSt="unknown" port="0" remoteErrMsg="Timeout" remoteOperIssues="" rev="" rn="" rootContName="" scope="vm" ser="" setDeployIssues="" setRemoteOperIssues="" status="" unsetDeployIssues="" unsetRemoteOperIssues="" usr="" vendor="" vspherePHA="no" vsphereTag="no" vxlanDeplPref="vxlan"/>

</inConfigs>

<inEvtRec/>

</vmmConfigUpdate>||../common/src/framework/./core/proc/Stimulus.cc||1083

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||DBG4||co=doer:6:3:0x300000000040b8d5:1||applyConfigSet||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Common.cc||165

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||DBG4||co=doer:6:3:0x300000000040b8d5:1||Applying Config:

<compCtrlr accessMode="read-write" apiVer="" aveSwitchingActive="no" aveTimeOut="30" childAction="" ctrlKnob="epDpVerify" ctrlrPKey="" deployIssues="" descr="" dn="comp/prov-VMware/ctrlr-[VCENTER1]-VCENTER1" domName="" dvsVersion="unmanaged" enableAVE="no" enableTag="no" epRetTime="0" hostOrIp="" id="0" inventoryStartTS="never" inventoryTrigSt="untriggered" issues="" key="" lagPolicyName="" lastEventCollectorId="datacenter" lastEventId="0" lastEventTS="0" lastInventorySt="completed" lastInventoryTS="never" lcOwn="local" maxWorkerQSize="defaultQueueSize" modTs="never" mode="default" model="" monPolDn="" name="" nameAlias="" operSt="unknown" port="0" remoteErrMsg="Timeout" remoteOperIssues="" rev="" rn="" rootContName="" scope="vm" ser="" setDeployIssues="" setRemoteOperIssues="" status="" unsetDeployIssues="" unsetRemoteOperIssues="" usr="" vendor="" vspherePHA="no" vsphereTag="no" vxlanDeplPref="vxlan"/>||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Common.cc||204

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||DBG4||co=doer:6:3:0x300000000040b8d5:1,dn='"D/QcAAAcAVk13YXJlAAkAVkNFTlRFUjEACQBWQ0VOVEVSMQA="'||Mo Dn ||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Common.cc||212

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||INFO||co=doer:6:3:0x300000000040b8d5:1||processStimulus - Received errorCode: 1572||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Manager.cc||578

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||INFO||co=doer:6:3:0x300000000040b8d5:1||Received errorCode: 1572||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Manager.cc||501

18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||INFO||co=doer:6:3:0x300000000040b8d5:1||Received stimulus for FSM context||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Manager.cc||510

18212||19-09-12 18:28:56.111+02:00||ifc_vmmmgr||INFO||co=doer:6:3:0x300000000040b8d5:1||Calling AsyncFailManual||../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Manager.cc||523
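The single-line entries above are `||`-delimited with eight fields, so they are easy to filter with a short script. This is a minimal sketch: the field names (`ident`, `context`, and so on) are my own labels, not Cisco's, and multi-line entries such as the XML dump are simply skipped.

```python
from typing import Iterable, List, NamedTuple, Optional


class LogEntry(NamedTuple):
    ident: str          # first field; assumed to be a process or thread id
    timestamp: str
    module: str
    level: str
    context: str
    message: str
    source_file: str
    source_line: int


def parse_line(line: str) -> Optional[LogEntry]:
    """Parse one single-line log entry; return None for anything else."""
    parts = line.rstrip("\n").split("||")
    if len(parts) != 8 or not parts[7].isdigit():
        return None
    return LogEntry(*parts[:7], int(parts[7]))


def error_codes(lines: Iterable[str]) -> List[str]:
    """Collect the values that follow 'errorCode:' in entry messages."""
    codes = []
    for line in lines:
        entry = parse_line(line)
        if entry and "errorCode:" in entry.message:
            codes.append(entry.message.split("errorCode:", 1)[1].strip())
    return codes


if __name__ == "__main__":
    sample = (
        "18212||19-09-12 18:28:56.110+02:00||ifc_vmmmgr||INFO||"
        "co=doer:6:3:0x300000000040b8d5:1||Received errorCode: 1572||"
        "../svc/vmmmgr/src/gen/ifc/app/./imp/vmm/Manager.cc||501"
    )
    print(error_codes([sample]))
```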


We've already restarted the VCSA appliance and redone the VMM integration with ACI, but we get the same result.

I'd appreciate any clue.

Thanks.


Accepted Solutions


09-19-2019 02:36 PM

Hi @HelenaC ,

With VMM integration you have to remember two important things:

- Everything you see in ACI is a reflection of what vCenter sees; you can't change anything once it has been created

- The only influence the APIC has over vCenter is:

- Whenever you create a VMM Domain, the APIC will create a Distributed vSwitch (typically a VMware Distributed Switch or VDS - let's not get distracted by the AVS)

- Every time you associate an EPG with that VMM Domain, a new port group gets created on that VDS. This port group will

- get the name in the format Tenant|ApplicationProfile|EndPointGroup

- be allocated a VLAN ID from the VLAN Pool linked to the VMM Domain
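As a quick illustration of the naming format above, here is a minimal sketch that builds and splits a port group name. The tenant, application profile and EPG names are made up, and the `|` delimiter is taken from the format described above.

```python
from typing import Tuple


def port_group_name(tenant: str, app_profile: str, epg: str,
                    delimiter: str = "|") -> str:
    """Join the three ACI names into a port group name."""
    return delimiter.join((tenant, app_profile, epg))


def split_port_group_name(name: str,
                          delimiter: str = "|") -> Tuple[str, str, str]:
    """Split a port group name back into (tenant, app_profile, epg)."""
    parts = name.split(delimiter)
    if len(parts) != 3:
        raise ValueError("not an EPG-style port group name: {!r}".format(name))
    return parts[0], parts[1], parts[2]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    name = port_group_name("Prod", "WebShop", "Frontend")
    print(name)
    print(split_port_group_name(name))
```

This is handy for spotting which port groups in vCenter were created by the APIC: anything that does not split into three parts was probably created by hand.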

If your VMM Domain association gets corrupted, here is how you repair it

Step 1: Remove VMs from portgroups

- in vCenter, find every VM that has a NIC that has been assigned to an EPG style portgroup

- move the VM to another portgroup not associated with the DVS, like VM Network (in our setup we have created a special portgroup called isolated just for VMs that have no EPG to join yet)

This step ensures you don't see errors in vCenter when you perform Step 2
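Before moving anything, it helps to write down which NIC was on which port group, because you will need that list again when you reassign the VMs at the end. Below is a minimal sketch of such a record. It assumes you have already exported the inventory as `(vm, nic, port_group)` tuples by whatever means you prefer; it makes no vCenter API calls, and the VM names are invented.

```python
import json
from typing import Dict, Iterable, List, Tuple


def build_move_plan(nics: Iterable[Tuple[str, str, str]],
                    parking_port_group: str = "isolated") -> List[Dict[str, str]]:
    """Record every NIC on an EPG-style port group and where to park it."""
    plan = []
    for vm, nic, port_group in nics:
        # EPG-style port groups look like Tenant|ApplicationProfile|EndPointGroup.
        if port_group.count("|") == 2:
            plan.append({
                "vm": vm,
                "nic": nic,
                "restore_to": port_group,
                "park_on": parking_port_group,
            })
    return plan


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only.
    inventory = [
        ("web01", "Network adapter 1", "Prod|WebShop|Frontend"),
        ("web01", "Network adapter 2", "VM Network"),
        ("db01", "Network adapter 1", "Prod|WebShop|Database"),
    ]
    print(json.dumps(build_move_plan(inventory), indent=2))
```

Save the JSON output somewhere safe; the `restore_to` column is your checklist for the final step.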

Step 2: Remove the VMM Domain associations [Optional, but makes it clean]

- Go to each EPG that has been associated with this VMM Domain

- Remove the VMM Domain association. This will make the APIC ask vCenter to remove the portgroup from the VDS. This may cause some errors to appear in vCenter if you did not complete Step 1 properly.

Step 3: Remove VMM Association

On the APIC, delete the VMM Domain.

- This will make the APIC ask vCenter to remove the entire VDS. This will cause some errors to appear in vCenter if you did not complete Step 2.

- If you DID skip step 2, or for some reason the VDS still remains in vCenter, manually remove the VDS in vCenter. You will NOT be able to do this if you did not complete step 1 correctly.

Step 4: Re-create the VMM Domain

- On the APIC, re-create your VMM Domain. Make sure you

- choose the correct VDS type and

- use the correct (case-sensitive) VMware Datacenter name

- (and don't ever change the datacenter name in vCenter from then on. If you do, go back to Step 1.)

- Check vCenter to see that the VDS gets created.

Step 5: Re-associate the EPGs

If you DID skip Step 2 above, the first item below is not necessary, so long as the VMM Domain name is EXACTLY the same (you'll find out when you check the second item below)

- On the APIC, re-assign each EPG to the VMM Domain.

- As you do this, check vCenter to see that a new portgroup gets created on the VDS each time you associate an EPG to the VMM Domain

Step 6: Re-assign the VMs to the portgroups

- In vCenter, find each VM you previously removed from an ACI EPG Portgroup and reassign it.

- As you do this, go to Tenant > Application Profiles > ApplicationProfile > Application EPGs > EPG > [Operational] tab and check that the VMs appear in the list.

Job Done. Have a coffee.

I hope this helps

Don't forget to mark answers as correct if it solves your problem. This helps others find the correct answer if they search for the same problem

Forum Tips: 1. Paste images inline - don't attach. 2. Always mark helpful and correct answers, it helps others find what they need.


09-16-2019 12:19 AM

If you remove and recreate the integration with new names, does it come up then? That was the workaround for a similar issue.


09-19-2019 10:02 AM

Hi,

Thanks for your reply. I tried that but got the same faults. Later I found that the APIC was having problems with the lldpad process. To solve this issue I had to upgrade the fabric. For a while it seemed to work, but when I finished the upgrade process the vCenter went offline. So I will try the procedure again, now that I don't have the LLDP problem.

Regards,


09-16-2019 01:04 AM

Hello @HelenaC ,

If you made sure that:

- The connectivity is working and, if you are using names, DNS is working as well.

- You have the right credentials.

- There are no firewalls blocking TCP 443.

- The datacenter name is the correct one.

Then, since this is a lab, you can try restarting the VMM Manager process in case it didn't come up properly after the reboot (only because it is a lab):

acidiag restart vmmmgr

In an ideal world this would not happen on a cluster of APICs. My concern is that this is the only APIC. I would not expect the vCenter to still have the DVS created, but can you double-check that it is not there? If it is, and you are using the same name, can you delete it from vCenter?

I am suggesting these disruptive ideas because this is a lab; in another scenario we would need to check the full VMM logs and take it from there.
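The DNS and TCP 443 items in the checklist above can be sketched with nothing but the Python standard library. Keep in mind this only proves reachability from the machine where the script runs, not from the APIC itself, and it says nothing about credentials or the datacenter name. The address in the example is the one from the fault message.

```python
import socket
from typing import List


def resolve(host: str) -> List[str]:
    """Return the addresses a host name resolves to (empty list on failure)."""
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return []
    return sorted({info[4][0] for info in infos})


def tcp_port_open(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    vcenter = "10.10.10.10"  # controller address taken from the F0130 fault text
    print("resolves to:", resolve(vcenter))
    print("TCP 443 open:", tcp_port_open(vcenter))
```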

Alejandro Avila Picado .:|:.:|:.


09-19-2019 10:10 AM

Thanks, Alejandro, for your reply. I also tried restarting the vmmmgr process but it is still not working. I'm going to test adding a new vCenter with a new name and IP.

Regards


09-20-2019 09:53 AM - edited 09-20-2019 09:54 AM

Hi Chris,

After doing more troubleshooting I still haven't found the cause of my issue, but at least I found a workaround. The ESXi server is installed on a UCS C-Series integrated with UCS Manager. The fabric interconnects are connected to the leafs with vPCs.

Since the vCenter came online for a while during the fabric upgrade and then went offline again, I realized that it stopped working when one of the leafs was rebooting, let's say leaf-1, and all the traffic was flowing through leaf-2. What I did then was shut down the interfaces connecting leaf-2 to the Fabric Interconnects, and then it started working. So it works when the active connections of the APIC and the FIs are on the same leaf.

The strange thing is that ping worked all the time. Now I have to figure out what's wrong with leaf-2, but that's another story.

Anyway, I've marked your answer as correct because I think it's a very good summary and, as you said, it may help others.

Regards,


09-20-2019 03:25 PM

Hi @HelenaC ,

OK. So your problem seems to be related to something in the chain between UCS<->FI<->ACI_Leaf.

And there are quite a few things to consider here.

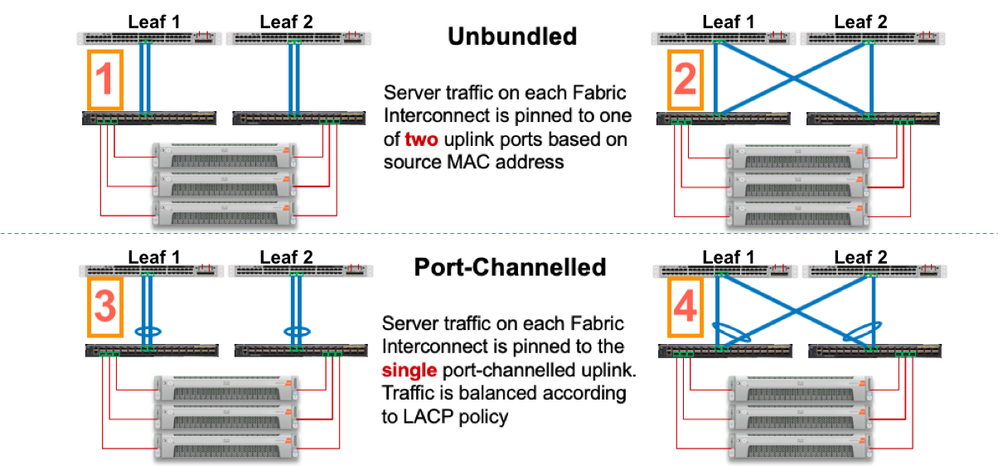

- How are the leaves connected to the FIs? The diagram shows four options

- Options 1 and 2 require ACI to be configured with Access Port Policy Groups (although you could use Port Channel/VPC policy groups with the Mac-Pinning option)

- Option 3 requires ACI to be configured with Port Channel Policy Groups with LACP on

- Option 4 requires ACI to be configured with VPC Policy Groups with LACP on

- How are the vSwitches inside the ESXi hosts configured? [Sorry, don't have a diagram for that]. When ESXi vSwitches send traffic to FIs, it is very important that the same MAC address ALWAYS lands on the same FI.

- if the ESXi vSwitches are configured with a SINGLE UPLINK, then all the traffic for that host will always hit the same FI. For load balancing, you would configure half the servers to connect to FI-A, half to FI-B

- if the ESXi vSwitches are configured with dual uplinks, one to FI-A and one to FI-B, then all the traffic for that host will be balanced according to the vSwitch load balancing method

- Using Route Based on Source MAC Hash or Route Based on Originating Virtual Port will ensure that the same MAC hits the same FI each time.
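To show why a MAC-based method keeps each MAC on one FI, here is a toy sketch of deterministic uplink selection. This is NOT VMware's actual hashing algorithm, just an illustration of the idea that the uplink is a pure function of the source MAC; the uplink labels and MAC addresses are invented.

```python
from typing import Sequence


def uplink_for_mac(mac: str, uplinks: Sequence[str]) -> str:
    """Pick an uplink from the MAC alone, so the same MAC always maps to the same uplink."""
    if not uplinks:
        raise ValueError("at least one uplink is required")
    # Treat the MAC as one integer and take it modulo the uplink count.
    value = int(mac.replace(":", "").replace("-", ""), 16)
    return uplinks[value % len(uplinks)]


if __name__ == "__main__":
    # Hypothetical uplink labels: one vmnic towards each fabric interconnect.
    uplinks = ["vmnic0 (FI-A)", "vmnic1 (FI-B)"]
    for mac in ("00:50:56:aa:bb:01", "00:50:56:aa:bb:02", "00:50:56:aa:bb:01"):
        print(mac, "->", uplink_for_mac(mac, uplinks))
```

A per-flow method (for example one that also hashes on destination IP) would not have this property, which is when a MAC can appear to flap between FI-A and FI-B.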

I'm not sure if this is related to your problem, but it gives you a couple of questions you need to investigate.


09-23-2019 07:44 AM

Hi Chris,

The connection between the leafs and the FIs is option 4. I have to check the vSwitch; I really didn't pay attention to that and it may be the problem. I have to find time to check all the configuration.

Thank you very much for help.
