- Re: Cisco IOS-XE Crashinfo Analyzer is currently unavailable [471]

05-18-2022 01:56 AM

Hi

I'm having issues with CLI Analyzer when running Crashinfo Analyzer for a WS-C3650-48PD running 16.9.4.

I get the message "Cisco IOS-XE Crashinfo Analyzer is currently unavailable [471]".

I've tried this on different PCs with different versions of CLI Analyzer (3.6.7 and 3.6.8) over the last few weeks. I eventually sent the crashinfo file to our Cisco support partner, who ran a file analysis with CLI Analyzer with no issue.

I contacted Cisco web-help about getting this resolved. They sent me to Customer Service hub who referred me back to Cisco web-help who then wanted a service contract number to proceed.

Am I right in saying that I can run Crashinfo Analyzer on a switch whether the switch is on a maintenance contract or not? If so, does anyone have any tips on getting the CLI Analyzer working with my CCO?

Thanks

Andy

Accepted Solutions

05-19-2022 02:38 AM

@andrewswanson wrote:

does the field notice only apply with the direct method?

Yes.

@andrewswanson wrote:

I've been testing 16.12.07 in a dev environment and it's looking good so far.

1. Upgrade to the latest 16.6.X or 16.9.X. 16.12.X is not worth the hassle.

2. Whatever firmware you pick, always reboot the switches once a year. Always.

05-19-2022 10:36 AM

@andrew as a follow-up to your original question: the "Cisco IOS-XE Crashinfo Analyzer is currently unavailable [471]" error you were receiving with the Crashinfo Analyzer has been resolved, and the tool should now work. That said, the "crash" you describe sounds like a loss of connectivity, where IOS-XE didn't actually reset and so didn't create a crashinfo or core file. If that is the case, the Crashinfo Analyzer will not help you, as the purpose of this tool is simply to upload crashes in the traditional (reset) sense of the word "crash". On the loss of connectivity due to the increased load on the device, @Leo Laohoo is right: the message you saw on the device is an alert from the system indicating that utilization is already elevated, and it can serve as a "last gasp" when memory utilization begins increasing. In a situation like that, determining what is using the memory is vital. If the system is rebooted manually while in this state, that information is lost, so tracking memory utilization over time becomes important. If you need further help with that, the support forums or the TAC can help!

05-18-2022 02:03 AM

Please post the crashinfo file so I can have a look.

05-18-2022 02:19 AM

Thanks for the reply - see attached for crashinfo

We had an issue with 2 switch stacks that crashed. We have 802.1x enabled on these switches using ibns2 - the event violation was set to "restrict", which resulted in a large number of "%AUTHMGR-5-SECURITY_VIOLATION" syslogs. I was hoping the crashinfo would point to a bug regarding this.

Our Support partner ran the analysis ok but this didn't point to any known issue.

Thanks

Andy

05-18-2022 04:56 AM

Hmmmm ... 16.9.4.

In the "flash:/core" directory are there any recently-created tar.gz files?

Can you share the complete output of the following commands:

1. sh version

2. sh log

3. sh crypto pki trustpool | i cn=

4. sh log on switch act up detail

05-18-2022 05:55 AM

See attached for requested output.

The "Critical software exception" reason for reload in the attached output started to occur at the same time we started to see "%AUTHMGR-5-SECURITY_VIOLATION" syslogs - we also saw syslogs at these times like the one below:

%PLATFORM-3-ELEMENT_CRITICAL: Switch 6 R0/0: smand: 6/RP/0: Used Memory value 99% exceeds critical level 95%
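For anyone sifting through a large "sh log" export for these alerts, here is a minimal Python sketch that pulls the switch number and the memory percentages out of each one. The pattern is modeled only on the single syslog line quoted above, and the function and field names are my own, so treat it as an assumption that may need adjusting for other platforms or releases.

```python
import re

# Pattern modeled on the one syslog line quoted in this thread; other
# platforms or releases may word the message differently (assumption).
ELEMENT_CRITICAL = re.compile(
    r"%PLATFORM-3-ELEMENT_CRITICAL: Switch (?P<switch>\d+) .*?"
    r"Used Memory value (?P<used>\d+)% exceeds critical level (?P<limit>\d+)%"
)


def parse_memory_alerts(log_text):
    """Return one dict per ELEMENT_CRITICAL memory alert found in log_text."""
    alerts = []
    for line in log_text.splitlines():
        match = ELEMENT_CRITICAL.search(line)
        if match:
            alerts.append({
                "switch": int(match["switch"]),
                "used_pct": int(match["used"]),
                "critical_pct": int(match["limit"]),
            })
    return alerts


# The syslog line from the post above, used as sample input.
sample = (
    "%PLATFORM-3-ELEMENT_CRITICAL: Switch 6 R0/0: smand: 6/RP/0: "
    "Used Memory value 99% exceeds critical level 95%"
)
print(parse_memory_alerts(sample))
```

Counting the alerts per switch member over time gives a rough picture of which member is running out of memory first.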

I've unpatched the clients that were causing the "%AUTHMGR-5-SECURITY_VIOLATION" syslogs and the switch is stable now.

We're looking to upgrade to 16.12.07 in the near future but I wanted to check whether we were seeing a known bug with the security violations. If it isn't a bug, I'll look at changing the violation event from "restrict" to "protect" to prevent the excessive logging.

Thanks

Andy

05-18-2022 06:06 AM

Forgot to add that there is nothing recent in the "flash:/core" folder

05-18-2022 04:03 PM - edited 05-18-2022 04:08 PM

@andrewswanson wrote:

%PLATFORM-3-ELEMENT_CRITICAL: Switch 6 R0/0: smand: 6/RP/0: Used Memory value 99% exceeds critical level 95%

Usually, if you see this message it is already too late. No matter what action is taken (other than a cold or warm reboot), the memory utilization will not come down. Either cold or warm reboot the entire stack or wait for it to crash.

Can I see the complete output of the following commands:

- sh platform resource

- sh platform software status con brief

Ok, I have a hunch. But first, a word from our sponsors:

1. The output to the command "sh log on switch active uptime details" is a "WOW!" moment. It tells me that the switch master has been constantly crashing.

2. The output to the "sh version" tells me that this is a stack.

3. The output to the "sh crypto pki trustpool | i cn=" tells me the switch is affected by FN - 72323 - Cisco IOS XE Software: QuoVadis Root CA 2 Decommission Might Affect Smart Licensing, Smart Call Home, and Other Functionality
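If you have to check the trustpool on a lot of switches, a small Python sketch like the one below can list the distinct "cn=" values from saved "sh crypto pki trustpool | i cn=" output so they can be compared against the field notice. It only extracts names and does not decide whether a device is affected. The sample text is hypothetical (only the QuoVadis name comes from this thread), and the real output layout may differ by release.

```python
import re


def trustpool_common_names(output):
    """Return the distinct cn= values found in the command output, in order."""
    names = []
    for match in re.finditer(r"cn=([^,\r\n]+)", output, flags=re.IGNORECASE):
        name = match.group(1).strip()
        if name not in names:
            names.append(name)
    return names


# Hypothetical saved output; "Example Root CA" is a made-up placeholder.
sample_output = """\
    cn=QuoVadis Root CA 2
    cn=Example Root CA
    cn=QuoVadis Root CA 2
"""
print(trustpool_common_names(sample_output))
```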

Judging from this, I suspect the stack has a memory leak affecting the "keyman" process. During normal operation, the "keyman" process uses less than 10k. Any switch/stack hit with the QuoVadis issue will see the "keyman" process balloon to more than 100k.

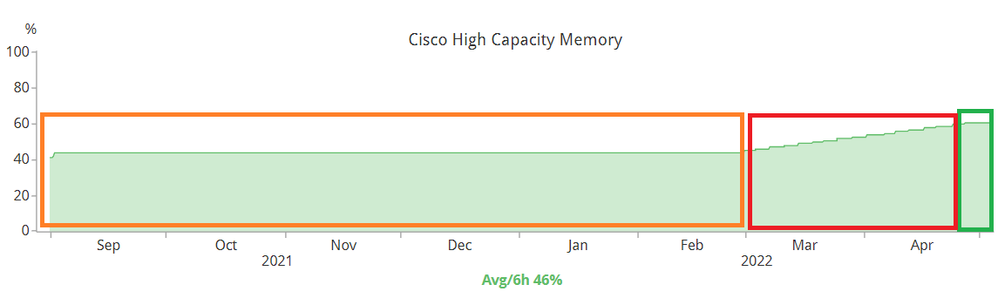

Look at the picture above. This is a graph of the control-plane memory of a single 3850 switch member of a stack (16.12.4). The box in orange is a "normal" 3850 memory operation. When the QuoVadis certificate expired, all our 3850s, 9300s, 9500s and routers experienced a memory spike (RED BOX), and all of them pointed to the "keyman" process. About three days AFTER I applied the workaround, the utilization stabilized.

NOTE: If this switch were a standalone, the memory leak would not be so severe. Because this is a stack, the memory leak gets "accelerated" or compounded. It is well known that any stacked switch running IOS-XE will get a memory leak in the stack-mgr process. Unfortunately, this is "normal" behaviour.
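A trend like the one in the graph can be estimated from periodic readings of a process's memory. The Python sketch below fits a straight line to (hours, memory) samples and projects when a threshold would be reached. The readings are hypothetical, the function names are my own, and the 100k threshold is simply the "keyman" figure mentioned above; a real leak may not grow linearly.

```python
def growth_per_hour(samples):
    """Least-squares slope of (hours, memory) samples, in memory units per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("samples must cover more than one point in time")
    return num / den


def hours_until(samples, threshold):
    """Projected hours from the last sample until memory reaches threshold.

    Returns 0.0 if already at or above it, None if memory is not growing.
    """
    rate = growth_per_hour(samples)
    _, last_memory = samples[-1]
    if last_memory >= threshold:
        return 0.0
    if rate <= 0:
        return None
    return (threshold - last_memory) / rate


# Hypothetical readings: (hours since first sample, "keyman" memory in k).
samples = [(0, 9), (24, 33), (48, 57), (72, 81)]
print(growth_per_hour(samples))
print(hours_until(samples, 100))
```

Collecting a reading per day is enough to tell a steady climb from normal fluctuation before the ELEMENT_CRITICAL alert appears.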

Here are the options:

1. Apply the workaround to FN-72323 and observe if this makes any difference.

2. Upgrade to the latest 16.9.X.

05-19-2022 01:49 AM

Thanks for your help with this Leo - much appreciated. See attached for requested platform output.

Interestingly, the crashed switches did become unregistered for smart licensing at the time of the crashes. We use on-prem SSM rather than the Cisco cloud direct method - does the field notice only apply with the direct method? After the reload, I manually re-registered them with on-prem SSM with no problem.

The colleague who dealt with the crashed switches originally told me that they were unreachable via ssh but were "recovered" by bouncing the uplink L2 etherchannel on the upstream switch. After the uplink was bounced, the switches could be accessed via ssh but the terminal was sluggish.

I've been testing 16.12.07 in a dev environment and it's looking good so far. If all goes well, I'll look to upgrade the affected switches first.

Thanks again

Andy

05-20-2022 02:02 AM

Thanks Nicholas - Crashinfo Analyzer now works for me. The affected switch did have a number of crashinfo files, but none were from the dates/times when my colleague bounced the uplink. I've analyzed a number of these files and none match any known issues, but at least the analyzer is working now.

Thanks again

Andy
