Let's Talk ACI Multi-Pod: Configuring QOS

By: Jody

When configuring Multi-Pod, a frequently asked question is how to configure QOS between the ACI fabric and the IPN so that critical ACI fabric traffic is not dropped. In this article, we will go through configuration examples of how to do just that!

What are we doing?

- Marking Control Plane and Policy Plane traffic (APIC-to-APIC traffic) with a DSCP value. In this example, we mark Policy Plane traffic with CS4 and Control Plane traffic with CS7.

- On the IPN devices, classifying the ingress traffic coming from the spines and assigning it to a QOS group (Control Plane and Policy Plane traffic to qos-group 7, and each User Level class to its own group).

- On egress from the IPN, placing the qos-group 7 traffic (together with the qos-group 6 User Level 1 traffic) in priority queues.
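The three steps above can be modeled in a few lines of Python. This is a minimal sketch, not a device API: the two dictionaries simply restate the DSCP-to-qos-group mapping of the ACI-CLASSIFICATION policy map and the per-group treatment in IPN-8q-out-policy from the IPN configuration in this post. The rule that unmatched DSCP values land in qos-group 0 is an assumption.

```python
# Hypothetical model of the IPN QoS pipeline in this post (not a device API).
# Ingress: DSCP -> qos-group, restating the ACI-CLASSIFICATION policy map.
DSCP_TO_QOS_GROUP = {
    56: 7,  # Control Plane (CS7) -> CONTROL-TRAFFIC
    32: 7,  # Policy Plane (CS4)  -> CONTROL-TRAFFIC
    46: 6,  # User Level 1 (EF)
    24: 3,  # User Level 2 (CS3)
    0: 0,   # User Level 3 (CS0)
    8: 1,   # Span (CS1)
    40: 5,  # Traceroute (CS5)
}

# Egress: qos-group -> treatment, restating IPN-8q-out-policy.
QOS_GROUP_TREATMENT = {
    7: ("priority", 2),
    6: ("priority", 1),
    5: ("bandwidth remaining percent", 0),
    4: ("bandwidth remaining percent", 0),
    3: ("bandwidth remaining percent", 40),
    2: ("bandwidth remaining percent", 0),
    1: ("bandwidth remaining percent", 1),
    0: ("bandwidth remaining percent", 58),  # c-out-8q-q-default
}


def egress_treatment(dscp: int) -> tuple[int, tuple[str, int]]:
    """Return (qos-group, egress treatment) for a packet with the given DSCP."""
    # Assumption: DSCP values not matched by any class-map fall into qos-group 0.
    group = DSCP_TO_QOS_GROUP.get(dscp, 0)
    return group, QOS_GROUP_TREATMENT[group]


for dscp in (56, 32, 46, 24, 0):
    group, (kind, value) = egress_treatment(dscp)
    print(f"DSCP {dscp:>2} -> qos-group {group} -> {kind} {value}")
```

Tracing a DSCP value through both tables is a quick way to confirm, before touching the switches, that Control Plane and Policy Plane traffic ends up in a priority queue.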

* Note: In this example, User Levels 1, 2, and 3 are marked with EF, CS3, and CS0 respectively, which is likely the case in most scenarios based on RFC 4594 or the MediaNet QOS design. However, keep in mind that your QOS design must align with your existing QOS models. Level 1 traffic is often marked EF, as it usually carries voice traffic.

* Note: This example focuses on how to configure QOS for Multi-Pod, i.e., marking User Level traffic and Control Plane/Policy Plane traffic in ACI and then treating that traffic according to the policy defined in the IP network (IPN to IPN). The actual markings for User Levels 1, 2, and 3 in your deployment may differ and will likely be based on your QOS requirements or the QOS standards already present in your IP network. If that is the case, adjust the markings accordingly.

Fabric Side Configuration

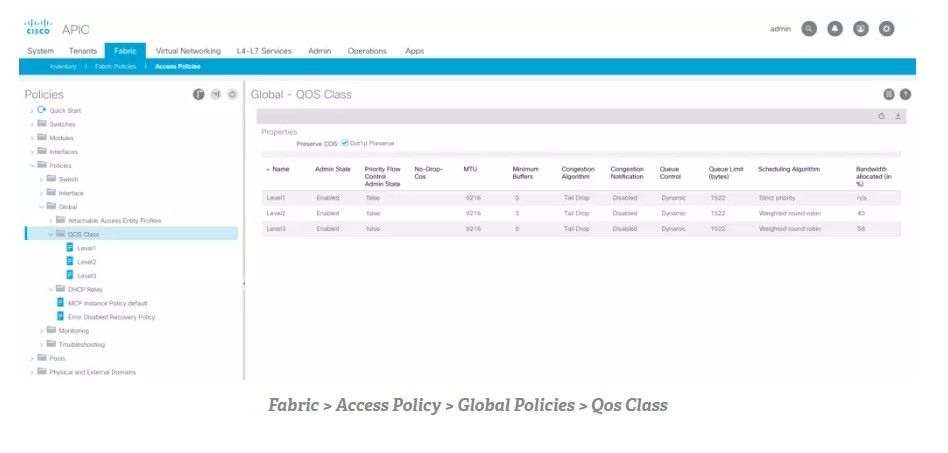

Note the defaults under Fabric > Access Policies > Policies > Global > QOS Class*: Level1: 20%, Level2: 20%, Level3: 20%.

* In earlier versions of APIC code, this was located in the GUI at Fabric > Access Policies > Global Policies > QOS Class

Here we change Level3 (default traffic) to 58% and User Level2 (CS3 traffic) to 40%, and we change User Level1 traffic to strict priority. The IPN "policy-map type queuing" configuration is built to match these values.

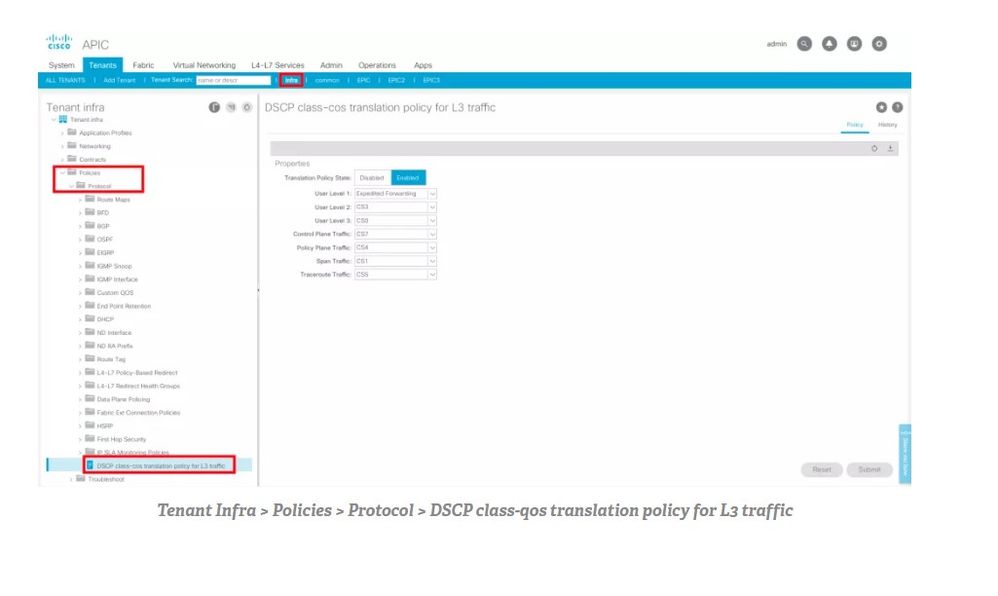

Next, go to Tenant Infra > Policies > Protocol > DSCP class-cos translation policy for L3 Traffic* and map Level1 traffic to EF, Level2 to CS3, Level3 to CS0, Control Plane Traffic to CS7, Policy Plane traffic to CS4, Span Traffic to CS1 and Traceroute Traffic to CS5.

* In earlier versions of APIC code, this was located in the GUI at Tenant Infra > Networking > Protocol Policies > DSCP class-cos translation policy for L3 Traffic

Why are we making these changes?

For treatment of marked traffic, the graphic below illustrates an example based on the aggregated Diffserv service class model in RFC 5127. If your QOS model or standard is customized and differs from this, modify the markings according to your own QOS standards.

- Level 1 User Traffic is mapped to EF (101110, decimal 46) in this case, since it carries voice and real-time traffic.

- Level 2 User Traffic is mapped to CS3 (011000, decimal 24), as customers often use it for traffic marked for precedence 3 treatment.

- Level 3 User Traffic is mapped to CS0 (000000, decimal 0), as it is the default traffic.

- Control Plane Traffic and Policy Plane Traffic are marked with CS7 (111000, decimal 56) and CS4 (100000, decimal 32) respectively, and will be mapped to a priority queue.

- Span Traffic is marked with CS1 (001000, decimal 8), as it is traditionally treated as background/scavenger class traffic.

- Traceroute Traffic is marked with CS5 (101000, decimal 40), as is commonly done.
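The binary and decimal values listed above follow directly from how the code points are built: a class selector CSn is n placed in the top three bits of the six-bit DSCP field (n × 8), while EF is the fixed code point 46. The minimal Python sketch below reproduces the values and checks that no two classes share a DSCP value, which matters because the IPN classifies on DSCP alone. The MARKINGS table is just an illustrative restatement of this example's choices.

```python
# Class selector CSn = n in the top three bits of the 6-bit DSCP field (n * 8).
# EF (Expedited Forwarding) is the fixed code point 46 (binary 101110).
EF = 46


def class_selector(n: int) -> int:
    """Return the DSCP value of class selector CSn, for n in 0..7."""
    if not 0 <= n <= 7:
        raise ValueError("class selector must be between 0 and 7")
    return n << 3


# Illustrative restatement of the markings chosen in this example.
MARKINGS = {
    "User Level 1 (EF)": EF,
    "User Level 2 (CS3)": class_selector(3),
    "User Level 3 (CS0)": class_selector(0),
    "Control Plane (CS7)": class_selector(7),
    "Policy Plane (CS4)": class_selector(4),
    "Span (CS1)": class_selector(1),
    "Traceroute (CS5)": class_selector(5),
}

# The IPN classifies purely on DSCP, so every class needs a distinct value.
assert len(set(MARKINGS.values())) == len(MARKINGS), "duplicate DSCP value"

for name, dscp in MARKINGS.items():
    print(f"{name:<20} {dscp:06b}  decimal {dscp}")
```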

* Note: In this example, we classify Control Plane traffic as CS7 and Policy Plane traffic as CS4. Depending on your standards, you might want to use different DSCP values. The goal is to make sure that the IP network (from IPN to IPN) treats this traffic as priority traffic. We classify Policy Plane traffic as CS4 (and not CS6) because of CSCva15076.

Graphic – Mapping User Levels and Control Plane/Policy Plane to DSCP levels in Infra Tenant

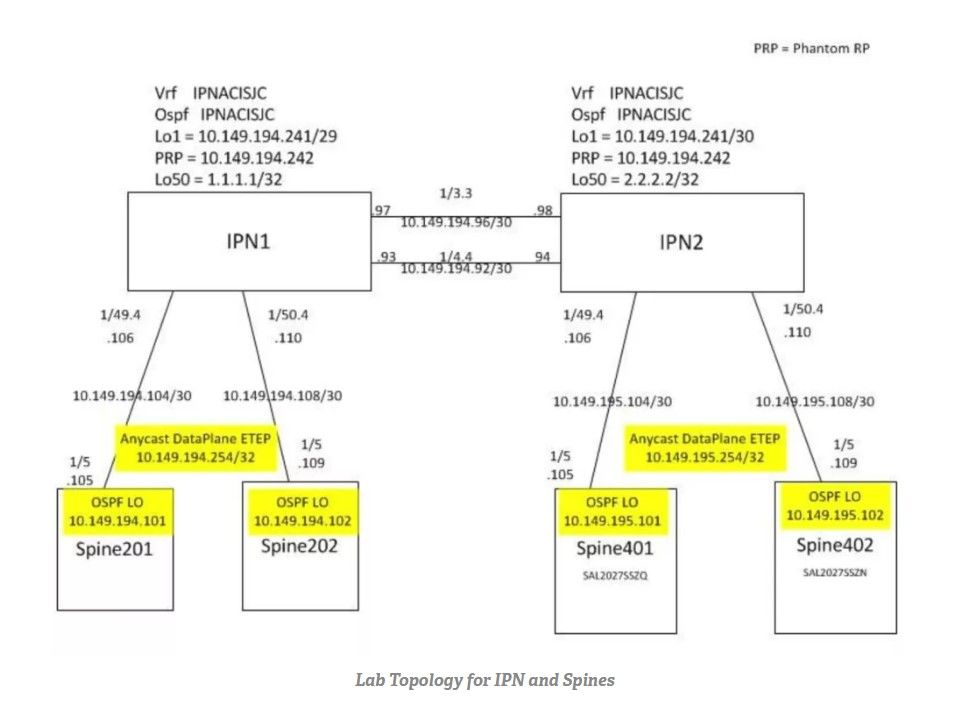

In this example, the IPN devices are -EX-based Nexus 9000 switches, which have a total of eight egress queues. The idea is to classify the ingress Control Plane and Policy Plane traffic coming from the spines, assign it a QOS group, and then, on egress, place that traffic in a priority queue.

* Note: If your IPN devices are not -EX-based Nexus 9000 switches, the basic idea is the same, but the configuration will vary depending on the number of queues available. Remember that the main goal is to put Control Plane and Policy Plane traffic in a high-priority queue.

In this example, Ethernet1/49.4 and Ethernet1/50.4 are the IPN subinterfaces that connect to the spines.

Here is the IPN Config:

```
! Below we are matching the markings configured on ACI
!
! Creation of class maps
!
class-map type qos match-all UserLevel1
  match dscp 46
class-map type qos match-all UserLevel2
  match dscp 24
class-map type qos match-all UserLevel3
  match dscp 0
class-map type qos match-all SpanTraffic
  match dscp 8
class-map type qos match-all iTraceroute
  match dscp 40
class-map type qos match-all CONTROL-TRAFFIC
  match dscp 32,56
!
! Creation of the policy map (applied on the IPN ingress interfaces facing the spines)
!
policy-map type qos ACI-CLASSIFICATION
  class CONTROL-TRAFFIC
    set qos-group 7
  class UserLevel1
    set qos-group 6
  class UserLevel2
    set qos-group 3
  class UserLevel3
    set qos-group 0
  class SpanTraffic
    set qos-group 1
  class iTraceroute
    set qos-group 5
!
! Below we configure the egress queuing policy. A default configuration already on
! the switch matches qos-group N traffic to queuing class c-out-8q-qN (for example,
! qos-group 6 to c-out-8q-q6). EF traffic (qos-group 6) is placed in priority
! level 1 and CS7/CS4 Control Plane and Policy Plane traffic (qos-group 7) in
! priority level 2. Level 2 and Level 3 traffic get 40% and 58% of the remaining
! bandwidth, to match the global QOS class configuration in APIC (Figure 1 and Figure 2).
!
policy-map type queuing IPN-8q-out-policy
  class type queuing c-out-8q-q7
    priority level 2
  class type queuing c-out-8q-q6
    priority level 1
  class type queuing c-out-8q-q5
    bandwidth remaining percent 0
  class type queuing c-out-8q-q4
    bandwidth remaining percent 0
  class type queuing c-out-8q-q3
    bandwidth remaining percent 40
  class type queuing c-out-8q-q2
    bandwidth remaining percent 0
  class type queuing c-out-8q-q1
    bandwidth remaining percent 1
  class type queuing c-out-8q-q-default
    bandwidth remaining percent 58
!
! Below we apply IPN-8q-out-policy at the system level, so all egress traffic is
! treated as the policy dictates
!
system qos
  service-policy type queuing output IPN-8q-out-policy
!
! Below we attach the input service policy to the interfaces going to the spines, so
! that CS7/CS4 traffic is classified into qos-group 7 and EF traffic into qos-group 6
!
interface Ethernet1/49.4
  description POD2-Spine-401 e1/5
  mtu 9150
  encapsulation dot1q 4
  vrf member IPNACISJC
  service-policy type qos input ACI-CLASSIFICATION
  ip address 10.149.195.106/30
  ip ospf network point-to-point
  ip router ospf IPNACISJC area 0.0.0.0
  ip pim sparse-mode
  ip dhcp relay address 10.0.0.1
  ip dhcp relay address 10.0.0.2
  ip dhcp relay address 10.0.0.3
  no shutdown
!
interface Ethernet1/50.4
  description POD2-Spine-402 e1/5
  mtu 9150
  encapsulation dot1q 4
  vrf member IPNACISJC
  service-policy type qos input ACI-CLASSIFICATION
  ip address 10.149.195.110/30
  ip ospf network point-to-point
  ip router ospf IPNACISJC area 0.0.0.0
  ip pim sparse-mode
  ip dhcp relay address 10.0.0.1
  ip dhcp relay address 10.0.0.2
  ip dhcp relay address 10.0.0.3
  no shutdown
```
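Because the IPN queuing values are meant to mirror the APIC global QOS class settings, a small consistency check can catch typos before the policy is deployed. The sketch below is hypothetical: it restates IPN-8q-out-policy and the APIC Level1/2/3 settings as Python dictionaries, assumes the level-to-queue correspondence used in this example (Level1 to q6, Level2 to q3, Level3 to q-default), and assumes the bandwidth remaining percent values should total no more than 100.

```python
# Hypothetical restatement of IPN-8q-out-policy: each queuing class is either
# ("priority", level) or ("bandwidth", value) for "bandwidth remaining percent".
IPN_8Q_OUT_POLICY = {
    "c-out-8q-q7": ("priority", 2),
    "c-out-8q-q6": ("priority", 1),
    "c-out-8q-q5": ("bandwidth", 0),
    "c-out-8q-q4": ("bandwidth", 0),
    "c-out-8q-q3": ("bandwidth", 40),
    "c-out-8q-q2": ("bandwidth", 0),
    "c-out-8q-q1": ("bandwidth", 1),
    "c-out-8q-q-default": ("bandwidth", 58),
}

# APIC global QOS class settings from the fabric section (Level1 = strict priority).
APIC_LEVELS = {
    "Level1": ("priority", None),
    "Level2": ("bandwidth", 40),
    "Level3": ("bandwidth", 58),
}

# Assumed correspondence between APIC levels and IPN queues in this example.
LEVEL_TO_QUEUE = {
    "Level1": "c-out-8q-q6",
    "Level2": "c-out-8q-q3",
    "Level3": "c-out-8q-q-default",
}


def check_policy(policy: dict, levels: dict, level_to_queue: dict) -> list[str]:
    """Return a list of human-readable problems (empty if consistent)."""
    problems = []
    # Assumption: remaining-bandwidth percentages should not exceed 100 in total.
    total = sum(value for kind, value in policy.values() if kind == "bandwidth")
    if total > 100:
        problems.append(f"bandwidth remaining percent totals {total}%, more than 100%")
    # Each APIC level should get the same kind of treatment (and share) on the IPN.
    for level, (kind, value) in levels.items():
        q_kind, q_value = policy[level_to_queue[level]]
        if kind != q_kind:
            problems.append(f"{level} is {kind} in APIC but {q_kind} on the IPN")
        elif kind == "bandwidth" and value != q_value:
            problems.append(f"{level}: APIC {value}% vs IPN {q_value}%")
    return problems


print(check_policy(IPN_8Q_OUT_POLICY, APIC_LEVELS, LEVEL_TO_QUEUE) or "policy is consistent")
```

With the values used in this post, the remaining-bandwidth percentages add up to 99, and each APIC level lines up with its IPN queue.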

Verification on IPN:

```
! Looking at ingress interface on IPN (towards ACI Spine)
IPNPOD2# show policy-map interface ethernet 1/50.4 input

Global statistics status : enabled

Ethernet1/50.4

  Service-policy (qos) input: ACI-CLASSIFICATION
    SNMP Policy Index: 285215377

    Class-map (qos): CONTROL-TRAFFIC (match-all)
      Slot 1
        1434 packets
      Aggregate forwarded :
        1434 packets
      Match: dscp 32,56
      set qos-group 7

    Class-map (qos): UserLevel1 (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 46
      set qos-group 6

    Class-map (qos): UserLevel2 (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 24
      set qos-group 3

    Class-map (qos): UserLevel3 (match-all)
      Slot 1
        25 packets
      Aggregate forwarded :
        25 packets
      Match: dscp 0
      set qos-group 0

    Class-map (qos): SpanTraffic (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 8
      set qos-group 1

    Class-map (qos): iTraceroute (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 40
      set qos-group 5
```

```
IPNPOD2# show policy-map interface ethernet 1/49.4 input

Global statistics status : enabled

Ethernet1/49.4

  Service-policy (qos) input: ACI-CLASSIFICATION
    SNMP Policy Index: 285215373

    Class-map (qos): CONTROL-TRAFFIC (match-all)
      Slot 1
        5149 packets
      Aggregate forwarded :
        5149 packets
      Match: dscp 32,56
      set qos-group 7

    Class-map (qos): UserLevel1 (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 46
      set qos-group 6

    Class-map (qos): UserLevel2 (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 24
      set qos-group 3

    Class-map (qos): UserLevel3 (match-all)
      Slot 1
        960 packets
      Aggregate forwarded :
        960 packets
      Match: dscp 0
      set qos-group 0

    Class-map (qos): SpanTraffic (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 8
      set qos-group 1

    Class-map (qos): iTraceroute (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 40
      set qos-group 5
```
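When checking many IPN interfaces, reading these counters by eye gets tedious. The hypothetical helper below pulls the "Aggregate forwarded" packet count for each class-map out of the `show policy-map interface ... input` text. It assumes the layout captured above (the count on the line after "Aggregate forwarded :"), which may differ between NX-OS releases.

```python
import re

# Hypothetical parser, assuming the "show policy-map interface ... input" layout
# captured above: the aggregate count sits on the line after "Aggregate forwarded :".
CLASS_RE = re.compile(r"Class-map \(qos\):\s+(\S+)")
PACKETS_RE = re.compile(r"^\s*(\d+)\s+packets\s*$")


def aggregate_counts(output: str) -> dict[str, int]:
    """Map each class-map name to its aggregate forwarded packet count."""
    counts: dict[str, int] = {}
    current_class = None
    expecting_aggregate = False
    for line in output.splitlines():
        match = CLASS_RE.search(line)
        if match:
            current_class = match.group(1)
            expecting_aggregate = False
            continue
        if "Aggregate forwarded" in line:
            expecting_aggregate = True
            continue
        match = PACKETS_RE.match(line)
        # Ignore per-slot counts; only take the line right after the aggregate header.
        if match and expecting_aggregate and current_class is not None:
            counts[current_class] = int(match.group(1))
            expecting_aggregate = False
    return counts


SAMPLE = """\
    Class-map (qos): CONTROL-TRAFFIC (match-all)
      Slot 1
        5149 packets
      Aggregate forwarded :
        5149 packets
      Match: dscp 32,56
      set qos-group 7
    Class-map (qos): UserLevel1 (match-all)
      Aggregate forwarded :
        0 packets
      Match: dscp 46
      set qos-group 6
"""

counts = aggregate_counts(SAMPLE)
print(counts)
```

A non-zero CONTROL-TRAFFIC count, as in both captures above, suggests that the CS4/CS7 markings set in APIC are arriving at the IPN intact.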

```
! Looking at egress interface of IPNPOD2 (towards IP network from IPN)
IPNPOD1# show queuing interface e 1/3 | b "GROUP 7"

slot 1
=======

Egress Queuing for Ethernet1/3 [System]
------------------------------------------------------------------------------
QoS-Group# Bandwidth% PrioLevel                Shape                   QLimit
                                   Min          Max        Units
------------------------------------------------------------------------------
      7             -         2           -            -     -            9(D)
      6             -         1           -            -     -            9(D)
      5             0         -           -            -     -            9(D)
      4             0         -           -            -     -            9(D)
      3            20         -           -            -     -            9(D)
      2             0         -           -            -     -            9(D)
      1             1         -           -            -     -            9(D)
      0            59         -           -            -     -            9(D)
+-------------------------------------------------------------+
|                         QOS GROUP 0                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |          125631|              70|
|                   Tx Byts |        42902871|            8836|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 1                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 2                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 3                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 4                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 5                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 6                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |          645609|             217|
|                   Tx Byts |       115551882|           25606|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 7                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |           23428|               9|
|                   Tx Byts |         4132411|            1062|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                      CONTROL QOS GROUP                      |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |            6311|               0|
|                   Tx Byts |          809755|               0|
|            Tail Drop Pkts |               0|               0|
|            Tail Drop Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                       SPAN QOS GROUP                        |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
|            Tail Drop Pkts |               0|               0|
|            Tail Drop Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+

Ingress Queuing for Ethernet1/3
-----------------------------------------------------
QoS-Group#                 Pause
           Buff Size       Pause Th      Resume Th
-----------------------------------------------------
      7              -            -            -
      6              -            -            -
      5              -            -            -
      4              -            -            -
      3              -            -            -
      2              -            -            -
      1              -            -            -
      0              -            -            -

Per Port Ingress Statistics
--------------------------------------------------------
Hi Priority Drop Pkts                   0
Low Priority Drop Pkts                  0
Ingress Overflow Drop Pkts              0

PFC Statistics
------------------------------------------------------------------------------
TxPPP: 0, RxPPP: 0
------------------------------------------------------------------------------
 PFC_COS QOS_Group   TxPause TxCount         RxPause RxCount
       0         0  Inactive       0        Inactive       0
       1         0  Inactive       0        Inactive       0
       2         0  Inactive       0        Inactive       0
       3         0  Inactive       0        Inactive       0
       4         0  Inactive       0        Inactive       0
       5         0  Inactive       0        Inactive       0
       6         0  Inactive       0        Inactive       0
       7         0  Inactive       0        Inactive       0
------------------------------------------------------------------------------
```

```
IPNPOD2# show queuing interface e 1/4

slot 1
=======

Egress Queuing for Ethernet1/4 [System]
------------------------------------------------------------------------------
QoS-Group# Bandwidth% PrioLevel                Shape                   QLimit
                                   Min          Max        Units
------------------------------------------------------------------------------
      7             -         2           -            -     -            9(D)
      6             -         1           -            -     -            9(D)
      5             0         -           -            -     -            9(D)
      4             0         -           -            -     -            9(D)
      3            20         -           -            -     -            9(D)
      2             0         -           -            -     -            9(D)
      1             1         -           -            -     -            9(D)
      0            59         -           -            -     -            9(D)
+-------------------------------------------------------------+
|                         QOS GROUP 0                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |           63049|               0|
|                   Tx Byts |        15968783|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 1                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 2                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 3                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 4                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 5                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 6                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |         1141418|               0|
|                   Tx Byts |       237770324|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                         QOS GROUP 7                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |           32440|               0|
|                   Tx Byts |         6986806|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
| WRED/AFD & Tail Drop Byts |               0|               0|
|              Q Depth Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                      CONTROL QOS GROUP                      |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |            6275|               0|
|                   Tx Byts |          804748|               0|
|            Tail Drop Pkts |               0|               0|
|            Tail Drop Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                       SPAN QOS GROUP                        |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |               0|               0|
|                   Tx Byts |               0|               0|
|            Tail Drop Pkts |               0|               0|
|            Tail Drop Byts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+

Ingress Queuing for Ethernet1/4
-----------------------------------------------------
QoS-Group#                 Pause
           Buff Size       Pause Th      Resume Th
-----------------------------------------------------
      7              -            -            -
      6              -            -            -
      5              -            -            -
      4              -            -            -
      3              -            -            -
      2              -            -            -
      1              -            -            -
      0              -            -            -

Per Port Ingress Statistics
--------------------------------------------------------
Hi Priority Drop Pkts                   0
Low Priority Drop Pkts                  0
Ingress Overflow Drop Pkts              0

PFC Statistics
------------------------------------------------------------------------------
TxPPP: 0, RxPPP: 0
------------------------------------------------------------------------------
 PFC_COS QOS_Group   TxPause TxCount         RxPause RxCount
       0         0  Inactive       0        Inactive       0
       1         0  Inactive       0        Inactive       0
       2         0  Inactive       0        Inactive       0
       3         0  Inactive       0        Inactive       0
       4         0  Inactive       0        Inactive       0
       5         0  Inactive       0        Inactive       0
       6         0  Inactive       0        Inactive       0
       7         0  Inactive       0        Inactive       0
------------------------------------------------------------------------------
```
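On egress, the per-group drop counters in `show queuing interface` are the quickest sign that a queue is under pressure. The hypothetical helper below scans the QOS GROUP tables, assuming the pipe-delimited layout captured above, and reports any "Drop Pkts" counter that is not zero. In both captures above, every such counter is 0.

```python
# Hypothetical parser, assuming the "show queuing interface" layout captured above:
# a "| QOS GROUP n |" title row followed by "| label | unicast| multicast|" rows.
def nonzero_drops(output: str) -> list[tuple[str, str, int, int]]:
    """Return (group, counter, unicast, multicast) for every non-zero drop counter."""
    findings = []
    group = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line.startswith("|"):
            continue  # skip "+----+" borders and the non-tabular sections
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) == 1 and "QOS GROUP" in cells[0]:
            group = cells[0]  # e.g. "QOS GROUP 7" or "CONTROL QOS GROUP"
        elif len(cells) == 3 and group and cells[0].endswith("Drop Pkts"):
            unicast, multicast = int(cells[1]), int(cells[2])
            if unicast or multicast:
                findings.append((group, cells[0], unicast, multicast))
    return findings


SAMPLE = """\
+-------------------------------------------------------------+
|                         QOS GROUP 7                         |
+-------------------------------------------------------------+
|                           |    Unicast     |   Multicast    |
+-------------------------------------------------------------+
|                   Tx Pkts |           32440|               0|
| WRED/AFD & Tail Drop Pkts |               0|               0|
|       WD & Tail Drop Pkts |               0|               0|
+-------------------------------------------------------------+
|                      CONTROL QOS GROUP                      |
+-------------------------------------------------------------+
|            Tail Drop Pkts |               0|               0|
"""

print(nonzero_drops(SAMPLE) or "no non-zero drop counters")
```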

That’s all for today’s ACI blog. More to come on the ACI Board here in Cisco Community. Drop us a comment and let us know if super technical blogs like these are helpful.

And while you’re at it, let us also know what kinds of topics relating to ACI you’d like posted on here.

A special thanks to Soumitra Mukherji for his contribution on this post.
