1000V port channel to N5K w/ FCOE

04-02-2009 10:43 AM

Is it possible to port-channel two 10G ports on the Nexus 1000V (Emulex CNAs) with two 10G ports on the Nexus 5000 if the Nexus 5000 ports are also doing FCoE?

I am getting the following error:

N5k(config-if-range)# int e1/5-6

N5k(config-if-range)# channel-group 10 mode on

command failed: port not compatible [port mode]

Previously I was getting an error along the lines of "Port already assigned to virtual interface (VIF)".

The configuration of the 5k is attached.

Thanks,

Labels: Nexus 1000V

04-03-2009 03:00 PM

Hi. To answer your question: creating a port-channel from the server (CNA ports) to a single N5K and also running FCoE traffic over it is currently not supported. The reason is that when we bind the "vfc", we bind it to a physical Ethernet interface. Binding to a MAC address will be allowed, but that is in the future. From an FCoE perspective, a server with dual ports would typically be connected to 2 different N5Ks. I'm just curious whether you are building an environment where you would have 4 ports coming out of the server, with 2 ports per N5K.

Can you expand on your use case: if you only have 2 ports from the server (CNA ports), why would both ports be connected to the same N5K? Storage deployments tend to see a single N5K as a single point of failure. I'd just like to understand the deployment scenario you are trying to create. If you have further questions, please let us know. Thanks,

Cuong

04-06-2009 07:42 AM

I am working on this issue as well. We need two interfaces available from the Ethernet perspective. In this environment we have ESX hosts attached, and best practice is to have more than one connection available for each virtual switch. The N1000V will only allow one physical NIC to be assigned to the vSwitch unless a port channel is available. When attaching to a traditional switch this is not an issue, but with the N5K, as you have stated, it will not work. One of the major drivers for this type of switch is to converge both Ethernet and Fibre Channel traffic, and I would assume the virtual space (VMware) will help drive that. In a typical ESX installation you may have 4 or even 8 physical network interfaces available. ESX wants redundancy and the ability to round-robin VM guests across multiple network interfaces. If a cable or port were to fail, there is redundancy to keep the VM from having to migrate to another ESX host. Also, if you try to enable HA, for example, VMware will complain about a lack of network redundancy.

09-08-2009 09:21 AM

I would like to expand on this conversation.

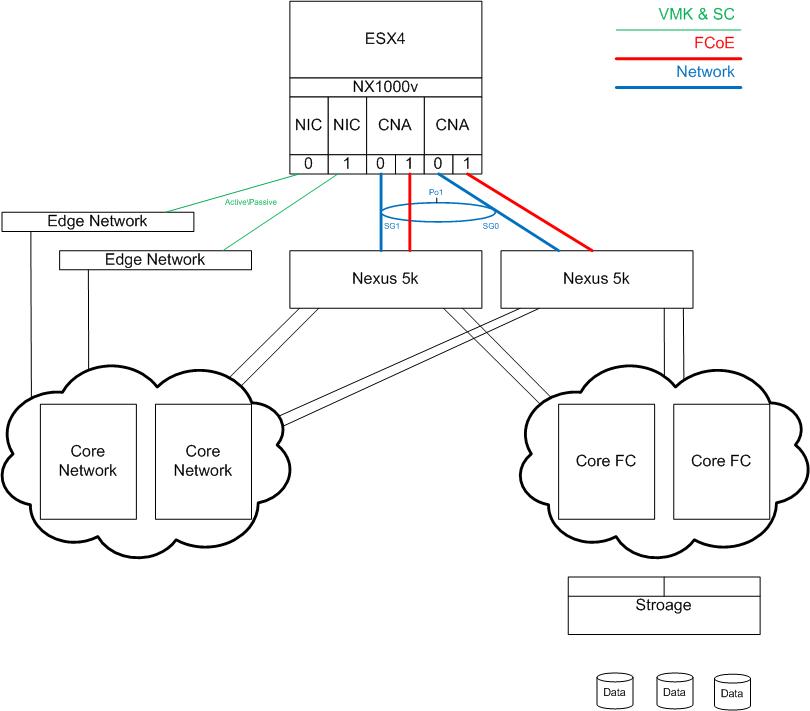

If we have the following configuration (see picture), what would be the best way to configure the CNA adapters? Each server would have 2 onboard NICs that could be used for VMware management traffic and two dual-port CNA adapters. Most of the whitepapers I have found talk about reducing network cabling and show best practices around network configuration. I would like to better understand how the full stack should be configured: VMware, Nexus 1000V, consolidation layer and links to the FC/network clouds.

In a dual-port, dual-CNA environment, would it be best to run FCoE over two of the 4 ports and VM networking over the other two as a port channel?

Is it best to keep VMkernel and Service Console traffic on the onboard NICs, or can these be eliminated as well by utilizing the converged network adapters?

For the 1000V, would it be best to break out the packet/management traffic from the data uplinks? In this configuration the packet and management traffic could utilize the onboard NICs, and all data traffic would run across the CNA port channel.

09-08-2009 11:03 AM

Hi there. Just a few things first. The 2 NICs that are currently connected to the "Edge Network" can be removed, and the "service console" and "vmkernel" (i.e. vMotion) traffic can flow through your CNAs up to the N5Ks. This eliminates the need for 2 more connections coming out of your server, unless you truly see the need to use all 40G of bandwidth from the CNAs for data traffic.

Since you have 4 CNA ports, there is a way to use all 4 ports for FCoE and also port-channel them for Ethernet traffic. FCoE is supported through a vPC as long as only 1 port of the vPC goes to each Nexus 5000. If more than 1 port in the vPC goes to the same Nexus 5000, FCoE will not work. With that said, I would suggest the following implementation:

Create a vPC (say vPC 10) using CNA1-port0 (connected to N5K-1) + CNA2-port0 (connected to N5K-2). With this vPC configuration, FCoE can be utilized to access your SAN storage (or iSCSI/NAS storage). I would also primarily use the vPC 10 interfaces for your "service console", "vmkernel" and Nexus 1000V system VLANs (control and packet).

Then create another vPC (say vPC 20) using CNA1-port1 (connected to N5K-1) + CNA2-port1 (connected to N5K-2). You can also utilize FCoE on these 10G interfaces, which will distribute your shared storage traffic across all 4 10G interfaces. If you don't want to use FCoE on these interfaces, you don't have to create a vfc for them, and your shared SAN storage traffic will flow through the other interfaces. Either way, you can configure vPC 20 as the "data uplink" for all your data VLAN traffic, so that it is not affected by the other VLANs used for mgmt, vmkernel, control, etc.
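As a rough illustration of the rule above (a vPC can carry FCoE only if no more than one of its member ports lands on the same Nexus 5000), here is a minimal Python sketch. It is not a Cisco tool; the `VPCS` table, `fcoe_capable` and `check_layout` are hypothetical names made up for this example, and the port and switch labels are simply the ones used in this post.

```python
from collections import Counter

# Hypothetical model of the suggested layout: each vPC number maps to a
# list of (CNA port, upstream switch) member links. Labels come from the post.
VPCS = {
    10: [("CNA1-port0", "N5K-1"), ("CNA2-port0", "N5K-2")],
    20: [("CNA1-port1", "N5K-1"), ("CNA2-port1", "N5K-2")],
}


def fcoe_capable(members):
    """Return True if no switch terminates more than one member link."""
    per_switch = Counter(switch for _port, switch in members)
    return all(count == 1 for count in per_switch.values())


def check_layout(vpcs):
    """Map each vPC number to whether it meets the one-port-per-switch rule."""
    return {vpc_id: fcoe_capable(members) for vpc_id, members in vpcs.items()}


if __name__ == "__main__":
    for vpc_id, ok in check_layout(VPCS).items():
        status = "FCoE possible" if ok else "FCoE will not work"
        print(f"vPC {vpc_id}: {status}")
```

A layout that put both ports of one vPC on N5K-1 would fail the check, which corresponds to the single-switch port-channel case from the start of this thread.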

Hope this makes sense.

09-08-2009 11:55 AM

Yes, that makes perfect sense. Would it still be valid to use only one of the vPCs as the uplink for all networks, such as service console, vmkernel, management, control, packet and data? The second vPC would then be dedicated to FCoE only.

Thank you for the reply.

09-08-2009 12:01 PM

Yeah, that is also valid if you want to separate storage and Ethernet traffic. But for the storage links you really don't need a vPC, since you don't have non-FCoE traffic flowing through them. It doesn't hurt to have the vPC configured, though, in case you do want to carry non-FCoE traffic later.

04-21-2010 06:05 AM

Hello all,

I have a question regarding a similar set up.

I have a server (Windows 2008 with a dual-port QLogic CNA) connected to 2 Nexus switches (Nexus-1 and Nexus-2).

QLogic CNA model: QLE8152

Port 1 of the CNA connects to port eth1/1 on Nexus-1. Port 2 of the CNA connects to port eth1/1 on Nexus-2.

Ports eth19-20 on both Nexus switches are in port-channel 1, which is also the vPC peer-link.

I tried to use the above configuration to create a port-channel to the CNAs. What I did was the following:

Nexus-1

    vpc domain 1
      role priority 100
      peer-keepalive destination 172.16.1.231
    vlan 1
    vlan 100
      fcoe vsan 1
      name SAN1_FCOE
    interface port-channel1
      switchport mode trunk
      switchport trunk allowed vlan 1
      vpc peer-link
      spanning-tree port type network
    interface port-channel10
      switchport mode trunk
      switchport trunk allowed vlan 1,100
      spanning-tree port type edge trunk
      vpc 10
      speed 10000
    interface vfc101
      bind interface port-channel10
      no shutdown
    interface Ethernet1/1
      switchport mode trunk
      switchport trunk allowed vlan 1,100
      spanning-tree port type edge trunk
      channel-group 10

Nexus-2

    vpc domain 1
      role priority 200
      peer-keepalive destination 172.16.1.230
    vlan 1
    vlan 200
      fcoe vsan 1
      name FABRIC2_FCoE
    interface port-channel1
      switchport mode trunk
      switchport trunk allowed vlan 1
      vpc peer-link
      spanning-tree port type network
    interface port-channel10
      switchport mode trunk
      switchport trunk allowed vlan 1,200
      spanning-tree port type edge trunk
      vpc 10
      speed 10000
    interface vfc201
      bind interface port-channel10
      no shutdown
    interface Ethernet1/1
      switchport mode trunk
      switchport trunk allowed vlan 1,200
      spanning-tree port type edge trunk
      channel-group 10

OK, so the vPC comes up but I can't ping the host. (The host is configured with NIC teaming in failover mode, but I wanted load balancing.)

As soon as I remove the vpc 10 statement from port-channel10 (int po10 ... no vpc 10), it is OK.
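One detail worth checking against the earlier replies: they state that a vfc is bound to a physical Ethernet interface, while the configuration above binds each vfc to port-channel10. The thread does not establish that this causes the ping failure, and behaviour may differ between software releases, so treat the following only as a small Python sketch for spotting such bindings in pasted config text. The helper names `vfc_bindings` and `non_physical_bindings` are made up for this example, and `SAMPLE` is a trimmed copy of the Nexus-1 configuration.

```python
import re

# Trimmed copy of the Nexus-1 configuration posted above.
SAMPLE = """
interface port-channel10
  vpc 10
interface vfc101
  bind interface port-channel10
  no shutdown
interface Ethernet1/1
  channel-group 10
"""


def vfc_bindings(config_text):
    """Return {vfc name: bound interface} parsed from NX-OS-style config text."""
    bindings = {}
    current_vfc = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        header = re.match(r"interface\s+(\S+)$", line, re.IGNORECASE)
        if header:
            name = header.group(1)
            # Track the section only while we are inside a vfc interface.
            current_vfc = name if name.lower().startswith("vfc") else None
            continue
        bind = re.match(r"bind interface\s+(\S+)$", line, re.IGNORECASE)
        if bind and current_vfc:
            bindings[current_vfc] = bind.group(1)
    return bindings


def non_physical_bindings(bindings):
    """Return the vfc entries whose target is not named like an Ethernet port."""
    return {vfc: target for vfc, target in bindings.items()
            if not target.lower().startswith("eth")}


if __name__ == "__main__":
    for vfc, target in non_physical_bindings(vfc_bindings(SAMPLE)).items():
        print(f"{vfc} is bound to {target}, not to a physical Ethernet interface")
```

The check is purely name-based (anything not starting with "eth" is reported), which is an assumption of this sketch rather than a statement about what the switch accepts.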

While I was configuring it, I got a warning to check some compatibility. (I ignored it and, to be honest, I couldn't reproduce it again, unfortunately, and I don't know where that log line went.)

Is the CNA not compatible? I have updated everything to above the versions stated in this document:

http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9670/white_paper_c11-569320_v1.pdf

Is this not supported on this CNA?

Thanks

Nuno Ferreira

04-21-2010 08:35 AM

Hello -

Is it possible for you to paste in the configuration from the Nexus 1000V as well? I believe you are making a vPC connection between the Nexus 1000V and the Nexus 5000. Are you using LACP on the 1KV side, or static port-channeling?

Thanks,

Liz

04-21-2010 08:39 AM

Hello Liz ...

You probably missed the part where I say that my setup is a bit different.

I am not using the Nexus 1000V. My host is a Windows 2008 host with a dual-port CNA.

Thanks

Nuno Ferreira

04-21-2010 08:51 AM

Sorry about that, my reading skills don't kick in until after 9am.

A couple of things to check:

- Are you seeing the MAC addresses of the CNA on the Nexus 5Ks?

- After changing the load-balancing option on the CNA, did you restart it?

I hit an issue like this last week where I couldn't ping after any change I made on the adapter side. I would have to restart in order for everything to take effect.

Thanks,

Liz

04-21-2010 08:54 AM

Liz,

I can see the 3 MAC addresses on both Nexus switches.

I didn't restart the host, no, but I have deleted and recreated the team a number of times (with load balancing and with failover) and it never worked. Every time, as soon as I remove vpc 10 from the port-channel associated with the port connected to the host, everything works fine.

I did notice one thing that might be relevant:

    show platform software fcoe_mgr info interface eth 1/1
    Eth1/40(0x81f3434), if_index: 0x1a027000, RID Eth1/1
    FSM current state: FCOE_MGR_ETH_ST_UP
    PSS Runtime Config:-
    PSS Runtime Data:-
    Oper State: up
    VFC IF Index: vfc101
    FCOE on ? : TRUE
    LLS supported ? : FALSE

Thanks

Nuno Ferreira

04-21-2010 05:29 PM

Nuno,

What version of the QL driver & FW are you using?

    [root@sv-vsphere04 ~]# ethtool -i vmnic2
    driver: qlge
    version: v1.00.00.38-022710
    firmware-version: v1.35.11
    bus-info: 0000:1c:00.0

Regards,

Robert

04-21-2010 05:34 PM

Hi Robert.

The latest on the QLogic site. Everything was upgraded prior to me setting up the vPC.

Check below:

    Hostname                   : KFD-S-DYN01
    Adapter Model              : QLE8152
    Chip Model                 : 8001
    Chip Version               : A1
    Adapter Alias              : None
    Serial Number              : RFC0938M58153
    MAC Address                : 00:c0:dd:11:41:58
    MAC Address                : 00:c0:dd:11:41:5a
    Driver Information         : Ndis 6.x 10GbE driver (AMD-64)
    Driver Name                : qlge.sys
    Driver Version             : 1.0.1.0
    MPI Firmware Version       : 1.35.11
    PXE Boot Version           : 01.11
    VLAN & Team Driver Name    : qlvtid.sys
    VLAN & Team Driver Version : 1.0.0.11
    FCoE Driver Version        : 9.1.8.19
    FCoE Firmware Version      : 5.02.01
    FCoE SDMAPI Version        : 01.28.00.75

Is this enough info? I can gather more if you need it.

Thanks for your help.

Nuno Ferreira

04-21-2010 05:41 PM

Can you post the "ethtool -i vmnicx" output of one of your QL vmnics (see my previous example).

Thanks,

Robert
