Cisco Community > Technology and Support > DevNet Hub > DevNet Site > DevNet Sandbox


First time Sandbox user - having issues accessing the switches


08-29-2019 10:04 AM - edited 08-29-2019 10:48 AM

This whole environment is all new to me, so it's probably something simple I'm not doing.

I selected the "Always-on" option for the sandbox named "Open NX-OS with Nexus 9Kv". I received both emails and installed AnyConnect.

I connected to the VPN using AnyConnect.

I read through all the instructions and have all the management IPs and passwords.

I logged into my sandbox via the link provided in my email.

I can start the Linux box (CentOS). I "thought" I'd be able to ping the switches from the Linux box and log into them from there, but none respond.

So, how exactly do I access these switches? I thought I'd see four icons representing the switches, but I don't see that either. I'm now assuming I have to SSH from the CentOS box, but since I can't ping any of the four switches from there, I'm at a loss.

In addition, I can access the Linux box from my PC using SecureCRT, but I still can't ping any of the switches. Something tells me I need to power these on, but I see no way to do that.

Any help is appreciated.

Thanks,

Andy

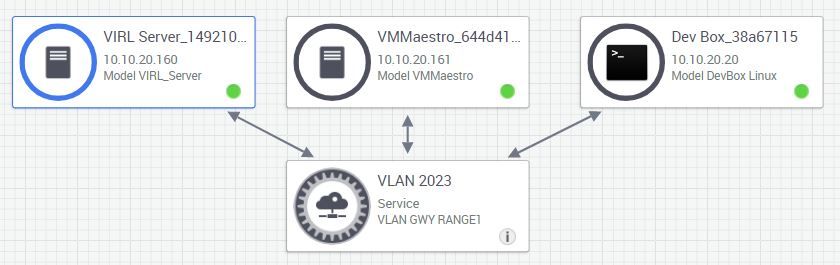

Here's what I see on my sandbox:


09-08-2019 12:25 AM

Thanks Tom. Oddly, that link takes me to my reservation page. Can you name the sandbox and copy its URL from here, please: https://devnetsandbox.cisco.com/RM/Topology

Thanks!

Connect with me https://bigevilbeard.github.io


09-08-2019 07:20 AM

I clicked on the link you provided, clicked on Data Center (on the right), then searched for Nexus. Two labs came up. I right-clicked on the lab I'm using and copied the link on that lab:

Open NX-OS with Nexus 9Kv

This isn't my specific lab of course, but it is the one I'm using.

HTH


09-08-2019 09:53 AM

Thanks Tom, let me step through this. I reserved my lab (same as yours) and connected to the sandbox. Then, from the shell on my Mac, I connected to the jumphost/devbox.

As you can see from the home directory, I see the same as you.

```
Welcome to the Open NX-OS Sandbox

You can find sample code and information in the GitHub Repo at:
https://github.com/DevNetSandbox/sbx_nxos

This repo has been cloned to this devbox at the directory:
cd ~/code/sbx_nxos

A Python 3.6 virtual environment with relevant moduels is available by activating with:
source ~/code/sbx_nxos/venv/bin/activate

[developer@devbox ~]$ virl ls --all
Running Simulations
╒══════════════╤══════════╤════════════════════════════╤═══════════╕
│ Simulation   │ Status   │ Launched                   │ Expires   │
╞══════════════╪══════════╪════════════════════════════╪═══════════╡
│ ~jumphost    │ ACTIVE   │ 2018-08-02T19:21:08.764190 │           │
╘══════════════╧══════════╧════════════════════════════╧═══════════╛
[developer@devbox ~]$
```

First activate the venv, then move into the code folder and then the sbx_nxos folder, as per the instructions shown at login. In this folder you can see the topology.virl file; however, as we have not brought up the topology yet, `virl nodes` and `virl ls` show nothing.

```
[developer@devbox ~]$ ls
code  Desktop  sync_log.txt  sync_version  thinclient_drives  venv
[developer@devbox ~]$ source venv/bin/activate
(venv) [developer@devbox ~]$ cd code/sbx_nxos/
(venv) [developer@devbox sbx_nxos]$ ls
ansible-playbooks  guestshell  learning_labs  LICENSE  nx-api  other  pull-requests.md  readme_images  README.md  requirements.txt  sbx-mgmt  topology.virl  venv
(venv) [developer@devbox sbx_nxos]$ virl nodes
Environment default is not running
(venv) [developer@devbox sbx_nxos]$ virl ls
Running Simulations
╒══════════════╤══════════╤════════════╤═══════════╕
│ Simulation   │ Status   │ Launched   │ Expires   │
╞══════════════╪══════════╪════════════╪═══════════╡
╘══════════════╧══════════╧════════════╧═══════════╛
(venv) [developer@devbox sbx_nxos]$
```

To bring up the Nexus topology, run `virl up` from this directory (that last part is very important!). Once you do that, we can see our Nexus devices; at this stage they are still building. It takes around 5-10 minutes to bring up the devices. They might show as active, but they do take time, and if you log in with the virl console (for example, `virl console nx-osv9000-1`), you can see the device starting up.

```
(venv) [developer@devbox sbx_nxos]$ virl up
Creating default environment from topology.virl
(venv) [developer@devbox sbx_nxos]$ virl ls
Running Simulations
╒═════════════════════════╤══════════╤════════════════════════════╤═══════════╕
│ Simulation              │ Status   │ Launched                   │ Expires   │
╞═════════════════════════╪══════════╪════════════════════════════╪═══════════╡
│ sbx_nxos_default_0jlb6i │ ACTIVE   │ 2019-09-08T16:38:48.800535 │           │
╘═════════════════════════╧══════════╧════════════════════════════╧═══════════╛
(venv) [developer@devbox sbx_nxos]$ virl nodes
Here is a list of all the running nodes
╒══════════════╤═════════════╤═════════╤═════════════╤════════════╤══════════════════════╤════════════════════╕
│ Node         │ Type        │ State   │ Reachable   │ Protocol   │ Management Address   │ External Address   │
╞══════════════╪═════════════╪═════════╪═════════════╪════════════╪══════════════════════╪════════════════════╡
│ nx-osv9000-1 │ NX-OSv 9000 │ ABSENT  │ N/A         │ N/A        │ N/A                  │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-2 │ NX-OSv 9000 │ ABSENT  │ N/A         │ N/A        │ N/A                  │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-3 │ NX-OSv 9000 │ ABSENT  │ N/A         │ N/A        │ N/A                  │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-4 │ NX-OSv 9000 │ ABSENT  │ N/A         │ N/A        │ N/A                  │ N/A                │
╘══════════════╧═════════════╧═════════╧═════════════╧════════════╧══════════════════════╧════════════════════╛
```

```
(venv) [developer@devbox sbx_nxos]$ virl console nx-osv9000-1
Attempting to connect to console of nx-osv9000-1
Trying 10.10.20.160...
Connected to 10.10.20.160.
Escape character is '^]'.
2019 Sep 8 16:41:49 %$ VDC-1 %$ netstack: Registration with cli server complete
2019 Sep 8 16:42:04 %$ VDC-1 %$ %USER-2-SYSTEM_MSG: ssnmgr_app_init called on ssnmgr up - aclmgr
2019 Sep 8 16:42:04 %$ VDC-1 %$ %VMAN-2-INSTALL_STATE: Installing virtual service 'guestshell+'
2019 Sep 8 16:42:13 %$ VDC-1 %$ %USER-0-SYSTEM_MSG: end of default policer - copp
2019 Sep 8 16:42:13 %$ VDC-1 %$ %COPP-2-COPP_NO_POLICY: Control-plane is unprotected.
2019 Sep 8 16:42:15 %$ VDC-1 %$ %CARDCLIENT-2-FPGA_BOOT_GOLDEN: IOFPGA booted from Golden
2019 Sep 8 16:42:15 %$ VDC-1 %$ %CARDCLIENT-2-FPGA_BOOT_STATUS: Unable to retrieve MIFPGA boot status
```
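Because the nodes can take 5-10 minutes to finish booting, a small polling loop can save re-running ping by hand. The sketch below is an illustration, not part of the sandbox tooling: it assumes it runs from the devbox's Python 3.6 venv, treats an open SSH port (TCP 22) as the "ready" signal, and uses the management addresses that `virl nodes` reports in this thread (172.16.30.101-104). The names `tcp_port_open` and `wait_for_nodes` are hypothetical.

```python
import socket
import time
from typing import Callable, Dict, Iterable


def tcp_port_open(host: str, port: int = 22, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds (assumed readiness signal)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_nodes(
    hosts: Iterable[str],
    probe: Callable[[str], bool] = tcp_port_open,
    deadline_s: float = 600.0,   # ~10 minutes, the upper end of the boot time quoted above
    interval_s: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, bool]:
    """Poll every host until all are ready or the deadline passes; return per-host status."""
    status = {host: False for host in hosts}
    start = clock()
    while True:
        # Only re-probe hosts that have not answered yet.
        for host, ready in status.items():
            if not ready:
                status[host] = probe(host)
        if all(status.values()) or clock() - start >= deadline_s:
            return status
        sleep(interval_s)


if __name__ == "__main__":
    # Management addresses as reported by `virl nodes` in this thread (assumed unchanged).
    nodes = ["172.16.30.{}".format(i) for i in range(101, 105)]
    print(wait_for_nodes(nodes))
```

Probing a TCP port keeps the sketch within the standard library; sending ICMP from Python directly would need raw-socket privileges. The `sleep` and `clock` parameters exist only so the loop can be exercised without actually waiting.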

Now we can see that the Nexus devices are active:

```
(venv) [developer@devbox sbx_nxos]$ virl nodes
Here is a list of all the running nodes
╒══════════════╤═════════════╤═════════╤═════════════╤════════════╤══════════════════════╤════════════════════╕
│ Node         │ Type        │ State   │ Reachable   │ Protocol   │ Management Address   │ External Address   │
╞══════════════╪═════════════╪═════════╪═════════════╪════════════╪══════════════════════╪════════════════════╡
│ nx-osv9000-1 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.101        │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-2 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.102        │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-3 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.103        │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-4 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.104        │ N/A                │
╘══════════════╧═════════════╧═════════╧═════════════╧════════════╧══════════════════════╧════════════════════╛
(venv) [developer@devbox sbx_nxos]$
(venv) [developer@devbox sbx_nxos]$ ping 172.16.30.101
PING 172.16.30.101 (172.16.30.101) 56(84) bytes of data.
64 bytes from 172.16.30.101: icmp_seq=1 ttl=254 time=1.72 ms
64 bytes from 172.16.30.101: icmp_seq=2 ttl=254 time=1.79 ms
64 bytes from 172.16.30.101: icmp_seq=3 ttl=254 time=1.80 ms
64 bytes from 172.16.30.101: icmp_seq=4 ttl=254 time=1.78 ms
64 bytes from 172.16.30.101: icmp_seq=5 ttl=254 time=1.75 ms
^C
--- 172.16.30.101 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 4006ms
rtt min/avg/max/mdev = 1.726/1.773/1.806/0.047 ms
```
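If you want those management addresses in a script rather than copying them from the table, the `virl nodes` output can be parsed. This is a minimal sketch that assumes the box-drawing layout seen in this thread (header and data rows delimited by `│`); other virlutils versions may format the table differently, and `parse_virl_nodes` is a hypothetical helper name.

```python
from typing import Dict, List


def parse_virl_nodes(text: str) -> List[Dict[str, str]]:
    """Turn the box-drawn `virl nodes` table into a list of {column: value} dicts."""
    header = []  # type: List[str]
    rows = []  # type: List[Dict[str, str]]
    for line in text.splitlines():
        line = line.strip()
        # Header and data rows start with a vertical bar; border rows use other glyphs.
        if not line.startswith("│"):
            continue
        cells = [cell.strip() for cell in line.strip("│").split("│")]
        if not header:
            header = cells  # the first bar-delimited row holds the column names
        else:
            rows.append(dict(zip(header, cells)))
    return rows


SAMPLE = """\
Here is a list of all the running nodes
╒══════════════╤═════════════╤═════════╤═════════════╤════════════╤══════════════════════╤════════════════════╕
│ Node         │ Type        │ State   │ Reachable   │ Protocol   │ Management Address   │ External Address   │
╞══════════════╪═════════════╪═════════╪═════════════╪════════════╪══════════════════════╪════════════════════╡
│ nx-osv9000-1 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.101        │ N/A                │
├──────────────┼─────────────┼─────────┼─────────────┼────────────┼──────────────────────┼────────────────────┤
│ nx-osv9000-2 │ NX-OSv 9000 │ ACTIVE  │ REACHABLE   │ telnet     │ 172.16.30.102        │ N/A                │
╘══════════════╧═════════════╧═════════╧═════════════╧════════════╧══════════════════════╧════════════════════╛
"""

if __name__ == "__main__":
    for node in parse_virl_nodes(SAMPLE):
        print(node["Node"], node["State"], node["Management Address"])
```

To use live output instead of `SAMPLE`, something like `subprocess.check_output(["virl", "nodes"], universal_newlines=True)` should work, assuming the CLI prints the same table when its output is not a terminal.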

From here, we can access the devices in a few ways. One is SSH via virl:

```
(venv) [developer@devbox sbx_nxos]$ virl ssh nx-osv9000-1
Attemping ssh connectionto nx-osv9000-1 at 172.16.30.101
Warning: Permanently added '172.16.30.101' (RSA) to the list of known hosts.

User Access Verification
Password:

Cisco NX-OS Software
Copyright (c) 2002-2018, Cisco Systems, Inc. All rights reserved.
[removed]
nx-osv9000-1#
```

Another is SSH directly from the terminal on my desktop/Mac:

```
STUACLAR-M-R6EU:~ stuaclar$ ssh cisco@172.16.30.101
The authenticity of host '172.16.30.101 (172.16.30.101)' can't be established.
RSA key fingerprint is SHA256:iyOorfieUeJoKFz1dgCtW+2/euXC0SDhj2Xep3eEdqc.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '172.16.30.101' (RSA) to the list of known hosts.

User Access Verification
Password:

Cisco NX-OS Software
Copyright (c) 2002-2018, Cisco Systems, Inc. All rights reserved.
Nexus 9000v software ("Nexus 9000v Software") and related documentation,
[removed]
nx-osv9000-1#
```

The same steps can be followed for the other devices.
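To repeat the SSH step for all four switches, a small loop can open one session after another. This is an illustrative sketch only: it assumes the `cisco` username from the example above, the 172.16.30.101-104 management addresses from `virl nodes`, and an OpenSSH `ssh` client on your PATH. `ssh_commands` and `connect_all` are hypothetical names.

```python
import subprocess
from typing import List, Sequence

# Management addresses reported by `virl nodes` in this thread (assumed unchanged).
SWITCHES = ["172.16.30.101", "172.16.30.102", "172.16.30.103", "172.16.30.104"]


def ssh_commands(hosts: Sequence[str], user: str = "cisco") -> List[List[str]]:
    """Build one `ssh user@host` argument list per switch."""
    return [["ssh", "{}@{}".format(user, host)] for host in hosts]


def connect_all(hosts: Sequence[str], user: str = "cisco", dry_run: bool = True) -> List[int]:
    """Open interactive SSH sessions one at a time and collect their exit codes.

    With dry_run=True the commands are only printed, and 0 is recorded for each.
    """
    codes = []
    for cmd in ssh_commands(hosts, user):
        print(" ".join(cmd))
        codes.append(0 if dry_run else subprocess.call(cmd))
    return codes


if __name__ == "__main__":
    connect_all(SWITCHES, dry_run=False)
```

With `dry_run=False`, each session is handed to your terminal; type `exit` on the switch to move on to the next one.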


09-10-2019 07:03 PM - edited 09-10-2019 07:04 PM

Let me start off with a quote from Seinfeld, which pretty much sums up how I feel right now:

So please, a little respect, for I am Costanza, Lord Of The Idiots

I hate to admit this, but I never even looked at the info displayed when I logged into the devbox. I'm so used to ignoring messages when I log into Linux servers at work that I blew right past this. Ahhhhhhhhhhh I knew it had to be something stupid and simple.

It's all good now. I followed your directions (which were right in front of my face the whole time) and can access all the nodes now.

Thank you for your patience, help and sticking with this!!!! Hopefully you'll get a good laugh out of this one. LOL


07-31-2020 06:09 AM

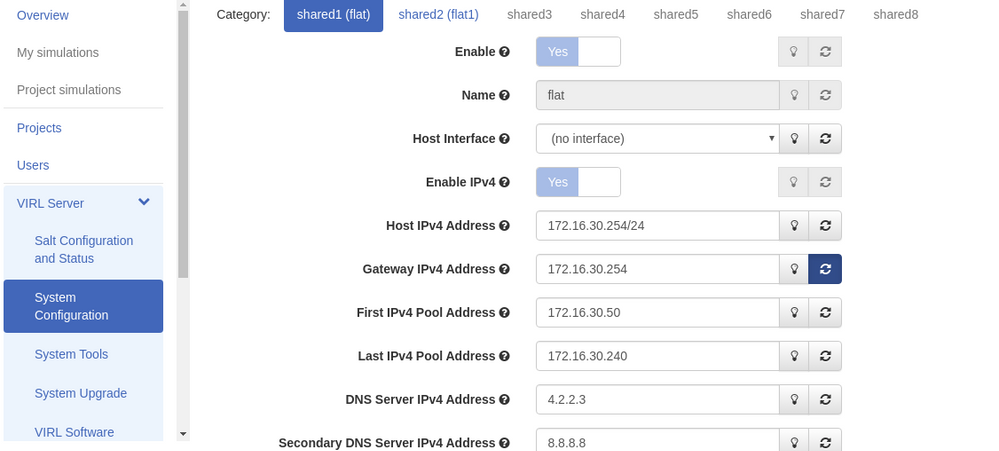

Hi @hikerguy, I ran into the same connectivity issue. The secret is in the VIRL settings. Log in as uwmadmin and, in the left panel, go to Virl Server -> System configuration. There you should see the management settings for simulated devices. By default, the VIRL server uses the 172.16.30.0/24 network and assigns addresses to devices on startup. In my case (I use the multi-IOS sandbox with a custom topology loaded from a file), the addresses were assigned to the router's Gi0/0 interface.

I modified them to match the original simulation (e.g. "2 ios network"):

```
ip vrf Mgmt-intf
!
interface GigabitEthernet0/0
 ip vrf forwarding Mgmt-intf
 ip address 172.16.30.54 255.255.255.0
!
ip route vrf Mgmt-intf 0.0.0.0 0.0.0.0 172.16.30.254
```

Then everything worked fine. The boxes are reachable both from the devbox and from the VIRL server itself.
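With several routers in a custom topology, the same management-VRF block can be generated per device. This is a minimal sketch, assuming the default 172.16.30.0/24 management network and the .254 gateway used in the config above; `render_mgmt_config` is a hypothetical helper, and Python's `ipaddress` module is used only to check that the address and gateway belong to that network.

```python
import ipaddress
from typing import List


def render_mgmt_config(
    address: str,
    network: str = "172.16.30.0/24",   # VIRL default management network (from the post)
    gateway: str = "172.16.30.254",    # default-route next hop used above
    interface: str = "GigabitEthernet0/0",
    vrf: str = "Mgmt-intf",
) -> List[str]:
    """Return IOS lines that place `interface` in the management VRF with `address`."""
    net = ipaddress.ip_network(network)
    ip = ipaddress.ip_address(address)
    if ip not in net:
        raise ValueError("{} is not in management network {}".format(address, net))
    if ipaddress.ip_address(gateway) not in net:
        raise ValueError("gateway {} is not in {}".format(gateway, net))
    return [
        "ip vrf {}".format(vrf),
        "interface {}".format(interface),
        # `ip vrf forwarding` clears any existing IP address, so the address comes after it.
        " ip vrf forwarding {}".format(vrf),
        " ip address {} {}".format(ip, net.netmask),
        "ip route vrf {} 0.0.0.0 0.0.0.0 {}".format(vrf, gateway),
    ]


if __name__ == "__main__":
    print("\n".join(render_mgmt_config("172.16.30.54")))
```

The order of the interface sub-commands matters on IOS: entering `ip vrf forwarding` after the address would remove the address again.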

Virl config: see the screenshot at the top of this post.


07-31-2020 06:22 AM

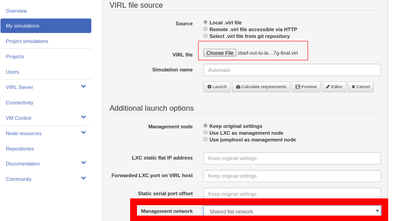

Maybe one more thing: when launching the simulation, make sure to choose Management network: "Shared flat network".
