- Re: Programmatic Network Device Export

07-12-2018 10:28 AM

Looking for a way to programmatically export the ISE Network Device list (nightly).

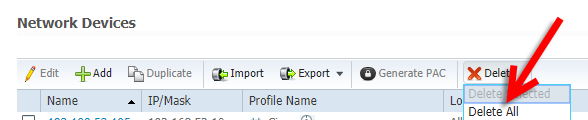

Because oops:

The following URI is sent when exporting from the GUI, but it fails when sent from Postman (likely due to a CSRF check):

/admin/GenericImportUploadAction.do?command=performExport&sort=name&importData.includeEmptyCells=false&importData.matrixName=undefined

I could iterate through the ERS API to compile a table of the ~4000 device IDs (100 devices at a time), then iterate through each of those, capturing the pertinent information and writing it to a CSV output file, but that seems rather intensive. Is there any way to call this function from the API or select from the database? Is there another way to export this data programmatically that I'm missing?

TIA.

Labels: Identity Services Engine (ISE)

07-12-2018 12:54 PM

If you are referring to Network Access Devices, the ERS API can do this today. Yes, you need to fetch the IDs first and then query each device by its ID.

07-12-2018 01:08 PM

Yeah, I was hoping not to have to do that. It looks like chunks of 100 are the most I can iterate through at a time, so I need to get the length of the list, iterate through it grabbing the IDs, check for a next-page URL, hope no one adds a NAD while the job is running, and then go back through and use the IDs I gathered to grab the details.
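
The paging loop described above can be sketched as a generator. This is a minimal sketch, not a tested ISE client: `iter_network_devices` is a hypothetical name, `session` is assumed to be a `requests.Session` carrying the ERS credentials and an `accept: application/json` header, and the `SearchResult` / `resources` / `nextPage` keys are taken from the snippets posted in this thread. It follows the next-page link until none is returned, so the length of the list is not needed up front, and it skips any ID it has already seen in case a page shifts while the job is running.

```python
def iter_network_devices(session, first_url):
    """Yield each device summary once, following nextPage links until none remain."""
    seen = set()          # IDs already yielded, in case pages overlap mid-run
    url = first_url
    while url:
        resp = session.get(url)
        resp.raise_for_status()
        result = resp.json()["SearchResult"]
        for nad in result.get("resources", []):
            if nad["id"] not in seen:
                seen.add(nad["id"])
                yield nad
        # Assumption: the last page simply has no 'nextPage' entry.
        url = result.get("nextPage", {}).get("href")
```

Calling `list(iter_network_devices(s, 'https://HOSTNAME:9060/ers/config/networkdevice/?size=100'))` would then collect every summary in one pass; for a self-signed certificate, setting `s.verify = False` on the session has the same effect as passing `verify=False` on each request.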

07-12-2018 05:15 PM

Jason's post reminded me that I also ran into this issue and, due to my lack of REST/web skills, I gave up in the end. I was surprised that I had to iterate through a series of pages to get all the data I wanted.

Given that the REST API is the way of the future, we're all going to have to get to grips with it sooner or later.

Therefore I was hoping that someone might post a Python example here of how to iterate over an arbitrary list (e.g. NAD devices) using the REST API in ISE.

Thanks in advance.

07-13-2018 07:00 AM

I've done some initial investigation into what this would take and provided an estimate to my management team, but I'm not sure whether I'll be approved to work on it. I don't have an actual script, as I was working in the shell and taking notes, so please excuse this for what it is...

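    import requests
    import json

    # One session carries the credentials and headers for every request.
    s = requests.Session()
    s.auth = ('USERNAME', 'PASSWORD')
    s.headers.update({'accept': "application/json", 'cache-control': "no-cache"})

    # First page only (up to 100 devices); verify=False skips TLS certificate checks.
    r = s.get('https://HOSTNAME:9060/ers/config/networkdevice/?size=100', verify=False)
    nads = json.loads(r.text)

    # Print each device ID with the ERS link to its full details.
    for x in nads['SearchResult']['resources']:
        print('%s, %s' % (x['id'], x['link']['href']))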

This should be the bare bones to get started....

This will grab the first 100 network access devices. If you have more, you need to decide how you want to check for that: there's a next-page construct with a link href, and I'll probably check whether it exists and then follow it for the next set of devices, if I get approved to work on this.

With that first set of devices in the collection, I'm iterating through the list, dumping out the ID and the ERS link to the full details. I'll need to collect those links and follow them to pull out the data and create a CSV.

Play around in the shell with some of the constructs; there are also some developer scripts on the bottom tab of the ERS documentation.
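
The collect-and-follow step described above might look like the sketch below. It is hedged throughout: `device_row`, `export_details`, and `FIELDS` are hypothetical names, and the shape of the detail payload (a top-level `NetworkDevice` object with `name`, `description`, and a `NetworkDeviceIPList` of `ipaddress`/`mask` entries) is an assumption to check against the ERS documentation on your own ISE version. `session` is assumed to be a `requests.Session` set up as in the notes above, and `summaries` is any iterable of the entries found under `SearchResult.resources`.

```python
import csv

FIELDS = ["id", "name", "description", "ip_addresses"]


def device_row(detail):
    """Flatten one detail payload into a CSV row (payload field names are assumptions)."""
    nad = detail["NetworkDevice"]
    ips = ["{}/{}".format(ip.get("ipaddress", ""), ip.get("mask", ""))
           for ip in nad.get("NetworkDeviceIPList", [])]
    return {
        "id": nad.get("id", ""),
        "name": nad.get("name", ""),
        "description": nad.get("description", ""),
        "ip_addresses": ";".join(ips),   # several addresses share one cell
    }


def export_details(session, summaries, out_file):
    """Follow each summary's link href and write one CSV row per device."""
    writer = csv.DictWriter(out_file, fieldnames=FIELDS)
    writer.writeheader()
    count = 0
    for nad in summaries:
        resp = session.get(nad["link"]["href"])
        resp.raise_for_status()
        writer.writerow(device_row(resp.json()))
        count += 1
    return count
```

For a nightly job, `out_file` could be a file opened with `open('nads.csv', 'w', newline='')`. With roughly 4000 devices this makes one request per device, so the run time depends mainly on how quickly the ERS node answers.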

07-13-2018 03:27 PM

Hi Jason,

I have done all this already, and then gave up when I didn't know how to fetch the next page. This is easily labbed and tested, even on my small ISE eval on my laptop. I can import fake devices into ISE with a CSV and then play around with ERS in my Python script. But I lack the web know-how.

Cisco did a great job of publishing the API in ISE itself. I was just hoping they could give us one small example of how to retrieve a list that extends beyond one API call, preferably in Python.

07-13-2018 03:32 PM

I asked our lab admin to help with Arne's request, as he is pretty good with Python. He will post to this discussion directly once he has it working.

07-13-2018 05:00 PM

Hi Jason/Arne, this script will pull all NADs from ISE as you've described (100 at a time), and then perform some work on them in the 'workOver' function. From here you can add additional functions for your specific needs.

    #!/usr/bin/python
    from __future__ import print_function

    import requests


    def nadInfoGet(s, url):
        # Fetch one page of results. verify=False skips TLS certificate checks,
        # which is only reasonable for a lab with self-signed certificates.
        res = s.get(url, verify=False)
        res.raise_for_status()
        return res.json()


    def workOver(nads):
        # Per-page processing hook: print the ID and name of each NAD.
        for nad in nads['SearchResult']['resources']:
            print('{} {}'.format(nad['id'], nad['name']))


    def main():
        url = 'https://<hostname>:9060/ers/config/networkdevice/?size=100'
        s = requests.Session()
        s.auth = ('<username>', '<password>')
        s.headers.update({'accept': "application/json", 'cache-control': "no-cache"})
        while True:
            nads = nadInfoGet(s, url)
            workOver(nads)
            try:
                # The last page has no 'nextPage' entry, which ends the loop.
                url = nads['SearchResult']['nextPage']['href']
            except KeyError:
                break


    if __name__ == '__main__':
        main()

Hope this helps!

07-13-2018 09:26 PM

Thanks nemsimic
