Cisco SD-Access Multicast

09-18-2020 11:19 AM - edited 11-16-2023 07:10 AM

- Introduction to SD-Access Multicast

- External RP

- SSM Multicast

- Head-End replication

- Native Multicast

- Topology used

- Cisco-DNAC Workflow for Multicast

- Fabric Device Configuration

- PIM ASM traffic Flow

- Traffic source outside fabric and receiver inside fabric using native multicast

- Traffic source inside fabric and receiver inside fabric using native multicast

- PIM SSM traffic Flow

- Traffic source outside fabric and receiver inside fabric using native multicast

- Conclusion

Introduction to SD-Access Multicast

This document describes the Cisco DNAC UI workflow for multicast introduced in the Cisco DNA Center 1.3.3 release. It covers the components of multicast forwarding on an SD-Access fabric and the configuration pushed to the network devices. It also highlights the show commands and outputs that help with a basic understanding and troubleshooting of multicast on the fabric.

To introduce the new workflow, we use three use cases that show the operation and outputs from different viewpoints within the network.

Note: The matrix below does not represent the supported combinations; it only shows the outputs when multicast traffic flows into the fabric, irrespective of where the source resides.

| Overlay multicast mode | Multicast source | Multicast receiver | Transport mode in fabric |
| --- | --- | --- | --- |
| ASM | Outside fabric | Inside fabric | Native |
| ASM | Inside fabric | Inside fabric | Native |
| SSM | Outside fabric | Inside fabric | Native |

Multicast forwarding on an SD-Access fabric uses one of two methods to distribute the traffic over the underlay: head-end replication and native multicast.

External RP

Starting with release 1.3.3, the SD-Access fabric supports an external RP. Prior to 1.3.3, the RP had to reside inside the fabric. Once an external RP is configured through the Cisco DNAC workflow, the fabric devices, including the fabric border and edge nodes, are configured with that RP address.

Prior to 1.3.3, the default behavior was to use an internal RP within the fabric, usually the border node. Starting in 1.3.3, the administrator can decide whether an internal or an external RP is appropriate for the overlay.

This feature was introduced because most brownfield deployments already have an RP and want to reuse it for their fabric.

The external RP workflow in Cisco DNAC 1.3.3 allows an administrator to create a maximum of two RPs; however, only a single RP can be assigned to a given VN.
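The constraint above (at most two external RPs, exactly one RP per VN) can be expressed as a small validation check. This is a minimal Python sketch for illustration only: `ExternalRpPlan` and its methods are hypothetical names, not a Cisco DNAC API, and the RP addresses are documentation-range placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Limit stated for the external RP workflow in Cisco DNAC 1.3.3.
MAX_EXTERNAL_RPS = 2


@dataclass
class ExternalRpPlan:
    """Hypothetical model of external RP settings for one fabric site (not a DNAC API)."""
    rps: List[str] = field(default_factory=list)
    vn_to_rp: Dict[str, str] = field(default_factory=dict)

    def add_rp(self, address: str) -> None:
        # Reject a third external RP.
        if len(self.rps) >= MAX_EXTERNAL_RPS:
            raise ValueError("at most two external RPs can be created")
        self.rps.append(address)

    def assign(self, vn: str, rp: str) -> None:
        # A VN maps to exactly one RP, which must already be defined.
        if rp not in self.rps:
            raise ValueError(f"{rp} is not a defined external RP")
        current = self.vn_to_rp.get(vn)
        if current is not None and current != rp:
            raise ValueError(f"VN {vn} is already mapped to RP {current}")
        self.vn_to_rp[vn] = rp


plan = ExternalRpPlan()
plan.add_rp("192.0.2.10")     # placeholder address
plan.add_rp("198.51.100.10")  # placeholder address
plan.assign("corp", "192.0.2.10")
```

Different VNs may still use different RPs; the dictionary keyed by VN is what enforces "one RP per VN".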

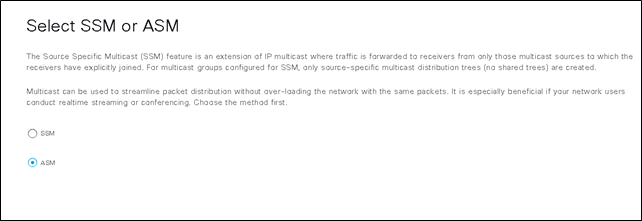

SSM Multicast

The 1.3.3 release of SD-Access introduces support for SSM in the overlay. As part of the workflow, an administrator can customize the SSM range supported on the fabric.

Cisco DNAC provisions the SSM configuration for the overlay VN with an ACL that defines the allowed SSM range.

For example:

ip access-list standard SSM_RANGE_VN

10 permit 232.127.0.0 0.0.255.255 <-----Range defined on the Cisco DNAC workflow

ip pim vrf Campus ssm range SSM_RANGE_VN

If no range is specified for the overlay VN, the default range (232.0.0.0/8) is used.

Note that when SSM is used in the overlay, no RP is required, so no RP is provisioned on the fabric devices for the overlay VN. The Cisco DNAC workflow is designed so that if SSM is the preferred mode in the overlay, it does not prompt for an RP address.
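To check whether a given group falls into a VN's SSM range, the standard-ACL wildcard logic can be reproduced in a few lines. A minimal Python sketch, assuming that when an SSM range ACL is configured only the ACL-permitted groups are treated as SSM, and that 232.0.0.0/8 applies otherwise; `wildcard_match` and `is_ssm_group` are hypothetical helper names.

```python
import ipaddress
from typing import List, Optional, Tuple

DEFAULT_SSM_RANGE = ipaddress.IPv4Network("232.0.0.0/8")


def wildcard_match(address: str, base: str, wildcard: str) -> bool:
    """Standard-ACL match: bits set in the wildcard mask are 'don't care'."""
    addr = int(ipaddress.IPv4Address(address))
    net = int(ipaddress.IPv4Address(base))
    care_bits = ~int(ipaddress.IPv4Address(wildcard)) & 0xFFFFFFFF
    return (addr & care_bits) == (net & care_bits)


def is_ssm_group(group: str, acl: Optional[List[Tuple[str, str]]] = None) -> bool:
    """True if the group is SSM for a VN; acl holds (base, wildcard) permit entries."""
    if acl is None:
        return ipaddress.IPv4Address(group) in DEFAULT_SSM_RANGE
    return any(wildcard_match(group, base, wc) for base, wc in acl)


# Mirrors 'ip access-list standard SSM_RANGE_VN / 10 permit 232.127.0.0 0.0.255.255'.
SSM_RANGE_VN = [("232.127.0.0", "0.0.255.255")]
```

For example, `is_ssm_group("232.127.10.1", SSM_RANGE_VN)` is true, while 232.128.0.1 falls outside the custom range even though it is inside the default 232.0.0.0/8.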

Head-End replication

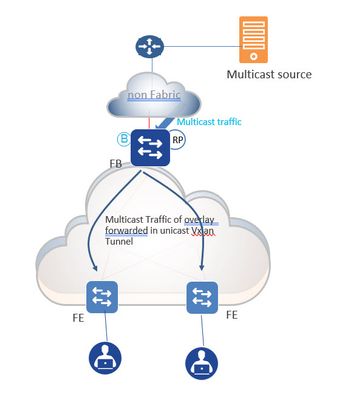

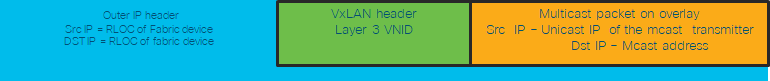

With head-end replication, packets for multicast flows in the overlay VN are transported in the underlay as unicast: each packet is carried in a unicast VxLAN tunnel between RLOCs in the fabric. In a packet trace, the inner IP packet is the multicast packet in the overlay VN, while the outer IP header carries the source and destination RLOCs. Packets are forwarded only to the destinations that have interested receivers for the multicast group.

The interested receivers and their RLOCs are derived from the PIM joins received in the overlay VN.
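The replication decision described above amounts to keeping, per overlay group, the set of RLOCs that sent PIM joins, and emitting one unicast copy per RLOC. A minimal Python sketch with hypothetical function names, using the RLOCs from this document's topology; the VxLAN encapsulation itself is not modeled.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def record_joins(pim_joins: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Build {overlay group: set of RLOCs} from (joining RLOC, overlay group) pairs."""
    interested: Dict[str, Set[str]] = defaultdict(set)
    for rloc, group in pim_joins:
        interested[group].add(rloc)
    return interested


def head_end_copies(source_rloc: str, group: str,
                    interested: Dict[str, Set[str]]) -> List[Tuple[str, str]]:
    """One (outer source RLOC, outer destination RLOC) unicast copy per interested RLOC."""
    rlocs = sorted(interested.get(group, set()), key=ipaddress.IPv4Address)
    return [(source_rloc, rloc) for rloc in rlocs]


joins = record_joins([("9.254.254.68", "227.7.7.7"), ("9.254.254.70", "227.7.7.7")])
copies = head_end_copies("9.254.254.66", "227.7.7.7", joins)
```

Here the border (9.254.254.66) would send two unicast copies, one to FE1 and one to FE2. With native multicast, by contrast, the source RLOC sends a single copy to an underlay multicast group and the underlay replicates it.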

Native Multicast

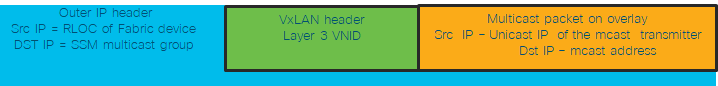

With native multicast, multicast packets in the overlay VNs are forwarded as multicast in the underlay. A prerequisite for native multicast is that multicast is enabled and SSM is configured in the underlay.

Native multicast works in conjunction with SSM in the underlay. Based on the overlay multicast group to which the receivers are subscribed, the fabric edge derives an underlay SSM group and sends a PIM join toward the RLOC that replicates the traffic into the fabric (the border node when the source is outside the fabric).

The SSM groups used in the underlay are derived as follows:

For ASM in the overlay, the ASM group address is used to derive the SSM group address. For SSM in the overlay space, the source and group address are used to derive the SSM group address. The principle for generating the SSM group address ensures that all fabric devices which have receivers will generate the exact same SSM group address.
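The exact derivation function is not documented here, so the following Python sketch uses a hypothetical hash (SHA-256 over the overlay group, or over source and group for SSM) purely to illustrate the property that matters: every fabric device computing the mapping independently arrives at the same underlay group. It is NOT the algorithm IOS XE uses, and its results will not match the groups seen in the outputs later in this document. The pool bounds assume that `ip pim lisp core-group-range 232.0.0.1 1000` (from the device configuration) defines 1000 groups starting at 232.0.0.1.

```python
import hashlib
import ipaddress
from typing import Optional

# Assumed meaning of 'ip pim lisp core-group-range 232.0.0.1 1000': 1000 groups from 232.0.0.1.
CORE_GROUP_START = ipaddress.IPv4Address("232.0.0.1")
CORE_GROUP_COUNT = 1000


def underlay_group(overlay_group: str, overlay_source: Optional[str] = None) -> str:
    """Hypothetical, deterministic overlay-to-underlay group mapping (not Cisco's algorithm).

    ASM overlay: only the group is hashed. SSM overlay: source and group are hashed.
    """
    key = overlay_group if overlay_source is None else f"{overlay_source},{overlay_group}"
    digest = hashlib.sha256(key.encode("ascii")).digest()
    offset = int.from_bytes(digest[:4], "big") % CORE_GROUP_COUNT
    return str(CORE_GROUP_START + offset)
```

Because the ASM key ignores the source, any RLOC that sources traffic for the same overlay group uses the same underlay group. The ASM outputs later in this document show the same behavior: 228.8.8.8 maps to 232.0.1.106 whether the border or FE1 is the underlay source.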

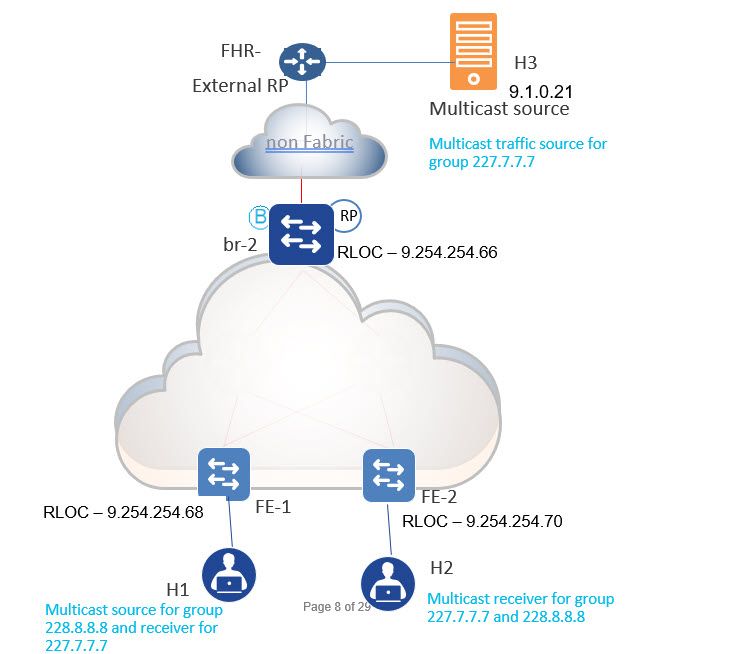

Topology used

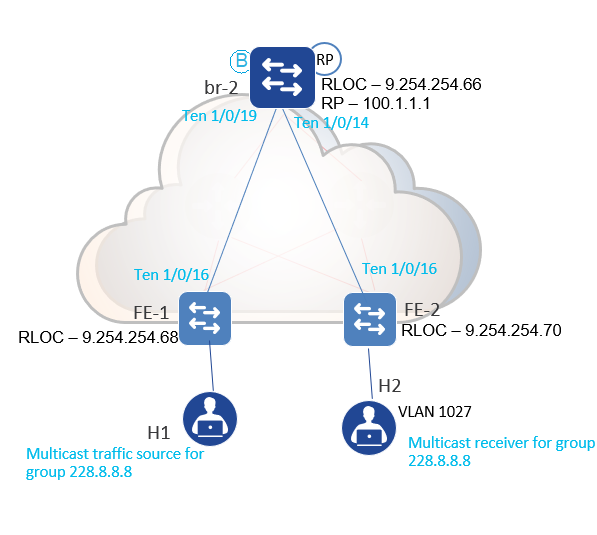

In the sample topology, two hosts inside the fabric, H1 and H2, subscribe to multicast traffic destined to 227.7.7.7.

The source for the multicast group, H3, resides outside the fabric.

Cisco-DNAC Workflow for Multicast

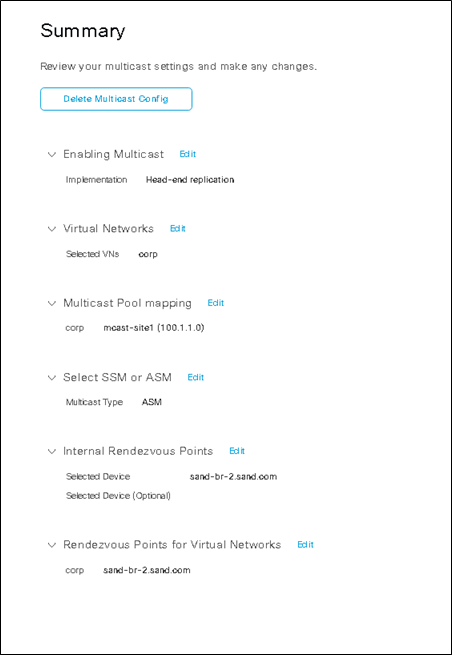

The following section highlights the workflow on Cisco DNAC 1.3.3 to enable multicast on the fabric.

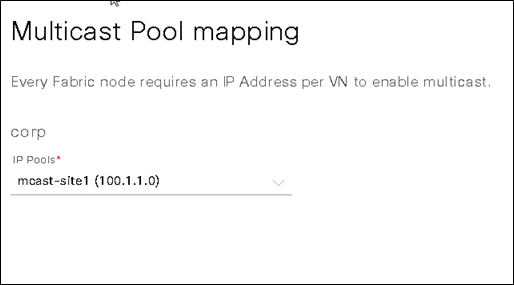

Before enabling the workflow, an IP pool must be created in Cisco DNAC and reserved at the respective site. The pool is used in the overlay to form PIM relationships and, in the case of an internal RP, to create the RP.

The pool is used to create a loopback interface in the overlay VN on the fabric devices, which is used to exchange PIM joins in the overlay VN. The loopbacks are created only on the VNs that have multicast enabled.
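As an illustration of how one pool yields per-device loopbacks, the following Python sketch hands out one /32 per device for each multicast-enabled VN. The pool prefix 100.1.1.0/24, the device list, and the allocation order are assumptions for illustration: the examples in this document only show loopbacks such as 100.1.1.1, 100.1.1.2, 100.1.1.3, and 100.1.1.5, and the order Cisco DNAC actually assigns may differ.

```python
import ipaddress
from typing import Dict, List, Tuple


def allocate_loopbacks(pool: str, devices: List[str],
                       multicast_vns: List[str]) -> Dict[Tuple[str, str], str]:
    """Assign one /32 loopback per (device, multicast-enabled VN) from the pool (illustrative)."""
    hosts = iter(ipaddress.IPv4Network(pool).hosts())
    plan: Dict[Tuple[str, str], str] = {}
    for vn in multicast_vns:
        for device in devices:
            address = next(hosts, None)
            if address is None:
                raise ValueError(f"pool {pool} is exhausted")
            plan[(device, vn)] = f"{address}/32"
    return plan
```

Sizing the pool is the practical takeaway: it needs at least one address per fabric device for every VN with multicast enabled.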

- To create a pool in Cisco DNAC:

Navigate to Design > Network Settings > IP Address Pools.

Create a pool under the global site hierarchy and reserve the pool in the respective site.

Figure: Reserve an IP pool to be used for multicast

Figure: Reservation of the pool at the site level

- The next step is to enable multicast within a site:

From the Cisco DNAC home page, navigate to Provision > Fabric > Fabric domain > Fabric-enabled site.

Hover the mouse over the gear icon on the site to enable multicast.

Figure: Enable multicast on a fabric site

- Define how multicast is forwarded within the fabric site. Forwarding here refers to how packets are carried in the underlay. As described earlier, there are two options: native multicast and head-end replication.

-

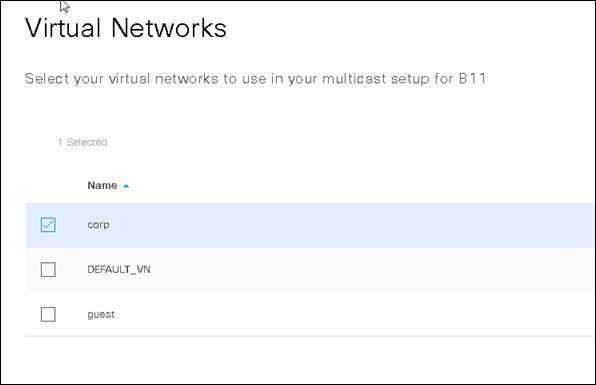

Define the VN for which multicast needs to be enabled. The list shows the VN’s configured on the DNAC. The administrator has the option to choose the VN where multicast has to be enabled.

- Select the pool defined in Network Settings. It is used to create the internal RP (if one is used) and the loopbacks for PIM peering.

- Define the multicast model to be used in the overlay VN. The current options are ASM and SSM.

-

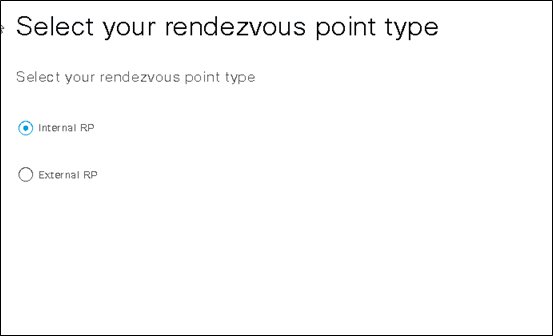

Define the rendezvous point for the fabric. This option only shows if we have selected the ASM mode on the overlay VN. The RP can be internal or external.

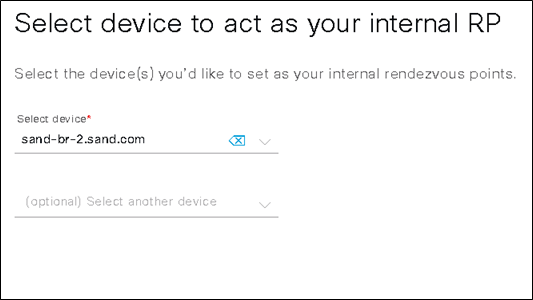

- Select the device that acts as the RP in the case of an internal RP. In the case of an external RP, define the external RP address.

For redundancy, you can tag multiple devices to act as RPs. In this case, Cisco DNAC configures Multicast Source Discovery Protocol (MSDP) peering sessions between the devices and an anycast RP address to be used on the SD-Access fabric.

-

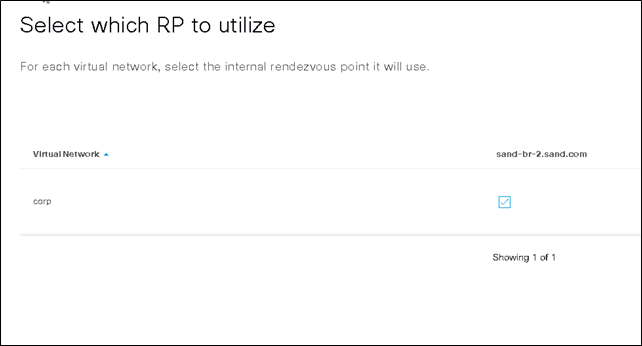

Map the RP to the respective VN:

- Cisco DNAC shows the summary of the multicast configuration for the fabric site.
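When several devices are tagged as RPs, each RP sharing the anycast address needs to exchange source information with the others over MSDP. The Python sketch below assumes a full mesh of MSDP peerings; the exact peering layout Cisco DNAC pushes is not shown in this document, and the device name `br-1` is hypothetical.

```python
from itertools import combinations
from typing import Iterable, List, Tuple


def msdp_sessions(rp_devices: Iterable[str]) -> List[Tuple[str, str]]:
    """Full mesh of MSDP peerings among RPs sharing one anycast RP address."""
    unique = sorted(set(rp_devices))
    # n RPs need n * (n - 1) / 2 sessions; a single RP needs none.
    return list(combinations(unique, 2))
```

With two RP devices, for example `msdp_sessions(["br-2", "br-1"])`, this is a single session between br-1 and br-2.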

Fabric Device Configuration

This section highlights the configuration pushed by Cisco DNA Center to the fabric devices.

Configuration on the fabric border/RP:

############## Create a loopback interface on the border

interface Loopback4099

vrf forwarding corp

ip address 100.1.1.1 255.255.255.255

ip pim sparse-mode

########## Use the loopback as an RP

ip pim vrf corp rp-address 100.1.1.1

*NOTE: With an external RP, the RP address is the address of the RP outside the fabric.

######### Register the RP address with the fabric control plane (CP)

router lisp

instance-id 4099

<…..snip….>

eid-table vrf corp

database-mapping 100.1.1.1/32 locator-set rloc_fc278376-07be-4ef8ec5

######### Advertise the loopback interface to the external domain

router bgp 65002

address-family ipv4 vrf corp

network 100.1.1.1 mask 255.255.255.255

######## CLI to enable native multicast (not applicable to head-end replication)

interface LISP0.4099 <---Native Multicast config under LISP Interface

ip pim lisp transport multicast <---Enables native multicast

ip pim lisp core-group-range 232.0.0.1 1000 <--- Groups used for the SSM underlay

Configuration on the fabric edge:

##### Create a loopback interface on the edge

fe-1#show run int loo 4099

interface Loopback4099

vrf forwarding corp

ip address 100.1.1.3 255.255.255.255

ip pim sparse-mode

end

########## Use the loopback of the border as an RP

ip pim vrf corp rp-address 100.1.1.1

*NOTE: With an external RP, the RP address is the address of the RP outside the fabric.

######## CLI to enable native multicast (not applicable to head-end replication)

interface LISP0.4099 <---Native Multicast config under LISP Interface

ip pim lisp transport multicast <---Enables native multicast

ip pim lisp core-group-range 232.0.0.1 1000 <--- Groups used for the SSM underlay
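The `ip pim lisp core-group-range 232.0.0.1 1000` line above defines the pool of underlay SSM groups. Assuming the second argument is the number of groups (verify against the command reference for your IOS XE release), the pool ends at 232.0.3.232, and every underlay group that appears in the outputs of this document falls inside it. A quick Python check:

```python
import ipaddress
from typing import Tuple


def core_group_bounds(start: str, count: int) -> Tuple[ipaddress.IPv4Address, ipaddress.IPv4Address]:
    """First and last group of a core-group range, assuming 'count' consecutive groups."""
    first = ipaddress.IPv4Address(start)
    return first, first + (count - 1)


first, last = core_group_bounds("232.0.0.1", 1000)
observed = ["232.0.3.170", "232.0.1.106", "232.0.0.213"]  # underlay groups from the outputs
print(first, last)  # 232.0.0.1 232.0.3.232
print(all(first <= ipaddress.IPv4Address(g) <= last for g in observed))  # True
```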

PIM ASM traffic Flow

Traffic source outside fabric and receiver inside fabric using native multicast

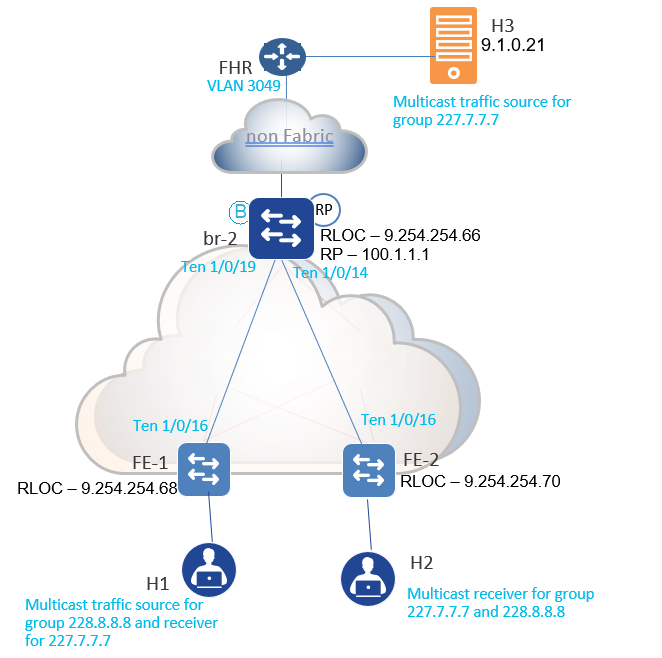

In this section, following our topology, host H3 sends a multicast stream to group 227.7.7.7, and receivers inside the fabric have subscribed to that group. We show the multicast routing table and the expected output on the FHR, the border, and the fabric edge.

First-hop router (FHR) output

FHR #show ip mroute vrf corp 227.7.7.7

(*, 227.7.7.7), 00:09:35/stopped, RP 100.1.1.1, flags: SPF

Incoming interface: Vlan3049, RPF nbr 9.253.253.1

Outgoing interface list: Null

(9.1.0.21, 227.7.7.7), 00:09:35/00:02:51, flags: FT

Incoming interface: Vlan1, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan3049, Forward/Sparse-Dense, 00:08:53/00:02:47

The entries for group 227.7.7.7 show a (*,G) entry rooted at the RP and an (S,G) entry for the shortest-path tree (SPT), which is built from the last-hop router (LHR) all the way to the FHR.

In this case, Vlan3049 is the interface used to reach both the RP and the LHR.

Now, let us look at the multicast routing (mroute) table from the fabric border (BR2):

br-2#sh ip mroute vrf corp 227.7.7.7 verbose

(*, 227.7.7.7), 00:41:40/00:03:20, RP 100.1.1.1, flags: Sp

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

LISP0.4099, (9.254.254.66, 232.0.3.170), Forward/Sparse, 00:41:39/stopped, p

9.254.254.68, 00:41:33/00:03:20 <----------RLOC of FE1

9.254.254.70, 00:41:39/00:03:07 <----------RLOC of FE2

(9.1.0.21, 227.7.7.7), 00:41:40/00:01:58, flags: Tp

Incoming interface: Vlan3049, RPF nbr 9.253.253.2

Outgoing interface list:

LISP0.4099, (9.254.254.66, 232.0.3.170), Forward/Sparse, 00:41:40/stopped, p

9.254.254.68, 00:41:33/00:03:21

9.254.254.70, 00:41:40/00:03:09

The example above is taken from the fabric border, which acts as the RP in this use case. The output shows interested receivers for group 227.7.7.7 on the fabric edges with RLOC IP addresses 9.254.254.68 (FE1) and 9.254.254.70 (FE2).

The LISP0.4099 entry in the outgoing interface list shows that the overlay multicast is carried in an underlay multicast group built as a source-specific multicast (SSM) tree, with source IP 9.254.254.66 (the RLOC of the border) and underlay group 232.0.3.170.
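When many edges join a group, reading the per-RLOC list under the LISP0.4099 outgoing interface by eye gets tedious. The following Python sketch extracts, from `show ip mroute vrf <vrf> <group> verbose` text like the border output above, which RLOCs have joined each underlay (source, group) pair. The regular expressions are written against the sample output in this document and are an assumption about the format; other releases may print these lines differently.

```python
import re
from typing import Dict, Optional, Set, Tuple

# Patterns based on the IOS XE sample output shown in this document.
LISP_OIF = re.compile(r"^\s*LISP0\.\d+, \(([\d.]+), ([\d.]+)\), Forward")
RLOC_LINE = re.compile(r"^\s*(\d+\.\d+\.\d+\.\d+), \d")


def joined_rlocs(output: str) -> Dict[Tuple[str, str], Set[str]]:
    """Map each underlay (source, group) in a LISP OIL to the RLOCs listed under it."""
    result: Dict[Tuple[str, str], Set[str]] = {}
    current: Optional[Tuple[str, str]] = None
    for line in output.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        oif = LISP_OIF.match(line)
        if oif:
            current = (oif.group(1), oif.group(2))
            result.setdefault(current, set())
            continue
        rloc = RLOC_LINE.match(line)
        if rloc and current is not None:
            result[current].add(rloc.group(1))
        else:
            current = None  # any other line ends the RLOC list
    return result


BORDER_OUTPUT = """\
(*, 227.7.7.7), 00:41:40/00:03:20, RP 100.1.1.1, flags: Sp
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    LISP0.4099, (9.254.254.66, 232.0.3.170), Forward/Sparse, 00:41:39/stopped, p
      9.254.254.68, 00:41:33/00:03:20
      9.254.254.70, 00:41:39/00:03:07
"""
```

`joined_rlocs(BORDER_OUTPUT)` maps (9.254.254.66, 232.0.3.170) to the RLOCs of FE1 and FE2, matching the reading of the output above.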

Now, let us look at the underlay multicast routing table for group 232.0.3.170:

br-2#show ip mroute 232.0.3.170 verbose

(9.254.254.66, 232.0.3.170), 02:47:10/00:03:29, flags: sTp

Incoming interface: Null0, RPF nbr 0.0.0.0

Outgoing interface list:

TenGigabitEthernet1/0/19, Forward/Sparse, 02:38:11/00:03:29, p

TenGigabitEthernet1/0/14, Forward/Sparse, 02:43:08/00:02:47

The interfaces TenGigabitEthernet1/0/19 and 1/0/14 are the downstream outgoing interfaces to the fabric edges.

Let us look at the multicast routing (mroute) table on the fabric edge (FE1):

fe-1#sh ip mroute vrf corp 227.7.7.7 verbose

(*, 227.7.7.7), 1d01h/stopped, RP 100.1.1.1, flags: SJC

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.3.170]

Outgoing interface list:

Vlan1027, Forward/Sparse-Dense, 1d01h/00:02:54

(9.1.0.21, 227.7.7.7), 1d01h/00:01:10, flags: JT

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.3.170]

Outgoing interface list:

Vlan1027, Forward/Sparse-Dense, 1d01h/00:02:54

The output above shows that, for the overlay VN, the incoming interface is LISP0.4099, a VxLAN tunnel interface with instance ID 4099, and that the underlay multicast packets are sourced from 9.254.254.66 and destined to 232.0.3.170.

The (*,G) and (S,G) state on the devices follows the basic principle of PIM sparse mode: the SPT is built toward the source once the first packet arrives on the shared tree. This document covers the flow of multicast in the fabric; the fundamentals of multicast are out of its scope.

fe-1#sh ip mroute 232.0.3.170

(9.254.254.66, 232.0.3.170), 1d01h/00:02:24, flags: sT

Incoming interface: TenGigabitEthernet1/0/16, RPF nbr 9.254.254.145

Outgoing interface list:

Null0, Forward/Dense, 1d01h/stopped

The above output shows an entry in the underlay for 232.0.3.170. The multicast tree for the group is built using Source-Specific Multicast (SSM).

Traffic source inside fabric and receiver inside fabric using native multicast

In this section, following our topology, host H1 sends a multicast stream to group 228.8.8.8, and receivers inside the fabric have subscribed to the same group. We show the multicast routing table and the expected output on the fabric edge switches FE1 and FE2.

Let us first look at the multicast routing (mroute) table on FE1, where source H1, which streams multicast data to 228.8.8.8, is connected.

fe-1#show ip mroute vrf corp 228.8.8.8 verbose

(*, 228.8.8.8), 01:43:00/stopped, RP 100.1.1.1, flags: SPF

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.1.106]

Outgoing interface list: Null

(9.10.60.200, 228.8.8.8), 01:43:00/00:01:56, flags: PFT

Incoming interface: Vlan1027, RPF nbr 0.0.0.0

Outgoing interface list: Null

The FHR (FE1) has both a (*,G) and an (S,G) entry, but the (S,G) outgoing interface list is Null (the P flag marks it as pruned) because no receiver has sent an IGMP join for the group yet.
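The flag strings in these entries (SPF, PFT, FTp, SJC, sTI, and so on) are easier to read once decoded. The Python sketch below decodes them with a subset of the legend that IOS XE prints at the top of `show ip mroute`. It covers only the letters that appear in this document; the legend printed by your release is the authoritative reference.

```python
from typing import List

# Subset of the IOS XE 'show ip mroute' entry-flag legend (letters seen in this document).
MROUTE_FLAGS = {
    "S": "Sparse",
    "s": "SSM Group",
    "C": "Connected",
    "P": "Pruned",
    "F": "Register flag",
    "T": "SPT-bit set",
    "J": "Join SPT",
    "I": "Received Source Specific Host Report",
    "p": "PIM Joins on route",
}


def decode_flags(flags: str) -> List[str]:
    """Translate an entry's flag string, keeping unknown letters visible."""
    return [MROUTE_FLAGS.get(letter, f"unknown ({letter})") for letter in flags]


print(decode_flags("PFT"))  # ['Pruned', 'Register flag', 'SPT-bit set']
```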

Let us look at the multicast routing (mroute) table once the receiver on FE2 has initiated an IGMP join for the group 228.8.8.8:

fe-1#show ip mroute vrf corp 228.8.8.8 verbose

(*, 228.8.8.8), 01:44:27/stopped, RP 100.1.1.1, flags: SPF

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.1.106]

Outgoing interface list: Null

(9.10.60.200, 228.8.8.8), 01:44:27/00:02:29, flags: FTp

Incoming interface: Vlan1027, RPF nbr 0.0.0.0

Outgoing interface list:

LISP0.4099, (9.254.254.68, 232.0.1.106), Forward/Sparse, 00:00:30/stopped, p

9.254.254.70, 00:00:30/00:02:59

Once the receiver has sent a join, the initial multicast packets are forwarded along the shared tree. When the LHR receives the first multicast packet, it builds an SPT toward the source, and the overlay (S,G) entry now has an outgoing interface. The LISP0.4099 entry shows that the multicast packets are transported in underlay multicast group 232.0.1.106 with source address 9.254.254.68, the RLOC of FE1.
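The shared-tree-to-SPT switchover described above can be modeled as a tiny state machine. A toy Python sketch, assuming the IOS default SPT threshold of 0 (switch on the first packet). `LastHopRouter` is a hypothetical illustration, not a real API, and joins are recorded as sent "toward" the RP or source rather than to a specific RPF neighbor.

```python
from typing import Dict, List, Tuple


class LastHopRouter:
    """Toy PIM-SM last-hop router: shared tree first, SPT after the first packet."""

    def __init__(self, rp: str) -> None:
        self.rp = rp
        self.mroutes: Dict[Tuple[str, str], str] = {}  # (S or "*", G) -> join target
        self.joins_sent: List[Tuple[str, str, str]] = []

    def igmp_join(self, group: str) -> None:
        # A local receiver appears: create (*,G) and join toward the RP.
        key = ("*", group)
        if key not in self.mroutes:
            self.mroutes[key] = self.rp
            self.joins_sent.append(("*", group, self.rp))

    def packet_on_shared_tree(self, source: str, group: str) -> None:
        # First packet from S via the RP: create (S,G) and join toward S (threshold 0).
        if ("*", group) in self.mroutes and (source, group) not in self.mroutes:
            self.mroutes[(source, group)] = source
            self.joins_sent.append((source, group, source))


lhr = LastHopRouter(rp="100.1.1.1")
lhr.igmp_join("228.8.8.8")
lhr.packet_on_shared_tree("9.10.60.200", "228.8.8.8")
```

After these two events, the model holds both a (*,G) and an (S,G) entry, mirroring the pair of entries seen on FE2 later in this section.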

Let us check the (S,G) for the underlay multicast group:

fe-1#show ip mroute 232.0.1.106 verbose

(9.254.254.68, 232.0.1.106), 00:00:08/00:03:21, flags: sTp

Incoming interface: Null0, RPF nbr 0.0.0.0

Outgoing interface list:

TenGigabitEthernet1/0/16, Forward/Sparse, 00:00:08/00:03:21, p

(9.254.254.66, 232.0.1.106), 00:00:50/00:02:09, flags: sT

Incoming interface: TenGigabitEthernet1/0/16, RPF nbr 9.254.254.145

lisp eid refcnt: 1

Outgoing interface list:

Null0, Forward/Dense, 00:00:50/stopped

The first (S,G) entry, (9.254.254.68, 232.0.1.106), was created for the SPT and forwards the overlay multicast data from FE1.

The second (S,G) entry, (9.254.254.66, 232.0.1.106), is used to transport multicast data from the shared tree rooted at the RP. On FE2, the receiver side, the overlay mroute table looks as follows:

fe-2#show ip mroute vrf corp 228.8.8.8 verbose

(*, 228.8.8.8), 00:00:00/stopped, RP 100.1.1.1, flags: SJC

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.1.106]

Outgoing interface list:

Vlan1027, Forward/Sparse-Dense, 00:00:00/00:02:59

(9.10.60.200, 228.8.8.8), 00:00:00/00:02:59, flags: JT

Incoming interface: LISP0.4099, RPF nbr 9.254.254.68, LISP: [9.254.254.68, 232.0.1.106]

Outgoing interface list:

Vlan1027, Forward/Sparse-Dense, 00:00:00/00:02:59

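The output shows an active receiver on VLAN 1027. The (*,G) entry is fed from the border (RPF neighbor 9.254.254.66) and the (S,G) entry directly from FE1 (RPF neighbor 9.254.254.68); in both cases, the overlay multicast is transported in underlay multicast group 232.0.1.106.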
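To see at a glance which RLOC and underlay group feed each overlay entry, the LISP field of the incoming interface line can be parsed. A Python sketch written against the FE2 output above; the regular expressions are an assumption about the output format and may need adjusting for other releases.

```python
import re
from typing import Dict, Optional, Tuple

ENTRY = re.compile(r"^\(([^,]+), ([^)]+)\),")
LISP_IIF = re.compile(
    r"Incoming interface: LISP0\.(\d+), RPF nbr ([\d.]+), LISP: \[([\d.]+), ([\d.]+)\]"
)


def overlay_to_underlay(output: str) -> Dict[Tuple[str, str], dict]:
    """Map overlay (S or *, G) entries to their LISP instance, RPF RLOC and underlay (S, G)."""
    mapping: Dict[Tuple[str, str], dict] = {}
    entry: Optional[Tuple[str, str]] = None
    for raw in output.splitlines():
        line = raw.strip()
        header = ENTRY.match(line)
        if header:
            entry = (header.group(1), header.group(2))
            continue
        iif = LISP_IIF.search(line)
        if iif and entry is not None:
            mapping[entry] = {
                "instance_id": int(iif.group(1)),
                "rpf_rloc": iif.group(2),
                "underlay": (iif.group(3), iif.group(4)),
            }
    return mapping


FE2_OUTPUT = """\
(*, 228.8.8.8), 00:00:00/stopped, RP 100.1.1.1, flags: SJC
  Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.1.106]
  Outgoing interface list:
    Vlan1027, Forward/Sparse-Dense, 00:00:00/00:02:59
(9.10.60.200, 228.8.8.8), 00:00:00/00:02:59, flags: JT
  Incoming interface: LISP0.4099, RPF nbr 9.254.254.68, LISP: [9.254.254.68, 232.0.1.106]
  Outgoing interface list:
    Vlan1027, Forward/Sparse-Dense, 00:00:00/00:02:59
"""
```

The result shows the (*,G) entry fed by the border RLOC and the (S,G) entry fed by FE1's RLOC, both over underlay group 232.0.1.106.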

PIM SSM traffic Flow

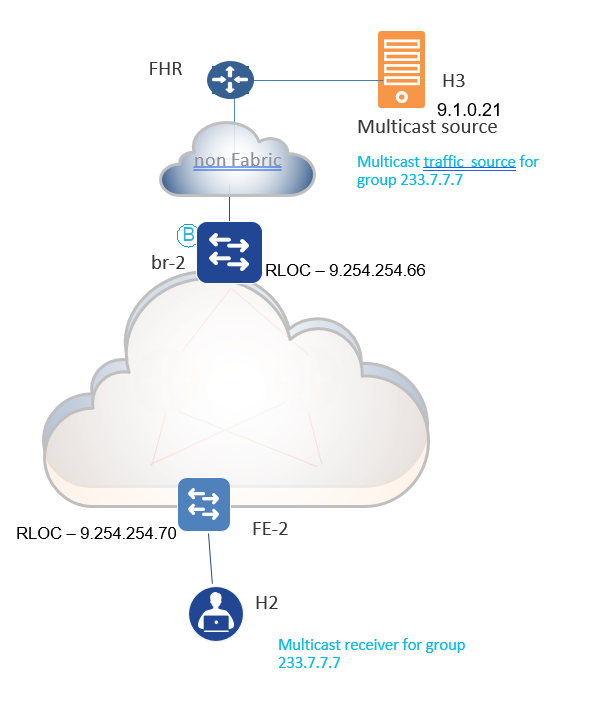

Traffic source outside fabric and receiver inside fabric using native multicast

In this section, we use the Cisco DNAC workflow to configure overlay multicast support for SSM. A host in the fabric sends an IGMPv3 join and subscribes to multicast traffic for group 233.7.7.7.

The source resides outside the fabric and streams multicast to group address 233.7.7.7.

Since SSM needs to know the source of the stream, IGMPv3 is used to inform the LHR of the source so that it can build an SPT toward the FHR.
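The LHR's decision can be sketched as follows: if the reported group is in the SSM range and the IGMPv3 group record lists sources in INCLUDE mode, join each (S,G) channel toward its source. A minimal Python sketch, assuming the SSM range 233.0.0.0/8 provisioned for VN corp in this example (the ACL entry permit 233.0.0.0 0.255.255.255 is equivalent to that prefix) and ignoring EXCLUDE-mode (non-source-specific) records, since SSM delivers only explicitly requested channels; `ssm_channels` is a hypothetical name.

```python
import ipaddress
from typing import Iterable, List, Tuple

# 'permit 233.0.0.0 0.255.255.255' in SSM_RANGE_corp is the same as 233.0.0.0/8.
SSM_RANGE_CORP = ipaddress.IPv4Network("233.0.0.0/8")


def ssm_channels(group: str, mode: str, sources: Iterable[str]) -> List[Tuple[str, str]]:
    """(S,G) channels an LHR would join for one IGMPv3 group record (sketch)."""
    if ipaddress.IPv4Address(group) not in SSM_RANGE_CORP:
        return []  # not an SSM group for this VN
    if mode != "INCLUDE":
        return []  # SSM only serves explicitly requested sources
    return [(source, group) for source in sources]
```

The IGMP output from FE2 later in this section matches this case: group 233.7.7.7 in INCLUDE mode with source 9.1.0.21, giving the single channel (9.1.0.21, 233.7.7.7).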

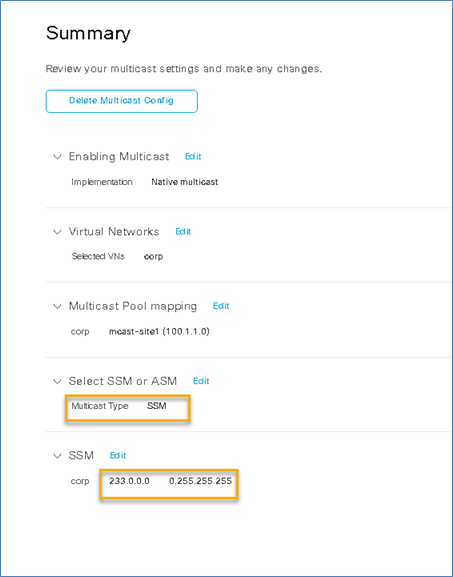

The workflow summary shows the configuration pushed through Cisco DNA Center to the fabric site. Note that no RP is configured in the overlay, since SSM does not use an RP.

The SSM use case uses the topology shown at the start of this section.

Now let us look at the relevant configuration provisioned on the devices by this workflow in Cisco DNAC.

Configuration on Fabric edge:

fe-2#show run | in pim

##### Use the PIM loopback (Loopback4099) as the PIM register source

ip pim vrf corp register-source Loopback4099

##### Apply the SSM range defined in the workflow

ip pim vrf corp ssm range SSM_RANGE_corp

fe-2#sh ip access-lists SSM_RANGE_corp

Standard IP access list SSM_RANGE_corp

10 permit 233.0.0.0, wildcard bits 0.255.255.255 (252 matches)

fe-2#sh run int loo 4099

interface Loopback4099

vrf forwarding corp

ip address 100.1.1.5 255.255.255.255

ip pim sparse-mode

Configuration on the border:

br-2#show run | in pim

##### Use the PIM loopback (Loopback4099) as the PIM register source

ip pim vrf corp register-source Loopback4099

##### Apply the SSM range defined in the workflow

ip pim vrf corp ssm range SSM_RANGE_corp

br-2#sh ip access-lists SSM_RANGE_corp

Standard IP access list SSM_RANGE_corp

10 permit 233.0.0.0, wildcard bits 0.255.255.255 (10 matches)

br-2#sh run int loo 4099

interface Loopback4099

vrf forwarding corp

ip address 100.1.1.2 255.255.255.255

ip pim sparse-mode

Now that we have seen the configuration pushed, let us verify the outputs for a traffic flow:

fe-2#show ip igmp vrf corp groups 233.7.7.7 detail

Flags: L - Local, U - User, SG - Static Group, VG - Virtual Group,

SS - Static Source, VS - Virtual Source,

Ac - Group accounted towards access control limit

Interface: Vlan1027

Group: 233.7.7.7

Flags: SSM

Uptime: 01:06:29

Group mode: INCLUDE

Last reporter: 9.10.60.25

Group source list: (C - Cisco Src Report, U - URD, R - Remote, S - Static,

V - Virtual, M - SSM Mapping, L - Local,

Ac - Channel accounted towards access control limit)

Source Address Uptime v3 Exp CSR Exp Fwd Flags

9.1.0.21 01:06:29 00:02:50 stopped Yes R

Verification of the multicast routing table on FE2

fe-2#show ip mroute vrf corp 233.7.7.7 verbose

IP Multicast Routing Table

(9.1.0.21, 233.7.7.7), 01:34:35/00:02:45, flags: sTI

Incoming interface: LISP0.4099, RPF nbr 9.254.254.66, LISP: [9.254.254.66, 232.0.0.213]

Outgoing interface list:

Vlan1027, Forward/Sparse-Dense, 01:34:35/00:02:45

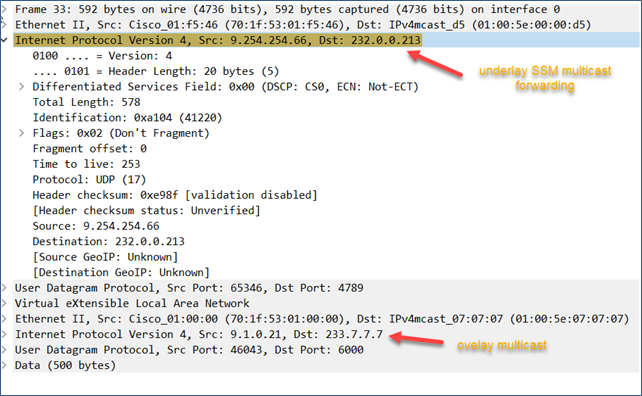

The IGMP output shows the IGMPv3 report from the receiver; IGMPv3 reports carry the address of the multicast source (here 9.1.0.21). The multicast routing (mroute) table shows the overlay tree built from the receiver toward the source and the corresponding underlay multicast transport, (9.254.254.66, 232.0.0.213).

fe-2#show ip mroute 232.0.0.213 verbose

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(9.254.254.66, 232.0.0.213), 01:34:55/00:01:04, flags: sT

Incoming interface: GigabitEthernet1/0/23, RPF nbr 9.254.254.121

lisp eid refcnt: 1

Outgoing interface list:

Null0, Forward/Dense, 01:34:55/stopped

br-2#show ip mroute vrf corp 233.7.7.7 verbose

(9.1.0.21, 233.7.7.7), 01:36:06/00:02:47, flags: sTp

Incoming interface: Vlan3049, RPF nbr 9.253.253.2

Outgoing interface list:

LISP0.4099, (9.254.254.66, 232.0.0.213), Forward/Sparse, 01:36:06/stopped, p

9.254.254.70, 01:36:06/00:02:47

br-2#show ip mroute 232.0.0.213 verbose

(9.254.254.66, 232.0.0.213), 01:36:29/00:03:23, flags: sTp

Incoming interface: Null0, RPF nbr 0.0.0.0

Outgoing interface list:

TenGigabitEthernet1/0/14, Forward/Sparse, 01:36:29/00:03:23, p

A packet capture shows the SSM overlay multicast stream being transported in an SSM underlay multicast group.

Conclusion

We have discussed native multicast and the use cases for PIM ASM and PIM SSM. The head-end replication mode of operation is covered in detail in a separate, attached document.

In summary, Native Multicast enables efficient delivery of multicast packets within the fabric and the new workflow added to Cisco DNAC makes it easier for the administrator to choose the right options and automate the intent.
