
Evaluating Cisco SDWAN Cloud onRamp for Colocations with dCloud


03-21-2019 08:51 AM - edited 03-28-2019 12:29 PM

Note: This lab uses the dCloud SD-WAN platform, which you must schedule ahead of time before proceeding!

Digitization is placing unprecedented demands on IT to increase the speed of services and applications delivered to customers, partners, and employees, all while maintaining security and a high quality of experience. With the adoption of multi-cloud infrastructure, the need to connect multiple user groups in an optimized and secure manner places additional demands on IT teams.

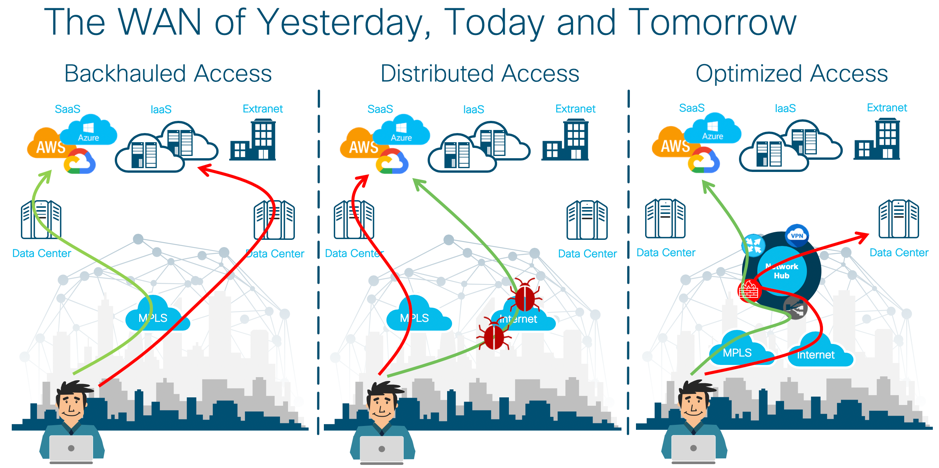

The traditional architectural method of delivering traffic optimization (e.g. load balancing, security policy, WAN optimization) relied on centralized provisioning of elements such as Firewalls, Intrusion Detection/Prevention sensors, Data Leak Prevention systems, URL filtering, Proxies and similar devices at aggregation points within the network (most commonly the organization's Data Centers). For SaaS applications and Internet access, this approach backhauled user traffic from remote sites into the main Data Centers, which increased application latency and degraded the overall user experience. For applications hosted in the Data Center, it potentially wasted Data Center bandwidth. This architecture also made it difficult to mitigate security incidents effectively, such as virus outbreaks, malware exploits and internally sourced denial of service attacks.

Today, as we move into the era of SDWAN, this problem is exacerbated by the architectural shift into a distributed access model. Branches and users are now free to access SaaS applications and Internet resources directly – bypassing the aggregation points highlighted above. While this provides a much more efficient method of moving data from point A to point B, it poses a challenge to IT teams looking to maintain their traditional optimization and security policies.

Cloud onRamp for CoLocation solves these challenges by offering the capability of virtualizing your network edge and extending it to colocation centers – bringing the cloud to you, versus you extending to the cloud. Cisco Cloud onRamp for CoLocation provides virtualization, automation and orchestration for your enterprise – negating the need to design infrastructure for future requirements or scale by providing an agile way of scaling up and down as required.

Step 1: Building a Cloud onRamp CoLocation Cluster

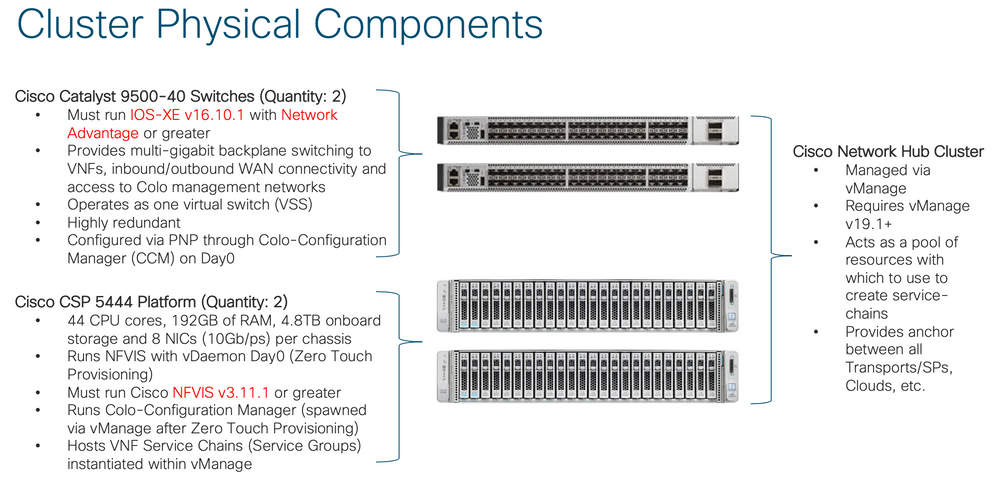

Cloud onRamp for CoLocation is designed to be prescriptive and turn-key. Hence, when the solution is purchased, the equipment can be drop-shipped to the colocation facility of choice, where it will be racked, stacked and cabled by local resources.

Upon initial boot-up, the built-in vDaemon component of NFVIS begins the auto-provisioning process. This is where our lab picks up. We assume that the equipment is racked, cabled and booted up, and that the CSPs have built their control channels to vManage and are awaiting further provisioning by the administrator.

The following requirements must be met when implementing Cisco SDWAN Cloud onRamp for CoLocation:

- You must be running IOS-XE v16.10.1 or greater on your Catalyst switches

- You must be running a Network Advantage license or higher on your Catalyst switches

- CSPs must be running NFVIS v3.11.1 or greater

- You must be running vManage v19.1 or greater

- You must have a DHCP server (capable of DHCP Option 43) running in the management subnet of the colocation
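Because these are minimum releases, a quick pre-check of your inventory can prevent a failed deployment. The following Python sketch compares release strings numerically, because a plain string comparison would wrongly rank 16.9 above 16.10. It assumes you have already collected each component's version by hand. The `inventory` keys and values are hypothetical, and nothing here queries a Cisco API. The sketch does not cover the licensing or DHCP Option 43 requirements.

```python
# Minimum releases from the requirements list above.
MINIMUM_VERSIONS = {
    "catalyst_iosxe": "16.10.1",
    "csp_nfvis": "3.11.1",
    "vmanage": "19.1",
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted release such as '16.10.1' into (16, 10, 1).

    Assumes purely numeric components (suffixes like '16.10.1a' are not handled).
    """
    return tuple(int(part) for part in text.split("."))


def meets_minimum(current: str, minimum: str) -> bool:
    """Compare releases component by component, padding the shorter one with zeros."""
    cur, req = parse_version(current), parse_version(minimum)
    width = max(len(cur), len(req))
    cur += (0,) * (width - len(cur))
    req += (0,) * (width - len(req))
    return cur >= req


def check_inventory(inventory: dict[str, str]) -> list[str]:
    """Return the components whose reported version is below the minimum."""
    return [
        component
        for component, version in inventory.items()
        if not meets_minimum(version, MINIMUM_VERSIONS[component])
    ]


if __name__ == "__main__":
    # Hypothetical versions gathered from the lab devices.
    inventory = {"catalyst_iosxe": "16.10.1", "csp_nfvis": "3.10.2", "vmanage": "19.1.0"}
    print(check_inventory(inventory))  # ['csp_nfvis']
```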

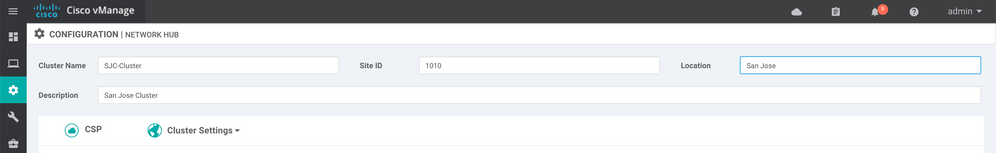

1. The first step to cluster creation is to allocate the devices appropriately within vManage. Navigate to Configuration, Network Hub (Note: this menu item will be renamed with v19.1+).

2. Click the Configure & Provision Cluster button at the bottom of the screen.

3. Provide a Name, Site ID, Location and Description for your cluster. Except for Site ID, these values are somewhat arbitrary and mainly help distinguish clusters if your organization has many. In a real-world deployment, ensure that the Site ID you choose falls in line with the organization's Site ID structure for other overlay elements. Here, I will use the following values:

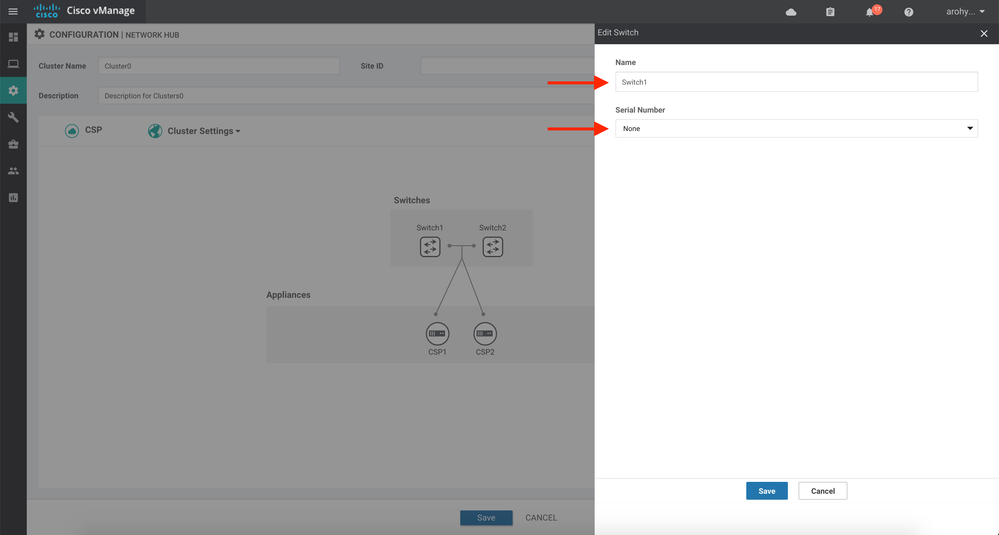

4. Next, we need to assign switches and CSPs to this cluster. Click on the Switch1 icon in the graphic.

5. Provide a name for your switch, select a serial number from the dropdown list, and click the Save button (Note: it does not matter which serial number you choose, because the switches form a Virtual Switch Stack and operate as one logical entity):

6. Repeat the previous step for Switch2 and each CSP (Note: the CSPs have identical cabling to each Catalyst switch in the cluster. Hence, it does not matter which serial number you choose from the dropdown for each CSP).

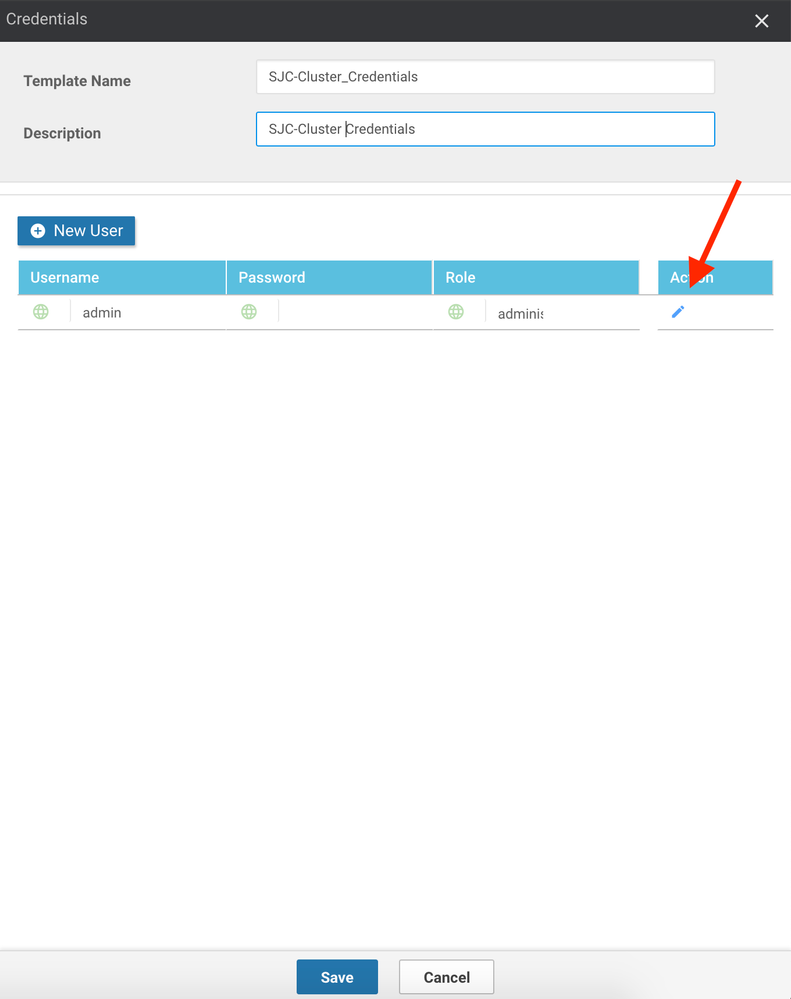

7. Click the Credentials button on the right pane.

8. Since the solution ships with a blank configuration, it is important to configure credentials on each device. Although customers are unlikely to access the console of these devices, the credentials are necessary for Cisco TAC troubleshooting and for more advanced configuration review. Click the pencil icon next to the existing admin credentials (or create your own with the New User button):

9. Provide a username, password and role (or simply a password, if using the default admin account) and click the Save Changes button.

10. Click the Save button to close out the credential window.

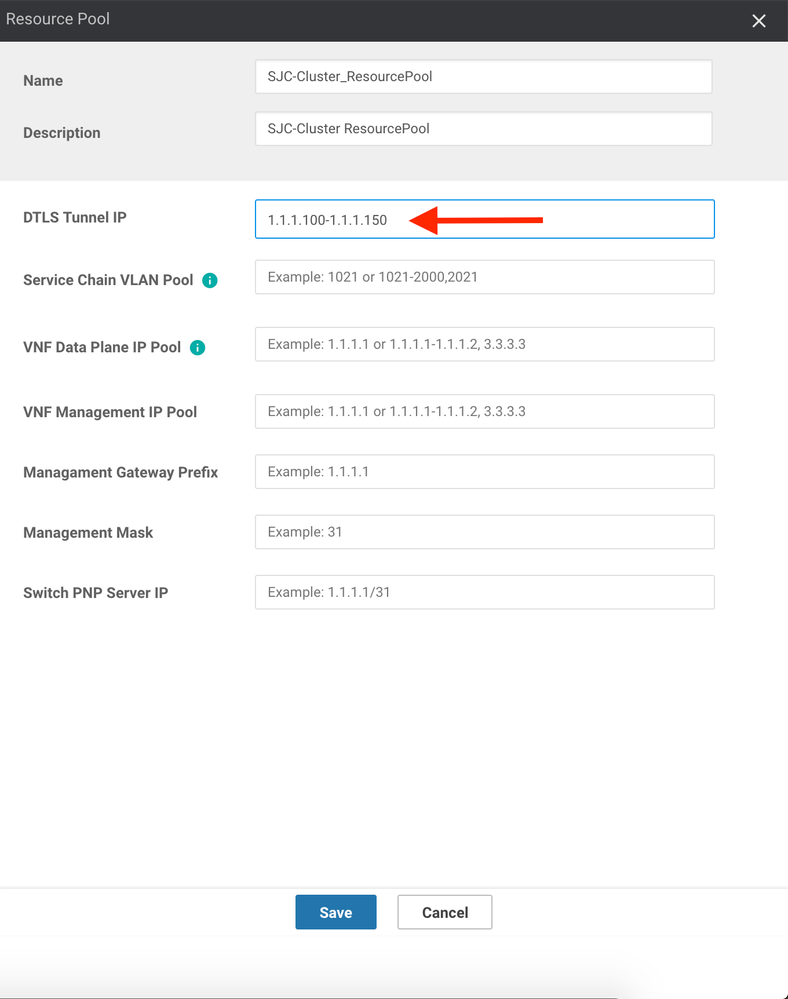

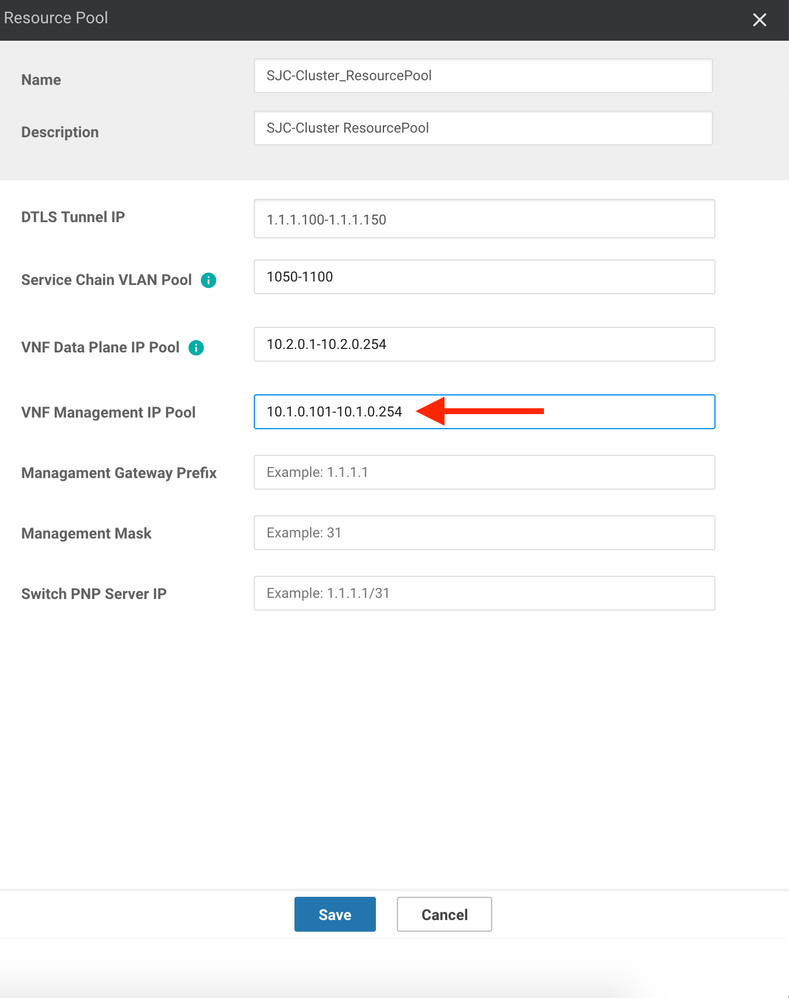

11. Click the Resource Pool button.

12. Here, we will set the "underpinnings" of how the cluster will stitch together the various service chains that we build. As VNFs are built on each cluster, the solution needs a pool of VLANs (L2) and IP Addresses (L3) to pull from that can be used to stitch devices together. For the DTLS Tunnel IP (a.k.a. System IP), enter a pool of IP Addresses that can be assigned as System IPs to devices that will join the SDWAN overlay (in the real world, this should fall in line with the organization's System IP policy). For this lab, we will use 1.1.1.100 through 1.1.1.150:

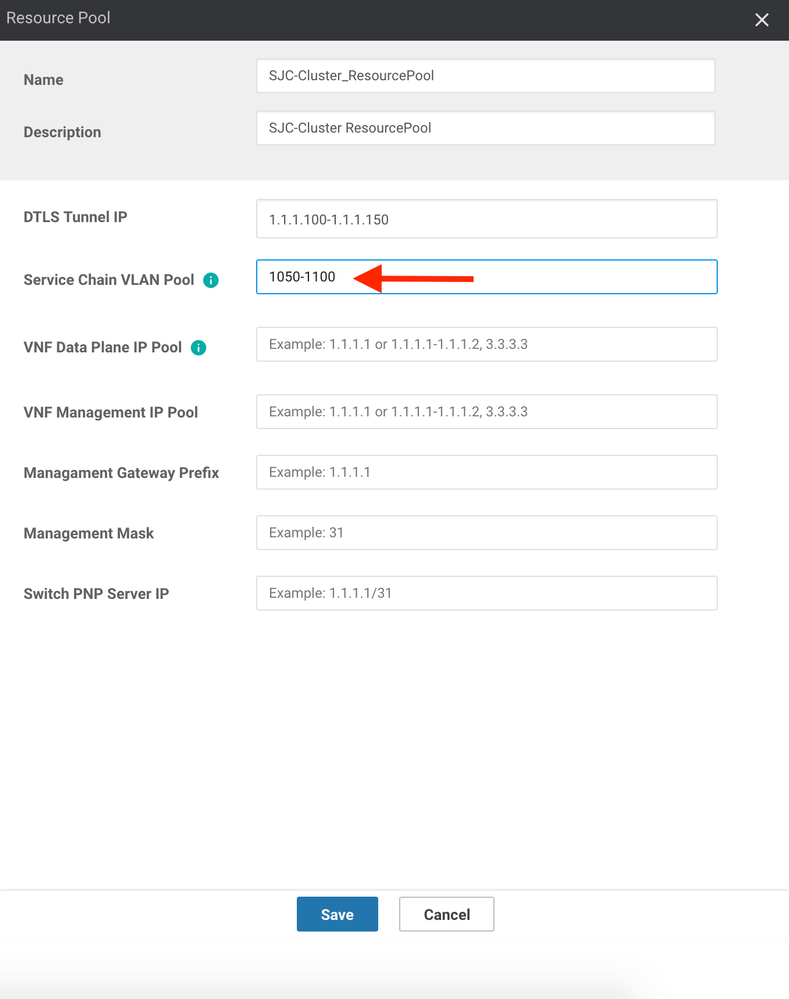

13. In the Service Chain VLAN Pool field, enter the range of VLANs the cluster can use. These VLANs are typically not exposed outside of the cluster, though they can be. Ensure that they do not overlap with other Data Center VLANs. This pool is used to stitch VNFs together at Layer 2. Here, I will use 1050 through 1100:

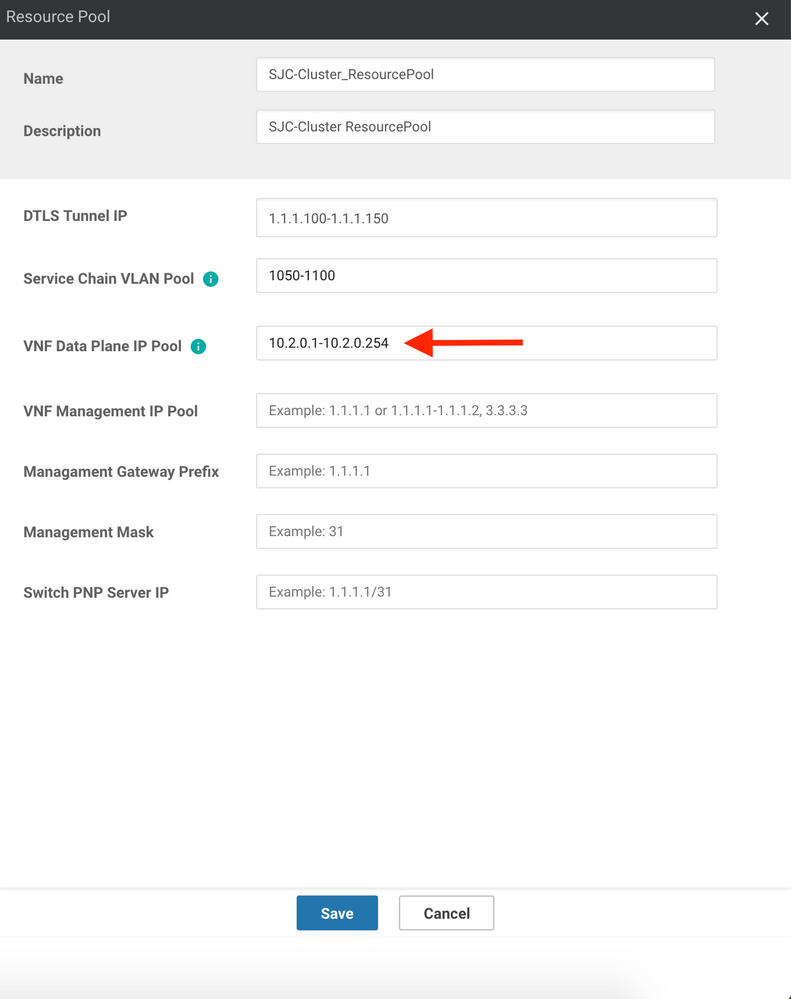

14. Since the solution can pass basic values into VNFs as they boot up (as part of Day0 provisioning), provide a pool of IP addresses that the system can assign to the data interfaces of VNFs. This pool stitches the VNFs together in a Service Chain at Layer 3. Here, I will use 10.2.0.1 through 10.2.0.254:

15. Next, enter an IP Address pool that can be used by each VNF for management purposes. If the VNF has a dedicated management interface, the solution will automatically assign an IP Address to it from this pool. This address should be routable throughout your organization so that you have management access to each individual VNF. Here, I will use 10.1.0.101 through 10.1.0.254:

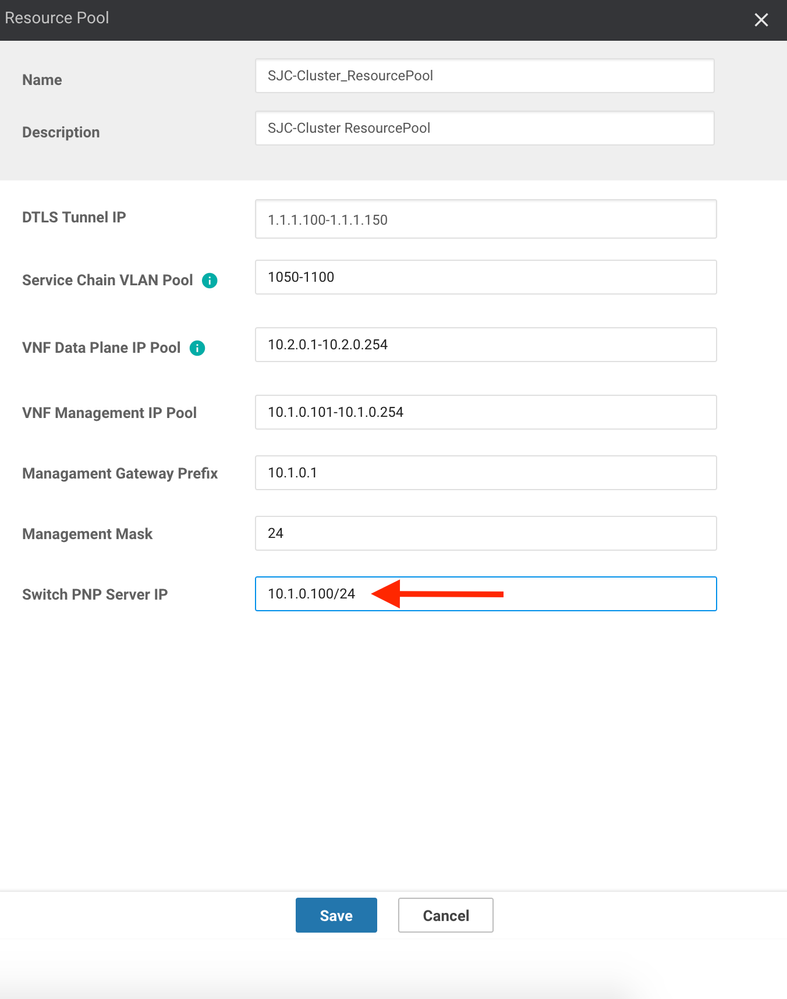

16. Next, set the Management Gateway Prefix (i.e. the Default Gateway) for the management subnet you just entered. Here, I will use 10.1.0.1.

17. Set the Management Mask to the correct subnet mask for your management subnet. Here, I will use /24.

18. Lastly, in the Switch PNP Server IP field, enter the IP address that the Colo Configuration Manager (CCM) will use. The Catalyst switches do not run vDaemon and therefore cannot connect directly to vManage. For this reason, a separate container called Colo Configuration Manager is spawned on the CSPs. This "mini server" acts as a proxy between the switches and vManage: vManage pushes configuration for the switches to the CCM container, which then relays it to the Catalyst 9500s (Note: CCM has no GUI, and the customer is not expected to interact with it directly. Apart from the IP address you provide here, it installs silently). Here, I will use 10.1.0.100/24:

19. Click the Save button.

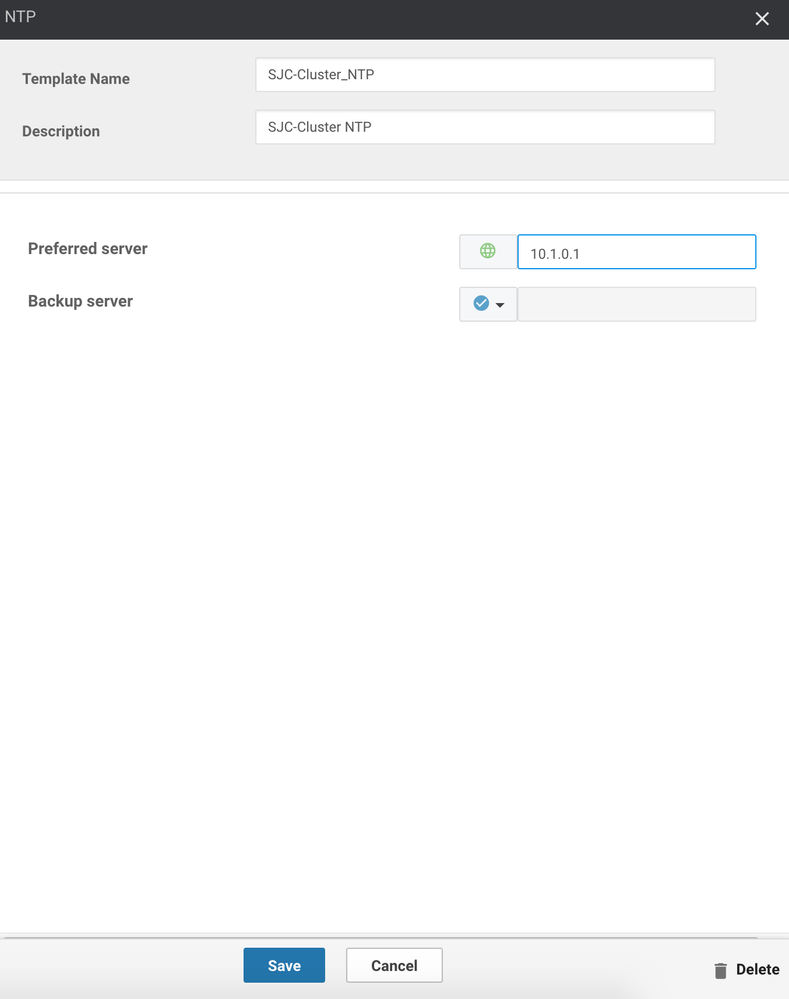

20. Next, click the Cluster Settings button, followed by NTP. Notice that you can also specify a Syslog server using this method.

21. Enter the NTP server(s) you wish to use for this cluster (Note: NTP is an important consideration here as authentication to the SDWAN overlay relies on certificate validation). Here, I will use 10.1.0.1:

22. Click the Save button.

23. Click the Save button again to save the entire cluster configuration.

24. Now that our cluster is configured, we need to activate it. This process generates the templates that will be used to configure the CSPs as well as CCM. Click on the ellipsis (three dots) to the right of your new cluster and choose Activate.

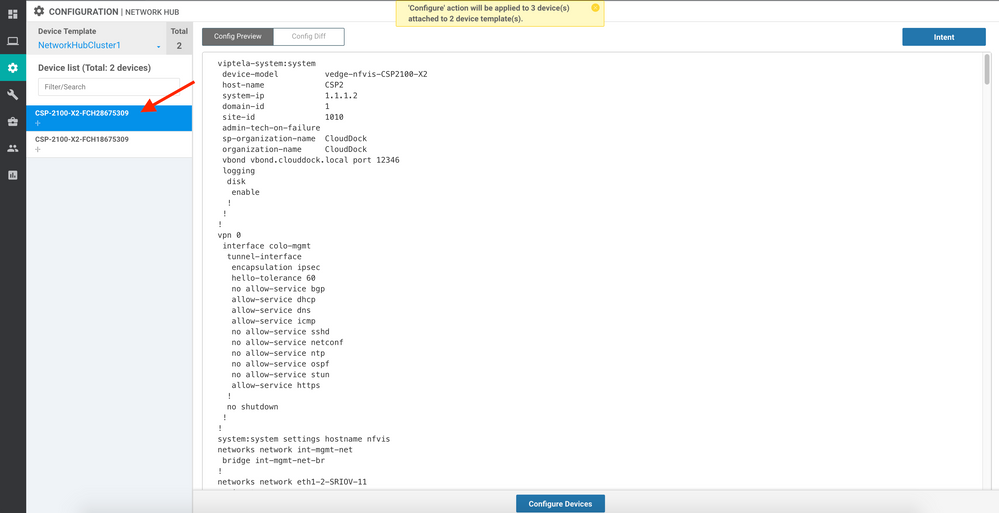

25. In the screen that follows, you may preview the configuration that was generated by clicking on each of your CSPs:

26. Click the Configure Devices button.

27. In the window that appears, confirm that three devices will be configured (2x CSP and 1x CCM).

28. Your cluster will now be activated and each device configured. This process can take upwards of 15 minutes. If your devices have not yet built a control channel to vManage, the cluster status will change to Pending. If your devices have already built their control channels, they will begin configuration.
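Before activating, you can check the Resource Pool values from steps 12 through 18 for size and overlap. The following Python sketch does this offline with the standard `ipaddress` module and the lab's values. vManage does not run this check. The rule that the gateway and CCM addresses must sit outside the VNF management pool is our own assumption, suggested by the lab's choice of .100 for CCM and .101-.254 for VNFs. `existing_dc_vlans` is a hypothetical stand-in for the VLANs already in use in your Data Center.

```python
import ipaddress

# Values entered in the Resource Pool steps of this lab.
SYSTEM_IP_POOL = ("1.1.1.100", "1.1.1.150")
SERVICE_CHAIN_VLAN_POOL = (1050, 1100)
DATA_IP_POOL = ("10.2.0.1", "10.2.0.254")
MGMT_IP_POOL = ("10.1.0.101", "10.1.0.254")
MGMT_SUBNET = ipaddress.ip_network("10.1.0.0/24")  # gateway 10.1.0.1 with a /24 mask
MGMT_GATEWAY = ipaddress.ip_address("10.1.0.1")
CCM_IP = ipaddress.ip_address("10.1.0.100")


def bounds(pool):
    """Convert an inclusive (first, last) pair of strings into address objects."""
    return ipaddress.ip_address(pool[0]), ipaddress.ip_address(pool[1])


def pool_size(pool) -> int:
    first, last = bounds(pool)
    return int(last) - int(first) + 1


def contains(pool, address) -> bool:
    first, last = bounds(pool)
    return first <= address <= last


def pools_overlap(a, b) -> bool:
    a_first, a_last = bounds(a)
    b_first, b_last = bounds(b)
    return a_first <= b_last and b_first <= a_last


def validate(existing_dc_vlans: set) -> list:
    """Return a list of problems; an empty list means no conflict was found."""
    problems = []
    low, high = SERVICE_CHAIN_VLAN_POOL
    clash = existing_dc_vlans & set(range(low, high + 1))
    if clash:
        problems.append(f"Service Chain VLANs already in use: {sorted(clash)}")
    first, last = bounds(MGMT_IP_POOL)
    if first not in MGMT_SUBNET or last not in MGMT_SUBNET:
        problems.append("management pool is not inside the management subnet")
    for label, address in (("gateway", MGMT_GATEWAY), ("CCM", CCM_IP)):
        if address not in MGMT_SUBNET:
            problems.append(f"{label} {address} is outside {MGMT_SUBNET}")
        # Assumption: these must not collide with addresses handed out to VNFs.
        if contains(MGMT_IP_POOL, address):
            problems.append(f"{label} {address} falls inside the VNF management pool")
    if pools_overlap(DATA_IP_POOL, MGMT_IP_POOL):
        problems.append("data and management pools overlap")
    return problems


if __name__ == "__main__":
    print("System IPs:", pool_size(SYSTEM_IP_POOL))  # 51
    print(validate(existing_dc_vlans={10, 20, 100}))  # []
```

With these values, the System IP pool holds 51 addresses, and the VNF management pool holds 154.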

Step 2: Building a Service Chain and Adjusting Policy

The next step in our journey is to build our Service Chains and start pushing traffic through them. To do this, we must first upload the necessary VNFs to vManage's Software Repository.

1. Navigate to Maintenance, Software Repository.

2. Click the Virtual Images tab.

3. Your dCloud lab has a few packages already downloaded for you to upload as practice.

4. Click the Upload Virtual Image button.

5. Click the Browse button and navigate to the C:\Users\Administrator\Desktop\SD-WAN Demo\Software folder.

6. There are two *.tar.gz files in this folder: one for vEdge Cloud and one for Firepower Threat Defense (FTD). Select both files (Shift + Click) and click the Upload button.

7. Allow the files a few moments to upload (30-60 seconds).

8. Next, navigate to Configuration, Network Hub.

9. Click the Service Group tab at the top of the page. Since multiple Service Chains can be created that each serve a broader purpose, the concept of Service Groups has been introduced. As an example, suppose you have a Service Group called "Business Partners." Some business partners may not require the same level of scrutiny that others do, while some may require very specific optimization policies. Hence, you can create multiple Service Chains to satisfy these needs all under the broader Service Group. Additionally, Service Groups allow for both lateral and North/South movement between Service Chains. In essence, traffic can enter one Service Chain, but be handed off to a different Service Chain within the Group, when/where necessary.

10. Click the Create Service Group button.

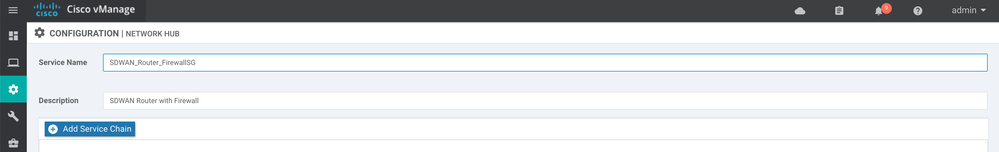

11. Provide a Name and Description for your Service Group. Here, we can use "SDWAN_Router_FirewallSG" and "SDWAN Router with Firewall":

12. Click the Add Service Chain button.

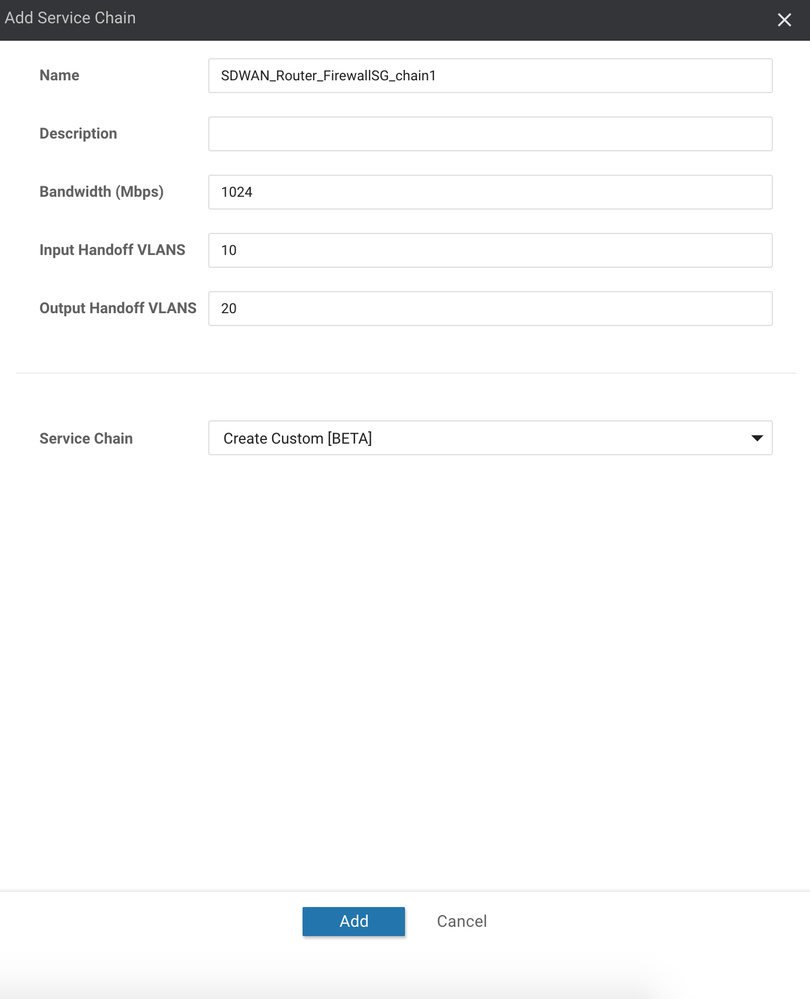

13. In the pane that appears, enter the details of your Service Chain: Bandwidth (used to place the chain on a CSP that can support your requirement and to allocate the correct QoS shaping/policing values), Input VLAN (how you will deliver traffic to the Service Chain), Output VLAN (how traffic will exit the Service Chain) and Service Chain structure (which determines the order of VNFs). Here, we will use 1024 Mbps for the bandwidth, VLAN 10 for the Input VLAN, VLAN 20 for the Output VLAN and Create Custom:

14. Click the Add button.

15. In the window that appears, click and drag the router and firewall icons to the blank Service Chain pane:

16. Click the router icon in the Service Chain.

17. In the window that appears, select the software package that will be used to instantiate this VNF (in our case, vEdge Cloud).

18. Click the Fetch VNF Properties button that appears.

19. Name your router and select CPU, memory and Disk allocations (these should pre-populate for you as part of the package).

20. Select an available Serial Number from the dropdown list (any available is fine, since these are all vEdge Cloud serials).

21. Enter the WAN IP Address and Gateway. Here, I will use 192.168.10.180 and 192.168.10.1, respectively.

22. Enter the Service VPN Number. Devices that follow in the Service Chain will exist within this VPN/VRF on the SDWAN overlay. Here, I will use VPN 10.

23. Click the Configure button.

24. Next, click on the firewall icon within the Service Chain.

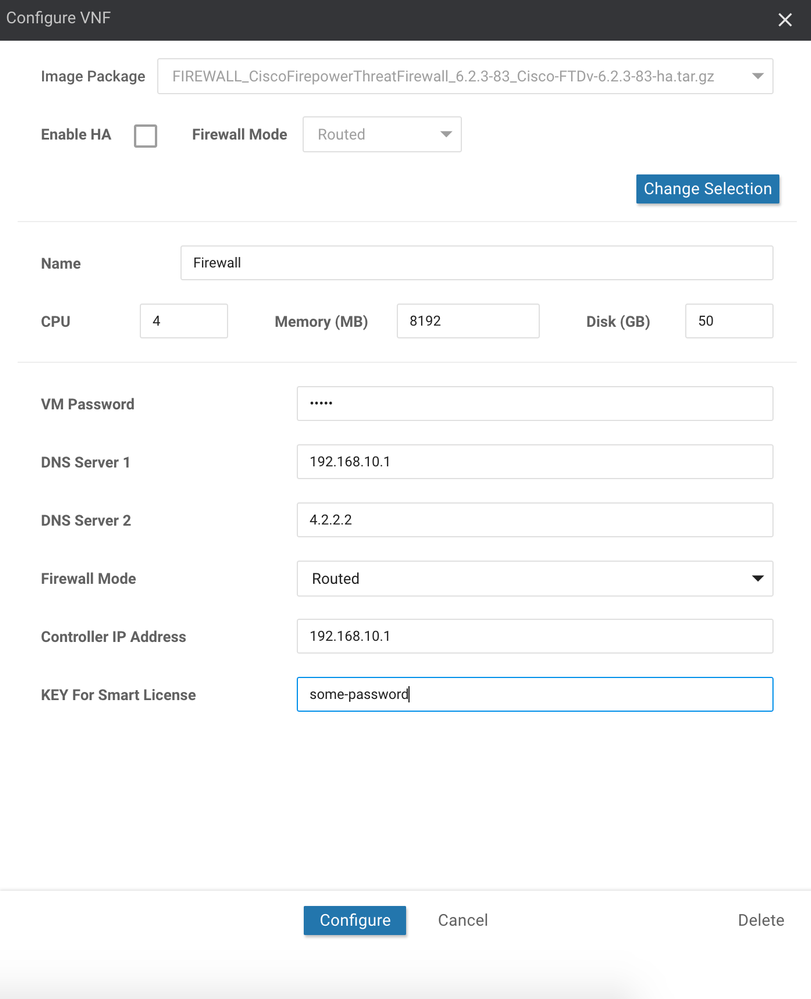

25. Select your FTD package.

26. Notice the option for HA appears as well as a VNF termination mode (Routed/Transparent). For capable VNFs, these options will be presented and, if checked, automatically configured for you (i.e. dual FTD appliances will be built and automatically joined as an HA pair). Click the Fetch VNF Properties button.

27. Configure your FTD appliance as follows:

28. Click the Configure button.

29. Click the Save button to save your Service Chain.

30. In the screen that follows, click on the three dots (ellipsis) to the right of your new Service Chain and choose Attach Cluster. Select your newly built cluster and click the Attach button. This will fail in your dCloud lab since no actual hardware is present!
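The Service Chain built above can be described as data: a bandwidth, an input and an output VLAN, and an ordered list of VNFs. The following Python sketch models it that way. It also adds a toy first-fit placement to show why the Bandwidth field matters. The class and function names, the CSP names and their spare bandwidth are all hypothetical. The first-fit rule is an illustration only, not vManage's actual placement logic. The sketch assumes the VNF order is router, then firewall.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VNF:
    role: str   # e.g. "router" or "firewall"
    image: str  # software package selected for this VNF
    settings: dict = field(default_factory=dict)


@dataclass
class ServiceChain:
    name: str
    bandwidth_mbps: int
    input_vlan: int
    output_vlan: int
    vnfs: list[VNF]  # traffic traverses these in list order

    def path(self) -> list[str]:
        """Input VLAN, each VNF role in order, then the output VLAN."""
        return [f"VLAN {self.input_vlan}"] + [v.role for v in self.vnfs] + [f"VLAN {self.output_vlan}"]


def place(chain: ServiceChain, csp_free_mbps: dict[str, int]) -> Optional[str]:
    """Toy first-fit: return the first CSP whose spare bandwidth covers the chain."""
    for csp, free in csp_free_mbps.items():
        if free >= chain.bandwidth_mbps:
            return csp
    return None  # no CSP can host the chain


if __name__ == "__main__":
    chain = ServiceChain(
        name="SDWAN_Router_Firewall",  # hypothetical chain name
        bandwidth_mbps=1024,
        input_vlan=10,
        output_vlan=20,
        vnfs=[
            VNF("router", "vEdge Cloud",
                {"wan_ip": "192.168.10.180", "wan_gateway": "192.168.10.1", "service_vpn": 10}),
            VNF("firewall", "FTD"),
        ],
    )
    print(" -> ".join(chain.path()))  # VLAN 10 -> router -> firewall -> VLAN 20
    print(place(chain, {"CSP1": 800, "CSP2": 2000}))  # CSP2
```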

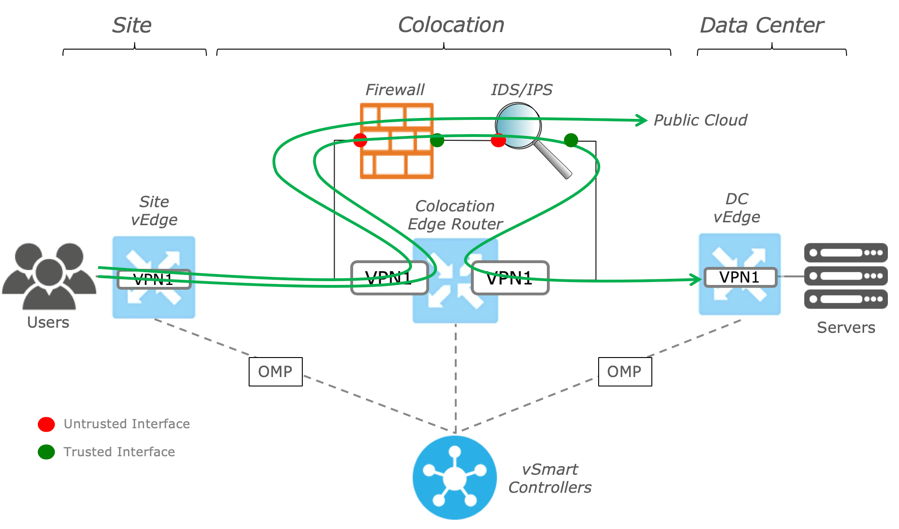

vManage will then begin installing the necessary software and applying basic configuration to your new Service Group VNFs. After a brief period (3-5 minutes), your Service Group is ready to use. At this point, you may want to finish configuring these VNFs outside of vManage (e.g. if this is a Firewall, you may want to set up a more granular security policy). Once finished, it's time to adjust policy to steer traffic through your Service Chain. There are a few ways to push traffic through Service Chains: one is traditional routing (e.g. have the Service Chain advertise a Default Route into the overlay), and another is a Service Insertion policy (explained below). The following picture, though not specific to this lab, illustrates this concept:

1. Navigate to Configuration, Policies.

2. Click the Add Policy button.

3. Identify which applications will qualify for service insertion. Here, we will use the default Microsoft_Apps Application Family, though you can create your own.

4. Click the Next button.

5. Click the Next button again, since we will not be modifying the topology for this VPN.

6. Click the Traffic Data tab (we will be creating a Centralized Data Policy).

7. Click the Create Policy button, followed by Add New.

8. Give your policy a Name and a Description. Here, we will use CoR-CoLo-Policy and Cloud onRamp for CoLo Policy.

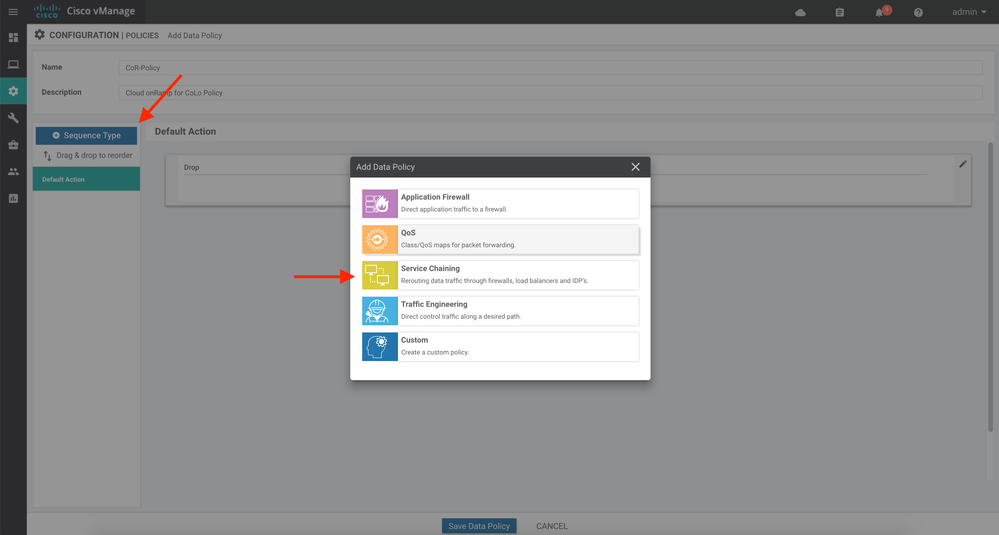

9. Click the Sequence Type button, followed by Service Chaining:

10. Click the Sequence Rule button.

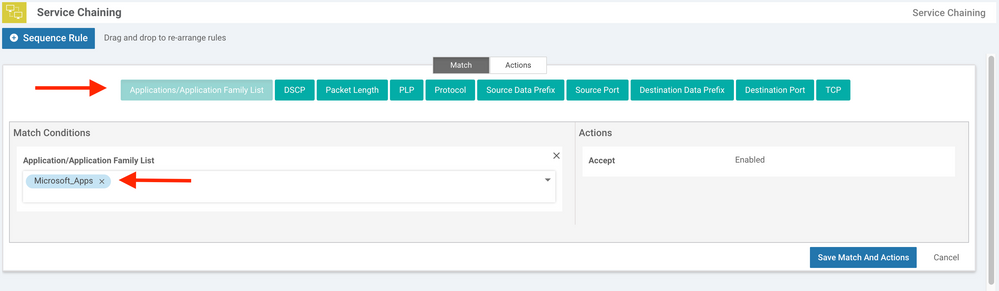

11. In the Match tab, click the Applications/Application Family List button.

12. Select your Application Family list created in the above step. In our case, we will select Microsoft_Apps:

13. Click the Actions tab at the top of the window pane.

14. Click the Service button.

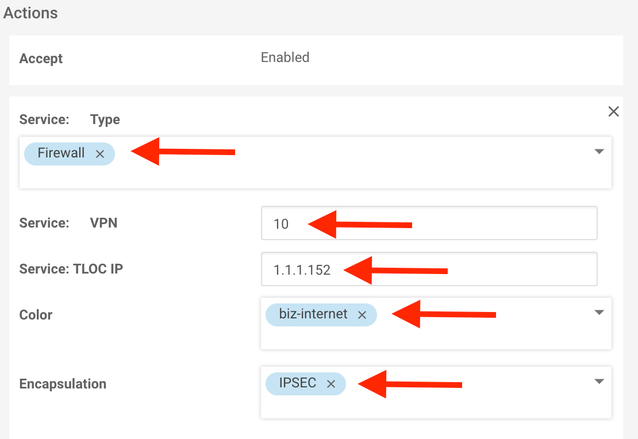

15. Choose the Firewall service in the dropdown, enter 10 in the Service: VPN field, enter 1.1.1.152 in the Service: TLOC IP field, select biz-internet from the Color dropdown and select IPSEC from the Encapsulation dropdown. This entry establishes which SDWAN router Microsoft traffic is forwarded to:

16. Click the Save Match and Actions button.

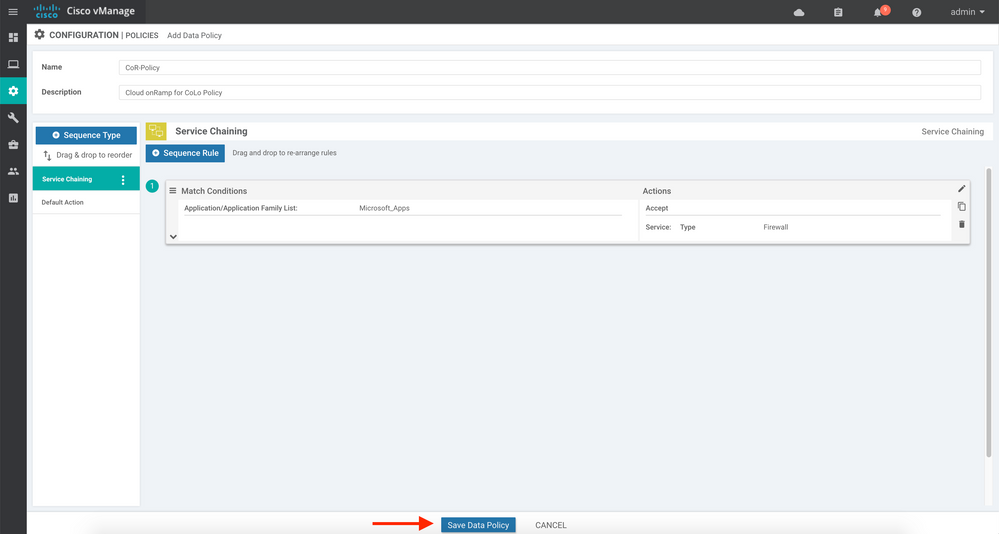

17. Click the Save Data Policy button:

18. Click the Next button.

19. Click the Traffic Data tab on the Policy Application screen.

20. Enter a Name and Description for your new policy.

21. Click the New Site List and VPN List button.

22. Select the From Service radio button.

23. Select the AllBranches list in the Select Site List dropdown (created for you).

24. Select the dataVPN list in the Select VPN List dropdown (created for you).

25. Click the Add button.

26. Click the Save Policy button.

27. Activate your policy by clicking on the three dots (ellipsis) next to your new policy and clicking Activate.

28. Validate traffic flow by generating traffic from a branch location to any Microsoft application on the Internet (instructor only!).
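The centralized data policy above reduces to one decision. If a flow from a listed site and VPN, arriving from the service side, belongs to the Microsoft_Apps family, redirect it to the Firewall service reachable through TLOC 1.1.1.152. The Python sketch below models only that decision and is not how the controller evaluates policy. The class names and the members of AllBranches and dataVPN are hypothetical placeholders (the lab creates these lists for you). Flows are assumed to be already classified into an application family.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceAction:
    """Where matching traffic is redirected (values from the Actions tab)."""
    service: str
    vpn: int
    tloc_ip: str
    color: str
    encap: str


@dataclass(frozen=True)
class Flow:
    """A simplified flow; app_family is assumed to be already classified."""
    site_id: int
    vpn: int
    app_family: str
    direction: str  # "from-service" = traffic arriving from the branch LAN side


@dataclass
class ServiceInsertionPolicy:
    """One match/action sequence applied to a site list and VPN list (hypothetical model)."""
    name: str
    site_list: set[int]
    vpn_list: set[int]
    direction: str
    match_app_family: str
    action: ServiceAction

    def evaluate(self, flow: Flow) -> Optional[ServiceAction]:
        # The policy only applies to the sites, VPNs and direction it was attached to.
        in_scope = (
            flow.site_id in self.site_list
            and flow.vpn in self.vpn_list
            and flow.direction == self.direction
        )
        if in_scope and flow.app_family == self.match_app_family:
            return self.action
        return None  # this sequence does not redirect the flow


if __name__ == "__main__":
    policy = ServiceInsertionPolicy(
        name="CoR-CoLo-Policy",
        site_list={100, 200},  # hypothetical AllBranches members
        vpn_list={10},         # hypothetical dataVPN member
        direction="from-service",
        match_app_family="Microsoft_Apps",
        action=ServiceAction("Firewall", 10, "1.1.1.152", "biz-internet", "ipsec"),
    )
    print(policy.evaluate(Flow(100, 10, "Microsoft_Apps", "from-service")))
    print(policy.evaluate(Flow(100, 10, "Other_Apps", "from-service")))  # None
```

In the sketch, `None` only means that this sequence did not redirect the flow. In a real data policy, traffic that matches no sequence falls through to the policy's default action.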

Step 3: Monitoring

1. As a final step, you can monitor your new service chains and clusters via vManage. Navigate to the Monitor, Network menu.

2. Under normal circumstances, all SDWAN devices that are part of the overlay appear on this screen, including those hosted on the Cloud onRamp for CoLo cluster. Notice the Network Hub Clusters menu at the top of the screen and click on it.

3. This screen provides a brief status overview of all clusters (both CSP and VNF operational status). Click on your cluster.

4. The screen that appears shows the status of individual cluster components. Notice the CPU, RAM and Disk Space allocations. Notice also that switch status appears on this screen. Click on the Services tab at the top:

5. Had your Service Chain successfully attached (with real hardware), each chain and its associated status would show on this screen (see instructor screen):

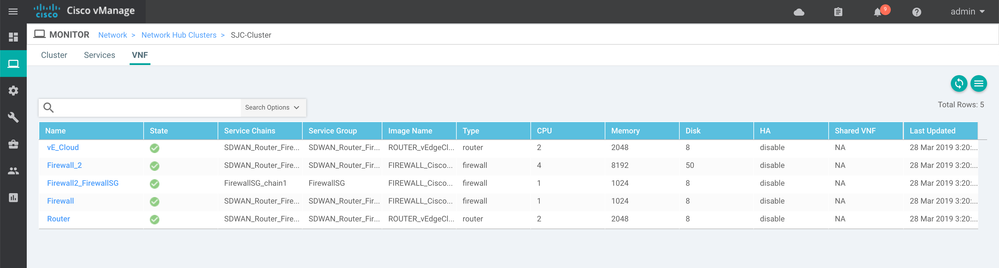

6. Click on the VNF tab at the top.

7. For individual monitoring of VNFs, you can utilize this tab. Clicking on any of the VNFs will result in a monitoring screen that displays elements such as CPU, Disk and Network usage (see instructor screen):


Hi arohyans

Thanks for the lab, but my SD-WAN dCloud environment is on 17.x. How do you get a dCloud environment with the latest vManage software?

Cheers
