CISCO 9300L - MULTICAST ROUTING

11-11-2020 08:08 AM

Hello all, it has been a couple of days since I started investigating multicast routing on the Cisco Catalyst 9300 (Gibraltar 16.12.02). I have been looking around on forums, and it seems my application is a little too specific and not a common scenario; behavior-wise it does not seem to work at all. I would like to present this simple scenario and get it working first, and then I might be able to expand on the topic a little more and see whether there are any solutions for the rest.

SIMPLE SCENARIO Drawing

From left to right, I am generating traffic using IPERF2 that arrives on switch interface Gi1/0/36 (it can be seen incrementing in the multicast counters). Unfortunately, I don't see any multicast traffic in the stats of VLAN 40. The ultimate goal is to funnel this traffic over to the MODULATOR on the right side of the drawing.

Interface VLAN 40 has "ip pim dense-mode" enabled, and interface VLAN 254 has "ip pim dense-mode" as well, in addition to "ip igmp static-group" with the required multicast IP address.

I have tried ip igmp snooping on and off; it did not make a difference. There has got to be a simple explanation for this, and please don't refer me to https://www.cisco.com/c/en/us/td/docs/switches/lan/catalyst9300/software/release/16-6/configuration_guide/ip_mcast_rtng/b_166_ip_mcast_rtng_9300_cg.pdf. I have gone through this booklet and could not find any useful info or restrictions... great book, but it did not help in this particular scenario.

In addition, this scenario gets a little more complicated in the real deployment/application: I am using the same Cisco 9300 to send traffic to a PEP server, which then comes back to the 9300, and I am using PBR to direct the traffic to the modulator. I am not even sure how that will be handled just yet, but before I get there I need to solve this physical-to-VLAN-interface mystery. If anyone has any ideas, please share.

NOTE: I am also using HSRP on VLAN 40; for that I have included "IP PIM REDUNDANCY ...", which I am not entirely sure helps in any way, at least at this stage.

Any ideas will be very much appreciated

11-11-2020 08:48 AM - edited 11-11-2020 08:48 AM

Hello @Oleksandr Y. ,

Two questions first:

a) What multicast address are you using with the IPERF2 traffic simulator?

Be aware that multicast addresses of the form 224.0.0.X are link-local and not routable by definition. You need to use something like 224.10.20.30 as your multicast group.

b) TTL: the generated traffic is still IP traffic and needs a TTL > 1 to reach the intended destination, because the switch will decrement the TTL.
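Both checks can be expressed as a small Python sketch using only the standard `ipaddress` module. The function names are hypothetical; the rules assumed are that 224.0.0.0/24 is the link-local control block that routers never forward, and that every routed hop (here VLAN 40 to VLAN 254 on the 9300) decrements the TTL by one. In iperf2 the multicast TTL is set with `-T`/`--ttl`, which in the versions I have seen defaults to 1.

```python
import ipaddress

# Link-local control block (224.0.0.0/24): routers never forward these groups.
LINK_LOCAL_MCAST = ipaddress.ip_network("224.0.0.0/24")


def classify_group(group: str) -> str:
    """Classify an IPv4 address for use as a multicast test group."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        return "not multicast"
    if addr in LINK_LOCAL_MCAST:
        return "link-local, not routable"
    return "routable"


def survives_routed_hops(initial_ttl: int, routed_hops: int) -> bool:
    """Each router decrements TTL by 1 and drops the packet instead of
    forwarding it once TTL would reach 0, so the sender's TTL must be
    strictly greater than the number of routed hops."""
    return initial_ttl > routed_hops


if __name__ == "__main__":
    for g in ("224.0.0.5", "239.192.12.1", "10.10.40.50"):
        print(g, "->", classify_group(g))
    # One routed hop (VLAN 40 -> VLAN 254 on the switch):
    print("TTL 1:", survives_routed_hops(1, 1))  # False: dropped at the switch
    print("TTL 2:", survives_routed_hops(2, 1))  # True
```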

Hope to help

Giuseppe

11-11-2020 09:02 AM - edited 11-11-2020 09:03 AM

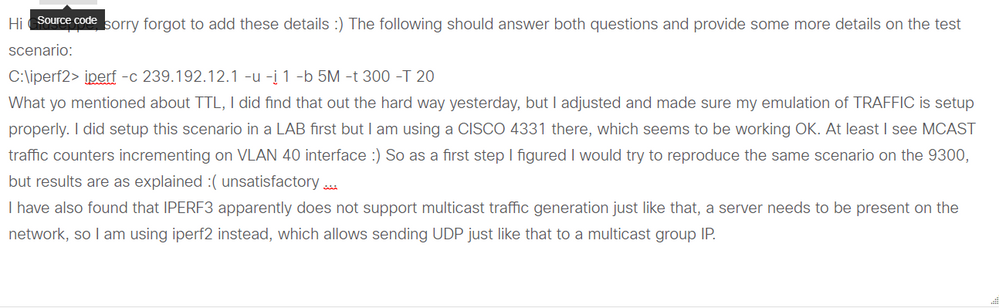

for some reason was not allowing me to post, and after multiple "reply" attempts was flagged as flood post :s here is a screenshot :

11-11-2020 09:09 AM - edited 11-11-2020 09:10 AM

Hello @Oleksandr Y. ,

OK, the group and TTL are fine.

On the Catalyst 9300, post the output of:

show ip mroute 239.192.12.1

You should see two entries:

(*, 239.192.12.1), the shared-tree entry (as used in sparse mode)

and one for

(10.10.40.50, 239.192.12.1), where the source is your IPERF2 simulator.

The OLIST (outgoing interface list) should contain VLAN 254 (because of the static IGMP join you configured).
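To check those expectations mechanically (one (*,G) entry, one (S,G) entry, and Vlan254 in the OIL), the output can be parsed. This is a rough Python sketch: the regular expressions are written against the `show ip mroute` output pasted later in this thread and are an assumption, not a documented format, and `parse_mroute` and `SAMPLE` are hypothetical names. `SAMPLE` is the output the poster captured once the source was transmitting.

```python
import re

# Patterns assume the "show ip mroute" layout posted in this thread.
ENTRY_RE = re.compile(r"^\((?P<src>[^,]+), (?P<grp>[^)]+)\),.*flags: (?P<flags>\S*)")
IIF_RE = re.compile(r"^Incoming interface: (?P<iif>\S+), RPF nbr (?P<rpf>\S+)")
OIF_RE = re.compile(r"^(?P<oif>\S+), (?P<state>Forward|Prune)/")

SAMPLE = """
(*, 239.192.12.1), 00:37:43/stopped, RP 0.0.0.0, flags: DC
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    Vlan254, Forward/Dense, 00:33:30/stopped
    Vlan40, Forward/Dense, 00:37:43/stopped

(10.10.40.50, 239.192.12.1), 00:00:02/00:02:57, flags: T
  Incoming interface: Vlan40, RPF nbr 0.0.0.0
  Outgoing interface list:
    Vlan254, Forward/Dense, 00:00:02/stopped
"""


def parse_mroute(text: str) -> list:
    """Return one dict per (S,G) or (*,G) entry with its IIF, RPF neighbor and OIL."""
    entries = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        m = ENTRY_RE.match(line)
        if m:
            current = {"source": m["src"], "group": m["grp"], "flags": m["flags"],
                       "iif": None, "rpf_nbr": None, "oil": []}
            entries.append(current)
            continue
        if current is None:
            continue  # skip the flag legend printed before the first entry
        m = IIF_RE.match(line)
        if m:
            current["iif"], current["rpf_nbr"] = m["iif"], m["rpf"]
            continue
        m = OIF_RE.match(line)
        if m:
            current["oil"].append((m["oif"], m["state"]))
    return entries


if __name__ == "__main__":
    for e in parse_mroute(SAMPLE):
        print(f"({e['source']}, {e['group']}) flags={e['flags']} "
              f"iif={e['iif']} oil={e['oil']}")
```

Run against that output, the (S,G) entry shows Vlan40 as the incoming interface and Vlan254 forwarding, which is what Giuseppe describes.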

Hope to help

Giuseppe

11-11-2020 09:19 AM

Hi Giuseppe, here are some more details on the config of the two interfaces and some outputs.

I have also added the MFIB count output at the end.

CORE-TRF-DSTRO-SW1-P#sh run int vlan 40

Building configuration...

Current configuration : 336 bytes

!

interface Vlan40

ip address 10.10.40.2 255.255.255.0

ip pim redundancy HSRP40 hsrp dr-priority 110

ip pim dense-mode

standby 40 ip 10.10.40.1

standby 40 timers 1 3

standby 40 priority 110

standby 40 preempt

standby 40 name HSRP40

load-interval 30

end

CORE-TRF-DSTRO-SW1-P#sh run int vlan 254

Building configuration...

Current configuration : 315 bytes

!

interface Vlan254

ip address 192.168.254.2 255.255.255.0

ip pim dense-mode

standby 254 ip 192.168.254.1

standby 254 timers 1 3

standby 254 priority 110

standby 254 preempt

ip igmp static-group 239.192.12.1

ip policy route-map From-RLSS

end

CORE-TRF-DSTRO-SW1-P#sh ip mroute 239.192.12.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group, c - PFP-SA cache created entry,

* - determined by Assert

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.192.12.1), 00:32:20/stopped, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan254, Forward/Dense, 00:28:07/stopped

Vlan40, Forward/Dense, 00:32:20/stopped

CORE-TRF-DSTRO-SW1-P#sh ip mroute 239.192.12.1 count

Use "show ip mfib count" to get better response time for a large number of mroutes.

IP Multicast Statistics

7 routes using 6840 bytes of memory

6 groups, 0.16 average sources per group

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 239.192.12.1, Source count: 0, Packets forwarded: 0, Packets received: 0

CORE-TRF-DSTRO-SW1-P#sh ip mfib 239.192.12.1 count

Forwarding Counts: Pkt Count/Pkts per second/Avg Pkt Size/Kilobits per second

Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Default

13 routes, 10 (*,G)s, 2 (*,G/m)s

Group: 239.192.12.1

RP-tree,

SW Forwarding: 0/0/0/0, Other: 0/0/0

HW Forwarding: 0/0/0/0, Other: 0/0/0

Groups: 1, 0.00 average sources per group

11-11-2020 09:23 AM

Sorry, the earlier "ip mroute ..." output did not show what was expected because the source was no longer transmitting. Here it is again:

CORE-TRF-DSTRO-SW1-P#sh ip mroute 239.192.12.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group, c - PFP-SA cache created entry,

* - determined by Assert

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.192.12.1), 00:37:43/stopped, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan254, Forward/Dense, 00:33:30/stopped

Vlan40, Forward/Dense, 00:37:43/stopped

(10.10.40.50, 239.192.12.1), 00:00:02/00:02:57, flags: T

Incoming interface: Vlan40, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan254, Forward/Dense, 00:00:02/stopped

11-12-2020 07:43 AM

Hello @Oleksandr Y. ,

Configure the SVIs for VLAN 40 and VLAN 254 for PIM sparse-dense mode (ip pim sparse-dense-mode).

Define a loopback to use as the RP:

interface loopback 10

desc RP

ip address 10.10.10.1 255.255.255.255

ip pim sparse-mode

Then point to it as the RP in global configuration:

ip pim rp-address 10.10.10.1

Let's see if it works in sparse mode. The T flag in your output means the SPT bit is set.

Hope to help

Giuseppe

11-13-2020 05:47 AM

Hi Giuseppe, sorry, it was a hectic day at work yesterday.

I have not tried that just yet. The example above is about 40% of the true setup; in real life it is a bit more complicated, but I wanted to simplify it, because making it work at a basic level will then allow me to integrate the more complex multicast traffic forwarding.

I will try to get back to you if I find some other tricks once I have a chance to play with the 9300 again... right now I am just doing a PoC of MCAST in the lab.

11-11-2020 09:43 AM

Maybe this will also help a bit more: the stats on the physical interface and its config.

CORE-TRF-DSTRO-SW1-P#sh run int gi1/0/36

Building configuration...

Current configuration : 210 bytes

!

interface GigabitEthernet1/0/36

switchport trunk allowed vlan 40,100,103,203,303,4093

switchport mode trunk

load-interval 30

end

CORE-TRF-DSTRO-SW1-P#sh int gi1/0/36

GigabitEthernet1/0/36 is up, line protocol is up (connected)

Hardware is Gigabit Ethernet, address is 4ce1.7675.bba4 (bia 4ce1.7675.bba4)

MTU 1500 bytes, BW 100000 Kbit/sec, DLY 100 usec,

reliability 255/255, txload 1/255, rxload 10/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 100Mb/s, media type is 10/100/1000BaseTX

input flow-control is on, output flow-control is unsupported

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:01, output 00:00:01, output hang never

Last clearing of "show interface" counters 00:00:25

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 0

Queueing strategy: fifo

Output queue: 0/40 (size/max)

30 second input rate 4022000 bits/sec, 433 packets/sec

30 second output rate 100000 bits/sec, 79 packets/sec

13665 packets input, 16820899 bytes, 0 no buffer

Received 11508 broadcasts (11432 multicasts)

0 runts, 0 giants, 0 throttles

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored

0 watchdog, 11432 multicast, 0 pause input

0 input packets with dribble condition detected

2035 packets output, 315289 bytes, 0 underruns

0 output errors, 0 collisions, 0 interface resets

0 unknown protocol drops

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier, 0 pause output

0 output buffer failures, 0 output buffers swapped out

CORE-TRF-DSTRO-SW1-P#sh int gi1/0/36

GigabitEthernet1/0/36 is up, line protocol is up (connected)

Hardware is Gigabit Ethernet, address is 4ce1.7675.bba4 (bia 4ce1.7675.bba4)

MTU 1500 bytes, BW 100000 Kbit/sec, DLY 100 usec,

reliability 255/255, txload 1/255, rxload 11/255

Encapsulation ARPA, loopback not set

Keepalive set (10 sec)

Full-duplex, 100Mb/s, media type is 10/100/1000BaseTX

input flow-control is on, output flow-control is unsupported

ARP type: ARPA, ARP Timeout 04:00:00

Last input 00:00:00, output 00:00:03, output hang never

Last clearing of "show interface" counters 00:00:37

Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 0

Queueing strategy: fifo

Output queue: 0/40 (size/max)

30 second input rate 4475000 bits/sec, 470 packets/sec

30 second output rate 100000 bits/sec, 79 packets/sec

19107 packets input, 23547198 bytes, 0 no buffer

Received 16117 broadcasts (16009 multicasts)

0 runts, 0 giants, 0 throttles

0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored

0 watchdog, 16009 multicast, 0 pause input

0 input packets with dribble condition detected

2824 packets output, 440471 bytes, 0 underruns

0 output errors, 0 collisions, 0 interface resets

0 unknown protocol drops

0 babbles, 0 late collision, 0 deferred

0 lost carrier, 0 no carrier, 0 pause output

0 output buffer failures, 0 output buffers swapped out
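The two `show interface` snapshots above were taken 12 seconds apart (25 s and 37 s after the counters were cleared), so the counter deltas give a rough rate for what actually arrives on Gi1/0/36. A minimal Python sketch of that arithmetic; `Snapshot`, `delta_rates` and `avg_packet_size` are hypothetical names, and the values are copied from the two outputs. Note that the multicast counter covers every multicast frame on the trunk (all allowed VLANs, plus control traffic such as HSRP and PIM hellos), so it confirms that multicast is arriving, not which group it belongs to.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Counters from one "show interface" run."""
    secs_since_clear: int
    packets_input: int
    bytes_input: int
    multicast_input: int


def delta_rates(a: Snapshot, b: Snapshot) -> dict:
    """Average rates between two snapshots taken after the same counter clear."""
    dt = b.secs_since_clear - a.secs_since_clear
    if dt <= 0:
        raise ValueError("second snapshot must be later than the first")
    pkts = b.packets_input - a.packets_input
    return {
        "pps_total": pkts / dt,
        "pps_multicast": (b.multicast_input - a.multicast_input) / dt,
        "avg_packet_bytes": (b.bytes_input - a.bytes_input) / pkts,
    }


def avg_packet_size(bits_per_sec: int, packets_per_sec: int) -> float:
    """Average packet size in bytes implied by a 'bits/sec, packets/sec' rate line."""
    return bits_per_sec / 8 / packets_per_sec


# Values from the two Gi1/0/36 outputs in this post.
first = Snapshot(25, 13665, 16820899, 11432)
second = Snapshot(37, 19107, 23547198, 16009)

if __name__ == "__main__":
    print(delta_rates(first, second))  # pps_multicast is about 381
    print(round(avg_packet_size(4022000, 433), 1))
```

The total works out to about 453 packets/s at about 1236 bytes each, roughly 4.5 Mbit/s, which lines up with the 30-second input rate in the second snapshot.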

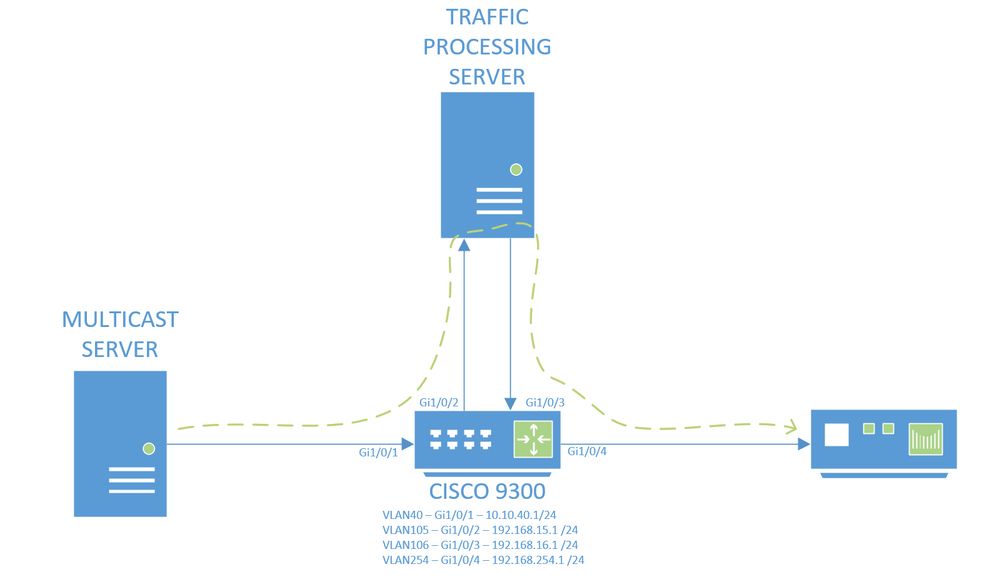

11-13-2020 12:45 PM

Well, after this adventurous experience I am getting closer to the actual production environment, and I figured I would post this sooner rather than later; maybe someone has an idea about it. Attached is the drawing representing the multicast path; once again, there is no IGMP anywhere. The idea here is to process multicast traffic through the "Traffic Processing Server" (don't mind the reason), but this traffic will come back to the same Cisco 9300, and I have to somehow force-route this INCOMING traffic out of port Gi1/0/4 further down the line. For unicast I am using a route-map that matches ANY to ANY and sends the traffic to that same device out of interface Gi1/0/4. I am wondering if there is a way to do the same thing with multicast, because ANY to ANY does not seem to have much effect on this traffic.

An IGMP and PIM configuration will most probably just pick up the incoming traffic on Gi1/0/1 and forward it directly, but it is imperative for my application to pass MCAST through the TOP server and only then out of Gi1/0/4.
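One mechanism worth keeping in mind for the returning traffic: PIM forwards multicast based on a reverse-path forwarding (RPF) check against the unicast routing table, not on the route-map used for unicast PBR (as far as I know, IOS policy routing applies to unicast only, which would fit the observation that the ANY-to-ANY route-map has no effect). The Python sketch below models the RPF check only. The routing table, the `Vlan999` return interface and the function names are hypothetical, and it assumes the server sends the stream back with its original source address (10.10.40.50, whose (S,G) entry earlier in the thread shows Vlan40 as the incoming interface).

```python
import ipaddress


def rpf_interface(routes, source):
    """Longest-prefix match over (prefix, interface) pairs: the interface the
    unicast table would use to reach `source`, i.e. the expected RPF interface."""
    src = ipaddress.IPv4Address(source)
    best = None
    for prefix, iface in routes:
        net = ipaddress.IPv4Network(prefix)
        if src in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    return best[1] if best else None


def passes_rpf(routes, source, arrival_iface):
    """A multicast packet is accepted only if it arrives on the RPF interface."""
    return rpf_interface(routes, source) == arrival_iface


# Hypothetical table: the source subnet is on Vlan40, everything else via Vlan254.
ROUTES = [("10.10.40.0/24", "Vlan40"), ("0.0.0.0/0", "Vlan254")]

if __name__ == "__main__":
    # Original stream from the iperf2 host arrives on Vlan40: accepted.
    print(passes_rpf(ROUTES, "10.10.40.50", "Vlan40"))
    # Same stream returning from the processing server on a different
    # (hypothetical) interface with the source unchanged: RPF failure.
    print(passes_rpf(ROUTES, "10.10.40.50", "Vlan999"))
```

Under these assumptions, the copy coming back from the server would be dropped by the RPF check rather than forwarded toward Gi1/0/4, so the return path has to be accounted for in the multicast design itself.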
