PIM Dense Mode and IGMP Multicast

07-17-2019 01:09 PM

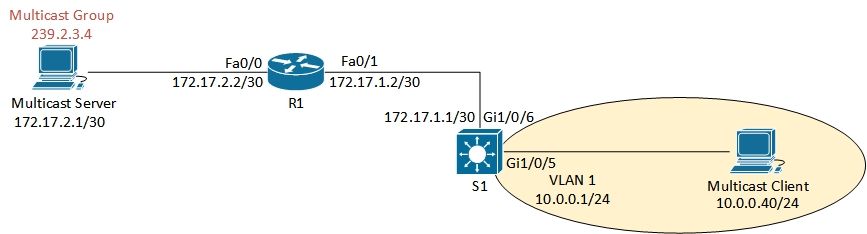

I'm trying to get this multicast scenario working for a troubleshooting exercise, but for some reason it is not behaving as expected; maybe you can help me out. I have PIM dense mode and IGMP enabled on both R1 and S1. I'm using iperf 2.x to send multicast traffic. The Multicast Client (MC) is the iperf server, subscribing to Multicast Group (MG) 239.2.3.4:

[root@MC ~]# iperf -s -u -B 239.2.3.4 -i 1

------------------------------------------------------------

Server listening on UDP port 5001

Binding to local address 239.2.3.4

Joining multicast group 239.2.3.4

Receiving 1470 byte datagrams

UDP buffer size: 208 KByte (default)

------------------------------------------------------------
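For context on the "Joining multicast group" line in the output above, here is a minimal Python sketch of what a receiver does at the socket level. It is an illustration only, not how iperf itself is implemented. The group 239.2.3.4 and port 5001 come from the output above; the function names `build_mreq` and `open_receiver` are made up for this example. The `IP_ADD_MEMBERSHIP` socket option is what prompts the host's IP stack to send the IGMP Membership Report that S1 then sees.

```python
import socket
import struct

GROUP = "239.2.3.4"  # group from the iperf output above
PORT = 5001          # UDP port from the iperf output above


def build_mreq(group: str, local_ip: str = "0.0.0.0") -> bytes:
    """Pack an ip_mreq structure: group address, then local interface address."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(local_ip))


def open_receiver(group: str = GROUP, port: int = PORT) -> socket.socket:
    """Open a UDP socket and join `group` (hypothetical helper for illustration).

    The IP_ADD_MEMBERSHIP call is the step that makes the kernel emit an
    IGMP Membership Report on the chosen interface.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_mreq(group))
    return sock
```

Calling `open_receiver()` on MC and then `recvfrom()` on the returned socket would behave roughly like the iperf server shown above: the join is signalled once, and datagrams only arrive if the network actually forwards the stream to that segment.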

When I do that, S1 receives an IGMP Membership Report and creates an IGMP group entry, plus a corresponding entry in its multicast routing (mroute) table:

S1#sh ip igmp groups

IGMP Connected Group Membership

Group Address Interface Uptime Expires Last Reporter Group Accounted

239.1.1.2 Vlan1 05:08:38 00:04:52 10.0.0.1

239.2.3.4 Vlan1 00:02:03 00:04:51 10.0.0.40

224.0.1.40 Vlan1 23:02:51 00:04:52 10.0.0.1

S1#

S1#sh ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.2), 05:09:14/00:04:16, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan1, Forward/Dense, 05:09:14/stopped

GigabitEthernet1/0/6, Forward/Dense, 05:09:14/stopped

(*, 239.2.3.4), 00:02:39/00:04:15, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Vlan1, Forward/Dense, 00:02:39/stopped

GigabitEthernet1/0/6, Forward/Dense, 00:02:39/stopped

(*, 224.0.1.40), 1d00h/00:04:16, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

GigabitEthernet1/0/6, Forward/Dense, 05:10:30/stopped

Vlan1, Forward/Dense, 23:03:27/stopped

S1#

But that entry does not get propagated to R1:

R1#sh ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.255.255.250), 05:11:40/00:04:53, RP 0.0.0.0, flags: DC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/1, Forward/Dense, 05:11:39/stopped

Ethernet0/0, Forward/Dense, 05:11:40/stopped

(*, 224.0.1.40), 05:11:48/00:04:59, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/1, Forward/Dense, 05:11:39/stopped

Ethernet0/0, Forward/Dense, 05:11:48/stopped

R1#

R1 and S1 have formed a neighbor relationship:

R1#sh ip pim nei

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable,

L - DR Load-balancing Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

172.17.1.1 Ethernet0/1 05:13:44/00:01:41 v2 1 / S P G

R1#

S1# sh ip pim nei

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable,

L - DR Load-balancing Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

172.17.1.2 GigabitEthernet1/0/6 05:14:10/00:01:41 v2 1 / DR S P G

S1#

R1 and S1 configs are attached.

Thanks in advance!

Labels: Routing Protocols

07-18-2019 12:35 AM

Hi

I am not an expert in multicast.

IGMP is the protocol multicast receivers use to signal that they want to listen to a particular multicast group. A host sends an IGMP Membership Report when it wants to join a group.

Why IGMP with dense mode? Dense mode is going to flood traffic anyway.

I think you only need the IGMP config on S1 and not on the R1 interfaces.

There is a bidir PIM command on S1.

I would remove the bidir PIM command from S1, then either change to sparse mode or remove the IGMP commands, and test.

I don't think there is any need for IGMP with dense mode.

07-18-2019 05:59 AM

Thanks!

07-18-2019 06:34 AM

I agree with OMZ in that I don't think the IGMP configuration is needed on the routed interfaces on both the router and switch.

07-18-2019 08:57 AM

PIM dense mode initially floods to all multicast routers. IGMP controls whether a multicast router continues to forward to edge hosts; i.e., if there are no multicast receivers, a multicast router running PIM dense mode should prune. Also, if the switch supports IGMP snooping, only client ports that want the multicast will get it.

So, unlike PIM sparse, which will only pull multicast when requested, PIM dense cycles through flood and prune. That's not as "nice" as pulling a multicast stream only when needed, but without IGMP there would be constant flooding with PIM dense.
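The flood-and-prune idea described above can be sketched as a toy decision rule. This is a deliberately simplified model, not the PIM dense mode state machine: it ignores timers, Asserts, Grafts, and State Refresh, and the class and function names are hypothetical. It only captures the rule of thumb that a router keeps forwarding on an interface if a host there has joined via IGMP or an un-pruned PIM neighbor is present, and prunes upstream once no such interface remains.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interface:
    """One downstream interface on a router, for a single (source, group) flow."""
    name: str
    has_pim_neighbor: bool = False  # another PIM router is reachable here
    neighbor_pruned: bool = False   # that neighbor sent a Prune for this flow
    has_igmp_member: bool = False   # a directly connected host joined via IGMP


def forwards_on(iface: Interface) -> bool:
    """Forward if a local member exists or an un-pruned PIM neighbor is present."""
    return iface.has_igmp_member or (
        iface.has_pim_neighbor and not iface.neighbor_pruned
    )


def should_prune_upstream(downstream: List[Interface]) -> bool:
    """With nowhere left to forward, the router sends a Prune toward the source."""
    return not any(forwards_on(i) for i in downstream)


# S1 as described in the thread: Vlan1 faces the hosts (assumed: no PIM router behind it).
vlan1_idle = Interface("Vlan1")
vlan1_joined = Interface("Vlan1", has_igmp_member=True)

print(should_prune_upstream([vlan1_idle]))    # no receiver joined  -> True
print(should_prune_upstream([vlan1_joined]))  # MC joined 239.2.3.4 -> False
```

Under this model, IGMP is what keeps the host-facing interface in the forwarding set, which is why dense mode without IGMP would have no way to stop delivering to segments that have no receivers.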

07-18-2019 09:17 AM

Thanks for your response. That is my understanding as well. Once the Multicast Server (MS) starts streaming, PIM Dense Mode on R1 should flood that Multicast stream down to S1, whether it has listeners or not, or until S1 prunes it.

Unfortunately, this is not the behavior I'm observing. When I start the multicast stream with iperf, I don't see that stream being flooded by S1. S1 has IGMP snooping disabled, by the way.

07-24-2019 08:58 AM

If S1 (IGMP snooping disabled) does not have active listeners and R1 is sending multicast traffic, how long is it before S1 sends a Prune message to R1? Also, in this scenario, would multicast packets be flooded to all ports on S1 until the Prune message is received by R1, or would S1 drop all multicast traffic because it has no members for that group?

Thanks in advance

07-24-2019 10:03 AM

Also, BTW, a prune message is sent by a downstream multicast router to an upstream multicast router, telling the latter to stop forwarding a multicast stream to it. Switches with IGMP snooping don't, as I recall, stop their local multicast router from forwarding multicast traffic to them when there are no active multicast clients. I believe they rely on the multicast router to determine (using IGMP query messages) that there are no active clients and to stop forwarding the stream. Of course, the switch wouldn't forward multicast traffic except to ports that have replied that they want it. (Interestingly, I recall that multicast clients also listen for other clients requesting the same stream, so they then don't need to reply to the multicast router. An IGMP snooping switch doesn't forward those membership reports to the multicast router, except the first. This preserves the hub-like behavior the multicast router expects, while letting the switch "know" about all the clients that want the stream.)

07-24-2019 10:09 AM

Hi Joseph,

Thanks for your answer. In this case both R1 and S1 have PIM (dense mode) and IGMPv2 enabled; only IGMP snooping has been disabled. R1 and S1 have formed a PIM neighbor relationship, so S1 can send prune messages to R1. I'm just wondering whether flooding will occur on S1 before it gets pruned.

Thanks again

07-24-2019 11:53 AM

Rereading the posts, and after doing some research on PIM dense mode, it appears to me that, with IGMP snooping disabled, when you start sending multicast to R1, R1 should flood it, and in turn S1 should flood it too. Since S1 has only a downstream edge, if it "knows" that edge has no hosts that want the multicast, it might immediately send a prune to R1. That aside, looking at your OP, we don't see the multicast server pushing the multicast stream to R1 (?).
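Since the thread never shows the sender side, one thing worth ruling out (offered as a possibility, not a diagnosis) is the sender's TTL. Each router that forwards a packet decrements its TTL, and IP stacks commonly default multicast TTL to 1, which keeps traffic on the local subnet; iperf 2 exposes this on the client as the `-T`/`--ttl` option. The sketch below assumes the multicast server sits behind R1, so the hop counts are assumptions, and the helper names are hypothetical.

```python
import socket

GROUP = "239.2.3.4"  # group used in the thread
PORT = 5001          # UDP port shown in the receiver output


def survives_hops(ttl: int, router_hops: int) -> bool:
    """Each forwarding router decrements TTL and drops the packet at 0."""
    return ttl > router_hops


def make_sender(ttl: int) -> socket.socket:
    """Create a UDP socket whose multicast packets leave with the given TTL."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return sock


def send_probe(ttl: int = 4, payload: bytes = b"probe") -> None:
    """Send one datagram to the group (hypothetical helper, not an iperf replacement)."""
    with make_sender(ttl) as sock:
        sock.sendto(payload, (GROUP, PORT))


# Assumed path: sender -> R1 -> S1 -> receiver, i.e. two routed hops.
print(survives_hops(1, 2))  # default-style TTL of 1
print(survives_hops(3, 2))  # TTL raised above the hop count
```

If the sender's packets never create an (S, G) entry on R1, checking the TTL on the wire at the sender is a cheap first step before looking further into PIM or IGMP.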

07-24-2019 12:19 PM

Hello,

You don't need PIM enabled on the switch (S1) and the router doesn't need IGMP. Enabling PIM on the Router and IGMP snooping on the Switch should work.

07-24-2019 12:53 PM

S1 is in L3 mode, so I do believe I need PIM enabled. Also, it seems IGMP needs to be enabled for PIM to work on Cisco switches.

07-24-2019 02:02 PM

OK. I didn't know that you're doing L3 between your switch and router. Once PIM is enabled, IGMP is also enabled. Just use IGMPv2 and not IGMPv3. Typically, enabling PIM would have enabled IGMPv2 by default, but you manually set it to IGMPv3.

07-18-2019 01:05 AM

Hello amartinsbrz,

You are trying to use IGMP version 3 for Source-Specific Multicast with PIM dense mode.

I don't think the two are compatible.

Either you move to PIM sparse mode and use an SSM address range that includes the group you are using for tests, or you change IGMP version to IGMP version 2.
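To make the SSM-range remark concrete: 232.0.0.0/8 is the IANA-assigned source-specific multicast block, while the test group 239.2.3.4 falls in the administratively scoped 239.0.0.0/8 block, so it would only be treated as SSM if the routers were explicitly configured with a wider range. A small check using Python's standard `ipaddress` module (the helper name `classify` is made up for this example):

```python
import ipaddress

SSM_DEFAULT = ipaddress.ip_network("232.0.0.0/8")   # IANA source-specific multicast block
ADMIN_SCOPED = ipaddress.ip_network("239.0.0.0/8")  # administratively scoped block


def classify(group: str) -> str:
    """Return a coarse label for an IPv4 group address."""
    addr = ipaddress.ip_address(group)
    if not addr.is_multicast:
        return "not multicast"
    if addr in SSM_DEFAULT:
        return "default SSM range"
    if addr in ADMIN_SCOPED:
        return "administratively scoped"
    return "other multicast"


print(classify("239.2.3.4"))  # administratively scoped
```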

Hope this helps

Giuseppe

07-18-2019 05:57 AM

Thanks
