UCS-X: network configuration

06-07-2023 01:34 AM

Hi! We have:

- servers UCSX-210C-M6

- chassis UCSX-9508

- fabric UCS-FI-6454

We plan to deploy a cluster based on vSphere. What are the best practices for network configuration with this type of equipment? Below are two example variants.

Variant 1

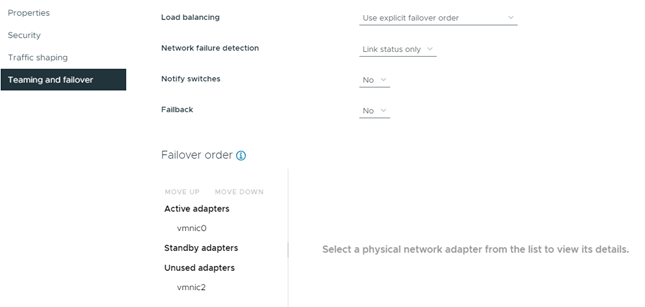

For example, for Management, create one vNIC adapter and turn on Failover. Perform the following setting in vCenter:

Variant 2

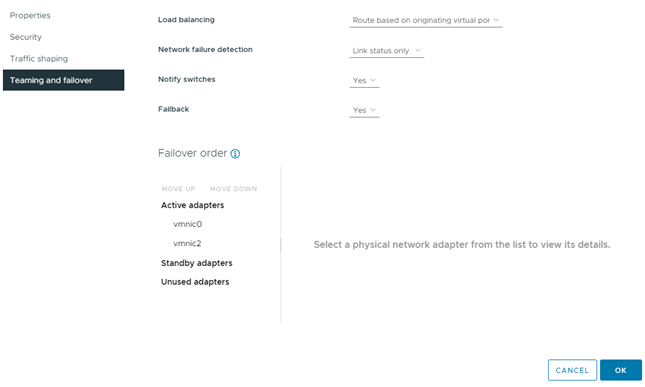

For example, for Management, create two vNIC adapters and turn off Failover. Perform the following setting in vCenter:

Is it possible to implement load balancing through the fabric so that both adapters are active?

Do I need to create a separate virtual switch for each service, e.g. vMotion, Management, Provision, VMs?

Accepted Solutions

06-11-2023 07:43 AM - edited 06-11-2023 07:49 AM

The fabric interconnects do not share a data plane, so a host's pair of links, one pinned to each FI, cannot do LACP-type teaming.

The way HyperFlex installations set up networking might be a good place to start.

- For L2 traffic that you know stays between hosts connected to the same FIs, create one vNIC pinned to one FI side and make it the 'active' vmnic in ESXi teaming. Pin the second vNIC to the other FI side and make it the 'standby' vmnic in ESXi teaming. This design minimizes the number of L2 hops the traffic has to make and keeps latency low, while still providing redundancy that kicks in if the 'active' links or paths go down.

- If you have multiple types of L2-adjacent source/destination traffic, you can use the same design and make some active on A and some active on B to even out the traffic load between FI-A and FI-B.

- For traffic that has to go northbound through an L3 gateway, configure multiple active links (side A and side B) using the default VMware teaming option (route based on originating virtual port), which spreads the guest VM NICs across the active vmnics available to that guest VM's port group. This design also has built-in redundancy, as traffic is switched over immediately in the event a link goes down.

Kirk...
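
To make the active/standby and active/active layouts above easier to compare, here is a rough Python sketch of such a teaming plan. It is only a model for reasoning, not a vSphere or UCS API. The names `vmnic0` and `vmnic1`, their mapping to FI-A and FI-B, and the choice of which service is active on which side are illustrative assumptions. The modulo rule in `uplink_for` is a simplified stand-in for "route based on originating virtual port", not the actual ESXi algorithm.

```python
from dataclasses import dataclass, field

# Assumption: vmnic0 is the vNIC pinned to FI-A, vmnic1 the vNIC pinned to FI-B.
FABRIC_OF = {"vmnic0": "A", "vmnic1": "B"}


@dataclass
class Teaming:
    """Active/standby uplink order for one port group (hypothetical model)."""
    active: list
    standby: list = field(default_factory=list)

    def usable(self, links_up):
        """Return the uplinks that would carry traffic for a given link state."""
        active = [n for n in self.active if n in links_up]
        if active:
            return active
        # Standby uplinks are used only when no active uplink is left.
        return [n for n in self.standby if n in links_up]

    def uplink_for(self, port_id, links_up):
        """Map one virtual port to one usable uplink.

        The modulo is an assumption used for illustration only.
        """
        usable = self.usable(links_up)
        return usable[port_id % len(usable)] if usable else None


# Illustrative plan: L2-local services alternate their active side,
# northbound VM traffic uses both sides as active.
PLAN = {
    "Management": Teaming(active=["vmnic0"], standby=["vmnic1"]),
    "vMotion": Teaming(active=["vmnic1"], standby=["vmnic0"]),
    "VMs": Teaming(active=["vmnic0", "vmnic1"]),
}


def fabrics_covered(teaming):
    """Return the set of FI sides reachable through a port group's uplinks."""
    return {FABRIC_OF[n] for n in teaming.active + teaming.standby}


if __name__ == "__main__":
    all_up = {"vmnic0", "vmnic1"}
    fi_a_down = {"vmnic1"}
    for name, teaming in PLAN.items():
        print(name, "normal:", teaming.usable(all_up),
              "FI-A path down:", teaming.usable(fi_a_down))
```

In this model, Management and vMotion each stay on one fabric until its path fails, while the VMs port group spreads virtual ports over both sides. `fabrics_covered` can be used to check that every port group still has an uplink on each FI.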
