Why are all vPC member ports down?

01-28-2016 01:01 AM

Hi guys,

I would really appreciate your help.

<Processes>

1. Disconnected the N7K's vPC member ports.

2. Disconnected the keepalive link, and then the peer-link.

3. Upgraded the OS on N7K-2.

4. After the upgrade, connected the keepalive link first and then the peer-link ---> the problem occurs!

5. .......omit...

<Problem>

When I reconnected the peer-link, all vPC member ports went down. After about 2 minutes they came back up.

I don't understand why all the vPC member ports went down.

Please give me some advice.

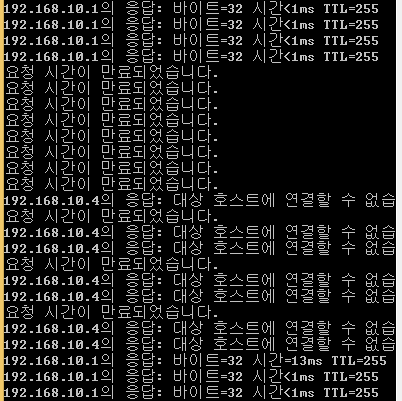

ping loss...

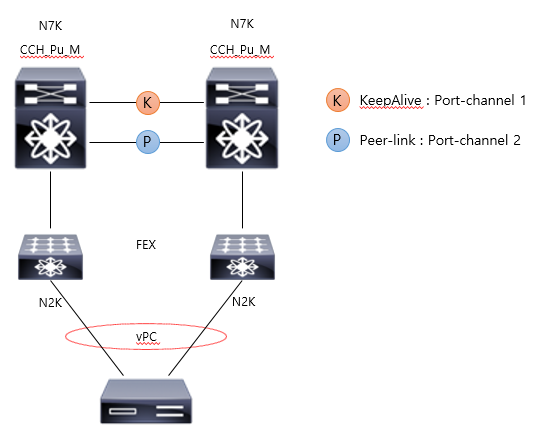

<Topology>

<vPC Configuration>

[N7K-1]

feature vpc

vpc domain 10

role priority 100

peer-keepalive destination 10.10.10.2 source 10.10.10.1 vrf keepalive-vrf

interface e3/3

description ## vPC Keep-Alive Link ##

no cdp enable

channel-group 1 mode active

no shutdown

interface port-channel1

description # PC-1 # vPC-Keep-Alive-Link

vrf member keepalive-vrf

ip address 10.10.10.1/30

interface Ethernet4/1

description ## vPC Peer Link ##

no cdp enable

switchport

switchport mode trunk

channel-group 2 mode active

no shutdown

interface port-channel2

description # PC-2 # vPC-Peer-Link

switchport

switchport mode trunk

spanning-tree cost 1400

spanning-tree port type network

vpc peer-link

interface Ethernet3/4

channel-group 100 mode active

interface port-channel100

description # PC-100 # C4948

switchport

switchport mode trunk

vpc 100

[N7K-2]

feature vpc

vrf context keepalive-vrf

vpc domain 10

role priority 105

no auto-recover

peer-keepalive destination 10.10.10.1 source 10.10.10.2 vrf keepalive-vrf

interface e3/23

description ## vPC Keep-Alive Link ##

no cdp enable

channel-group 1 mode active

no shutdown

interface port-channel1

description # PC-1 # vPC-Keep-Alive-Link

vrf member keepalive-vrf

ip address 10.10.10.2/30

interface Ethernet4/5

description ## vPC Peer Link ##

no cdp enable

switchport

switchport mode trunk

channel-group 2 mode active

no shutdown

interface port-channel2

description # PC-2 # vPC-Peer-Link

switchport

switchport mode trunk

spanning-tree cost 1400

spanning-tree port type network

vpc peer-link

interface Ethernet3/24

channel-group 100 mode active

interface port-channel100

description # PC-100 # C4948

switchport

switchport mode trunk

vpc 100

HSRP is also running on both switches.

01-28-2016 02:05 AM

My assumption is that you ended up in a dual-active scenario, which caused the secondary vPC switch to suspend all of its member links.

Could you please answer the following:

1- What is the current situation? Does the issue still persist, or is it resolved?

2- If it is not resolved, what is the vPC status (show vpc brief on both devices)?

3- On which vPC device are you seeing the member ports go down?

Regards

Inayath

01-28-2016 04:53 PM

1- What is the current situation? Does the issue still persist, or is it resolved?

-> The current state is fine, but why did all vPC member ports on both switches go down when the peer-link came up?

2- If it is not resolved, what is the vPC status (show vpc brief on both devices)?

->

<N7K-1>

switch# show vpc

Legend:

(*) - local vPC is down, forwarding via vPC peer-link

vPC domain id : 10

Peer status : peer adjacency formed ok

vPC keep-alive status : peer is alive

Configuration consistency status : success

Per-vlan consistency status : success

Type-2 consistency status : success

vPC role : primary, operational secondary

Number of vPCs configured : 1

Peer Gateway : Disabled

Dual-active excluded VLANs : -

Graceful Consistency Check : Enabled

Auto-recovery status : Disabled

vPC Peer-link status

---------------------------------------------------------------------

id Port Status Active vlans

-- ---- ------ --------------------------------------------------

1 Po2 up 1,10

vPC status

----------------------------------------------------------------------

id Port Status Consistency Reason Active vlans

-- ---- ------ ----------- ------ ------------

100 Po100 up success success 1,10

switch#

<N7K-2>

switch_2# show vpc

Legend:

(*) - local vPC is down, forwarding via vPC peer-link

vPC domain id : 10

Peer status : peer adjacency formed ok

vPC keep-alive status : peer is alive

Configuration consistency status : success

Per-vlan consistency status : success

Type-2 consistency status : success

vPC role : secondary, operational primary

Number of vPCs configured : 1

Peer Gateway : Disabled

Dual-active excluded VLANs : -

Graceful Consistency Check : Enabled

Auto-recovery status : Enabled (timeout = 240 seconds)

vPC Peer-link status

---------------------------------------------------------------------

id Port Status Active vlans

-- ---- ------ --------------------------------------------------

1 Po2 up 1,10

vPC status

----------------------------------------------------------------------

id Port Status Consistency Reason Active vlans

-- ---- ------ ----------- ------ ------------

100 Po100 up success success 1,10

switch_2#

3- On which vPC device are you seeing the member ports go down?

-> On both switches.
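The `vPC role` lines in the two outputs above show a configured role that differs from the operational role on each switch (N7K-1 is "primary, operational secondary" and N7K-2 is "secondary, operational primary"). As a minimal sketch, assuming the `show vpc` text has already been captured as a string, this difference could be checked with a small Python helper. The function names `parse_vpc_role` and `role_differs` are hypothetical and are not part of any Cisco tool.

```python
import re

# Matches e.g. "vPC role : primary, operational secondary" or "vPC role : primary".
ROLE_LINE = re.compile(r"vPC role\s*:\s*(\w+)(?:\s*,\s*operational\s+(\w+))?")


def parse_vpc_role(show_vpc_text):
    """Return (configured_role, operational_role) from captured `show vpc` text."""
    match = ROLE_LINE.search(show_vpc_text)
    if match is None:
        raise ValueError("no 'vPC role' line found")
    configured = match.group(1)
    # Assumption: when no "operational ..." suffix is printed,
    # the operational role is the same as the configured one.
    operational = match.group(2) or configured
    return configured, operational


def role_differs(show_vpc_text):
    """True when the operational role is not the configured role."""
    configured, operational = parse_vpc_role(show_vpc_text)
    return configured != operational


if __name__ == "__main__":
    # Role lines copied from the two outputs in this thread.
    n7k1 = "vPC role : primary, operational secondary"
    n7k2 = "vPC role : secondary, operational primary"
    for name, text in (("N7K-1", n7k1), ("N7K-2", n7k2)):
        print(name, parse_vpc_role(text), role_differs(text))
```

The helper only reads the role line; it says nothing about why the roles differ, so it is a quick check to run on both peers before and after reconnecting the peer-link rather than a diagnosis.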

01-29-2016 07:02 PM

Both sides will flap the vPC links when recovering from a dual-active state. This is expected behavior, but it can be avoided by following the steps in the document linked below regarding the vPC sticky bit.

Doc for avoiding vpc flap on both 7ks

-Raj

01-31-2016 04:15 PM

Thank you so much, Raj.

I have one more question.

After the flap on both N7Ks, it took a long time (about 48 to 60 seconds) for the vPC member ports to come back up.

Is this expected?

-Jun

02-01-2016 06:27 AM

Jun,

The delay restore timer is most likely kicking in and delaying the vPC bring-up. This is expected behavior.

You can run the command below and go through the sequence of events for the vPC bring-up:

sh sys intern vpcm event-history interactions

You should see a sequence like the one below; the newest messages are at the top.

7) Event:E_DEBUG, length:30, at 955875 usecs after Mon Apr 14 14:11:49 2014

[104] Reiniting down vpc : 100

8) Event:E_DEBUG, length:29, at 955873 usecs after Mon Apr 14 14:11:49 2014

[104] Reiniting down vpc : 20

9) Event:E_DEBUG, length:29, at 955871 usecs after Mon Apr 14 14:11:49 2014 >>> reiniting the VPC

[104] Reiniting down vpc : 10

10) Event:E_DEBUG, length:37, at 955834 usecs after Mon Apr 14 14:11:49 2014 >>D_RESTORE 30sec ended

[104] vPC restore delay timer expired

11) Event:E_DEBUG, length:46, at 947738 usecs after Mon Apr 14 14:11:19 2014 >>D_RESTORE 30sec started

[104] Sending mts message to delay vPC bringup

These event logs can roll over if there have been a lot of vPC events, in which case you may not see messages for the corresponding timestamp.

-Raj
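To read the restore delay out of a capture like the one above, the two timer events can be subtracted. The following is a minimal Python sketch, assuming the event-history text has been saved as a string and that each entry is a header line with an `at N usecs after <date>` timestamp followed by a message line, as in the sample. The function name `restore_delay` is hypothetical, and the date parsing assumes an English locale.

```python
import re
from datetime import datetime, timedelta

# Header timestamp, e.g. "at 955834 usecs after Mon Apr 14 14:11:49 2014".
STAMP = re.compile(
    r"at (\d+) usecs after (\w{3} \w{3}\s+\d+ \d\d:\d\d:\d\d \d{4})"
)


def restore_delay(log_text):
    """Return the time between the delay-restore start and expiry events,
    or None if either event is missing (for example after log rollover)."""
    stamp = started = expired = None
    for line in log_text.splitlines():
        match = STAMP.search(line)
        if match:
            # Remember the timestamp of the entry whose message follows.
            base = datetime.strptime(match.group(2), "%a %b %d %H:%M:%S %Y")
            stamp = base + timedelta(microseconds=int(match.group(1)))
        elif "delay vPC bringup" in line:
            started = stamp
        elif "restore delay timer expired" in line:
            expired = stamp
    if started is None or expired is None:
        return None
    return expired - started


if __name__ == "__main__":
    # Entries 10 and 11 from the sample in this thread, newest first.
    sample = """\
10) Event:E_DEBUG, length:37, at 955834 usecs after Mon Apr 14 14:11:49 2014
[104] vPC restore delay timer expired
11) Event:E_DEBUG, length:46, at 947738 usecs after Mon Apr 14 14:11:19 2014
[104] Sending mts message to delay vPC bringup
"""
    delay = restore_delay(sample)
    print(delay.total_seconds())  # roughly 30 seconds for this sample
```

For the sample entries this gives a gap of about 30 seconds, which matches the "D_RESTORE 30sec" annotations in the log.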
