ASR9000/XR Feature Order of operation

Posted on 04-13-2013 06:35 AM

Introduction

This document provides an overview of the order in which features and forwarding are applied in the ASR9000 ucode (microcode) architecture.

After reading this document, you will better understand when packets are subject to a given type of classification or action.

You will also better understand how PPS (packets per second) rates are affected when certain features are enabled.

Feature order of operation

The following picture gives a (simplified) overview of how packets are handled from ingress to egress.

INGRESS

The following main building blocks make up the ingress feature order of operation.

I/F Classification

When a packet is received, the TCAM is queried to determine which (sub)interface the packet belongs to.

The flexible VLAN matching rules apply here; you can read more about them in the EVC architecture document.

Once we know which (sub)interface the packet belongs to, we derive the uIDB (micro IDB, Interface Descriptor Block) and know which features need to be applied.
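As a rough illustration of this step, the Python sketch below models the (sub)interface match in software. It assumes that the most specific VLAN match wins, and the names `SubInterfaceRule`, `classify_interface`, the interface names and the feature lists are all hypothetical; on the ASR9000 this is a TCAM search in the NPU microcode, not a scan over a list.

```python
from dataclasses import dataclass, field
from typing import List, Optional

ANY = None  # hypothetical wildcard: the rule matches any value for this tag

@dataclass
class SubInterfaceRule:
    name: str
    port: str
    outer_vlan: Optional[int]   # ANY matches any outer tag
    inner_vlan: Optional[int]   # ANY matches any inner tag
    uidb_features: List[str] = field(default_factory=list)  # stands in for the uIDB

def specificity(rule: SubInterfaceRule) -> int:
    # Count the exactly matched tags; more exact tags means a more specific rule
    return int(rule.outer_vlan is not ANY) + int(rule.inner_vlan is not ANY)

def classify_interface(rules, port, outer_vlan, inner_vlan):
    """Return the most specific matching sub-interface rule, or None."""
    candidates = [
        r for r in rules
        if r.port == port
        and (r.outer_vlan is ANY or r.outer_vlan == outer_vlan)
        and (r.inner_vlan is ANY or r.inner_vlan == inner_vlan)
    ]
    return max(candidates, key=specificity, default=None)

rules = [
    SubInterfaceRule("Gi0/0/0/0.100", "Gi0/0/0/0", 100, ANY, ["ingress-acl", "qos"]),
    SubInterfaceRule("Gi0/0/0/0.101", "Gi0/0/0/0", 100, 200, ["qos"]),
]
```

A packet tagged 100/200 lands on `Gi0/0/0/0.101` and only picks up the QOS feature from its uIDB, while 100/300 falls back to `Gi0/0/0/0.100`.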

ACL Classification

If an ACL is applied to the interface, a set of keys is built and sent to the TCAM to find out whether this packet is permitted or denied by the configured ACL. Only the result is returned; no action is taken yet.

QOS Classification

If a QOS policy is applied, the TCAM is queried with the set of keys to find out whether a particular class-map is matched. The returned result is effectively an identifier for the class of the policy-map, so we know what functionality to apply to this packet when the QOS actions are executed.

NOTE:

As you can see, enabling either ACL or QOS results in a TCAM lookup for a match. Enabling ACL only results in a performance degradation of X percent; enabling QOS only results in a degradation of Y percent (X is not equal to Y).

Enabling both ACL and QOS does not give you an X+Y PPS degradation, because the TCAM lookups for both are done in parallel, which saves that overhead. Two separate TCAM lookups are not performed.
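To make the "one lookup, two results" point concrete, here is a toy Python model in which a single search returns both the ACL verdict and the QOS class, and only the results are returned (no action is taken). `ToyTcam`, `ClassificationResult` and the dict-based packet are hypothetical; the implicit deny at the end of the ACL and the `class-default` fallback mirror common IOS XR behavior, but this is a sketch, not the NPU's actual lookup logic.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Packet = dict  # hypothetical: header fields as a dict, e.g. {"dst_port": 22, "dscp": 46}
Rule = Tuple[Callable[[Packet], bool], object]  # (match function, result)

@dataclass
class ClassificationResult:
    acl_permit: Optional[bool]  # None when no ACL is applied
    qos_class: Optional[str]    # None when no QOS policy is applied

class ToyTcam:
    """Software stand-in for the TCAM that counts how many searches are issued."""

    def __init__(self):
        self.searches = 0

    def classify(self, pkt: Packet, acl: Optional[List[Rule]],
                 qos: Optional[List[Rule]]) -> ClassificationResult:
        # One search yields both results, modelling the parallel lookup;
        # nothing is dropped or marked here, only results are returned.
        self.searches += 1
        acl_permit = None
        if acl is not None:
            # First matching entry wins; no match means the implicit deny
            acl_permit = next((permit for match, permit in acl if match(pkt)), False)
        qos_class = None
        if qos is not None:
            qos_class = next((cls for match, cls in qos if match(pkt)), "class-default")
        return ClassificationResult(acl_permit, qos_class)

tcam = ToyTcam()
acl = [(lambda p: p["dst_port"] == 22, False), (lambda p: True, True)]
qos = [(lambda p: p["dscp"] == 46, "voice")]
```

With both features applied, `tcam.searches` still grows by one per packet, which is the reason the cost is not X+Y.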

BGP Flowspec, OpenFlow and CLI-based PBR use the PBR lookup, which logically happens between the ACL and QOS lookups.

Forwarding lookup

The ingress forwarding lookup is rather simple: we don't traverse the whole forwarding tree here, but only find out which egress interface, or better put, which egress LC is to be used for forwarding. The reason is that the 9k has a distributed architecture, so the ingress linecard has to do some form of FIB lookup to find the egress LC.

Also, when bundles are in play and their members are spread over different linecards, we need to compute the hash to identify the member that the egress LC will choose. This way we forward the packet only to the linecard that serves the member that will actually transmit the packet.
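The key idea is that ingress and egress must agree on the bundle member, so the ingress LC runs the same hash the egress LC will run. The sketch below assumes a CRC32 over the flow fields purely as a stand-in; it is not the ASR9000 load-balancing hash, and `select_bundle_member` and the member/linecard names are hypothetical.

```python
import zlib
from typing import List, Tuple

Member = Tuple[str, str]  # hypothetical (member interface, linecard) pair

def select_bundle_member(flow: tuple, members: List[Member]) -> Member:
    """Pick the bundle member for a flow.

    CRC32 over the flow fields is an illustrative stand-in, NOT the ASR9000
    hash. What matters is that ingress and egress apply the same function,
    so both sides agree on which member carries the flow.
    """
    key = "|".join(str(f) for f in flow).encode()
    return members[zlib.crc32(key) % len(members)]

members = [("Te0/1/0/0", "LC1"), ("Te0/2/0/0", "LC2")]
flow = ("10.0.0.1", "10.0.0.2", 6, 1234, 80)  # src, dst, protocol, src port, dst port
interface, egress_lc = select_bundle_member(flow, members)
# The ingress LC sends the packet over the fabric only to egress_lc
```

Because the function is deterministic, every packet of the flow maps to the same member and therefore crosses the fabric to only one linecard.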

NOTE:

If uRPF is enabled, a full ingress FIB lookup (the same as on egress) is done. This is intensive, and therefore uRPF has a relatively larger impact on PPS.
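The reason uRPF costs more is that it needs a full lookup on the source address in addition to the destination lookup. The sketch below shows strict-mode uRPF (loose mode only checks that some route to the source exists) using a linear longest-prefix-match scan; the function names and FIB contents are hypothetical, and the real FIB is a tree in NPU memory, not a dict.

```python
import ipaddress
from typing import Dict, Optional

def longest_prefix_match(fib: Dict[str, str], address: str) -> Optional[str]:
    """Return the interface of the longest matching prefix (linear scan, model only)."""
    addr = ipaddress.ip_address(address)
    best_net, best_interface = None, None
    for prefix, interface in fib.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_interface = net, interface
    return best_interface

def urpf_strict_check(fib: Dict[str, str], src_ip: str, input_interface: str) -> bool:
    # Strict mode: the best route back to the source must point out of the
    # interface the packet arrived on, which requires a full FIB lookup on the source
    return longest_prefix_match(fib, src_ip) == input_interface

fib = {"10.0.0.0/8": "Gi0/0/0/1", "10.1.0.0/16": "Gi0/0/0/2"}
```

A packet from 10.1.2.3 arriving on Gi0/0/0/2 passes, while one from 10.2.0.1 on the same interface fails, because its best route points at Gi0/0/0/1.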

IFIB Lookup

In this stage we determine whether the packet is for us and, if it is, where it needs to go. For instance, ARP and NetFlow are handled by the LC, but BGP and OSPF are handled by the RSP. The iFIB lookup tells us where the packet needs to go internally when we are the recipient.
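A minimal sketch of the iFIB decision, built only from the examples in the paragraph above. The `FOR_US_DESTINATION` table and `ifib_lookup` are hypothetical, and defaulting unlisted protocols to the RSP is an assumption made for the sketch, not documented ASR9000 behavior.

```python
from typing import Optional

# Hypothetical mapping from the examples in the text:
# ARP and NetFlow are handled on the LC CPU, BGP and OSPF on the RSP.
FOR_US_DESTINATION = {
    "arp": "LC_CPU",
    "netflow": "LC_CPU",
    "bgp": "RSP_CPU",
    "ospf": "RSP_CPU",
}

def ifib_lookup(for_us: bool, protocol: str) -> Optional[str]:
    """Return where a locally destined packet must go, or None for transit traffic."""
    if not for_us:
        return None  # transit traffic follows the normal forwarding path
    # Assumption: protocols not listed here default to the RSP
    return FOR_US_DESTINATION.get(protocol, "RSP_CPU")
```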

Security ACL action

If the packet is subject to an ACL deny, for instance, it is dropped during this stage.

QOS Policer action

Any policer action, as well as marking, is done during this stage. QOS shaping and buffering are done by the traffic manager, which is a separate stage in the NPU.
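To show policing and marking happening in the same stage, here is a single-rate, two-color token-bucket policer, which is one common policer form (IOS XR also supports other variants, such as two-rate three-color). The class, its parameters and the dict-based packet are hypothetical; a real policer runs in hardware with its own bucket accounting.

```python
from typing import Optional

class Policer:
    """Single-rate, two-color token-bucket policer (illustrative model).

    rate_bps:     committed rate in bits per second
    burst_bytes:  bucket depth in bytes
    conform_dscp: DSCP to mark on conforming packets (None leaves it unchanged)
    """

    def __init__(self, rate_bps: int, burst_bytes: int, conform_dscp: Optional[int] = None):
        self.rate_bps = rate_bps
        self.burst_bytes = burst_bytes
        self.conform_dscp = conform_dscp
        self.tokens = float(burst_bytes)  # start with a full bucket
        self.last_time = 0.0

    def police(self, packet: dict, now: float) -> bool:
        """Return True (conform: transmit) or False (exceed: drop)."""
        # Refill tokens for the elapsed time, capped at the bucket depth
        elapsed = now - self.last_time
        self.tokens = min(float(self.burst_bytes), self.tokens + elapsed * self.rate_bps / 8)
        self.last_time = now
        if packet["length"] <= self.tokens:
            self.tokens -= packet["length"]
            if self.conform_dscp is not None:
                packet["dscp"] = self.conform_dscp  # marking happens in the same stage
            return True
        return False  # dropped here, so later stages never see this packet
```

For example, `Policer(rate_bps=8000, burst_bytes=1500, conform_dscp=10)` admits one 1000-byte packet at time 0 and remarks it, drops a second one at the same instant, and admits another after one second of refill.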

L2 rewrite

During the L2 rewrite in the ingress stage, the fabric header is applied.

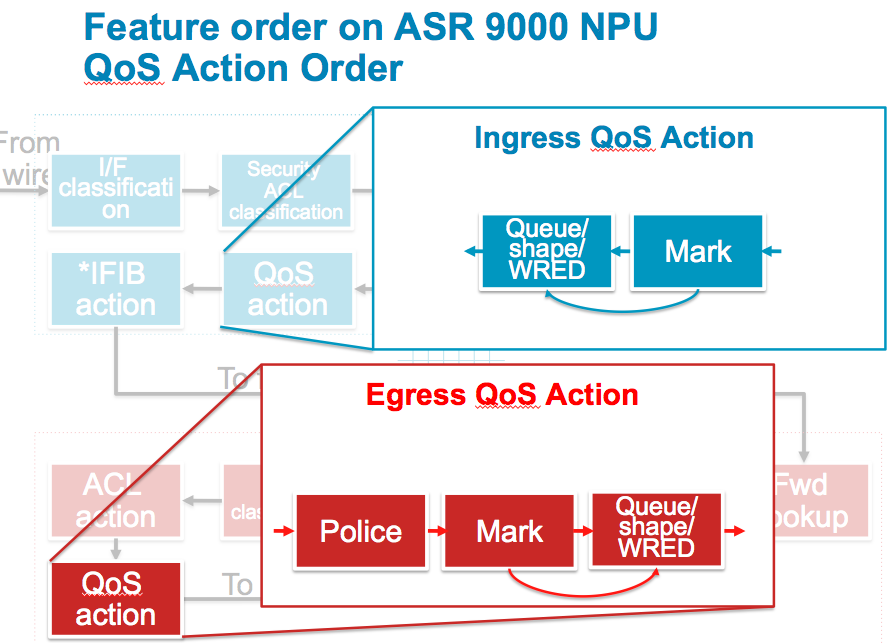

QOS Action

Any other QOS action, such as queuing, shaping and WRED, is executed during this stage. Note that packets that were previously policed or dropped by an ACL are no longer seen in this stage. It is important to understand that dropped packets are removed from the pipeline, so if you see what looks like a counter discrepancy, the packets may have been processed or dropped by an earlier feature.
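The counter effect can be illustrated with a small model of an ordered pipeline in which a stage that drops a packet stops it from reaching any later stage. `run_pipeline` and the stage functions are hypothetical simplifications of the ingress stages described above.

```python
from collections import Counter

def run_pipeline(packets, stages):
    """Run packets through ordered stages; a stage returns False to drop.

    stages: list of (name, function) pairs in feature order.
    Returns how many packets each stage actually saw.
    """
    seen = Counter()
    for pkt in packets:
        for name, stage in stages:
            seen[name] += 1
            if not stage(pkt):
                break  # dropped: removed from the pipeline, later stages never see it
    return seen

stages = [
    ("acl", lambda p: p["port"] != 22),       # ACL denies port 22
    ("policer", lambda p: p["size"] < 1000),  # crude stand-in for a policer drop
    ("queuing", lambda p: True),
]
packets = [{"port": 22, "size": 100}, {"port": 80, "size": 1500}, {"port": 80, "size": 100}]
counts = run_pipeline(packets, stages)
```

Three packets hit the ACL stage, two reach the policer and only one reaches queuing, so comparing the queuing counter against the input rate alone would look like a discrepancy.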

See also the QOS action notes section further below for more details on the different QOS actions.

iFIB action

Either the packet is forwarded over the fabric or handed over to the LC CPU here. If the packet is destined for the RSP CPU, it is forwarded over the fabric to the RSP destination. Remember that the RSP is a linecard from a fabric point of view. The RSP requests fabric access to inject packets in the same fashion an LC would.

General

The ingress linecard also decrements the TTL of the packet; if the TTL expires, the packet is punted to the LC CPU to generate an ICMP TTL exceeded message. The number of packets we can punt is subject to LPTS policing.
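A sketch of the TTL handling combined with a punt limit. The fixed one-second window in `PuntPolicer` is a deliberately simple stand-in for LPTS policing, whose real policers and rates are platform-defined; both names here are hypothetical.

```python
class PuntPolicer:
    """Hypothetical per-second punt limit standing in for LPTS policing."""

    def __init__(self, max_per_second: int):
        self.max_per_second = max_per_second
        self.window = None
        self.count = 0

    def allow(self, now: float) -> bool:
        second = int(now)
        if second != self.window:
            self.window, self.count = second, 0  # new one-second window
        if self.count < self.max_per_second:
            self.count += 1
            return True
        return False

def ingress_ttl(packet: dict, now: float, punt_policer: PuntPolicer) -> str:
    packet["ttl"] -= 1
    if packet["ttl"] > 0:
        return "forward"
    # TTL expired: punt to the LC CPU for the ICMP message, if the punt policer allows
    return "punt" if punt_policer.allow(now) else "drop"
```

With a limit of one punt per second, a second expired packet in the same second is dropped without an ICMP reply.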

EGRESS

The following section describes the forwarding stages of the egress linecard.

Forwarding lookup

The egress linecard does a full FIB lookup down to the leaf to get the rewrite string. This full FIB lookup provides everything needed to route the packet, such as the egress interface, encapsulation, and adjacency information.
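"Down to the leaf" can be pictured with a binary trie that is walked bit by bit while remembering the last adjacency seen. This is a toy IPv4 model; the ASR9000 FIB uses its own data structures in NPU search memory, and `Fib`, `Adjacency` and the sample entries are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class Adjacency:
    interface: str
    next_hop_mac: str
    vlan: Optional[int]

class TrieNode:
    def __init__(self):
        self.children = [None, None]
        self.adjacency: Optional[Adjacency] = None

class Fib:
    """Toy binary trie for IPv4 (a model, not the NPU data structure)."""

    def __init__(self):
        self.root = TrieNode()

    def insert(self, prefix: str, adj: Adjacency) -> None:
        net = ipaddress.ip_network(prefix)
        bits = int(net.network_address)
        node = self.root
        for i in range(net.prefixlen):
            bit = (bits >> (31 - i)) & 1
            if node.children[bit] is None:
                node.children[bit] = TrieNode()
            node = node.children[bit]
        node.adjacency = adj

    def lookup(self, address: str) -> Optional[Adjacency]:
        # Walk down one bit at a time, keeping the deepest (longest) match
        bits = int(ipaddress.IPv4Address(address))
        node, best = self.root, self.root.adjacency
        for i in range(32):
            node = node.children[(bits >> (31 - i)) & 1]
            if node is None:
                break
            if node.adjacency is not None:
                best = node.adjacency
        return best

fib = Fib()
fib.insert("10.0.0.0/8", Adjacency("Gi0/0/0/1", "00:11:22:33:44:55", None))
fib.insert("10.1.0.0/16", Adjacency("Gi0/0/0/2", "00:aa:bb:cc:dd:ee", 100))
```

Each level visited is another memory access, which is why this full walk is the expensive part of egress forwarding.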

L2 rewrite

With the information received from the forwarding lookup, we can rewrite the packet headers, applying the Ethernet header, VLAN tags, etc.
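As a sketch of what the egress L2 rewrite produces, the function below prepends an Ethernet header with an optional single 802.1Q tag. It covers only the single-tag case with PCP and DEI left at 0, and `l2_rewrite` is a hypothetical name; real rewrites can carry multiple tags and other encapsulations.

```python
import struct
from typing import Optional

def l2_rewrite(payload: bytes, dst_mac: str, src_mac: str,
               vlan: Optional[int] = None, ethertype: int = 0x0800) -> bytes:
    """Prepend an Ethernet header, with an optional single 802.1Q tag."""
    header = bytes.fromhex(dst_mac.replace(":", "")) + bytes.fromhex(src_mac.replace(":", ""))
    if vlan is not None:
        # 802.1Q tag: TPID 0x8100, then PCP/DEI/VLAN ID (PCP and DEI left at 0 here)
        header += struct.pack("!HH", 0x8100, vlan & 0x0FFF)
    header += struct.pack("!H", ethertype)  # 0x0800 = IPv4
    return header + payload
```

A tagged frame gets an 18-byte header and an untagged one a 14-byte header.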

Security ACL classification

During the fib lookup we have determined the egress interface and know which features are applied.

As on ingress, we build the keys and query the TCAM for an ACL result, based on the ACL applied to the egress interface.

QOS Classification

As on ingress, the TCAM is queried to identify the QOS class-map and matching criteria for this packet in an egress QOS policy.

The same note as on ingress applies when ACL and/or QOS are applied to the interface.

ACL action

The ACL action is executed.

QOS action

The QOS action is executed; see the QOS action notes section below for more details on the QOS actions.

General

MTU verification is only done on the egress linecard to determine if fragmentation is needed.

The egress linecard punts the packet to its own LC CPU when fragmentation is required.

Remember that fragmentation is done in software and no features are applied on these packets on the egress linecard.

The number of packets that can be punted for fragmentation is NPU bound and limited by LPTS.
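The MTU check itself is cheap; the expensive part is the software fragmentation that follows. The sketch below shows the egress decision and the standard IPv4 fragment arithmetic (every fragment payload except the last is a multiple of 8 bytes, and offsets are counted in 8-byte units). It assumes a 20-byte IPv4 header without options, and both function names are hypothetical.

```python
def egress_mtu_decision(length: int, mtu: int) -> str:
    """Fits: normal hardware path. Too big: punt to the egress LC CPU."""
    return "transmit" if length <= mtu else "punt_to_egress_lc_cpu"

def fragment_sizes(total_length: int, mtu: int, header_len: int = 20):
    """Split an IPv4 packet into (offset_in_8_byte_units, payload_bytes) fragments.

    Assumes a fixed header_len without options; every fragment payload
    except the last must be a multiple of 8 bytes.
    """
    payload = total_length - header_len
    max_chunk = (mtu - header_len) // 8 * 8
    fragments, offset = [], 0
    while payload > 0:
        chunk = min(max_chunk, payload)
        fragments.append((offset // 8, chunk))
        offset += chunk
        payload -= chunk
    return fragments
```

For a 4000-byte packet and a 1500-byte MTU, this yields payloads of 1480, 1480 and 1020 bytes at offsets 0, 185 and 370; no egress features are applied to these software-generated fragments.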

QOS action notes

The above picture expands on the different QOS actions that can be taken and how they interact. What is important to understand from this picture is that WRED (when it is precedence- or DSCP-aware) uses the rewritten values from the packet headers.

Almost the same applies on egress: shaping, queuing and WRED reuse the rewritten PREC/DSCP values from either the ingress or the egress policer/remarker.

Packets that were subject to a police drop action will not be seen anymore by the shaper.
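The effect of remarking on WRED can be shown with a classic RED-style linear drop profile selected by DSCP. The thresholds, probabilities and `WRED_PROFILES` table below are hypothetical values for illustration, not ASR9000 defaults; the point is only that the profile is chosen from the DSCP as rewritten by an earlier policer.

```python
# Hypothetical WRED profiles keyed by DSCP: (min_threshold, max_threshold, max_drop_probability)
WRED_PROFILES = {
    0: (10, 30, 0.20),   # best effort: starts dropping earlier
    10: (20, 40, 0.10),  # AF11: more protected
}

def wred_drop_probability(avg_queue: float, dscp: int, profiles=WRED_PROFILES) -> float:
    min_th, max_th, max_p = profiles.get(dscp, profiles[0])
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0  # above the maximum threshold every packet is dropped
    # Linear ramp between the two thresholds
    return max_p * (avg_queue - min_th) / (max_th - min_th)

packet = {"dscp": 0}
packet["dscp"] = 10  # remarked earlier by a policer (ingress or egress)
p = wred_drop_probability(25, packet["dscp"])  # uses the REWRITTEN DSCP
```

At an average depth of 25, the remarked packet sees a 2.5% drop probability instead of the 15% it would have had with its original DSCP 0.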

Conclusion

From the above a few conclusions can be drawn:

1) Ingress processing is more intensive than egress processing.

2) Enabling more features affects the total PPS performance of the NPU, as more cycles are needed in a particular stage of forwarding.

3) Packets denied on ingress will not go over the fabric

4) Packets permitted by QOS or ACL on ingress go over the fabric and might get dropped by the egress ACL or QOS. This means fabric bandwidth can be wasted if there is a very restrictive ACL or a low-rate policer/shaper on egress combined with a high input rate on ingress. (Note that oversubscription results in back pressure, so the ingress FIA will prevent the packets from being sent over the fabric; see the head-of-line blocking discussion in the ASR9000 QOS architecture document.)

5) Packets that are remarked on ingress will get their QOS (or ACL) applied based on the REWRITTEN values from ingress

6) Netflow will account for ACL denied packets (not specifically called out in the feature order of operation)

7) WRED uses the rewritten values from ingress or egress policers

8) The ingress linecard is not aware of features configured on the egress linecard (remember the qos priority propagation from the ASR9000 quality of service architecture document)

Related Information

Xander Thuijs CCIE #6775

Principal Engineer ASR9000

Let me explain what the next hop ID is:

NHID uses the RIB-allocated next hop ID to connect packet processing on the ingress LC and the egress LC. The advantage of NHID-based forwarding is convergence: it keeps all the benefits of the fast convergence of 2-stage forwarding.

On a two-stage forwarding platform, the egress LC has to do the L3 address lookup and path selection again:

- The L3 address lookup (IPv4 address, IPv6 address and MPLS label) takes multiple memory accesses in the data plane

- The path selection goes over multiple levels of load balancing, which takes several memory accesses and some execution cycles.

This slows down the packet forwarding performance on egress LC. This can be avoided by passing the lookup result from ingress LC to the egress LC.
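A minimal sketch of the idea, assuming the NHID travels with the packet across the fabric so that the egress LC can index its next hop table directly instead of repeating the L3 lookup and load-balancing walk. `FabricPacket`, `EGRESS_NHID_TABLE` and the route lookup callback are hypothetical; the real fabric header format and NHID table layout are not described here.

```python
from dataclasses import dataclass

@dataclass
class FabricPacket:
    """Hypothetical fabric header fields: target egress LC and next hop ID."""
    egress_lc: str
    nhid: int
    payload: bytes

# Hypothetical egress-LC table indexed by the RIB-allocated NHID
EGRESS_NHID_TABLE = {
    7: ("Te0/1/0/0", bytes.fromhex("001122334455")),  # (interface, L2 rewrite string)
}

def ingress_forward(payload: bytes, route_lookup) -> FabricPacket:
    # The ingress LC does the L3 lookup and path selection once...
    egress_lc, nhid = route_lookup(payload)
    return FabricPacket(egress_lc, nhid, payload)

def egress_forward(pkt: FabricPacket, table=EGRESS_NHID_TABLE):
    # ...and the egress LC indexes directly by NHID, skipping the second lookup
    interface, rewrite = table[pkt.nhid]
    return interface, rewrite + pkt.payload
```

The egress work shrinks to a single table index plus the rewrite, which is the performance gain described above.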

Due to the code space limitations and search memory size and structure limitations, Trident and SIP700 cannot support this NHID.

xander
