ASR9000/XR Understanding PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED

04-15-2013 11:51 AM - edited 09-30-2017 05:42 AM

Introduction

This document explains the punt fabric data path failure symptom that is occasionally seen on the ASR9000 platform.

What are DIAG messages

DIAG messages are sent from each of the RSPs down to all NPUs in the system, much like a ping packet. Each NPU responds to these DIAG messages and sends them back to the originating RSP.

If a number of DIAG packets are not received back within a particular period of time, this error message is raised. The sample messages in this document show a failure threshold of 3.

The underlying cause can be manifold: it can range from a faulty NPU or a heavily loaded NPU to other faulty FPGAs. This document highlights a few of the causes, how to identify them, and what to do next.

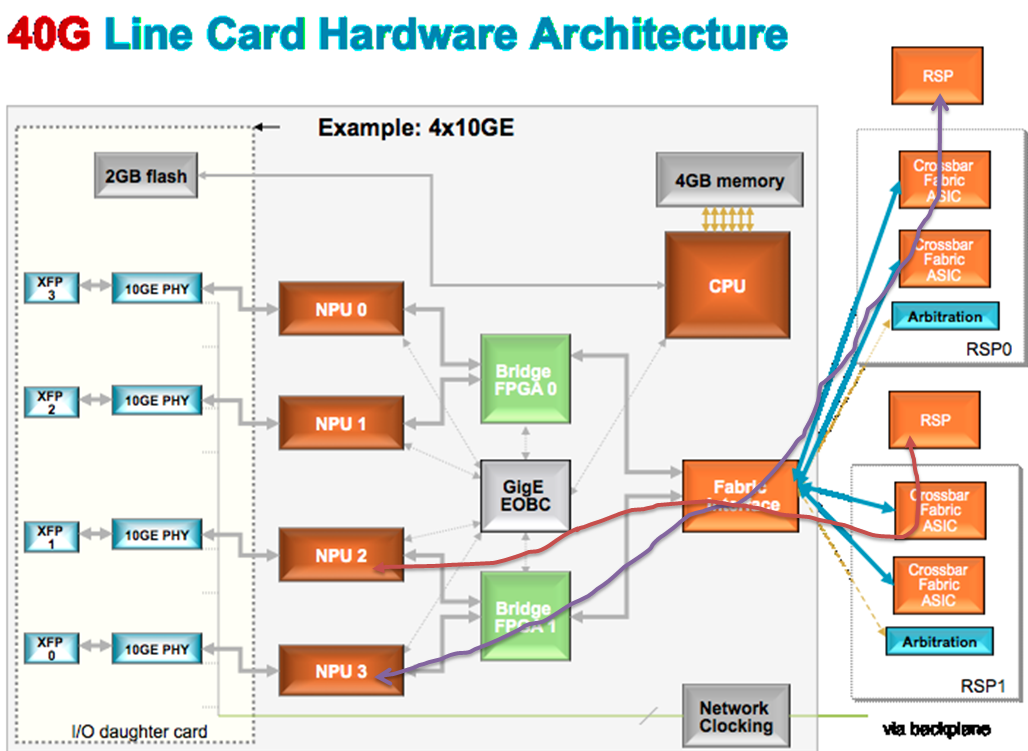

This picture visualizes how the NP DIAG messages flow. It shows just two examples, from the active and the standby RSP to an individual NPU, but keep in mind that both RSPs send DIAG messages to all NPUs.

Understanding the error message

An NP DIAG failure can be caused by hardware or by software, and it can be transient. One of the most important things to check in this error is the SET or CLEAR operation in the error message.

RP/0/RSP0/CPU0:Feb 5 05:05:44.051 : pfm_node_rp[354]:

%PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[237686]|System Punt/F

In this case it is a SET message. A CLEAR means that the condition is no longer present.

Next, the message indicates the slot/location and the NPU for which the test failed:

%PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[237686]|System

Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/2/CPU0, 0)
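When many of these messages need to be reviewed, it can help to extract the two fields discussed above (the Set/Clear operation and the list of failed slot/NP pairs) programmatically. The following is a minimal Python sketch, not a Cisco tool. The regular expressions are inferred from the sample messages in this document only, and the function name `parse_punt_failure` is hypothetical.

```python
import re

# Patterns inferred from the sample messages in this article (assumption),
# not taken from any Cisco specification.
MSG_RE = re.compile(
    r"%PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED\s*:\s*(Set|Clear)\|"
)
# Matches both "(0/2/CPU0, 0)" and the older numeric form "(7, 0)".
PAIR_RE = re.compile(r"\(([\w/]+),\s*(\d+)\)")


def parse_punt_failure(line):
    """Return (operation, [(slot, np), ...]) or None if the line is not this alarm."""
    match = MSG_RE.search(line)
    if match is None:
        return None
    # Only look for (slot, NP) pairs after the "failed:" marker.
    _, _, tail = line.partition("failed:")
    pairs = [(slot, int(np)) for slot, np in PAIR_RE.findall(tail)]
    return match.group(1), pairs


sample = (
    "RP/0/RSP0/CPU0:Feb 5 05:05:44.051 : pfm_node_rp[354]: "
    "%PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : "
    "Set|online_diag_rsp[237686]|System Punt/Fabric/data Path Test(0x2000004)|"
    "failure threshold is 3, (slot, NP) failed: (0/2/CPU0, 0)"
)
print(parse_punt_failure(sample))
```

For the sample message this yields the operation `Set` and a single failed pair, slot `0/2/CPU0` with NP 0.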

Note that older releases report the logical slot number instead of the more common external name (such as 0/2/CPU0). The following tables detail the logical to physical slot mapping.

Logical (L) vs Physical Slot mapping

For a9010 (10 slot chassis)

#0 --- #0

#1 --- #1

#2 --- #2

#3 --- #3

RSP0 -- #4

RSP1 -- #5

#4 --- #6

#5 --- #7

#6 --- #8

#7 --- #9

For a9006 (6 slot chassis)

RSP0 --- #0

RSP1 --- #1

#0 ----- #2

#1 ----- #3

#2 ----- #4

#3 ----- #5
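The tables above can be turned into a small lookup for translating the number printed by older releases into a location name. This Python sketch assumes that the right-hand column is the number that appears in the message and the left-hand column is the slot name, which matches the 9010 example confirmed in the comments at the end of this document ((7, 0) is NP0 on 0/5/CPU0). The names `SLOT_NAMES` and `location_for` are hypothetical.

```python
# Index = slot number as printed by older releases,
# value = slot name as used in the location string.
# Transcribed from the two tables above; the direction of the mapping
# is an assumption based on the (7, 0) -> 0/5/CPU0 example.
SLOT_NAMES = {
    "9010": ["0", "1", "2", "3", "RSP0", "RSP1", "4", "5", "6", "7"],
    "9006": ["RSP0", "RSP1", "0", "1", "2", "3"],
}


def location_for(chassis, reported_slot):
    """Translate a reported slot number into a 0/x/CPU0 style location."""
    name = SLOT_NAMES[chassis][reported_slot]
    return f"0/{name}/CPU0"


print(location_for("9010", 7))  # 0/5/CPU0
print(location_for("9006", 2))  # 0/0/CPU0
```

Only the two chassis types listed in this document are covered; other chassis would need their own table.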

Correlating the error message

If there is a hard set (that is, a SET without a CLEAR), the next important step is to identify the NPUs that generate the error message and check what they have in common:

Do they belong to the same bridge? Do they belong to the same FIA? Do they belong to the same LC? Is it the standby or the active RSP that is complaining?

It is also important to know whether the affected ports are in use or not.

This can indicate which ASIC is at fault, or whether there is a hardware issue to begin with.

A few examples:

ALL NP FAILURE:

Dec 16 02:06:38.387 pfm/node.log 0/RSP1/CPU0 t1 pfm_writer.c:227: pfm_log_write: Set|online_diag_rsp[209011]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/1/CPU0, 0) (0/1/CPU0, 1) (0/1/CPU0, 2) (0/1/CPU0, 3) (0/1/CPU0, 4) (0/1/CPU0, 5) (0/1/CPU0, 6) (0/1/CPU0, 7)

This indicates a problem with a component that is common to the whole linecard, such as the linecard fabric. It could also be that all NPs on this card have locked up. In that case they would likely all carry the same feature set and take the same amount of traffic.

SINGLE NP FAILURE:

RP/0/RSP1/CPU0:Apr 21 01:58:06.788 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[213108]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/4/CPU0, 1)

A single NP failure points to a fault that is local to that NP, either in software or in hardware.

MULTIPLE NP FAILURE:

RP/0/RSP0/CPU0:May 13 02:56:51.208 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[221304]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/0/CPU0, 6) (0/0/CPU0, 7)

RP/0/RSP0/CPU0:Sep 23 07:43:55.936 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

MULTIPLE LC FAILURE:

RP/0/RP1/CPU0:Aug 28 16:09:17.210 IST: pfm_node_rp[374]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[213114]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/0/CPU0, 1) (0/0/CPU0, 2) (0/0/CPU0, 3) (0/0/CPU0, 4) (0/0/CPU0, 5) (0/1/CPU0, 3) (0/9/CPU0, 0) (0/9/CPU0, 2)

If the issue appears on multiple linecards, it could be the RSP or the central fabric. If it is the central fabric, you would also expect the standby RSP to report a similar failure. This is not required, but it provides extra evidence for the suspicion.
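The failure patterns shown so far (single NP, multiple NP, all NP, multiple LC) can be told apart mechanically once the failed (slot, NP) pairs are known. The sketch below is one possible way to do this in Python. The number of NPs per linecard is not part of the error message, so it is passed in by the operator as `nps_per_lc`; that parameter and the function name `classify` are assumptions of this example.

```python
from collections import defaultdict


def classify(failed, nps_per_lc):
    """Classify a list of failed (slot, np) pairs.

    nps_per_lc maps a slot to the number of NPs on that linecard and is
    supplied by the operator (assumption: the message does not carry it).
    """
    by_slot = defaultdict(set)
    for slot, np in failed:
        by_slot[slot].add(np)

    if not by_slot:
        return "none"
    if len(by_slot) > 1:
        return "multiple LC"

    (slot, nps), = by_slot.items()
    if len(nps) == 1:
        return "single NP"
    if len(nps) == nps_per_lc.get(slot):
        return "all NP"
    return "multiple NP"


print(classify([("0/4/CPU0", 1)], {"0/4/CPU0": 8}))
print(classify([("0/0/CPU0", 6), ("0/0/CPU0", 7)], {"0/0/CPU0": 8}))
```

A linecard with only one NP is reported as "single NP" here, since the two cases cannot be distinguished from the message alone. The classification is only a starting point; whether the failing NPs share a bridge or FIA still has to be checked against the linecard architecture.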

INTERMITTENT NP FAILURE:

| RP/0/RSP0/CPU0:Sep 23 07:43:55.936 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

| RP/0/RSP0/CPU0:Sep 23 07:43:55.936 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0)

| RP/0/RSP1/CPU0:Sep 23 07:53:53.772 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[213108]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0)

| RP/0/RSP1/CPU0:Sep 23 07:53:53.772 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[213108]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

| RP/0/RSP1/CPU0:Sep 23 07:56:55.293 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[213108]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

| RP/0/RSP1/CPU0:Sep 23 07:56:55.293 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[213108]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0)

| RP/0/RSP0/CPU0:Sep 23 08:04:06.060 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0)

| RP/0/RSP0/CPU0:Sep 23 08:04:06.060 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

| RP/0/RSP0/CPU0:Sep 23 08:07:07.582 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0) (0/6/CPU0, 3)

| RP/0/RSP0/CPU0:Sep 23 08:07:07.582 IST: pfm_node_rp[366]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[217208]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/6/CPU0, 0)
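With an intermittent failure like the one above, it is useful to know how often each RSP reported a Set and a Clear, and whether the last event from each RSP was a Set (a condition that is still present) or a Clear. The Python sketch below counts these per reporting node. The regular expression is inferred from the log lines in this document, and `summarize_flaps` is a hypothetical helper name.

```python
import re

# Reporter prefix (e.g. RP/0/RSP0/CPU0) and operation; pattern inferred
# from the sample log lines in this article (assumption).
EVENT_RE = re.compile(
    r"(RP/\d+/\w+/CPU\d+):.*?PUNT_FABRIC_DATA_PATH_FAILED\s*:\s*(Set|Clear)\|"
)


def summarize_flaps(log_lines):
    """Count Set/Clear events per reporting node and remember the last one."""
    summary = {}
    for line in log_lines:
        match = EVENT_RE.search(line)
        if match is None:
            continue  # not a punt fabric data path message
        reporter, op = match.groups()
        entry = summary.setdefault(
            reporter, {"sets": 0, "clears": 0, "last": None}
        )
        entry["sets" if op == "Set" else "clears"] += 1
        entry["last"] = op
    return summary
```

A reporter whose `last` value is `Set` has an outstanding alarm, while equal `sets` and `clears` counts with a final `Clear` match the "set followed by a clear" case. The helper assumes the lines are passed in chronological order.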

Verifying NP Diag packets in the NPU

A simple command to verify the DIAG packets that are being sent:

RP/0/RSP0/CPU0:A9K-BNG#show controller np count np0 loc 0/0/CPU0 | i DIAG

Mon Apr 15 16:00:26.722 EDT

235 PARSE_RSP_INJ_DIAGS_CNT 3268 0

248 PARSE_LC_INJ_DIAGS_CNT 3260 0

788 DIAGS 3260 0

902 PUNT_DIAGS_RSP_ACT 3268 0
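A single snapshot of these counters says little by itself; comparing two snapshots taken some time apart shows whether the DIAG counters are still incrementing. The Python sketch below parses the output shown above and lists the DIAG counters that did not increase. It assumes that each counter line has four fields (offset, counter name, frame count, rate), which is inferred from the sample output only. The function names are hypothetical.

```python
def parse_np_counters(output):
    """Parse 'show controller np count' lines into {name: frame_count}.

    Assumes four whitespace-separated fields per counter line:
    offset, name, count, rate (inferred from the sample output).
    """
    counters = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 4 and fields[0].isdigit() and fields[2].isdigit():
            counters[fields[1]] = int(fields[2])
    return counters


def stalled_diag_counters(before, after):
    """Return the DIAG counter names that did not increase between snapshots."""
    return sorted(
        name
        for name, value in after.items()
        if "DIAG" in name and value <= before.get(name, 0)
    )


snapshot = """Mon Apr 15 16:00:26.722 EDT
235 PARSE_RSP_INJ_DIAGS_CNT 3268 0
248 PARSE_LC_INJ_DIAGS_CNT 3260 0
788 DIAGS 3260 0
902 PUNT_DIAGS_RSP_ACT 3268 0"""
print(parse_np_counters(snapshot))
```

Whether a stalled counter actually points to a fault depends on the time between the snapshots and on how often the diagnostics run, so treat the result as a hint rather than a verdict.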

Potential causes

Set followed by a clear

If the message is a SET that is subsequently followed by a CLEAR, there is no direct need for concern. While not ideal, this can be ignored and no further action is required.

If it continues to happen, capture the outputs listed under "What to collect if there is still an issue" and report them in a support case.

It could mean that the NPU was temporarily overloaded.

In oversubscription scenarios, note that the DIAG packets are high priority and should get preferred service, but due to timing a packet can still be missed, resulting in an erroneous DIAG failure.

NP lockup

In XR 4.1 there was a string of NP lockup software faults. In a lockup, a thread inside the NPU enters an endless loop that it can't break out of. The lockup eventually blocks all threads and stages inside the NPU and prevents it from forwarding traffic, including DIAG packets.

If you are running XR 4.1, make sure that you use at least 4.1.2 and run the latest pack to take care of NPU lockups.

That release also has the right instrumentation in place to derive the reason for a lockup and bring it to closure.

Lockups have not yet been seen in XR 4.2.x or XR 4.3.x.

NP failure

If an NPU fails for whatever reason, then besides the traffic impact you'll see a datapath failure message for this NPU only.

It is also important to check whether there is an issue with the traffic on the ports served by that NPU.

Bridge failure

Failing NPUs that belong to the same bridge might suggest a problem with the bridge FPGA.

This is uncommon but can happen; usually bridge CRC errors are seen alongside it.

FIA failure

If multiple failing NPUs report to the same FIA, it could very well be an FIA failure. You would usually see sync loss messages on the SERDES (see the show commands below) or a fabric path failure on that port.

A reseat of the LC is recommended, or a move to a different slot if the reseat does not resolve the issue.

If it still fails, replace the LC.

LC failure

If all NPUs are generating an alert, and especially if the alert is reported by both RSPs, it is a clear indication of an LC failure.

RSP or Fabric path failure

If there is a complete RSP or fabric failure, all linecards will (eventually) report the error.

It can mean an affected fabric, an affected backplane, or a bad RSP.

I am personally aware of only one chassis swap; it is not a common scenario, and swapping the chassis is strongly recommended against.

Reseating or replacing the RSP in question is the first action to take.

LC NPU loopback test has failed

This is a failure in the line card local punt path.

Example:

LC/0/7/CPU0:Aug 18 19:17:26.924 : pfm_node[182]: %PLATFORM-PFM_DIAGS-2-LC_NP_LOOPBACK_FAILED : Set|online_diag_lc[94283]|Line card NPU loopback Test(0x2000006)|link failure mask is 0x8.

This means that the test failed to get the loopback packet back from NP3: in "link failure mask is 0x8", bit 3 is set, which corresponds to NP3.
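The mask arithmetic from the example (0x8, bit 3 set, NP3) generalizes to masks with more than one bit set. The short Python sketch below lists the NP numbers for a given link failure mask, assuming, as in the example, that bit N of the mask corresponds to NP N. The function name `failed_nps` is hypothetical.

```python
def failed_nps(mask):
    """Return the NP numbers whose bit is set in the link failure mask.

    Assumes bit N corresponds to NP N, as in the 0x8 -> NP3 example.
    """
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


print(failed_nps(0x8))  # [3]
print(failed_nps(0x5))  # [0, 2]
```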

Information to collect

| Command | Description |
|---|---|
| admin show diagnostic result location 0/x/cpu0 test 9 detail | Displays the detailed results of the test at the specified location |
| show controllers NP counter NP(0-3) location 0/x/cpu0 | Displays the various counters in the respective NPs |

Troubleshooting

This test sends one unicast packet to each local NP from the line card CPU. Check the line card local punt/inject path troubleshooting guide for debugging.

Re-Training of Fabric Links

PUNT_FABRIC_DATA_PATH_FAILED messages are sometimes reported during the "re-training" of fabric links. If this is the root cause, the error is reported for all NPs on the slot:

RP/0/RSP0/CPU0:Sep 3 13:49:36.595 UTC: pfm_node_rp[358]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED: Set|online_diag_rsp[241782]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (0/7/CPU0, 1) (0/7/CPU0, 2) (0/7/CPU0, 3) (0/7/CPU0, 4) (0/7/CPU0, 5) (0/7/CPU0, 6) (0/7/CPU0, 7)

To confirm the root cause, note the timestamp of the message and check whether a fabric link was re-trained within a few minutes before the PUNT_FABRIC_DATA_PATH_FAILED error was reported:

show controller fabric ltrace crossbar location 0/rsp1/cpu0 | include rcvd link_retrain

Sep 3 13:47:06.975 crossbar 0/RSP1/CPU0 t1 detail xbar_fmlc_handle_link_retrain: rcvd link_retrain for (5,1,0),(9,1,0),1.

Alternative possible output:

show controller fabric ltrace crossbar location 0/rsp0/cpu0 | include rcvd link_retrain

Sep 3 13:47:07.027 crossbar 0/7/CPU0 t1 detail xbar_fmlc_handle_link_retrain: rcvd link_retrain for (9,1,0),(5,1,0),1.
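The timestamp comparison described above can be sketched in Python as follows. The log timestamps carry no year, so the year is a parameter with an arbitrary default, and the "few minutes" window is assumed to be five minutes here; neither value comes from Cisco documentation. The function names are hypothetical.

```python
from datetime import datetime, timedelta


def parse_ts(text, year=2000):
    """Parse a timestamp such as 'Sep 3 13:49:36.595'.

    The year is not present in the log line; the default is arbitrary
    and only matters if the two events fall in different years.
    """
    return datetime.strptime(f"{year} {text}", "%Y %b %d %H:%M:%S.%f")


def retrain_explains(error_ts, retrain_ts, window=timedelta(minutes=5)):
    """True if the link retrain happened at most `window` before the error."""
    delta = error_ts - retrain_ts
    return timedelta(0) <= delta <= window


error = parse_ts("Sep 3 13:49:36.595")     # PUNT_FABRIC_DATA_PATH_FAILED Set
retrain = parse_ts("Sep 3 13:47:06.975")   # rcvd link_retrain
print(retrain_explains(error, retrain))    # True: about 2.5 minutes apart
```

With the sample timestamps the retrain precedes the error by roughly two and a half minutes, which fits the pattern described in this section.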

If this issue ever happens on an ASR9000 router, it typically happens within the first month after the system has been deployed and is up and active. The possibility of a later occurrence is minimal.

If this issue is observed on your ASR9000, there is no need for a hardware replacement (that is, no need for an RMA).

Cisco is working on the fix through CSCuj10837. Once the fix is verified, Cisco will make it available to customers through a SMU.

Possible Workarounds:

- Reseat the line card showing fabric re-train.

- Move the affected line card closer to RSP.

ASR9922 SFC upgrade failure

An ASR9K A99-SFC2 Fabric Card (FC) can be down (IN-RESET) after an IOS XR Release 5.3.3 Field Programmable Device (FPD) upgrade. With the fabric down, punt diags can fail because the fabric is not properly forwarding traffic.

If the A99-SFC2 doesn't have enough available space on disk1a: during an IOS XR Release 5.3.3 FPD upgrade, the FC is only partially upgraded, fails to boot up, and remains in an "In-Reset" state after the upgrade.

This issue has been fixed in IOS XR Release 5.3.4 and later by CSCuz13904.

A disk utilization check is needed before starting an IOS XR Release 5.3.3 FPD upgrade to prevent partial upgrades on the A99-SFC2 Fabric Cards.

Ensure disk1a: has enough space by running the following command:

# cd /disk1a:

#ls

. .boot dumper

.. .inodes powerup_info.puf

.altboot .longfilenames

.bitmap LOST.DIR

#cd /dumper

#ls

.

..

ipv4_rib_1152.20151125-082551.node0_RP1_CPU0.x86.txt

ipv4_rib_1152.20151125-084427.node0_RP1_CPU0.x86.txt

ipv4_rib_1152.20151125-091418.node0_RP1_CPU0.x86.txt

ipv4_rib_1152.20151125-100422.node0_RP1_CPU0.x86.txt

ipv4_rib_1152.20151125-102916.node0_RP1_CPU0.x86.txt

ipv4_rib_1152.20151125-104523.node0_RP1_CPU0.x86.txt

If there is not enough space remove unwanted files by running:

# rm -f ipv4*x86.txt

This is not a sign of a hardware failure but of a failed FPD upgrade, which can be performed if there is enough disk space as per the guidance above.

What to collect if there is still an issue

If after this explanation there is still an issue that can't be explained by this write-up and a support case is in order, make sure you collect at minimum the following information from the router when filing that case:

- show install active sum

!gives an overview of the running sw version and applied SMU's

- show log (or syslog output from the time of the problem)

!dumps the logging buffer for correlation with other events

- admin show platform

!provides details of the linecards and types installed in the system

- admin show hw fpd location all

!clarity on whether the right fpd versions are run on the FPGA's

- admin show diagnostic result location 0/0/CPU0 detail

!more details on the diagnostic results that are run inline on the linecard.

- admin sh diagnostic trace engineer location 0/0/cpu0

!extensive detail on the diag traces

- show controllers fabric fia ltrace location 0/0/cpu0

!Ltrace for the fabric interface asic

- show controllers fabric fia stats location 0/0/CPU0

!Fabric interface asic statistics (FIA)

- show controllers fabric fia stats location 0/RSP1/CPU0

!FIA stats from the RSP (inject side)

- sh controllers fabric fia errors egress location 0/0/cpu0

!Reported errors from the FIA

- sh controllers fabric fia link-status location 0/0/cpu0

!Link status details from the FIA

- show controllers fabric fia bridge sync-status location 0/0/cpu0

!Link status between the FIA and the Bridge FPGA

- show pfm location 0/0/cpu0

!Platform Fault Manager details of the linecard

- sh controller np ports all loc 0/0/cpu0

!Port mapping from the NPU to the physical port numbering.

- show pfm location all

!All faults reported in the system

- show tech fabric

!most relevant traces and captures from the fabric. Copy out the result file as it shows on console when the command is finished.

- admin sh diagnostic trace error loc 0/rsp0/cpu0

- admin sh diagnostic trace error loc 0/rsp1/cpu0

!Diagnostic traces from both RSP's.

Replace the RSP number with 0 or 1 depending on which RSP is the active one.

Replace 0/0/CPU0 with the location of the LC that is having the issue.

Note: a "!" sign was deliberately added in front of each command explanation to ease copy-pasting to a router console.

Xander Thuijs CCIE #6775

Principal Engineer ASR9000

Hi Xander,

There is one thing I would like to double check. In the doc, you mentioned that "Note that older releases report the logical slot instead of the more common external name". By the logical slot, do you mean the "L" column or the "P" column?

Since L has 0, 1, 2, 3 RSP0 ...

P has only numbers.

I would like to confirm whether you are trying to say physical slot.

Here is the example on 9010:

pfm_node_rp[357]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[229489]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (7, 0)

I think here (slot, NP) failed: (7, 0) refers to NP0 on 0/5/CPU0, right?

#0 --- #0

#1 --- #1

#2 --- #2

#3 --- #3

RSP0 -- #4

RSP1 -- #5

#4 --- #6

#5 --- #7

#6 --- #8

#7 --- #9

Thanks,

Bill

Correct Bill! logical slot 7 is physical slot 5!

regards

xander

Hi Xander,

This weekend I saw these logs while an L2 loop was in progress.

RP/0/RSP1/CPU0:XXXXXXX:pfm_node_rp[282]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Set|online_diag_rsp[229493]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (1, 2) (1, 3)

RP/0/RSP1/CPU0:XXXXXXX : pfm_node_rp[282]: %PLATFORM-DIAGS-3-PUNT_FABRIC_DATA_PATH_FAILED : Clear|online_diag_rsp[229493]|System Punt/Fabric/data Path Test(0x2000004)|failure threshold is 3, (slot, NP) failed: (1, 2) (1, 3)

The loop was generated by a 3400 connected to 2 ASRs that form part of an L3 ring providing L2VPN services. STP was disabled on the 3400 and switchport backup was configured the wrong way.

Just saying that in these scenarios you can also see these logs.

thanks for sharing that! good detail.

Somewhat unexpected, I would say. Loops do create an unnecessary load on the NPU, consuming pps cycles that could otherwise go to the NP DIAG frames, but since the DIAG frames are top priority I didn't expect this to happen, to be honest.

PS: I'm sure you know, but just in case, you can potentially "mitigate" loops with MAC security to prevent a MAC from being learnt on another port.

cheers!

xander

Hello Xander,

Last night one of the LCs crashed with the following messages:

LC/0/0/CPU0:Jan 15 03:56:40.775 : prm_server_tr[292]: %PLATFORM-NP-4-FAULT : prm_process_parity_tm_cluster: 1 Unrecoverable error(s) found. Reset NP4 now

LC/0/0/CPU0:Jan 15 03:56:42.858 : ipv4_mfwd_partner[230]: %ROUTING-IPV4_MFWD-4-FROM_MRIB_UPDATE : MFIB couldn't process update from MRIB : failed to create route 0xe0000000:(10.120.3.77,239.192.4.40/32) - 'asr9k-ipmcast' detected the 'warning' condition 'Platform MFIB: Platform Lib not ready; NP Not running'

LC/0/0/CPU0:Jan 15 03:56:52.185 : pfm_node_lc[282]: %PLATFORM-NP-0-TMB_CLUSTER_PARITY : Set|prm_server_tr[155731]|Network Processor Unit(0x1008004)|TMb cluster parity interrupt. Indicates an internal SRAM problem in TMb cluster, NP=4 memId=6, mask=0x2000000, PMask=0x2000000 SRAMLine=166 Rec=1 Rewr=1

LC/0/0/CPU0:Jan 15 03:56:52.187 : pfm_node_lc[282]: %PLATFORM-PFM-0-CARD_RESET_REQ : pfm_dev_sm_perform_recovery_action, Card reset requested by: Process ID: 155731 (prm_server_tr), Fault Sev: 0, Target node: 0/0/CPU0, CompId: 0x1f, Device Handle: 0x1008004, CondID: 1008, Fault Reason: TMb cluster parity interrupt. Indicates an internal SRAM problem in TMb cluster, NP=4 memId=6, mask=0x2000000, PMask=0x2000000 SRAMLine=166 Rec=1 Rewr=1

RP/0/RSP1/CPU0:Jan 15 03:56:52.380 : shelfmgr[394]: %PLATFORM-SHELFMGR-6-NODE_KERNEL_DUMP_EVENT : Node 0/0/CPU0 indicates it is doing a kernel dump.

RP/0/RSP1/CPU0:Jan 15 03:56:52.381 : shelfmgr[394]: %PLATFORM-SHELFMGR-6-NODE_STATE_CHANGE : 0/0/CPU0 A9K-8T-L state:IOS XR FAILURE

RP/0/RSP1/CPU0:Jan 15 03:56:52.384 : ospf[1011]: %ROUTING-OSPF-5-ADJCHG : Process 8000, Nbr 10.100.96.204 on TenGigE0/0/0/1 in area 0 from FULL to DOWN, Neighbor Down: BFD session down, vrf default vrfid 0x60000000

RP/0/RSP1/CPU0:Jan 15 03:56:52.397 : shelfmgr[394]: %PLATFORM-SHELFMGR-6-NODE_STATE_CHANGE : 0/0/CPU0 A9K-8T-L state:BRINGDOWN

I could not find anything related on the internet and the available cisco tools didn't help.

I just want to ask you if you have seen this message before. If not, then I will open a case.

IOS-XR is 4.3.1 (old, I know)

hey smail! long time! good to hear from you!

ah this: PLATFORM-NP-4-FAULT : prm_process_parity_tm_cluster: 1 Unrecoverable error(s) found.

It means that NP number 4 on the linecard in slot 0 incurred a memory parity error in the traffic manager portion of the NPU (the portion that handles queuing and scheduling). It could not correct that error and therefore decided to reinit and crash.

Generally, with memory parity errors we advise to note the first occurrence, monitor the card, and replace it if this happens again.

If you are uncomfortable "waiting" until a next event, you could decide to replace it now, but many times parity errors are transient and caused by what we used to call "cosmic radiation", which is merely a collection of uncommon, unlikely events such as a power spike or drop, or other intangible events.

cheers

xander

Yes, long time :).

Your reply is exactly what I need. We have similar issues on the C7600, where LCs do a one-time-only restart.

On ASR it's not that often. Maybe 2-3 cases.

I will tell this to our customer and if it happens again we will request RMA.

Many thanks Xander!!
