BFD Support on Cisco ASR 9000

Introduction

In both Enterprise and Service Provider networks, the convergence of business-critical applications onto a common IP infrastructure is becoming more common. Given the criticality of the data, these networks are typically constructed with a high degree of redundancy. While such redundancy is desirable, its effectiveness is dependent upon the ability of individual network devices to quickly detect failures and reroute traffic to an alternate path.

This detection is now typically accomplished via hardware detection mechanisms. However, the signals from these mechanisms are not always conveyed directly to the upper protocol layers. When the hardware mechanisms do not exist (e.g., Ethernet) or when the signaling does not reach the upper protocol layers, the protocols must rely on their own, much slower, failure detection mechanisms. The detection times in existing protocols are typically one second or more, and sometimes much longer. For some applications, this is too long to be useful.

The process of network convergence can be broken up into a set of discrete events:

- Failure detection: the speed with which a device on the network can detect and react to a failure of one of its own components, or the failure of a component in a routing protocol peer.

- Information dissemination: the speed with which the failure in the previous stage can be communicated to other devices in the network.

- Repair: the speed with which all devices on the network, having been notified of the failure, can calculate an alternate path through which data can flow.

An improvement in any one of these stages provides an improvement in overall convergence.

The first of these stages, failure detection, can be the most problematic and inconsistent:

- Different routing protocols use varying methods and timers to detect the loss of a routing adjacency with a peer.

- Link-layer failure detection times can vary widely depending on the physical media and the Layer 2 encapsulation used.

- Intervening devices (e.g., an Ethernet switch) can hide link-layer failures from routing protocol peers.

Packet over SONET (POS) tends to have the best failure detection time amongst the different Layer 1/2 media choices. It can typically detect and react to media or protocol failures in ~50 milliseconds. This has become the benchmark against which other protocols are measured.

Bidirectional Forwarding Detection (BFD) provides rapid failure detection between forwarding engines while maintaining low overhead. It also provides a single, standardized method of link, device and protocol failure detection at any protocol layer and over any media. BFD is a simple, lightweight hello protocol targeted at detection times as short as a few tens of milliseconds.

A secondary benefit of BFD, in addition to fast failure detection, is that it provides network administrators with a consistent method of detecting failures. Thus, one availability methodology could be used, irrespective of the Interior Gateway Protocol (IGP) or the topology of the target network. This eases network profiling and planning, because re-convergence time should be consistent and predictable. BFD function is defined in RFC 5880.

The fundamental difference between BFD hellos and protocol hellos (OSPF, RSVP, etc.) is that BFD adjacencies do not go down on control-plane restarts (e.g., an RSP failover), since the goal of BFD is to detect only forwarding-plane failures. BFD is essentially a control-plane protocol designed to detect forwarding-path failures.

BFD is a simple hello protocol that, in many respects, is similar to the detection components of well-known routing protocols. It is a UDP-based Layer 3 protocol that provides very fast, routing-protocol-independent detection of Layer 3 next-hop failures.

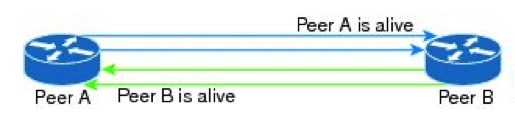

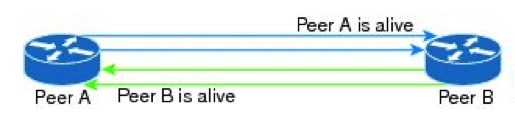

A pair of systems transmits BFD packets periodically over each path between the two systems, and if a system stops receiving BFD packets for long enough, some component in that particular bidirectional path to the neighboring system is assumed to have failed.

BFD runs on top of any data-link protocol that is in use between two adjacent systems.

BFD has two versions: Version 0 and Version 1. The ASR 9000 Router based on Cisco IOS XR software supports BFD Version 1.

BFD has two operating modes that may be selected, as well as an additional function that can be used in combination with the two modes.

The primary mode is known as Asynchronous mode. In this mode, the systems periodically send BFD Control packets to one another, and if a number of those packets in a row are not received by the other system, the session is declared to be down.

The second mode is known as Demand mode. In this mode, it is assumed that a system has an independent way of verifying that it has connectivity to the other system. Once a BFD session is established, such a system may ask the other system to stop sending BFD Control packets, except when the system feels the need to verify connectivity explicitly, in which case a short sequence of BFD Control packets is exchanged, and then the far system quiesces. Demand mode may operate independently in each direction, or simultaneously.

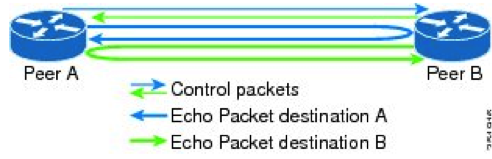

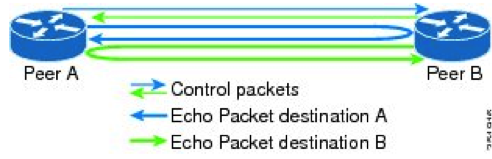

An adjunct to both modes is the Echo function. When the Echo function is active, a stream of BFD Echo packets is transmitted in such a way as to have the other system loop them back through its forwarding path. If a number of packets of the echoed data stream are not received, the session is declared to be down. The Echo function may be used with either Asynchronous or Demand mode. Since the Echo function is handling the task of detection, the rate of periodic transmission of Control packets may be reduced (in the case of Asynchronous mode) or eliminated completely (in the case of Demand mode).

Cisco ASR 9000 supports only Asynchronous mode, and has the Echo function enabled by default.

BFD Async Mode

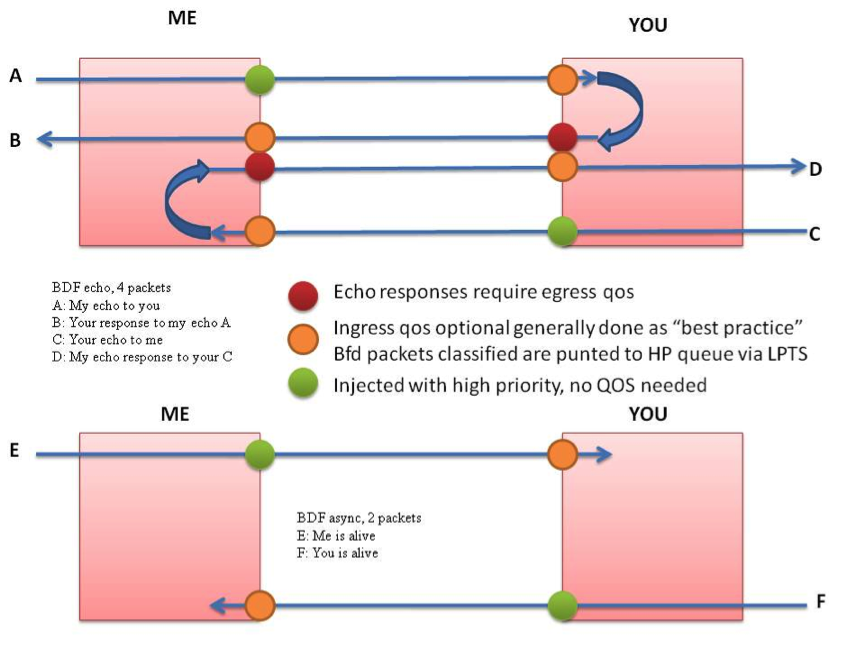

A node running BFD in Async mode periodically transmits BFD Control packets. BFD Control packets are encapsulated in UDP, with source port 49152 and destination port 3784. The source address of the IP packet is the local interface address, and the destination address is the remote interface address.

BFD Control packets are unidirectional, i.e., they normally do not require a response. Their purpose is to confirm that the BFD control plane on the remote peer is operational.
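The sketch below packs the mandatory 24-byte section of a BFD Control packet, following the field layout in RFC 5880, section 4.1. The function and constant names are hypothetical. The sample field values come from the "Transmitted parameters" of the `show bfd session detail` output later in this document. The sketch builds only the BFD payload: UDP/IP encapsulation (destination port 3784) is left to the socket layer, and the Poll, Final, Authentication and Demand bits are always left clear.

```python
import struct

BFD_VERSION = 1
STATE_UP = 3   # Sta field value for Up (RFC 5880)
C_BIT = 0x08   # Control Plane Independent flag inside byte 1


def build_bfd_control(state: int, detect_mult: int, my_discr: int,
                      your_discr: int, desired_min_tx_us: int,
                      required_min_rx_us: int, required_echo_rx_us: int,
                      diag: int = 0, c_bit: bool = True) -> bytes:
    """Pack the mandatory section of a BFD Control packet (no authentication)."""
    # Byte 0: Version (3 bits) | Diag (5 bits)
    byte0 = (BFD_VERSION << 5) | (diag & 0x1F)
    # Byte 1: Sta (2 bits) | P | F | C | A | D | M; only Sta and C are set here
    byte1 = ((state & 0x3) << 6) | (C_BIT if c_bit else 0)
    length = 24  # mandatory section only
    # All intervals are carried in microseconds as unsigned 32-bit integers
    return struct.pack("!BBBBIIIII", byte0, byte1, detect_mult, length,
                       my_discr, your_discr, desired_min_tx_us,
                       required_min_rx_us, required_echo_rx_us)


# 2 s async intervals, 1 ms echo rx capability, multiplier 3
pkt = build_bfd_control(STATE_UP, 3, 2148073474, 2148335618,
                        2_000_000, 2_000_000, 1_000)
print(pkt.hex())
```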

If a predetermined number of intervals passes without receiving a BFD Control packet from the peer, the local node declares the BFD session down.

BFD Echo Mode

The Echo mode is designed to test only the forwarding path and not the host stack.

BFD Echo packets are transmitted over UDP with source and destination port 3785. By default, the source address of a BFD Echo packet is the router ID, and the destination address is the local interface address.

BFD Echo packets do not require a response. The destination IP address is chosen to force the remote peer to route the original packet back over the same link, without punting it to the BFD control plane. Thus, the BFD control plane on peer B is not even aware of BFD Echo packets originating from peer A.

If a predetermined number of intervals passes without the local node receiving its own BFD Echo packets back, it declares the BFD session down.

The source address of a BFD Echo packet is selected in the following order:

- check for an echo source address configuration for the interface

- check for a global echo source address configuration

- use the RID as the echo source address

- use the interface address
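The precedence above amounts to a "first configured value wins" lookup. The minimal Python sketch below expresses it. The function and parameter names are hypothetical. On the router, the inputs come from configuration, for example `echo ipv4 source` under the top-level `bfd` configuration mode.

```python
from typing import Optional


def select_echo_source(interface_echo_source: Optional[str],
                       global_echo_source: Optional[str],
                       router_id: Optional[str],
                       interface_address: str) -> str:
    """Pick the BFD Echo source address using the precedence listed above."""
    for candidate in (interface_echo_source, global_echo_source, router_id):
        if candidate:  # first configured/available value wins
            return candidate
    return interface_address  # last resort


print(select_echo_source(None, "10.0.0.21", "192.0.2.1", "10.0.9.2"))
```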

Default source/destination IP address and UDP port for BFD Async and Echo packets:

| | BFD Async | BFD Echo |
|---|---|---|
| Source IP address | Local interface | Router ID |
| Destination IP address | Remote interface | Local interface |
| Source UDP port | 49152 | 3785 |
| Destination UDP port | 3784 | 3785 |

BFD Session

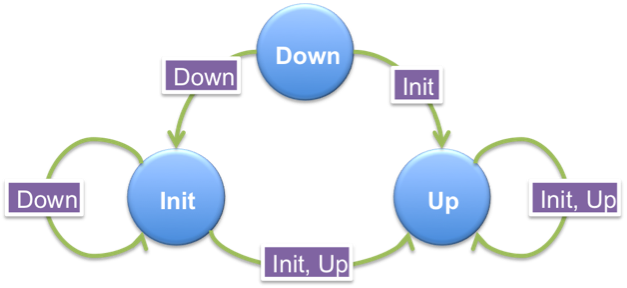

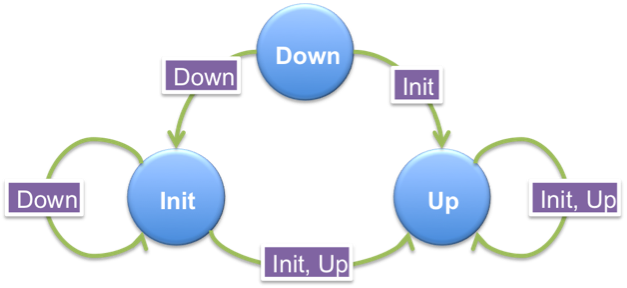

The BFD session parameters are negotiated between the BFD peers in a three-way handshake. Each peer communicates its session state in the State (Sta) field of the BFD Control packet. That received state, combined with the local session state, drives the state machine. When the local peer wants to bring the session up, it sends a Control packet with the Down state. Figure 3 shows the transition from the Down state to the Up state. Circles represent the state of the local peer, and rectangles the received state of the remote peer.

If this peer receives a BFD Control packet from the remote system with a Down state, it advances the session to Init state. If it receives a BFD Control packet from the remote system with an Init state, it moves the session to Up state.
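The transitions described above can be sketched as a small state machine. The sketch follows the reception rules in RFC 5880, section 6.8.6. The enum and function names are hypothetical. Detection-timer expiry, which also forces a session Down, is deliberately not modeled here.

```python
from enum import IntEnum


class BfdState(IntEnum):
    ADMIN_DOWN = 0
    DOWN = 1
    INIT = 2
    UP = 3


def next_state(local: BfdState, received: BfdState) -> BfdState:
    """Local session state after receiving a Control packet carrying `received`."""
    if local == BfdState.ADMIN_DOWN:
        return local  # administratively down: received packets are ignored
    if received == BfdState.ADMIN_DOWN:
        return BfdState.DOWN  # peer went admin down
    if local == BfdState.DOWN:
        if received == BfdState.DOWN:
            return BfdState.INIT  # peer is alive but not yet synchronized
        if received == BfdState.INIT:
            return BfdState.UP  # peer has already heard us
    elif local == BfdState.INIT:
        if received in (BfdState.INIT, BfdState.UP):
            return BfdState.UP
    elif local == BfdState.UP:
        if received == BfdState.DOWN:
            return BfdState.DOWN  # neighbor signaled session down
    return local


# Three-way handshake between peers A and B, both starting Down
a = b = BfdState.DOWN
a = next_state(a, b)  # A hears B's Down -> Init
b = next_state(b, a)  # B hears A's Init -> Up
a = next_state(a, b)  # A hears B's Up -> Up
print(a.name, b.name)
```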

The BFD peers continuously negotiate their desired transmit and receive rates, and the peer that reports the slower rate determines the transmission rate. To avoid self-synchronization with other systems on the same subnet, each transmit interval is reduced on a per-packet basis by a random value of 0 to 25% (jitter). Thus, the average interval between packets is roughly 12.5% less than the negotiated interval.
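The following Python sketch simulates this jitter rule and shows where the 12.5% figure comes from. A uniform reduction of 0 to 25% averages 12.5%. The function name and the fixed random seed are illustrative choices. For comparison, the async Tx statistics in the `show bfd session detail` output later in this document (2 s interval: min 1663 ms, max 1993 ms) fall inside the 1500 to 2000 ms range this rule allows.

```python
import random


def jittered_interval_ms(negotiated_ms: float, rng: random.Random) -> float:
    """One transmit interval, reduced by a random 0-25% of the negotiated value."""
    return negotiated_ms * (1.0 - rng.uniform(0.0, 0.25))


rng = random.Random(42)  # fixed seed so the run is repeatable
samples = [jittered_interval_ms(2000, rng) for _ in range(100_000)]
avg = sum(samples) / len(samples)
print(f"min={min(samples):.0f} ms max={max(samples):.0f} ms avg={avg:.0f} ms")
```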

In a BFD session, each system calculates its session down detection time independently. In each direction, this calculation is based on the value of Detect Mult received from the remote system and the negotiated transmit interval.

$$
\mathrm{Negotiated\_Transmit\_Interval} = \max(\text{local required minimum receive interval},\ \text{last received desired minimum transmit interval})
$$

$$
\text{Detection Time} = \mathrm{Detection\_Multiplier}_{\text{remote}} \times \mathrm{Negotiated\_Transmit\_Interval}
$$

If the Detection Time is passed without receiving a control packet, the session is declared to be down.
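The two formulas translate directly into code. In this sketch the helper names are hypothetical, and intervals are in milliseconds for readability (the protocol itself carries microseconds). The second example reproduces the hardware-offload output later in this document. There, the peer advertises a desired tx interval of 150 ms while this router requires 300 ms, which gives 900 ms (300 ms * 3).

```python
def negotiated_tx_interval_ms(local_required_min_rx_ms: int,
                              remote_desired_min_tx_ms: int) -> int:
    """Interval at which Control packets from the remote peer are expected."""
    return max(local_required_min_rx_ms, remote_desired_min_tx_ms)


def detection_time_ms(remote_detect_mult: int,
                      local_required_min_rx_ms: int,
                      remote_desired_min_tx_ms: int) -> int:
    """Time without a received Control packet before the session goes down."""
    return remote_detect_mult * negotiated_tx_interval_ms(
        local_required_min_rx_ms, remote_desired_min_tx_ms)


print(detection_time_ms(3, 2000, 2000))  # 2 s async, multiplier 3
print(detection_time_ms(3, 300, 150))    # slower side (300 ms) wins
```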

“Static BFD” refers to a BFD session that protects a single static route. “Dynamic BFD” refers to a BFD session between dynamic routing protocol peers, by which all of the routes having this peer as the next-hop are protected by BFD.

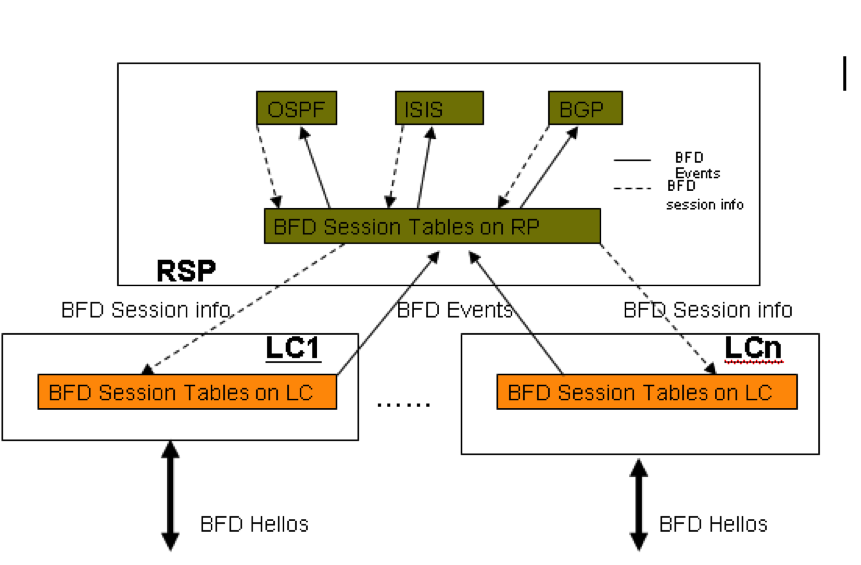

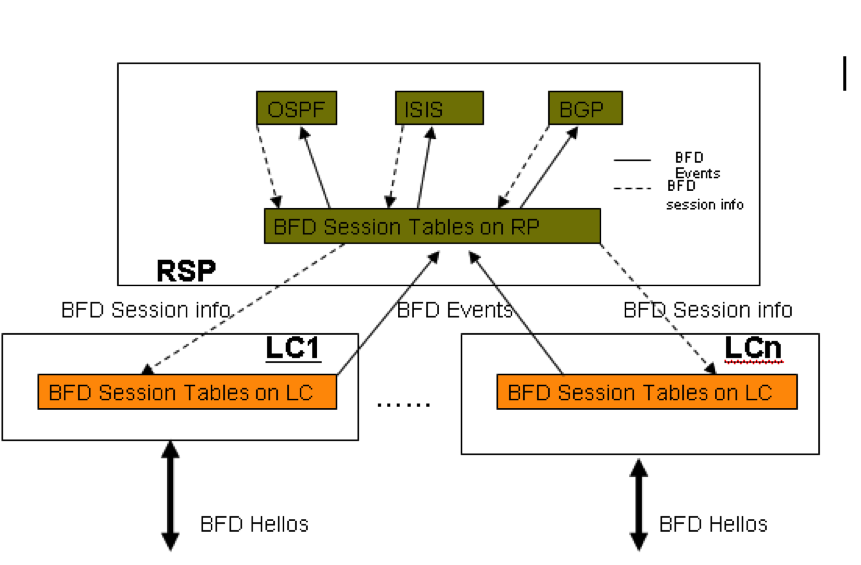

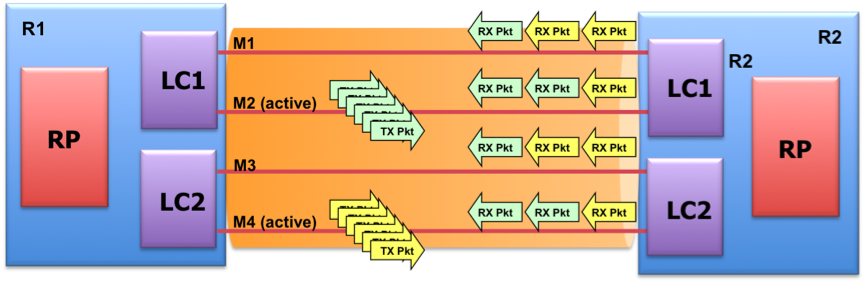

In Cisco ASR 9000, BFD functionality is implemented in a distributed architecture split between the RSP and the line card. A BFD server process runs on the RSP, and a BFD agent process runs on the line card. The architecture is as shown in this figure:

- BFD process on RSP:
  - Interact with BFD clients, pass BFD session creation/deletion requests to the LC BFD processes upon configuration requests, and maintain an adjacency database to track all BFD sessions on the router.
  - Notify BFD clients (OSPF, IS-IS or BGP) of interface configuration changes and session down events upon receiving BFD event notifications from the LC.
  - Service show and configuration commands.

- BFD process on LC:
  - Create and delete BFD sessions based on commands from the RSP BFD process.
  - Maintain a database of all BFD sessions on the LC. There is one BFD adjacency instance per session, on the LC that owns the session.
  - Notify the RSP BFD process of any transmit or receive failure or session down detection.
  - Maintain and update BFD session transmit and receive counters.
  - Run the BFD FSM for all BFD sessions on the LC, transmit and receive BFD Control packets, and update and check BFD session detection timers.

BFD communication between the RSP and the LC uses reliable IPC messaging. This messaging is designed to deliver messages with minimal delay and to survive an RSP switchover. In particular:

- LC BFD event messages to the RSP are sent on a reserved IPC port.

- On the RSP, the BFD event port is opened as a reliable IPC port, and BFD events sent from the LC are received in the RSP IPC receive-interrupt context. On the standby RSP, the BFD event port is also opened and ready to receive BFD events, in case a switchover makes it the active RSP.

- During an RSP switchover, BFD events from the LC to the RSP are not sent. Instead, they are registered with switchover callbacks and sent after the RSP switchover completes.

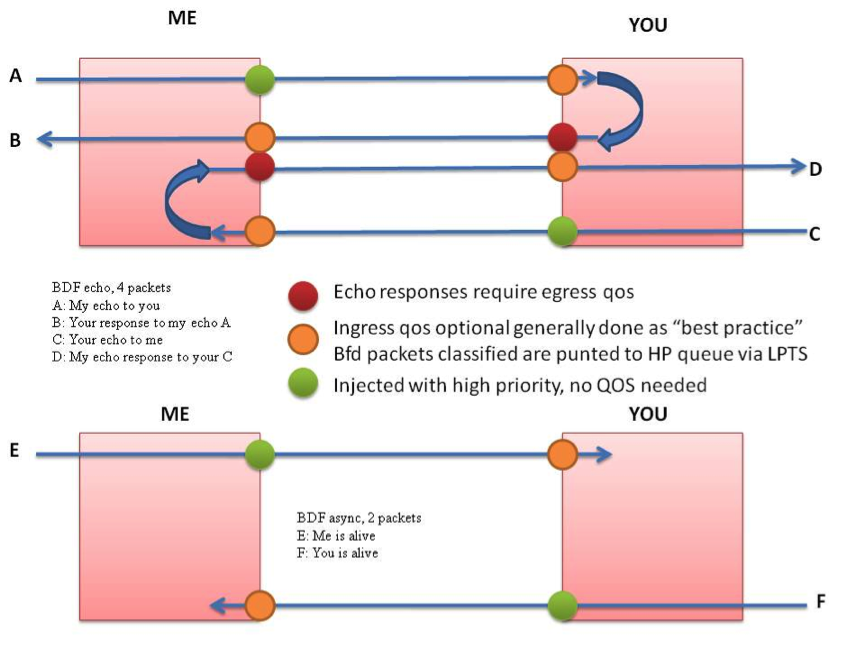

BFD packets are punted to the line card CPU and follow the slow path. Injected and punted BFD packets receive preferential treatment on all line card architectures. Specifically, the following BFD packets receive preferential treatment on all ASR 9000 line cards:

- BFD control packets

- BFD Echo packets originated on the LC

- Response to BFD Echo packets originated on the LC

An egress QoS policy map must be considered to ensure preferential treatment of BFD Echo packets originated by the BFD peer.

Configuring BFD

NOTE: In IOS XR, an application must “terminate” the BFD session. Unlike IOS, direct peering without any application using the session is not allowed.

The simplest application is static routing (router static):

```
router static
 address-family ipv4 unicast
  172.16.1.1/32 10.1.1.1 bfd fast-detect minimum-interval 500 multiplier 3
 !
!
interface GigabitEthernet0/0/0/0
 ipv4 address 10.1.1.2 255.255.255.0
```

The following is a special case in IOS-XR, supported after release 4.2.3 (through CSCua18314): a static BFD session to a /32 prefix in a directly attached network.

```
interface GigabitEthernet0/1/0/22
 ipv4 address 10.52.61.1 255.255.255.0
!
router static
 address-family ipv4 unicast
  10.52.61.2/32 GigabitEthernet0/1/0/22 10.52.61.2 bfd fast-detect
```

Corresponding configuration on the IOS device:

```
interface GigabitEthernet4/17
 ip address 10.52.61.2 255.255.255.0
 bfd interval 500 min_rx 500 multiplier 3
 bfd neighbor ipv4 10.52.61.1
```

IS-IS application sample:

```
router isis lab
 net 49.0111.0111.0111.0111.00
 address-family ipv4 unicast
  redistribute connected
 !
 interface GigabitEthernet0/2/0/1
  bfd minimum-interval 500
  bfd multiplier 3
  bfd fast-detect ipv4
```

The top-level BFD configuration mode is used to configure global BFD parameters (dampening, global echo source address, etc.) and interface-specific BFD parameters (enabling or disabling echo mode per interface, setting the echo source address, etc.):

```
bfd
 interface GigabitEthernet0/2/0/1
  echo disable
 !
 echo ipv4 source 10.0.0.21
 dampening secondary-wait 7500
 dampening initial-wait 3000
 dampening maximum-wait 180000
```

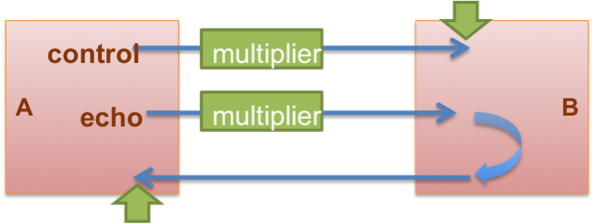

In IOS-XR, the BFD Control and Echo packet intervals are not configured independently. If Echo mode is enabled, the user configures the BFD Echo interval, and the BFD Control interval is set to 2 seconds. If Echo mode is disabled, the user configures the BFD Control interval. The same multiplier is applied to both intervals to calculate the ‘dead’ timer.

If Echo mode is enabled, the remote peer uses the locally configured multiplier to calculate the detection time for BFD Control packets sent by the local peer. For locally generated Echo packets, the local peer also uses its locally configured multiplier to calculate the detection time.
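The Python sketch below models these IOS XR timer rules. The helper name is hypothetical. With Echo disabled, it assumes that both peers are configured with the same interval, so async negotiation does not change the value. With the 500 ms / multiplier 3 IS-IS configuration used in this document, it reproduces the `1500ms(500ms*3)` Echo and `6s(2s*3)` Async detection times shown by `show bfd session`.

```python
from typing import Dict, Optional

# Control (async) interval IOS XR uses while Echo mode is enabled (see text above)
XR_ASYNC_INTERVAL_WITH_ECHO_MS = 2000


def xr_detection_times_ms(configured_interval_ms: int, local_mult: int,
                          peer_mult: int,
                          echo_enabled: bool) -> Dict[str, Optional[int]]:
    """Detection times as seen by the local router (hypothetical helper)."""
    if echo_enabled:
        return {
            # Our own Echo packets: configured interval x local multiplier
            "echo": configured_interval_ms * local_mult,
            # Peer's Control packets: fixed 2 s x multiplier the peer advertises
            "async": XR_ASYNC_INTERVAL_WITH_ECHO_MS * peer_mult,
        }
    # Echo disabled: the configured interval applies to Control packets
    # (assumes both peers configured the same interval)
    return {"echo": None, "async": configured_interval_ms * peer_mult}


print(xr_detection_times_ms(500, 3, 3, echo_enabled=True))
print(xr_detection_times_ms(500, 3, 3, echo_enabled=False))
```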

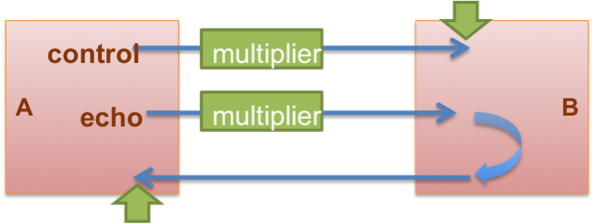

BFD Multiplier

(the thick green arrow indicates where the multiplier is applied)

BFD Echo packets originated by the BFD peer are looped back through the forwarding path rather than punted to the LC CPU. As a result, they do not benefit from the preferential treatment given to injected and punted BFD packets, and an egress QoS policy map is needed to prioritize them. Sample configuration:

```
ipv4 access-list BFD
 5 permit udp any any eq 3785
!
class-map match-any BFDCLASS
 match access-group ipv4 BFD
!
policy-map OUT
 class BFDCLASS
  priority level 1
  police rate 10 kbps
 !
!
interface GigabitEthernet0/2/0/1
 service-policy output OUT
```

If this still does not prevent BFD flaps during severe congestion, create a parent policy that shapes to 100% with this policy as its child, and apply the parent policy to the interface.

HSRP has to learn the physical addresses of the Active and Standby routers from the HSRP control packets. When one of these routers changes state, a different (third) router could take over as Standby. HSRP cannot assume that the same router will come back (configured the same way) after the failure. It must wait for the new Standby to be elected and learn its physical address before it can recreate the BFD session to that address. As a consequence, the BFD session may flap when the HSRP state changes.

NOTE: BFD scale limits differ per line card architecture and may change from one IOS-XR release to another.

BFD scale is determined by the rate of BFD packets that are allowed to be punted to the LC CPU. It also depends on the pps limit and the maximum session limit per LC. The exec command “show bfd summary” can be used to observe the limits on the router:

```
RP/0/RSP0/CPU0:ASR9K#show bfd summary
Node       All PPS usage      MP PPS usage       Session number
           %   Used   Max     %   Used   Max     Total  MP  Max
---------- ------------------ ------------------ ------------------
0/2/CPU0   0   2      16000   0   0      16000   1      0   8000
0/4/CPU0   0   0      9600    0   0      9600    0      0   4000
0/6/CPU0   0   0      16000   0   0      16000   0      0   8000
```

The “All PPS usage” Max column indicates the PPS limit for all BFD sessions on a given slot. The “Session number” Max column indicates the maximum number of BFD sessions on that slot.

For example, to calculate how many BFD sessions can be hosted on slot 0/2 or 0/6 from the above sample, assuming a 150 ms Echo interval:

- BFD pps rate = 16000 pps

- Echo packet interval = 150 ms = 0.15 s

- Maximum sessions = 16000 * 0.15 = 2400 sessions
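The same arithmetic appears below as a small Python helper that also applies the per-slot session cap, because whichever limit is reached first bounds the slot. The helper names are hypothetical. The sketch follows the simplified one-packet-per-session-per-interval model used above and does not try to model how the platform counts Echo transmit versus receive packets.

```python
def max_sessions_by_pps(pps_limit: int, interval_ms: int) -> int:
    """Sessions that fit in a pps budget if each sends one packet per interval."""
    return pps_limit * interval_ms // 1000


def max_sessions(pps_limit: int, interval_ms: int, session_limit: int) -> int:
    """Bound the slot by whichever limit is reached first."""
    return min(max_sessions_by_pps(pps_limit, interval_ms), session_limit)


# Slot 0/2 or 0/6 from the summary above: 16000 pps, 8000 sessions max
print(max_sessions(16000, 150, 8000))
# Slot 0/4: 9600 pps, 4000 sessions max
print(max_sessions(9600, 150, 4000))
```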

Received BFD packets are policed by the NP. To see the rate of received BFD packets and to check for any drops, run the “show controllers np counters <np|all> location <location>” command and look for BFD counters.

Monitoring BFD

The essential commands for BFD monitoring are:

```
show bfd session [detail]
show bfd counters packet
```

Sample outputs for the following configuration:

```
interface GigabitEthernet0/2/0/1
 ipv4 address 10.0.9.2 255.255.255.0
!
router isis lab
 net 49.0111.0111.0111.0111.00
 address-family ipv4 unicast
  redistribute connected
 !
 interface GigabitEthernet0/2/0/1
  bfd minimum-interval 500
  bfd multiplier 3
  bfd fast-detect ipv4
```

```
RP/0/RSP0/CPU0:ASR9K-1#show bfd session
Interface       Dest Addr       Local det time(int*mult)          State
                                Echo             Async
--------------- --------------- ---------------- ---------------- ----------
Gi0/2/0/1       10.0.9.1        1500ms(500ms*3)  6s(2s*3)         UP
```

```
RP/0/RSP0/CPU0:WEST-PE-ASR9K-1#show bfd counters packet
GigabitEthernet0/2/0/1   Recv    Xmit             Recv     Xmit
Async:                   44048   44182    Echo:   175384   175384
```

```
RP/0/RSP0/CPU0:ASR9K-1#show bfd session detail
I/f: GigabitEthernet0/2/0/1, Location: 0/2/CPU0
Dest: 10.0.9.1
Src: 10.0.9.2
 State: UP for 0d:21h:4m:54s, number of times UP: 1
 Session type: PR/V4/SH
Received parameters:
 Version: 1, desired tx interval: 2 s, required rx interval: 2 s
 Required echo rx interval: 1 ms, multiplier: 3, diag: None
 My discr: 2148335618, your discr: 2148073474, state UP, D/F/P/C/A: 0/0/0/1/0
Transmitted parameters:
 Version: 1, desired tx interval: 2 s, required rx interval: 2 s
 Required echo rx interval: 1 ms, multiplier: 3, diag: None
 My discr: 2148073474, your discr: 2148335618, state UP, D/F/P/C/A: 0/0/0/1/0
Timer Values:
 Local negotiated async tx interval: 2 s
 Remote negotiated async tx interval: 2 s
 Desired echo tx interval: 500 ms, local negotiated echo tx interval: 500 ms
 Echo detection time: 1500 ms(500 ms*3), async detection time: 6 s(2 s*3)
Local Stats:
 Intervals between async packets:
   Tx: Number of intervals=100, min=1663 ms, max=1993 ms, avg=1834 ms
       Last packet transmitted 876 ms ago
   Rx: Number of intervals=100, min=1665 ms, max=2 s, avg=1832 ms
       Last packet received 272 ms ago
 Intervals between echo packets:
   Tx: Number of intervals=50, min=25 s, max=25 s, avg=25 s
       Last packet transmitted 142 ms ago
   Rx: Number of intervals=50, min=25 s, max=25 s, avg=25 s
       Last packet received 137 ms ago
 Latency of echo packets (time between tx and rx):
   Number of packets: 100, min=1 ms, max=5 ms, avg=3 ms
Session owner information:
                            Desired               Adjusted
  Client               Interval   Multiplier Interval   Multiplier
  -------------------- --------------------- ---------------------
  isis-escalation      500 ms     3          2 s        3
```

Explanation of the relevant fields in the show bfd session detail command output:

| Field | Description |
|---|---|
| Dest: 10.0.9.1 | The destination IP address in the BFD async Control packet |
| Src: 10.0.9.2 | The source IP address used in the BFD async Control packet |
| State: UP | The current state of the BFD session on the local router |
| for 0d:21h:4m:54s | How long this BFD session has remained in its current state |
| number of times UP: 1 | The number of times this session has been UP since the last flap or establishment |
| Received parameters: | The parameters received from the remote router, explained below |
| Version: 1 | BFD protocol version running on the remote router |
| desired tx interval: 2 s | The async packet interval the remote router uses for sending |
| required rx interval: 2 s | The async packet interval the remote router expects from this router |
| Required echo rx interval: 1 ms | The echo interval that the remote router can support |
| multiplier: 3 | The multiplier that the remote router chooses to use |
| diag: None | The reason given by the remote router if the BFD session is not in UP state on its side |
| My discr: 2148335618 | The discriminator used by the remote router for this BFD session |
| your discr: 2148073474 | The discriminator of this router, as understood by the remote router, for this BFD session |
| state UP | The state of the BFD session on the remote router |
| D/F/P/C/A: 0/0/0/1/0 | Bit settings in the async Control packet sent by the remote router. D: set if Demand mode is used by the remote router. F: Final bit; the remote router is acknowledging a BFD parameter change sent by the local router. P: Poll bit; the remote router has changed some BFD parameters and waits for an acknowledgment from the local router. C: set if BFD on the remote router is independent of the control plane. A: set if authentication is used by the remote router for this session. |
| Transmitted parameters: | The parameters transmitted by the local router, explained below |
| Version: 1 | BFD protocol version running on the local router |
| desired tx interval: 2 s | The async packet interval the local router uses for sending |
| required rx interval: 2 s | The async packet interval this router expects from the remote router |
| Required echo rx interval: 1 ms | The echo interval that the local router can support |
| multiplier: 3 | The multiplier that the local router chooses to use |
| diag: None | The reason given by the local router if the BFD session is not in UP state |
| My discr: 2148073474 | The discriminator used by the local router for this BFD session |
| your discr: 2148335618 | The discriminator of the remote router, as understood by the local router, for this BFD session |
| state UP | The state of the BFD session on the local router |
| D/F/P/C/A: 0/0/0/1/0 | Bit settings in the async Control packet sent by the local router. D: set if Demand mode is used by the local router. F: Final bit; the local router is acknowledging a BFD parameter change sent by the remote router. P: Poll bit; the local router is signaling a BFD parameter change to the remote router. C: set if BFD on the local router is independent of the control plane; in the IOS XR implementation, this bit is set. A: set if authentication is used by the local router for this session. |
| Timer Values: | Details of the timer values exchanged between the local and remote routers |
| Local negotiated async tx interval: 2 s | The async interval used by the local router for transmission after negotiation |
| Remote negotiated async tx interval: 2 s | The async interval used by the local router for reception after negotiation |
| Desired echo tx interval: 500 ms | The configured (or default) echo interval used by the local router |
| local negotiated echo tx interval: 500 ms | The higher of the local value (configured minimum-interval or default) and the remote router's published echo receive capability. In IOS-XR, the published interval is 1 ms. Between (500 ms, 1 ms), 500 ms is chosen. |
| Echo detection time: 1500 ms (500 ms*3) | The product of the local negotiated echo tx interval and the locally configured multiplier |
| async detection time: 6 s (2 s*3) | The product of the remote negotiated async tx interval and the multiplier sent by the remote router |

State and Diag

In traces and show commands, the state and diagnostic are sometimes given as enumeration values. The tables below decode each State and Diag number into its meaning.

STATE:

| State | Explanation |
|---|---|
| State = 0 | Admin down (configured) |
| State = 1 | DOWN (loss reported) |
| State = 2 | INIT (starting up) |
| State = 3 | UP (BFD session running fine) |

DIAG:

| Diag | Explanation |
|---|---|
| Diag = 0 | DIAG_NONE/reserved |
| Diag = 1 | Control expired |
| Diag = 2 | Echo failed |
| Diag = 3 | Neighbor down |
| Diag = 4 | Forward plane reset |
| Diag = 5 | Path down |
| Diag = 6 | Concatenated path down |
| Diag = 7 | Admin down |
| Diag = 8 | Reverse concatenated path down |
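The sketch below decodes the values in these tables, together with the D/F/P/C/A flags shown by `show bfd session detail`, from the first four bytes of a BFD Control packet. It uses the RFC 5880 bit layout. The names are hypothetical, and the flags are printed in the same D/F/P/C/A order as the show output.

```python
import struct
from enum import IntEnum


class State(IntEnum):
    ADMIN_DOWN = 0
    DOWN = 1
    INIT = 2
    UP = 3


DIAG_NAMES = {
    0: "None", 1: "Control expired", 2: "Echo failed", 3: "Neighbor down",
    4: "Forward plane reset", 5: "Path down", 6: "Concatenated path down",
    7: "Admin down", 8: "Reverse concatenated path down",
}

# Bit positions inside byte 1 (Sta | P | F | C | A | D | M), listed in D/F/P/C/A order
FLAG_SHIFTS = (1, 4, 5, 3, 2)


def decode_bfd_header(packet: bytes) -> dict:
    """Decode version, diag, state, flags and multiplier from a Control packet."""
    byte0, byte1, detect_mult, length = struct.unpack("!BBBB", packet[:4])
    diag = byte0 & 0x1F
    return {
        "version": byte0 >> 5,
        "diag": DIAG_NAMES.get(diag, f"unknown ({diag})"),
        "state": State(byte1 >> 6).name,
        "D/F/P/C/A": "/".join(str((byte1 >> s) & 1) for s in FLAG_SHIFTS),
        "multiplier": detect_mult,
        "length": length,
    }


print(decode_bfd_header(bytes([0x20, 0xC8, 3, 24])))
```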

For SNMP monitoring, use the CISCO-IETF-BFD-MIB OIDs.

Multi-Hop And Multi-Path BFD

A multi-hop BFD session runs between two endpoints across one or more Layer 3 forwarding devices. A key aspect of a multi-hop BFD session is that the return path may be asymmetric, so by definition it is also a multi-path session.

A BFD session between virtual interfaces (BVI, BE, PWHE, GRE tunnel) of directly connected peers is also a multi-path session, because routing may be asymmetric.

In ASR 9000 nV Edge solutions (also known as cluster), BFD multi-path sessions are supported starting with XR release 5.2.2.

On ASR 9000, a BFD instance may only run on a line card CPU. The user must manually designate the line card CPU on which BFD sessions will run. If multiple line card CPUs are designated, multi-hop BFD sessions are load-balanced between them. There is no mechanism to bind a specific BFD session to a specific LC CPU. The line card hosting the BFD session does not have to be the one that owns the transport interfaces through which BFD packets are exchanged.

BFD Async packets pertaining to a single BFD session are always processed by the same LC CPU. The discriminator field in the BFD packet header is used to determine the destination LC CPU for received BFD packets.

BFD packets are always originated with TTL=255. To limit the number of hops that a BFD packet may traverse, a TTL threshold can be applied to received BFD packets. If the TTL is less than the configured threshold, the packet is dropped. The BFD TTL threshold is configurable only in global BFD configuration mode.
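Because every packet starts at TTL 255 and each routed hop decrements it by one, the threshold directly caps the hop count. The minimal Python sketch below shows this check. The function names are hypothetical. With the `multihop ttl-drop-threshold 240` used in the sample configuration that follows, packets that crossed up to 15 hops are accepted.

```python
BFD_TX_TTL = 255  # BFD packets are always originated with TTL=255


def accept_multihop_packet(received_ttl: int, ttl_drop_threshold: int) -> bool:
    """Drop received BFD packets whose TTL is below the configured threshold."""
    return received_ttl >= ttl_drop_threshold


def max_hops_allowed(ttl_drop_threshold: int) -> int:
    """Routed hops a packet can cross and still pass the threshold check."""
    return BFD_TX_TTL - ttl_drop_threshold


print(max_hops_allowed(240))
print(accept_multihop_packet(BFD_TX_TTL - 16, 240))  # 16 hops: dropped
```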

BFD Echo mode is not supported in multi-hop BFD.

Sample multi-hop BFD configuration for BGP:

```
bfd
 multihop ttl-drop-threshold 240        !<-- optional
 multipath include location 0/1/CPU0    !<-- mandatory
!
router bgp 100
 address-family ipv4 unicast
 !
 address-family vpnv4 unicast
 !
 neighbor 10.0.0.22
  remote-as 100
  bfd fast-detect
  bfd multiplier 3
  bfd minimum-interval 1000
```

Since echo mode is not supported, configured timers are applied to the async mode.

With multi-path BFD sessions, the system cannot predict the exact physical interface on which BFD packets to or from the peer will be transmitted or received, because routing can change. If preferential treatment of MP BFD packets is required, the user must explicitly ensure such treatment along the path. This includes QoS on the possible input and output interfaces at the source and destination of MP BFD packets. Multi-hop BFD packets use UDP destination port 4784, as matched in the sample below:

```
ipv4 access-list BFD
 5 permit udp any any eq 4784
!
class-map match-any BFDCLASS
 match access-group ipv4 BFD
!
policy-map BFD
 class BFDCLASS
  priority level 1
  police rate 10 kbps
 !
!
interface GigabitEthernet0/2/0/1
 service-policy output BFD
 service-policy input BFD
```

```
RP/0/RSP0/CPU0:ASR9K#show bfd session detail
Mon Apr 15 16:27:28.991 UTC
Location: 0/1/CPU0                               ! <------ LC CPU hosting the BFD session
Dest: 10.0.0.22
Src: 10.0.0.52
 VRF Name/ID: default/0x60000000
 State: UP for 0d:0h:45m:14s, number of times UP: 1
 Session type: SW/V4/MH                          ! <------ Multi-Hop
<...>
Timer Values:
 Local negotiated async tx interval: 1 s         ! <------ min interval
 Remote negotiated async tx interval: 1 s
 async detection time: 3 s(1 s*3)                ! <------ multiplier
<...>
MP download state: BFD_MP_DOWNLOAD_ACK           ! <------ Multi-Path
 State change time: Apr 15 15:40:16.123
Session owner information:
                            Desired               Adjusted
  Client               Interval   Multiplier Interval   Multiplier
  -------------------- --------------------- ---------------------
  bgp-default          1 s        3          1 s        3
```

BFD Hardware Offload

Introduction

When BFD Hardware Offload is enabled, async packets are no longer generated and received by the LC CPU but by the Network Processor (NP), which increases BFD scale. BFD HW Offload is supported on Enhanced Ethernet line cards (aka "Typhoon"), on single-hop IPv4/IPv6 sessions over physical interfaces and sub-interfaces, and on MPLS-TP LSP single-path sessions.

Configuring BFD HW Offload

Configure "hw-module bfd-hw-offload enable" in the admin configuration mode and reload the line card for the command to take effect.

```
RP/0/RSP1/CPU0:9K(admin-config)#hw-module bfd-hw-offload enable location 0/0/CPU0
RP/0/RSP1/CPU0:9K(admin-config)#commit
RP/0/RSP1/CPU0:9K(admin-config)#end
RP/0/RSP1/CPU0:9K#hw-module location 0/0/CPU0 reload
WARNING: This will take the requested node out of service.
Do you wish to continue?[confirm(y/n)]y
```

Scale and Restrictions

Refer to the IOS XR release 5.3 routing configuration guide.

Monitoring BFD HW Offload

```
RP/0/RSP1/CPU0:9K#sh bfd session detail
I/f: TenGigE0/0/0/12.1, Location: 0/0/CPU0
Dest: 201.1.1.1
Src: 201.1.1.2
 State: UP for 0d:0h:0m:14s, number of times UP: 1
 Session type: PR/V4/SH
Received parameters:
 Version: 1, desired tx interval: 150 ms, required rx interval: 150 ms
 Required echo rx interval: 1 ms, multiplier: 3, diag: None
 My discr: 2148335618, your discr: 2147549186, state UP, D/F/P/C/A: 0/0/0/1/0
Transmitted parameters:
 Version: 1, desired tx interval: 300 ms, required rx interval: 300 ms
 Required echo rx interval: 0 us, multiplier: 3, diag: None
 My discr: 2147549186, your discr: 2148335618, state UP, D/F/P/C/A: 0/1/0/1/0
Timer Values:
 Local negotiated async tx interval: 300 ms
 Remote negotiated async tx interval: 300 ms
 Desired echo tx interval: 0 s, local negotiated echo tx interval: 0 us
 Echo detection time: 0 us(0 us*3), async detection time: 900 ms(300 ms*3)
Local Stats:
 Intervals between async packets:
   Tx: Number of intervals=2, min=5 ms, max=3469 ms, avg=1737 ms
       Last packet transmitted 14 s ago
   Rx: Number of intervals=6, min=5 ms, max=1865 ms, avg=444 ms
       Last packet received 13 s ago
 Intervals between echo packets:
   Tx: Number of intervals=0, min=0 s, max=0 s, avg=0 s
       Last packet transmitted 0 s ago
   Rx: Number of intervals=0, min=0 s, max=0 s, avg=0 s
       Last packet received 0 s ago
 Latency of echo packets (time between tx and rx):
   Number of packets: 0, min=0 us, max=0 us, avg=0 us
Session owner information:
                            Desired               Adjusted
  Client               Interval   Multiplier Interval   Multiplier
  -------------------- --------------------- ---------------------
  isis-Escalation      150 ms     3          300 ms     3
H/W Offload Info:
 H/W Offload capability : Y, Hosted NPU     : 0/0/CPU0/NPU4
 Async Offloaded        : Y, Echo Offloaded : N
 Async rx/tx            : 7/3
Platform Info:
 NPU ID: 4
 Async RTC ID        : 1           Echo RTC ID        : 0
 Async Feature Mask  : 0x8         Echo Feature Mask  : 0x0
 Async Session ID    : 0x0         Echo Session ID    : 0x0
 Async Tx Key        : 0x801       Echo Tx Key        : 0x0
 Async Tx Stats addr : 0x205ee800  Echo Tx Stats addr : 0x0
 Async Rx Stats addr : 0x215ee800  Echo Rx Stats addr : 0x0
```

BFD Over Bundle Member Interfaces

Implementation of various BFD flavours over bundle interfaces in IOS XR was carried out in 3 phases:

- IPv4 BFD session over individual bundle sub-interfaces. This feature was called “BFD over VLAN over bundle”.

- IOS XR release 4.0.1 and later: the “BFD Over Bundle (BoB)” feature was introduced.

- IOS XR release 4.3.0 and later: full support for IPv4 and IPv6 BFD sessions over bundle interfaces and sub-interfaces. To distinguish it from the BoB feature, this implementation is called BLB, and its sessions are often referred to as native BFD sessions over bundle interfaces and/or sub-interfaces. BFD multipath must be enabled for any of these BLB session types to work.

“BFD Over VLAN Over Bundle” refers to a static or dynamic IPv4 BFD session over a Bundle-Ether sub-interface. No other flavours of BFD over bundle interfaces or sub-interfaces were supported.

From a configuration and monitoring perspective, this feature was identical to BFD over a physical interface or sub-interface.

Example of a “BFD over VLAN over bundle” session protecting a static route:

interface Bundle-Ether1.10

ipv4 address 10.0.10.1 255.255.255.0

encapsulation dot1q 10

!

router static

address-family ipv4 unicast

172.16.1.1/32 Bundle-Ether1.10 10.0.10.2 bfd fast-detect minimum-interval 500 multiplier 3

Example of a “BFD over VLAN over bundle” session with an IGP peer:

interface Bundle-Ether1.20

ipv4 address 10.0.20.1 255.255.255.0

encapsulation dot1q 20

!

router isis lab

net 49.0111.0111.0111.0111.00

address-family ipv4 unicast

redistribute connected

!

interface Bundle-Ether1.20

bfd minimum-interval 500

bfd multiplier 3

bfd fast-detect ipv4

address-family ipv4 unicast

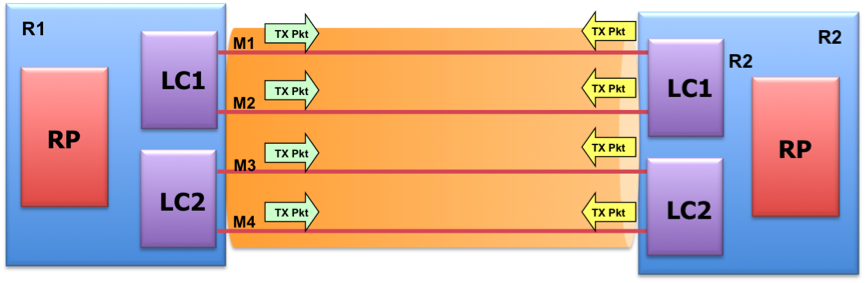

With the “BFD Over Bundle (BoB)” feature, an IPv4 BFD session runs over every active bundle member.

Bundlemgr considers BFD states, in addition to the existing L1/L2 states, to determine member link usability. Bundle member state is a function of:

- L1 state (physical link)

- L2 state (LACP)

- L3 state (BFD)

The BFD agent still runs on the line card. BFD states of bundle member links are consolidated on the RP.

Member links must be connected back-to-back, without any L2 switches in between.

Async Mode

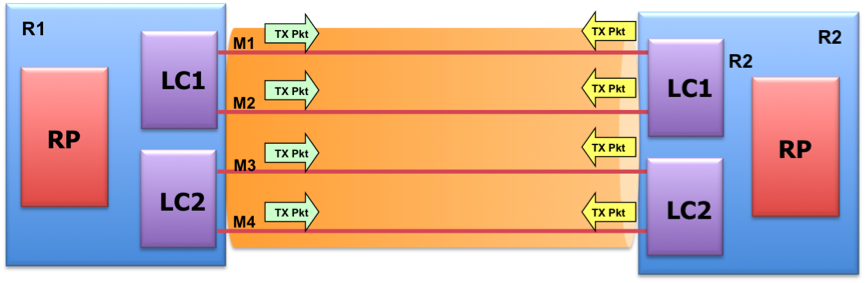

BoB supports both async mode and echo mode. Async mode is very similar to BFD over a physical interface, because async packets run over each individual bundle member.

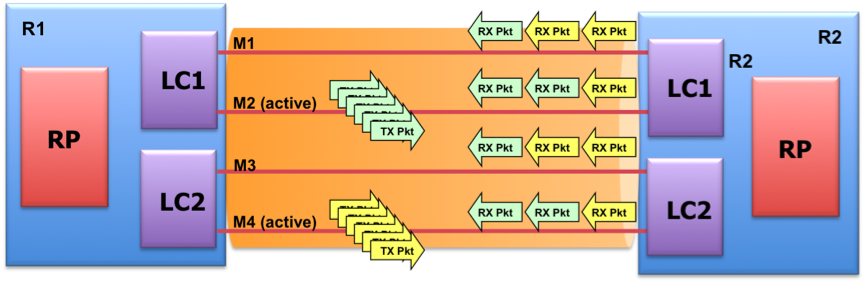

Echo Mode

Echo mode is also very similar to BFD over a physical interface, with some special considerations for the TX/RX path. One member link per line card is designated as the Echo Active member. The Echo Active member sends enough Echo packets at once to ensure that at least one is returned over each active member link. The BoB Echo packet header contains a discriminator field identifying the originating rack and slot, and a sequence number that the peer uses to hash the Echo packets onto the return path. Returned Echo packets are punted to the LC CPU only if they were originated by the local Echo Active member.
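The Python model below illustrates the coverage requirement for the Echo Active member. It keeps adding sequence numbers to a burst until the peer's return-path hash has picked every active member at least once. It also encodes the punt rule for returned Echo packets. The source says only that the sequence number drives the peer's hashing. The hash function (`crc_hash`), the "shortest run of consecutive sequence numbers" strategy, and the rack/slot strings are all hypothetical. They are not the actual IOS XR algorithm.

```python
import zlib
from typing import Callable, List, Sequence


def return_member(seq: int, members: Sequence[str], hash_fn: Callable[[int], int]) -> str:
    # ASSUMPTION: hash_fn stands in for the peer's load-balancing hash, which the source does not describe.
    return members[hash_fn(seq) % len(members)]


def echo_burst(members: Sequence[str], hash_fn: Callable[[int], int], max_seq: int = 4096) -> List[int]:
    """Shortest run of sequence numbers 0..n-1 that gets at least one echo back on every member."""
    pending = set(members)
    burst: List[int] = []
    for seq in range(max_seq):
        burst.append(seq)
        pending.discard(return_member(seq, members, hash_fn))
        if not pending:
            return burst
    raise RuntimeError("hash never selected some member links")


def should_punt(origin_rack_slot: str, local_echo_active_rack_slot: str) -> bool:
    # Only echoes originated by the local Echo Active member are punted to the LC CPU.
    return origin_rack_slot == local_echo_active_rack_slot


def crc_hash(seq: int) -> int:
    # Hypothetical hash, used only for this illustration.
    return zlib.crc32(seq.to_bytes(4, "big"))


if __name__ == "__main__":
    members = ["Gi0/0/0/2", "Gi0/0/0/3", "Gi0/1/0/2", "Gi0/1/0/3"]
    burst = echo_burst(members, crc_hash)
    print(f"{len(burst)} echo packets reach all {len(members)} members")
    print(should_punt("0/0", "0/0"), should_punt("0/1", "0/0"))
```

A real hash can return several packets on the same member before it reaches the last one. This is why the burst is usually longer than the number of members.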

By default, BoB sessions on bundle member links run in echo mode.

L2 Encapsulation

Ethernet frames carrying BoB packets are sent with the CDP destination MAC address 0100.0CCC.CCCC.

On POS bundles, only the cHDLC encapsulation is supported.

BFD Over Bundle (BoB) Configuration

BFD is enabled or disabled per member link under the bundle interface configuration submode.

interface Bundle-Ether1

bfd address-family ipv4 timers start 60

bfd address-family ipv4 timers nbr-unconfig 3600

bfd address-family ipv4 multiplier 3

bfd address-family ipv4 destination 1.2.3.4

bfd address-family ipv4 fast-detect

bfd address-family ipv4 minimum-interval 300

BFD does not need to be configured in the IGP. When BFD fails on a member link, the bundle manager is notified immediately. If the number of active members falls below the configured minimum, the bundle is brought down immediately, which also brings down the IGP peering.

The fast-detect and destination commands are mandatory for the session to come up.

The nbr-unconfig option allows BFD to be disabled per member link without interrupting the bundle interface.
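The member-state rule (L1, L2 and L3 must all be up) and the minimum-active-members behaviour described above can be sketched in Python as follows. This is a simplified model under the assumption that a member is counted as active only when all three states are up. The minimum corresponds to the bundle's configured minimum active links. `BundleMember` and `bundle_is_up` are illustrative names and are not part of Bundlemgr.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class BundleMember:
    name: str
    l1_up: bool  # physical link state
    l2_up: bool  # LACP state
    l3_up: bool  # BoB (BFD) session state on this member

    def usable(self) -> bool:
        # A member is usable only if all three layers report it as up.
        return self.l1_up and self.l2_up and self.l3_up


def bundle_is_up(members: Iterable[BundleMember], minimum_active: int) -> bool:
    """The bundle stays up while the number of usable members meets the configured minimum."""
    active = sum(1 for m in members if m.usable())
    return active >= minimum_active


if __name__ == "__main__":
    members = [
        BundleMember("Gi0/0/0/2", True, True, True),
        BundleMember("Gi0/0/0/3", True, True, False),  # BFD down on this member only
    ]
    print(bundle_is_up(members, minimum_active=1))  # one usable member remains
    print(bundle_is_up(members, minimum_active=2))  # below minimum: bundle and IGP peering go down
```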

Async mode can be forced by disabling echo mode at the following configuration levels:

- Global BFD configuration → applies to all sessions.

- Bundle interface configuration → applies to all member link sessions of this bundle interface.

- Member link configuration → applies only to that member link session.

The Echo packet source IP address is configurable with the same granularity.

Monitoring BFD Over Bundle (BoB)

Monitoring BoB Via CLI

In the example output below, BFD runs on Bundle-Ether1, whose members are Gi0/0/0/2 and Gi0/0/0/3:

RP/0/RP0/CPU0:ASR9K#show bfd session

Interface Dest Addr Local det time(int*mult) State

Echo Async

----------- --------------- ---------------- ---------------- -----

Gi0/0/0/2 192.168.1.2 1350ms(450ms*3) 450ms(150ms*3) UP

Gi0/0/0/3 192.168.1.2 1350ms(450ms*3) 450ms(150ms*3) UP

BE1 192.168.1.2 n/a n/a UP

RP/0/RP0/CPU0:ASR9K#sh bfd count packet private detail location 0/0/CPU0

Bundle-Ether1 Recv Rx Invalid Xmit Delta

Async: 0 0 0

Echo: 0 0 0 0

GigabitEthernet0/0/0/2 Recv Rx Invalid Xmit Delta

Async: 1533 0 1528

Echo: (14976 ) 0 29952 14976

GigabitEthernet0/0/0/3 Recv Rx Invalid Xmit Delta

Async: 1533 0 1529

Echo: (14976 ) 0 0 14976
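The Echo counters above appear to match the Echo Active member behaviour described earlier. Only Gi0/0/0/2 transmits echo packets, and its Xmit count (29952) equals the echo packets received across both members (2 × 14976). This is a reading of the sample output, not documented behaviour. The Python check below assumes that the first Echo column is Recv and the third is Xmit.

```python
from typing import Dict, List, Tuple

# Echo counters transcribed from the sample output.
# ASSUMPTION: first Echo column = Recv, third = Xmit.
echo_counters: Dict[str, Dict[str, int]] = {
    "GigabitEthernet0/0/0/2": {"recv": 14976, "xmit": 29952},
    "GigabitEthernet0/0/0/3": {"recv": 14976, "xmit": 0},
}


def echo_transmitters(counters: Dict[str, Dict[str, int]]) -> List[str]:
    """Members that originated echo packets (candidates for the Echo Active member)."""
    return [name for name, c in counters.items() if c["xmit"] > 0]


def echo_totals(counters: Dict[str, Dict[str, int]]) -> Tuple[int, int]:
    """Bundle-wide totals: echo packets sent vs. echo packets returned on any member."""
    sent = sum(c["xmit"] for c in counters.values())
    returned = sum(c["recv"] for c in counters.values())
    return sent, returned


if __name__ == "__main__":
    print(echo_transmitters(echo_counters))
    print(echo_totals(echo_counters))
```

Because only one member per line card transmits, comparing per-member Xmit and Recv values is misleading for BoB. Bundle-wide totals are the more meaningful comparison.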

Monitoring BoB Via SNMP

Because BFD session states are consolidated on the RP, SNMP get works only for the basic CISCO-IETF-BFD-MIB OIDs. The OIDs that can be used to monitor BoB are:

- ciscoBfdSessIndex

- ciscoBfdSessDiscriminator

- ciscoBfdSessState

- ciscoBfdSessAddrType

- ciscoBfdSessAddr

- ciscoBfdSessVersionNumber

- ciscoBfdSessType

- ciscoBfdSessInterface

- ciscoBfdSessUpTime

- ciscoBfdSessPerfLastSessDownTime

- ciscoBfdSessPerfSessUpCount

IOS XR release 4.3.0 introduced full support for IPv4 and IPv6 BFD sessions over bundle interfaces and sub-interfaces. To distinguish it from the BoB feature, this implementation is called BLB, and its sessions are often referred to as native BFD sessions over bundle interfaces and/or sub-interfaces.

BLB operation relies on BFD multipath. Hence, echo mode is not supported.

Configuring BLB

BFD multipath must be enabled for BLB sessions to work. All other aspects of the configuration are identical to the “BFD over VLAN over bundle” configuration.

bfd

multipath include location 0/1/CPU0

!

interface Bundle-Ether1.10

ipv4 address 10.52.61.1 255.255.255.0

encapsulation dot1q 10

!

router static

address-family ipv4 unicast

10.52.61.2/32 Bundle-Ether1.10 10.52.61.2 bfd fast-detect minimum-interval 900 multiplier 3

BLB QoS Considerations

As with any multipath BFD session, egress BFD packets are not injected into the high-priority egress queue. To have BFD packets treated as high priority, create a QoS policy that does so and apply it to the bundle (sub-)interface.

Monitoring BLB

RP/0/RSP0/CPU0:ASR9K#sh bfd session interface bundle-e1.10 detail

Fri Apr 19 12:19:34.330 UTC

I/f: Bundle-Ether1.10, Location: 0/1/CPU0 ! <------ LC CPU hosting the BFD session

Dest: 10.52.61.2

Src: 10.52.61.1

State: UP for 0d:2h:6m:58s, number of times UP: 1

Session type: SW/V4/SH/BL ! <------ BLB session

< ... >

Timer Values:

Local negotiated async tx interval: 900 ms ! <--- configured timers applied to async

Remote negotiated async tx interval: 900 ms

Desired echo tx interval: 0 s, local negotiated echo tx interval: 0 s

Echo detection time: 0 s(0 s*3), async detection time: 2700 ms(900 ms*3)

< ... >

MP download state: BFD_MP_DOWNLOAD_ACK ! <------ Multi-Path

State change time: Apr 19 10:12:32.593

Session owner information:

Desired Adjusted

Client Interval Multiplier Interval Multiplier

-------------------- --------------------- ---------------------

ipv4_static 900 ms 3 900 ms 3

BoB-BLB coexistence is enabled using the “bundle coexistence bob-blb logical” global BFD configuration command:

bfd

multipath include location 0/1/CPU0

bundle coexistence bob-blb logical

Due to scaling considerations, only IPv4 BFD sessions run over member links. IPv6 application sessions inherit their state from the IPv4 BFD session of the same bundle.

When the BoB feature is enabled, “BFD over VLAN over bundle” sessions (that is, sessions on bundle sub-interfaces) of all address families also inherit their state from the IPv4 BFD session of the corresponding bundle interface.

BFD session state by session type and IOS XR release:

| BFD session type | Up to 4.2.3, with BoB | Up to 4.2.3, without BoB | 4.3.0 and later, with BoB | 4.3.0 and later, without BoB | 4.3.0 and later, BoB-BLB coexistence |
|---|---|---|---|---|---|
| IPv4 over bundle interface | Active | Dormant | Active | Native | Active |
| IPv4 over bundle sub-interface | Inherits state | "BFD Over VLAN Over Bundle" | Inherits state | Native | Native |
| IPv6 over bundle interface | Inherits state | Dormant | Inherits state | Native | Native |
| IPv6 over bundle sub-interface | Inherits state | Dormant | Inherits state | Native | Native |

Troubleshooting BFD

Was the session created by the application?

- Use the "show bfd all session" command to check whether the session has been created. If the session does not appear in the BFD show output, check that the adjacency for which the BFD session is being created exists in the application.

Can you ping the destination address for which the BFD session is to be created?

- The BFD session will only be created by an application for which the application adjacency/neighbour exists.

Has BFD been configured on both sides?

Has BFD been configured with the correct destination address?

- Using non-default IP addresses with BFD requires special attention. The source IP address being used for BFD sessions can be verified with "show bfd all session detail" command.

If the session is visible in the BFD show commands but is not UP, and the destination IP address of the BFD peer responds to ping:

- Execute the "show bfd all session detail" command and check whether the "Local Stats" section shows packets being transmitted and received.

- If not, check which NP corresponds to the interface in question and whether that NP is receiving BFD packets by running:

sh controllers np ports all location <location>

sh controllers np counters <np> location <location> | inc "Rate|BFD"

sh uidb data location <location> <interface> ingress

sh uidb data location <location> <interface> ing-extension

If the MAX-PPS LIMIT is reached, the BFD session is kept in ADMIN_DOWN state. In this case, the message BFD-6-SESSION_NO_RESOURCES is reported in the syslog, for example:

RP/0/RP1/CPU0:Mar 13 09:51:06.556 : bfd[143]: %L2-BFD-6-SESSION_NO_RESOURCES : No resources for session to neighbor 10.10.10.2 on interface TenGigE0/1/0/2, interval=300 ms
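Each async session transmits 1000 / interval_ms control packets per second. The 300 ms session in the message above therefore adds about 3.3 pps. The Python sketch below totals that load for a set of sessions and compares it with a budget. `limit_pps` is a hypothetical placeholder, because the source does not state the actual MAX-PPS value. The sketch also ignores echo packets, which add to the real load when echo mode is active.

```python
from typing import Iterable, Tuple


def session_pps(interval_ms: float) -> float:
    """Async control packets per second sent by one session at the given interval."""
    return 1000.0 / interval_ms


def fits_pps_budget(intervals_ms: Iterable[float], limit_pps: float) -> Tuple[bool, float]:
    """Return (within_budget, total_pps) for a set of async sessions.

    ASSUMPTION: limit_pps is a placeholder, not the platform's real MAX-PPS value.
    """
    total = sum(session_pps(i) for i in intervals_ms)
    return total <= limit_pps, total


if __name__ == "__main__":
    # Existing 100 ms and 50 ms sessions, plus one new 300 ms session like the one in the log.
    ok, total = fits_pps_budget([100, 50, 300], limit_pps=30)
    print(ok, round(total, 2))
```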

Is the allowed policer rate for BFD packets reached?

- Run “show controllers np counters <np|all> location <location>” and look for BFD packet and drop counters.

Does this BFD session require BFD multi-path?

- Look for %L2-BFD-6-SESSION_NO_RESOURCES message in the syslog

- Run 'show bfd session detail | inc "^(Location|MP)"' to confirm that a line card CPU was designated to host the BFD session.

Determine if there really is a connectivity issue that BFD has rightly discovered.

- Run a continuous ping to the local interface address (used by local echo and remote async packets) and to the remote interface address (used by remote echo and local async packets) with a timeout of 1 s, and repeat the test.

Determine if the application adjacency has flapped (for example after RSP failover), which would cause the BFD session to be removed by the application.

- Examine the "show logging | inc bfd_agent" output on the neighbour to confirm whether the session was removed by the application.

Determine if the outgoing interface for a next-hop IP address changed (for example after APS link switch), which would cause the BFD session to be removed by the application, followed by creation of the BFD session with new outgoing interface.

- Examine the "show logging | inc bfd_agent" output on the neighbour to confirm whether the session was removed by the application.

If the log says "Nbor signalled down", check the logs on the peer to verify whether the application removed the session or the routing adjacency flapped.

If BFD is flapping continuously because of echo failure, it can be due to:

- The link is over-subscribed. The sender places async and echo packets in the high-priority queue. On the remote router that loops the echo packets back, however, they are treated as regular data packets, so they can be dropped under over-subscription. The workaround is to add a QoS policy at each end that sends UDP port 3785 (BFD echo) packets to the high-priority queue.

- uRPF is configured on the interface. Self-ping must succeed, and the remote router ID must be reachable.

- There can be timing issues where the remote router ID is installed in CEF after the BFD session comes up (for example, because of SPF delay in IGPs). This also causes flaps due to echo packet drops. A good practice is to configure BFD dampening, which delays BFD session initialisation:

bfd

dampening secondary-wait 7500

dampening initial-wait 3000

dampening maximum-wait 180000

- BFD flaps due to echo failure, but there is no echo packet loss. This is usually caused by delayed echo packets, and one possible cause is traffic shaping. To check whether echo packets are delayed or dropped, run "show bfd counters packet private location <location>". If the tx and rx counts differ, echo packets are being dropped. If the tx and rx counts are the same but the "Rx Invalid" count is non-zero, echo packets are being delayed or are coming back on the wrong interface.

- BFD flaps after a configuration change related to the BFD session. Clear the stale data by executing "clear bfd persistent-data unassociated location <location>".
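The echo-counter rules above can be expressed as a small Python decision function. It is a minimal sketch that compares deltas between two snapshots of the same counters rather than absolute values. The snapshot approach and the optional `tolerance` for packets still in flight are assumptions added for illustration and are not part of the documented procedure. With the default `tolerance=0`, it follows the rules exactly as stated. For BoB, compare bundle-wide totals, because only the Echo Active member transmits.

```python
from dataclasses import dataclass

DROPS = "echo packet drops"
DELAYED = "echo packets delayed or returning on the wrong interface"
CLEAN = "no echo loss or delay visible"


@dataclass
class EchoCounters:
    tx: int
    rx: int
    rx_invalid: int


def diagnose_echo(before: EchoCounters, after: EchoCounters, tolerance: int = 0) -> str:
    """Classify echo behaviour between two counter snapshots using the rules above."""
    sent = after.tx - before.tx
    returned = after.rx - before.rx
    invalid = after.rx_invalid - before.rx_invalid
    if abs(sent - returned) > tolerance:  # tx and rx differ: packets are lost
        return DROPS
    if invalid > 0:  # counts match, but some echoes arrived late or on another interface
        return DELAYED
    return CLEAN


if __name__ == "__main__":
    t0 = EchoCounters(tx=1000, rx=1000, rx_invalid=0)
    print(diagnose_echo(t0, EchoCounters(tx=2000, rx=1990, rx_invalid=0)))
    print(diagnose_echo(t0, EchoCounters(tx=2000, rx=2000, rx_invalid=5)))
```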

BFD flaps after a "clear route".

- This is expected behaviour. The "clear route" command clears all FIB entries in software and hardware, so BFD is expected to fail during the resulting forwarding outage. This can be confirmed with continuous local and remote pings. See TRG 17921. A similar issue occurs when fib_mgr crashes, which can disrupt forwarding due to shared memory (shmem) initialisation.

Collect the following on both peers terminating the BFD session:

show tech-support routing bfd file

show logging

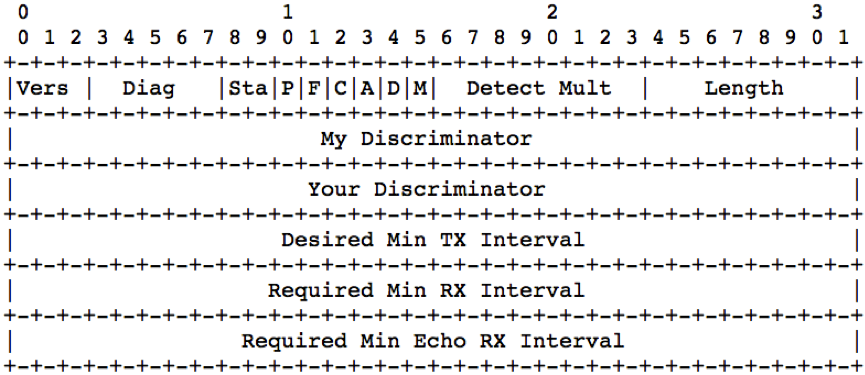

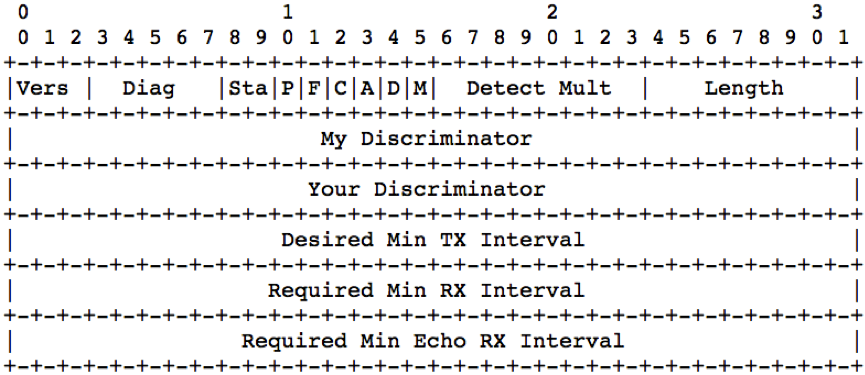

BFD has two versions: Version 0 and Version 1. In Version 1, the two "Sta" (state) bits replace the "H" bit of Version 0.

- Vers: Version of the BFD control header. XR runs version 1 by default but can also run version 0.

- Diag: A diagnostic code specifying the local system's reason for the last change in session state, for example detection time expired or echo failed.

- Sta: The current BFD session state as seen by the transmitting system.

- P: Poll bit, if set, the transmitting system is requesting verification of connectivity, or of a parameter change, and is expecting a packet with the Final (F) bit in reply.

- F: Final bit, if set, the transmitting system is responding to a received BFD Control packet that had the Poll (P) bit set.

- C: Set if BFD is independent of the control plane. In the Cisco implementation this bit is set.

- A: Set if authentication is used. Authentication is not supported in the Cisco implementation.

- D: Set if Demand Mode is used.

- Detect Mult: Detection time multiplier. The negotiated transmit interval, multiplied by this value, provides the Detection Time for the transmitting system in Asynchronous mode.

- My Discriminator: A unique, nonzero discriminator value generated by the transmitting system, used to demultiplex multiple BFD sessions between the same pair of systems. Rack, slot and instance are encoded in the upper 16 bits:

RRRR RRRR SSSS SSII XXXX XXXX XXXX XXXX

- Your Discriminator: The discriminator received from the corresponding remote system. This field reflects back the received value of My Discriminator, or is zero if that value is unknown.

- Desired Min TX Interval: This is the minimum interval, in microseconds, that the local system would like to use when transmitting BFD Control packets.

- Desired Min RX Interval: This is the minimum interval, in microseconds, between received BFD Control packets that this system is capable of supporting.

- Required Min Echo RX Interval: This is the minimum interval, in microseconds, between received BFD Echo packets that this system is capable of supporting.
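The field list above can be illustrated in Python by parsing the mandatory 24-byte section of a version 1 control packet, using the field offsets from RFC 5880. The sketch also splits a discriminator according to the RRRR RRRR SSSS SSII layout. Version 0 packets, which have a different header, are rejected. The sample packet rebuilds the "Transmitted parameters" from the earlier show output (version 1, diag None, state UP, F=1, C=1, multiplier 3, 300 ms timers).

Decoding the local discriminator 2147549186 (0x80010002) with the stated layout gives slot 0 and instance 1, which is consistent with the session being hosted on 0/0/CPU0. The rack field, however, reads 0x80. The source does not explain that high bit, so treat the rack interpretation as unverified.

```python
import struct
from dataclasses import dataclass

STATES = {0: "AdminDown", 1: "Down", 2: "Init", 3: "Up"}


@dataclass
class BfdControl:
    version: int
    diag: int
    state: str
    poll: bool
    final: bool
    cpi: bool         # C bit: control plane independent
    auth: bool        # A bit: authentication present
    demand: bool      # D bit: demand mode
    multipoint: bool  # M bit: reserved, must be zero per RFC 5880
    detect_mult: int
    length: int
    my_disc: int
    your_disc: int
    desired_min_tx_us: int
    required_min_rx_us: int
    required_min_echo_rx_us: int


def parse_bfd_control(data: bytes) -> BfdControl:
    """Parse the mandatory 24-byte section of a version 1 BFD control packet."""
    if len(data) < 24:
        raise ValueError("BFD control packet must be at least 24 bytes")
    b0, b1, mult, length, my_d, your_d, tx, rx, echo = struct.unpack("!BBBBIIIII", data[:24])
    version = b0 >> 5
    if version != 1:
        raise ValueError(f"unsupported BFD version {version}")
    return BfdControl(
        version=version,
        diag=b0 & 0x1F,
        state=STATES[b1 >> 6],
        poll=bool(b1 & 0x20),
        final=bool(b1 & 0x10),
        cpi=bool(b1 & 0x08),
        auth=bool(b1 & 0x04),
        demand=bool(b1 & 0x02),
        multipoint=bool(b1 & 0x01),
        detect_mult=mult,
        length=length,
        my_disc=my_d,
        your_disc=your_d,
        desired_min_tx_us=tx,
        required_min_rx_us=rx,
        required_min_echo_rx_us=echo,
    )


def decode_discriminator(disc: int) -> dict:
    """Split a discriminator using the RRRR RRRR SSSS SSII layout of the upper 16 bits."""
    upper = (disc >> 16) & 0xFFFF
    return {
        "rack": upper >> 8,
        "slot": (upper >> 2) & 0x3F,
        "instance": upper & 0x3,
        "low16": disc & 0xFFFF,
    }


if __name__ == "__main__":
    # Version 1 / diag 0; state Up (3) with F and C bits set; multiplier 3; length 24.
    pkt = struct.pack("!BBBBIIIII", 1 << 5, (3 << 6) | 0x10 | 0x08, 3, 24,
                      2147549186, 2148335618, 300_000, 300_000, 0)
    ctl = parse_bfd_control(pkt)
    print(ctl.state, ctl.final, ctl.cpi, ctl.desired_min_tx_us)
    print(decode_discriminator(ctl.my_disc))
```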