
BNG Geo-Redundancy for L2-connected IPoE subscriber sessions


08-12-2018 09:18 AM - edited 03-01-2019 02:01 PM

- What is BNG Geo-Redundancy in a nutshell

- Technical terms to be familiar with:

- BNG Geo-Redundancy in steady state operation

- SRG setup details:

- Control plane establishment:

- Data path establishment:

- BNG SRG handling during access failure:

- How does the subscriber choose the IPv6 default gateway MAC?

- Influencing the IPv6 default gateway over the parent access interface with RA preference:

- How point-to-point subscriber communication is handled in BNG with the fast-switchover feature

- SRG system maintenance/upgrade best practice

- SRG convergence under different fault triggers

- Without fast-switchover feature enabled:

- With fast-switchover feature enabled:

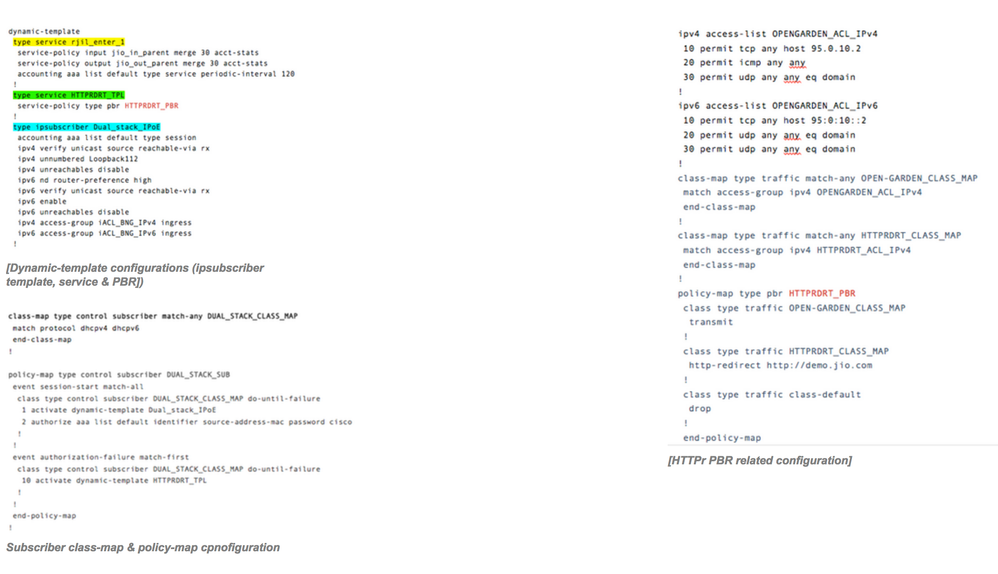

- BNG SRG Configuration saga [IPoE DS]

- Configuration:

- Things to take care of during SRG configuration:

- CLIs for verification and debugging

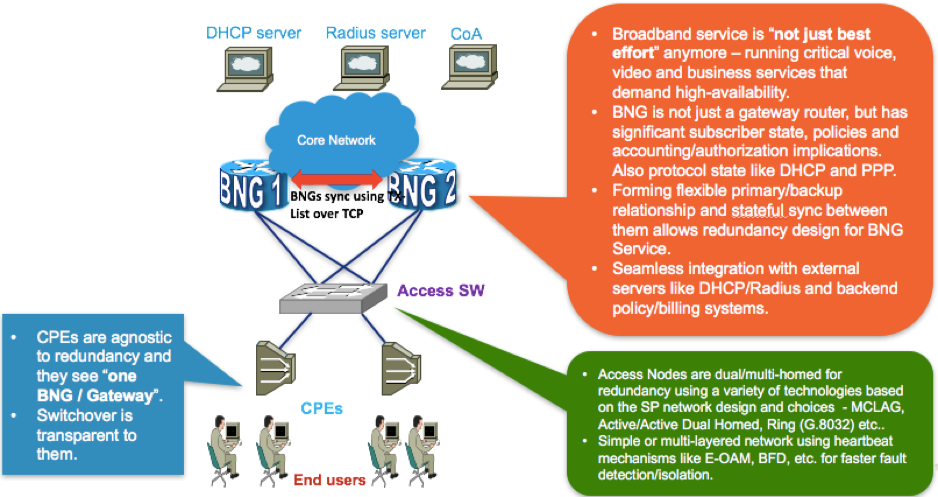

What is BNG Geo-Redundancy in a nutshell

BNG Geo-Redundancy provides redundancy for stateful subscriber sessions across two or more BNGs, which may be geographically spread out and have no dedicated layer-1/layer-2 connectivity between them (that is, they only have L3 connectivity over a shared core network via the usual IP/MPLS routing). Geographical redundancy for subscribers is delivered by transferring the relevant session state from the master BNG to the slave BNG, which can then take over the sessions during a failover (FO) or a planned switchover (SO) from one BNG device to the other.

Technical terms to be familiar with:

SRG (Subscriber Redundancy Group): a set of one or more access interfaces; all subscribers on those interfaces fail over or switch over as a group.

Master/Slave: an SRG system is a pair of BNG nodes where one acts as master and the other as slave for a given SRG. The master/slave role always applies to a specific SRG, not to the BNG device as a whole.

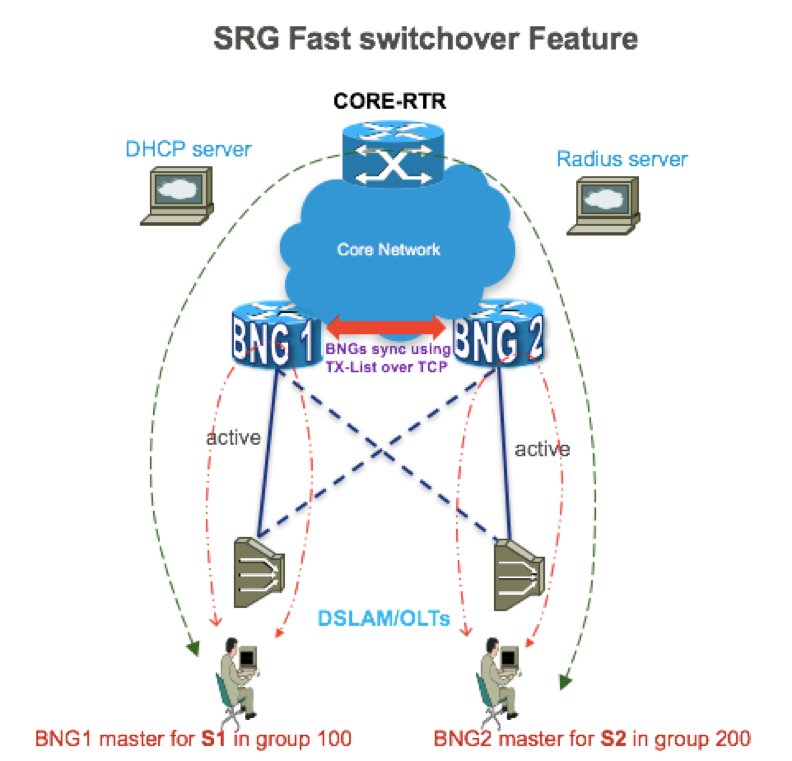

Active/Active SRG system: an SRG system in which each BNG node is master for some redundancy groups and slave for others. For example, BNG1 is master for some SRGs (with BNG2 as slave), and BNG2 is master for other SRGs (with BNG1 as slave). This deployment model allows load balancing, because the overall upstream/downstream subscriber traffic is spread across both BNGs.

Active/Standby SRG system: the same BNG node is master for all hosted SRGs. For example, BNG1 is master and BNG2 is slave for all SRGs in the system.

Revertive switchover: after the master role moves to the other node because of a failure on the current master, the role is switched back to the preferred master node once the failure on that node has recovered.

Virtual-mac (vMAC): defines the common MAC address used by the redundant BNG gateways. Both BNGs use this MAC on their BNG access interfaces defined under the SRG, and its scope is per group. Subscribers in the SRG see this address as the gateway MAC, and because both the gateway address and the MAC remain unchanged, data switches seamlessly from the old master to the new master after a switchover caused by core or access failures. All control packets (such as DHCPv4 and DHCPv6) and data packets sent by the BNG use the vMAC as the L2 source MAC. Similarly, the client uses the vMAC as the destination MAC in the upstream direction.

Core-tracking: defines the tracking object for the core link. An SRG switchover can be caused by an access link failure, a core link failure, or an administrative trigger; with core-tracking, an SRG switchover is triggered when the core-tracking object goes down.

Access-tracking: defines the tracking object for the access link. An SRG switchover is triggered when the access-tracking object goes down.

SRG SO/FO: SRG switchover/failover happens at the group level. It can be triggered by an access link or core link failure or by a node-level failure, or it can be triggered administratively.

Preferred-role: defines which BNG node should be master or slave, according to the network's preference.

Hold-timer: prevents back-to-back SRG switchovers when they are undesirable. Once an SRG switchover is triggered by any means, the hold timer starts on the current master, and no further switchover happens until the hold timer expires.

Revertive-timer: enables revertive switchover, which is held until this timer expires. Revertive switchover is disabled by default; configuring this timer enables it.
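To make the interaction between the hold timer and the revertive timer concrete, here is a minimal Python model of one SRG on its current master. It is a conceptual sketch, not Cisco's implementation: the class and field names are hypothetical, times are plain seconds, and the rule that a revert is also blocked while the hold timer is running is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SrgTimers:
    """Hypothetical model of one SRG's switchover timers on the current master."""

    hold_timer: float                        # seconds; blocks back-to-back switchovers
    revertive_timer: Optional[float] = None  # None = revertive SO disabled (the default)
    last_switchover: Optional[float] = None  # time of the most recent switchover

    def switchover_allowed(self, now: float) -> bool:
        # No further switchover until the hold timer has expired.
        if self.last_switchover is None:
            return True
        return now - self.last_switchover >= self.hold_timer

    def revert_due(self, now: float, recovered_at: float) -> bool:
        # Revert to the preferred master only if revertive SO is enabled,
        # the revertive timer has run since the failure recovered, and
        # (assumption) the hold timer is not blocking a switchover.
        if self.revertive_timer is None:
            return False
        return (now - recovered_at >= self.revertive_timer
                and self.switchover_allowed(now))


# Example: a switchover happened at t=0 and the failure recovered at t=5.
timers = SrgTimers(hold_timer=30, revertive_timer=60, last_switchover=0)
print(timers.switchover_allowed(10))               # False: hold timer still running
print(timers.revert_due(now=50, recovered_at=5))   # False: revertive timer not expired
print(timers.revert_due(now=70, recovered_at=5))   # True
```

Leaving `revertive_timer` as `None` mirrors the default described above, where no revert ever happens.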

Slave-mode: the slave mode can be hot-standby or warm-standby. In hot-standby, sessions synced to the slave also consume hardware resources on the slave, because the sessions are fully programmed in hardware there. In warm-standby, the slave only holds the sessions in the platform-independent (PI) layer and does not program them in hardware, so no hardware resources are consumed. Warm-standby is currently not supported.

State-control-route: subscriber routes are present on both the master and slave nodes, so they cannot be distributed into dynamic routing protocols; otherwise upstream nodes would see subscriber reachability through both BNG nodes. To avoid that, configure state-control routes (usually the IPv4/IPv6 subscriber network prefixes, matching the respective subscriber address pools) under each SRG. These state-control routes can then be redistributed into dynamic routing protocols through a route-policy. Only the master node installs the state-control routes in the RIB, so there is no routing inconsistency.
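The rule that only the master installs state-control routes can be sketched as follows. This is a hypothetical Python model: the function name is illustrative and not a BNG API, and the prefixes are documentation addresses rather than real subscriber pools.

```python
from typing import Dict, List


def installed_scr(roles: Dict[int, str], scr: Dict[int, List[str]]) -> List[str]:
    """State-control routes one BNG would install in its RIB.

    roles: SRG id -> this node's role for that SRG ("master" or "slave")
    scr:   SRG id -> state-control-route prefixes configured under the SRG
    """
    routes: List[str] = []
    for group in sorted(scr):
        if roles.get(group) == "master":  # a slave keeps the config but not the route
            routes.extend(scr[group])
    return routes


# Identical SRG config on both BNGs, active/active roles.
SCR = {100: ["192.0.2.0/24", "2001:db8:100::/48"], 200: ["198.51.100.0/24"]}
bng1_routes = installed_scr({100: "master", 200: "slave"}, SCR)
bng2_routes = installed_scr({100: "slave", 200: "master"}, SCR)
print(bng1_routes, bng2_routes)
```

Because each prefix ends up in exactly one RIB, redistributing the installed routes gives the core a single path per subscriber pool.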

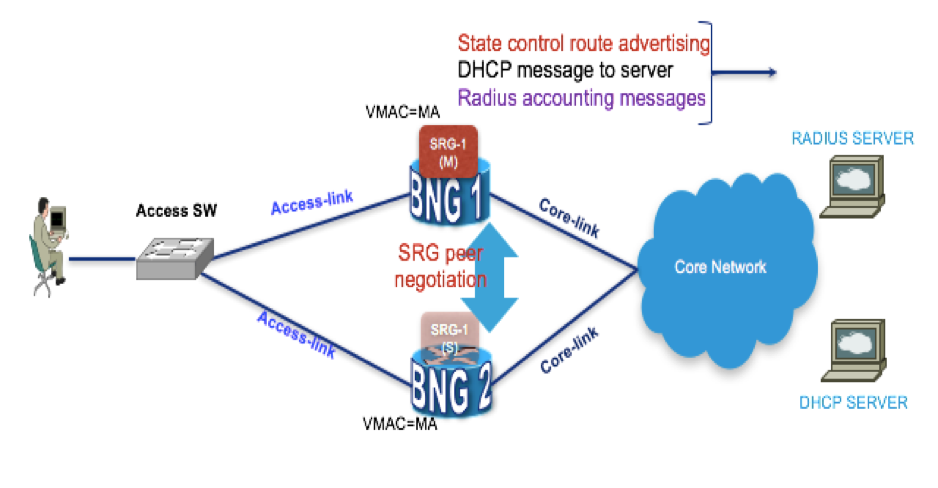

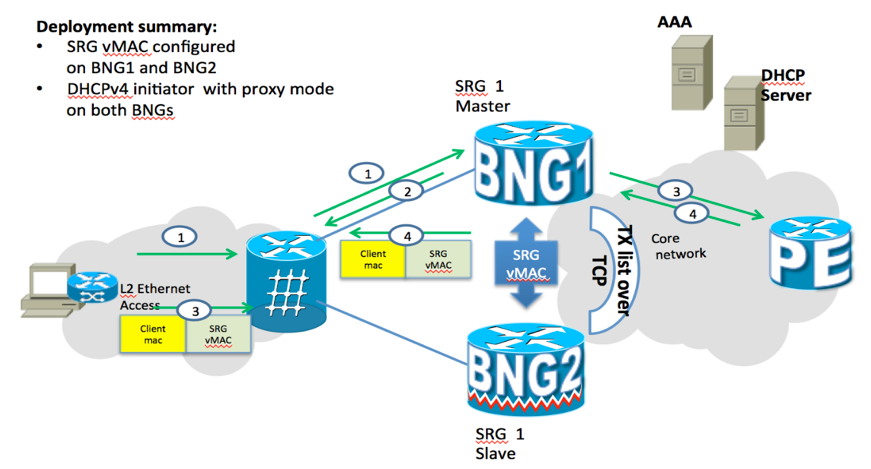

BNG Geo-Redundancy in steady state operation

SRG setup details:

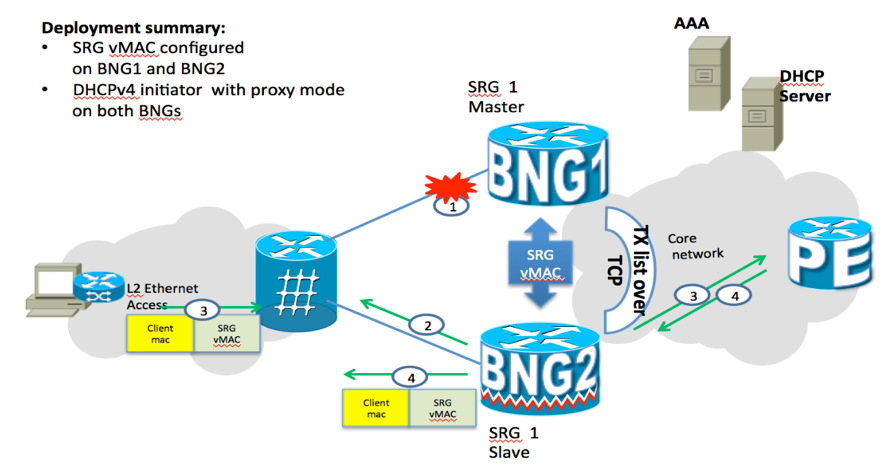

- The deployment model above uses dual-homed active/active access links: for example, the same bridge domain on the access switch hosts three links, one towards the subscriber and one towards each BNG, all in the same broadcast domain

- Access links towards both BNGs are active, meaning control and data traffic can take either link from the access side

- In the setup above, BNG1 is master and BNG2 is slave for all SRGs

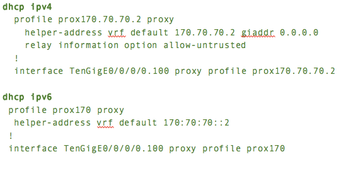

- The BNGs act in DHCP proxy mode

- The RADIUS server and DHCP server are routable through the CORE network from both BNGs

- Dynamic routing protocols are set up from the BNGs to the CORE for upstream/downstream routing

- The Gi-addr / subscriber subnet is advertised to the CORE from the BNGs using state-control routes

- Access and core links are tracked for failure detection on both BNGs

- The same vMAC is configured on both BNGs for a given SRG

- The BNGs have L3 reachability to each other over IP/MPLS

- Make sure identical configuration is maintained on both BNGs, such as the SRG groups, the interface IDs mapped to each access interface in an SRG, state-control routes, DHCP profile configuration, and the vMAC in each SRG

Control plane establishment:

- The BNG nodes negotiate the master/slave role during SRG peering establishment

- The DHCP Discover is broadcast in the bridge domain on the access switch

- The Discover message reaches both BNG nodes, but only the master processes it; the slave drops it

- RADIUS authorization kicks in according to the control policy configuration, and the master BNG sends a RADIUS Access-Request to the RADIUS server

- The RADIUS server responds to the master with an Access-Accept carrying the associated user profile

- The master BNG processes the Discover and forwards it as a unicast Discover towards the DHCP server, according to the DHCP proxy profile attached to the access interface

- The source address of DHCP messages sent out of the master BNG is the Gi-addr configured in the proxy profile. If no Gi-addr is configured, the IP address of the subscriber-facing access interface is used as the Gi-addr

- The DHCP server responds to the master node with a DHCP Offer, because the Gi-addr route prefix is received only from the master [the state-control route is active only on the master, so only the master advertises it to the CORE]

- The master node forwards the Offer to the subscriber with the source MAC set to the vMAC configured in the respective SRG on the BNG

- From then on, the DHCP Request/Ack transactions take place through the master

- The master node creates the DHCP binding after the four-message DHCP exchange

- The master builds the subscriber database and syncs it to the slave [make sure the configuration on both BNGs is identical]

- The master sends a gratuitous ARP (gARP) with the vMAC immediately after the subscriber session comes up

- The master node sends Accounting-Start requests to the RADIUS server for both the session and the services, as configured, and the RADIUS server replies to the master BNG with an Accounting-Response
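The Discover handling and Gi-addr selection described above can be sketched as a small Python model. It is a simplified, hypothetical view of one SRG in DHCP proxy mode: the class, field names and addresses are illustrative, and RADIUS authorization and the rest of the DHCP exchange are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProxyBng:
    """Hypothetical view of one BNG, for a single SRG, in DHCP proxy mode."""

    name: str
    role: str                    # "master" or "slave" for this SRG
    access_if_ip: str            # IP of the subscriber-facing access interface
    proxy_giaddr: Optional[str]  # Gi-addr from the DHCP proxy profile, if any

    def giaddr(self) -> str:
        # A configured Gi-addr wins; otherwise use the access interface IP.
        return self.proxy_giaddr if self.proxy_giaddr else self.access_if_ip

    def on_discover(self, client_mac: str) -> Optional[dict]:
        # The broadcast Discover reaches both BNGs; only the master relays it
        # (as a unicast towards the DHCP server), while the slave drops it.
        if self.role != "master":
            return None
        return {"type": "DISCOVER", "chaddr": client_mac, "giaddr": self.giaddr()}


# Identical access-interface config on both BNGs, no Gi-addr in the proxy profile.
bng1 = ProxyBng("BNG1", "master", "192.0.2.1", None)
bng2 = ProxyBng("BNG2", "slave", "192.0.2.1", None)
for bng in (bng1, bng2):
    print(bng.name, bng.on_discover("0050.5600.0001"))
```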

Data path establishment:

- The subscriber sends an ARP request for the default gateway IP address configured on the BNG [the IP address of the subscriber's parent access interface]

- The SRG master, which owns the vMAC for the access interface, responds with the vMAC

- The subscriber sends traffic with the SRG vMAC as the destination MAC in the Ethernet header, and upstream traffic is forwarded by BNG1 towards the CORE

- Remote PEs send downstream traffic destined to subscribers to BNG1, because the subscriber prefix state-control route is active on, and advertised to the CORE only by, BNG1 (the master). Traffic sent out of the BNG towards subscribers carries the SRG vMAC as the source MAC in the Ethernet header.
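The data path steps above can be summarised with a few Python helpers. This is a conceptual sketch: the function names, port names and IP addresses are hypothetical, the vMAC value is taken from the fast-switchover configuration example in this article, and the access switch is modelled as plain MAC learning on the gratuitous ARP.

```python
from typing import Dict, Optional

VMAC = "0001.0301.0301"  # vMAC value borrowed from this article's configuration example


def arp_reply(role: str, gateway_ip: str, target_ip: str) -> Optional[str]:
    """MAC a BNG returns for an ARP request on an SRG access interface, or None."""
    # Only the SRG master answers, and it answers with the shared vMAC,
    # not with its own interface MAC.
    if role == "master" and target_ip == gateway_ip:
        return VMAC
    return None


def learn_from_garp(mac_table: Dict[str, str], mac: str, port: str) -> None:
    # The access switch (re)learns the vMAC on the port the gratuitous ARP
    # arrived on, so upstream frames with DMAC = vMAC go towards that BNG.
    mac_table[mac] = port


def downstream_bng(advertising: Dict[str, bool]) -> Optional[str]:
    """BNG the core sends downstream traffic to: the one advertising the SCR."""
    for bng, is_advertising in advertising.items():
        if is_advertising:
            return bng
    return None


mac_table: Dict[str, str] = {}
learn_from_garp(mac_table, VMAC, "port-to-BNG1")
print(arp_reply("master", "192.0.2.1", "192.0.2.1"), mac_table,
      downstream_bng({"BNG1": True, "BNG2": False}))
```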

BNG SRG handling during access failure:

- On BNG1, the access interface goes down while Geo-Redundancy is in steady state, with the full subscriber database in sync between the BNG nodes

- Object tracking notifies the SRG of the tracking change, and the role change is communicated to the peer BNG over the Txlist/TCP channel. The role change is scoped to each individual subscriber redundancy group, based on the access/core link state tracked by object tracking in the corresponding SRG

- BNG1 changes its role to slave, and BNG2 takes the master role, given that both its access link and core link are up

- BNG2 (the new master) sends a gratuitous ARP claiming ownership of the SRG vMAC, and the DHD updates its MAC table. The client keeps sending traffic with the SRG vMAC as the destination MAC, and upstream traffic is now forwarded through BNG2

- Similarly, IPv6 ND RA messages are now sent from BNG2 instead of BNG1

- On the slave (BNG1), the state-control route is deleted from the RIB, and at the same time the state-control route is installed in the RIB on BNG2 (the new master)

- Downstream traffic is now forwarded from the core network towards BNG2, because the subscriber state-control-route prefix is now received from BNG2

- BNG2 (the new master) sends fresh Accounting-Start messages to the RADIUS server for the session and service profiles

- BNG1 (the new slave) sends Accounting-Stop messages to the RADIUS server for all sessions that were active on it before the SRG switchover
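The access-failure sequence above can be condensed into a small decision function. This is a simplified, hypothetical Python model of a single SRG: the names are illustrative, the action strings only summarise the listed steps, and on a real BNG these actions are driven by object tracking and SRG peering, not by a function like this.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Tracking:
    access_up: bool
    core_up: bool

    def healthy(self) -> bool:
        return self.access_up and self.core_up


def switchover_actions(master: str, master_trk: Tracking,
                       peer: str, peer_trk: Tracking) -> List[Tuple[str, str]]:
    """(node, action) pairs for one SRG after a tracking change.

    Simplified rule: the SRG moves only if the current master is unhealthy
    and the peer has both access and core tracking up.
    """
    if master_trk.healthy() or not peer_trk.healthy():
        return []  # no role change for this SRG
    return [
        (master, "become slave"),
        (peer, "become master"),
        (peer, "send gratuitous ARP for vMAC"),
        (peer, "send IPv6 ND RA"),
        (master, "remove state-control route from RIB"),
        (peer, "install state-control route in RIB"),
        (peer, "send Accounting-Start"),
        (master, "send Accounting-Stop"),
    ]


actions = switchover_actions("BNG1", Tracking(access_up=False, core_up=True),
                             "BNG2", Tracking(access_up=True, core_up=True))
for node, action in actions:
    print(node, action)
```

Because the function is called once per SRG, it also reflects the point that the role change is scoped to each redundancy group.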

How does the subscriber choose the IPv6 default gateway MAC?

With an SRG vMAC configured, ND on the BNG sends two RAs to the client:

- One out of the access interface, using the original bundle/interface MAC

- Another out of the subscriber interface, using the vMAC

Both RAs reach the client and their order is not guaranteed, so the client may pick its default gateway from whichever RA arrives last. This is undesirable in geo-redundancy, because we want the client to populate its IPv6 gateway with the vMAC from the RA it receives. Using the vMAC also ensures that the client sees the same gateway after a switchover, so data switches seamlessly from the old master to the new master.

Influencing the IPv6 default gateway over the parent access interface with RA preference:

By default, ND on the BNG sends RAs with the router preference set to medium. The preference can be changed from the default to ‘Low’ or ‘High’, either through configuration on the access interface or through configuration on the dynamic-template (introduced in release 5.2.2).

Configuration to achieve this:

```
interface <if-name>
 ipv6 nd router-preference high|medium|low
 [no] ipv6 nd router-preference

dynamic-template …
 ipv6 nd router-preference high|medium|low
 [no] ipv6 nd router-preference
```

To solve the problem explained above, we need one of the configurations below, which ensure that the client always selects the RA carrying the vMAC to populate its IPv6 default gateway.

```
interface Bundle-Ether7.10
 ipv4 point-to-point
 ipv4 unnumbered Loopback1
 ipv6 nd other-config-flag
 ipv6 nd router-preference low
 ipv6 nd managed-config-flag
 ipv6 enable
 service-policy type control subscriber DUAL-STACK
 ethernet cfm
  mep domain OLT10 service OLT10 mep-id 111
  !
 !
 encapsulation dot1q 10
 ipsubscriber ipv4 l2-connected
  initiator dhcp
 !
 ipsubscriber ipv6 l2-connected
  initiator dhcp
 !
!
```

OR

```
dynamic-template
 type ipsubscriber DUAL-STACK
  ipv4 unnumbered Loopback1
  ipv6 nd other-config-flag
  ipv6 nd router-preference high
  ipv6 nd managed-config-flag
  ipv6 enable
 !
!
```
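Why either option works can be shown with a simplified model of host default-router selection using the RFC 4191 preference levels. The host behaviour here is an assumption (highest preference wins, and on a tie the RA that arrived last wins, matching the order-dependent behaviour described earlier), and the bundle MAC is a hypothetical value.

```python
from typing import List, Optional, Tuple

# RFC 4191 Default Router Preference levels; a higher rank is preferred.
PREF_RANK = {"low": 0, "medium": 1, "high": 2}

VMAC = "0001.0301.0301"        # SRG vMAC (from this article's example)
PARENT_MAC = "008a.9600.0001"  # hypothetical bundle/interface MAC


def pick_default_gateway(ras: List[Tuple[str, str]]) -> Optional[str]:
    """Source MAC of the RA a host ends up using as its IPv6 default gateway.

    ras: (source_mac, router_preference) pairs in arrival order.
    Simplified host model: the highest preference wins; on a tie the RA
    that arrived last wins.
    """
    best_mac, best_rank = None, -1
    for mac, pref in ras:
        rank = PREF_RANK[pref]
        if rank >= best_rank:  # ">=" lets a later RA win a tie
            best_mac, best_rank = mac, rank
    return best_mac


# Default (medium/medium): the result depends on arrival order.
print(pick_default_gateway([(VMAC, "medium"), (PARENT_MAC, "medium")]))
# Parent access interface set to "low": the vMAC wins in either order.
print(pick_default_gateway([(PARENT_MAC, "low"), (VMAC, "medium")]))
```

Lowering the preference on the parent interface and raising it on the dynamic-template both break the tie in favour of the vMAC RA, which is why only one of the two configurations is needed.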

How point-to-point subscriber communication is handled in BNG with the fast-switchover feature

Without the “fast-switchover” feature enabled:

- Subscriber S1 is active on BNG1 in group 100 (BNG1 is master and BNG2 is slave for S1)

- Subscriber S2 is active on BNG2 in group 200 (BNG2 is master and BNG1 is slave for S2)

- However, both the S1 and S2 subscriber IPs are programmed in the main table, regardless of master or slave role

- As a result, traffic from S1 to S2 (or from S2 to S1) is switched locally on the respective master node and eventually fails

- The expectation, however, is that subscriber-to-subscriber traffic is routed through the CORE network.

To avoid this local switching, the ‘fast-switchover’ feature works as follows:

- Configure “enable-fast-switchover” under groups 100 and 200 on both BNGs

Configuration example:

```
subscriber
 redundancy
  group 100
   preferred-role Master
   virtual-mac 0001.0301.0301
   peer 1.0.0.1
   enable-fast-switchover
  !
 !
!
```

- With this, BNG1 installs the S1 prefix in the main routing table as well as in the fallback table, and adds the S2 route only to the fallback table. The fallback table is an internally created VRF table whose name is derived from the group number: the fallback table for SRG 100 is `**srg_100` and for SRG 200 is `**srg_200`

- Similarly, on BNG2 the S1 route is installed in the fallback table, and the S2 route is installed in both the main and the fallback table.

- Routing is therefore isolated between the main table and the fallback table, because they are different VRF tables, so there is no local switching of traffic between S1 and S2

- As a result, BNG1 learns the S2 prefix from the CORE, and similarly BNG2 learns the S1 prefix from the CORE
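The table split above can be modelled in a few lines of Python. This is a conceptual sketch, not how the BNG programs its RIB/FIB: the function names are hypothetical, and the fallback VRF naming simply follows the `**srg_<group>` convention described in the list.

```python
from typing import Dict, Set


def build_tables(node: str, masters: Dict[int, str],
                 srg_of: Dict[str, int]) -> Dict[str, Set[str]]:
    """Subscriber routes per table on one BNG with fast-switchover enabled.

    masters: SRG id -> name of the master BNG for that SRG
    srg_of:  subscriber -> SRG id
    Every subscriber route lands in its SRG's fallback VRF; only subscribers
    of SRGs this node masters are also placed in the main table.
    """
    tables: Dict[str, Set[str]] = {"main": set()}
    for sub, group in srg_of.items():
        tables.setdefault(f"**srg_{group}", set()).add(sub)
        if masters[group] == node:
            tables["main"].add(sub)
    return tables


def main_table_lookup(tables: Dict[str, Set[str]], dest: str) -> str:
    # A main-table lookup never consults the fallback VRFs, so a subscriber
    # that only lives there is reached via the route learned from the core.
    return "local" if dest in tables["main"] else "via core"


masters = {100: "BNG1", 200: "BNG2"}
srg_of = {"S1": 100, "S2": 200}
bng1 = build_tables("BNG1", masters, srg_of)
print(bng1)
print(main_table_lookup(bng1, "S2"))
```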

SRG system maintenance/upgrade best practice

- Active/active SRG scenario [both BNGs act as master for different sets of groups]

- Trigger an admin switchover on BNG2 for all groups so that BNG1 becomes master for all groups

- The SRG system now has the same master (BNG1) for all groups, and all traffic for all groups flows through BNG1

- Shut down all access interfaces across all SRGs on the slave, BNG2, to make sure no revertive switchover occurs after revertive-timer expiry during the maintenance or upgrade

- Perform the system upgrade/maintenance on the slave, BNG2

- Bring up all access interfaces on the slave BNG2 after routing has converged and SRG peering has been established for all SRGs

- The peers negotiate SRG roles according to the configuration in each SRG

- Perform an SRG switchover on BNG1 and make sure all groups become master on the upgraded node, BNG2

- Perform the upgrade/maintenance of BNG1 while all traffic for all groups is carried through BNG2

- Follow the same steps as carried out above for the BNG2 upgrade
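The procedure above can be expressed as a small planning helper that produces the ordered steps for a given set of SRG masters. This is a hypothetical Python sketch: it only sequences the listed steps as text, does not talk to any device, and ignores role renegotiation after the access interfaces come back up.

```python
from typing import Dict, List


def upgrade_plan(masters: Dict[int, str], first: str, second: str) -> List[str]:
    """Ordered maintenance steps that upgrade `first`, then `second`.

    masters: SRG id -> current master BNG.
    """
    steps: List[str] = []
    roles = dict(masters)  # work on a copy
    for target, survivor in ((first, second), (second, first)):
        # Move every SRG still mastered by the target onto the survivor.
        moved = sorted(g for g, m in roles.items() if m == target)
        if moved:
            steps.append(f"admin SO on {target} for SRGs {moved}")
        roles = {g: survivor for g in roles}
        # Isolate, upgrade and restore the target node.
        steps += [
            f"shut all SRG access interfaces on {target}",
            f"upgrade {target}",
            f"bring up access interfaces on {target} once routing and SRG peering converge",
        ]
    return steps


plan = upgrade_plan({100: "BNG1", 200: "BNG2"}, first="BNG2", second="BNG1")
for step in plan:
    print(step)
```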

SRG convergence under different fault triggers

Without fast-switchover feature enabled:

| Trigger | Upstream convergence (subscriber to CORE) | Downstream convergence (CORE to subscriber) |
|---|---|---|
| Admin SO | 50 msec | 250 msec |
| Access down | 50 msec | 200 msec |
| Master node power down | 40 sec | 45 sec |

With fast-switchover feature enabled:

| Trigger |

Upstream convergence (subscriber to CORE) |

Downstream convergence (CORE to subscriber) |

| Admin SO | 1.4sec | 250msec |

| Access down | 1.5sec | 500msec |

| Master node power down | 65sec | 40sec |

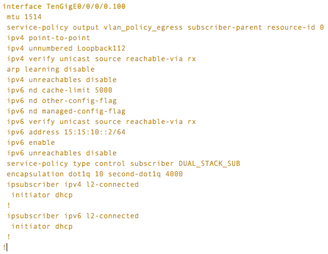

BNG SRG Configuration saga [IPoE DS]

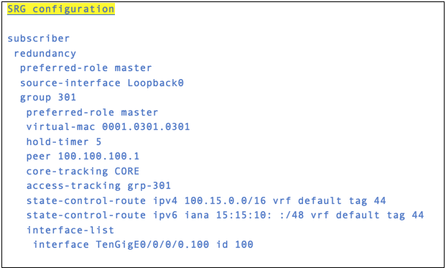

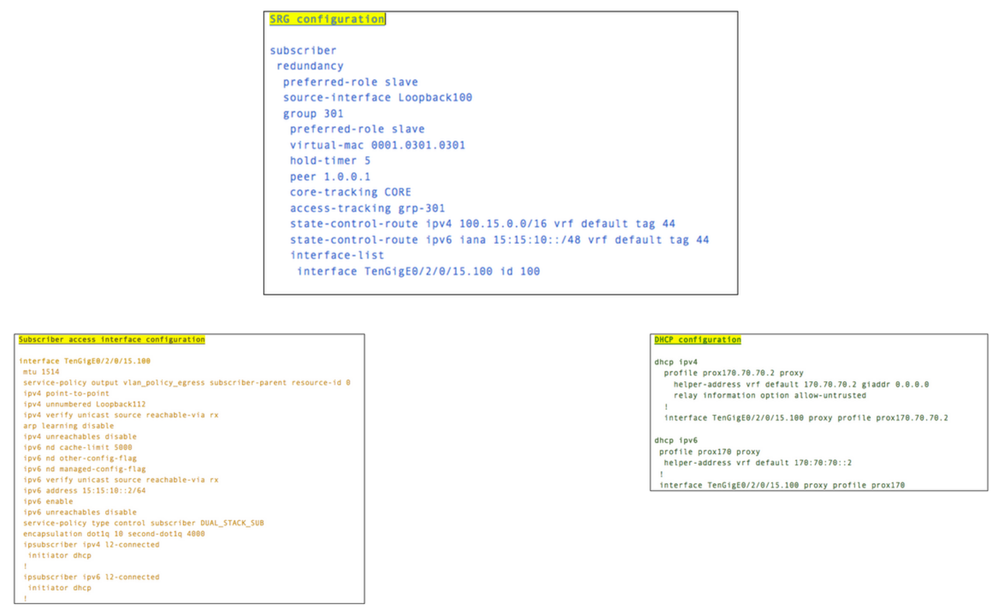

Configuration:

Common configurations on both BNG nodes:

The common configuration above needs to be replicated on BNG2 as well.

BNG1 node specific configurations

BNG2 node specific configurations

Things to take care of during SRG configuration:

- Make sure the SRG source interface is configured on both BNGs and that the source interfaces are reachable from each other

- Make sure the redundancy group number is the same on both BNGs for the intended group configuration

- Make sure the interface ID associated with an access interface is unique within the same SRG on each BNG

- Make sure the interface IDs associated with a pair of corresponding access interfaces across the BNG nodes are the same in a given SRG

- The same interface ID can be used across different SRGs

- srg_agt runs on each LC location in the case of LC subscribers, so SRG scale is per LC; make sure the scale is within supported limits

- Make sure both BNGs have equal HW resources when scaling subscribers
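Several of these checks can be automated once both BNGs' SRG settings are extracted into a simple structure. The sketch below is a hypothetical Python helper: the dictionary layout, the second interface name and the interface-id values are illustrative assumptions, and it does not parse real IOS-XR configuration.

```python
from typing import Dict, List

# Hypothetical, simplified view of one BNG's SRG configuration:
# SRG id -> {"vmac": str, "interfaces": {access interface name: interface-id}}
SrgConfig = Dict[int, dict]


def check_srg_pair(bng1: SrgConfig, bng2: SrgConfig) -> List[str]:
    """Human-readable problems found between two BNGs' SRG configs."""
    problems: List[str] = []
    if set(bng1) != set(bng2):
        problems.append(f"SRG numbers differ: {sorted(set(bng1) ^ set(bng2))}")
    for group in sorted(set(bng1) & set(bng2)):
        a, b = bng1[group], bng2[group]
        if a["vmac"] != b["vmac"]:
            problems.append(f"SRG {group}: vMAC mismatch")
        # Interface IDs must be unique within the SRG on each BNG.
        for name, cfg in (("BNG1", a), ("BNG2", b)):
            ids = list(cfg["interfaces"].values())
            if len(ids) != len(set(ids)):
                problems.append(f"SRG {group}: duplicate interface-id on {name}")
        # Paired access interfaces are matched by ID, so the ID sets must agree.
        if sorted(a["interfaces"].values()) != sorted(b["interfaces"].values()):
            problems.append(f"SRG {group}: interface-id sets differ between BNGs")
    return problems


bng1_cfg = {100: {"vmac": "0001.0301.0301", "interfaces": {"Bundle-Ether7.10": 1}}}
bng2_cfg = {100: {"vmac": "0001.0301.0301", "interfaces": {"Bundle-Ether3.10": 1}}}
print(check_srg_pair(bng1_cfg, bng2_cfg))  # []
```

Interface names are allowed to differ between the two BNGs; only the SRG number, vMAC and interface IDs are compared, since those are what must match.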

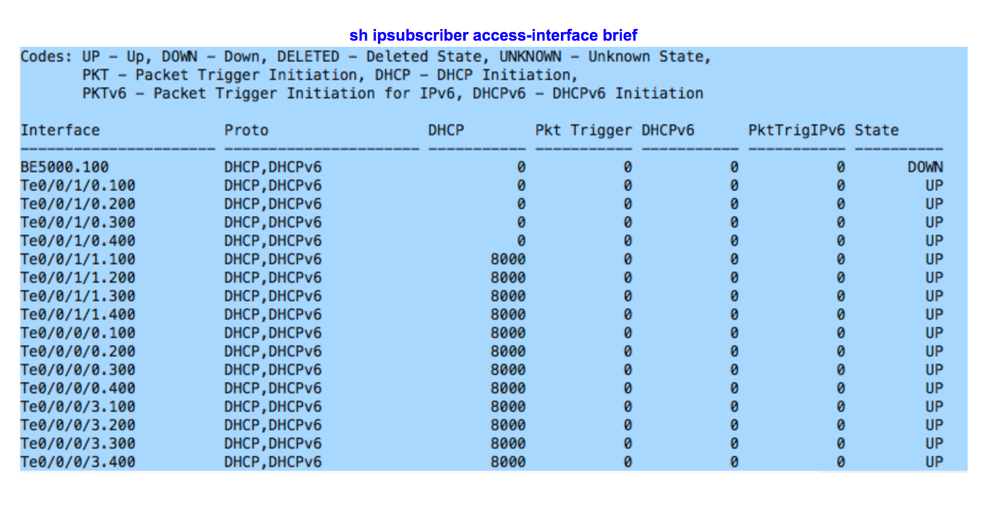

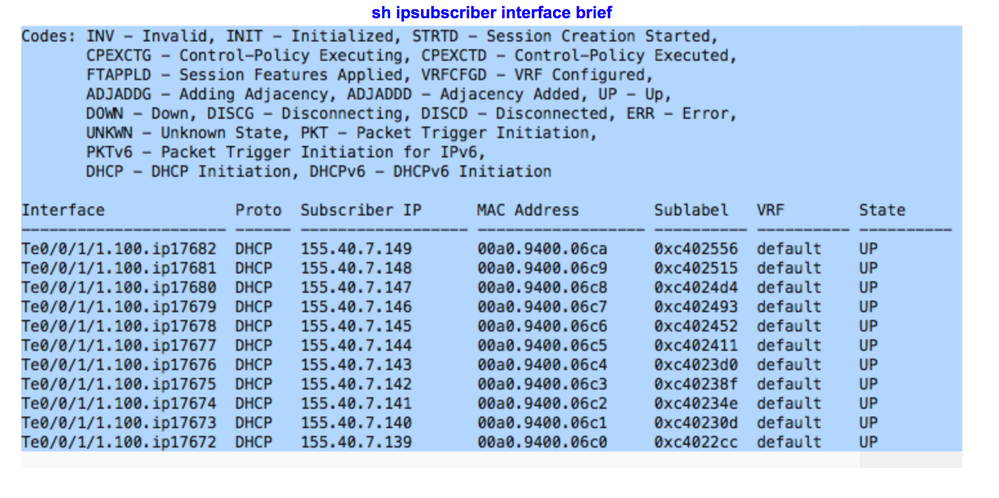

CLIs for verification and debugging

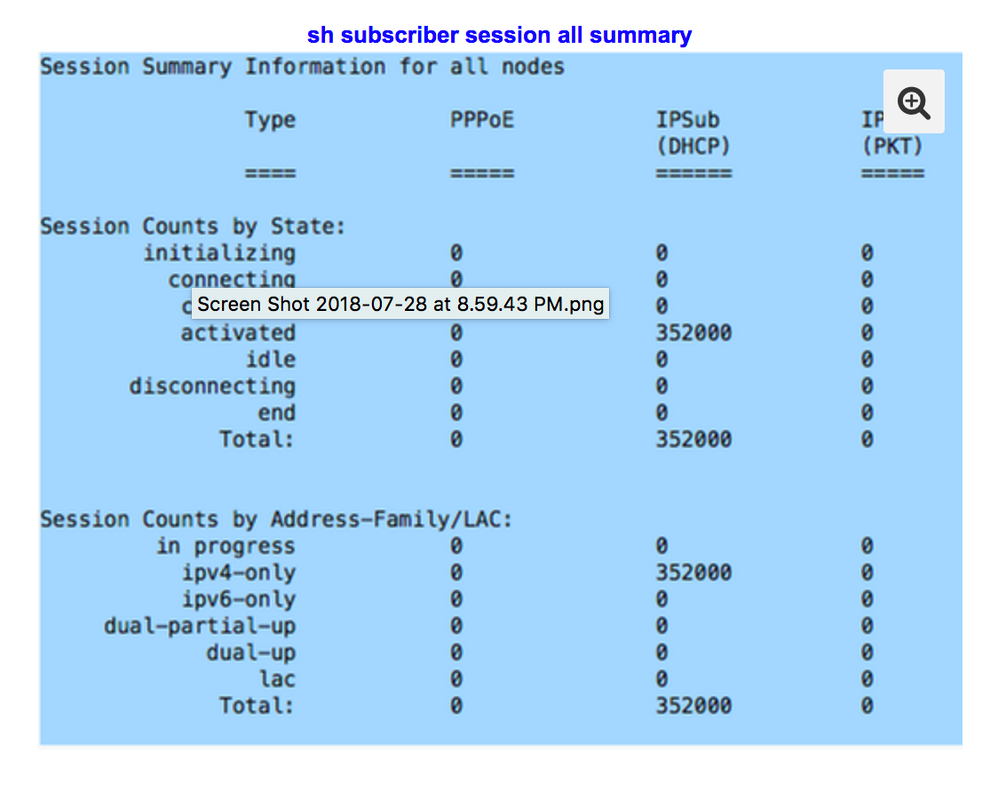

Verify overall subscriber session and interface statistics:

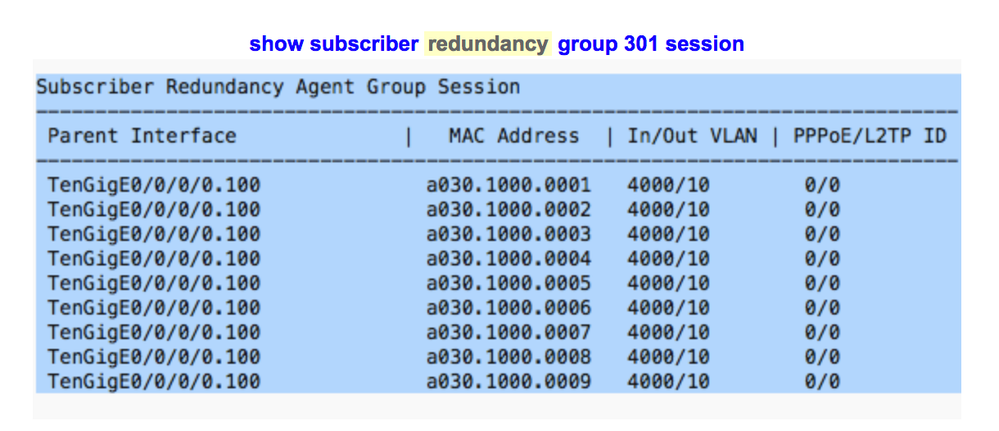

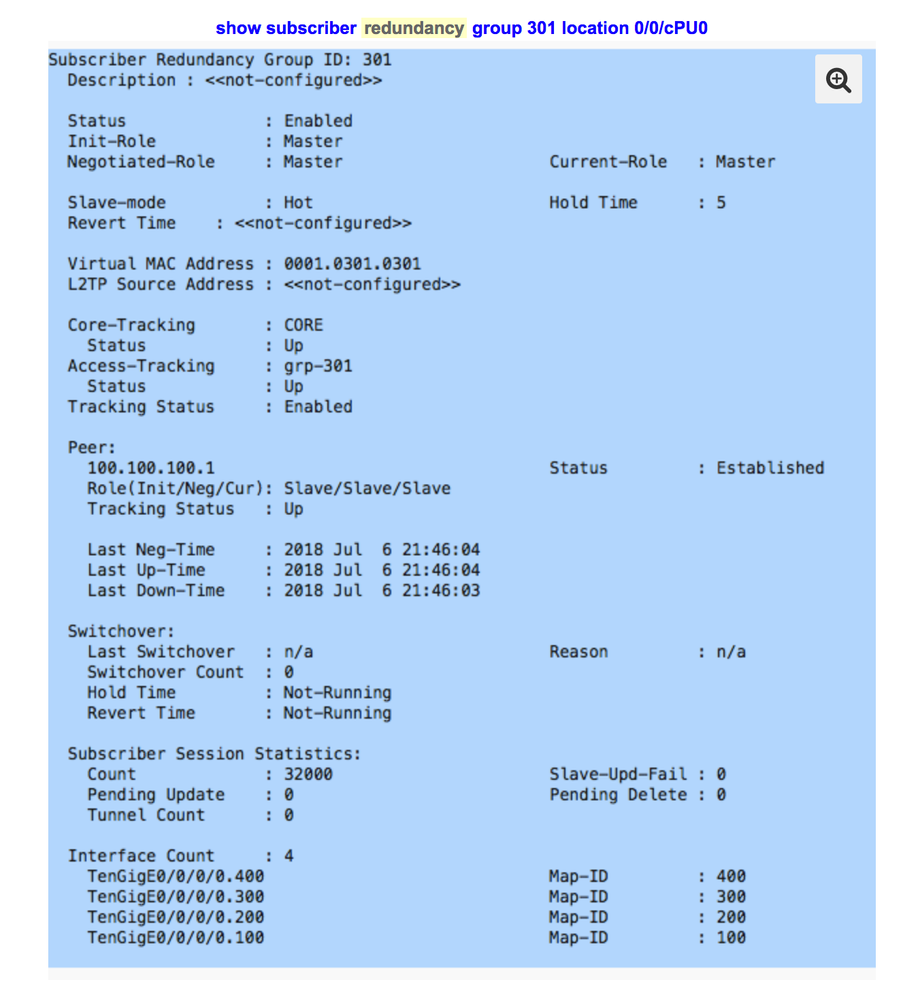

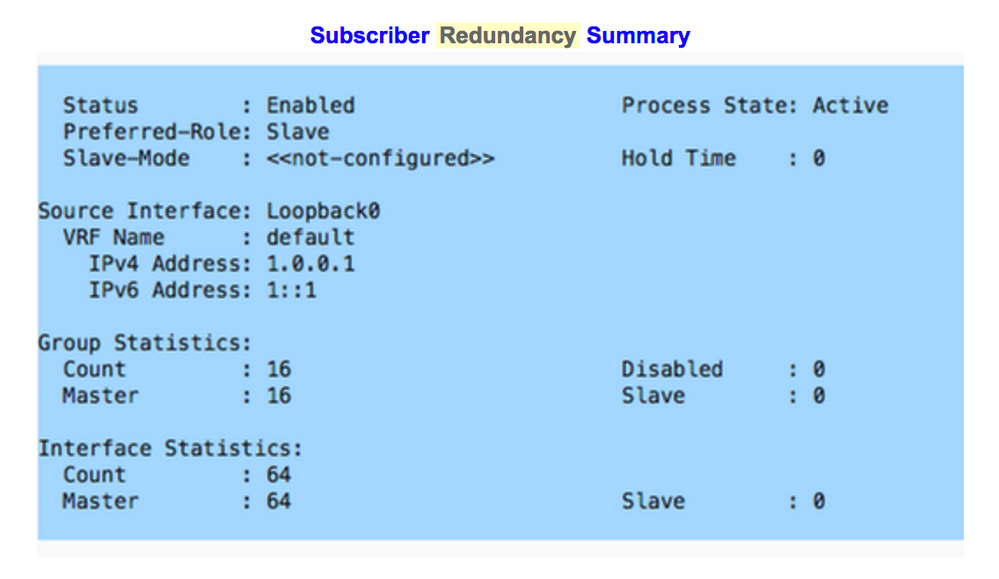

Subscriber redundancy group statistics and session information verification:

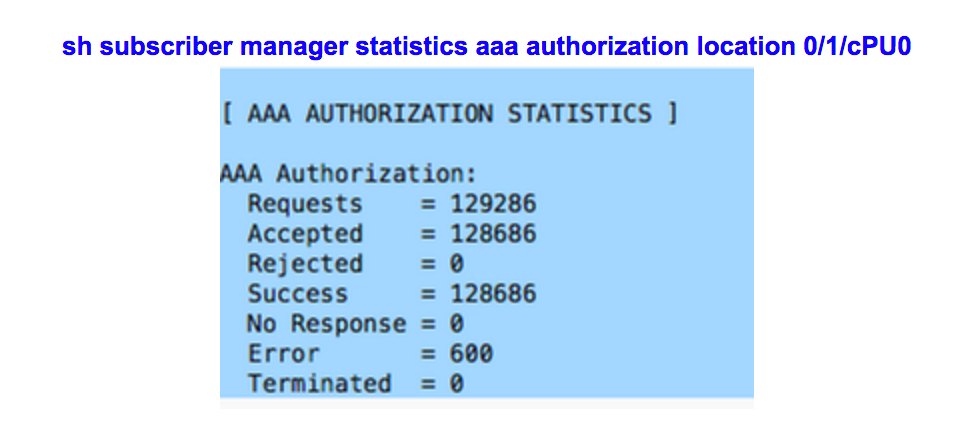

Subscriber session/service accounting and authorization statistics verification:

Only the SRG master holds these statistics.


> Gi-addr / subscriber sub-net is advertised to CORE from BNGs using state-control routes

In DHCP proxy mode, does this mean that only the BNG in the master state advertises the subnet route of the Gi-addr interface to the upstream router, so that downstream DHCP messages also return to the master and not to the slave?
