Broken switch stack leads to 10Gbps flood

06-01-2022 02:33 AM - edited 06-01-2022 03:06 AM

Hello!

We have a stack of two 2960X switches. The stack has an uplink to our core switch over a port channel with four 10 Gig interfaces. Our core switch is a VSS made up of two Cisco 6880-XL switches.

Here are the connections between the 2960X stack and core switch:

VSS2 Ten 2/0/1 173 R S I C6880-X-L Ten 1/2/13

VSS2 Ten 2/0/2 145 R S I C6880-X-L Ten 2/2/14

VSS2 Ten 1/0/1 157 R S I C6880-X-L Ten 1/2/14

VSS2 Ten 1/0/2 152 R S I C6880-X-L Ten 2/2/13
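To make the cabling easier to reason about, here is a minimal Python sketch that groups the four links by stack member. The port pairs are copied from the listing above; the `LINKS` and `unit` names are only illustrative, and reading the first number of a core port as the VSS chassis number is an assumption based on the usual chassis/slot/port naming.

```python
# (local stack port, core port) pairs copied from the neighbor listing above.
LINKS = [
    ("Te2/0/1", "Te1/2/13"),
    ("Te2/0/2", "Te2/2/14"),
    ("Te1/0/1", "Te1/2/14"),
    ("Te1/0/2", "Te2/2/13"),
]


def unit(port: str) -> int:
    """Return the leading number of a port name such as 'Te2/0/1'.

    For stack ports this is the stack member; for core ports it is
    assumed to be the VSS chassis (chassis/slot/port naming).
    """
    return int(port[2:].split("/")[0])


# Map each stack member to the set of VSS chassis it is cabled to.
by_member = {}
for stack_port, core_port in LINKS:
    by_member.setdefault(unit(stack_port), set()).add(unit(core_port))

for member in sorted(by_member):
    print(f"stack member {member} -> VSS chassis {sorted(by_member[member])}")
```

Under that assumption, each stack member has one link to each VSS chassis, so losing one stack member should still leave the surviving member connected to both chassis.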

At some point something happened to the stack (switch times are in UTC):

May 30 23:39:08.517: %STACKMGR-4-SWITCH_REMOVED: Switch 2 has been REMOVED from the stack

May 30 23:39:09.534: %LINEPROTO-5-UPDOWN: Line protocol on Interface Port-channel3, changed state to down

May 30 23:39:10.534: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/1, changed state to down

May 30 23:39:10.537: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/3, changed state to down

May 30 23:39:10.537: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/13, changed state to down

May 30 23:39:10.537: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/25, changed state to down

May 30 23:39:10.537: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/26, changed state to down

May 30 23:39:10.544: %LINK-3-UPDOWN: Interface Port-channel3, changed state to down

May 30 23:39:10.544: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/27, changed state to down

May 30 23:39:10.544: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/28, changed state to down

May 30 23:39:10.544: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/29, changed state to down

May 30 23:39:10.544: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/30, changed state to down

May 30 23:39:10.551: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/31, changed state to down

May 30 23:39:10.551: %LINK-3-UPDOWN: Interface GigabitEthernet2/0/32, changed state to down

May 30 23:39:10.551: %LINK-3-UPDOWN: Interface TenGigabitEthernet2/0/1, changed state to down

May 30 23:39:10.558: %LINK-3-UPDOWN: Interface TenGigabitEthernet2/0/2, changed state to down

May 30 23:39:11.537: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/1, changed state to down

May 30 23:39:11.540: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/3, changed state to down

May 30 23:39:11.540: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/13, changed state to down

May 30 23:39:11.540: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/25, changed state to down

May 30 23:39:11.540: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/26, changed state to down

May 30 23:39:11.547: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/27, changed state to down

May 30 23:39:11.547: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/28, changed state to down

May 30 23:39:11.547: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/29, changed state to down

May 30 23:39:11.547: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/30, changed state to down

May 30 23:39:11.554: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/31, changed state to down

May 30 23:39:11.554: %LINEPROTO-5-UPDOWN: Line protocol on Interface GigabitEthernet2/0/32, changed state to down

May 30 23:39:11.554: %LINEPROTO-5-UPDOWN: Line protocol on Interface TenGigabitEthernet2/0/1, changed state to down

May 30 23:39:11.561: %LINEPROTO-5-UPDOWN: Line protocol on Interface TenGigabitEthernet2/0/2, changed state to down

May 31 03:05:37.343: %STACKMGR-4-STACK_LINK_CHANGE: Stack Port 1 Switch 1 has changed to state DOWN

May 31 03:05:37.343: %STACKMGR-4-STACK_LINK_CHANGE: Stack Port 2 Switch 1 has changed to state DOWN

These were the last messages in the logging buffer on stack member 1. At 3:05 I power cycled stack member 2.
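As a rough sketch of the timeline, the gap between the first and last log events above can be computed in Python. The two timestamps are copied from the log; the year is an assumption taken from the post date, because the log lines carry no year, and `parse_ts` is just an illustrative helper name.

```python
from datetime import datetime

# Assumption: the log has no year, so the year of the forum post is used.
YEAR = 2022


def parse_ts(stamp: str) -> datetime:
    """Parse a log timestamp such as 'May 30 23:39:08.517' (UTC, no year)."""
    return datetime.strptime(f"{YEAR} {stamp}", "%Y %b %d %H:%M:%S.%f")


removed = parse_ts("May 30 23:39:08.517")     # %STACKMGR-4-SWITCH_REMOVED
links_down = parse_ts("May 31 03:05:37.343")  # %STACKMGR-4-STACK_LINK_CHANGE

# Time between switch 2 being reported as removed and the stack ports going down.
gap = links_down - removed
print(f"Elapsed between the two events: {gap}")
```

This puts roughly three and a half hours between switch 2 being reported as removed and the stack ports going down at the power cycle.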

The serial console on stack member 2 was unresponsive.

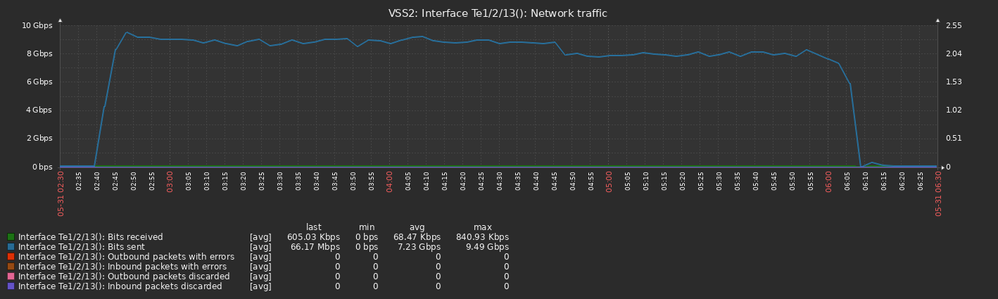

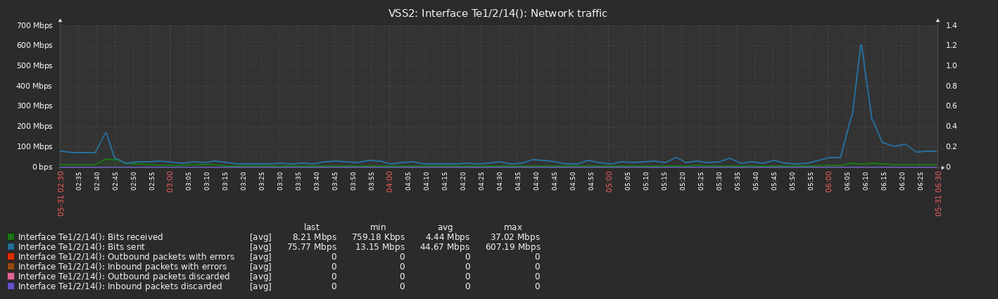

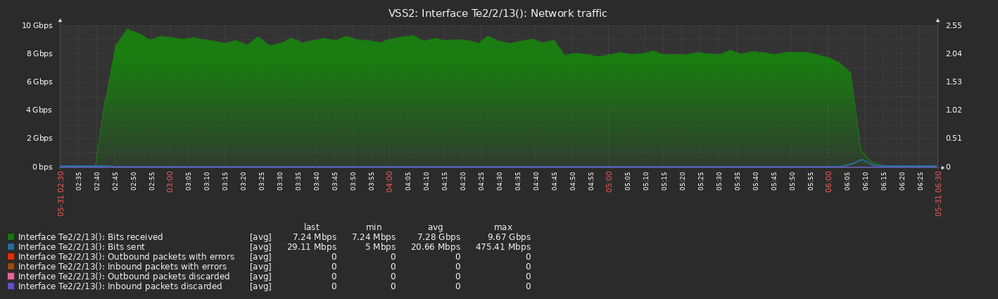

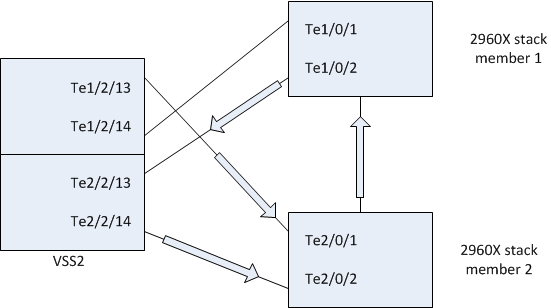

The instant the stack broke, stack member 1 started a 10 Gbps flood on one of the port-channel members. The core switch received the traffic on Te2/2/13 (connected to Te1/0/2 on the stack) and looped it back to stack member 2 on Te1/2/13 (connected to Te2/0/1) and Te2/2/14 (connected to Te2/0/2).

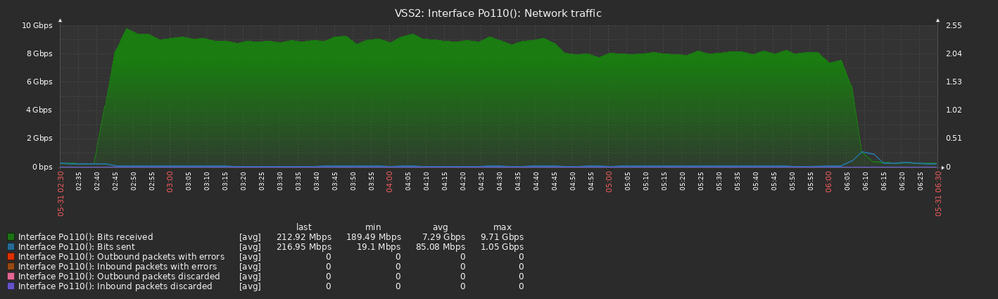

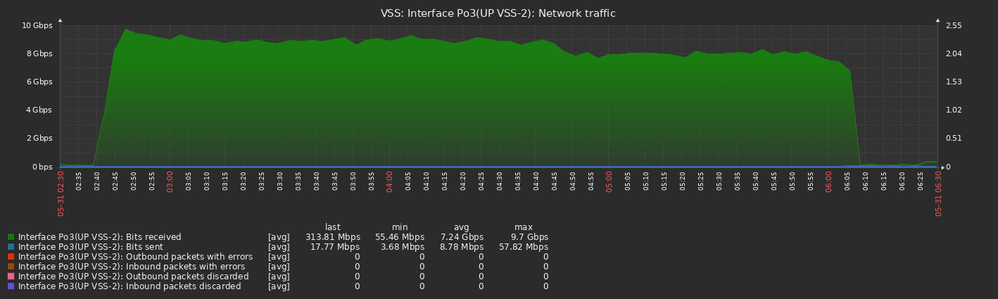

All graph times are UTC+3 and collected from the core switch (VSS2):

The flood propagated from the core switch to all other switches connected in our network. Here is the uplink to the other VSS 6880-XL switch:

Received traffic from core VSS2 on VSS1:

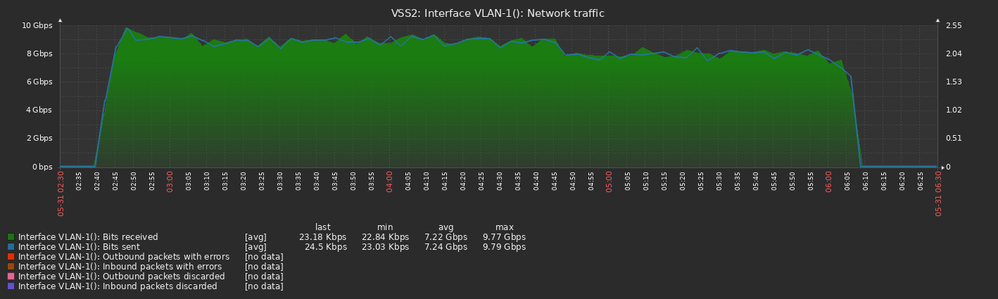

All of this traffic was on VLAN 1, which we don't use:

Here are the relevant configuration bits from the 2960X stack:

interface Port-channel1

switchport mode trunk

!

interface Te1/0/1, Te1/0/2, Te2/0/1, Te2/0/2

switchport mode trunk

channel-group 1 mode active

And the relevant configuration bits from the core switch:

interface Port-channel110

switchport

switchport mode trunk

!

interface Te1/2/13, Te1/2/14, Te2/2/13, Te2/2/14

switchport

switchport mode trunk

channel-group 110 mode active

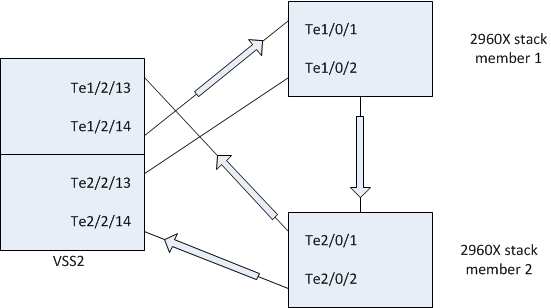

Please excuse the crudity of this design. I didn't have time to build it to scale or to paint it.

[EDIT]: The diagram is wrong. The arrows should be reversed. I will upload a new one in the comments.

As drawn, the traffic goes from VSS2 to stack member 1, through the stacking cables, and from stack member 2 back to the VSS on both uplinks. It also goes from VSS2 to all other switches in the network, only on VLAN 1.

Now for the questions: what kind of traffic was it? Could it be some LACP negotiation packets? Spanning tree? What other traffic goes between switches on the default VLAN 1? Was it a "split brain" situation with both stack members going master? Was the control plane on stack member 2 completely dead?

Thanks for your input.

Labels: Catalyst 2000, Catalyst 6000, LAN Switching

06-01-2022 03:04 AM

The arrows on the diagram should be the other way around. I will update the diagram

06-01-2022 03:05 AM

You can issue the show interface command to see what kind of traffic it was, for example whether it was broadcast or other storm traffic.

How is your spanning tree configured? Using the default VLAN 1 all over the network is bad design.

Allowing all VLANs on the port channels is not recommended; VLANs that do not exist or are not required on a trunk should be pruned.

You can also apply storm control on the port channel, which can shut down the ports if the traffic exceeds the threshold.

All the information you provided was useful, but we need to look at more information: the interface output at each level and the spanning tree information.

06-01-2022 03:10 AM - edited 06-01-2022 07:55 AM

VSS-2#sh int po110 counters etherchannel

Port InOctets InUcastPkts InMcastPkts InBcastPkts

Po110 108057163759917 144922439190 17210226 109188614

Te1/2/13 702057383158 3561883060 3115917 97703635

Te1/2/14 4580409008181 5224641257 254692 210715

Te2/2/13 24921884735903 33650068859 4640095 416347

Te2/2/14 77914159197137 102572407299 9237533 10914940

Port OutOctets OutUcastPkts OutMcastPkts OutBcastPkts

Po110 97149233532462 139047947510 271684624 111465541

Te1/2/13 46394711065877 61365151583 27476544 27579221

Te1/2/14 30233546112399 47517381775 26255888 35488944

Te2/2/13 16726984127394 21425677325 190887963 30658041

Te2/2/14 29937594139852 33524578884 29733024 19367775
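To help answer the question of what kind of traffic this was, here is a minimal Python sketch that turns the inbound packet counters above into unicast, multicast, and broadcast shares per member port. The figures are copied from the output; the `IN_COUNTERS` and `shares` names are only illustrative. These counters are cumulative since they were last cleared, so they do not isolate the incident itself; comparing two snapshots taken during a flood would be more telling.

```python
# Inbound rows copied from the counters above:
# port -> (InUcastPkts, InMcastPkts, InBcastPkts)
IN_COUNTERS = {
    "Te1/2/13": (3561883060, 3115917, 97703635),
    "Te1/2/14": (5224641257, 254692, 210715),
    "Te2/2/13": (33650068859, 4640095, 416347),
    "Te2/2/14": (102572407299, 9237533, 10914940),
}


def shares(ucast: int, mcast: int, bcast: int) -> dict:
    """Return each packet type as a fraction of all packets received."""
    total = ucast + mcast + bcast
    return {
        "unicast": ucast / total,
        "multicast": mcast / total,
        "broadcast": bcast / total,
    }


for port, counts in IN_COUNTERS.items():
    s = shares(*counts)
    print(
        f"{port}: unicast {s['unicast']:.3%}, "
        f"multicast {s['multicast']:.3%}, broadcast {s['broadcast']:.3%}"
    )

# Port with the largest inbound broadcast share.
top_bcast = max(IN_COUNTERS, key=lambda p: shares(*IN_COUNTERS[p])["broadcast"])
print(f"Highest inbound broadcast share: {top_bcast}")
```

On these cumulative numbers, inbound traffic is overwhelmingly unicast on every member port, and Te1/2/13 has the largest inbound broadcast share.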

06-01-2022 03:59 AM

That is expected behaviour. I would still like to see the other information I requested:

the interface output at each level and the spanning tree information.

06-01-2022 08:04 AM - edited 06-01-2022 08:10 AM

Hello!

What do you mean by "each level interface output"? I posted the counters a few posts up. If that is not the correct information, can you give me the show command, please?

Here is the spanning tree config on both switches:

VSS-2#show spanning-tree summary

Switch is in rapid-pvst mode

Root bridge for: VLAN0001, VLAN0010-VLAN0016, VLAN0020-VLAN0022, VLAN0024

VLAN0031-VLAN0033, VLAN0040, VLAN0050-VLAN0059, VLAN0100-VLAN0113

VLAN0115-VLAN0129, VLAN0132-VLAN0142, VLAN0146-VLAN0147, VLAN0200-VLAN0201

VLAN0218, VLAN0226, VLAN0230, VLAN0235, VLAN0355, VLAN0555-VLAN0556

VLAN0560-VLAN0561, VLAN0563, VLAN0666-VLAN0667, VLAN0900-VLAN0918, VLAN0950

VLAN0960-VLAN0961, VLAN0999, VLAN1042-VLAN1043, VLAN1555

EtherChannel misconfig guard is enabled

Extended system ID is enabled

Portfast Default is disabled

Portfast Edge BPDU Guard Default is disabled

Portfast Edge BPDU Filter Default is disabled

Loopguard Default is disabled

Platform PVST Simulation is enabled

PVST Simulation Default is enabled but inactive in rapid-pvst mode

Bridge Assurance is enabled

UplinkFast is disabled

BackboneFast is disabled

Pathcost method used is short

edge-2960#sh spanning-tree summary

Switch is in rapid-pvst mode

Root bridge for: none

EtherChannel misconfig guard is enabled

Extended system ID is enabled

Portfast Default is disabled

Portfast Edge BPDU Guard Default is disabled

Portfast Edge BPDU Filter Default is disabled

Loopguard Default is disabled

PVST Simulation Default is enabled but inactive in rapid-pvst mode

Bridge Assurance is enabled

UplinkFast is disabled

BackboneFast is disabled

Configured Pathcost method used is short

Here is the correct diagram:

06-01-2022 03:49 AM

Hello

When the two stack members are the master and standby switches and have MEC uplinks, a member failure not only takes down its uplinks; the control and distribution planes are also disrupted, albeit for a very short time if the stack had a full ring connection before the failure.

Are the stack ports stable? A flapping stack port can cause unnecessary outages not only to the stack; it will also cause STP TCNs to propagate throughout your domains.

How is your spanning tree designed, and what mode are you running?

Do you have any storm control applied to the edge/uplinks?

Please rate and mark as an accepted solution if you have found any of the information provided useful.

This then could assist others on these forums to find a valuable answer and broadens the community’s global network.

Kind Regards

Paul

06-01-2022 08:07 AM

Hello Paul!

I have posted the spanning tree configuration in another reply. We don't have any storm control configured.

As for the stack ports, they were not flapping. They stayed up until stack member 2 was powered off.

I have also corrected the diagram in my reply to BB.

06-01-2022 08:35 AM

Is it normal for *Dead* to be in second place in the memory listing?

edge-2960#show processes memory sorted

Processor Pool Total: 366118500 Used: 75802564 Free: 290315936

I/O Pool Total: 33554432 Used: 12683508 Free: 20870924

Driver te Pool Total: 1048576 Used: 40 Free: 1048536

PID TTY Allocated Freed Holding Getbufs Retbufs Process

0 0 168482724 91524332 72143428 0 0 *Init*

0 0 297453368 1163321928 3048172 12451527 949166 *Dead*

408 0 1253944 16600 1247512 0 0 EEM Server

104 0 4536032 2132048 1124600 436080 0 Stack Mgr Notifi

0 0 0 0 525968 0 0 *MallocLite*

447 0 209897920 208154152 426880 0 0 hulc running con

444 0 13391544 11178112 410352 477144 0 LACP Protocol

06-01-2022 10:24 AM

Hello

@andreis wrote:

The instant the stack got broken the stack 1 member started a 10 Gbps flood on one of the portchannel members

The core switch received the traffic on Te2/2/13 (connected to Te1/0/2 on the stack)

and looped back the traffic to the stack member 2 on Te1/2/13

(connected to Te2/0/1) and Te2/2/14 (connected to Te 2/0/2).

I assume that by "stack got broken" you mean the moment switch 2 dropped out? If so, the loop you mention should not have happened unless switch 2 was not actually down when you thought it was, in which case you incurred some physical or logical loop.

I don't see any mention of UDLD or loop guard being applied for spanning tree. You also don't seem to have PortFast enabled globally or at the edge level, so there is a good possibility that you incurred an STP convergence event or loop that caused the high utilization.

I suggest you apply PortFast on ALL edge ports, and UDLD and Loopguard as well. You could even go a bit further and add some port security and storm control.

Kind Regards

Paul

06-03-2022 02:20 AM

Hello!

Yes, by broken I mean that the serial console on member 2 was hung and that running show switch on member 1 reported switch 2 as removed.

However, the activity LEDs on Te2/0/1 and Te2/0/2 were blinking like crazy. We don't have UDLD configured. We use twinax cables for the uplinks (SFP-H10GB-CU3M).

I'm more interested in the failure mode. We have already put some mitigation strategies in place.

Thanks.

04-13-2023 12:55 AM

Hi Paul,

I'm interested in your input on the potential behaviour of a half-ring stack topology should one module or cable fail (half ring, as in only two modules and one cable for two switches, one module per switch).

Would both switches continue to operate in the above topology, each connecting to a different VSS member? I ask because the hostname and the interface set would still be shared.
