- Re: %ID_MANAGER-3-INVALID_ID: Switch 1 R0/0: sessmgrd: bad id in id_ge

05-23-2022 11:14 AM

Has anyone come across this message before? We have a stack of 9300s running 16.9.4. The message started appearing every few minutes, and since then MAB has stopped working on sporadic ports. If we strip MAB off the interface, the port works. This stack has been up for over two years with no MAB issues until we started seeing these messages. Any suggestions?

May 23 13:12:07.104 edt: %ID_MANAGER-3-INVALID_ID: Switch 1 R0/0: sessmgrd: bad id in id_get (Out of IDs!) (id: 0x00000000) -Traceback= 1#e8963c1f27a9cfd7dabf2c1379086815 errmsg:7FE097E2C000+17D4 id_manager:7FE07F08F000+1C75 bi_baaa_core:7FE090966000+58507 bi_baaa_core:7FE090966000+5895B bi_baaa_core:7FE090966000+53185 bi_rcl_server:7FE0928B7000+2FC51 rcl_event_ipc:7FE08BD27000+539BF rcl_event_ipc:7FE08BD27000+A37F2 rcl_event_ipc:7FE08BD27000+A35E0 bi_rcl_server:7FE0928B7000+2C94B bi_rcl_server:7FE0928B7000+2D1A8 evlib:7FE081BE3000+8942 evlib:7FE081BE30
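To gauge how often each message fires, the `%FACILITY-SEVERITY-MNEMONIC` token can be tallied from a saved log. This is a minimal Python sketch, assuming the log has been exported as plain text. The function name `count_messages` is illustrative, and the sample lines are shortened copies of messages quoted in this thread (tracebacks trimmed).

```python
import re
from collections import Counter

# Matches the %FACILITY-SEVERITY-MNEMONIC token found in IOS XE syslog lines.
MSG_RE = re.compile(
    r"%(?P<facility>[A-Z0-9_]+)-(?P<severity>[0-7])-(?P<mnemonic>[A-Z0-9_]+):"
)


def count_messages(lines):
    """Return a Counter keyed by (facility, severity, mnemonic)."""
    counts = Counter()
    for line in lines:
        match = MSG_RE.search(line)
        if match:
            key = (match["facility"], int(match["severity"]), match["mnemonic"])
            counts[key] += 1
    return counts


# Shortened sample lines (assumption: real logs carry the full traceback).
sample = [
    "May 23 13:12:07.104 edt: %ID_MANAGER-3-INVALID_ID: Switch 1 R0/0: "
    "sessmgrd: bad id in id_get (Out of IDs!) (id: 0x00000000)",
    "May 23 08:14:19 edt: %ID_MANAGER-3-INVALID_ID: Switch 1 R0/0: "
    "sessmgrd: bad id in id_get (Out of IDs!) (id: 0x00000000)",
    "May 23 08:14:19 edt: %SESSION_MGR-5-FAIL: Switch 1 R0/0: sessmgrd: "
    "Authorization failed or unapplied for client",
]

counts = count_messages(sample)
for (facility, severity, mnemonic), n in counts.most_common():
    print(f"{facility}-{severity}-{mnemonic}: {n}")
```

Lower severity numbers are more serious, so sorting by the severity field is a quick way to surface the error-level entries first.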

Labels: Catalyst 9000

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-23-2022 11:37 AM

Traceback messages are usually signs of software issues/bugs and possibly a memory leak issue. So, if you reload the switch, it may fix the problem temporarily, but at some point, you would have to upgrade to a different version to remedy the issue.

HTH

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-23-2022 06:07 PM

I recommend upgrading to the latest 16.9.X.

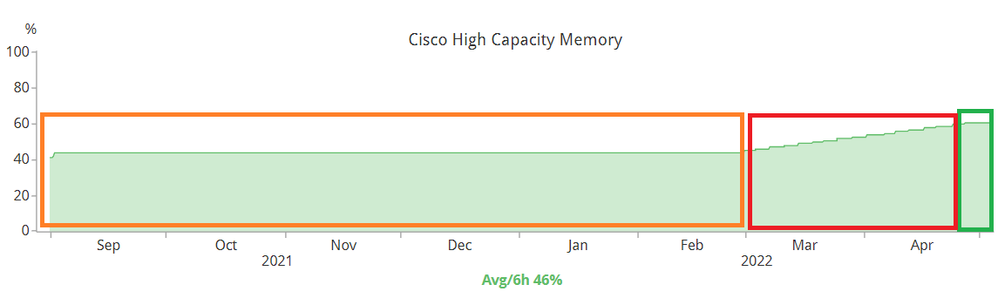

If left unattended, there will be a memory leak on the "keyman" process and it would look like this:

- The Orange block is the "normal" memory utilization.

- The RED block is when "keyman" process starts to leak.

- The GREEN block is when I applied the workaround. It will take several days for AFTER the workaround is applied for the memory utilization to stabilize.

@Steve_81 wrote:

Upgraded 80 switches, 6 of them bricked. Wouldnt even get to POST. Cisco BU is investigating.

How were the stack upgraded? Was it done using DNAC?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

06-09-2022 06:27 AM

For anyone else that runs into this similar issue, reload is a temporary fix. Upgrading code seems to have fixed the issue.

Thank you, Leo and Reza.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

05-23-2022 11:37 AM

Traceback messages usually point to a software bug, possibly a memory leak. Reloading the switch may fix the problem temporarily, but at some point you will have to upgrade to a different version to remedy it.

HTH

05-23-2022 04:06 PM

Post the complete output of the following commands:

- sh platform resource

- sh platform software status control-processor brief

- sh log

05-23-2022 04:29 PM

The sh log output only shows the initial error I posted and some MAB failures on specific ports/MACs. See below for the other outputs:

    ESA1-U-X60-1083#sh platform software status control-processor brief
    Load Average
     Slot  Status  1-Min  5-Min 15-Min
    1-RP0 Healthy   0.20   0.13   0.10
    2-RP0 Healthy   0.52   0.42   0.39
    3-RP0 Healthy   0.35   0.40   0.37
    4-RP0 Healthy   0.25   0.31   0.34

    Memory (kB)
     Slot  Status    Total     Used (Pct)     Free (Pct) Committed (Pct)
    1-RP0 Healthy  7711568  4322916 (56%)  3388652 (44%)   5501284 (71%)
    2-RP0 Healthy  7711568  2519484 (33%)  5192084 (67%)   3178332 (41%)
    3-RP0 Healthy  7711568  1759764 (23%)  5951804 (77%)   1328456 (17%)
    4-RP0 Healthy  7711568  1766020 (23%)  5945548 (77%)   1298748 (17%)

    CPU Utilization
     Slot  CPU   User System   Nice   Idle    IRQ   SIRQ IOwait
    1-RP0    0   1.39   0.39   0.00  98.20   0.00   0.00   0.00
             1   1.49   0.39   0.00  98.10   0.00   0.00   0.00
             2   1.60   0.50   0.00  97.90   0.00   0.00   0.00
             3   1.69   0.39   0.00  97.80   0.00   0.09   0.00
             4   0.90   0.30   0.00  98.80   0.00   0.00   0.00
             5   0.30   0.10   0.00  99.60   0.00   0.00   0.00
             6   0.79   0.29   0.00  98.90   0.00   0.00   0.00
             7   0.40   0.10   0.00  99.50   0.00   0.00   0.00
    2-RP0    0  36.10   0.00   0.00  63.90   0.00   0.00   0.00
             1   1.20   0.40   0.00  98.40   0.00   0.00   0.00
             2   1.00   0.20   0.00  98.80   0.00   0.00   0.00
             3   1.50   0.30   0.00  98.20   0.00   0.00   0.00
             4   0.10   0.00   0.00  99.90   0.00   0.00   0.00
             5   0.00   0.00   0.00 100.00   0.00   0.00   0.00
             6   0.29   0.19   0.00  99.50   0.00   0.00   0.00
             7   0.09   0.00   0.00  99.90   0.00   0.00   0.00
    3-RP0    0   8.10   0.30   0.00  91.60   0.00   0.00   0.00
             1   3.90   0.40   0.00  95.70   0.00   0.00   0.00
             2   1.09   0.69   0.00  98.20   0.00   0.00   0.00
             3  23.00   0.10   0.00  76.90   0.00   0.00   0.00
             4   0.30   0.30   0.00  99.39   0.00   0.00   0.00
             5   0.00   0.00   0.00 100.00   0.00   0.00   0.00
             6   0.00   0.00   0.00 100.00   0.00   0.00   0.00
             7   0.10   0.00   0.00  99.90   0.00   0.00   0.00
    4-RP0    0  11.68   0.19   0.00  88.11   0.00   0.00   0.00
             1   1.50   0.00   0.00  98.50   0.00   0.00   0.00
             2   4.89   0.09   0.00  95.00   0.00   0.00   0.00
             3  12.51   0.10   0.00  87.38   0.00   0.00   0.00
             4   0.00   0.00   0.00 100.00   0.00   0.00   0.00
             5   0.00   0.00   0.00 100.00   0.00   0.00   0.00
             6   1.20   0.10   0.00  98.69   0.00   0.00   0.00
             7   0.00   0.00   0.00 100.00   0.00   0.00   0.00
    ESA1-U-X60-1083#
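The memory table above is easier to compare across stack members once it is parsed. Here is a rough Python sketch that reads only the "Memory (kB)" rows and reports which slot has the highest committed percentage. The regular expression and the `parse_memory` helper are assumptions written for the output format pasted in this thread, not a Cisco-provided parser.

```python
import re

# One memory row: slot, status, total, then used/free/committed with percentages.
ROW_RE = re.compile(
    r"^[ \t]*(?P<slot>\d+-RP\d+)\s+(?P<status>\S+)\s+(?P<total>\d+)\s+"
    r"(?P<used>\d+)\s+\((?P<used_pct>\d+)%\)\s+"
    r"(?P<free>\d+)\s+\((?P<free_pct>\d+)%\)\s+"
    r"(?P<committed>\d+)\s+\((?P<committed_pct>\d+)%\)[ \t]*$",
    re.MULTILINE,
)


def parse_memory(text):
    """Return one dict per memory row; numeric fields are converted to int."""
    rows = []
    for match in ROW_RE.finditer(text):
        row = {
            key: (value if key in ("slot", "status") else int(value))
            for key, value in match.groupdict().items()
        }
        rows.append(row)
    return rows


# Memory section as posted in this thread.
memory_section = """\
Slot Status Total Used (Pct) Free (Pct) Committed (Pct)
1-RP0 Healthy 7711568 4322916 (56%) 3388652 (44%) 5501284 (71%)
2-RP0 Healthy 7711568 2519484 (33%) 5192084 (67%) 3178332 (41%)
3-RP0 Healthy 7711568 1759764 (23%) 5951804 (77%) 1328456 (17%)
4-RP0 Healthy 7711568 1766020 (23%) 5945548 (77%) 1298748 (17%)
"""

rows = parse_memory(memory_section)
busiest = max(rows, key=lambda r: r["committed_pct"])
print(busiest["slot"], busiest["committed_pct"])  # prints: 1-RP0 71
```

In this capture, switch 1 stands out from the other members on both used and committed memory, although every slot still reports Healthy.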

05-23-2022 04:31 PM

Forgot the show platform resources:

    ESA1-U-X60-1083#sh platform resources
    **State Acronym: H - Healthy, W - Warning, C - Critical
    Resource              Usage          Max       Warning   Critical   State
    ----------------------------------------------------------------------------------------------------
    Control Processor     2.00%          100%      5%        10%        H
    DRAM                  4221MB(56%)    7530MB    90%       95%        H
    ESA1-U-X60-1083#

05-23-2022 05:04 PM

@Steve_81 wrote:

some MAB failures on specific ports/MACs

I want to see those errors (change the last 6 characters of the MAC addresses but leave the OUI alone). I want to determine whether a high frequency of failing MAB is causing the stack to "not catch up" and generating this traceback.

The output from the two commands shows nothing unusual.

Wait ... 16.9.4. What is the uptime of this stack?

Do the logs contain anything about Cisco Smart License/CSSM/CSLU?
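Masking the MAC addresses as requested above (keep the OUI, hide the last 6 hex characters) is easy to script before pasting logs. The following is a small Python sketch, assuming the MACs appear in Cisco dotted notation (`xxxx.xxxx.xxxx`). The `mask_macs` name and the example address are made up for illustration.

```python
import re

# Cisco dotted MAC: 4 hex digits, dot, 4 hex digits, dot, 4 hex digits.
# The OUI is the first 6 hex digits, i.e. group 1 plus the first half of the middle block.
MAC_RE = re.compile(
    r"\b([0-9a-fA-F]{4})\.([0-9a-fA-F]{2})[0-9a-fA-F]{2}\.[0-9a-fA-F]{4}\b"
)


def mask_macs(text):
    """Replace the last 6 hex digits of every dotted MAC with 'x', keeping the OUI."""
    return MAC_RE.sub(r"\g<1>.\g<2>xx.xxxx", text)


# Hypothetical log fragment: the OUI comes from the thread, the rest is invented.
line = (
    "Authorization failed or unapplied for client (e454.e812.3456) "
    "on Interface TwoGigabitEthernet2/0/28"
)
masked = mask_macs(line)
print(masked)
```

IP addresses and timestamps are left alone because they do not match the three-blocks-of-four-hex-digits pattern.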

05-23-2022 05:30 PM

Switch uptime was just over 2 years, and yes, it is 16.9.4. I want to upgrade the IOS, but we recently had some bad experiences with 9300s running that same code. Upgraded 80 switches, 6 of them bricked. Wouldn't even get to POST. Cisco BU is investigating.

Just rebooted the stack; so far so good. Won't really know until tomorrow morning, but wanted a temp fix until we can get an upgrade time.

Below are some more MAB failures as well as the message in between in case there is some correlation. The VLAN failure message wasn't as common as the other auth failure. There were some Smart License messages, but it was all success messages. Let me know if you need more.

MAB Failure examples:

-notice local7 May 23 08:11:17 10.4.22.194 edt: %SESSION_MGR-5-FAIL: Switch 1 R0/0: sessmgrd: Authorization failed or unapplied for client (e454.e8xxxxxxx) on Interface TwoGigabitEthernet2/0/28 AuditSessionID C216040A0001B38EF0D42855. Failure Reason: VLAN Failure. Failed attribute name VLANNAME.

-error local7 May 23 08:14:19 10.4.22.194 edt: %ID_MANAGER-3-INVALID_ID: Switch 1 R0/0: sessmgrd: bad id in id_get (Out of IDs!) (id: 0x00000000) -Traceback= 1#e8963c1f27a9cfd7dabf2c1379086815 errmsg:7FE097E2C000+17D4 id_manager:7FE07F08F000+1C75 bi_baaa_core:7FE090966000+58507 bi_baaa_core:7FE090966000+58381 bi_baaa_core:7FE090966000+5BF0F bi_baaa_core:7FE090966000+51F91 bi_session_mgr:7FE095A17000+B6B36 bi_session_mgr:7FE095A17000+6D7AB bi_sadb:7FE092480000+729D bi_sadb:7FE092480000+764B bi_session_mgr:7FE095A17000+6EBD8 bi_session_mgr:7FE095A17000+57542 bi_session_mgr:7FE

-notice local7 May 23 08:14:19 10.4.22.194 edt: %SESSION_MGR-5-FAIL: Switch 1 R0/0: sessmgrd: Authorization failed or unapplied for client (f439.09xxxxxxxx) on Interface TenGigabitEthernet3/0/44 AuditSessionID C216040A0001B38FF0D6EC3E.
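To check whether the MAB failures cluster on particular ports or failure reasons, the `%SESSION_MGR-5-FAIL` lines can be broken into fields. This Python sketch is written against the two failure lines quoted above. The regular expression and the `parse_failures` helper are assumptions based on that wording and may need adjusting for other message variants.

```python
import re
from collections import Counter

# Fields as they appear in the SESSION_MGR-5-FAIL lines quoted in this thread.
FAIL_RE = re.compile(
    r"%SESSION_MGR-5-FAIL:.*?client \((?P<client>[^)]+)\) "
    r"on Interface (?P<interface>\S+) "
    r"AuditSessionID (?P<session>\w+)\."
    r"(?: Failure Reason: (?P<reason>[^.]+)\.)?"
)


def parse_failures(lines):
    """Return a dict of client, interface, session and reason for each failure line."""
    return [m.groupdict() for m in map(FAIL_RE.search, lines) if m]


log_lines = [
    "%SESSION_MGR-5-FAIL: Switch 1 R0/0: sessmgrd: Authorization failed or "
    "unapplied for client (e454.e8xxxxxxx) on Interface TwoGigabitEthernet2/0/28 "
    "AuditSessionID C216040A0001B38EF0D42855. Failure Reason: VLAN Failure. "
    "Failed attribute name VLANNAME.",
    "%SESSION_MGR-5-FAIL: Switch 1 R0/0: sessmgrd: Authorization failed or "
    "unapplied for client (f439.09xxxxxxxx) on Interface TenGigabitEthernet3/0/44 "
    "AuditSessionID C216040A0001B38FF0D6EC3E.",
]

failures = parse_failures(log_lines)
# Lines without an explicit "Failure Reason" are grouped as "unspecified".
by_reason = Counter(f["reason"] or "unspecified" for f in failures)
by_interface = Counter(f["interface"] for f in failures)
print(by_reason)
print(by_interface)
```

Comparing the timestamps of the "unspecified" failures with the `ID_MANAGER-3-INVALID_ID` entries would be one way to test the correlation the poster suspects.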

Smart License (received more of the same message after reload):

info local7 May 19 10:45:45 10.4.22.194 edt: %SMART_LIC-6-AUTH_RENEW_SUCCESS: Authorization renewal successful. State=authorized for udi PID:C9300-48U,SN:xxxxxxxx

05-23-2022 06:07 PM

I recommend upgrading to the latest 16.9.X.

If left unattended, there will be a memory leak on the "keyman" process and it would look like this:

- The Orange block is the "normal" memory utilization.

- The RED block is when "keyman" process starts to leak.

- The GREEN block is when I applied the workaround. It will take several days for AFTER the workaround is applied for the memory utilization to stabilize.

@Steve_81 wrote:

Upgraded 80 switches, 6 of them bricked. Wouldnt even get to POST. Cisco BU is investigating.

How were the stack upgraded? Was it done using DNAC?

05-23-2022 06:24 PM

The stacks that had issues were upgraded using the 'request platform software package install' commands and SecureCRT to copy the bin to the switches. Initially Cisco thought the request platform commands could have played a role. We have been using the 'install add file file:filename activate commit' since and haven't had any issues, but have only upgraded a few stacks.

Cisco BU was torn between IOS and hardware, but seems to be leaning toward a possible hardware issue. They had us look for specific memory leak errors related to the bricked switches, and we are only seeing them in the one location where we had problems. So possibly a bad batch of 9300s. At least, that's what we're hoping.

This was the engineer's latest response, in case you were curious:

"The development team has created a bug ID to track this issue. The Bug ID is CSCwb57624. It is public-facing so you can view it. The root cause of the BOOT failure you experienced was errors in the physical memory.

CMRP-3-DDR_SINGLE_BIT_ERROR: Switch 1 R0/0: cmand: Single-bit DRAM ECC error: mme: 0,"

05-23-2022 06:29 PM

I should also note, the problem switches were upgraded to 17.3.4.

05-23-2022 06:31 PM

@Steve_81 wrote:

"The development team has created a bug ID to track this issue. The Bug ID is CSCwb57624. It is public-facing so you can view it. The root cause of the BOOT failure you experienced was errors in the physical memory.

CMRP-3-DDR_SINGLE_BIT_ERROR: Switch 1 R0/0: cmand: Single-bit DRAM ECC error: mme: 0,"

That Bug ID is now "private".

@Steve_81 wrote:

The stacks that had issues were upgraded using the 'request platform software package install' commands and SecureCRT to copy the bin to the switches. Initially Cisco thought the request platform commands could have played a role. We have been using the 'install add file file:filename activate commit' since and haven't had any issues, but have only upgraded a few stacks.

No, it is not.

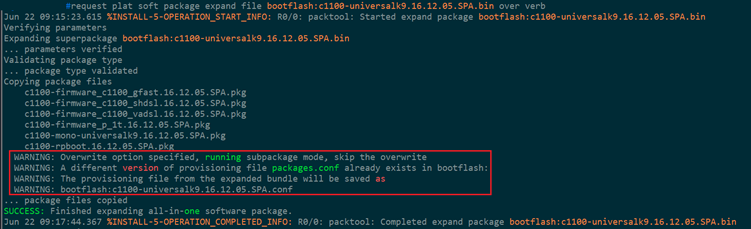

I always use (and recommend) the command "request platform software package install switch all file flash:filename on-reboot new auto-copy verbose" because it lets me reboot the stack at a later date and verify that I am not hitting the bug where "package.conf" is not created (see below).

05-23-2022 06:39 PM

I didn't think so either. And I'm with you, I prefer request platform as well, just for that reason. I'll be sure to respond to this once I have the stack upgraded, in case anyone else has anything similar. In short, a reload of the stack seems to have fixed the issue for now, but an IOS upgrade is needed ASAP.

And I thought you'd get a kick out of the public-facing bug ID.

06-09-2022 06:27 AM

For anyone else who runs into a similar issue: a reload is a temporary fix. Upgrading code seems to have fixed the issue.

Thank you, Leo and Reza.

10-18-2022 08:14 AM - edited 10-18-2022 08:18 AM

Ran into the same issue with a C9300 running 16.9.4 and ended up resolving it by upgrading to 17.4.1. The interesting part is that we have had hundreds of C9300s running the same code for years and have not seen this problem before, at least not at any recognizable frequency. The stack in question had also only been up for 34 weeks.

The second part of the problem you had sounds like you may have used deprecated commands: "request platform"

To avoid install issues, use the following guide:

Upgrade Guide for Cisco IOS XE Catalyst 9000 Switches - Cisco

Or, TL;DR:

software auto-upgrade enable

install add file bootflash:<new .bin file> activate commit

10-18-2022 12:45 PM

There are two commands to upgrade the firmware of a 9300 and a vanilla 9500, and they are:

- request platform software package install switch all file flash:filename on-reboot new auto-copy verbose

- install add file bootflash:filename activate commit

The first one has the "on-reboot" option. This is actually a bug itself because it is a typo (CSCve94966). If I use this option, I can reboot the stack at a future time.

The second command performs a one-hit-wonder upgrade. There is no "official" method to reboot the stack at a later date. Once the "y" is entered, the stack will reboot.
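For anyone scripting a change plan, the two methods above can be captured as a small lookup that fills in the image name and records whether the reload can be deferred. This is only a string-building sketch in Python and does not connect to any device. The `upgrade_command` helper, the method keys, and the `image.bin` filename are hypothetical, while the command text itself is copied from this post.

```python
# Command templates copied from the post above; {filename} is the only placeholder.
UPGRADE_METHODS = {
    "request-platform": {
        "template": (
            "request platform software package install switch all "
            "file flash:{filename} on-reboot new auto-copy verbose"
        ),
        # Per the post: "on-reboot" lets the stack be reloaded at a later time.
        "deferred_reload": True,
    },
    "install": {
        "template": "install add file bootflash:{filename} activate commit",
        # Per the post: the stack reboots once "y" is entered.
        "deferred_reload": False,
    },
}


def upgrade_command(method, filename):
    """Return (command_string, deferred_reload) for the chosen method."""
    try:
        entry = UPGRADE_METHODS[method]
    except KeyError:
        raise ValueError(f"unknown upgrade method: {method!r}") from None
    return entry["template"].format(filename=filename), entry["deferred_reload"]


# "image.bin" is a placeholder filename, not a real image name.
for method in UPGRADE_METHODS:
    command, deferred = upgrade_command(method, "image.bin")
    print(f"{method}: deferred reload = {deferred}")
    print(f"  {command}")
```

Keeping the reload behavior next to the command text makes it harder to schedule the one-shot method outside a maintenance window by mistake.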
