Cisco Community > Technology and Support > Networking > Switching

Nexus 5000 switch and jumbo frames

03-24-2019 04:39 PM

Hello all, I'll start off with some details:

Cisco Nexus 5010 Chassis ("20x10GE/Supervisor")

System: version 5.2(1)N1(9a)

A Nimble storage array is connected to Eth1/17 and will carry iSCSI traffic. The interface is in VLAN 80, which has its own Layer 3 VLAN interface (SVI) with IP address 192.168.80.250.

I want to use jumbo frames, so I've set the MTU on the Nimble interface to 9000. On the switch I entered the global configuration to enable jumbo frames:

```
policy-map type network-qos jumbo
  class type network-qos class-default
    mtu 9216
    exit
  exit
system qos
  service-policy type network-qos jumbo
```

I then set the MTU on the VLAN 80 SVI:

```
interface vlan80
  mtu 9216
```

However, when I then ping the Nimble iSCSI interface (IP address 192.168.80.248) with a packet size of 9000, every request times out:

```
ping 192.168.80.248 packet-size 9000 c 10
PING 192.168.80.248 (192.168.80.248): 9000 data bytes
Request 0 timed out
Request 1 timed out
Request 2 timed out
Request 3 timed out
Request 4 timed out
Request 5 timed out
Request 6 timed out
Request 7 timed out
Request 8 timed out
Request 9 timed out
```
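A rough size check may help explain the timeouts. This is a sketch under stated assumptions: a 20-byte IPv4 header with no options, an 8-byte ICMP echo header, and `packet-size` counting only the ICMP data bytes (the output reports `9000 data bytes`). The helper names `ip_packet_size` and `max_ping_data` are hypothetical. Under those assumptions, a 9000-byte ping becomes a 9028-byte IP packet, which is larger than the 9000 MTU set on the Nimble interface; with the SVI at 9216 the switch has no reason to fragment it, so the array may simply be dropping it.

```python
# Sketch: IPv4/ICMP size arithmetic for a ping.
# Assumptions: 20-byte IPv4 header (no IP options), 8-byte ICMP echo header,
# and the ping "packet-size" counting only the ICMP data bytes.
IPV4_HEADER = 20  # bytes, assumed: no IP options
ICMP_HEADER = 8   # bytes, ICMP echo request/reply header


def ip_packet_size(data_bytes: int) -> int:
    """Total IPv4 packet length for a ping carrying `data_bytes` of payload."""
    return data_bytes + ICMP_HEADER + IPV4_HEADER


def max_ping_data(mtu: int) -> int:
    """Largest ping payload that fits in one unfragmented packet at `mtu`."""
    return mtu - IPV4_HEADER - ICMP_HEADER


if __name__ == "__main__":
    nimble_mtu = 9000  # MTU set on the Nimble interface in this thread
    size = ip_packet_size(9000)
    print(f"ping packet-size 9000 -> {size}-byte IP packet")
    print(f"fits in MTU {nimble_mtu}? {size <= nimble_mtu}")
    print(f"largest unfragmented payload at MTU {nimble_mtu}: {max_ping_data(nimble_mtu)}")
```

If this arithmetic is right, `packet-size 8972` is the largest ping that should cross a 9000-byte MTU path without fragmentation, which would make it a more direct test of the jumbo path than `packet-size 9000`.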

But when I change the SVI MTU back to 1500, the 9000-byte ping succeeds, although with a higher ping count I do see some packet loss:

```
TEST-5K-01(config-if)# ping 192.168.80.248 packet-size 9000 c 100
PING 192.168.80.248 (192.168.80.248): 9000 data bytes
9008 bytes from 192.168.80.248: icmp_seq=0 ttl=63 time=1.555 ms
9008 bytes from 192.168.80.248: icmp_seq=1 ttl=63 time=1.281 ms
9008 bytes from 192.168.80.248: icmp_seq=2 ttl=63 time=1.233 ms
9008 bytes from 192.168.80.248: icmp_seq=3 ttl=63 time=1.285 ms
...remaining pings omitted
--- 192.168.80.248 ping statistics ---
100 packets transmitted, 96 packets received, 4.00% packet loss
round-trip min/avg/max = 1.217/1.825/2.264 ms
```
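One possible reading of this result is that, with the SVI at 1500, each 9000-byte ping is fragmented before it leaves the switch. The replies show 9008 bytes (9000 data bytes plus the 8-byte ICMP header), so the sketch below splits 9008 bytes of IP payload across a 1500-byte MTU, assuming a 20-byte IPv4 header and the rule that every non-final fragment carries a multiple of 8 data bytes. It then shows how loss compounds if, purely as a simplifying assumption, each fragment is lost independently with the same probability, since losing any one fragment loses the whole echo. The 0.5% per-fragment figure is hypothetical, not something measured in this thread, and the helper names are illustrative.

```python
IPV4_HEADER = 20  # bytes, assumed: no IP options


def fragment_sizes(ip_payload: int, mtu: int) -> list[int]:
    """Total length of each IPv4 fragment when `ip_payload` bytes must cross `mtu`."""
    max_data = mtu - IPV4_HEADER
    # Non-final fragments must carry a multiple of 8 data bytes.
    per_fragment = max_data // 8 * 8
    sizes = []
    remaining = ip_payload
    while remaining > max_data:
        sizes.append(per_fragment + IPV4_HEADER)
        remaining -= per_fragment
    sizes.append(remaining + IPV4_HEADER)  # final (or only) fragment
    return sizes


def echo_loss(per_fragment_loss: float, fragments: int) -> float:
    """Chance an echo is lost if any one fragment is lost (independent loss assumed)."""
    return 1 - (1 - per_fragment_loss) ** fragments


if __name__ == "__main__":
    icmp_bytes = 9008  # 9000 data bytes + 8-byte ICMP header, per the ping replies
    frags = fragment_sizes(icmp_bytes, 1500)
    print(len(frags), frags)
    # Hypothetical 0.5% loss per fragment, for illustration only.
    print(f"per-echo loss: {echo_loss(0.005, len(frags)):.2%}")
```

Under these assumptions each echo needs seven fragments (six of 1500 bytes and one of 148), so a small per-fragment drop rate turns into a noticeably larger per-ping loss rate.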

`show queuing interface ethernet 1/17` shows that MTU 9216 is set.

I've tried different MTU values, and anything above 4000 starts to see packet loss.

Does anybody know why it's behaving like this? Is it a switch misconfiguration, or does the problem lie on the Nimble side?

Thanks.

03-24-2019 05:19 PM

Hi @Kernel-Panic,

According to this guide, this configuration is only for the Nexus 7000:

```
interface vlan80
  mtu 9216
```

Try removing those parameters.

Regards

03-24-2019 06:19 PM

Hi,

On the 5Ks and 6Ks, jumbo frame configuration is global (it applies to the whole switch), so the jumbo frame MTU command does not apply to an SVI.

HTH

03-25-2019 03:28 AM

Thanks both. I removed the MTU configuration from the SVI, and it is now back to the default of 1500:

```
show int vlan80
Vlan80 is up, line protocol is up
  Hardware is EtherSVI, address is 547f.ee68.56bc
  Internet Address is 192.168.80.250/24
  MTU 1500 bytes, BW 1000000 Kbit, DLY 10 usec
```

However, I'm still getting 4% packet loss when pinging the Nimble storage with a packet size of 9000.

03-25-2019 03:39 AM

```
policy-map type network-qos jumbo
  class type network-qos class-default
    mtu 9216
    multicast-optimize
system qos
  service-policy type network-qos jumbo
```

03-25-2019 05:03 AM

Thanks Mark. Unfortunately that didn't work; I'm still getting 4-5% packet loss with an MTU of 9000.

03-25-2019 05:13 AM

03-25-2019 06:06 AM

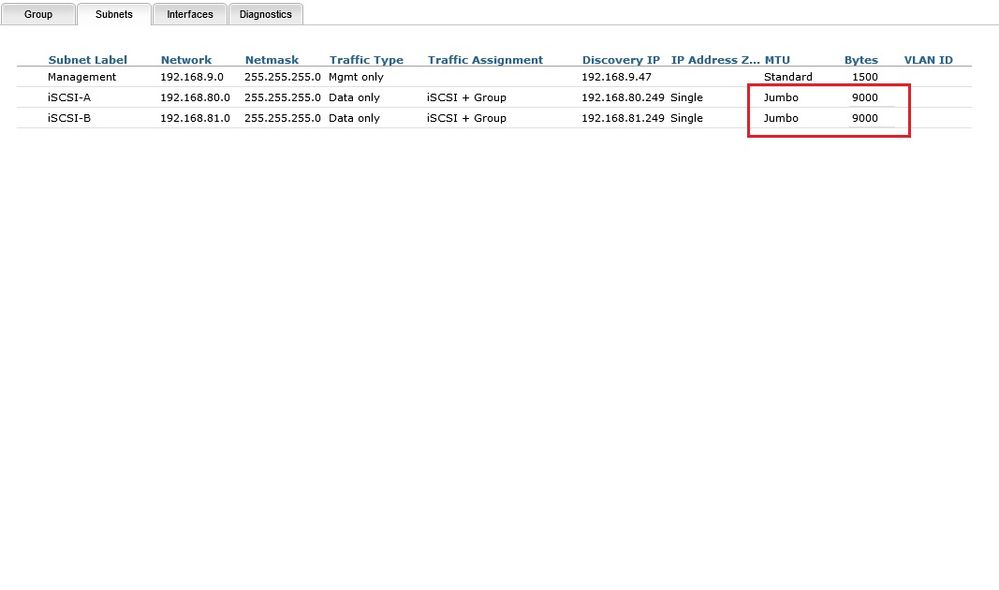

Hello again, yes its set on the storage side:

03-25-2019 06:40 AM

We also run jumbo frames on our Nexus 5548UP and don't have any issues.

03-25-2019 06:50 AM

I suppose it's possible, but when I ping the iSCSI-B address (which uses a different switch and cable), I get the same result.

03-25-2019 07:47 AM

Does the iSCSI interface show any statistics on the storage side?

03-25-2019 08:04 AM

The error counters on the switch interface aren't increasing. It does behave rather oddly, though: I've just done a ping with a 9000 packet size and, as expected, it suffered a small percentage of packet loss. I then ran the same ping again and it failed completely, with every request timing out (100% packet loss).

Next I ran a normal ping without specifying a packet size, which worked as expected with no packet loss. After that I was able to ping with a 9000 packet size again, but once more with a small percentage of packets lost.
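Because the results vary from run to run, it may be easier to compare runs by pulling the loss figures out of saved ping output rather than reading them by eye. A minimal sketch, assuming the summary line has exactly the format shown in the original post (`100 packets transmitted, 96 packets received, 4.00% packet loss`); other NX-OS releases may word it differently, and `parse_ping_summary` is a hypothetical helper, not part of any Cisco tooling.

```python
import re
from typing import NamedTuple, Optional

# Matches the summary line format seen in this thread (assumed stable), e.g.
# "100 packets transmitted, 96 packets received, 4.00% packet loss"
SUMMARY_RE = re.compile(
    r"(\d+) packets transmitted, (\d+) packets received, ([\d.]+)% packet loss"
)


class PingSummary(NamedTuple):
    sent: int
    received: int
    loss_pct: float


def parse_ping_summary(output: str) -> Optional[PingSummary]:
    """Return the counts from the first summary line in `output`, or None if absent."""
    match = SUMMARY_RE.search(output)
    if match is None:
        return None
    sent, received, loss = match.groups()
    return PingSummary(int(sent), int(received), float(loss))


if __name__ == "__main__":
    sample = ("--- 192.168.80.248 ping statistics --- 100 packets transmitted, "
              "96 packets received, 4.00% packet loss")
    print(parse_ping_summary(sample))
```

The helper returns `None` when no summary line is present, so a run whose summary was not captured is not mistaken for a clean one.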

I get the feeling the problem is the Nimble interface.
