- Re: QoS default-class not using leftover bandwidth C9500

06-28-2019 02:07 AM

Hello

I am wondering if someone can check my policy configuration to see if there is any issue.

We are seeing output drops on all the active TenGigabitEthernet interfaces of a C9500-16X. They connect to downstream switches over 1G interfaces, so the links negotiate at 1G. Utilization on these interfaces is no more than 20%, yet we see many packet drops.

The policy config is:

policy-map out-queue
 class Voice
  priority level 1
  police cir percent 30
   conform-action transmit
   exceed-action drop
 class Protocols
  bandwidth percent 5
 class PriorData
  bandwidth percent 25
 class stream
  bandwidth percent 10
 class lesspriority
  bandwidth percent 5
 class class-default
  bandwidth percent 25
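As a quick sanity check on the policy above, the following minimal Python sketch adds up the configured percentages and converts each one to kbps. It assumes the 1 Gb/s negotiated rate mentioned earlier; the dictionary and function names are illustrative only and are not part of any Cisco tooling.

```python
# Classes from the out-queue policy: name -> configured percent.
# Voice is a priority queue policed to 30%; the others are bandwidth guarantees.
POLICY_PERCENT = {
    "Voice": 30,
    "Protocols": 5,
    "PriorData": 25,
    "stream": 10,
    "lesspriority": 5,
    "class-default": 25,
}

LINK_KBPS = 1_000_000  # assumption: port negotiated at 1 Gb/s


def guaranteed_kbps(link_kbps: int, percent: int) -> int:
    """Convert a percentage of the link rate into kbps."""
    return link_kbps * percent // 100


total_percent = sum(POLICY_PERCENT.values())
rates = {name: guaranteed_kbps(LINK_KBPS, pct) for name, pct in POLICY_PERCENT.items()}

print(f"total allocated: {total_percent}%")
for name, kbps in rates.items():
    print(f"{name:>14}: {kbps} kbps")
```

The percentages add up to exactly 100, so the policy itself is not oversubscribed; each bandwidth value is a minimum guarantee for its class, not a cap.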

The problem is that many packets are dropped even though the load on the interface is no more than 8/255. As mentioned above, this is happening on several Catalyst C9500 switches. As far as I know, with the CoS configuration implemented (CBWFQ bandwidth plus LLQ), there shouldn't be any problem when class-default uses more bandwidth than its configured percentage, because that value is only the minimum bandwidth guarantee. So why is it not using the leftover bandwidth?

We suspected that the traffic might be bursty, so we changed the buffer allocation by configuring "qos queue-softmax-multiplier 1200", but that did not completely solve the issue.

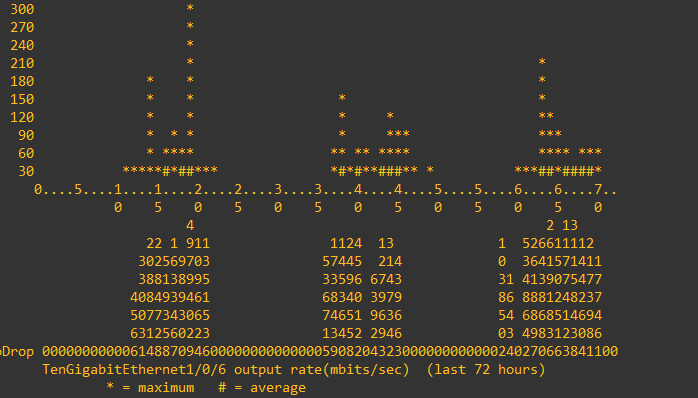

I enabled utilization and drop history on some of the interfaces, and these are the results (attached image). You can see that we are dropping packets when utilization is no more than 150 Mbit/s.

Thank you in advance.

Accepted Solutions

06-28-2019 06:42 AM - edited 07-01-2019 12:01 AM

Thank you, now I see. The total output drops indicate a problem with buffers.

The more queues you create, the less buffer memory is left per queue.

Here is a document, which will lead you to the solution.

Georg Pauwen gave good advice. Tweak the output buffer.
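The point about queues and buffer memory can be illustrated with a deliberately simplified model. The sketch below assumes a fixed per-port buffer pool that is split equally across queues; the real allocator on the C9500 is more involved (dedicated and shared buffers), and the pool size used here is a hypothetical number chosen only for the arithmetic.

```python
def per_queue_share(port_buffer_units: int, queue_count: int) -> int:
    """Equal split of a fixed buffer pool across queues (simplified model)."""
    if queue_count < 1:
        raise ValueError("queue_count must be at least 1")
    return port_buffer_units // queue_count


PORT_BUFFER_UNITS = 1200  # hypothetical pool size, not a real C9500 figure

six_queues = per_queue_share(PORT_BUFFER_UNITS, 6)  # six classes, as in out-queue
one_queue = per_queue_share(PORT_BUFFER_UNITS, 1)   # a single class-default queue

print(f"6 queues: {six_queues} units each")
print(f"1 queue:  {one_queue} units")
```

Under this model a burst landing in class-default has only a fraction of the pool available when six queues are defined, which is the intuition behind collapsing the policy to a single queue as a test.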

06-28-2019 03:28 AM

Hello,

In addition to increasing the softmax value, try applying the service policy below to the interfaces that have the drops. Here is an example:

qos queue-softmax-multiplier 1200
!
class-map match-any OUTPUT_CLASS
 match access-group name OUTPUT_ACL
!
policy-map OUTPUT_POLICY
 class class-default
  bandwidth percent 100
!
interface GigabitEthernet1/0/3
 service-policy output OUTPUT_POLICY
!
interface GigabitEthernet2/0/3
 service-policy output OUTPUT_POLICY
!
ip access-list extended OUTPUT_ACL
 permit ip any any

06-28-2019 05:54 AM

Hello Georg

But with this configuration I will have just one queue, and there will be no prioritization of the voice and video traffic, right?

Regards

06-28-2019 04:34 AM

Hello.

If the interfaces are no more than 20% utilized, then there should be no QoS drops on the line.

Post the output of show interface for a particular interface with drops.

Post the output of show policy-map <policy-name> for the policy map applied on that interface.

Post the output of show policy-map interface <interface> for the same interface with drops.

06-28-2019 06:22 AM

Hello

Yes. In theory, if I am not wrong, the default class should be able to use 70% of the bandwidth (100% minus the Voice class) if there is no traffic in the other classes. I am not sure why it is dropping when the utilization of the interface is so low.

#show policy-map out-queue
  Policy Map out-queue
    Class Voice
      priority level 1
      police cir percent 30
        conform-action transmit
        exceed-action drop
    Class Protocols
      bandwidth 5 (%)
    Class Data
      bandwidth 25 (%)
    Class stream
      bandwidth 10 (%)
    Class lesspriority
      bandwidth 5 (%)
    Class class-default
      bandwidth 25 (%)

#show policy-map interface TenGigabitEthernet1/0/2 | i drops|band
      (total drops) 0
      (total drops) 0
      bandwidth 5% (50000 kbps)
      (total drops) 0
      bandwidth 25% (250000 kbps)
      (total drops) 0
      bandwidth 10% (100000 kbps)
      (total drops) 8504
      bandwidth 5% (50000 kbps)
      (total drops) 4215209812
      bandwidth 25% (250000 kbps)

#show inter TenGigabitEthernet1/0/2
TenGigabitEthernet1/0/2 is up, line protocol is up (connected)
  Hardware is Ten Gigabit Ethernet, address is X
  MTU 1500 bytes, BW 1000000 Kbit/sec, DLY 10 usec,
     reliability 255/255, txload 1/255, rxload 1/255
  Encapsulation ARPA, loopback not set
  Keepalive not set
  Full-duplex, 1000Mb/s, link type is auto, media type is 1000BaseSX SFP
  input flow-control is on, output flow-control is unsupported
  ARP type: ARPA, ARP Timeout 04:00:00
  Last input 00:00:00, output 00:00:00, output hang never
  Last clearing of "show interface" counters never
  Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 4215209812
  Queueing strategy: Class-based queueing
  Output queue: 0/40 (size/max)
  30 second input rate 7422000 bits/sec, 1187 packets/sec
  30 second output rate 5440000 bits/sec, 1210 packets/sec
     13984292237 packets input, 16790176837929 bytes, 0 no buffer
     Received 33701231 broadcasts (21282386 multicasts)
     0 runts, 0 giants, 0 throttles
     0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored
     0 watchdog, 21282386 multicast, 0 pause input
     0 input packets with dribble condition detected
     9274003733 packets output, 4347665568329 bytes, 0 underruns
     0 output errors, 0 collisions, 2 interface resets
     245674 unknown protocol drops
     0 babbles, 0 late collision, 0 deferred
     0 lost carrier, 0 no carrier, 0 pause output
     0 output buffer failures, 0 output buffers swapped out
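To put the rates in this output into perspective, here is a small Python sketch that turns the 30-second rates and the BW value into a utilization percentage and a value on the x/255 load scale. The constants are copied from the output above; the function names are illustrative, and how IOS rounds the displayed txload is not modeled here.

```python
BW_KBPS = 1_000_000          # "BW 1000000 Kbit/sec"
INPUT_RATE_BPS = 7_422_000   # "30 second input rate 7422000 bits/sec"
OUTPUT_RATE_BPS = 5_440_000  # "30 second output rate 5440000 bits/sec"


def utilization_percent(rate_bps: int, bw_kbps: int) -> float:
    """Average utilization as a percentage of the interface bandwidth."""
    return rate_bps / (bw_kbps * 1000) * 100


def load_over_255(rate_bps: int, bw_kbps: int) -> float:
    """The same ratio expressed on the x/255 scale used by txload/rxload."""
    return rate_bps / (bw_kbps * 1000) * 255


out_pct = utilization_percent(OUTPUT_RATE_BPS, BW_KBPS)
in_pct = utilization_percent(INPUT_RATE_BPS, BW_KBPS)
out_load = load_over_255(OUTPUT_RATE_BPS, BW_KBPS)

print(f"output: {out_pct:.3f}% (about {out_load:.2f}/255)")
print(f"input:  {in_pct:.3f}%")
```

The averaged output rate is well under 1% of the 1 Gb/s link, so the drop counter cannot be explained by sustained load on this interface.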

06-28-2019 06:50 AM

As you wondered in your OP, it could be due to bursty traffic combined with the Catalyst 9500's architecture, both physical and logical. Catalyst 3K series switches have often not had much buffer RAM, and their default QoS configuration often doesn't work well for ports dealing with microbursts. (If your initial configuration change somewhat reduced the drop counts, and Georg's recommendation also reduces them, you'll have some confirmation that the issue lies in Cisco's architecture.)

06-28-2019 06:42 AM

Surprisingly often, that's not true.

Utilization is generally an average over several minutes, while microbursts can cause drops on a millisecond timescale.
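A rough Python sketch of that effect follows. It assumes one burst arriving at 10 Gb/s toward a 1 Gb/s egress port within a one-second window that is otherwise idle, and a hypothetical 500,000-byte queue; none of these figures are measured values from the switch in this thread.

```python
def burst_outcome(ingress_bps: int, egress_bps: int, burst_ms: int,
                  buffer_bytes: int, window_ms: int = 1000):
    """Return (dropped_bytes, average_utilization_percent) for one burst.

    Simplified model: a single burst per window, tail drop once the
    queue is full, and the egress port drains at line rate.
    """
    arrived = ingress_bps * burst_ms // 8000            # bytes offered in the burst
    drained = egress_bps * burst_ms // 8000             # bytes sent while it arrives
    backlog = max(0, arrived - drained)                 # bytes that must be queued
    dropped = max(0, backlog - buffer_bytes)            # what the queue cannot hold
    sent = arrived - dropped                            # bytes eventually transmitted
    capacity = egress_bps * window_ms // 8000           # bytes the link could carry
    return dropped, 100 * sent / capacity


# Hypothetical example: 2 ms burst at 10 Gb/s into a 1 Gb/s port.
dropped, utilization_pct = burst_outcome(
    ingress_bps=10_000_000_000,
    egress_bps=1_000_000_000,
    burst_ms=2,
    buffer_bytes=500_000,   # hypothetical queue depth
)

print(f"dropped: {dropped} bytes, average utilization: {utilization_pct:.2f}%")
```

In this example the port drops about 1.75 MB while the one-second average stays below 1%, which is why a low utilization figure does not rule out output drops.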
