FI FC ports saturated?

09-19-2019 05:58 AM - edited 10-03-2019 07:04 AM

We have Fabric A and Fabric B.

Each FI has 2 x 8 Gbps FC uplinks; these are NOT connected via port-channel.

We are concerned that these links may be becoming saturated.

How can I verify this from UCS Manager? Is it doable, or do I need to look at the SAN switching side of things?

Thanks.

09-19-2019 07:42 AM

What kind of problems are you seeing that make you think the links are saturated?

You can run 'show interface fcX/Y' and check the statistics accordingly. You can also check whether your vHBAs are evenly distributed across all the links. If you were using a port-channel, you would not need to worry about that.

Best practice is a port-channel to an upstream MDS, which automatically distributes workloads evenly across all links in the port-channel.
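
If you capture the 'show interface fcX/Y' text, a small script can pull the rate figures out of it. This is only a sketch: the sample text below is hypothetical, modeled on the rate lines NX-OS typically prints, and the exact wording and averaging interval may differ on your platform and release. `parse_rates` is a made-up helper name.

```python
import re

# Hypothetical sample modeled on typical NX-OS `show interface fc` output;
# the exact wording and averaging interval vary by platform and release.
SAMPLE = """\
fc1/29 is up
    5 minutes input rate 5120000000 bits/sec, 640000000 bytes/sec, 310000 frames/sec
    5 minutes output rate 960000000 bits/sec, 120000000 bytes/sec, 60000 frames/sec
"""

# Capture the direction and the bits/sec figure from each rate line.
RATE_RE = re.compile(r"(input|output) rate (\d+) bits/sec")


def parse_rates(text):
    """Return {'input': bits_per_sec, 'output': bits_per_sec} found in text."""
    return {direction: int(bits) for direction, bits in RATE_RE.findall(text)}


rates = parse_rates(SAMPLE)
for direction, bps in rates.items():
    print(f"{direction}: {bps / 1e9:.2f} Gbps")
```

Comparing these figures across the uplinks on each FI shows whether one link is carrying far more than the others.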

09-19-2019 08:35 AM - edited 09-19-2019 08:36 AM

Brocade says that port-channels can cause performance issues with MDS. Is this not the case anymore?

09-19-2019 08:40 AM

How would I check this?

09-19-2019 01:41 PM

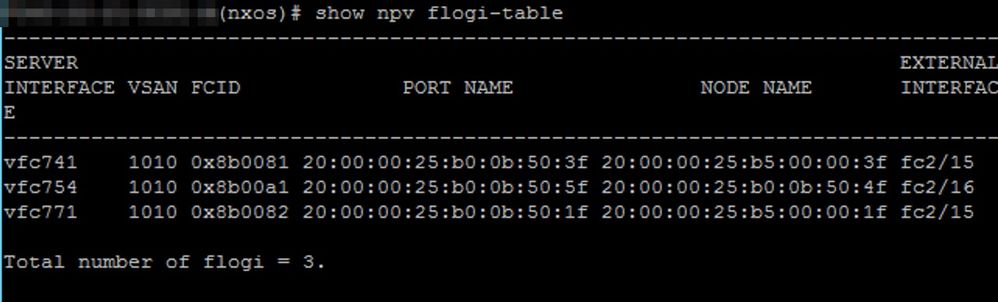

Run the command shown in the screenshot above from nxos on each fabric interconnect. The column on the right shows which vHBA is pinned to which external-facing interface. I do not recall any performance issues using SAN port-channels. We recommend them because, in the event of a physical link failure, there are no link-down events and the underlying vHBAs do not go down; all vHBAs stay pinned to the logical SAN port-channel.

10-01-2019 02:10 PM

If you are using Brocade fabric switches, port-channels are not officially supported by Cisco.

You can also check your Chassis/IO Module/Fabric Ports to make sure all are "Up" and not stuck in Discovery or initializing.

10-03-2019 06:49 AM - edited 10-03-2019 07:03 AM

Looks balanced....

    ucs-6248-1-A(nxos)# show npv flogi-table
    --------------------------------------------------------------------------------
    SERVER                                                                  EXTERNAL
    INTERFACE VSAN FCID            PORT NAME               NODE NAME        INTERFACE
    --------------------------------------------------------------------------------
    vfc938    200  0x3c1c1b 20:ee:01:03:00:00:0a:ff 20:ee:01:02:00:00:00:8f fc1/29
    vfc965    200  0x3c1d11 20:ee:01:03:00:00:0a:00 20:ee:01:02:00:00:00:7f fc1/30
    vfc997    200  0x3cdc23 20:ee:01:03:00:00:0a:01 20:ee:01:02:00:00:00:4f fc1/31
    vfc1005   200  0x3cdd1a 20:ee:01:03:00:00:0a:02 20:ee:01:02:00:00:00:5f fc1/32
    vfc1013   200  0x3c1c04 20:ee:01:03:00:00:0a:03 20:ee:01:02:00:00:00:2f fc1/29
    vfc1021   200  0x3cdc1d 20:ee:01:03:00:00:0a:04 20:ee:01:02:00:00:00:3f fc1/31
    vfc1031   200  0x3cdd05 20:ee:01:03:00:00:0a:05 20:ee:01:02:00:00:00:0f fc1/32
    vfc1054   200  0x3c1d2d 20:ee:01:03:00:00:0a:08 20:ee:01:02:00:00:00:df fc1/30
    vfc1062   200  0x3cdc27 20:ee:01:03:00:00:0a:09 20:ee:01:02:00:00:00:ef fc1/31
    vfc1090   200  0x3c1c14 20:ee:01:03:00:00:0a:0c 20:ee:01:02:00:00:00:af fc1/29
    vfc1094   200  0x3cdd0d 20:ee:01:03:00:00:0a:0b 20:ee:01:02:00:00:00:cf fc1/32
    vfc1112   200  0x3c1d0d 20:ee:01:03:00:00:0a:0d 20:ee:01:02:00:00:00:8e fc1/30
    vfc1120   200  0x3c1c11 20:ee:01:03:00:00:0a:0e 20:ee:01:02:00:00:00:9e fc1/29
    vfc1128   200  0x3cdc10 20:ee:01:03:00:00:0a:0f 20:ee:01:02:00:00:00:6e fc1/31
    vfc1136   200  0x3cdd1e 20:ee:01:03:00:00:0a:10 20:ee:01:02:00:00:00:7e fc1/32
    vfc1172   200  0x3c1d14 20:ee:01:03:00:00:0a:12 20:ee:01:02:00:00:00:5e fc1/30
    vfc1192   200  0x3c1c13 20:ee:01:03:00:00:0a:13 20:ee:01:02:00:00:00:2e fc1/29
    vfc1200   200  0x3c1d0f 20:ee:01:03:00:00:0a:14 20:ee:01:02:00:00:00:3e fc1/30
    vfc1208   200  0x3cdc12 20:ee:01:03:00:00:0a:16 20:ee:01:02:00:00:00:1e fc1/31
    vfc1216   200  0x3cdd1c 20:ee:01:03:00:00:0a:15 20:ee:01:02:00:00:00:0e fc1/32

    Total number of flogi = 20.
    ucs-6248-1-A(nxos)#
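
The pinning check can be scripted rather than counted by eye. The sketch below tallies logins per external interface from the rows posted above; `uplink_counts` is a hypothetical helper, and it assumes each data row has six whitespace-separated fields with the uplink last, as in this output.

```python
from collections import Counter

# Data rows copied from the `show npv flogi-table` output in this post.
FLOGI_ROWS = """\
vfc938 200 0x3c1c1b 20:ee:01:03:00:00:0a:ff 20:ee:01:02:00:00:00:8f fc1/29
vfc965 200 0x3c1d11 20:ee:01:03:00:00:0a:00 20:ee:01:02:00:00:00:7f fc1/30
vfc997 200 0x3cdc23 20:ee:01:03:00:00:0a:01 20:ee:01:02:00:00:00:4f fc1/31
vfc1005 200 0x3cdd1a 20:ee:01:03:00:00:0a:02 20:ee:01:02:00:00:00:5f fc1/32
vfc1013 200 0x3c1c04 20:ee:01:03:00:00:0a:03 20:ee:01:02:00:00:00:2f fc1/29
vfc1021 200 0x3cdc1d 20:ee:01:03:00:00:0a:04 20:ee:01:02:00:00:00:3f fc1/31
vfc1031 200 0x3cdd05 20:ee:01:03:00:00:0a:05 20:ee:01:02:00:00:00:0f fc1/32
vfc1054 200 0x3c1d2d 20:ee:01:03:00:00:0a:08 20:ee:01:02:00:00:00:df fc1/30
vfc1062 200 0x3cdc27 20:ee:01:03:00:00:0a:09 20:ee:01:02:00:00:00:ef fc1/31
vfc1090 200 0x3c1c14 20:ee:01:03:00:00:0a:0c 20:ee:01:02:00:00:00:af fc1/29
vfc1094 200 0x3cdd0d 20:ee:01:03:00:00:0a:0b 20:ee:01:02:00:00:00:cf fc1/32
vfc1112 200 0x3c1d0d 20:ee:01:03:00:00:0a:0d 20:ee:01:02:00:00:00:8e fc1/30
vfc1120 200 0x3c1c11 20:ee:01:03:00:00:0a:0e 20:ee:01:02:00:00:00:9e fc1/29
vfc1128 200 0x3cdc10 20:ee:01:03:00:00:0a:0f 20:ee:01:02:00:00:00:6e fc1/31
vfc1136 200 0x3cdd1e 20:ee:01:03:00:00:0a:10 20:ee:01:02:00:00:00:7e fc1/32
vfc1172 200 0x3c1d14 20:ee:01:03:00:00:0a:12 20:ee:01:02:00:00:00:5e fc1/30
vfc1192 200 0x3c1c13 20:ee:01:03:00:00:0a:13 20:ee:01:02:00:00:00:2e fc1/29
vfc1200 200 0x3c1d0f 20:ee:01:03:00:00:0a:14 20:ee:01:02:00:00:00:3e fc1/30
vfc1208 200 0x3cdc12 20:ee:01:03:00:00:0a:16 20:ee:01:02:00:00:00:1e fc1/31
vfc1216 200 0x3cdd1c 20:ee:01:03:00:00:0a:15 20:ee:01:02:00:00:00:0e fc1/32
"""


def uplink_counts(flogi_output):
    """Count server logins (vfc rows) per external FC uplink."""
    counts = Counter()
    for line in flogi_output.splitlines():
        fields = line.split()
        # Data rows start with a vfc interface; the uplink is the last field.
        if len(fields) == 6 and fields[0].startswith("vfc"):
            counts[fields[-1]] += 1
    return counts


counts = uplink_counts(FLOGI_ROWS)
for uplink in sorted(counts):
    print(uplink, counts[uplink])
```

For this table, each of the four uplinks carries five logins. Keep in mind that an even login count only shows that pinning is balanced; it says nothing about how much traffic each vHBA actually pushes.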

10-02-2019 06:06 AM

Ran into this issue with 8 Gbps uplinks and Brocade switches. If the links are saturated, you will not see failures or errors. FC is a lossless protocol, so there aren't drops, just delays. We had this issue during backups; fun times when your VMs are taking snapshots at the same time. I didn't really have a good handle on it until I added the domain to SolarWinds and was able to view bandwidth. You should also be able to see bandwidth usage from the Brocade side; if you're hitting about 6.7 Gbps on these links, then you're saturated. The only things you can do are add uplinks and reboot servers so they get pinned to the new links, or move workloads around.
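
To put a number on "saturated", you can turn two byte-counter readings into a utilization figure. A rough sketch follows, under stated assumptions: 8G FC signals at 8.5 GBaud with 8b/10b encoding, so the ceiling is about 6.8 Gbps of data per direction before framing overhead, which lines up with the roughly 6.7 Gbps mentioned above. The counter values and the `utilization` helper are hypothetical.

```python
# Assumption: 8G FC runs at 8.5 GBaud with 8b/10b encoding, so at most
# 8.5e9 * 8 / 10 = 6.8e9 data bits/sec per direction, before framing overhead.
LINE_RATE_BAUD = 8.5e9
USABLE_BPS = LINE_RATE_BAUD * 8 / 10


def utilization(bytes_start, bytes_end, seconds, usable_bps=USABLE_BPS):
    """Fraction of the usable link rate consumed between two counter samples."""
    bits_per_sec = (bytes_end - bytes_start) * 8 / seconds
    return bits_per_sec / usable_bps


# Hypothetical transmit byte counters sampled 60 seconds apart on one uplink.
u = utilization(1_000_000_000_000, 1_050_000_000_000, 60)
print(f"average rate is {u:.0%} of the usable 8G FC rate")
```

A link that sits near this ceiling for the length of a backup window is a good candidate for the delays described above, even though no errors are logged.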

10-04-2019 05:02 AM

You can reset the vHBA from the equipment view without having to reboot the OS; the VSAN/vHBA pinning evaluation process will then potentially re-pin that vHBA to a different uplink that has fewer vHBAs pinned to it.

Obviously, this assumes you have fully functional dual paths, and you may want to disable the path in question from the OS first.

I have had to work with some large customers' environments doing Oracle backups, and in lieu of having SAN port-channels that would have evenly distributed the workload bandwidth, we ended up having to create multiple VSAN/uplink pairs in order to keep the heavy talkers separated, as well as having enough uplinks for some redundancy.

Ideally, you would want to have MDS and SAN port-channels; you would then not only have better automatic vHBA traffic distribution, but even at a vHBA level you would get hashing distribution based on SRC/DST/OXID.
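
To illustrate why SRC/DST/OXID hashing helps, here is a toy model: with static pinning, every frame from a vHBA uses one uplink, whereas hashing per exchange lets a single initiator/target pair spread across all member links. This is purely illustrative; `zlib.crc32` is a stand-in and not the hash the hardware uses, and the target FCID, link names, and `pick_link` helper are hypothetical.

```python
import zlib
from collections import Counter

# Hypothetical port-channel members.
LINKS = ["fc1/29", "fc1/30", "fc1/31", "fc1/32"]


def pick_link(src_fcid, dst_fcid, oxid, links=LINKS):
    """Choose a member link from source FCID, destination FCID, and exchange ID.

    crc32 is only a stand-in for whatever hash the switch hardware applies.
    """
    key = f"{src_fcid:06x}{dst_fcid:06x}{oxid:04x}".encode()
    return links[zlib.crc32(key) % len(links)]


# One initiator talking to one (hypothetical) target across 1000 exchanges.
spread = Counter(pick_link(0x3C1C1B, 0x3C0100, oxid) for oxid in range(1000))
for link in LINKS:
    print(link, spread[link])
```

Because the exchange ID changes per I/O, even a single heavy talker is not tied to one physical link, which is the behavior static pinning cannot provide.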

Kirk...

10-04-2019 07:12 AM

10-04-2019 09:41 AM - edited 10-04-2019 09:42 AM

Brocade port trunking is (from what I can find via HPE) specific to ISLs between fabric switches.

Cisco MDS allows port-channels that correspond to port-channels on the Fabric Interconnect side of UCS.

As Kirk said, having additional VSANs can allow you to isolate heavy SAN consumers to specific ports on the FI pair, while allowing normal consumers to use the other available FC ports.

10-04-2019 11:13 AM - edited 10-04-2019 11:24 AM

Brocade and Cisco devices do not use the same SAN port-channel configuration and are not compatible, which is why I referenced an MDS (N5K/N6K will also do FC SAN port-channels ;)

Kirk...
