Pondering Automation: Less is More Part 1

Howdy out there in Automation land!!! Today... I have the start of a long set of two blogs for my readers. We are going to do something exciting and really useful... but purely about system setup and design... no real "automation" today. But first... we have to have our movie poster. Since the goal of these is 30 minutes or less... I think this will do:

So what are we going to do today? We are going to install a 4 node (or VM) Kubernetes cluster and then install CloudCenter Suite (CCS) and Action Orchestrator (AO) on it! (The CCS install will be in Part 2 of this 2 part series.) The main goal here is to let you guys create smaller and more agile clusters that you can use to run development, stage, or pre-production style environments, or for when you do not feel you need all the compute power that the self-driven CCS cluster install gives you. For this... single line commands will be in BOLD. Anything multi-line will be broken out separately.

A reminder... when you install CCS and have it do the Kubernetes setup, it will make 3x masters (in HA), 1x internal load balancer, and 5-8+ workers, so you are looking at 12-14 VMs normally with lots of compute space and need. While this makes a ton of sense for production deployments, we want something smaller for non-production... and that is what I'm going to help you with today! Please note these steps are accurate as of this blog post... Kubernetes/Docker/Linux change often so I might have to update or just point you to other places. You can always find the Kubernetes documentation for setup here.

First, like any good recipe, we need the ingredients, so what you will need is...

- 4x Virtual Machines (on any cloud)

- These VMs should have... 4x-8x vCPU, 8-16GB RAM, a base 50GB hard drive and a 100GB secondary storage hard drive. 1 IP address per VM is fine. It is easier to have IPs that are NATed to the internet versus behind a proxy, but we can address both scenarios. I highly suggest 8x vCPU and 16GB RAM per node, but it will work with the lower power if you must.

- These VMs should have CentOS 7.5 or later installed. They can be a pretty basic install for a compute node. If you need guidance in adding an additional hard drive, follow the post here. You could do this on Windows VMs or other Linux VMs, but this blog will not take you through that. If you need Windows Kubernetes guidance, look here. You could also look into pre-constructed packages, but again, this part of the blog is about installing Kubernetes from scratch.

- You will want to configure each /etc/hosts file with the IP addresses and hostnames of the 4x nodes.

- These VMs should be updated to the latest codebase via

yum -y update

You will need root or sudo access... prefer root, it's just easier! I would suggest you take the above "setup" and copy/clone it. Do it once and make it a template so you can spawn these nodes as you need them. (you might even want to do a template below right before kubeadm init)
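Since the /etc/hosts entries have to land on all 4x nodes (and again every time you clone the template), here is a minimal sketch of a helper that only appends an entry when the hostname is not already there. The function name add_host_entry and the example IPs/hostnames are made up for illustration, so swap in your own.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if the
# hostname is not already listed on a non-comment line.
# Usage: add_host_entry <hosts-file> <ip> <hostname>
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 when the hostname already appears as a field after the IP.
  if ! awk -v n="$name" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (assumed addresses and names, run as root on each node):
#   add_host_entry /etc/hosts 10.0.0.11 k8s-master
#   add_host_entry /etc/hosts 10.0.0.12 k8s-worker1
```

Running it twice with the same hostname leaves the file unchanged, which makes it safe to keep in a template or bootstrap script.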

Docker Install

- You will need to install the docker prerequisites via

yum install -y yum-utils device-mapper-persistent-data lvm2

- Add the docker-ce repo, we will install docker-ce 18.06 to be our container runtime

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

- Install Docker and enable it via

yum update && yum install docker-ce-18.06.2.ce
systemctl enable docker && systemctl start docker

- You can check the docker version via docker --version

- Setup docker to use systemd as a cgroupdriver via

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

- Then restart docker via systemctl daemon-reload and then systemctl restart docker

- If your nodes are behind a proxy, you will most likely have to follow these sub-steps. If you do not have a proxy, skip to the Kubernetes install. You can test this by doing curl https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 and see if you get back anything.

- Proxy Sub Steps - you can also find this guidance here.

- Create a directory for docker via mkdir -p /etc/systemd/system/docker.service.d

- Add PROXY configuration to your /etc/profile, something like this:

HTTPS_PROXY="http://myproxy.cisco.com:80"
HTTP_PROXY="http://myproxy.cisco.com:80"
NO_PROXY="127.0.0.1,localhost,<List of 4x VM IPs>"
export HTTPS_PROXY HTTP_PROXY NO_PROXY

- Inside of your docker.service.d folder, create two files, http-proxy.conf and https-proxy.conf

- Inside of them, you must add the proxy configuration like this (for HTTPS). Do the same for HTTP

[Service]
Environment="HTTPS_PROXY=http://myproxy.cisco.com:80" "NO_PROXY=127.0.0.1,localhost,<YOUR SYSTEMS IP ADDRESSES>"

- After making these changes, restart and flush the daemon via systemctl daemon-reload and systemctl restart docker.

- You can then verify the changes via systemctl show --property=Environment docker

- You can also try the curl again at the top and it should work.
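If you are building the two proxy drop-in files on several nodes, a small generator can save some typing. This is only a sketch: render_proxy_dropin is a hypothetical helper name, and the proxy URL and NO_PROXY list in the example are placeholders for your own values. It prints the file content to stdout so you can look at it before redirecting it into docker.service.d.

```shell
# Hypothetical helper: print a systemd drop-in that sets one proxy variable
# plus NO_PROXY for the docker service.
# Usage: render_proxy_dropin <HTTP_PROXY|HTTPS_PROXY> <proxy-url> <no-proxy-list>
render_proxy_dropin() {
  local var="$1" url="$2" no_proxy="$3"
  printf '[Service]\n'
  printf 'Environment="%s=%s" "NO_PROXY=%s"\n' "$var" "$url" "$no_proxy"
}

# Example (placeholder proxy and IP list, run as root):
#   d=/etc/systemd/system/docker.service.d
#   render_proxy_dropin HTTP_PROXY  http://myproxy.cisco.com:80 "127.0.0.1,localhost,10.0.0.11" > "$d/http-proxy.conf"
#   render_proxy_dropin HTTPS_PROXY http://myproxy.cisco.com:80 "127.0.0.1,localhost,10.0.0.11" > "$d/https-proxy.conf"
#   systemctl daemon-reload && systemctl restart docker
```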

Kubernetes Install

- Add Kubernetes to your yum repo via

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kube*
EOF

- Put SELinux into permissive mode for this session via setenforce 0. Then make it permanent via sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

- Find the Swap Space via free -h and blkid. You will see something like this:

/dev/mapper/centos-root: UUID="390ed2f2-7111-465d-9bfc-0b2d5d9285ac" TYPE="xfs"
/dev/sda2: UUID="isHFES-V66X-ZSE7-R8T1-fh0b-HztV-vFo2US" TYPE="LVM2_member"
/dev/sdb1: UUID="af19aa1f-759d-4612-9d83-ebf96fd83324" TYPE="ext4"
/dev/sda1: UUID="dcdd2d1e-41b7-48dd-920b-d784b3f60ba8" TYPE="xfs"
/dev/mapper/centos-swap: UUID="41322535-274e-498a-9b76-7b6a6dc5ca1e" TYPE="swap"
/dev/mapper/centos-home: UUID="707eae72-2fd4-4112-acc0-170b1783661e" TYPE="xfs"

- You will need to disable the swap space via below. You can find an article on it here.

swapoff <swap area>
# most likely /dev/mapper/centos-swap, so most likely: swapoff /dev/mapper/centos-swap
swapoff -a
# Permanently disable via
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

- Install Kubeadm, Kubectl, Kubelet via yum install -y kubelet kubeadm kubectl cri-tools --disableexcludes=kubernetes

- Kubeadm is the cluster initialization module, Kubectl is the CLI tool, and Kubelet is the agent/service

- Enable and set Kubernetes to auto-start via systemctl enable kubelet && systemctl start kubelet

- We need to set the bridge-nf-call-iptables sysctls so that CentOS passes bridged traffic through iptables for Kubernetes, via

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

- You have a choice to disable and stop the firewall or figure out *ALL* the ports and punch a hole for each one... I highly recommend disable/stop via systemctl stop firewalld and then systemctl disable firewalld.

- If you really want to punch holes in your firewall, below is a start... but you might come across other ports you need. It is much easier to just disable/stop the firewall as described above... but if you want to keep the firewall up, start with this:

firewall-cmd --zone=public --add-port=6443/tcp --permanent
firewall-cmd --zone=public --add-port=10250/tcp --permanent
firewall-cmd --zone=public --add-port=2379/tcp --permanent
firewall-cmd --zone=public --add-port=2380/tcp --permanent
firewall-cmd --zone=public --add-port=8080/tcp --permanent
firewall-cmd --zone=public --add-port=80/tcp --permanent
firewall-cmd --zone=public --add-port=443/tcp --permanent
firewall-cmd --zone=public --add-port=10255/tcp --permanent
firewall-cmd --reload

- This is not a bad place to do a template/snapshot. You could deploy clones from this step, but not after.

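- Start your cluster via kubeadm init --apiserver-advertise-address=<MASTER-IP> --pod-network-cidr=192.168.0.0/16

- You need to fill out the master IP address (the box you are running this on) and then set the pod CIDR since we will be using Calico for our network. 192.168.0.0/16 is the default pool in the Calico manifest, so if you pick a different pod CIDR here you have to change it in calico.yaml as well.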

- You will see a lot of output... you want to grab parts after it says "Your Kubernetes control-plane...". We need the mkdir command and then kubeadm join command. The kubeadm join will be used on your worker nodes.

- Using the mkdir command, we run this to give us access to the admin config:

export KUBECONFIG=/etc/kubernetes/admin.conf
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

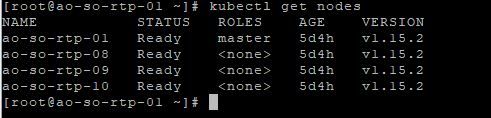
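A quick aside on the pod CIDR passed to kubeadm init: a value written with host bits set, such as 192.168.1.0/16, names the same network as 192.168.0.0/16, because the /16 mask only keeps the first two octets. The sketch below works that arithmetic out in plain shell so you can check a CIDR before handing it to kubeadm or comparing it with the Calico manifest. The function name cidr_network is made up for this example, and it only handles IPv4.

```shell
# Hypothetical helper: print the network form of an IPv4 CIDR,
# i.e. the address with all host bits cleared.
# Usage: cidr_network <a.b.c.d/prefix>
cidr_network() {
  local ip="${1%/*}" prefix="${1#*/}"
  local a b c d addr mask net
  # Split the dotted quad into its four octets.
  IFS=. read -r a b c d <<EOF
$ip
EOF
  addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Build a 32-bit mask with the top <prefix> bits set.
  if [ "$prefix" -eq 0 ]; then
    mask=0
  else
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  fi
  net=$(( addr & mask ))
  printf '%d.%d.%d.%d/%d\n' \
    $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
    $(( (net >> 8) & 255 )) $(( net & 255 )) "$prefix"
}

# Example:
#   cidr_network 192.168.1.0/16
```

If the printed network overlaps with the range your VMs live in, that is a sign to pick a different pod CIDR (and to update it in the Calico manifest too).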

- Now you can run kubectl get nodes and see your single node. (It should be NotReady)

- I *highly* suggest using the kubectl auto-complete via kubectl completion bash and then kubectl completion bash >/etc/bash_completion.d/kubectl . This makes using kubectl a lot easier!!!!

- Now we have to install Calico, grab it via curl https://docs.projectcalico.org/v3.7/manifests/calico.yaml -O

- And then install it via kubectl apply -f calico.yaml

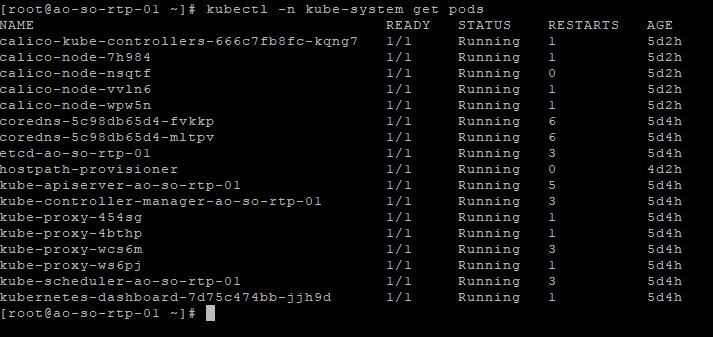

- You should see coredns pods coming up and calico nodes come up when you do kubectl -n kube-system get pods
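The workers need the same host prep as the master, and swap is the item that most often gets missed on a cloned VM. Here is a minimal sketch of a check you could run on a node before joining it. The function name swap_is_off is hypothetical; it assumes the usual /proc/swaps layout of one header line followed by one line per active swap device.

```shell
# Hypothetical helper: succeed (exit 0) only when no swap device is active.
# Usage: swap_is_off [swaps-file]   (defaults to /proc/swaps)
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  local active
  # Skip the header line; every remaining non-empty line is an active swap area.
  active=$(awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file")
  [ "$active" -eq 0 ]
}

# Example:
#   if swap_is_off; then echo "swap is off, good to go"; else echo "swap still on, run swapoff -a"; fi
```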

Setup Workers And Install Kubernetes Dashboard

- Now we need to stand up our workers and then an optional part, the Kubernetes dashboard

- You should set up all the workers with the same base as the above. Then do the Docker install the same way. Then do the Kubernetes Install, stop after the firewall step (right before kubeadm init), and proceed with the join step below.

- You should have copied the join command from the above (when you init the master) and now you should run this on each worker...

kubeadm join 10.122.138.195:6443 --token xv7uhj.9tzey70dka4v9gh7 \
    --discovery-token-ca-cert-hash sha256:055f6fc799bbfff68ba93f84ea2c07389bfceb2226235df0e2868091f126f412

NOTE: Your IP, TOKEN, HASH will all be different than the above, it is just an example.

- You should see some more kube init text but it will be smaller. On your master, you can run kubectl get nodes to see your workers being added. Once you have run the join on each worker, proceed to the dashboard steps below.

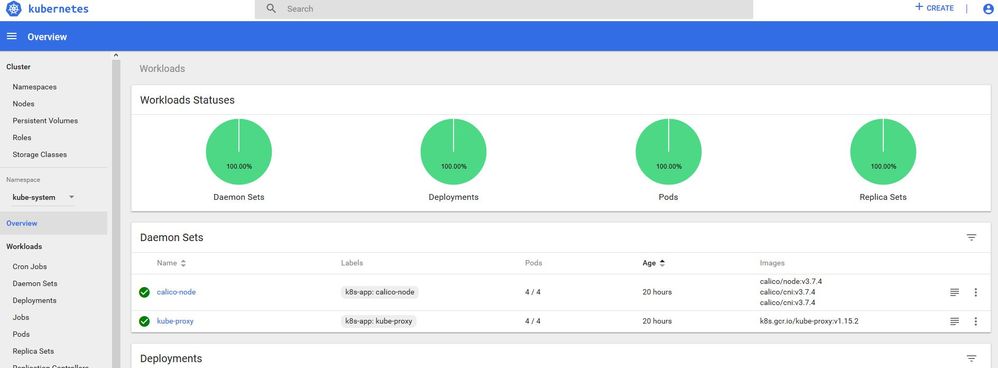
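Workers take a little while to flip from NotReady to Ready after the join. Rather than eyeballing kubectl get nodes, something like the sketch below can count the stragglers for you. The function name count_not_ready is made up; it assumes the default kubectl get nodes column layout where STATUS is the second column, and it reads that output from stdin.

```shell
# Hypothetical helper: count nodes whose STATUS column does not start with "Ready".
# Reads "kubectl get nodes --no-headers" output on stdin.
count_not_ready() {
  awk 'NF > 0 && $2 !~ /^Ready/ { n++ } END { print n + 0 }'
}

# Example (run on the master):
#   kubectl get nodes --no-headers | count_not_ready
#
# Simple wait loop, polling every 10 seconds until everything is Ready:
#   while [ "$(kubectl get nodes --no-headers | count_not_ready)" -gt 0 ]; do sleep 10; done
```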

- Everything from here on is purely OPTIONAL. It installs the Kubernetes dashboard, which is a web based UI for Kubernetes. You do not have to have this for CCS, so if you do not want this... you are done! (You can move on to the next blog, Part 2.) If you want the dashboard, continue on...

- Install Kubernetes Dashboard via kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

- We need to edit the dashboard service to expose it to the outside world, so let's do that via kubectl -n kube-system edit service kubernetes-dashboard

- This will open vi and you need to go find the part that says type: ClusterIP and change it to say type: NodePort

- Save it via hitting ESC, then typing :wq!

- Now we need to find the port! Run kubectl -n kube-system get service kubernetes-dashboard. You will see a ports list and something like 443:XXXXX/TCP. That means your dashboard is running on https://<IP-OF-MASTER>:<XXXXX>

- But first, we have to configure a service user for us to access the dashboard... Kubernetes is very strict on RBAC, etc

- I have attached a yaml file to the blog that you can use, and it is below... but you need to apply it. So if you use the file just do kubectl create -f clusterRoleBindingForDashboard.yaml otherwise here is the yaml:

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

- Please note this setup is meant to be a little "open"... remember, dev/stage environment, not PRODUCTION!

- Lastly we need to bind the cluster role to the account so run this command:

kubectl create clusterrolebinding varMyClusterRoleBinding \
    --clusterrole=cluster-admin \
    --serviceaccount=kube-system:default

- Then we need to get the token or password for the dashboard, we can do that via kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep kubernetes-dashboard | awk '{print $1}')

- Just copy out the "token" at the very end of the output of the above command

- Now we can go to our dashboard, which remember, is like https://<ip-of-master>:<xxxxx>, at the prompt paste in your token and sign in. I suggest saving that login... :) You now have a fully working Kubernetes cluster with dashboard! Congrats to you!!!!
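If you would rather not scroll through the describe output hunting for the token, a tiny filter can pull it out for you. This is a sketch only: extract_token is a hypothetical helper name, and it assumes the describe output carries a line that starts with "token:" followed by the value, which is where the token sat at the end of the output above.

```shell
# Hypothetical helper: print the value of the first "token:" line
# from "kubectl describe secret" output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}

# Example (run on the master):
#   kubectl -n kube-system describe secret \
#     $(kubectl -n kube-system get secret | grep kubernetes-dashboard | awk '{print $1}') \
#     | extract_token
```

Treat the printed token like a password, since with the binding above it carries cluster-admin rights.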

Now you guys did not think I would leave this without a VOD right? I'm going to guide you through it!!! So ONTO THE VIDEO:

This VOD covers docker+master install+calico:

Play recording (20 min 23 sec)

Recording password: kJE6Wbbc

This VOD covers worker install:

Play recording (7 min 28 sec)

Recording password: uBpejFT3

Standard End-O-Blog Disclaimer:

Thanks as always to all my wonderful readers and those who continue to stick with and use CPO and now those learning AO! I have always wanted to find good questions, scenarios, stories, etc... if you have a question, please ask, if you want to see more, please ask... if you have topic ideas that you want me to blog on, Please ask! I am happy to cater to the readers and make this the best blog you will find :)

AUTOMATION BLOG DISCLAIMER: As always, this is a blog and my (Shaun Roberts) thoughts on CPO, AO, CCS, orchestration, and automation, my thoughts on best practices, and my experiences with the products and customers. The above views are in no way representative of Cisco or any of its partners, etc. None of these views, etc are supported and this is not a place to find standard product support. If you need standard product support please do so via the current call in numbers on Cisco.com or email tac@cisco.com

Thanks and Happy Automating!!!

--Shaun Roberts
