- Cisco ACI Ask the Experts FAQ: Distributed Networking

on 09-27-2021 03:07 AM - edited on 02-15-2023 08:01 PM by Vivien Chia

- What is Cisco ACI Anywhere?

- What are ACI connectivity options for managing Primary On-Prem DCs?

- What are ACI options for extending your Data center to secondary remote locations (physical)?

- How does ACI provide a centralized network policy framework for workloads deployed in the cloud?

- How does ACI achieve visibility into containerized workloads running on-prem and in the cloud?

- How can one have common policies across Cisco SD-Access and Cisco ACI essentially to simplify policy management?

- <Useful Links>

Here are some commonly asked questions and answers about Distributed Networking deployments for Cisco Application Centric Infrastructure (ACI). Subscribe (how-to) to this post to stay up to date with the latest Q&A and recommended Ask the Experts (ATXs) sessions to attend.

What is Cisco ACI Anywhere?

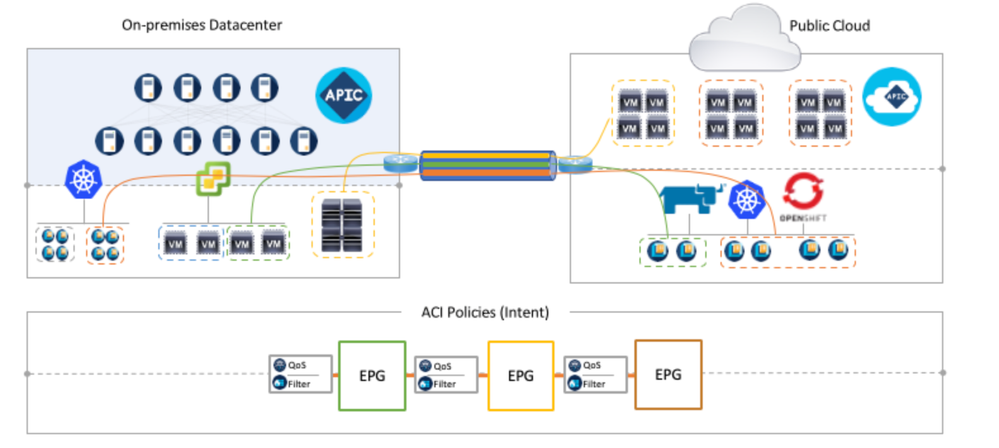

A. The Cisco ACI Anywhere solution delivers hybrid multicloud capability by providing a holistic, policy-driven abstraction on top of cloud-native APIs, regardless of the type of workload (physical, virtual, or containerized) and whether it runs on-premises or in a public cloud.

In the on-premises environment, customers benefit from an ACI policy-driven infrastructure with the flexibility of the Multi-Pod, Remote Leaf, and Multi-Site architectures.

Cisco Cloud APIC extends this to the cloud: it allows them to automate the management of end-to-end connectivity, as well as the agentless enforcement of consistent security policies, for workloads on-premises and in public clouds through a single pane of glass.

Key components of the integrated cloud solution include:

- Multi-Site Orchestrator (hosted anywhere) for inter-site policy definition

- Cisco Cloud Application Policy Infrastructure Controller (Cloud APIC), which runs natively in public clouds to provide automated connectivity, policy translation, day-two operations, and enhanced visibility of workloads in the public cloud

- Cisco CSR 1000V instance (runs in the cloud), which terminates the IPsec VPN tunnels (the underlay that provides IP reachability) and the VXLAN overlay for control-plane and data-plane connectivity between on-premises and cloud sites

ACI has provided a Container Network Interface (CNI) plugin for Kubernetes platforms since release 3.0. ACI release 5.0 introduces Cloud CNI, which extends these capabilities so that containers deployed in the cloud and on-prem are first-class citizens in the ACI network and in policy translation.

Recommended ATXs: Use Case Overview and Planning: Distributed Networking for Cisco ACI

What are ACI connectivity options for managing Primary On-Prem DCs?

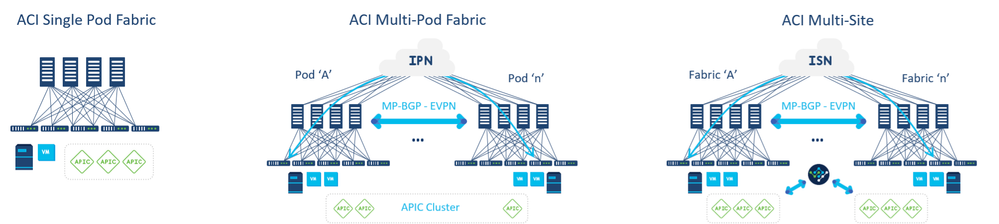

A. ACI Single Fabric: A locally connected leaf-spine network sharing a common control plane under the scope of the same APIC.

ACI Multi-Pod:

- A single APIC cluster manages multiple leaf-spine networks (Pods).

- Pods, the sub-units of a Multi-Pod fabric, are leaf-spine networks sharing a common control plane. The maximum latency supported between Pods is 50 msec RTT, which roughly translates to a geographical distance of up to 2500 miles.
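The relationship between the 50 msec RTT budget and the quoted distance can be checked with some rough propagation-delay arithmetic. This is only a sketch: the fiber velocity factor (about two thirds of the speed of light) and the 20% allowance for non-propagation delay are assumptions chosen for illustration, not Cisco figures.

```python
# Rough propagation-delay arithmetic. The constants below are assumptions
# for illustration, not values taken from Cisco documentation.
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 0.67        # assumed: light in fiber travels at ~2/3 c
KM_PER_MILE = 1.609344


def max_fiber_distance_miles(rtt_ms: float, overhead_fraction: float = 0.0) -> float:
    """Upper bound on the one-way fiber path length for a given RTT budget.

    overhead_fraction is the assumed share of the RTT consumed by switching,
    queuing, and other non-propagation delay.
    """
    propagation_rtt_s = (rtt_ms / 1000.0) * (1.0 - overhead_fraction)
    one_way_s = propagation_rtt_s / 2.0
    distance_km = one_way_s * SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR
    return distance_km / KM_PER_MILE


ideal = max_fiber_distance_miles(50)
with_overhead = max_fiber_distance_miles(50, overhead_fraction=0.2)
print(f"ideal: {ideal:.0f} miles, with 20% overhead: {with_overhead:.0f} miles")
```

Under these assumptions the ideal bound is a little over 3000 miles of fiber, and reserving part of the budget for equipment delay brings it close to the 2500-mile figure. Real fiber routes are rarely straight lines, so the usable geographic distance is typically shorter still.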

ACI Multi-Site:

- Centralized management of multiple APIC clusters and their associated Pods (Multi-Pod sites) under the single umbrella of Multi-Site Orchestrator (MSO) or Nexus Dashboard Orchestrator (NDO).

- The maximum latency supported between a Nexus Dashboard node and the APIC cluster is 500 msec RTT.

Recommended ATXs: Architecture Transformation Planning: Multi-Pod for Cisco ACI and Architecture Transformation Planning: Multi-Site for Cisco ACI

What are ACI options for extending your Data center to secondary remote locations (physical)?

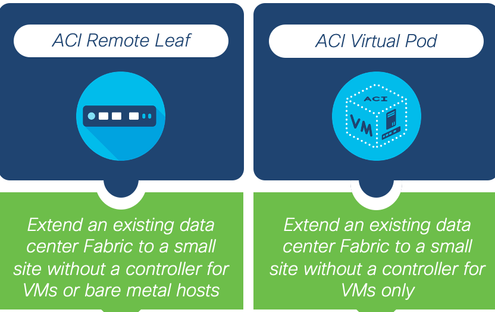

A. Remote Leaf - This lets you extend your data center to a small site that does not have a controller by placing a physical Nexus 9k leaf switch on site, to which you can connect both virtual machines and bare-metal hosts.

ACI Mini - This is a small-scale, stand-alone instance of ACI, and you may not typically hear about it in the context of extending the data center to remote sites. Suppose, however, that you previously deployed a stand-alone ACI Mini at one of your data centers. You can now connect that ACI Mini deployment to larger data centers with the ACI Multi-Site Orchestrator. It will probably not be your first choice for extending an existing data center to a remote site, but keep it in mind: some technical requirements (such as bandwidth constraints) can make it the right fit for a particular solution.

Recommended ATXs: Feature Overview: Remote Leaf for Cisco ACI

How does ACI provide a centralized network policy framework for workloads deployed in the cloud?

A. The component that does the magic (policy translation to cloud-native constructs) is Cisco Cloud APIC. It plays the role of APIC for a cloud site.

Like APIC for on-premises Cisco ACI sites, a Cloud APIC manages network policies for the cloud site it runs in (AWS or Azure), using the Cisco ACI network policy model to describe the policy intent.

Cloud APIC is a software-only solution that is deployed using cloud-native instruments such as CloudFormation templates on AWS and Azure Resource Manager (ARM) templates on Microsoft Azure.

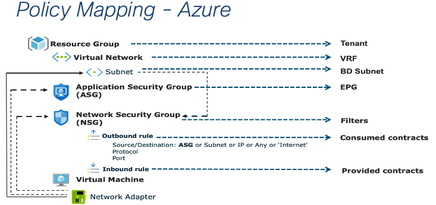

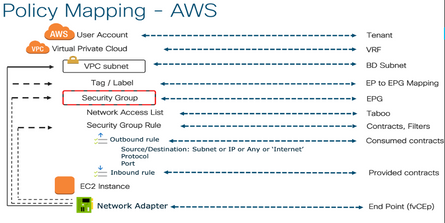

Cloud APIC accomplishes this by translating Cisco ACI network policies into AWS-native and Azure-native network policies:

- Uses the AWS-native policy API to automate the provisioning of the needed AWS-native cloud resources, such as VPCs, cloud routers (Cisco® CSR 1000V Series and AWS Virtual Private Gateway (VGW)), security groups, security group rules, etc.

- Uses the Microsoft Azure-native policy API to automate the provisioning of the needed Microsoft Azure-native cloud resources, such as Virtual Network (VNet), cloud routers (Cisco CSR 1000V Series IOS® XE SD-WAN Routers and Microsoft Azure Virtual Network Gateway), Application Security Group, Network Security Group, etc.

Mapping of ACI policy Constructs to Cloud Native network policies:
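As a rough illustration of this kind of mapping, the sketch below turns a tiny ACI-style intent (one VRF, a few EPGs, one contract) into a list of cloud-native resource descriptions. The mapping table is a simplified assumption built from the resource types named above; the `translate` function, the `Contract` class, and all object names are hypothetical and do not represent the Cloud APIC API or its actual behavior.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumed, simplified mapping of ACI constructs to cloud-native constructs.
# Illustrative only; not an exhaustive or authoritative table.
CONSTRUCT_MAP: Dict[str, Dict[str, str]] = {
    "aws": {
        "vrf": "VPC",
        "epg": "Security Group",
        "contract": "Security Group rule",
    },
    "azure": {
        "vrf": "Virtual Network (VNet)",
        "epg": "Application Security Group",
        "contract": "Network Security Group rule",
    },
}


@dataclass(frozen=True)
class Contract:
    """Hypothetical stand-in for an ACI contract between two EPGs."""
    consumer_epg: str
    provider_epg: str
    protocol: str
    port: int


def translate(cloud: str, vrf: str, epgs: List[str],
              contracts: List[Contract]) -> List[dict]:
    """Describe the cloud-native resources implied by an ACI-style intent."""
    mapping = CONSTRUCT_MAP[cloud]
    resources: List[dict] = [{"type": mapping["vrf"], "name": vrf}]
    resources += [{"type": mapping["epg"], "name": epg} for epg in epgs]
    for c in contracts:
        resources.append({
            "type": mapping["contract"],
            "allow_from": c.consumer_epg,
            "allow_to": c.provider_epg,
            "protocol": c.protocol,
            "port": c.port,
        })
    return resources


web_to_db = Contract("web", "db", "tcp", 3306)
aws_plan = translate("aws", "prod-vrf", ["web", "db"], [web_to_db])
azure_plan = translate("azure", "prod-vrf", ["web", "db"], [web_to_db])
for resource in aws_plan:
    print(resource)
```

The same intent produces a different resource list per cloud, which is the point of the abstraction: the policy is written once and rendered into whatever constructs each provider offers.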

Recommended ATXs: Component Integration: Cisco Cloud APIC on AWS

How does ACI achieve visibility into containerized workloads running on-prem and in the cloud?

A. If Kubernetes makes individual Docker nodes come together, the Container Network Interface (CNI) makes them play together nicely by bridging the networking gap between those nodes.

Cisco ACI CNI gives ACI the ability to manage and implement ACI policy in container networking. It also provides embedded fabric and virtual switch load balancing, with in-fabric PBR.

With ACI CNI, Kubernetes or OpenShift clusters can be configured as a VMM domain in the ACI GUI and managed using the OpFlex and OpenFlow protocols.

The Cisco ACI CNI Plugin is supported with the following container solutions:

- Kubernetes on Ubuntu 20.04

- Red Hat OpenShift 3.11 on RHEL 7

- Red Hat OpenShift 4.x on RHCOS 4.x

- Docker Enterprise on RHEL 7

- Rancher RKE 1.2.x

For the latest support matrix, see the ACI Virtualization Compatibility Matrix.

Leveraging a single abstracted policy model, ACI now allows you to mix and match any type of workload (including containers) and attach them to an overlay network that is always hardware-optimized on premises while relying on software components in the cloud. The container cloud-networking solution is composed of the ACI CNI and the CSR 1000V virtual router. Whether Kubernetes clusters run in the cloud or within the data center no longer matters: they can live in different ACI-managed domains without any compromise on agility and security.

In the cloud, the ACI 5.0 integration relies on Cloud APIC, programming cloud and container networking constructs using native policies.

This gives network admins access to all relevant container information and enables end-to-end visibility from cloud to cloud or cloud to on-prem. It further delivers multi-layer security: at the application layer with the support of Kubernetes Network Policies and container EPGs, at the physical network layer using ACI hardware, and in the cloud by automating native security constructs.
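For readers unfamiliar with Kubernetes Network Policies, the sketch below builds a standard `networking.k8s.io/v1` NetworkPolicy manifest as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The policy name, namespace, labels, and port are hypothetical examples. How the ACI CNI renders such a policy in the fabric is not shown here.

```python
import json
from typing import Dict


def allow_ingress_policy(name: str, namespace: str,
                         target_labels: Dict[str, str],
                         source_labels: Dict[str, str],
                         port: int) -> dict:
    """Build a NetworkPolicy that admits TCP traffic on one port from pods
    matching source_labels to pods matching target_labels."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only pods in the same namespace with these labels may connect.
                "from": [{"podSelector": {"matchLabels": source_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical example: let "web" pods reach "db" pods on PostgreSQL's port.
policy = allow_ingress_policy(
    name="allow-web-to-db",
    namespace="shop",
    target_labels={"app": "db"},
    source_labels={"app": "web"},
    port=5432,
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, assuming the cluster runs a CNI plugin that enforces network policies.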

Recommended ATXs: Architecture Transformation Planning: Cisco ACI and Container Networking

How can one have common policies across Cisco SD-Access and Cisco ACI essentially to simplify policy management?

A. With Cisco SD-Access (SDA) integration with Cisco Application Centric Infrastructure (ACI), you can maintain common policy management across domains by providing common groups for the different domain management applications to leverage. The result is consistent security policy groups in the Cisco SD-Access (SDA) and ACI domains.

How the integration works:

- Cisco DNA Center (DNAC) and ISE are integrated over pxGrid, and group information is exchanged between them.

- Similarly, ISE and APIC-DC exchange groups and member information.

- ISE and APIC-DC exchange SGT-to-EPG mappings, as well as IP endpoint-to-EPG/SGT mappings.
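The exchanged information can be pictured as two lookup tables: one that pairs each SGT with an EPG, and one that binds endpoint IP addresses to a group. The sketch below is purely conceptual. The group names, addresses, and the `resolve_binding` helper are hypothetical and do not reflect the ISE, pxGrid, or APIC data formats.

```python
from typing import Dict, NamedTuple, Optional

# Hypothetical group names and addresses, for illustration only.
SGT_TO_EPG: Dict[str, str] = {
    "Employees": "Employees_EPG",
    "Contractors": "Contractors_EPG",
}
IP_TO_SGT: Dict[str, str] = {
    "10.10.1.25": "Employees",
    "10.10.2.40": "Contractors",
}


class GroupBinding(NamedTuple):
    ip: str
    sgt: str
    epg: str


def resolve_binding(ip: str) -> Optional[GroupBinding]:
    """Return the SGT and the matching EPG for an endpoint IP, if both are known."""
    sgt = IP_TO_SGT.get(ip)
    if sgt is None:
        return None
    epg = SGT_TO_EPG.get(sgt)
    if epg is None:
        return None
    return GroupBinding(ip, sgt, epg)


print(resolve_binding("10.10.1.25"))
print(resolve_binding("192.0.2.1"))
```

Because both domains can resolve the same endpoint to an equivalent group, a rule written against a group in one domain can be applied consistently in the other.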

Recommended ATXs: Expanding to New Use Cases: Cisco ACI and SD-Access Policy Integration

<Useful Links>

- Cisco ACI for Data Center

- ACI Multi-Pod White Paper

- Cisco ACI Multi-Site Architecture White Paper

- Cisco ACI Remote Leaf Architecture White Paper

- Cisco Mini ACI Fabric and Virtual APICs

- Cisco Cloud ACI on AWS White Paper

- Cisco Cloud ACI on Microsoft Azure White Paper

- Cisco ACI and Kubernetes Integration

- Cisco ACI CNI Plugin for Red Hat OpenShift Container Platform

- Cisco SD-Access (SDA) Integration with Cisco Application Centric Infrastructure (ACI)


Need more resources? Access the latest guides, recordings, other FAQs and more via Cisco ACI ATXs Resources. Want to learn more and get real-time Cisco expert advice? Through live Q&A and solution demos, Ask the Experts (ATXs) real-time sessions help you tackle deployment hurdles and learn advanced tips to maximize your use of Cisco technology. View and register for the upcoming Ask the Experts (ATXs) sessions today. [Pro tip: Subscribe to the event listing for new session updates.]
