IOS-XR MPLS-TE Self-Ping

- Current Implementation of MPLS TE LSP Reoptimization

- Potential Issue With Current Implementation of MPLS TE LSP Reoptimization

- Introducing MPLS-TE Self-Ping Feature in IOS XR 7.5.3

- MPLS-TE Self-ping Probe Packet Format

- MPLS-TE Self-ping Operation

- Supported Software Version

- Supported Hardware

- MPLS-TE Self-Ping Feature and NSR

- Configuring MPLS-TE Self-Ping Feature

- Using group-based configuration

- Using regular no-group configuration

- MPLS-TE Self-Ping feature in action

- Topology

- Operation

- Working State

- Failure State

- Debugging Self-ping Feature

- Config Summary

- on head end router:

- on transit / tail-end router

- Related Commands

- Logs to provide to Cisco TAC for MPLS-TE Self-Ping issues

- Article change log

Current Implementation of MPLS TE LSP Reoptimization

In RSVP-TE deployments, LSP make-before-break (reoptimization) is the mechanism for replacing an existing LSP without affecting the traffic it carries. The make-before-break procedure is as follows:

- Create and signal a new LSP.

- Wait for this LSP to be ready to carry traffic or for the HW programming to be done at every hop in the path.

- Switch the traffic from the existing LSP to the newly created one.

- Wait for the HW programming to be done at the head end (HE) router, and then delete the existing/old LSP.

This mechanism is widely used in RSVP-TE networks because it allows changing LSP paths without dropping any traffic.

Potential Issue With Current Implementation of MPLS TE LSP Reoptimization

One of the main parameters of make-before-break is the time the HE router has to wait before switching the traffic onto the new LSP.

If the HE router does not wait long enough and the programming is not completely finished at a given hop along the path, then traffic will be dropped at that hop.

Conversely, if the HE router waits too long before switching the traffic, the old path stays in use longer than needed, which can cause congestion and delays the move of traffic to the new LSP.

Operators use a conservative wait time (the "reopt-install timer") before switching the traffic, to avoid loss over the new LSP. This timer should be long enough for all hops in the LSP path to fully program the path. Even so, traffic blackholing can still happen if some node along the path is very slow in programming its hardware.

Introducing MPLS-TE Self-Ping Feature in IOS XR 7.5.3

Based on RFC 7746, the "MPLS-TE self-ping" feature addresses the problem of determining how long to wait until the new reopt LSP is ready to carry traffic.

In a nutshell, instead of waiting for an arbitrary period of time, the self-ping feature sends probes over the new LSP that loop back to the HE router.

If the probe is received back, the HE router has a solid indication that programming is complete along the path, and it switches the traffic over to the new LSP. A probe takes negligible time (milliseconds) to loop back to the HE router, so the new LSP can be activated much faster while still confirming that the LSP path is really operational.

Note:

There is also a feature called "LSP-Ping" in the MPLS TE engineer's toolbox. "LSP-Ping" is not the same as the "Self-Ping" feature described here.

MPLS-TE Self-ping Probe Packet Format

The probe packet follows the RFC 7746 format. It is an MPLS packet carrying a UDP payload, with the following fields:

- Source IPv4 Address

Set to the destination of the tunnel.

Static value, nonconfigurable in XR.

- Destination IPv4 Address

Set to the router ID of the HE router.

Static value, nonconfigurable in XR.

- IP TTL

Set to 255.

Static value, nonconfigurable in XR.

- IP DSCP (Differentiated Services Code Point)

Set to 110000 (i.e. cs6 / InterNetwork Control).

Static value, nonconfigurable in XR.

- UDP Source Port

Set to a value between 49152-65535.

Statically predetermined value, nonconfigurable in XR.

- UDP Destination Port

Set to the lsp-self-ping port (8503) assigned by IANA.

- Payload of the UDP packet

A 64-bit Session-ID.

This session ID has scope/meaning only in the HE router that generates it. It is used to associate the received probe with the LSP that carried the probe in the downstream direction.
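To make the field list above concrete, here is a minimal Python sketch that assembles the IPv4/UDP portion of such a probe. It is only an illustration and not how IOS XR builds the packet: the names `build_probe` and `ipv4_checksum`, the default source port, the zero UDP checksum (permitted for UDP over IPv4), and the omission of the MPLS label stack are all assumptions made for brevity.

```python
import ipaddress
import struct

LSP_SELF_PING_PORT = 8503   # UDP destination port (lsp-self-ping)
DSCP_CS6 = 0b110000         # cs6 / InterNetwork Control
IP_TTL = 255
IPPROTO_UDP = 17


def ipv4_checksum(header: bytes) -> int:
    """Standard one's-complement checksum over a 20-byte IPv4 header."""
    total = sum(struct.unpack("!10H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_probe(tunnel_dest: str, he_router_id: str, session_id: int,
                udp_src_port: int = 49152) -> bytes:
    """Return IPv4 + UDP + 64-bit Session-ID (hypothetical helper, no MPLS label)."""
    if not 49152 <= udp_src_port <= 65535:
        raise ValueError("UDP source port must be in 49152-65535")

    payload = struct.pack("!Q", session_id)            # 64-bit Session-ID
    udp = struct.pack("!HHHH", udp_src_port, LSP_SELF_PING_PORT,
                      8 + len(payload), 0) + payload   # checksum 0: assumption

    src = ipaddress.IPv4Address(tunnel_dest).packed    # source = tunnel destination
    dst = ipaddress.IPv4Address(he_router_id).packed   # destination = HE router ID
    header = struct.pack("!BBHHHBBH4s4s",
                         0x45,                # version 4, header length 5 words
                         DSCP_CS6 << 2,       # DSCP occupies the upper 6 bits
                         20 + len(udp),       # total length
                         0, 0,                # identification, flags/fragment
                         IP_TTL, IPPROTO_UDP,
                         0,                   # checksum placeholder
                         src, dst)
    checksum = struct.pack("!H", ipv4_checksum(header))
    return header[:10] + checksum + header[12:] + udp


probe = build_probe("202.158.0.7", "202.158.0.6", session_id=0x1122334455667788)
print(len(probe), probe.hex())
```

Because the destination address is the head end's own router ID, the tail end simply routes the packet back, which is why no self-ping configuration is needed anywhere else on the path.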

With the probe packet defined this way:

- No special config needed on transit LSR:

The probe passes transparently through transit LSRs, since it is just another MPLS packet.

- No special config needed on tail end router:

The probe is received as a regular IPv4 packet on the tail-end router and looped back toward the HE router, because its destination IP address is the router ID of the HE router.

MPLS-TE Self-ping Operation

The self-ping kicks in when the new reopt LSP is UP in the control plane, i.e. when the RSVP RESV message is received at the HE router.

The following are the actions that the HE router executes during self-ping:

- The HE router builds a probe message.

- The HE router sends this probe over the new reopt LSP.

- The HE router starts a retry timer (1 second, which is not configurable).

- If the retry timer expires then the HE router sends another probe.

- If the probe is received back, then the HE router declares that the LSP is UP in the forwarding plane and triggers the switchover of the tunnel traffic from the old LSP to the new LSP. The HE router does not send any more probes over this LSP.

- If the HE router does not receive any probe back and it has sent the maximum number of probe packets (a configurable number), it falls back to the previous behavior:

Wait for the "reopt-install timer" to expire before switching the traffic to the new reopt LSP.
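The steps above can be sketched as a small Python simulation. This is a model of the behavior described in this article, not the XR implementation: the function name `simulate_self_ping` and its parameters are hypothetical, a looped-back probe is assumed to return instantly, and the "terminated" outcome (reopt-install timer expiring while probes are still being sent) is inferred from the statistics counters described later in the article.

```python
from typing import Optional, Tuple


def simulate_self_ping(response_on_probe: Optional[int],
                       max_count: int = 60,
                       retry_interval_s: int = 1,
                       reopt_install_s: int = 20) -> Tuple[str, int, int]:
    """Return (outcome, probes_sent, switchover_time_s).

    response_on_probe: 1-based index of the probe that loops back to the
    head end, or None if no probe ever comes back.
    The reopt-install timer is assumed to start together with the first probe.
    """
    probes_sent = 0
    for n in range(1, max_count + 1):
        t = (n - 1) * retry_interval_s          # time at which probe n is due
        if t >= reopt_install_s:
            # Reopt-install timer fired before self-ping finished.
            return ("terminated", probes_sent, reopt_install_s)
        probes_sent += 1                         # build and send probe n
        if response_on_probe is not None and n == response_on_probe:
            # Probe received back: switch traffic immediately.
            return ("succeeded", probes_sent, t)
    # Maximum number of probes sent, none returned: fall back to the timer.
    return ("timed-out", probes_sent, reopt_install_s)


print(simulate_self_ping(response_on_probe=1))              # healthy path
print(simulate_self_ping(response_on_probe=None, max_count=10))  # probes dropped
```

The point of the model is that a successful probe decouples the switchover time from the reopt-install timer, while every failure mode still ends with the timer-based switchover.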

Supported Software Version

This feature is introduced in IOS XR 7.5.3.

Note:

IOS XR 7.5.3 might not be a GA release. Please check with your account team for feature availability.

Supported Hardware

This feature is supported on the ASR9000, NCS5500, and 8000 platforms.

MPLS-TE Self-Ping Feature and NSR

If NSR failover happens during self-ping, the new active RP will restart the self-ping procedure from the beginning and the retry count will be reset.

No stats are replicated to the standby RP.

Configuring MPLS-TE Self-Ping Feature

Note:

- By default, this feature is disabled.

- To enable, the operator needs to add and commit self-ping configuration.

- Only supported with named tunnels, not supported on numbered tunnels.

Two options are available when configuring this feature:

- Using group-based configuration.

- Using regular no-group configuration.

Using group-based configuration

group yotsuha
 mpls traffic-eng
  named-tunnels
   tunnel-te '.*'
    ...
    self-ping
     max-count <1-180>
    !
   !
  !
 !
end-group

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   apply-group yotsuha
  !
 !
!

Using regular no-group configuration

mpls traffic-eng
 named-tunnels
  tunnel-te tokyo
   ...
   self-ping
    max-count <1-180>
   !
  !
 !
!

"max-count" is an optional parameter and denotes the maximum number of probes sent out.

The default maximum number of probes is 60 (when there is no explicit "max-count" config), and the range is 1 to 180.

XR sends out 1 probe packet per second (the rate is hardcoded in 7.5.3), up to this "max-count" number, until it receives the looped probe packet back.

When the probe is received back, the HE router stops sending probe packets, declares that the LSP is UP in the forwarding plane, and triggers the switchover of the tunnel traffic from the old LSP to the new LSP.
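As a small illustration of the "max-count" rules above, the following Python sketch renders the no-group self-ping configuration for a named tunnel and rejects values outside 1 to 180. The helper names `render_self_ping_config` and `worst_case_probe_seconds` are hypothetical; only the configuration keywords and the numeric limits come from this article.

```python
from typing import Optional

MAX_COUNT_MIN, MAX_COUNT_MAX = 1, 180   # documented range
DEFAULT_MAX_COUNT = 60                  # used when max-count is not configured
PROBE_INTERVAL_S = 1                    # hardcoded in 7.5.3


def render_self_ping_config(tunnel_name: str,
                            max_count: Optional[int] = None) -> str:
    """Return the self-ping config for one named tunnel (hypothetical helper)."""
    if max_count is not None and not MAX_COUNT_MIN <= max_count <= MAX_COUNT_MAX:
        raise ValueError("max-count must be between 1 and 180")
    lines = [
        "mpls traffic-eng",
        " named-tunnels",
        f"  tunnel-te {tunnel_name}",
        "   self-ping",
    ]
    if max_count is not None:            # max-count is optional
        lines.append(f"    max-count {max_count}")
    lines += ["   !", "  !", " !", "!"]
    return "\n".join(lines)


def worst_case_probe_seconds(max_count: Optional[int] = None) -> int:
    """Longest time self-ping keeps probing before it gives up."""
    effective = DEFAULT_MAX_COUNT if max_count is None else max_count
    return effective * PROBE_INTERVAL_S


print(render_self_ping_config("tokyo", max_count=30))
print(worst_case_probe_seconds(30))
```

Since probes go out once per second, "max-count" is effectively the self-ping timeout in seconds.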

MPLS-TE Self-Ping feature in action

Topology

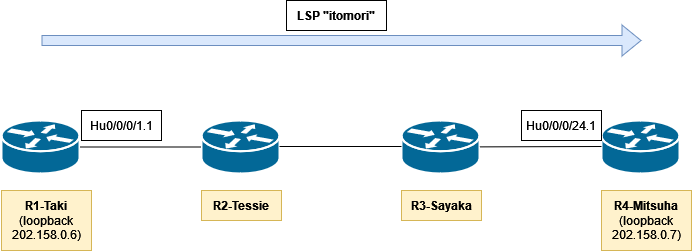

We have the LSP "itomori" with R1-Taki as the head-end router and R4-Mitsuha as the tail-end router.

This LSP will be reoptimized: a new reopt LSP is brought up, self-ping runs, tunnel traffic switches to the reopt LSP, and the old LSP is finally torn down.

Operation

Working State

Let's start from the basics, with no self-ping configured.

With the above topology, we would have something like the following at R1-Taki (only the relevant config is shown; see the "Config Summary" section for a more complete example).

mpls traffic-eng
 ...
 attribute-set path-option one_gig
  signalled-bandwidth 1000000 class-type 0
 !
 attribute-set path-option two_gig
  signalled-bandwidth 2000000 class-type 0
 !
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    preference 10
    computation dynamic
    attribute-set one_gig
   !
   autoroute announce
   !
   destination 202.158.0.7
  !
 !
!

Let's show the LSP info. Note that the "Self-ping" state is currently shown as "Disabled".

RP/0/RP0/CPU0:R1-Taki#sh mpls traffic-eng tunnels name itomori detail

Mon Aug 22 17:39:29.491 -07

Name: itomori Destination: 202.158.0.7 Ifhandle:0xf00065c

Tunnel-ID: 32965

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option yotsuha, preference 10, type dynamic (Basis for Setup, path weight 30)

Accumulative metrics: TE 30 IGP 30 Delay 900000

Path-option attribute: one_gig

Bandwidth: 1000000 (CT0)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 1000000 kbps CT0

Creation Time: Mon Aug 22 17:38:46 2022 (00:00:43 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Delay-measurement: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Load-interval: 300 seconds

Auto-bw: disabled

Auto-Capacity: Disabled:

Self-ping: Disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

SNMP Index: 296

Binding SID: 0

History:

Tunnel has been up for: 00:00:43 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP:

Uptime: 00:00:43 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP Info:

Instance: 2, Signaling Area: IS-IS main level-1

Uptime: 00:00:43 (since Mon Aug 22 17:38:46 -07 2022)

Outgoing Interface: HundredGigE0/0/0/1.1, Outgoing Label: 24000

Router-IDs: local 202.158.0.6

downstream 202.158.0.1

Soft Preemption: None

SRLGs: not collected

Path Info:

Outgoing:

Explicit Route:

Strict, 101.1.2.1

Strict, 101.1.4.3

Strict, 101.1.3.7

Strict, 202.158.0.7

Record Route: Disabled

Tspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info: None

Record Route: Disabled

Fspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Persistent Forwarding Statistics:

Out Bytes: 136

Out Packets: 2

Displayed 1 (of 7) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/RP0/CPU0:R1-Taki#

Now let's induce LSP reoptimization for this LSP by changing its signalled-bandwidth.

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    attribute-set two_gig
   !
  !
 !
!

RP/0/RP0/CPU0:R1-Taki(config)#commit

Mon Aug 22 17:42:22.050 -07

RP/0/RP0/CPU0:Aug 22 17:42:22.117 -07: config[68351]: %MGBL-CONFIG-6-DB_COMMIT : Configuration committed by user 'cafyauto'. Use 'show configuration commit changes 1000000069' to view the changes.

RP/0/RP0/CPU0:R1-Taki(config)#end

RP/0/RP0/CPU0:Aug 22 17:42:23.085 -07: config[68351]: %MGBL-SYS-5-CONFIG_I : Configured from console by cafyauto on vty0 (223.255.254.247)

RP/0/RP0/CPU0:R1-Taki#RP/0/RP0/CPU0:Aug 22 17:42:27.503 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 2) is in re-route pending state.

RP/0/RP0/CPU0:Aug 22 17:42:27.503 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 3) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 22 17:42:27.547 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 3, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 22 17:42:27.548 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 3, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 22 17:42:47.648 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 3): new bandwidth 2000000 kbps

RP/0/RP0/CPU0:Aug 22 17:42:47.648 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 2, new LSP Id: 3) has been reoptimized.

RP/0/RP0/CPU0:Aug 22 17:42:47.648 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 2, new LSP Id: 3) has been reoptimized; reason: Bandwidth CLI Change.

RP/0/RP0/CPU0:Aug 22 17:43:07.748 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 2, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down

Note that it took 20 seconds to get the LSP reoptimized, from the time the new LSP came up (17:42:27, "signalling state changed to Up") until reoptimization was done (17:42:47, "has been reoptimized").

This is because there is an implicit reopt-install timer that delays the install of the new LSP, as described in the "Potential Issue With Current Implementation of MPLS TE LSP Reoptimization" section.

In XR, the reopt-install timer has a default value of 20 seconds, which is why reoptimization took 20 seconds to complete above.
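The delay can also be read off the logs programmatically. The sketch below is a hypothetical Python helper (the names `log_time` and `reopt_delay_seconds` are not part of any Cisco tooling) that measures the time between the "signalling state changed to Up" message of the Reopt LSP and the following LSP_REROUTE_PENDING_CLEAR message. It assumes both messages carry the `Mon DD HH:MM:SS.mmm` timestamp seen above and fall within the same year.

```python
import re
from datetime import datetime

# Matches the syslog timestamp, e.g. "Aug 22 17:42:27.548"
TIMESTAMP = re.compile(r"([A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}\.\d{3})")


def log_time(line: str) -> datetime:
    """Extract the timestamp of one syslog line (year is not present in the log)."""
    match = TIMESTAMP.search(line)
    if match is None:
        raise ValueError("no timestamp found in log line")
    text = " ".join(match.group(1).split())      # normalize repeated spaces
    return datetime.strptime(text, "%b %d %H:%M:%S.%f")


def reopt_delay_seconds(log_lines) -> float:
    """Seconds from 'Reopt LSP signalling Up' to 'has been reoptimized'."""
    up = done = None
    for line in log_lines:
        if up is None and "Role: Reopt" in line \
                and "signalling state changed to Up" in line:
            up = log_time(line)
        elif up is not None and "LSP_REROUTE_PENDING_CLEAR" in line:
            done = log_time(line)
            break
    if up is None or done is None:
        raise ValueError("log does not contain a complete reoptimization")
    return (done - up).total_seconds()


logs = [
    "RP/0/RP0/CPU0:Aug 22 17:42:27.548 -07: te_control[1088]: "
    "%ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,"
    "T:32965, LSP id: 3, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): "
    "signalling state changed to Up",
    "RP/0/RP0/CPU0:Aug 22 17:42:47.648 -07: te_control[1088]: "
    "%ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: "
    "itomori, old LSP Id: 2, new LSP Id: 3) has been reoptimized.",
]
print(reopt_delay_seconds(logs))
```

For the two log lines from the capture above, this yields roughly 20.1 seconds, matching the default reopt-install timer.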

This timer can be tuned. Let's decrease it so that the reoptimized LSP becomes available sooner.

mpls traffic-eng
 reoptimize timers delay installation 15

And try to induce reoptimization again:

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    attribute-set one_gig
   !
  !
 !
!

RP/0/RP0/CPU0:R1-Taki(config)#commit

Mon Aug 22 17:52:06.492 -07

RP/0/RP0/CPU0:Aug 22 17:52:06.558 -07: config[66420]: %MGBL-CONFIG-6-DB_COMMIT : Configuration committed by user 'cafyauto'. Use 'show configuration commit changes 1000000071' to view the changes.

RP/0/RP0/CPU0:R1-Taki(config)#end

RP/0/RP0/CPU0:Aug 22 17:52:07.421 -07: config[66420]: %MGBL-SYS-5-CONFIG_I : Configured from console by cafyauto on vty0 (223.255.254.247)

RP/0/RP0/CPU0:R1-Taki#

RP/0/RP0/CPU0:R1-Taki#RP/0/RP0/CPU0:Aug 22 17:52:27.504 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 3) is in re-route pending state.

RP/0/RP0/CPU0:Aug 22 17:52:27.504 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 4) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 22 17:52:27.549 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 4, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 22 17:52:27.549 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 4, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 22 17:52:42.650 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 4): new bandwidth 1000000 kbps

RP/0/RP0/CPU0:Aug 22 17:52:42.650 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 3, new LSP Id: 4) has been reoptimized.

RP/0/RP0/CPU0:Aug 22 17:52:42.650 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 3, new LSP Id: 4) has been reoptimized; reason: Bandwidth CLI Change.

RP/0/RP0/CPU0:Aug 22 17:53:02.750 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 3, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down

Note that it now took 15 seconds to get the LSP reoptimized, from the time the new LSP came up (17:52:27, "signalling state changed to Up") until reoptimization was done (17:52:42, "has been reoptimized"). That is 5 seconds quicker than before.

We could set "reoptimize timers delay installation" to an even more aggressive value, but the more aggressive the value, the bigger the risk that the LSP starts carrying traffic before the downstream hops are actually ready.

This is where the self-ping feature comes into the picture. Recall that self-ping does not rely on this delay timer; instead it sends probe packets to verify that the downstream data plane is established.

Let's configure self-ping now.

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   self-ping
   !
  !
 !
!

And try to induce reoptimization again:

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    attribute-set two_gig
   !
  !
 !
!

RP/0/RP0/CPU0:Aug 23 11:10:57.608 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 4) is in re-route pending state.

RP/0/RP0/CPU0:Aug 23 11:10:57.608 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 5) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 23 11:10:57.651 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 5, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 23 11:10:57.651 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 5, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 23 11:10:57.653 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 5): new bandwidth 2000000 kbps

RP/0/RP0/CPU0:Aug 23 11:10:57.653 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 4, new LSP Id: 5) has been reoptimized.

RP/0/RP0/CPU0:Aug 23 11:10:57.653 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 4, new LSP Id: 5) has been reoptimized; reason: Bandwidth CLI Change.

RP/0/RP0/CPU0:Aug 23 11:11:17.753 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 4, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down

Now it took 2 milliseconds (!) to get the LSP reoptimized, from the time the new LSP came up (11:10:57.651, "signalling state changed to Up") until reoptimization was done (11:10:57.653, "has been reoptimized").

From 15 seconds down to 2 milliseconds, which is a huge and welcome improvement.

Let's see the self-ping statistics.

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 11:16:22.474 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (00:05:53 ago)

Operations:

Started 1 <--- total number of self-ping operations started.

Running 0 <--- number of self-ping operations currently running.

Successful 1 <--- number of self-ping operations that succeeded (probe packet received back).

Timed-out 0 <--- number of self-ping operations that timed out (probe packet not received back).

Terminated 0 <--- number of self-ping operations that were terminated ("reoptimize timers delay installation" expired before self-ping finished).

Probes sent 1 <--- total number of probe packets sent out.

Probes failed 0 <--- total number of probe packets that failed to be sent out (very rare case).

Received responses 1 (Average response time 00:00:00) <--- total number of probe packets received back on this head end.

Mismatched responses 0 <--- total number of probe packets received back on this head end with a mismatch problem (very rare case).

And the self-ping info for the LSP.

RP/0/RP0/CPU0:R1-Taki#sh mpls traffic-eng tunnels name itomori detail

Tue Aug 23 11:22:26.583 -07

Name: itomori Destination: 202.158.0.7 Ifhandle:0xf00065c

Tunnel-ID: 32965

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option yotsuha, preference 10, type dynamic (Basis for Setup, path weight 30)

Accumulative metrics: TE 30 IGP 30 Delay 900000

Path-option attribute: two_gig

Bandwidth: 2000000 (CT0)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 2000000 kbps CT0

Creation Time: Mon Aug 22 17:38:46 2022 (17:43:40 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Delay-measurement: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Load-interval: 300 seconds

Auto-bw: disabled

Auto-Capacity: Disabled:

Self-ping: Enabled

Maximum-probes: 60

Probes-period: 1 second(s)

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

Self-ping:

Status: Succeeded (in 0 seconds)

LSP-ID: 5

Probes sent: 1

Started: Tue Aug 23 11:10:57 2022 (00:11:29 ago)

Stopped: Tue Aug 23 11:10:57 2022 (00:11:29 ago)

Response Received: Tue Aug 23 11:10:57 2022 (00:11:29 ago)

SNMP Index: 296

Binding SID: 0

History:

Tunnel has been up for: 17:43:40 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP:

Uptime: 00:11:29 (since Tue Aug 23 11:10:57 -07 2022)

Prior LSP:

ID: 4 Path Option: 10

Removal Trigger: reoptimization completed

Current LSP Info:

Instance: 5, Signaling Area: IS-IS main level-1

Uptime: 00:11:29 (since Tue Aug 23 11:10:57 -07 2022)

Outgoing Interface: HundredGigE0/0/0/1.1, Outgoing Label: 24001

Router-IDs: local 202.158.0.6

downstream 202.158.0.1

Soft Preemption: None

SRLGs: not collected

Path Info:

Outgoing:

Explicit Route:

Strict, 101.1.2.1

Strict, 101.1.4.3

Strict, 101.1.3.7

Strict, 202.158.0.7

Record Route: Disabled

Tspec: avg rate=2000000 kbits, burst=1000 bytes, peak rate=2000000 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info: None

Record Route: Disabled

Fspec: avg rate=2000000 kbits, burst=1000 bytes, peak rate=2000000 kbits

Persistent Forwarding Statistics:

Out Bytes: 136

Out Packets: 2

Displayed 1 (of 7) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/RP0/CPU0:R1-Taki#

Note that now:

- More self-ping related info is shown.

- Self-ping Maximum-probes is set to 60 by default, which means the router sends up to 60 probes for this LSP until it receives a probe back. Once a probe makes it back, the router stops sending probes.

- Probes-period is shown as 1 second. This value is not configurable in XR 7.5.3.

Now let's change the value of Maximum-probes from the default of 60 to 30.

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   self-ping
    max-count 30
   !
  !
 !
!

And induce reoptimization again:

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    attribute-set one_gig
   !
  !
 !
!

RP/0/RP0/CPU0:Aug 23 11:31:27.610 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 5) is in re-route pending state.

RP/0/RP0/CPU0:Aug 23 11:31:27.610 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 6) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 23 11:31:27.653 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 6, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 23 11:31:27.653 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 6, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 23 11:31:27.657 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 6): new bandwidth 1000000 kbps

RP/0/RP0/CPU0:Aug 23 11:31:27.657 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 5, new LSP Id: 6) has been reoptimized.

RP/0/RP0/CPU0:Aug 23 11:31:27.657 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 5, new LSP Id: 6) has been reoptimized; reason: Bandwidth CLI Change.

RP/0/RP0/CPU0:Aug 23 11:31:47.757 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 5, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down

The result is similar: it took 4 milliseconds to get the LSP reoptimized, from the time the new LSP came up (11:31:27.653, "signalling state changed to Up") until reoptimization was done (11:31:27.657, "has been reoptimized").

Let's see the self-ping statistics.

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 11:38:46.028 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (00:28:17 ago)

Operations:

Started 2

Running 0

Successful 2

Timed-out 0

Terminated 0

Probes sent 2

Probes failed 0

Received responses 2 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#

Note that max-count has no bearing on how many probes are actually sent when the first probe comes back.

The max-count value only sets the maximum number of probes the router sends while it waits to receive a probe back.

Now let's see the self-ping info for the LSP.

RP/0/RP0/CPU0:R1-Taki#sh mpls traffic-eng tunnels name itomori detail

Tue Aug 23 11:42:49.901 -07

Name: itomori Destination: 202.158.0.7 Ifhandle:0xf00065c

Tunnel-ID: 32965

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option yotsuha, preference 10, type dynamic (Basis for Setup, path weight 30)

Accumulative metrics: TE 30 IGP 30 Delay 900000

Path-option attribute: one_gig

Bandwidth: 1000000 (CT0)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 1000000 kbps CT0

Creation Time: Mon Aug 22 17:38:46 2022 (18:04:03 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Delay-measurement: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Load-interval: 300 seconds

Auto-bw: disabled

Auto-Capacity: Disabled:

Self-ping: Enabled

Maximum-probes: 30

Probes-period: 1 second(s)

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

Self-ping:

Status: Succeeded (in 0 seconds)

LSP-ID: 6

Probes sent: 1

Started: Tue Aug 23 11:31:27 2022 (00:11:22 ago)

Stopped: Tue Aug 23 11:31:27 2022 (00:11:22 ago)

Response Received: Tue Aug 23 11:31:27 2022 (00:11:22 ago)

SNMP Index: 296

Binding SID: 0

History:

Tunnel has been up for: 18:04:03 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP:

Uptime: 00:11:22 (since Tue Aug 23 11:31:27 -07 2022)

Prior LSP:

ID: 5 Path Option: 10

Removal Trigger: reoptimization completed

Current LSP Info:

Instance: 6, Signaling Area: IS-IS main level-1

Uptime: 00:11:22 (since Tue Aug 23 11:31:27 -07 2022)

Outgoing Interface: HundredGigE0/0/0/1.1, Outgoing Label: 24000

Router-IDs: local 202.158.0.6

downstream 202.158.0.1

Soft Preemption: None

SRLGs: not collected

Path Info:

Outgoing:

Explicit Route:

Strict, 101.1.2.1

Strict, 101.1.4.3

Strict, 101.1.3.7

Strict, 202.158.0.7

Record Route: Disabled

Tspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info: None

Record Route: Disabled

Fspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Persistent Forwarding Statistics:

Out Bytes: 136

Out Packets: 2

Displayed 1 (of 7) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/RP0/CPU0:R1-Taki#

Note that now Maximum-probes is correctly updated to 30 as configured.

Failure State

Now let's try a failure scenario where self-ping packets fail to loop back to the head-end router.

Recall that the self-ping probe is a UDP packet whose IP destination is the head-end address (202.158.0.6) and whose UDP destination port is 8503, per RFC 7746.

We can configure an inbound ACL that filters out such packets on R4-Mitsuha's core-facing interface Hu0/0/0/24.1, like so:

ipv4 access-list deny_self_ping
 6 deny udp any host 202.158.0.6 eq 8503
 99999 permit ipv4 any any
!
interface HundredGigE0/0/0/24.1
 ipv4 access-group deny_self_ping ingress
!

Before inducing reoptimization again, let's set the reopt-install timer back to its default value of 20 seconds and set the self-ping max-count to 10.

mpls traffic-eng
 no reoptimize timers delay installation 15
 named-tunnels
  tunnel-te itomori
   self-ping
    max-count 10
   !
  !
 !
!

Reoptimize the LSP:

mpls traffic-eng
 named-tunnels
  tunnel-te itomori
   path-option yotsuha
    attribute-set two_gig
   !
  !
 !
!

RP/0/RP0/CPU0:Aug 23 14:19:27.626 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 6) is in re-route pending state.

RP/0/RP0/CPU0:Aug 23 14:19:27.626 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 7) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 23 14:19:27.669 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 7, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 23 14:19:27.670 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 7, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 23 14:19:47.770 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 7): new bandwidth 2000000 kbps

RP/0/RP0/CPU0:Aug 23 14:19:47.770 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 6, new LSP Id: 7) has been reoptimized.

RP/0/RP0/CPU0:Aug 23 14:19:47.770 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 6, new LSP Id: 7) has been reoptimized; reason: Bandwidth CLI Change.

This time it took 20 seconds to get the LSP reoptimized, measured from the time the new LSP came up (14:19:27, "signalling state changed to Up") until reoptimization was done (14:19:47, "has been reoptimized").
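If you would rather compute this interval than read it off the logs, a small Python sketch like the one below can do it. The helper name `syslog_time` is hypothetical, the two log lines are shortened copies of the syslog messages above ("..." marks text cut for brevity), and the year is passed in explicitly because the syslog prefix does not carry it.

```python
import re
from datetime import datetime

# Matches the "Aug 23 14:19:27.670" part of an IOS XR syslog prefix.
STAMP = re.compile(r"([A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}\.\d{3})")


def syslog_time(line: str, year: int = 2022) -> datetime:
    """Return the timestamp found in a syslog line (year supplied by caller)."""
    match = STAMP.search(line)
    if match is None:
        raise ValueError("no timestamp found in line")
    return datetime.strptime(f"{year} {match.group(1)}", "%Y %b %d %H:%M:%S.%f")


up_line = ("RP/0/RP0/CPU0:Aug 23 14:19:27.670 -07: te_control[1088]: "
           "%ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : ... signalling state changed to Up")
done_line = ("RP/0/RP0/CPU0:Aug 23 14:19:47.770 -07: te_control[1088]: "
             "%ROUTING-MPLS_TE-5-LSP_REOPT : ... has been reoptimized; ...")

elapsed = (syslog_time(done_line) - syslog_time(up_line)).total_seconds()
print(f"Reoptimization took {elapsed:.1f} seconds")
```

For the two lines above the difference is 20.1 seconds, in line with the 20-second reopt-install timer.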

Here's what happened:

- Start reopt timer of 20 seconds.

- Taki router builds a probe message.

- Taki router sends this probe over the LSP, 1 probe each second, up to 10 probes.

- Taki router did not receive any probes back, so self-ping "timed out" after 10 probes (about 10 seconds). The router now falls back to waiting for the 20-second reopt timer to expire.

- Reopt timer expired, traffic switches to new reoptimized LSP and reoptimization is marked as done.

Here's what we got from self-ping statistics.

Before:

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 11:38:46.028 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (00:28:17 ago)

Operations:

Started 2

Running 0

Successful 2

Timed-out 0

Terminated 0

Probes sent 2

Probes failed 0

Received responses 2 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#

Now:

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 14:22:55.565 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (03:12:26 ago)

Operations:

Started 3

Running 0

Successful 2

Timed-out 1

Terminated 0

Probes sent 12

Probes failed 0

Received responses 2 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#
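Comparing the two snapshots by eye works, but the difference can also be computed. The Python sketch below is one possible way to do it; `parse_stats` is a hypothetical helper, and the two text samples are the counter lines from the "Before" and "Now" outputs above.

```python
import re

COUNTERS = ("Started", "Running", "Successful", "Timed-out", "Terminated",
            "Probes sent", "Probes failed", "Received responses",
            "Mismatched responses")


def parse_stats(text: str) -> dict:
    """Extract the known counters from 'show mpls traffic-eng self-ping statistics'."""
    stats = {}
    for line in text.splitlines():
        line = line.strip()
        for name in COUNTERS:
            match = re.match(rf"{re.escape(name)}\s+(\d+)", line)
            if match:
                stats[name] = int(match.group(1))
    return stats


before = parse_stats("""
Started 2
Running 0
Successful 2
Timed-out 0
Terminated 0
Probes sent 2
Probes failed 0
Received responses 2 (Average response time 00:00:00)
Mismatched responses 0
""")

now = parse_stats("""
Started 3
Running 0
Successful 2
Timed-out 1
Terminated 0
Probes sent 12
Probes failed 0
Received responses 2 (Average response time 00:00:00)
Mismatched responses 0
""")

# Keep only the counters that changed between the two snapshots.
delta = {name: now[name] - before[name]
         for name in COUNTERS if now[name] != before[name]}
print(delta)
```

The changed counters are Started (+1), Timed-out (+1) and Probes sent (+10): one more self-ping operation ran, it sent all 10 probes, and it timed out without a response.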

The LSP detail also shows the latest self-ping status as "Timed-out":

RP/0/RP0/CPU0:R1-Taki#sh mpls traffic-eng tunnels name itomori detail

Tue Aug 23 14:32:11.396 -07

Name: itomori Destination: 202.158.0.7 Ifhandle:0xf00065c

Tunnel-ID: 32965

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option yotsuha, preference 10, type dynamic (Basis for Setup, path weight 30)

Accumulative metrics: TE 30 IGP 30 Delay 900000

Path-option attribute: two_gig

Bandwidth: 2000000 (CT0)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 2000000 kbps CT0

Creation Time: Mon Aug 22 17:38:46 2022 (20:53:25 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Delay-measurement: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Load-interval: 300 seconds

Auto-bw: disabled

Auto-Capacity: Disabled:

Self-ping: Enabled

Maximum-probes: 10

Probes-period: 1 second(s)

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

Self-ping:

Status: Timed-out (in 9 seconds)

LSP-ID: 7

Probes sent: 10

Started: Tue Aug 23 14:19:27 2022 (00:12:44 ago)

Stopped: Tue Aug 23 14:19:36 2022 (00:12:35 ago)

SNMP Index: 296

Binding SID: 0

History:

Tunnel has been up for: 20:53:25 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP:

Uptime: 00:12:44 (since Tue Aug 23 14:19:27 -07 2022)

Prior LSP:

ID: 6 Path Option: 10

Removal Trigger: reoptimization completed

Current LSP Info:

Instance: 7, Signaling Area: IS-IS main level-1

Uptime: 00:12:44 (since Tue Aug 23 14:19:27 -07 2022)

Outgoing Interface: HundredGigE0/0/0/1.1, Outgoing Label: 24001

Router-IDs: local 202.158.0.6

downstream 202.158.0.1

Soft Preemption: None

SRLGs: not collected

Path Info:

Outgoing:

Explicit Route:

Strict, 101.1.2.1

Strict, 101.1.4.3

Strict, 101.1.3.7

Strict, 202.158.0.7

Record Route: Disabled

Tspec: avg rate=2000000 kbits, burst=1000 bytes, peak rate=2000000 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info: None

Record Route: Disabled

Fspec: avg rate=2000000 kbits, burst=1000 bytes, peak rate=2000000 kbits

Persistent Forwarding Statistics:

Out Bytes: 136

Out Packets: 2

Displayed 1 (of 7) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/RP0/CPU0:R1-Taki#
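The tunnel detail output is long, so when checking many tunnels it can help to pull out only the self-ping result. Here is a minimal Python sketch, assuming the output format shown above; `SelfPingStatus` and `parse_self_ping` are hypothetical names, and the sample text is just the "Self-ping:" status block copied from the output.

```python
import re
from typing import NamedTuple, Optional


class SelfPingStatus(NamedTuple):
    status: str                 # e.g. "Timed-out"
    seconds: int                # duration reported in "(in N seconds)"
    lsp_id: Optional[int]
    probes_sent: Optional[int]  # None if the line is not present


def parse_self_ping(detail: str) -> Optional[SelfPingStatus]:
    """Find the self-ping status block in 'show mpls traffic-eng tunnels ... detail'."""
    status = re.search(r"Status:\s+(\S+)\s+\(in (\d+) seconds?\)", detail)
    if status is None:
        return None
    rest = detail[status.end():]
    lsp = re.search(r"LSP-ID:\s+(\d+)", rest)
    probes = re.search(r"Probes sent:\s+(\d+)", rest)
    return SelfPingStatus(
        status=status.group(1),
        seconds=int(status.group(2)),
        lsp_id=int(lsp.group(1)) if lsp else None,
        probes_sent=int(probes.group(1)) if probes else None,
    )


sample = """
Self-ping:
Status: Timed-out (in 9 seconds)
LSP-ID: 7
Probes sent: 10
Started: Tue Aug 23 14:19:27 2022 (00:12:44 ago)
Stopped: Tue Aug 23 14:19:36 2022 (00:12:35 ago)
"""

print(parse_self_ping(sample))
```

The "(in N seconds)" suffix is what distinguishes the self-ping status line from the tunnel-level "Status:" header near the top of the output, so the header is not picked up by mistake.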

As you can see from the above experiment, a self-ping failure merely means falling back to the way things were done before:

Wait for the "reopt-install timer" to expire before switching the traffic to the new reoptimized LSP.

Here's an interesting case: what happens if the "reopt-install timer" expires while self-ping is still ongoing?

Let's configure the setup with these values (these are actually default values for both knobs):

mpls traffic-eng

reoptimize timers delay installation 20

named-tunnels

tunnel-te itomori

self-ping

max-count 60

!

!

!

!

With ACL filtering out self-ping packets still active on R4-Mitsuha, now try to reoptimize on R1-Taki:

mpls traffic-eng

named-tunnels

tunnel-te itomori

path-option yotsuha

attribute-set one_gig

!

!

!

!

RP/0/RP0/CPU0:Aug 23 14:50:27.629 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id: 7) is in re-route pending state.

RP/0/RP0/CPU0:Aug 23 14:50:27.629 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id:

RP/0/RP0/CPU0:Aug 23 14:50:27.672 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 8, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 23 14:50:27.672 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 8, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 23 14:50:47.773 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 8): new bandwidth 1000000 kbps

RP/0/RP0/CPU0:Aug 23 14:50:47.773 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 7, new LSP Id:

RP/0/RP0/CPU0:Aug 23 14:50:47.773 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 7, new LSP Id:

RP/0/RP0/CPU0:Aug 23 14:51:07.873 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 7, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down

It took 20 seconds to get the LSP reoptimized, measured from the time the new LSP came up (14:50:27, "signalling state changed to Up") until reoptimization was done (14:50:47, "has been reoptimized").

Here's what happened:

- Start reopt timer of 20 seconds.

- Taki router builds a probe message.

- Taki router sends this probe over the LSP, 1 probe each second, up to 60 probes.

- Taki router did not receive any probes back, and the "reopt-install timer" expired at 20 seconds while self-ping was still ongoing.

- Self-ping is terminated before it finishes, traffic switches to the new reoptimized LSP, and reoptimization is marked as done.
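The interplay between the two knobs can be captured in a small timing model. The Python sketch below is a simplification inferred from the lab results in this article, not IOS XR's actual implementation: `simulate_reopt` and `ReoptOutcome` are hypothetical names, probe 0 is assumed to go out at t=0 with one probe per period, a probe due at the exact moment the install timer expires is assumed to still be sent, and a time-out is assumed to be declared when the last probe goes out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReoptOutcome:
    status: str               # "Successful", "Timed-out" or "Terminated"
    probes_sent: int
    self_ping_stopped_s: int  # seconds after the new LSP came up
    switchover_s: int         # when traffic moves to the new LSP


def simulate_reopt(install_timer_s: int, max_count: int,
                   probe_period_s: int = 1,
                   first_answered_probe: Optional[int] = None) -> ReoptOutcome:
    """Model which event wins: a probe response, probe exhaustion, or the timer."""
    if max_count < 1 or probe_period_s < 1:
        raise ValueError("max_count and probe_period_s must be positive")
    for probe in range(max_count):
        sent_at = probe * probe_period_s
        if sent_at > install_timer_s:
            # Install timer fired first: self-ping is cut short.
            return ReoptOutcome("Terminated", probe,
                                install_timer_s, install_timer_s)
        if first_answered_probe is not None and probe >= first_answered_probe:
            # A probe came back: switch right away, no need to wait for the timer.
            return ReoptOutcome("Successful", probe + 1, sent_at, sent_at)
    # Every probe was sent and none returned: fall back to the install timer.
    last_sent = (max_count - 1) * probe_period_s
    return ReoptOutcome("Timed-out", max_count, last_sent, install_timer_s)


print(simulate_reopt(20, 10))                          # ACL in place, max-count 10
print(simulate_reopt(20, 60))                          # ACL in place, max-count 60
print(simulate_reopt(20, 60, first_answered_probe=0))  # no ACL, first probe returns
```

With a 20-second timer, max-count 10 gives "Timed-out" after 10 probes with switchover at 20 seconds, max-count 60 gives "Terminated" after 21 probes with switchover at 20 seconds, and an answered first probe gives "Successful" with an immediate switchover.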

Here's what we got from self-ping statistics.

Before:

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 14:22:55.565 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (03:12:26 ago)

Operations:

Started 3

Running 0

Successful 2

Timed-out 1

Terminated 0

Probes sent 12

Probes failed 0

Received responses 2 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#

Now:

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 15:02:03.161 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 11:10:29 2022 (03:51:34 ago)

Operations:

Started 4

Running 0

Successful 2

Timed-out 1

Terminated 1

Probes sent 33

Probes failed 0

Received responses 2 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#

In the LSP info, note that the last self-ping status is now shown as "Terminated":

RP/0/RP0/CPU0:R1-Taki#sh mpls traffic-eng tunnels name itomori detail

Tue Aug 23 15:04:19.833 -07

Name: itomori Destination: 202.158.0.7 Ifhandle:0xf00065c

Tunnel-ID: 32965

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option yotsuha, preference 10, type dynamic (Basis for Setup, path weight 30)

Accumulative metrics: TE 30 IGP 30 Delay 900000

Path-option attribute: one_gig

Bandwidth: 1000000 (CT0)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 1000000 kbps CT0

Creation Time: Mon Aug 22 17:38:46 2022 (21:25:33 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (global)

Path Selection:

Tiebreaker: Min-fill (default)

Hop-limit: disabled

Cost-limit: disabled

Delay-limit: disabled

Delay-measurement: disabled

Path-invalidation timeout: 10000 msec (default), Action: Tear (default)

AutoRoute: enabled LockDown: disabled Policy class: not set

Forward class: 0 (not enabled)

Forwarding-Adjacency: disabled

Autoroute Destinations: 0

Loadshare: 0 equal loadshares

Load-interval: 300 seconds

Auto-bw: disabled

Auto-Capacity: Disabled:

Self-ping: Enabled

Maximum-probes: 60

Probes-period: 1 second(s)

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

BFD Fast Detection: Disabled

Reoptimization after affinity failure: Enabled

Soft Preemption: Disabled

Self-ping:

Status: Terminated (in 20 seconds)

LSP-ID: 8

Started: Tue Aug 23 14:50:27 2022 (00:13:52 ago)

Stopped: Tue Aug 23 14:50:47 2022 (00:13:32 ago)

SNMP Index: 296

Binding SID: 0

History:

Tunnel has been up for: 21:25:33 (since Mon Aug 22 17:38:46 -07 2022)

Current LSP:

Uptime: 00:13:52 (since Tue Aug 23 14:50:27 -07 2022)

Prior LSP:

ID: 7 Path Option: 10

Removal Trigger: reoptimization completed

Current LSP Info:

Instance: 8, Signaling Area: IS-IS main level-1

Uptime: 00:13:52 (since Tue Aug 23 14:50:27 -07 2022)

Outgoing Interface: HundredGigE0/0/0/1.1, Outgoing Label: 24000

Router-IDs: local 202.158.0.6

downstream 202.158.0.1

Soft Preemption: None

SRLGs: not collected

Path Info:

Outgoing:

Explicit Route:

Strict, 101.1.2.1

Strict, 101.1.4.3

Strict, 101.1.3.7

Strict, 202.158.0.7

Record Route: Disabled

Tspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info: None

Record Route: Disabled

Fspec: avg rate=1000000 kbits, burst=1000 bytes, peak rate=1000000 kbits

Persistent Forwarding Statistics:

Out Bytes: 136

Out Packets: 2

Displayed 1 (of 7) heads, 0 (of 0) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

RP/0/RP0/CPU0:R1-Taki#

Debugging Self-ping Feature

For troubleshooting, we can activate debug for self-ping to get more granular info:

RP/0/RP0/CPU0:R1-Taki#debug mpls traffic-eng self-ping error

RP/0/RP0/CPU0:R1-Taki#debug mpls traffic-eng self-ping event

RP/0/RP0/CPU0:Aug 23 15:09:57.631 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING : itomori (signalled-name: itomori, LSP Id:

RP/0/RP0/CPU0:Aug 23 15:09:57.631 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_EXPLICITROUTE : itomori (signalled-name: itomori, LSP Id: 9) explicit-route, 101.1.2.1, 101.1.4.3, 101.1.3.7, 202.158.0.7.

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_RECORDROUTE : itomori (signalled-name: itomori, T:32965, LSP Id: 9, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7) record-route empty.

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 9, Role: Reopt, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Up

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[9961], FSM: Sending SP-START msg

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[9961], probes_max 60, probe_period 1, tunnel_id 32965, lsp_id 9, src_addr 202.158.0.7, dst_addr 202.158.0.6, nexthop 101.1.2.1

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[9961], [140340004452288] te_selfping_send_msg id 0

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], [140339901060864] SP-START: Received probes_max 60, probe_period 1, tunnel_id 32965, lsp_id 9, src_addr 202.158.0.7, dst_addr 202.158.0.6, label 24001, if 251660008, nexthop 101.1.2.1

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], Created self-ping ctx 0x7fa338007c60

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], Retry timer - START

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], Created SP ipv4 probe: 202.158.0.7:64206 -> 202.158.0.6:8503, mpls label 24001, ttl 255, traffic-class: 0, dscp: 48

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], NETIO: Sending probe tunnel-id 32965, lsp-id 9, probe number 0

RP/0/RP0/CPU0:Aug 23 15:09:57.675 -07: te_control[1088]: DBG-SP-EVT[10088], [140339901060864] te_start_selfping_stats (0x7fa379185c68)

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], SP-UDP: Packet received size 60

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], SP-UDP: RESPONSE tunnel_id 32965, lsp_id 9, probe_number 0

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], [140339901060864] te_stop_selfping_stats (0x7fa379185c68)

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], FSM: Sending SP-RESULT msg

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], status 4, tunnel_id 32965, lsp_id 9, first_probe_sent 1661292597, last_probe_sent 1661292597, response_received 1661292597, probes_sent 1

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], [140339901060864] te_selfping_send_msg id 2

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], Retry timer - STOP

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], Deleting self-ping ctx 0x7fa338007c60

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[10088], SP-UDP: free pkt buffer

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[9961], [140340004452288] SP-RESULT: Received tunnel_id 32965, lsp_id 9, probes_sent 1

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[9961], Install timer - STOP

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-EVT[9961], Switch to reopt lsp

RP/0/RP0/CPU0:Aug 23 15:09:57.678 -07: te_control[1088]: DBG-SP-ERR[10088], SP-UDP: Packet read error (No data available)

RP/0/RP0/CPU0:Aug 23 15:09:57.679 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_BW_CHANGE : Bandwidth change on itomori (signalled-name: itomori, LSP id: 9): new bandwidth 2000000 kbps

RP/0/RP0/CPU0:Aug 23 15:09:57.679 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REROUTE_PENDING_CLEAR : itomori (signalled-name: itomori, old LSP Id: 8, new LSP Id: 9) has been reoptimized.

RP/0/RP0/CPU0:Aug 23 15:09:57.679 -07: te_control[1088]: %ROUTING-MPLS_TE-5-LSP_REOPT : itomori (signalled-name: itomori, old LSP Id: 8, new LSP Id: 9) has been reoptimized; reason: Bandwidth CLI Change.

RP/0/RP0/CPU0:Aug 23 15:10:17.779 -07: te_control[1088]: %ROUTING-MPLS_TE-5-S2L_SIGNALLING_STATE : itomori (signalled-name: itomori,T:32965, LSP id: 8, Role: Clean, src: 202.158.0.6, dest: 202.158.0.7): signalling state changed to Down
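The SP-RESULT debug line reports its probe times (first_probe_sent, last_probe_sent, response_received) as Unix epoch seconds. A short Python sketch such as the following can convert them for comparison with the syslog timestamps. The function name `decode_sp_result` is hypothetical, the fixed -07:00 offset is taken from the "-07" shown in the log prefix, and no attempt is made to interpret the numeric `status` field.

```python
import re
from datetime import datetime, timedelta, timezone

LAB_TZ = timezone(timedelta(hours=-7))  # the "-07" offset in the log timestamps
EPOCH_FIELDS = ("first_probe_sent", "last_probe_sent", "response_received")


def decode_sp_result(line: str) -> dict:
    """Convert the epoch fields of an SP-RESULT debug line to local time strings."""
    decoded = {}
    for field in EPOCH_FIELDS:
        match = re.search(rf"{field} (\d+)", line)
        if match:
            stamp = datetime.fromtimestamp(int(match.group(1)), tz=LAB_TZ)
            decoded[field] = stamp.strftime("%Y-%m-%d %H:%M:%S")
    return decoded


debug_line = ("DBG-SP-EVT[10088], status 4, tunnel_id 32965, lsp_id 9, "
              "first_probe_sent 1661292597, last_probe_sent 1661292597, "
              "response_received 1661292597, probes_sent 1")

for field, when in decode_sp_result(debug_line).items():
    print(f"{field}: {when}")
```

All three fields decode to 2022-08-23 15:09:57, matching the syslog timestamps of the probe and its response: the first probe was answered within the same second.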

Don't forget to undebug once troubleshooting is done.

RP/0/RP0/CPU0:R1-Taki#undebug mpls traffic-eng self-ping error

RP/0/RP0/CPU0:R1-Taki#undebug mpls traffic-eng self-ping event

We can also clear self-ping statistics:

RP/0/RP0/CPU0:R1-Taki#clear mpls traffic-eng self-ping statistics

Tue Aug 23 15:03:19.222 -07

RP/0/RP0/CPU0:R1-Taki#show mpls traffic-eng self-ping statistics

Tue Aug 23 15:03:20.631 -07

Self-Ping Statistics:

Collected since: Tue Aug 23 15:03:19 2022 (00:00:01 ago)

Operations:

Started 0

Running 0

Successful 0

Timed-out 0

Terminated 0

Probes sent 0

Probes failed 0

Received responses 0 (Average response time 00:00:00)

Mismatched responses 0

RP/0/RP0/CPU0:R1-Taki#

The self-ping statistics are now cleared.

Config Summary

on head end router:

ipv4 unnumbered mpls traffic-eng Loopback0

interface Loopback0

ipv4 address 202.158.0.6 255.255.255.255

ipv6 address 202:158::6/128

!

interface HundredGigE0/0/0/1.1

ipv4 address 101.1.2.6 255.255.255.0

ipv6 address 101:1:2::6/64

encapsulation dot1q 1

!

router isis main

net 47.0005.6666.6666.6666.00

nsr

distribute link-state

log adjacency changes

address-family ipv4 unicast

metric-style wide

mpls traffic-eng level-1-2

mpls traffic-eng router-id Loopback0

router-id Loopback0

!

address-family ipv6 unicast

metric-style wide

router-id Loopback0

!

interface Loopback0

passive

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

interface HundredGigE0/0/0/1.1

point-to-point

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

!

rsvp

interface HundredGigE0/0/0/1.1

bandwidth 10000000

!

!

mpls traffic-eng

interface HundredGigE0/0/0/1.1

!

logging events all

attribute-set path-option one_gig

signalled-bandwidth 1000000 class-type 0

!

attribute-set path-option two_gig

signalled-bandwidth 2000000 class-type 0

!

attribute-set path-option nine_gig

signalled-bandwidth 9000000 class-type 0

!

soft-preemption

!

reoptimize timers delay installation 20

named-tunnels

tunnel-te itomori

path-option yotsuha

preference 10

computation dynamic

attribute-set two_gig

!

self-ping

max-count 60

!

autoroute announce

!

destination 202.158.0.7

!

!

!

on transit / tail-end router

No special config is needed on the transit and tail-end routers.

Here's the relevant config on the tail-end router:

ipv4 unnumbered mpls traffic-eng Loopback0

interface Loopback0

ipv4 address 202.158.0.7 255.255.255.255

ipv6 address 202:158::7/128

!

interface HundredGigE0/0/0/24.1

ipv4 address 101.1.3.7 255.255.255.0

ipv6 address 101:1:3::7/64

encapsulation dot1q 1

!

router isis main

net 47.0005.7777.7777.7777.00

nsr

distribute link-state

log adjacency changes

address-family ipv4 unicast

metric-style wide

mpls traffic-eng level-1-2

mpls traffic-eng router-id Loopback0

router-id Loopback0

!

address-family ipv6 unicast

metric-style wide

router-id Loopback0

!

interface Loopback0

passive

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

interface HundredGigE0/0/0/24.1

point-to-point

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

!

rsvp

interface HundredGigE0/0/0/24.1

bandwidth 100000000

!

!

mpls traffic-eng

interface HundredGigE0/0/0/24.1

!

logging events all

!

soft-preemption

!

!

Related Commands

show mpls traffic-eng tunnels name itomori detail

show mpls traffic-eng self-ping statistics

clear mpls traffic-eng self-ping statistics

debug mpls traffic-eng self-ping error

debug mpls traffic-eng self-ping event

Logs to provide to Cisco TAC for MPLS-TE Self-Ping issues

If possible, activate "debug mpls traffic-eng self-ping" so that granular info is recorded in syslog.

Then collect these logs:

show log | file harddisk:/show_log.txt

show run | file harddisk:/show_run.txt

sh tech mpls traffic-eng file harddisk:/show_tech_mpls_traffic_eng

Article change log

- 2022/08/24: Initial article post
