Re: Multipod configuration did not work

11-25-2020 05:06 PM

I have done the entire Multipod configuration and have already redone the leafs and spines about three times.

Here is some information:

This is my second fabric, so I set it to Fabric ID 2.

I got as far as discovering the POD2 spines. However, after configuring the IPN and Multipod, they remain stuck in the "discovering" state.

In this fabric, POD1 has only one APIC and POD2 has the other two, and I am starting the configuration from POD1. Is there a minimum number of APICs required to bring up Multipod?

Could I bring up all of POD2 first and start configuring from there?

Labels: ACI Multi-Site, ACI Virtual Pod

Accepted Solutions

11-26-2020 06:45 AM

Hello Robert

I have now configured it with Fabric ID 1, but I still have the same problem.

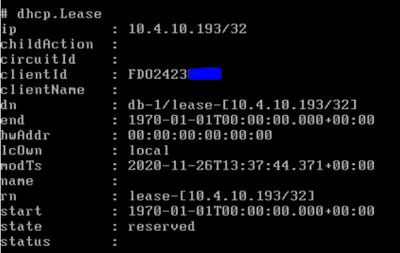

I configured Multipod and registered the POD2 spines. I can see that the APIC has allocated their IPs as "Reserved", and when I check LLDP on the IPN switch directly connected to the POD2 spines, I already see them with the correct names.

But POD2 still has not come up.

11-25-2020 05:28 PM - edited 11-25-2020 05:29 PM

First, don't use anything but Fabric ID 1; you distinguish fabrics by name. Second, you can discover the second pod fine with a single controller. If the spines are stuck in discovering, you most likely missed some configuration on the IPN. Recheck everything, such as the DHCP relay and the other critical OSPF configuration.

Robert
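
As a rough illustration of the kind of IPN recheck suggested above, the sketch below models one IPN subinterface facing a spine as a plain Python object and flags common omissions. The `IpnSubinterface` class, the `check_ipn_subinterface` helper, the interface name and the APIC addresses are all hypothetical. The checks (802.1Q VLAN 4 on the spine-facing subinterface, OSPF enabled, a DHCP relay toward each APIC, and a jumbo MTU) reflect commonly documented Multipod IPN requirements, and the 9150-byte MTU threshold is an assumed value; verify all of them against the Cisco Multipod documentation for your release.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical model of one IPN subinterface facing a spine.
# Field names are illustrative only, not taken from any Cisco API.
@dataclass
class IpnSubinterface:
    name: str
    dot1q_vlan: int
    mtu: int
    ospf_enabled: bool
    dhcp_relays: List[str] = field(default_factory=list)

# Assumed minimum IPN MTU (a commonly used value, not a universal rule).
MIN_IPN_MTU = 9150

def check_ipn_subinterface(intf: IpnSubinterface, apic_ips: List[str]) -> List[str]:
    """Return human-readable problems found on one spine-facing IPN subinterface."""
    problems = []
    # Multipod spines tag their IPN-facing traffic with 802.1Q VLAN 4.
    if intf.dot1q_vlan != 4:
        problems.append(f"{intf.name}: dot1q VLAN is {intf.dot1q_vlan}, expected 4")
    if intf.mtu < MIN_IPN_MTU:
        problems.append(f"{intf.name}: MTU {intf.mtu} is below {MIN_IPN_MTU}")
    if not intf.ospf_enabled:
        problems.append(f"{intf.name}: OSPF is not enabled")
    # Remote-pod spines obtain their addresses via DHCP relayed to the APICs.
    for ip in apic_ips:
        if ip not in intf.dhcp_relays:
            problems.append(f"{intf.name}: missing DHCP relay to APIC {ip}")
    return problems

# Hypothetical example: relay configured toward only one of three APICs.
apics = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
intf = IpnSubinterface("Eth1/1.4", dot1q_vlan=4, mtu=9150,
                       ospf_enabled=True, dhcp_relays=["10.0.0.1"])
for problem in check_ipn_subinterface(intf, apics):
    print(problem)
```

Collecting every problem instead of stopping at the first one mirrors the advice to "recheck everything": a single pass over each spine-facing subinterface lists all the gaps at once.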

11-26-2020 07:49 AM

Do you have any leafs connected (physically cabled and powered up) to the spine in Pod2?

Also, when you reconfigured the Fabric ID (which can only be done by erasing everything), did you also re-initialize the switches in Pod2 by running the 'setup-clean-config.sh' script locally on the spine in Pod2?

Robert

11-26-2020 01:36 PM

I redid everything in POD2, but without success.

I then checked the IP addresses on the OSPF interfaces of the spines and the IPN and found they had been mixed up. After fixing the IPs, Multipod came up.

Thank you for your help!
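
Since the root cause here turned out to be mixed-up IP addresses on the OSPF interfaces between the spines and the IPN, a quick sanity check of the planned addressing may catch this kind of mistake early. The sketch below assumes each spine-to-IPN link is a numbered point-to-point subnet and that both interface addresses are available in CIDR form; the `check_p2p_link` helper, link names and addresses are hypothetical. It uses only Python's standard `ipaddress` module.

```python
import ipaddress
from typing import Optional

def check_p2p_link(spine_side: str, ipn_side: str) -> Optional[str]:
    """Return a problem description for one spine<->IPN link, or None if consistent."""
    a = ipaddress.ip_interface(spine_side)
    b = ipaddress.ip_interface(ipn_side)
    # Both ends of a point-to-point link should sit in the same subnet.
    if a.network != b.network:
        return f"subnet mismatch: {a.network} vs {b.network}"
    if a.ip == b.ip:
        return f"duplicate address {a.ip} on both ends"
    # On subnets larger than /31, the network and broadcast addresses are not usable hosts.
    for side in (a, b):
        net = side.network
        if net.prefixlen < net.max_prefixlen - 1 and side.ip in (
            net.network_address, net.broadcast_address
        ):
            return f"{side.ip} is the network or broadcast address of {net}"
    return None

# Hypothetical planned addressing for two spine-facing links.
links = {
    "pod2-spine1 <-> ipn1": ("192.168.2.1/30", "192.168.2.2/30"),
    "pod2-spine2 <-> ipn1": ("192.168.2.5/30", "192.168.2.2/30"),  # wrong IPN address
}

for name, (spine_ip, ipn_ip) in links.items():
    problem = check_p2p_link(spine_ip, ipn_ip)
    print(f"{name}: {problem or 'OK'}")
```

Running a check like this against the addressing plan before touching the switches is cheaper than comparing `show` output interface by interface once the pod is stuck in discovery.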
