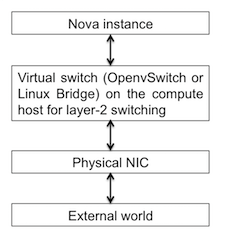

In an OpenStack deployment, when packets from a nova instance need to reach the external world, they first go through the virtual switch (OpenvSwitch or Linux Bridge) for layer-2 switching, then through the physical NIC of the compute host, and then leave the compute host. Hence, the path of the packets is:

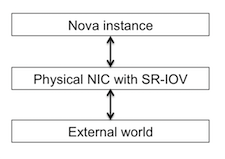

When Single Root I/O Virtualisation (SR-IOV) and PCI pass-through are deployed in OpenStack, the packets from the nova instance do not use the virtual switch (OpenvSwitch or Linux Bridge). They go directly through the physical NIC and then leave the compute host, bypassing the virtual switch. In this case, the physical NIC does the layer-2 switching of the packets. Hence, the path of the packets is:

A virtual switch (OpenvSwitch or Linux Bridge) may also be used with SR-IOV if an instance needs a virtual switch for layer-2 switching. Because a virtual switch runs in software on the host CPU, it does not scale as well as a physical NIC when the packet rate is high.

To deploy SR-IOV on Cisco's UCS servers, we need the UCSM ml2 neutron plugin developed by Cisco. Here are a few links about the UCSM ml2 neutron plugin:

ML2 Mechanism Driver for Cisco UCS Manager — Neutron Specs ed6fc9a documentation

OpenStack/UCS Mechanism Driver for ML2 Plugin Liberty - DocWiki

Cisco UCS Manager ML2 Plugin for Openstack Networking : Blueprints : neutron

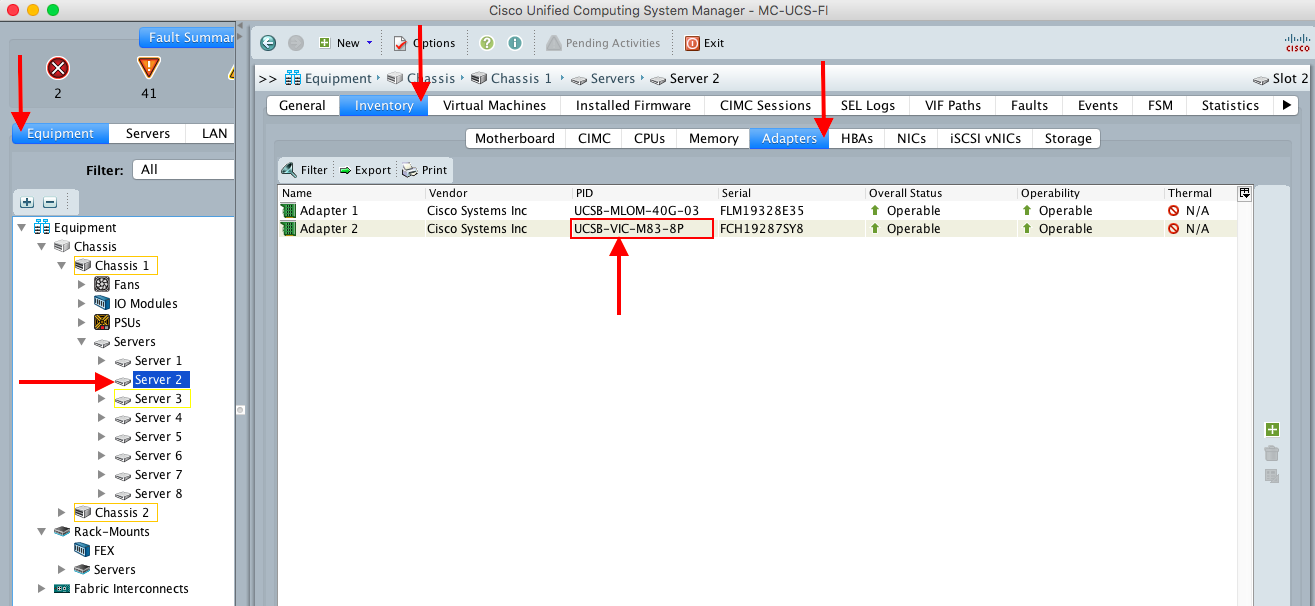

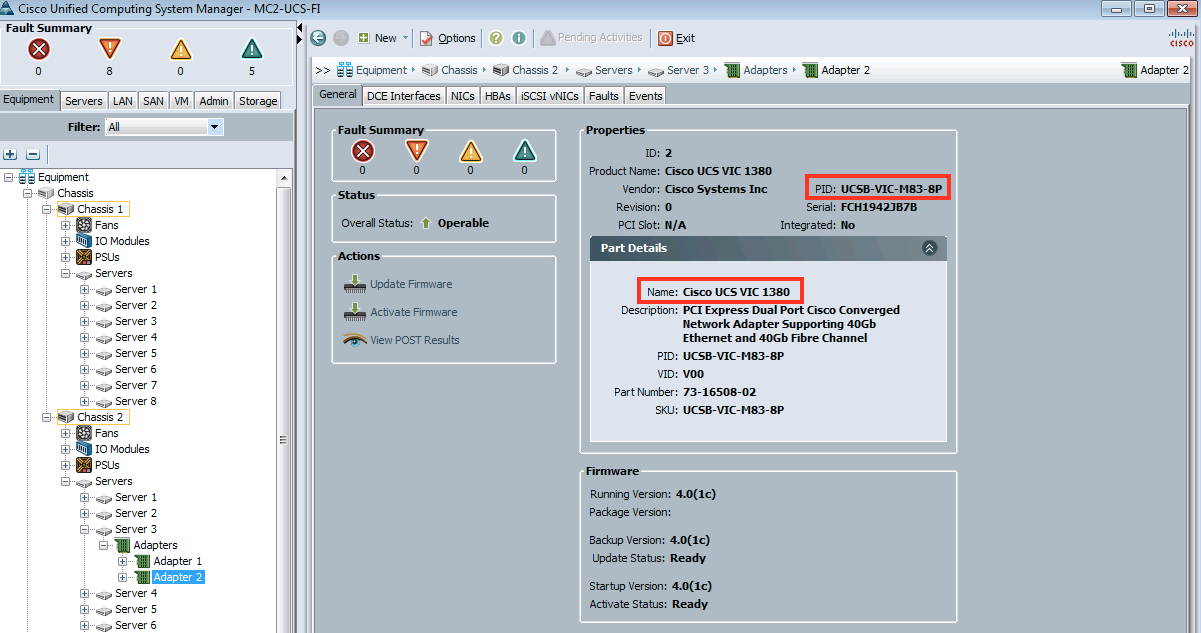

SR-IOV needs specific NICs and currently works only on the following Cisco VICs:

- Cisco VIC 1380 on UCS B-series M4 blades

- Cisco VIC 1340 on UCS B-series M4 blades

- Cisco VIC 1240 on UCS B-series M3 blades
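Whether a given NIC exposes SR-IOV at all can be checked on the compute host before any OpenStack configuration is touched. The sketch below assumes the standard Linux sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The function name and its optional second argument (an alternate sysfs root, useful for dry runs) are illustrative and not part of any OpenStack or Cisco tooling.

```shell
# Print "<configured VFs>/<maximum VFs>" for a physical function (PF).
# Usage: sriov_vf_counts <interface> [sysfs net root]
# The second argument is a hypothetical convenience for testing;
# it defaults to /sys/class/net.
sriov_vf_counts() {
    iface="$1"
    root="${2:-/sys/class/net}"
    dev="$root/$iface/device"
    # sriov_totalvfs is present only when the PCI device supports SR-IOV.
    if [ ! -r "$dev/sriov_totalvfs" ]; then
        echo "no SR-IOV capability found for $iface" >&2
        return 1
    fi
    total=$(cat "$dev/sriov_totalvfs")
    num=$(cat "$dev/sriov_numvfs")
    echo "$num/$total"
}
```

Running `sriov_vf_counts eth3` on the compute host prints the number of enabled VFs followed by the maximum the device reports. A non-zero exit status suggests the interface is not SR-IOV capable.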

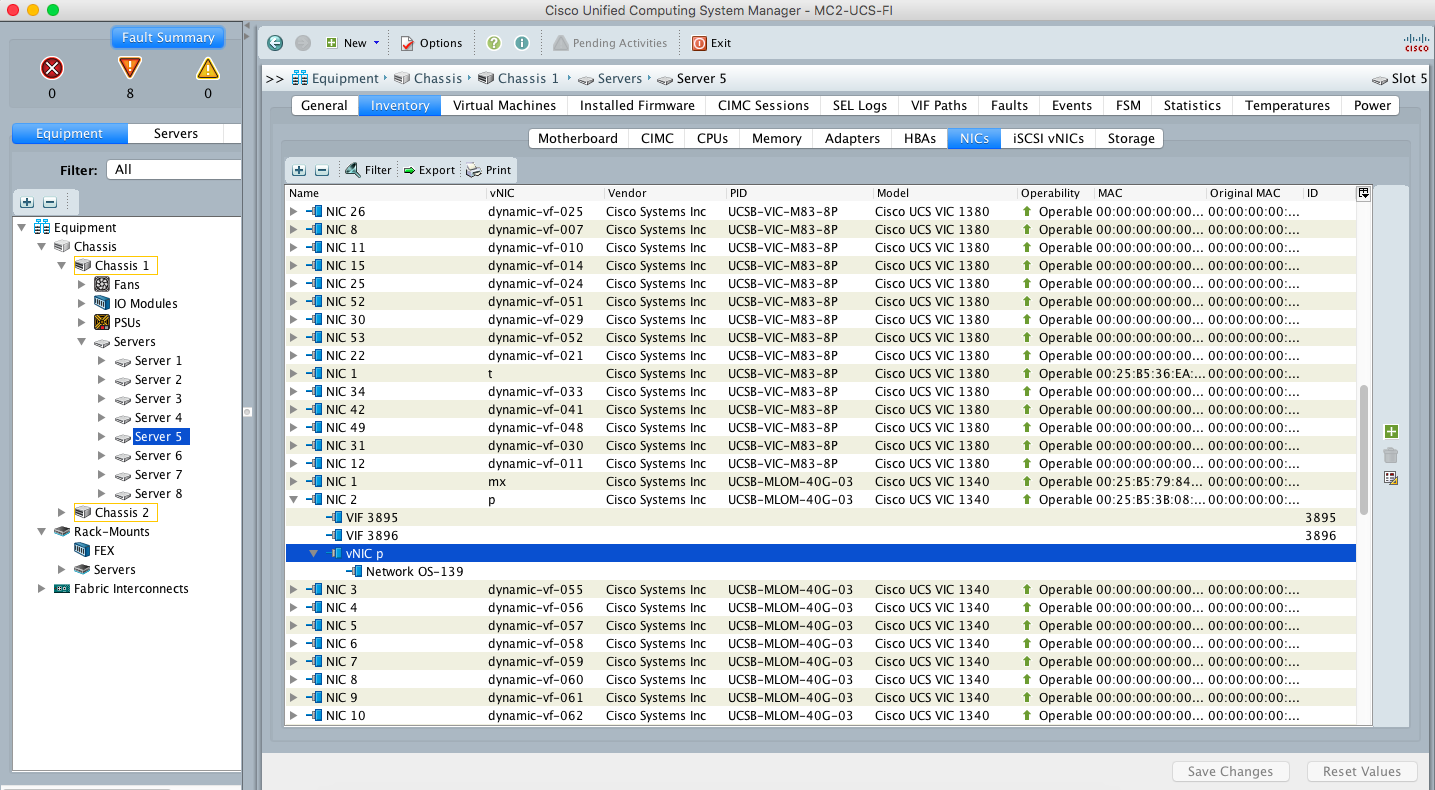

Here is the Cisco VIC 1380 in the UCSM GUI:

Below are the neutron and nova configurations needed to enable SR-IOV in OpenStack:

In /etc/neutron/plugins/ml2/ml2_conf_sriov.ini:

[sriov_nic]

physical_device_mappings = physnet2:eth3

exclude_devices =

In /etc/nova/nova.conf:

[DEFAULT]

pci_passthrough_whitelist = { "devname": "eth3", "physical_network": "physnet2"}
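The device named in nova.conf has to be the same one that the SR-IOV agent maps to a physical network, and a typo in either file is easy to miss. The following is a minimal sketch of a consistency check, assuming both settings sit on a single line as shown above. The function names are made up for this example.

```shell
# Print "<physical_network> <device>" for each physical_device_mappings entry.
# $1: path to the SR-IOV agent config (for example ml2_conf_sriov.ini)
sriov_mappings() {
    sed -n 's/^[[:space:]]*physical_device_mappings[[:space:]]*=[[:space:]]*//p' "$1" |
        tr ',' '\n' |
        sed 's/[[:space:]]//g' |
        awk -F: 'NF == 2 { print $1, $2 }'
}

# Print the devname from the pci_passthrough_whitelist line.
# $1: path to nova.conf
whitelist_devname() {
    sed -n 's/^[[:space:]]*pci_passthrough_whitelist[[:space:]]*=.*"devname"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Succeed if the whitelisted device also appears in physical_device_mappings.
# $1: SR-IOV agent config, $2: nova.conf
sriov_config_consistent() {
    dev=$(whitelist_devname "$2")
    [ -n "$dev" ] &&
        sriov_mappings "$1" |
        awk -v d="$dev" '$2 == d { found = 1 } END { exit !found }'
}
```

With the files from this post, `sriov_config_consistent /etc/neutron/plugins/ml2/ml2_conf_sriov.ini /etc/nova/nova.conf` should exit with status 0, since both refer to eth3.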

Pass /etc/neutron/plugins/ml2/ml2_conf_sriov.ini as --config-file and start neutron-server.

$ neutron-server \

--config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugin.ini \

--config-file /etc/neutron/plugins/ml2/ml2_conf_sriov.ini

Pass /etc/neutron/plugins/ml2/ml2_conf_sriov.ini as --config-file and start neutron-sriov-nic-agent.

$ neutron-sriov-nic-agent \

--config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf_sriov.ini

OpenStack Docs: Using SR-IOV functionality

OpenStack Docs: Attaching physical PCI devices to guests

Not all OpenStack images can be booted with an SR-IOV port. The image must include the enic driver shown below, which interacts with the VF (Virtual Function), for SR-IOV to work.

$ modinfo enic

filename: /lib/modules/3.10.0-327.10.1.el7.x86_64/kernel/drivers/net/ethernet/cisco/enic/enic.ko

version: 2.1.1.83

license: GPL

author: Scott Feldman <scofeldm@cisco.com>

description: Cisco VIC Ethernet NIC Driver

rhelversion: 7.2

srcversion: E3ADA231AA76168CA78577A

alias: pci:v00001137d00000071sv*sd*bc*sc*i*

alias: pci:v00001137d00000044sv*sd*bc*sc*i*

alias: pci:v00001137d00000043sv*sd*bc*sc*i*

depends:

intree: Y

vermagic: 3.10.0-327.10.1.el7.x86_64 SMP mod_unload modversions

signer: Red Hat Enterprise Linux kernel signing key

sig_key: E3:9A:6C:00:A1:DE:4D:FA:F5:90:62:8C:AB:EC:BC:EB:07:66:32:8A

sig_hashalgo: sha256
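When checking several guest images, it can be handy to pull just the driver version and the supported PCI IDs out of that output. This is a small sketch that parses `modinfo` text from standard input; the function names are illustrative. The printed IDs can then be compared by eye with what `lspci -nn` reports inside the guest.

```shell
# Print the value of the "version:" field from `modinfo` output on stdin.
# Fields such as "rhelversion:" and "srcversion:" are deliberately ignored.
modinfo_version() {
    awk '$1 == "version:" { print $2; exit }'
}

# Print one "vendor:device" PCI ID per "alias: pci:..." line on stdin,
# for example "1137:0071" for pci:v00001137d00000071sv*...
modinfo_pci_ids() {
    sed -n 's/^alias:[[:space:]]*pci:v0000\([0-9A-Fa-f]\{4\}\)d0000\([0-9A-Fa-f]\{4\}\)sv.*/\1:\2/p'
}

# Example (inside the guest):
#   modinfo enic | modinfo_version
#   modinfo enic | modinfo_pci_ids
```

If `modinfo enic` itself fails, the driver is missing from the image and both helpers print nothing.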

Create an SR-IOV neutron port by using the argument "--binding:vnic-type direct". Note the port ID in the output below. It will be needed to boot the nova instance and attach it to the SR-IOV port.

neutron port-create provider_net --binding:vnic-type direct --name=sr-iov-port

neutron port-list
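Copying the port ID by hand is error-prone, so it can be captured in a shell variable instead. The helper below is a sketch that assumes the default Field/Value table printed by `neutron port-create` and `neutron port-show`; the function name is hypothetical.

```shell
# Read a neutron Field/Value table on stdin and print the value of the
# row whose field is exactly "id" (not network_id, tenant_id, and so on).
port_id_from_table() {
    awk -F'|' '
        { field = $2; gsub(/^[ \t]+|[ \t]+$/, "", field) }
        field == "id" {
            value = $3
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            print value
            exit
        }
    '
}

# Example (requires a working neutron and nova CLI):
#   PORT_ID=$(neutron port-create provider_net --binding:vnic-type direct \
#                 --name=sr-iov-port | port_id_from_table)
#   nova boot --flavor m1.large --image RHEL-guest-image \
#       --nic port-id="$PORT_ID" --key-name mc2-installer-key sr-iov-vm
```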

Boot a nova VM and attach the SR-IOV port to it.

nova boot --flavor m1.large --image RHEL-guest-image --nic port-id=<port ID of SR-IOV port> --key-name mc2-installer-key sr-iov-vm

nova list

Once the instance is up, ping it and SSH into it. (Security groups do not work for SR-IOV ports; please refer to the "Known limitations of SR-IOV ports" section at the end of this blog.) Make sure that you can ping the gateway of the instance. The packets from the instance will not go through the virtual switch (OpenvSwitch or Linux Bridge). They go directly through the physical NIC of the compute host and then leave the compute host, bypassing the virtual switch.

Below are the steps to verify SR-IOV (VF) in the UCSM GUI.

Below are the steps to verify that the SR-IOV port does not use OpenvSwitch and bypasses it when sending/receiving packets:

1. Find the compute node on which sr-iov-vm is running.

# nova show sr-iov-vm | grep host

| OS-EXT-SRV-ATTR:host | mc2-compute-12 |

| OS-EXT-SRV-ATTR:hypervisor_hostname | mc2-compute-12 |

2. Find the port ID of the SR-IOV neutron port. d8379d3e-8877-4fec-8b50-ab939dbca797 is the port ID of the SR-IOV port in this case.

# neutron port-list | grep sr-iov-port

3. SSH into the compute host (mc2-compute-12 in this case) and make sure that:

1. There is no interface with d8379d3e in its name on the compute host, and,

2. There is no interface with d8379d3e in the output of "ovs-vsctl show".

# ssh root@mc2-compute-12

[root@mc2-compute-12 ~]# ip a | grep d8379d3e

[root@mc2-compute-12 ~]#

[root@mc2-compute-12 ~]# ovs-vsctl show | grep d8379d3e

[root@mc2-compute-12 ~]#

The above outputs must be empty. This shows that the SR-IOV port is not using OpenvSwitch for layer-2 switching and the physical NIC connected to the SR-IOV port does the layer-2 switching for packets from the instance sr-iov-vm.

On the other hand, in the case of an instance booted with a regular OVS (non-SR-IOV virtio) port, the above outputs will not be empty. Below is an example of a regular OVS (non-SR-IOV virtio) port (ID f9936cb7-885d-451d-9fc2-a86a670be732) attached to an instance. This OVS (non-SR-IOV virtio) port is part of br-int (the OVS integration bridge) in the output of "ovs-vsctl show".

# nova list

+--------------------------------------+-----------+--------+------------+-------------+----------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-----------+--------+------------+-------------+----------------------------+

| 206a42e9-fa07-4128-b276-4764beaf9a9b | test-vm | ACTIVE | - | Running | mc2_provider=10.23.228.102 |

+--------------------------------------+-----------+--------+------------+-------------+----------------------------+

# neutron port-list | grep 10.23.228.102

| f9936cb7-885d-451d-9fc2-a86a670be732 | | fa:16:3e:df:30:32 | {"subnet_id": "9258aa43-59b2-4f57-8207-b7d30e7963e0", "ip_address": "10.23.228.102"} |

SSH into the compute host running the instance.

# ssh root@mc2-compute-4

[root@mc2-compute-4 ~]# ip a | grep f9936cb7

14: qbrf9936cb7-88: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP

15: qvof9936cb7-88@qvbf9936cb7-88: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 9000 qdisc pfifo_fast master ovs-system state UP qlen 1000

16: qvbf9936cb7-88@qvof9936cb7-88: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 9000 qdisc pfifo_fast master qbrf9936cb7-88 state UP qlen 1000

17: tapf9936cb7-88: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc pfifo_fast master qbrf9936cb7-88 state UNKNOWN qlen 500

[root@mc2-compute-4 ~]# ovs-vsctl show

Bridge br-int

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port "qvof9936cb7-88"

tag: 1

Interface "qvof9936cb7-88"
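The interface names in the output above follow a pattern: a prefix (qbr, qvo, qvb or tap) followed by the first 11 characters of the neutron port ID. Based on that observation, the check for "does this port go through the virtual switch?" can be scripted. This is only a sketch, assuming the same naming as in the output above; the function names are made up for illustration.

```shell
# Print the host-side interface names a regular OVS (virtio) port would
# have, built from the first 11 characters of the neutron port ID.
ovs_ifnames() {
    short=$(printf '%s' "$1" | cut -c1-11)
    for prefix in qbr qvo qvb tap; do
        echo "${prefix}${short}"
    done
}

# Read `ip a` (or `ip -o link`) output on stdin and succeed if any of the
# interface names for the given port ID appears in it.
port_on_vswitch() {
    names=$(ovs_ifnames "$1" | paste -sd'|' -)
    grep -Eq "(^|[[:space:]])($names)[@:]"
}

# Example (on the compute host):
#   ip a | port_on_vswitch d8379d3e-8877-4fec-8b50-ab939dbca797 \
#       && echo "port uses the virtual switch" \
#       || echo "no vswitch interfaces found for this port"
```

For the SR-IOV port in this post the check is expected to fail (no matching interfaces), while for the regular port f9936cb7-885d-451d-9fc2-a86a670be732 it should succeed.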

Advantages of SR-IOV ports:

- Faster packet processing as the virtual switch (OpenvSwitch or Linux Bridge) is not used.

- Can be used for multicast, media applications, video streaming apps, and real-time applications.

Known limitations of SR-IOV ports:

- Security groups are not supported when using SR-IOV.

- When using Quality of Service (QoS), max_burst_kbps (burst over max_kbps) is not supported.

- SR-IOV is not integrated into the OpenStack Dashboard (horizon). Users must use the CLI or API to configure SR-IOV interfaces.

- Live migration is not supported for instances with SR-IOV ports.

- OpenStack Docs: SR-IOV

Hope this blog is helpful!