Cisco Software-Defined Access for Airports

08-16-2023 01:21 PM - edited 10-12-2023 10:13 AM

Cisco Public

Contents

- Authors

- SD-Access Overview

- Scope and Audience for this Document

- Implementation Overview

- Reference Hardware/Software Matrix

- Airport Network

- Requirements

- Airport Challenges

- Security Compliance Mandates

- Mobility of Staff and Devices

- Increased Reliance on IoT Endpoints

- Resiliency and High Availability

- Passenger Safety and Asset Tracking

- IT Network Operational Challenges

- Cisco SD-Access Benefits for Airports

- Design

- Airport Subsystems

- Design Considerations

- Layer 3 Design

- Layer 2 Design

- Wireless Design

- Security

- Multicast

- Deploy

- Implementation Prerequisites

- Deployment considerations

- L2 only Networks - Tenants

- Segmentation

- Multicast for CCTV / IPTV

- ASM

- SSM

- Silent Host

- Wireless for Tenants & Passengers

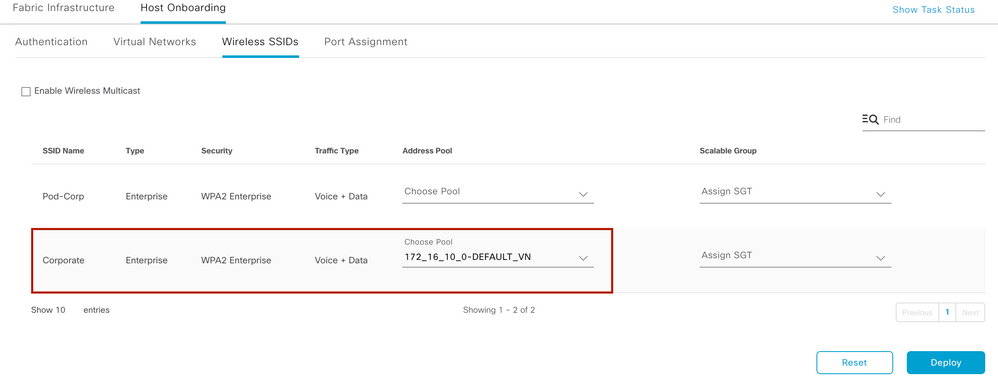

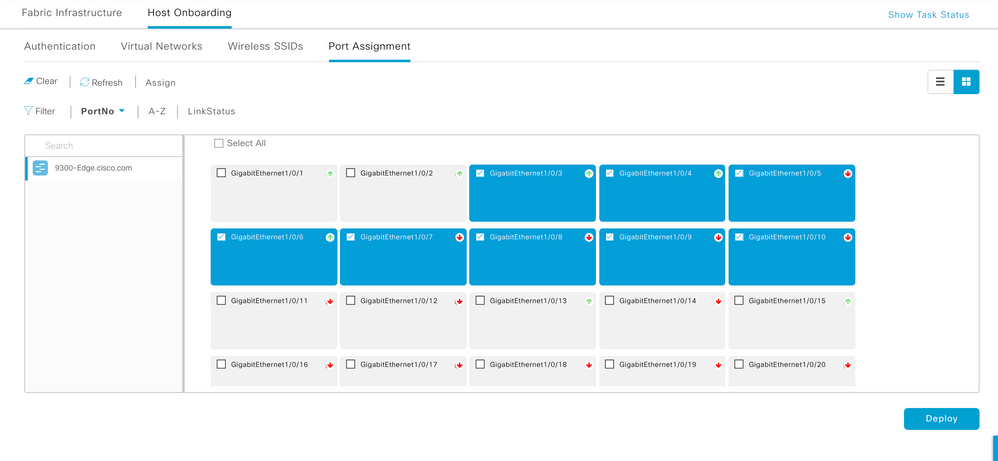

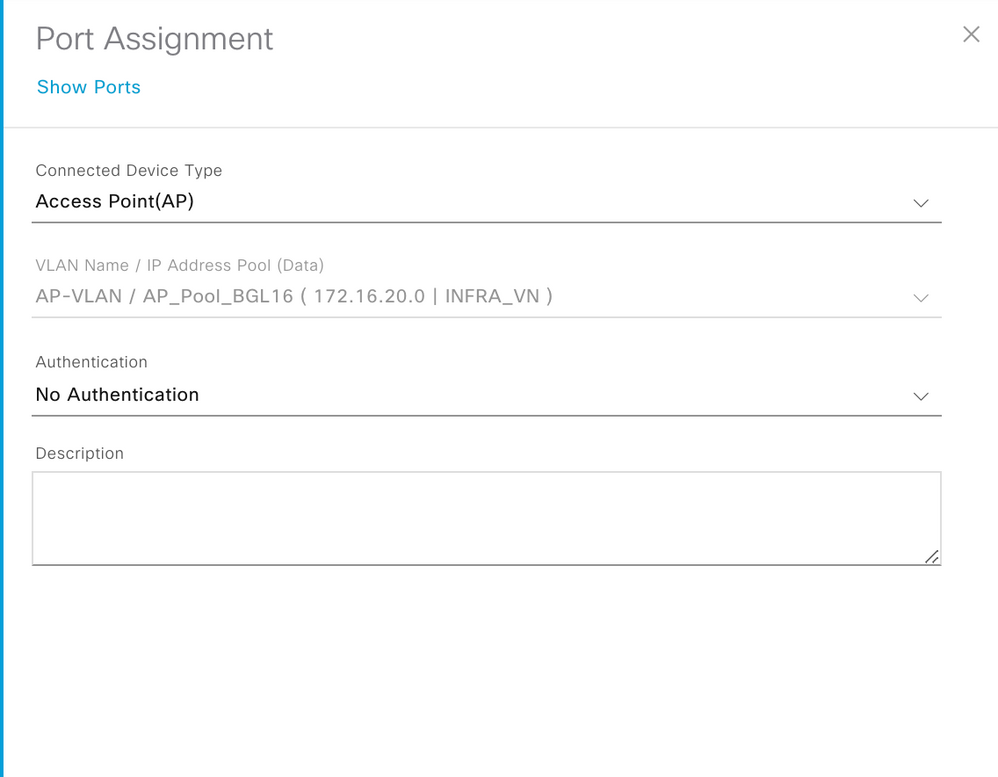

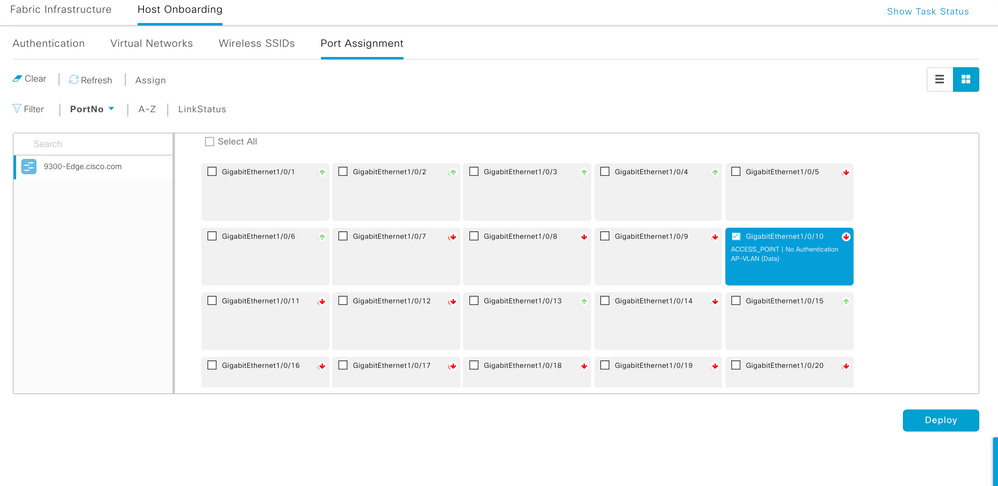

- Assign Wireless Clients to VN and Enable Connectivity

- IT-OT Deployment

- Business & Technical Requirements

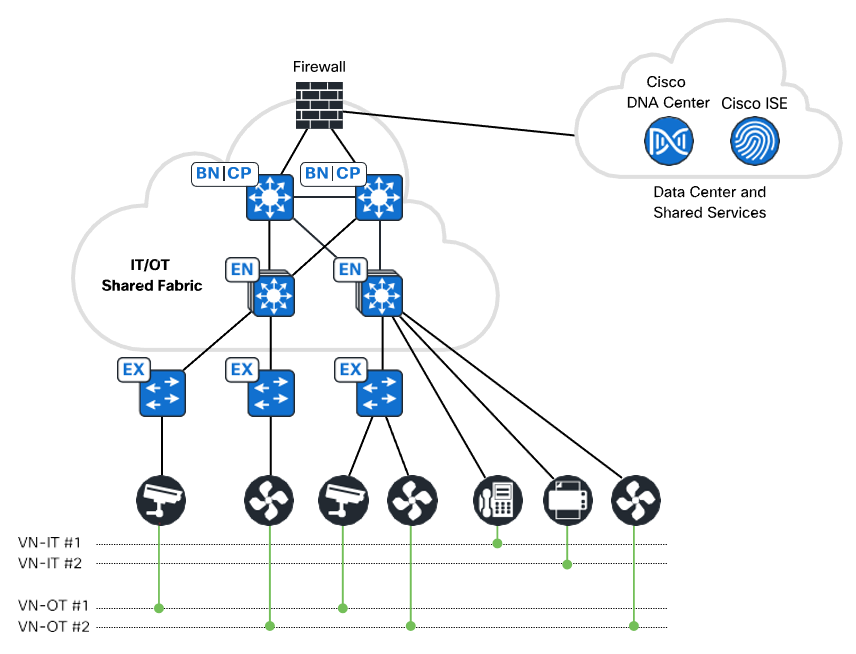

- Shared IT-OT Fabric

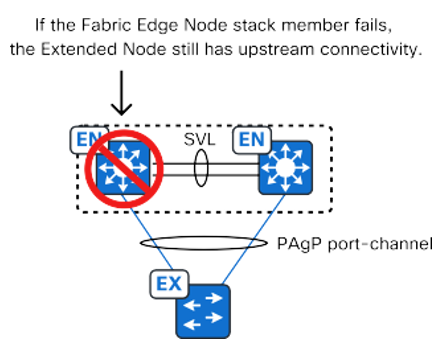

- Extended Node

- Extended Nodes – Ring Topology

- Operations & Management

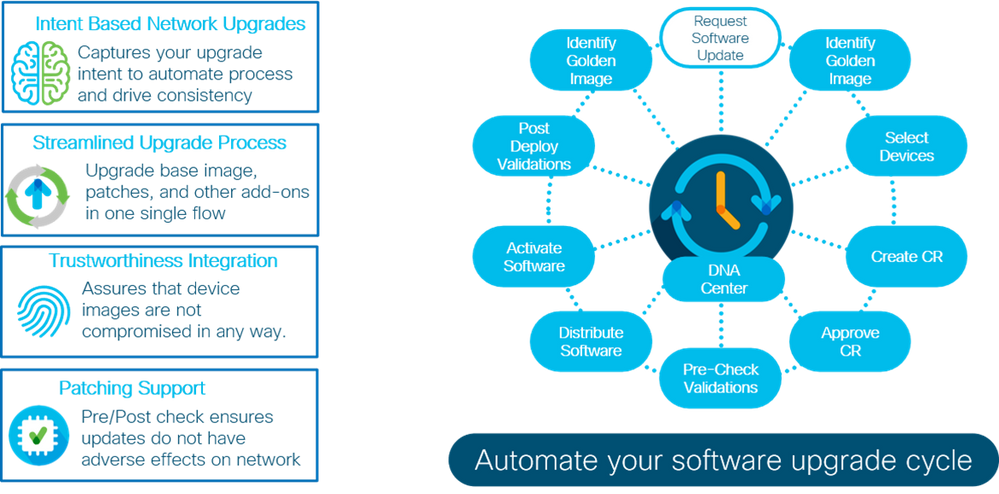

- SWIM

- Architecture Diagram of SWIM

- Benefits of SWIM in an Airport Network

- Minimizes Human Errors

- Reducing Service Impact Time

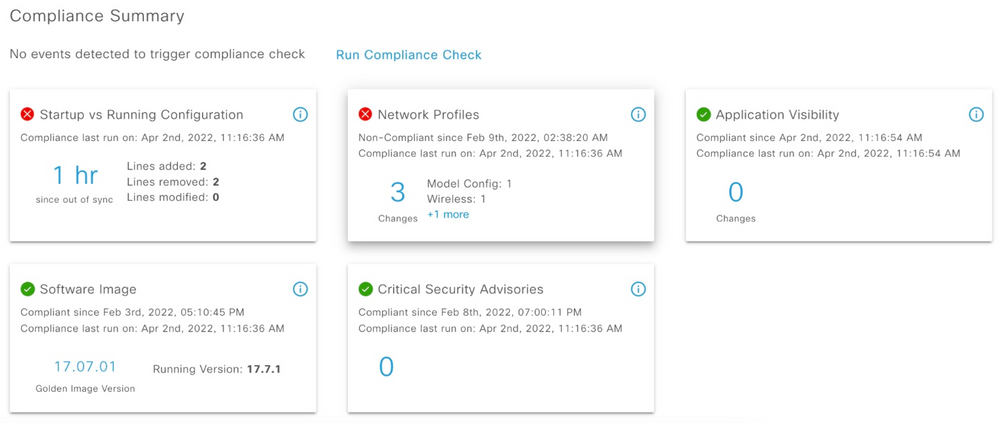

- Configuration Compliance

- Assurance

- Underlay Deployment

- References

Authors

Makesh Srinivasan

Customer Delivery Architect

Naveen Philip

Consulting Engineer

Nitesh Syal

Leader, Customer Delivery

Pavan Kuchinad

Consulting Engineer

SD-Access Overview

Managing a network is challenging for many reasons, including manual configuration and the number of tools and orchestration points involved. Manual operations are slow and error-prone, and these issues are exacerbated by a constantly changing environment with more users, devices, and applications. As the number of users and device types on the network grows, configuring user credentials and maintaining a consistent policy across the network becomes more complex. If policy is not consistent, there is the added complexity of maintaining separate policies for wired and wireless. As users move around the network, locating them and troubleshooting issues also becomes more difficult. Without adequate knowledge of who and what is on the network and how they are using it, network administrators cannot create endpoint inventories or map traffic flows, leaving them unable to properly control the network. A network cannot respond quickly to evolving business needs if changes must be made manually, because such changes take a long time and are error-prone. The traditional way of building a network does not meet the needs of an evolving network and ever-growing security concerns.

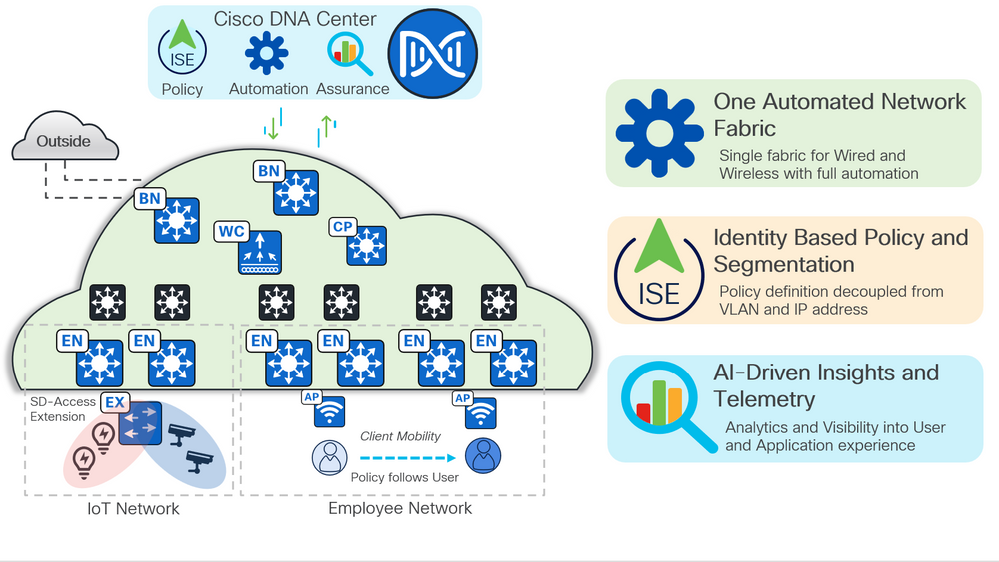

Cisco® Software-Defined Access (SD-Access) is a solution within Cisco Digital Network Architecture (Cisco DNA) which is built on intent-based networking principles. Cisco SD-Access provides visibility-based, automated end-to-end segmentation to separate user, device, and application traffic without redesigning the underlying physical network. Cisco SD-Access automates user-access policy so organizations can make sure the right policies are established for any user or device with any application across the network. This is accomplished by applying unified access policies across LAN and WLAN, which creates a consistent user experience anywhere without compromising on security.

Benefits

- Enhance visibility by using advanced analytics for user and device identification and compliance. Employ artificial intelligence and machine learning techniques to classify similar endpoints into logical groups.

- Leverage policy analytics: thoroughly analyze traffic flows between endpoint groups and use the results to define the right group-based access policies. Define, author, and enforce these policies using a simple and intuitive graphical interface.

- Segment to secure by automatically configuring all wired and wireless network devices for granular two-level segmentation, for zero-trust security and regulatory compliance.

- Exchange operating policies and ensure consistency by using Cisco’s intent-based networking multidomain architecture for enforcement across the access, WAN, and multicloud data center networks.

Figure 1 SD-Access Overview

Scope and Audience for this Document

This deployment guide provides guidance for deploying SD-Access in an airport network. It is a companion to the associated design and deployment guides for enterprise networks, which provide guidance on how to deploy the most common implementations of SD-Access.

This document discusses the implementation of Cisco Software-Defined Access (SD-Access) deployments for airports. For the associated deployment guides, design guides, and white papers, refer to the following documents:

- Cisco Enterprise Networking design guides: https://www.cisco.com/go/designzone

Implementation Overview

The SD-Access for Airports deployment is based on the Cisco Software-Defined Access Design Guide: https://cs.co/sda-sdg. The design enables wired and wireless communications between devices in indoor/outdoor environments or groups of outdoor environments, as well as interconnection to the WAN and Internet edge at the network core.

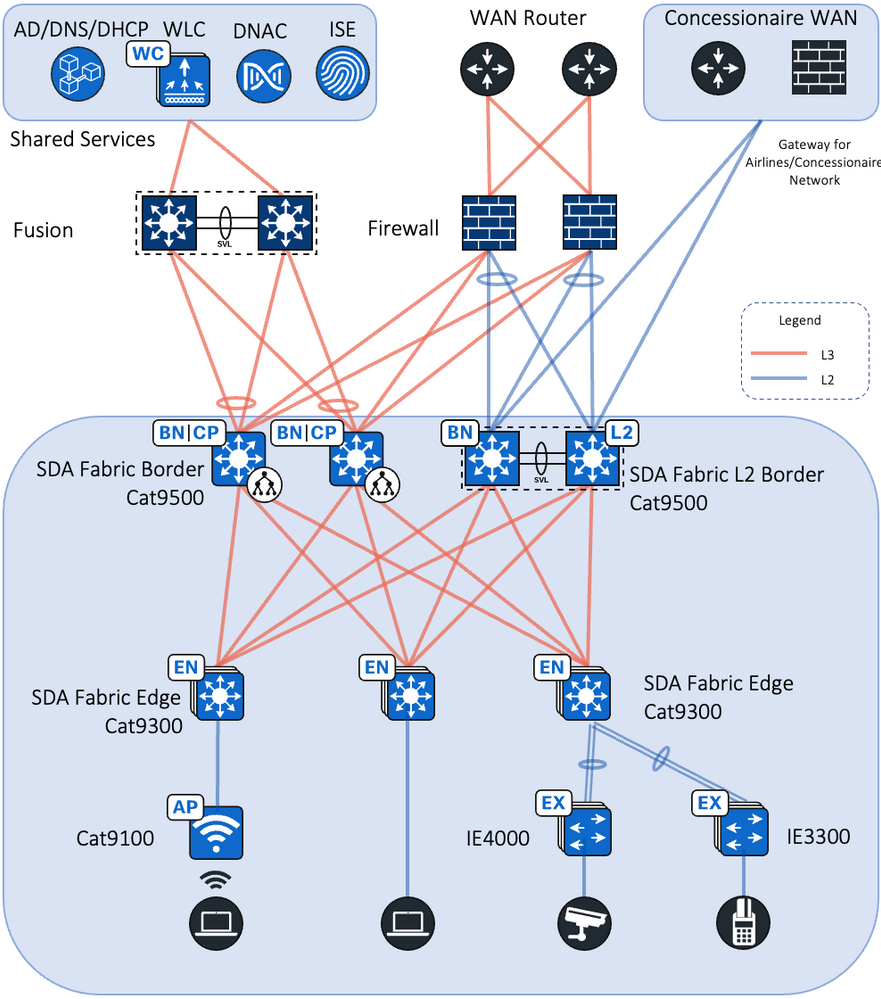

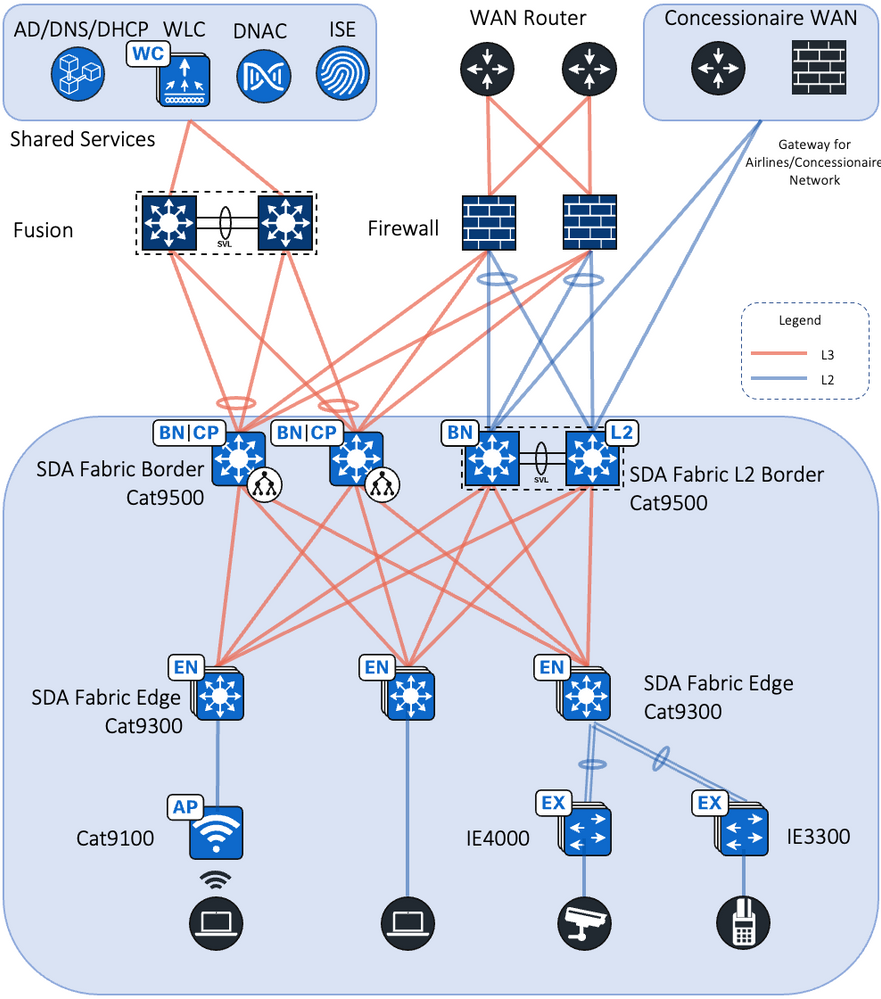

This document provides implementation guidelines for a single-site SD-Access deployment. The validation topology shows an example deployment site, as described below.

The site in this deployment guide is connected directly to the fusion devices by IP transit links. Its node roles are divided so that the Cisco Catalyst 9500s act as combined fabric border and control plane nodes, and Cisco Catalyst 9300s in a stacking configuration serve as the fabric edge nodes. In this implementation, the WLC is located in the shared services block.

Note: Border/control plane and edge node roles can be planned based on the SDA Compatibility Matrix.

For latency requirements from Cisco DNA Center to a fabric edge, refer to the Cisco DNA Center User Guide at the following URL:

For network latency requirements from the AP to the WLC, refer to the latest Campus LAN and Wireless LAN Design Guide at Cisco Design Zone:

Each site, building, floor, or geographic location has an enterprise access switch (for example, the Cisco Catalyst 9300) with at least two switches arranged in a stack. Ruggedized Cisco Industrial Ethernet (IE) switches are connected to the enterprise fabric edges as extended nodes or policy extended nodes, extending the enterprise network to non-carpeted spaces. Figure 2 shows the validation topology.

Figure 2 SD-Access Fabric Topology

Security policies are uniformly applied, which provides consistent treatment for a given service across the enterprise and Extended Enterprise networks. Controlled access is given to shared services and other internal networks by appropriate authorization profile assignments.

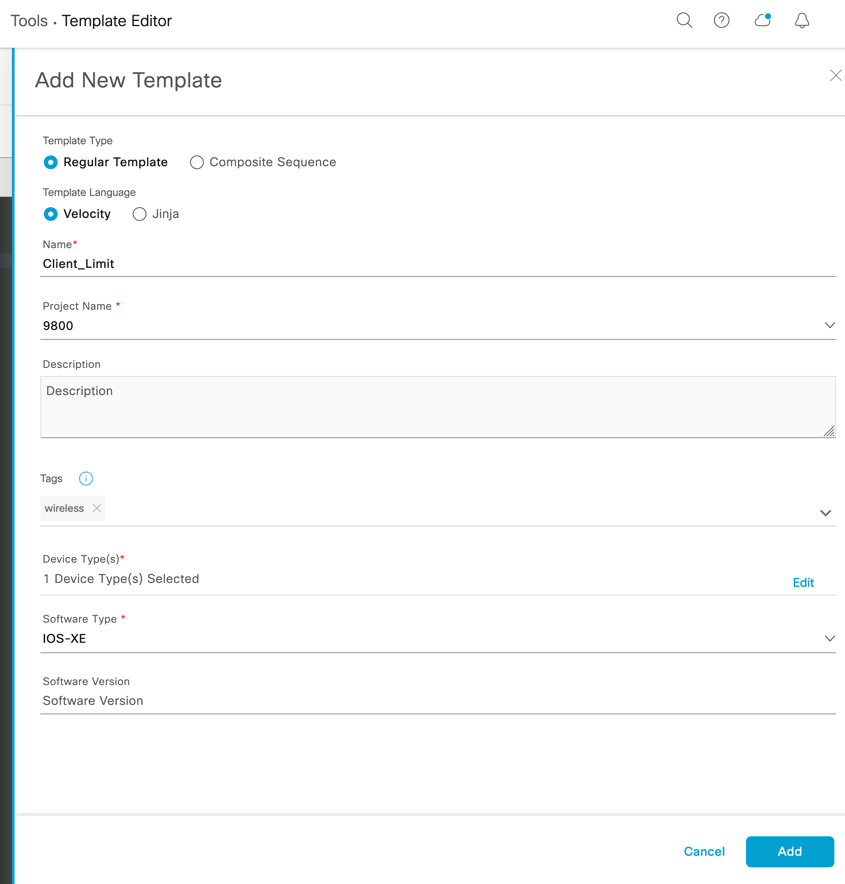

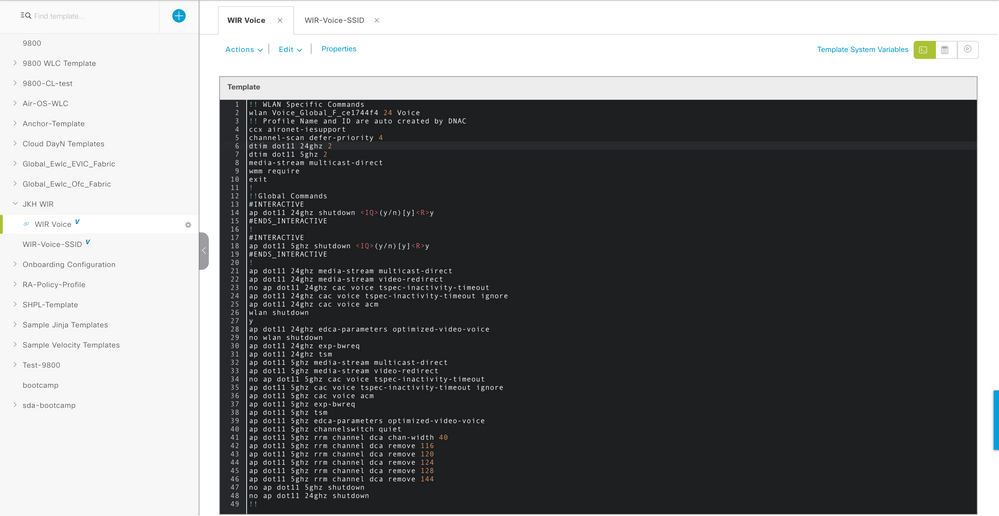

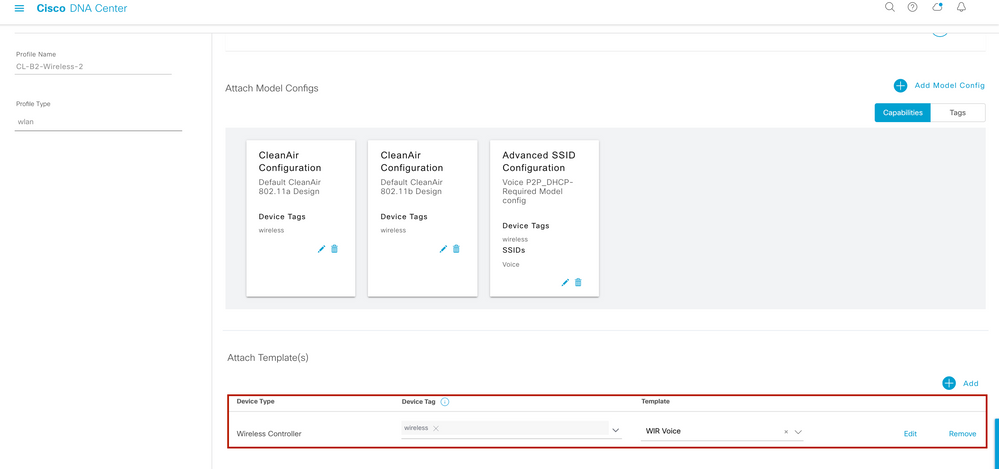

Application policies are applied to extended nodes using template functionality on Cisco DNA Center.

Reference Hardware/Software Matrix

Table 1 lists the reference hardware and software components used in this guide; supported platforms are not limited to these.

| Role / Platform | Part Number | Software Version |

| Cisco DNA Center Appliance | DN2-HW-APL (M5-based chassis) | 2.3.3.6 |

| Cisco Identity Services Engine | SNS-3695-k9 | 3.1 |

| Cisco Wireless LAN Controller | Cisco Catalyst 9800 Series Wireless Controllers | IOS XE 17.6.4 or above |

| Fabric Border and Control | Cat 9500 Series switches | IOS XE 17.6.4 or above |

| Fabric Edge | Cat 9300 Series switches | IOS XE 17.6.4 or above |

| Access Points | C9100 Series Access Points | IOS XE 17.6.4 or above |

| Extended Nodes | Cisco Catalyst IE4000 / Cisco Catalyst IE5000 series | IOS 15.2(7)E1a or above |

Notes:

- The list above is for reference only and reflects the components used in this guide. Refer to the SDA Compatibility Matrix for supported hardware.

- The fusion router used in this reference is a Catalyst 9300 Series switch; any device that supports BGP can be used as a fusion device.

- A three-node Cisco DNA Center cluster is recommended.

Airport Network

The airport network serves various critical airport applications and other applications, and also acts as a managed service provider for airlines and concessionaires. Applications include, but are not limited to, the following:

Figure 1 Airport Network Applications

- Check-In Systems

- Airport Operations Command Center user machines

- Flight Information Display Systems

- Workstations

- Phones

- Printers

- Attendance Systems

- Scanners

- Kiosks

- CCTV Cameras

- CCTV Monitoring Stations

- Access Control Systems

Requirements

The requirements of an airport network include the following:

- Digital transformation of the airport network

- Multi-tenant network

- Increased operational efficiency

- Improved security assurance

- Enhanced user/traveler experience

- Paving the way toward smart airports

- Extended connectivity to rugged and outdoor areas

- Meeting compliance and regulatory goals

Airport Challenges

Challenges commonly seen in airport networks include the following:

- End-to-end segmentation for security

- Compliance requirements

- Day-to-day management of network services and infrastructure, along with security

- Network- and service-level resiliency across a geographically dispersed network

- Converging IT with OT

- Control and complexity

- Business Process – People – Tech – Digital Twins

Given the critical nature of the services delivered by airports, the network architecture challenges they face are often unique compared with those of customers in other verticals. The following sections outline several of the most critical capabilities that airports require when deploying a network.

Security Compliance Mandates

Airport systems need to protect highly sensitive personal records and information of passengers and are strictly bound by government regulations (for example, GDPR in the European Union). Smart, connected airport systems bring new opportunities and better performance, but also new risks as cyberattacks grow in number and sophistication. No computer system, including the systems used to fly aircraft or control air traffic, can ignore this fact, especially given the potentially devastating consequences of an attack.

Airport systems and related entities must provide regulation-compliant wired and wireless networks. These networks must provide complete and constant visibility for network management and monitoring. Sensitive data and devices, such as Airport Operational Database (AODB) servers, must be protected so that malicious devices cannot compromise the network. The proliferation of Internet of Things (IoT) devices and Bring Your Own Device (BYOD) policies adds to the need for segmentation between different groups, and also within groups between different types of users and devices. This means that macro-segmentation and micro-segmentation capabilities must be top priorities for any airport network architecture.

Finally, given that staff, Airlines, and passenger traffic all share the same network infrastructure, every group must be isolated from one another and restricted to only the resources they are permitted to access.
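Micro-segmentation of this kind is typically expressed as a matrix of source-group to destination-group permissions; in Cisco SD-Access the groups are identified by Scalable Group Tags (SGTs). The Python sketch below models such a matrix with a default-deny rule. The group names and permitted pairs are hypothetical airport examples, not a recommended policy; in a real deployment the matrix is authored in Cisco DNA Center and enforced by the network, not by code like this.

```python
# Hypothetical group-based policy: (source group, destination group) -> action.
# Entries are directional in this model, so reverse flows need their own entries.
POLICY = {
    ("Airline_Staff", "DCS_Servers"): "permit",
    ("Airport_Ops", "AODB_Servers"): "permit",
    ("CCTV_Cameras", "CCTV_Monitoring"): "permit",
    ("Passengers", "Internet"): "permit",
}
DEFAULT_ACTION = "deny"  # anything not explicitly permitted is dropped


def check(src_group: str, dst_group: str) -> str:
    """Return the action for traffic from src_group to dst_group."""
    return POLICY.get((src_group, dst_group), DEFAULT_ACTION)


print(check("Airport_Ops", "AODB_Servers"))  # permit
print(check("Passengers", "AODB_Servers"))   # deny
```

Keeping the default at deny means a new group gains access only when a group pair is explicitly permitted, which matches the goal of restricting every group to the resources it is permitted to access.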

Mobility of Staff and Devices

Within the airport environment, staff and equipment often need to move quickly between rooms and floors. It is also common for devices to have static IP addresses, which means subnets must be stretched across the facility so that equipment can move anywhere it is needed. To increase operational efficiency, device and staff mobility should be completely dynamic, without requiring network staff to constantly reconfigure the networking infrastructure. Mobility must be available for devices and users whether they attach to the network through wired or wireless media.

Increased Reliance on IoT Endpoints

Airport networks are populated by a wide variety of devices in multiple locations. This makes locating and identifying all of the devices in a network time-consuming and tedious. In some cases, these devices sit silently on the network and do not send any traffic unless they are first contacted by another device. In other cases, the network staff may not even know all of the types of devices in their network, which makes onboarding an extremely difficult task. Modern security threats seek vulnerable points of entry, such as undetected or silent hosts, to exploit the network’s valuable resources. These devices must be identified and tracked to comply with the network’s security framework.

Resiliency and High Availability

Airport networks demand levels of high availability and resiliency beyond those of most other enterprises. The network is a key component in delivering access to key information and services, such as flight information, and it must be part of a wider resiliency and high-availability strategy across IT. Any network solution must continue to provide user and device access to critical resources if communication with the authentication infrastructure is lost. To minimize service impact, strict network-level and application-level resiliency must exist.

Passenger Safety and Asset Tracking

Given the steadily increasing reliance on IoT endpoints in airport networks, asset management (for example, of baggage) is becoming more difficult as more diverse devices become mobile and are shared across locations. At times, asset tracking is crucial to ensure that an asset has not left a specific area, for example, when baggage is loaded onto or unloaded from a flight. The ability to track both passengers and devices in a single system is a critical requirement for every modern airport network provider.

IT Network Operational Challenges

The combination of ever-increasing numbers of devices and security requirements on the network is putting immense operational pressure on airport IT staff. This pressure shows up in the complexity of traditional IP-based rule management and the struggle to manage those policies across large enterprise footprints. Timely troubleshooting is also becoming more critical, and more difficult without onsite staff.

Cisco SD-Access Benefits for Airports

Benefits of Cisco SD-Access seen in airport networks include the following:

- Unleash the power of data from the edge to gain operational insights and improve processes and systems

- Enable new digital experiences for your customers and increase customer satisfaction

- Generate new incremental revenue for your business by digitizing your airport

- Manage the enterprise network centrally and reduce operating expenses (OpEx)

- Simplify, secure, and control the IT-run airport environment

Design

Cisco SD-Access (Software-Defined Access) is a network architecture that provides a unified fabric for wired and wireless networks. It is designed to simplify network operations, improve security, and enhance user experience through automation and policy-based network management. The components, roles, and planes of Cisco SD-Access include:

Components of Cisco SD-Access:

Campus Fabric: The foundation of Cisco SD-Access, made up of the switches, routers, and wireless controllers that participate in the fabric. The switches and routers are deployed in the campus network and form the underlay on which the overlay networks are built.

Fabric Edge Nodes: The network devices that connect endpoints, such as PCs, laptops, and access points, to the campus fabric. They are typically access switches that provide connectivity to end devices and enforce the policies defined in the fabric.

Control Plane Nodes: The devices that run the SD-Access control plane, which is based on the Locator/ID Separation Protocol (LISP). A control plane node maintains the database that maps each endpoint to the fabric node it is connected to. Control plane nodes are often co-located with fabric border nodes, which connect the campus fabric to external networks.

Fabric Wireless Controller: It is a key component of Cisco SD-Access for managing wireless access points and wireless clients in the fabric. It provides wireless connectivity and enforces policies defined in the fabric.

Fabric Roles in Cisco SD-Access:

Fabric Edge Node: The Fabric Edge Node connects end-user devices, such as PCs, laptops, servers, and access points, to the campus fabric. It is typically an access switch that provides wired connectivity to endpoints and to fabric-mode APs, which in turn serve wireless clients. The Fabric Edge Node acts as the default gateway for the endpoints attached to it, registers them with the control plane node, enforces policies defined in the fabric, such as segmentation and access control, and acts as the entry point for end devices into the fabric.

Control Plane Border Node: A Control Plane Border Node is a single device that performs both the control plane node and fabric border node functions, as the Cisco Catalyst 9500s do in this guide. It tracks endpoint locations for the fabric site and, at the same time, connects the fabric to external networks. In this design, traffic between different virtual networks is not routed by this node; it is handed off to the fusion device (or firewall), where inter-VN communication is controlled.

Fabric Border Node: The Fabric Border Node connects the campus fabric to external networks, such as the Internet, other campuses, or data centers. It provides external connectivity for the fabric and acts as a gateway for traffic entering or leaving the fabric. The Fabric Border Node can enforce policies defined in the fabric, such as access control and traffic filtering, at the border of the fabric.

Control Plane Node: The Control Plane Node runs the LISP map server and map resolver functions of the SD-Access control plane. Fabric edge nodes register the endpoints they learn with it, and fabric nodes query it to find where a destination endpoint is located, which allows endpoints to move across the fabric without readdressing. Control Plane Nodes can be dedicated devices or co-located with border nodes, as in the Control Plane Border Node role.

Fabric Wireless Controller: The Fabric Wireless Controller is responsible for managing wireless access points (APs) and wireless clients in the campus fabric. It provides wireless connectivity, enforces policies defined in the fabric, and manages wireless network operations, such as AP configuration, roaming, and security settings.

These different fabric device roles work together to create a unified fabric in Cisco SD-Access, providing automated, policy-based, and secure network access for wired and wireless devices in the campus network.

Planes of Cisco SD-Access:

Control Plane: It maintains the mapping between endpoints and their locations in the overlay. The SD-Access control plane uses LISP: control plane nodes hold the endpoint-to-location database, and fabric nodes query it to decide where to forward overlay traffic.

Data Plane: It forwards data traffic between end devices across the fabric. SD-Access uses VXLAN encapsulation for the data plane: fabric edge nodes, border nodes, and fabric-mode APs encapsulate and decapsulate traffic, and the VXLAN header carries the virtual network identifier and the Scalable Group Tag (SGT) used for policy enforcement.

Management Plane: It is responsible for managing the configuration, monitoring, and troubleshooting of the fabric. Fabric administrators and network administrators use management plane tools, such as Cisco DNA Center, to configure and manage the fabric, monitor its performance, and troubleshoot issues.

Overall, Cisco SD-Access comprises a distributed architecture with multiple components, roles, and planes that work together to provide automated, policy-based, and secure network access for wired and wireless devices in the campus network.
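In Cisco SD-Access the control plane is based on LISP (Locator/ID Separation Protocol): fabric edge nodes register the endpoints they learn with the control plane node, and fabric nodes query it to find where a destination is located. The Python sketch below models only that lookup table, as a dictionary keyed by VN and endpoint address. The RLOC addresses are hypothetical, and the single-node, message-free design is a simplifying assumption for illustration, not how Cisco implements LISP.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class HostTrackingDatabase:
    """Toy model of the endpoint-to-location map on a control plane node.

    Keys are (VN, endpoint address), so the same address in two VNs stays
    separate; values are the RLOC (loopback) of the fabric edge the endpoint
    sits behind. This illustrates the idea, not the LISP protocol itself.
    """

    mappings: dict[tuple[str, str], str] = field(default_factory=dict)

    def register(self, vn: str, eid: str, rloc: str) -> None:
        # An edge registers a newly learned endpoint; a later registration
        # from another edge (after a move) overwrites the old location.
        self.mappings[(vn, eid)] = rloc

    def resolve(self, vn: str, eid: str) -> str | None:
        # Lookup by a fabric node; None means the endpoint is not registered.
        return self.mappings.get((vn, eid))


htdb = HostTrackingDatabase()
# Hypothetical RLOCs: a CCTV camera first seen on edge 1, then moved to edge 2.
htdb.register("PHYSICAL_SECURITY_VN", "10.4.0.25", "192.168.100.11")
htdb.register("PHYSICAL_SECURITY_VN", "10.4.0.25", "192.168.100.12")
print(htdb.resolve("PHYSICAL_SECURITY_VN", "10.4.0.25"))  # 192.168.100.12
print(htdb.resolve("AIRPORT_APP_VN", "10.4.0.25"))        # None (different VN)
```

Because the location is looked up rather than implied by the subnet, an endpoint such as a camera can move between edges without being readdressed, which is the mobility requirement described earlier.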

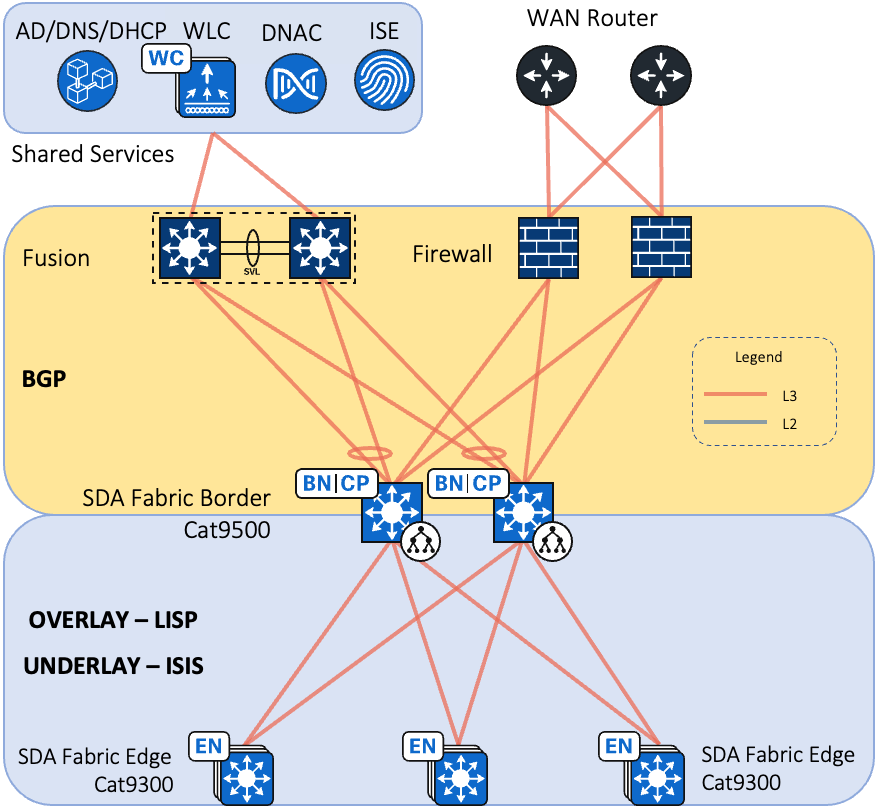

This section provides an overview of the topology used throughout this guide as well as the routing modalities used to provide IP reachability.

The validation topology represents a medium site as described in the companion Software-Defined Access Solution Design Guide. It shows a single building with multiple wiring closets that is part of a larger multiple-building network, which aggregates each building’s core switches to a pair of super core routers. The shared services block contains a Catalyst 6800 virtual switching system (VSS) or a Catalyst 9600 StackWise Virtual pair providing access to the Wireless LAN Controllers, Cisco DNA Center, the Identity Services Engine, Windows Active Directory, and DHCP/DNS servers.

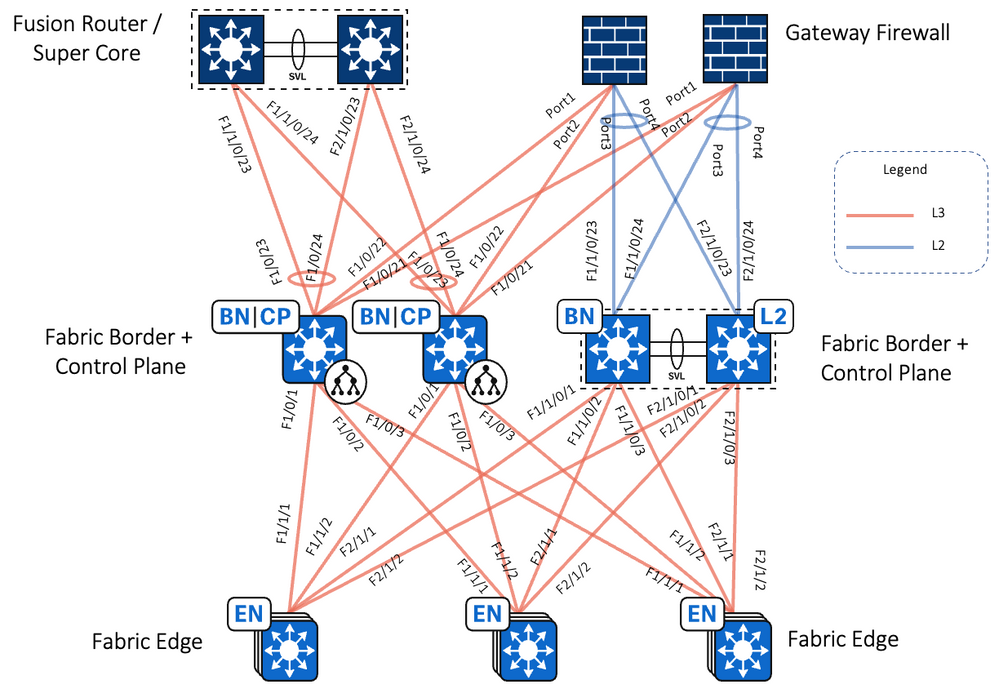

Figure 2 Airport Network SD-Access Topology

| For diagram simplicity and ease of reading, the intermediate nodes (distribution layer) are not shown in the topology. |

Figure 3 Airport Network SD-Access Routing Topology

The airport network deployment uses static routes from the fusion device toward the firewall and the WAN/Internet. This provides IP reachability between the fusion device, shared services, and the enterprise edge firewalls.

The building core switches operate as the fabric border and control plane nodes, creating the northbound boundary of the fabric site. Taking advantage of the LAN automation capabilities of Cisco DNA Center, the network infrastructure southbound of the core switches runs the Intermediate System to Intermediate System (IS-IS) routing protocol.

BGP is used between the fabric border and the fusion device (super core). Routes from the existing enterprise network are redistributed into BGP on the enterprise edge firewall. Routes from the SD-Access fabric site are redistributed from IS-IS into BGP, and a default route per virtual network is advertised from BGP into IS-IS, allowing end-to-end IP reachability. Static routes for the fabric subnets are configured on the WAN/Internet routers toward the enterprise edge firewalls.

IP prefixes for shared services must be available to both the fabric underlay and overlay networks while maintaining isolation among overlay networks. To maintain this isolation, VRF-lite extends from the fabric border nodes to a set of fusion routers. The fusion devices implement VRF route leaking using BGP route target import and export configuration. This is completed in later procedures, after the fabric roles are provisioned.
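To make the route target mechanism concrete, the Python sketch below models it: each VRF exports its own prefixes tagged with route targets (RTs) and imports any prefix whose RT appears in its import list. The RT values, the SHARED_SERVICES VRF name, and the 172.16.10.0/24 shared services prefix are hypothetical; on the fusion device this is BGP configuration, and the model ignores details such as best-path selection.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field


@dataclass
class Vrf:
    name: str
    export_rts: set[str]
    import_rts: set[str]
    # Prefixes the VRF originates itself (e.g. learned over the border handoff).
    local: set[str] = field(default_factory=set)


def build_tables(vrfs: list[Vrf]) -> dict[str, set[str]]:
    """Return each VRF's routing table after RT-based import/export."""
    tables = {v.name: set(v.local) for v in vrfs}  # every VRF keeps its own routes
    for src in vrfs:
        for dst in vrfs:
            if src is not dst and src.export_rts & dst.import_rts:
                # dst imports the routes src exported with a matching RT.
                tables[dst.name] |= src.local
    return tables


def can_reach(table: set[str], address: str) -> bool:
    ip = ipaddress.ip_address(address)
    return any(ip in ipaddress.ip_network(prefix) for prefix in table)


# Hypothetical RTs: each VN imports only its own RT plus the shared services RT.
shared = Vrf("SHARED_SERVICES", {"65000:100"}, {"65000:1", "65000:2"}, {"172.16.10.0/24"})
app = Vrf("AIRPORT_APP_VN", {"65000:1"}, {"65000:1", "65000:100"}, {"10.24.0.0/24"})
cctv = Vrf("PHYSICAL_SECURITY_VN", {"65000:2"}, {"65000:2", "65000:100"}, {"10.4.0.0/24"})

tables = build_tables([shared, app, cctv])
print(can_reach(tables["AIRPORT_APP_VN"], "172.16.10.5"))  # True: shared services leaked in
print(can_reach(tables["AIRPORT_APP_VN"], "10.4.0.25"))    # False: VNs stay isolated
```

Importing only the shared services RT into each VN gives every VN access to shared services while the VNs remain isolated from one another, which is the behavior the fusion device provides.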

During initial discovery, either BGP or static routes can be used for IP reachability between Cisco DNA Center and the fabric site. Once a seed device is discovered and managed by Cisco DNA Center, it is recommended practice not to add configuration manually, particularly any configuration that might be overridden by the automated configuration and leave the device in an unintended state. The LISP Publish/Subscribe (Pub/Sub) feature simplifies communication between the border and control plane nodes.

| In deployments with multiple points of redistribution between several routing protocols, using BGP for initial IP reachability northbound of the border nodes helps ensure continued connectivity after the fabric overlay is provisioned without the need for additional manual redistribution commands on the border nodes. |

Airport Subsystems

The following subsystems are commonly found on an airport network:

- Modular Terminal Communications System (MTCS)

- Building Management System (BMS)

- Access Control System (ACS)

- Closed-Circuit TV (CCTV)

- Automated Flight Announcement System (AFAS)

- Public Address System (PA)

- Wi-Fi

- Videowall

- Self-Baggage Drop (SBD)

- Baggage Handling System (BHS)

- Distributed Antenna System (DAS)

- Lighting Management System (LMS)

- Chiller Plant Manager

- SCADA

- First Bag Last Bag (FBLB)

- Fire Alarm System (FAS)

- Vertical & Horizontal Transport Systems (VHT)

- Passenger Boarding Bridge

- TETRA Mobile Radio (TMR)

- Internet Protocol TV (IPTV)

- Radio Over IP (RoIP)

- Information Broker (IB)

- Airline Departure Control System (DCS)

- Airport Operational Database (AODB)

- Beacon Infrastructure

- Car Park Management System (CPMS)

- Common User Passenger Processing System (CUPPS)

- Common-Use Self Service (CUSS)

- Electronic Gates

- Electronic Point of Sales (EPoS)

- Flight Information Display System (FIDS)

- Information Kiosk (INK)

- Interactive Voice Response System (IVRS)

- Message Distribution System (MDS)

- Preconditioned Air Units (PCA)

- Systems, Applications, and Products (SAP)

- Voice over IP (VoIP)

- Visual Docking Guidance System (VDGS)

Design Considerations

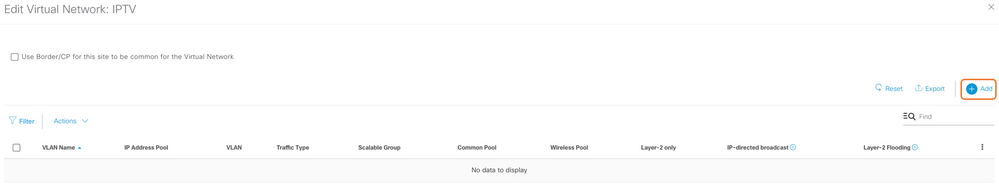

Many airports segment their networks so that systems can be consolidated onto shared infrastructure while maintaining separation and isolation. Deploying multiple Virtual Networks is an effective way to do this, increasing the return on investment (ROI) of assets and reducing the overall cost of implementation.

Airport networks consist of Layer 3 networks and Layer 2 networks.

Layer 3 Design

Virtual Networks (VNs) in the fabric are used to isolate IP reachability or routing table visibility between two segments or entities. VNs are essentially VRFs in traditional routing terminology: each VN is a logically separate routing instance that, in this design, can reach another VN only through the fusion router. The fusion router facilitates communication between VNs (VRFs) by leaking routes between them.

One of the important requirements for any airport network is isolation between departments and applications, which is achieved using Virtual Networks (VNs). Where business requirements call for controlled communication between VNs, it is allowed through a fusion router.

Some of the virtual networks (VNs) in an airport network are:

- AIRPORT_APP_VN

- COMMUNICATION_VN

- RETAILER_VN (L2 VN)

- AIRLINE_VN (L2 VN)

- INFRASTRUCTURE_VN

- PHYSICAL_SECURITY_VN

- PA_VN

- WIRELESS_VN

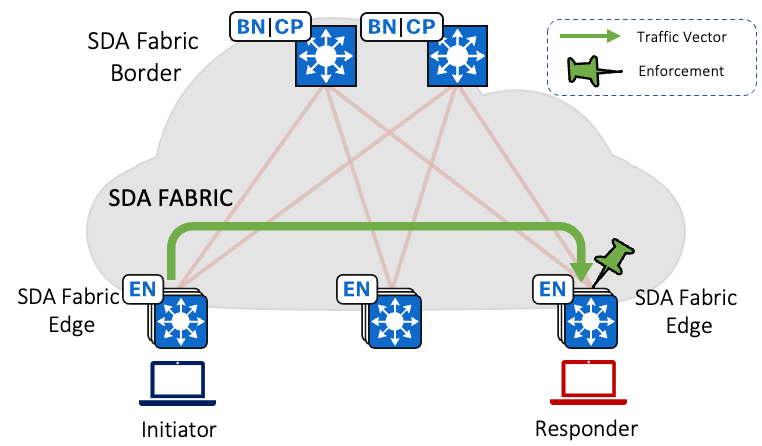

The traffic flow within a VN is depicted below.

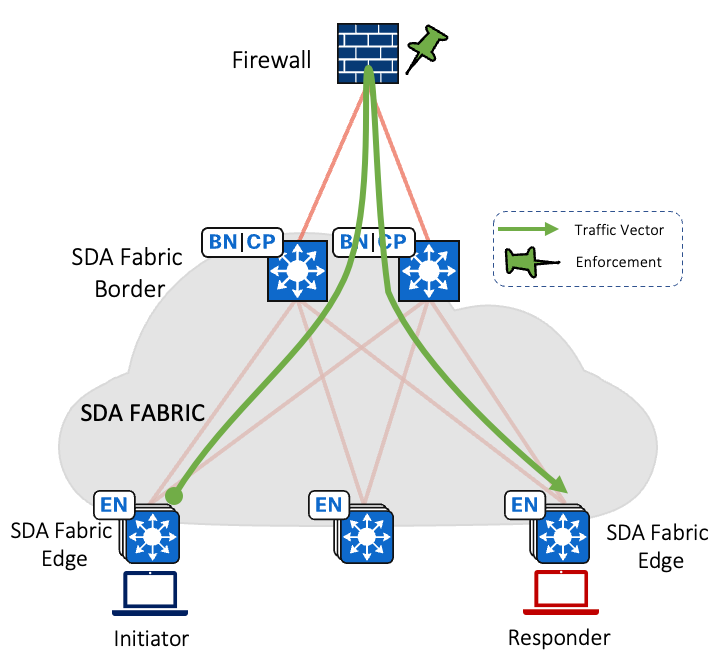

The traffic flow between VNs through the firewall/fusion device is depicted below.

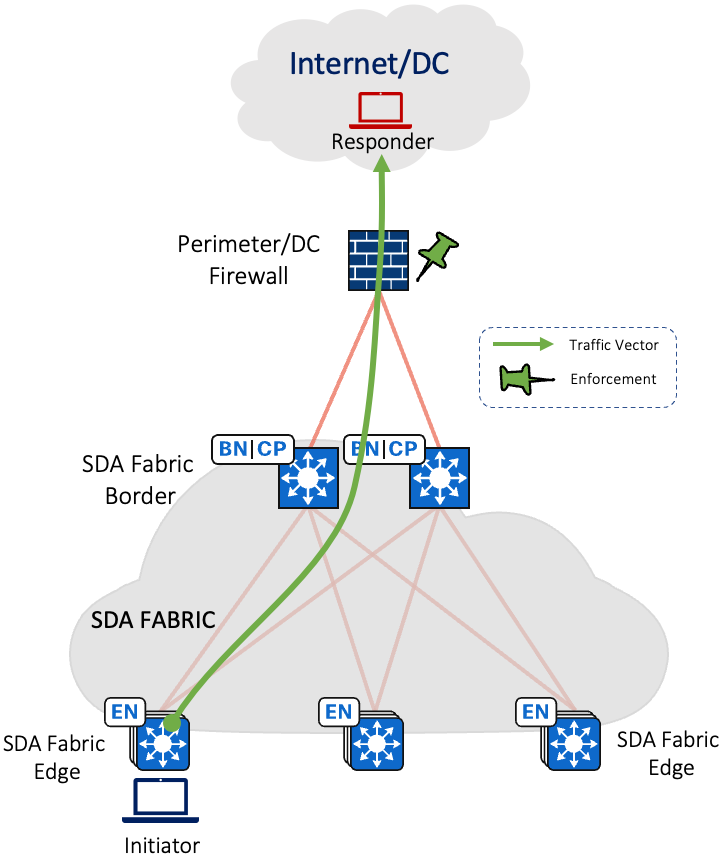

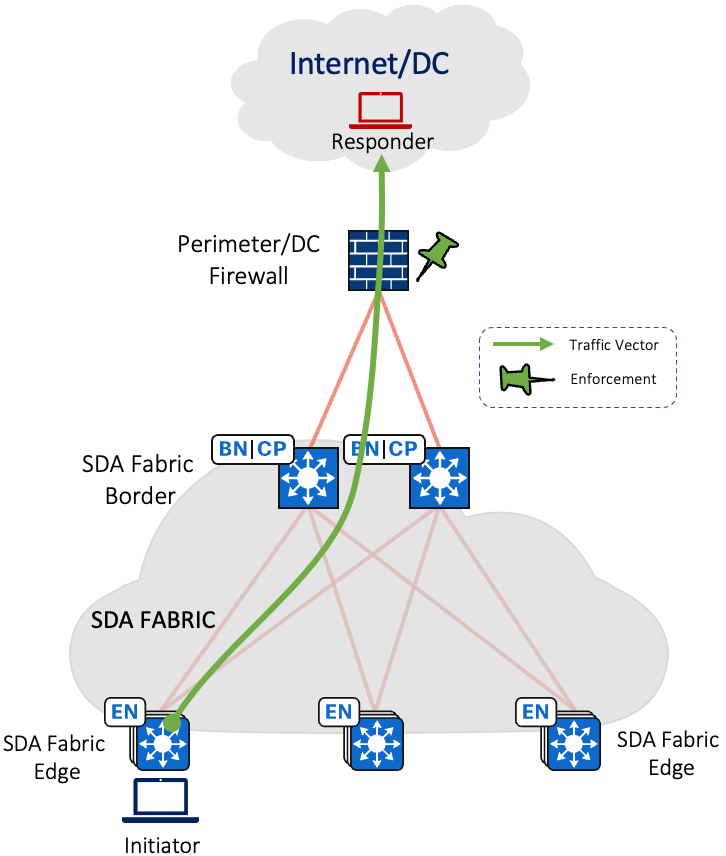

The traffic flow for VNs whose gateway (firewall) is outside the fabric is depicted below.

All internal airport services and applications use Layer 3 services.

| System | IP Pool | Virtual Network (VN) |

| Modular Terminal Communications System (MTCS) | 10.1.0.0/24 | COMMUNICATION_VN |

| Building Management System (BMS) | 10.2.0.0/24 | INFRASTRUCTURE_VN |

| Access Control System (ACS) | 10.3.0.0/24 | PHYSICAL_SECURITY_VN |

| Closed-Circuit TV (CCTV) | 10.4.0.0/24 | PHYSICAL_SECURITY_VN |

| Automated Flight Announcement System (AFAS) | 10.5.0.0/24 | AIRPORT_APP_VN |

| Public Address System | 10.6.0.0/24 | PA_VN |

| Wi-Fi | 10.7.0.0/24 | WIRELESS_VN |

| Videowall | 10.8.0.0/24 | PHYSICAL_SECURITY_VN |

| Self-Baggage Drop (SBD) | 10.9.0.0/24 | AIRPORT_APP_VN |

| Baggage Handling System (BHS) | 10.10.0.0/24 | AIRPORT_APP_VN |

| Distributed Antenna System (DAS) | 10.11.0.0/24 | AIRPORT_APP_VN |

| Lighting Management System (LMS) | 10.12.0.0/24 | INFRASTRUCTURE_VN |

| Chiller Plant Manager | 10.13.0.0/24 | INFRASTRUCTURE_VN |

| SCADA | 10.14.0.0/24 | AIRPORT_APP_VN |

| First Bag Last Bag (FBLB) | 10.15.0.0/24 | AIRPORT_APP_VN |

| Fire Alarm System (FAS) | 10.16.0.0/24 | INFRASTRUCTURE_VN |

| Vertical & Horizontal Transport Systems (VHT) | 10.17.0.0/24 | AIRPORT_APP_VN |

| Passenger Boarding Bridge | 10.18.0.0/24 | AIRPORT_APP_VN |

| TETRA Mobile Radio (TMR) | 10.19.0.0/24 | AIRPORT_APP_VN |

| Internet Protocol TV (IPTV) | 10.20.0.0/24 | IPTV_VN |

| Radio Over IP (RoIP) | 10.21.0.0/24 | COMMUNICATION_VN |

| Information Broker (IB) | 10.22.0.0/24 | AIRPORT_APP_VN |

| Airlines DCS | 10.23.0.0/24 | AIRPORT_APP_VN |

| Airport Operational Database (AODB) | 10.24.0.0/24 | AIRPORT_APP_VN |

| Beacon Infrastructure | 10.25.0.0/24 | COMMUNICATION_VN |

| Car Park Management System (CPMS) | 10.26.0.0/24 | INFRASTRUCTURE_VN |

| Common User Passenger Processing System (CUPPS) | 10.27.0.0/24 | AIRPORT_APP_VN |

| Common-Use Self Service (CUSS) | 10.28.0.0/24 | AIRPORT_APP_VN |

| Electronic Gates | 10.29.0.0/24 | AIRPORT_APP_VN |

| Electronic Point of Sales (EPoS) | 10.30.0.0/24 | AIRPORT_APP_VN |

| Flight Information Display System (FIDS) | 10.31.0.0/24 | AIRPORT_APP_VN |

| Information Kiosk (INK) | 10.32.0.0/24 | INFRASTRUCTURE_VN |

| Interactive Voice Response System (IVRS) | 10.33.0.0/24 | COMMUNICATION_VN |

| Message Distribution System (MDS) | 10.34.0.0/24 | COMMUNICATION_VN |

| Preconditioned Air Units (PCA) | 10.35.0.0/24 | INFRASTRUCTURE_VN |

| Systems, Applications, and Products (SAP) | 10.36.0.0/24 | INFRASTRUCTURE_VN |

| Voice over IP (VoIP) | 10.37.0.0/24 | COMMUNICATION_VN |

| Visual Docking Guidance System (VDGS) | 10.38.0.0/24 | AIRPORT_APP_VN |

| Multicast Pool 1 (Loopbacks) – PHYSICAL_SECURITY_VN | 192.168.1.0/24 | - |

| Multicast Pool 2 (Loopbacks) – IPTV_VN | 192.168.2.0/24 | - |

| Multicast Pool 3 (Loopbacks) – PA_VN | 192.168.3.0/24 | - |

Note: The multicast pools supply a loopback address for every fabric edge node. A /24 pool, as used here, provides 254 usable host addresses and therefore supports at most 254 fabric edge nodes. For a larger network, use a larger subnet.
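A pool plan like the table above can be checked mechanically before provisioning. The Python sketch below uses the standard ipaddress module to confirm that no two pools overlap, to group pools by VN, and to size a loopback pool. It includes only a few rows from the table for brevity and assumes one loopback address per fabric edge node; the checks are suggestions, not a Cisco DNA Center requirement. A /24 has 256 addresses but only 254 usable host addresses once the network and broadcast addresses are excluded.

```python
import ipaddress
from collections import defaultdict
from itertools import combinations

# A few rows of the pool plan from the table above: system -> (pool, VN).
POOLS = {
    "MTCS": ("10.1.0.0/24", "COMMUNICATION_VN"),
    "BMS": ("10.2.0.0/24", "INFRASTRUCTURE_VN"),
    "ACS": ("10.3.0.0/24", "PHYSICAL_SECURITY_VN"),
    "CCTV": ("10.4.0.0/24", "PHYSICAL_SECURITY_VN"),
    "IPTV": ("10.20.0.0/24", "IPTV_VN"),
    "AODB": ("10.24.0.0/24", "AIRPORT_APP_VN"),
}


def find_overlaps(pools):
    """Return pairs of systems whose IP pools overlap."""
    nets = {name: ipaddress.ip_network(pool) for name, (pool, _) in pools.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


def pools_by_vn(pools):
    """Group pool prefixes under the VN that carries them."""
    grouped = defaultdict(list)
    for pool, vn in pools.values():
        grouped[vn].append(pool)
    return dict(grouped)


def usable_hosts(prefix_len):
    """Usable IPv4 host addresses (excluding network/broadcast), up to /30."""
    return 2 ** (32 - prefix_len) - 2


def smallest_pool_for(edge_nodes):
    """Longest prefix whose usable hosts give one loopback per edge node."""
    for prefix_len in range(30, 0, -1):
        if usable_hosts(prefix_len) >= edge_nodes:
            return prefix_len
    raise ValueError("too many edge nodes for a single IPv4 pool")


print(find_overlaps(POOLS))                        # []
print(pools_by_vn(POOLS)["PHYSICAL_SECURITY_VN"])  # ['10.3.0.0/24', '10.4.0.0/24']
print(usable_hosts(24))                            # 254
print(smallest_pool_for(300))                      # 23
```

Running the overlap check against the full table, rather than this subset, is a cheap way to catch a mistyped pool before it is provisioned.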

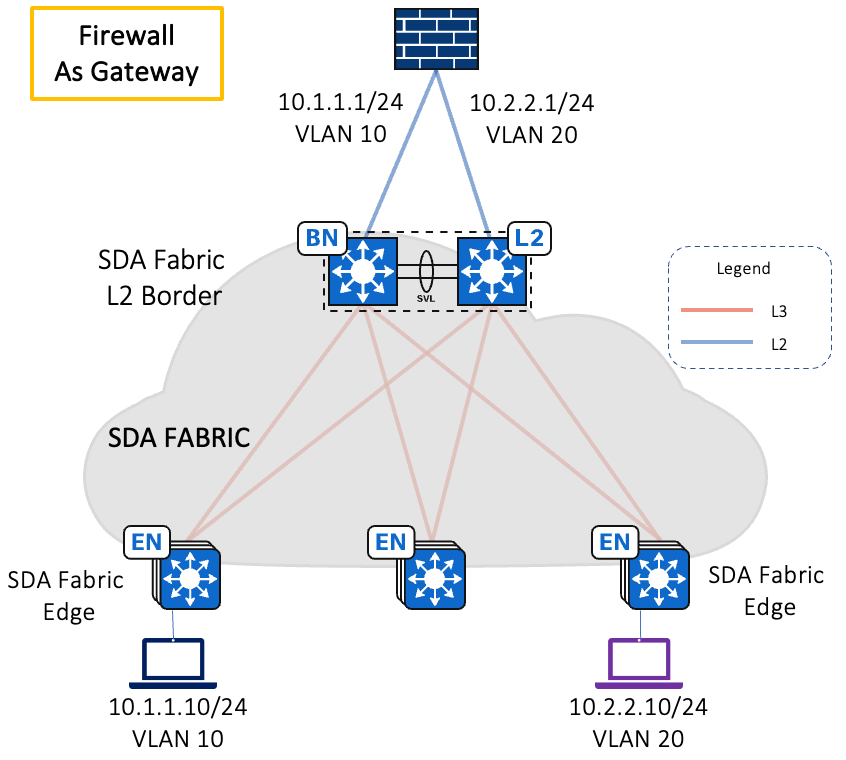

Layer 2 Design

In an SD-Access environment, unlike traditional networks, the gateway for a particular VLAN is present right at the edge/access switch rather than on the core/distribution switch. Because an anycast gateway is present on every edge, the Layer 2 boundary for a client ends at the access layer itself, eliminating the traditional problems with STP and allowing seamless roaming of end client devices across the fabric.
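The anycast gateway can be illustrated with a small model: every fabric edge that hosts a pool presents the same gateway IP and MAC address, so a client that moves from one edge to another keeps the same default gateway and a valid ARP entry for it. The Python sketch below assumes, for illustration only, that the gateway is the first usable address of the pool, and it uses a hypothetical MAC address and hypothetical edge names; in a real deployment these values come from the fabric configuration.

```python
import ipaddress
from dataclasses import dataclass

VIRTUAL_MAC = "0200.0000.0001"  # hypothetical anycast gateway MAC


@dataclass(frozen=True)
class GatewayConfig:
    pool: str
    gateway_ip: str
    gateway_mac: str


def anycast_gateway(pool: str) -> GatewayConfig:
    """Gateway settings applied identically on every edge hosting the pool."""
    net = ipaddress.ip_network(pool)
    first_host = next(net.hosts())  # assumption: gateway = first usable address
    return GatewayConfig(pool, str(first_host), VIRTUAL_MAC)


# Hypothetical edge names; each one gets the CCTV pool's gateway.
edges = ["edge-t1-floor1", "edge-t1-floor2", "edge-t2-gate5"]
configs = {edge: anycast_gateway("10.4.0.0/24") for edge in edges}

# A camera moving between any of these edges sees one identical gateway.
print(len(set(configs.values())))          # 1
print(configs["edge-t2-gate5"].gateway_ip)  # 10.4.0.1
```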

In an airport network, multiple customers are served by providing infrastructure for their services. In these cases, the IP space is not under the airport’s control: only a VLAN is provided, and its gateway is outside the airport network/fabric. As a result, traffic inside the fabric must be Layer 2 only.

Airline back-office networks and retailers are the major users of Layer 2 only networks in an airport network. In most cases, the gateway for these networks is on a firewall.

Airline & Retail L2 Network details

| Customer | VLAN Mapped | Virtual Network (VN) |

| Airline 1 | VLAN 101 | AIRLINE_VN |

| Airline 2 | VLAN 102 | AIRLINE_VN |

| Airline 3 | VLAN 103 | AIRLINE_VN |

| Airline 4 | VLAN 104 | AIRLINE_VN |

| Retailer 1 | VLAN 201 | RETAILER_VN |

| Retailer 2 | VLAN 202 | RETAILER_VN |

| Retailer 3 | VLAN 203 | RETAILER_VN |
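The Layer 2 only behavior can be sketched with the VLANs from the table above. Each tenant VLAN is carried across the fabric as its own Layer 2 segment with no anycast gateway, so endpoints in the same VLAN are bridged, while anything else must be routed by the tenant's external gateway (typically a firewall). The L2 VNI numbering in this Python sketch is a hypothetical stand-in for values the fabric assigns.

```python
# Tenant VLANs from the Airline & Retail table: VLAN -> (customer, VN).
L2_SEGMENTS = {
    101: ("Airline 1", "AIRLINE_VN"),
    102: ("Airline 2", "AIRLINE_VN"),
    103: ("Airline 3", "AIRLINE_VN"),
    104: ("Airline 4", "AIRLINE_VN"),
    201: ("Retailer 1", "RETAILER_VN"),
    202: ("Retailer 2", "RETAILER_VN"),
    203: ("Retailer 3", "RETAILER_VN"),
}

L2_VNI_BASE = 8000  # hypothetical; real L2 VNIs are assigned by the fabric


def l2_vni(vlan: int) -> int:
    """Hypothetical one-to-one mapping of a tenant VLAN to an L2 VNI."""
    if vlan not in L2_SEGMENTS:
        raise KeyError(f"VLAN {vlan} is not a provisioned tenant segment")
    return L2_VNI_BASE + vlan


def path(src_vlan: int, dst_vlan: int) -> str:
    """How traffic between two tenant endpoints is delivered."""
    if l2_vni(src_vlan) == l2_vni(dst_vlan):
        return "bridged within the fabric"
    # No anycast gateway in an L2-only VN: the tenant's own gateway routes it.
    return "via external gateway (firewall)"


print(path(101, 101))  # bridged within the fabric
print(path(101, 102))  # via external gateway (firewall)
```

Even two airlines in the same AIRLINE_VN only reach each other through the external gateway, which keeps each tenant's traffic under that tenant's control.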

Wireless Design

The airport wireless network is a highly mobile and dynamic environment. Wireless has become the primary access medium for a wide range of business-critical devices in an airport. The network infrastructure requires a properly designed and deployed wireless network, built on one of the following wireless architectures:

- CUWN Wireless Over The Top (OTT)

- SD-Access Wireless (also referred to as Fabric Enabled Wireless)

- Mixed-Mode (Fabric and Non-Fabric on the same WLC)

Wireless is a major service in an airport network and enhances the passenger experience in the airport.

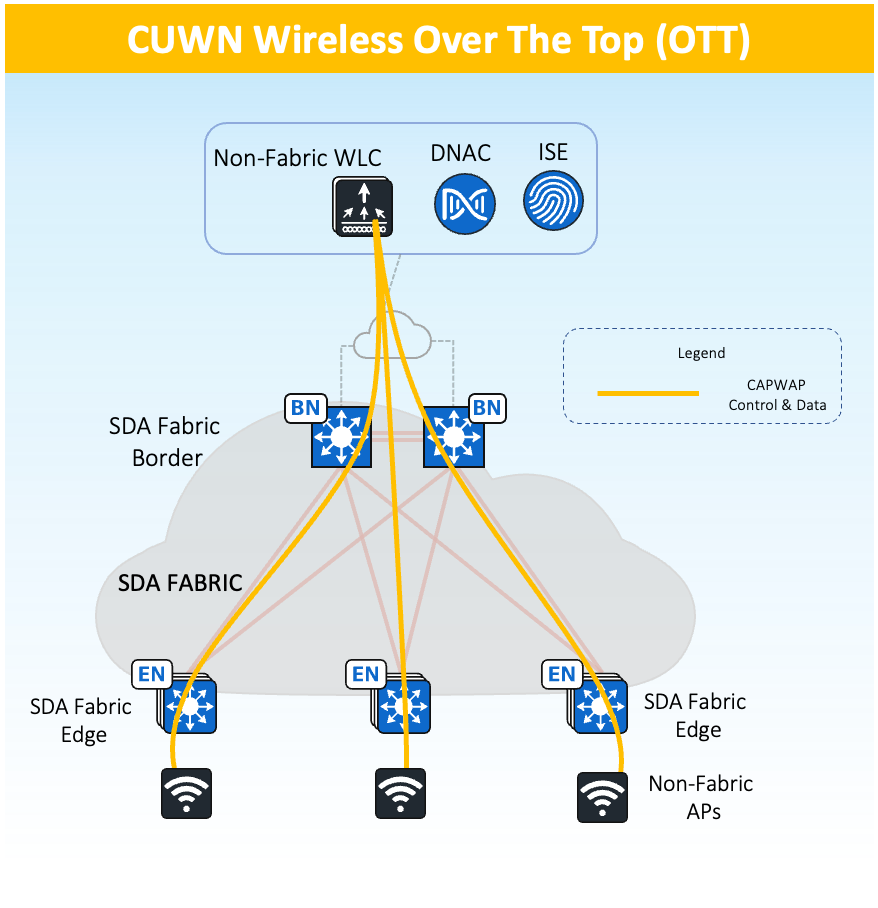

Wireless Over the Top (OTT) of SDA Fabric Architecture

Wireless Over The Top (OTT)

- Works with the existing WLC/AP IOS/AireOS

- Supports devices that are not SD-Access compatible

- Used for migration scenarios

- CAPWAP for both the control plane and the data plane

- The SDA fabric is just a transport

- Supported on any WLC/AP software and hardware

- Only centralized mode is supported

When to use Wireless Over The Top (OTT)

- When none of the SD-Access advantages for wireless are required (OTT provides none of them).
- As a migration step to full SD-Access.
- When the customer wants or needs to migrate wired first (for example, different operations teams manage wired and wireless, the team needs to get familiar with the fabric, or wired and wireless follow different buying cycles) and leave wireless “as it is.”
- When the customer cannot migrate to fabric yet (older APs, software that still needs to be certified, etc.).

In OTT-based wireless deployments, wireless control, management, and data plane traffic traverses the fabric inside a Control and Provisioning of Wireless Access Points (CAPWAP) tunnel between the APs and the WLC. This CAPWAP tunnel uses the Cisco SD-Access fabric as a transport network.

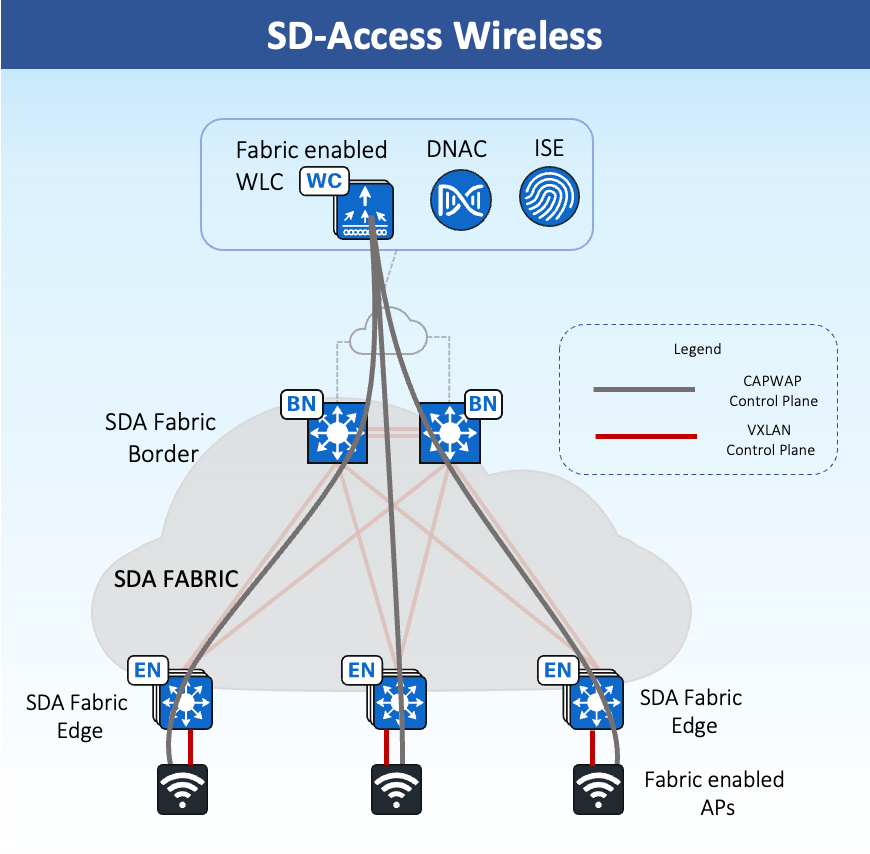

SD-Access Wireless

Airports can integrate wireless natively into SD-Access using two additional components: fabric wireless controllers and fabric-mode APs. Supported Cisco Wireless LAN Controllers (WLCs) are configured as fabric wireless controllers that communicate with the fabric control plane, registering L2 client MAC addresses, SGTs, and L2 VNI information. The fabric-mode APs are associated with the fabric wireless controller and configured with fabric-enabled SSIDs.

In SD-Access Wireless, the control plane is centralized: a CAPWAP tunnel is maintained between the APs and the WLC. The main difference is that the data plane is distributed, using VXLAN directly from the fabric-enabled APs. The WLC and APs are integrated into the fabric, and the access points connect to the fabric overlay (Endpoint ID space) network as “special” clients.

Key benefits include:

- Centralized wireless control plane: the same RF features that Cisco offers today in Cisco Unified Wireless Network (CUWN) deployments are leveraged in SD-Access Wireless. Wireless operations such as RRM, client onboarding, and client mobility stay the same as with CUWN, which simplifies IT adoption.
- Optimized distributed data plane: the data plane is distributed at the edge switches for optimal performance and scalability, without the hassles usually associated with distributing traffic (spanning VLANs, subnetting, large broadcast domains, etc.).
- Seamless L2 roaming everywhere: the SD-Access fabric allows clients to roam seamlessly across the campus while retaining the same IP address.
- Simplified guest and mobility tunneling: an anchor WLC is no longer needed, and guest traffic can go directly to the DMZ without hopping through a foreign controller.
- Policy simplification: SD-Access breaks the dependencies between policy and network constructs (IP addresses and VLANs), simplifying how policies are defined and implemented for both wired and wireless clients.
- Segmentation made easy: segmentation is carried end to end in the fabric and is hierarchical, based on Virtual Networks (VNs) and Scalable Group Tags (SGTs). The same segmentation policy is applied to both wired and wireless users.

The following are key aspects of SD-Access wireless to consider for airport deployments:

- All SSIDs should be fabric-enabled SSIDs: Corporate, Guest, Passenger, and Tenant.
- Fabric-enabled APs terminate VXLAN directly on the Fabric Edge.
- The fabric-enabled WLC needs to be physically located at the same site as the APs.
- For wireless, the client MAC address is used as the EID.
- The WLC interacts with the Host Tracking DB on the Control Plane node to register client MAC addresses with their SGT and Virtual Network information.
- The WLC is responsible for updating the Host Tracking DB with roaming information for wireless clients.
- The VN information is mapped to a VLAN on the Fabric Edge nodes (FEs).

Note: Overlapping IP pools are not supported for fabric wireless.

All these advantages can be achieved at any airport deploying Fabric Enabled Wireless (FEW) with best-in-class, future-ready Wi-Fi 6 access points (APs) and Cisco Catalyst Wireless LAN Controllers.

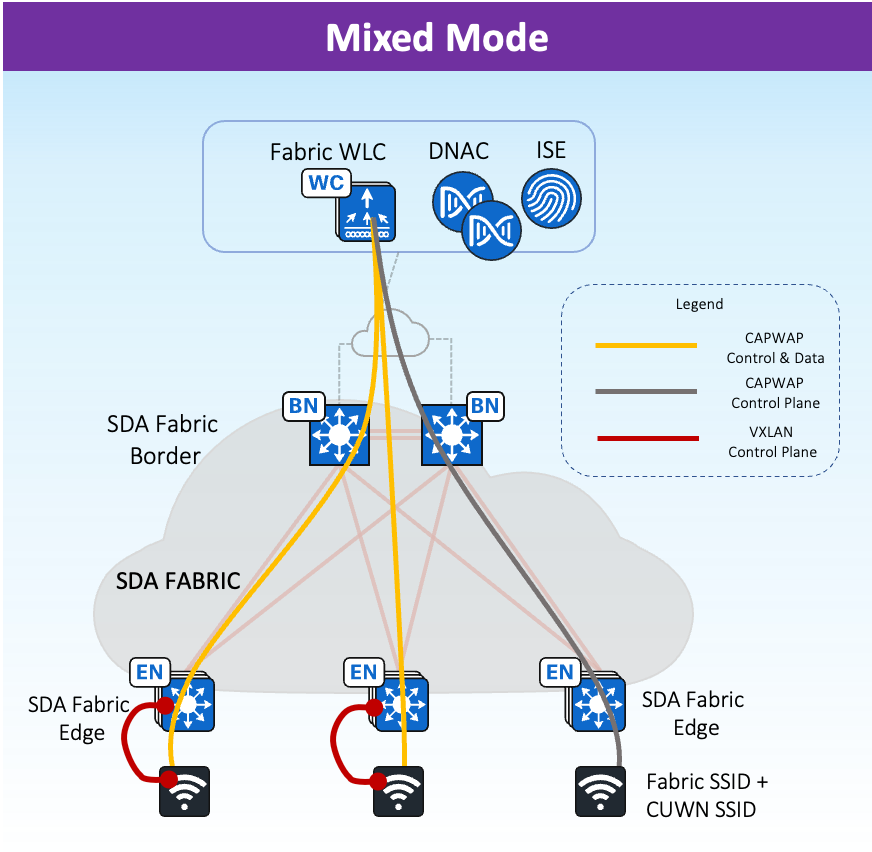

Mixed-Mode (Fabric and Non-Fabric on same WLC)

Airports can also use mixed mode, in which fabric and non-fabric wireless coexist on the same WLC. Mixed mode:

- Allows both SD-Access fabric wireless and OTT to be used on the same WLC/AP.
- Can be a migration path to full SD-Access wireless.
- Supports the need for some SSIDs to remain CUWN OTT.
- Is supported for greenfield deployments.

Security

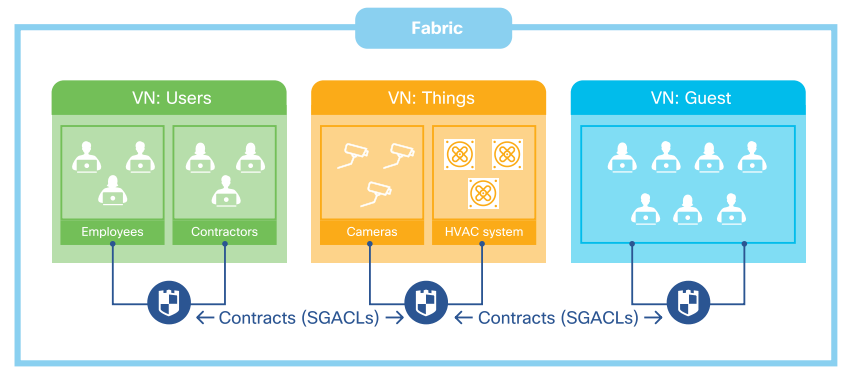

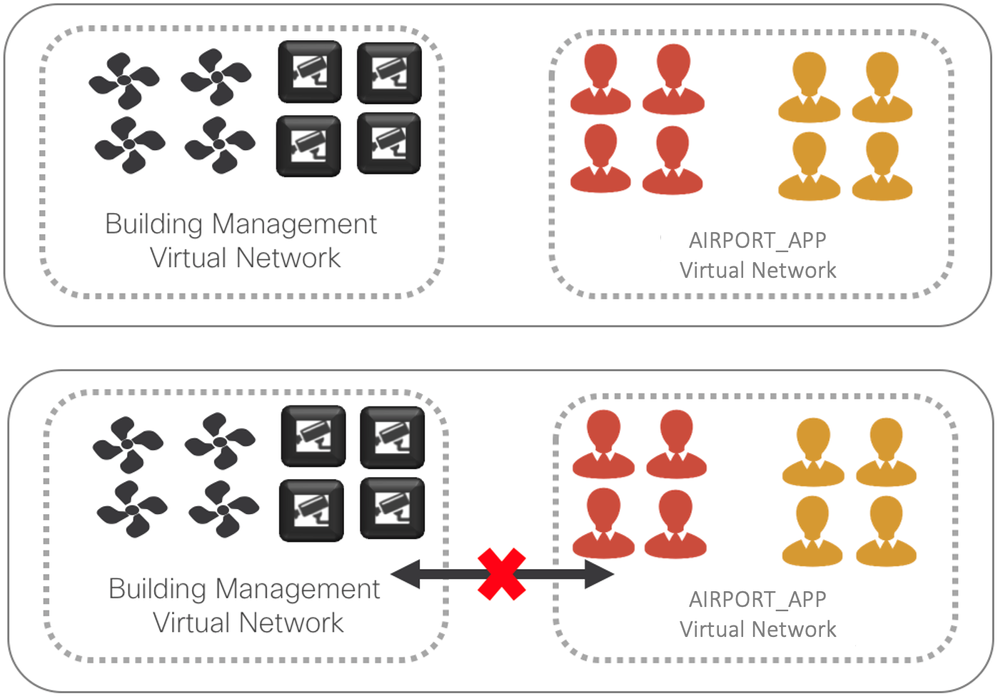

Network segmentation is essential for protecting critical business assets. In SD-Access, security is integrated into the network through segmentation: a method of separating a specific group of users or devices from other groups for security purposes. Segmentation within SD-Access is done using:

- Macro Segmentation - Virtual Networks (VNs)
- Micro Segmentation - Scalable Group Tags (SGTs)

Macro Segmentation

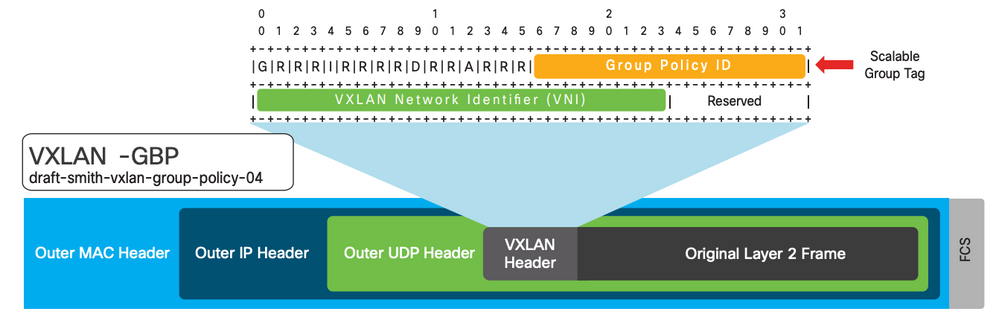

In SD-Access, macro-segmentation is the process of splitting a single large network with a single routing table into any number of smaller logical networks (segments, or VNs). This isolates the segments from one another, minimizes the attack surface, and introduces enforcement points between segments. By providing two levels of segmentation, SD-Access makes a secure network deployment possible for airports while keeping the approach simple to understand, design, implement, and support. In the SD-Access fabric, the virtual network is carried in the VXLAN Network Identifier (VNI) field and the scalable group tag (SGT) in the Group Policy ID field of the VXLAN-GPO header.

Macro Segmentation logically separates a network topology into smaller virtual networks, using a unique network identifier and separate forwarding tables. This is instantiated as Virtual Routing and Forwarding (VRF) instance on switches or routers and referred to as a Virtual network (VN) on Cisco DNA Center.
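A VRF can be pictured as a separate routing table per VN: a lookup only searches the table of the VN the traffic belongs to, so a destination that lives in another VN is simply not found. The Python sketch below illustrates that idea with longest-prefix matching from the standard `ipaddress` module. The AIRPORT_APP_VRF entry mirrors the BRS pool (10.9.14.0/24) used in this guide's examples, while `CORP_VN`, its prefixes, and the next-hop labels are hypothetical; real VRF lookups are done in switch hardware, not like this.

```python
import ipaddress

# Hypothetical per-VN routing tables (one dict per VRF).
VRF_TABLES = {
    "AIRPORT_APP_VRF": {
        ipaddress.ip_network("10.9.14.0/24"): "Vlan98 (BRS)",
    },
    "CORP_VN": {  # made-up VN and prefixes for illustration
        ipaddress.ip_network("10.20.0.0/16"): "border",
        ipaddress.ip_network("10.20.5.0/24"): "Vlan120",
    },
}


def lookup(vrf, destination):
    """Longest-prefix match that only consults the table of the given VRF."""
    addr = ipaddress.ip_address(destination)
    table = VRF_TABLES.get(vrf, {})
    matches = [net for net in table if addr in net]
    if not matches:
        return None  # no route in this VN: traffic cannot cross VNs on its own
    return table[max(matches, key=lambda net: net.prefixlen)]


print(lookup("AIRPORT_APP_VRF", "10.9.14.25"))  # Vlan98 (BRS)
print(lookup("CORP_VN", "10.9.14.25"))          # None
print(lookup("CORP_VN", "10.20.5.7"))           # Vlan120
```

Reaching 10.9.14.25 from CORP_VN would require an external device that leaks routes between the two tables, which is the role of the fusion router or firewall described below.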

A Virtual Network (VN) is a logical network instance within the SD-Access fabric that provides Layer 2 or Layer 3 services and defines a Layer 3 routing domain. As described above, within the SD-Access fabric the information identifying the virtual network is carried in the VXLAN Network Identifier (VNI) field of the VXLAN header. The VXLAN VNI provides both Layer 2 (L2 VNI) and Layer 3 (L3 VNI) segmentation.

Within the SD-Access fabric, LISP is used to provide control plane forwarding information. LISP instance ID provides a means of maintaining unique address spaces in the control plane and this is the capability in LISP that supports virtualization. External to the SD-Access fabric, at the SD-Access border, the virtual networks map directly to VRF instances, which may be extended beyond the fabric. The table below provides the terminology mapping across all three technologies.

| Cisco DNA Center | Switch/Router Side | LISP |
|---|---|---|
| Virtual Network (VN) | VRF | Instance ID |
| IP Pool | VLAN/SVI | EID Space |
| Underlay Network | Global Routing Table | Global Routing Table |

Micro Segmentation

Cisco Group Based Policy balances the demands for agility and security without the operational complexity and difficulty of deploying into existing environments seen with traditional segmentation. Endpoints are classified into groups that can be used anywhere on the network in both fabric and non-fabric Cisco environments. This allows us to decouple the segmentation policies from the underlying network infrastructure.

By classifying systems using human-friendly logical groups, security rules can be defined using these groups, not IP addresses. Controls using these endpoint roles are more flexible and much easier to manage than using IP address based controls. Scalable Groups, aka Security Groups (SG), can indicate the role of the system or person, the type of application and server hosts, the purpose of an IoT device, or the threat-state of a system, which IP addresses alone cannot. These scalable groups can simplify firewall and next-gen firewall rules, Web Security Appliance policies and the access control lists used in switches, WLAN controllers, and routers. Group Based Policy is much easier to enable and manage than VLAN-based segmentation and avoids the associated processing impact on network devices.

In SD-Access, some enhancements to the original VXLAN specifications have been added, most notably the use of scalable group tags (SGTs). This new VXLAN format is currently an IETF draft known as Group Policy Option (or VXLAN-GPO).
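To make the header layout concrete, the Python sketch below packs and parses the 8-byte VXLAN-GPO header, with the VN carried in the 24-bit VNI field and the SGT in the 16-bit Group Policy ID field. It follows the bit layout of the public IETF draft (draft-smith-vxlan-group-policy) and is an illustration only; it does not show how Cisco hardware builds the header. The values 4104 (the L3 instance ID used in this guide's AIRPORT_APP_VRF example) and SGT 17 are sample inputs.

```python
import struct

# Bit positions follow the VXLAN Group Policy Option draft:
#   byte 0: G flag (0x80, Group Policy ID present), I flag (0x08, VNI valid)
#   byte 1: A flag (0x08, policy already applied)
#   bytes 2-3: Group Policy ID (carries the SGT)
#   bytes 4-6: VNI, byte 7: reserved
FLAG_G = 0x80
FLAG_I = 0x08
FLAG_A = 0x08


def pack_gpo(vni, sgt, policy_applied=False):
    """Build an 8-byte VXLAN-GPO header carrying a VNI and an SGT."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI is a 24-bit field")
    if not 0 <= sgt < 2**16:
        raise ValueError("Group Policy ID is a 16-bit field")
    byte1 = FLAG_A if policy_applied else 0
    # "!BBHI": network byte order, 1 + 1 + 2 + 4 bytes; the VNI occupies the
    # top 24 bits of the last word and the reserved byte stays zero.
    return struct.pack("!BBHI", FLAG_G | FLAG_I, byte1, sgt, vni << 8)


def unpack_gpo(header):
    """Return (vni, sgt) from an 8-byte VXLAN-GPO header."""
    byte0, _byte1, sgt, word = struct.unpack("!BBHI", header)
    if not byte0 & FLAG_G:
        raise ValueError("G flag not set: header carries no Group Policy ID")
    return word >> 8, sgt


hdr = pack_gpo(vni=4104, sgt=17)
print(hdr.hex())        # 8800001100100800
print(unpack_gpo(hdr))  # (4104, 17)
```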

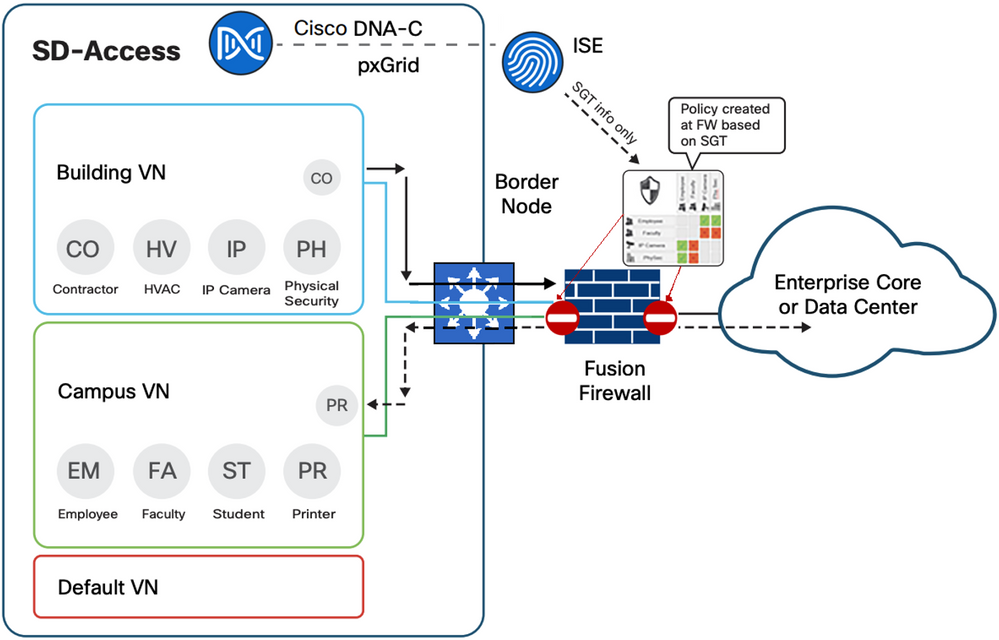

Fusion Router / Firewall

Segmentation within SD-Access takes place at both a macro and a micro level, through virtual networks and SGTs respectively. Virtual networks are completely isolated from one another within the SD-Access fabric, which provides macro-segmentation between endpoints in different VNs. By default, all endpoints within a virtual network can communicate with each other. Because each virtual network has its own routing instance, an external, non-fabric device known as a fusion router or firewall is required to provide the inter-VRF forwarding needed for communication between VNs.

Firewalls that use SGTs in their rules are called Scalable Group Firewalls (SGFWs). SGFWs receive only the scalable group names and tag values from ISE; they do not receive the actual policies or rules. Unlike switches, where SGACLs are configured in Cisco DNA Center and deployed by ISE, SGT-based rules on an SGFW are defined locally through the CLI or another management tool. Using an SGFW is optional, but it provides tight end-to-end security integration and avoids the drawback of IP address-based policy: IP addresses assigned by DHCP can change over time.

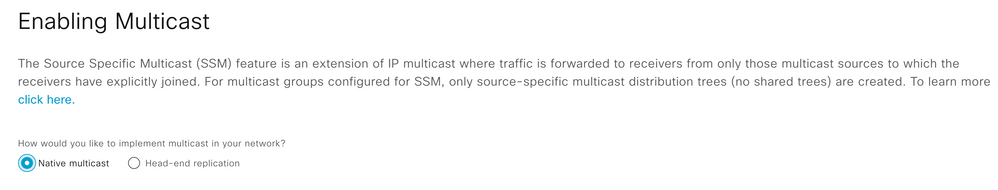

Multicast

Multicast is supported in both the Overlay Virtual Networks and the physical Underlay networks in SD-Access.

The multicast source can either reside outside the Fabric or can be inside Fabric Overlay. Multicast receivers are commonly directly connected to Edge Nodes or Extended Nodes, however multicast receivers can also be outside of the Fabric Site if the source is in the Overlay. PIM Any-Source Multicast (PIM-ASM) and PIM Source-Specific Multicast (PIM-SSM) are supported.

Multicast Design options

SD-Access supports two different transport methods for forwarding multicast.

- Head-End Replication: uses the Overlay
- Native Multicast: uses the Underlay

Head-End Replication

Head-End Replication (or Ingress Replication) is performed either by the multicast first-hop router (FHR) when the multicast source is in the Fabric Overlay, or by the Border Nodes when the source is outside the Fabric Site.

Native Multicast

Native multicast does not require the ingress Fabric Node to do unicast replication. Rather, the whole Underlay including Intermediate Nodes is used to do the replication. To support Native multicast, the FHRs, Last-Hop Routers (LHRs), and all network infrastructure between them must be enabled for multicast.
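The difference in load on the ingress node can be shown with a simplified count. In the Python sketch below (a toy model with hypothetical node names), head-end replication sends one unicast copy to every remote Fabric Node that has receivers, while native multicast sends a single copy into the underlay and leaves replication to the underlay, including the Intermediate Nodes. It deliberately ignores the underlay's own replication work and any PIM state.

```python
def copies_sent_by_ingress(receiver_nodes, ingress, mode):
    """Packets the ingress node transmits for one multicast packet (toy model).

    head-end: one unicast VXLAN copy per remote Fabric Node with receivers.
    native:   one copy to an underlay multicast group; the underlay replicates it.
    """
    remote = set(receiver_nodes) - {ingress}
    if mode == "head-end":
        return len(remote)
    if mode == "native":
        return 1 if remote else 0
    raise ValueError(f"unknown mode: {mode}")


edges = {f"FE{i}" for i in range(1, 41)}  # hypothetical: 40 Fabric Edges with receivers
print(copies_sent_by_ingress(edges, "BN1", "head-end"))  # 40
print(copies_sent_by_ingress(edges, "BN1", "native"))    # 1
```

The head-end count grows linearly with the number of Fabric Nodes that have receivers, which is why the native option is preferred for scale.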

Multicast at Scale in Airports

Because of technology changes and the proliferation of new capabilities in the client endpoints that users bring into the network, Cisco SD-Access must address multicast at scale in airport networks.

Native Multicast enables efficient delivery of multicast packets within the fabric and the workflow in Cisco DNAC makes it easier for the administrator to choose the right options and automate the intent.

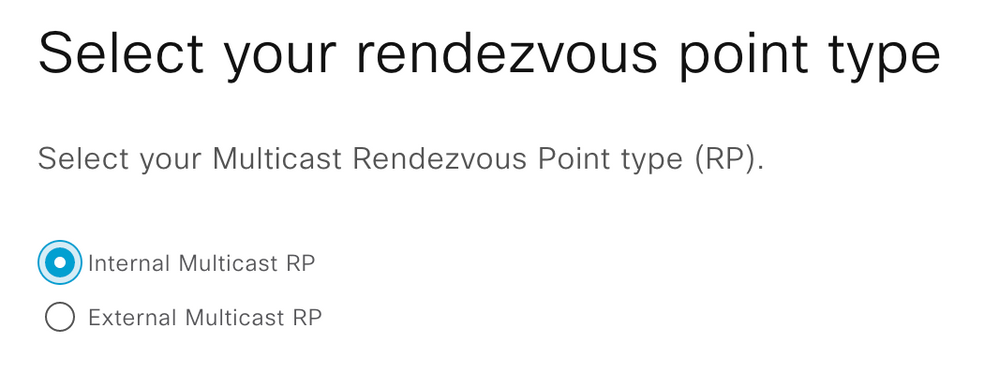

In the airport SD-Access fabric, multicast forwarding uses an Any-Source Multicast (ASM) design with the Native Multicast option for scale. A Rendezvous Point (RP) outside the fabric is selected for each Virtual Network, and clients join the shared tree through that RP. Only the initial multicast traffic is forwarded through the RP; subsequent packets are forwarded to the receivers along the shortest-path tree (SPT). The shared-tree and source-tree concepts therefore still apply.

Note:

- Any-Source Multicast (ASM) is used when the multicast endpoints do not support IGMPv3. Source-Specific Multicast (SSM) can be used when IGMPv3 is supported.
- A multicast IP pool is used to assign a loopback address to every Fabric Edge. The /24 considered here supports up to roughly 255 Fabric Edge nodes; for larger networks, consider a larger subnet.
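Sizing the multicast loopback pool is a power-of-two calculation: each Fabric node needs one /32, so the pool prefix length is 32 minus the number of host bits required. The Python sketch below computes the smallest prefix for a given node count. Whether Cisco DNA Center leaves any addresses in the pool unallocated is not stated here, so the sketch exposes that as a hypothetical `reserved` parameter (default 0).

```python
def loopback_pool_prefix(fabric_nodes, reserved=0):
    """Smallest IPv4 prefix length that yields one /32 per Fabric node.

    `reserved` is an assumed allowance for addresses that should not be
    handed out (for example the network/broadcast address or spares).
    """
    needed = fabric_nodes + reserved
    if needed < 1:
        raise ValueError("at least one address is required")
    # (needed - 1).bit_length() equals ceil(log2(needed)), the host bits required.
    return 32 - (needed - 1).bit_length()


for nodes in (200, 256, 300, 1000):
    print(nodes, "->", f"/{loopback_pool_prefix(nodes)}")
# Prints one line each: 200 -> /24, 256 -> /24, 300 -> /23, 1000 -> /22
```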

Deploy

Implementation Prerequisites

This document is a companion to the CVDs Software-Defined Access & Cisco DNA Center Management Infrastructure, Software-Defined Access Medium and Large Site Fabric Provisioning, and Software-Defined Access for Distributed Campus. It assumes that the reader is already familiar with the prescribed implementation and that the following tasks have been completed:

- Cisco DNA Center installation
- ISE node installation
- Integration of ISE with Cisco DNA Center
- Discovery and provisioning of network infrastructure
- Fabric domain creation
- Fabric role provisioning for border, control plane, and edge nodes
- WLC installation per fabric site
- Configuration of the fabric Service Set Identifier (SSID) and wireless profiles
- Provisioning the WLC with SSIDs and adding it to the fabric

Tip: When implementing SD-Access wireless, one WLC is required per fabric site.

For further information, refer to the following documents for implementation details:

CVD Software-Defined Access & Cisco DNA Center Management Infrastructure

CVD Software-Defined Access Medium and Large Site Fabric Provisioning

CVD Software-Defined Access for Distributed Campus

Deployment considerations

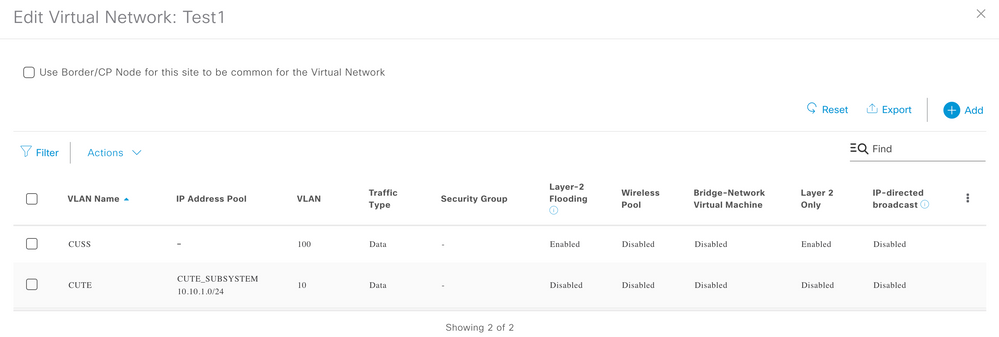

L2 only Networks - Tenants

Logically, the fabric works as a Layer 2 switch in this scenario. In an airport network, tenants such as airlines and concessionaires run independent networks and require the airport network to provide transparent Layer 2 connectivity. The airport network therefore acts as a Layer 2 service provider for these tenants, whose default gateway is on an external firewall or router. Firewall traffic inspection is a common security and compliance requirement in many networks. In such scenarios, overlapping IP address pools are currently supported in the wired fabric only; they are not supported for wireless (OTT or FEW).

NOTE: The Layer 2 Border (L2B) can be a standalone switch, a StackWise Virtual pair, or a switch stack. Only one L2B per VNI is supported.
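Because only one L2B is supported per VNI, it can help to validate a handoff plan before deployment. The Python sketch below reports every L2 VNI that is assigned to more than one L2 Border. The plan format and function name are hypothetical; this is a planning aid, not a Cisco DNA Center check. Instance ID 6186 comes from this guide's examples, while 6187 and the border names are made up.

```python
from collections import defaultdict


def vnis_with_multiple_l2b(handoffs):
    """Return {vni: [borders]} for every L2 VNI handed off by more than one L2 Border."""
    borders = defaultdict(set)
    for vni, border in handoffs:
        borders[vni].add(border)
    return {vni: sorted(names) for vni, names in borders.items() if len(names) > 1}


plan = [(6186, "L2B-1"), (6187, "L2B-1"), (6186, "L2B-2")]  # hypothetical plan
print(vnis_with_multiple_l2b(plan))  # {6186: ['L2B-1', 'L2B-2']}
```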

The configurations below are pushed by Cisco DNA Center and are shown for reference only.

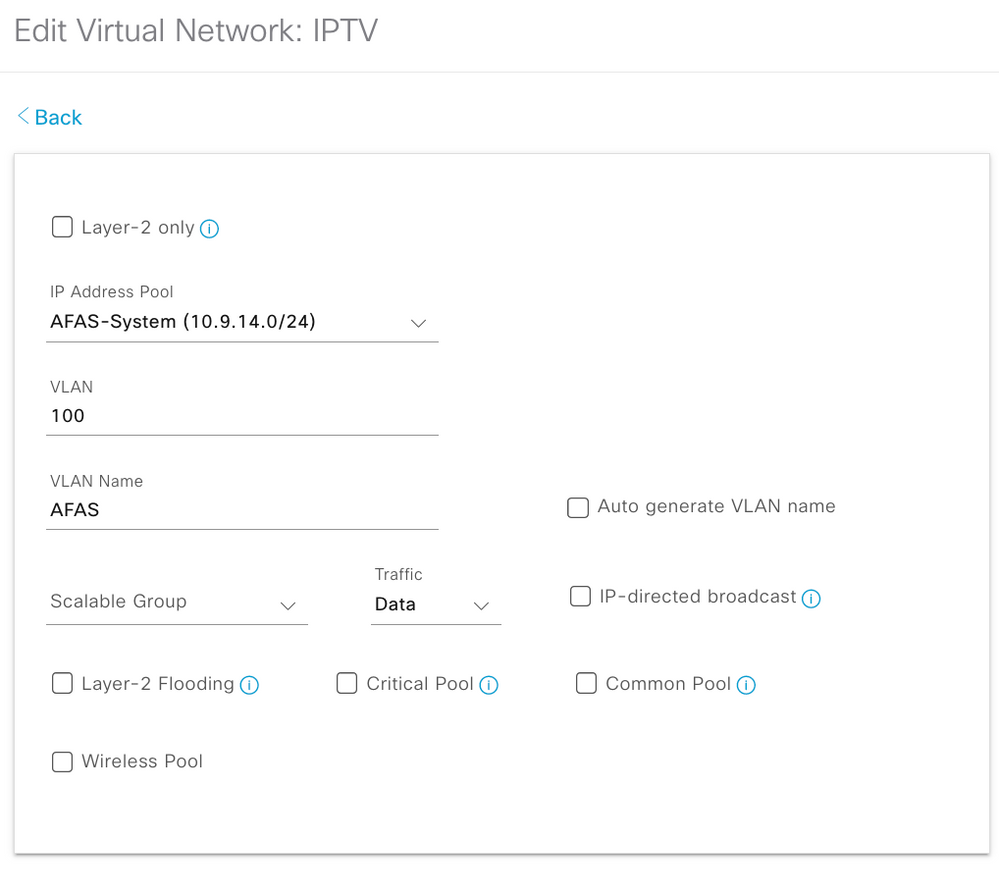

Procedure 1. L2 Only VLAN in the Virtual Network

Step 1. Add the L2 Only VLAN to the Virtual Network and deploy it using the workflow

Example Border/Edge Node Configuration

```
! [Border] accept MAC registrations for the L2 VNI
router lisp
 site site_uci
  eid-record instance-id <instance-id> any-mac
!
! [Edge]
no ipv6 mld snooping vlan <vlan-id>
no ip igmp snooping vlan <vlan-id>
cts role-based enforcement vlan-list <vlan-id>
vlan <vlan-id>
 name <vlan-name>
exit
router lisp
 instance-id-range <instance-id> override
  service ethernet
   eid-table vlan <vlan-id>
   database-mapping mac locator-set <rloc>
  !
 instance-id <instance-id>
  service ethernet
   eid-table vlan <vlan-id>
   ! Layer 2 flooding: flooded traffic uses underlay multicast group 239.0.17.1
   broadcast-underlay 239.0.17.1
   flood arp-nd
   flood unknown-unicast
   exit-service-ethernet
  exit-instance-id
```

Step 2. Add the L2 Only VLAN to the L2 Handoff Border

Example L2 Border Configuration

```
! [L2 Handoff Border] template
no ipv6 mld snooping vlan <vlan id>
no ip igmp snooping vlan <vlan id>
cts role-based enforcement vlan-list <vlan id>
vlan <vlan id>
 name <vlan name>
exit
router lisp
 instance-id <instance-id>
  service ethernet
   eid-table vlan <vlan id>
   broadcast-underlay 239.0.17.1
   flood arp-nd
   flood unknown-unicast
   exit-service-ethernet
  exit-instance-id
```

```
! [L2 Handoff Border] example: VLAN 100 (CUSS), L2 instance ID 6186
no ipv6 mld snooping vlan 100
no ip igmp snooping vlan 100
cts role-based enforcement vlan-list 100
vlan 100
 name CUSS
exit
router lisp
 instance-id 6186
  service ethernet
   eid-table vlan 100
   broadcast-underlay 239.0.17.1
   flood arp-nd
   flood unknown-unicast
   exit-service-ethernet
  exit-instance-id
```


Example Border/Edge Node Configuration

```
! [Border] example: VLAN 100 (CUSS), L2 instance ID 6186
router lisp
 site site_uci
  eid-record instance-id 6186 any-mac
!
! [Edge]
no ipv6 mld snooping vlan 100
no ip igmp snooping vlan 100
cts role-based enforcement vlan-list 100
vlan 100
 name CUSS
exit
router lisp
 instance-id-range 6186 override
  service ethernet
   eid-table vlan 100
   database-mapping mac locator-set rloc_c2600e62-d09f-4f05-b344-5ba8c24962e5
  !
 instance-id 6186
  service ethernet
   eid-table vlan 100
   broadcast-underlay 239.0.17.1
   flood arp-nd
   flood unknown-unicast
   exit-service-ethernet
  exit-instance-id
```

Segmentation:

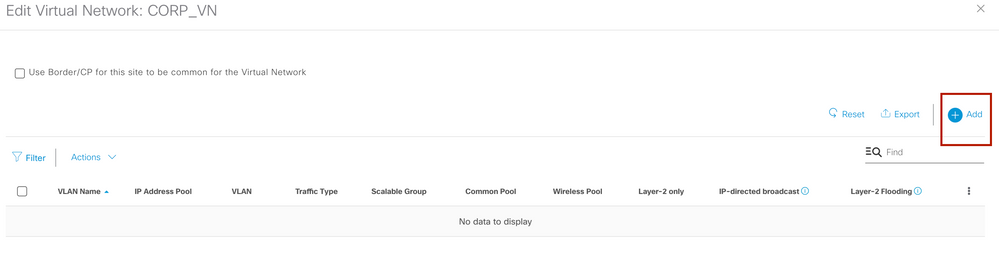

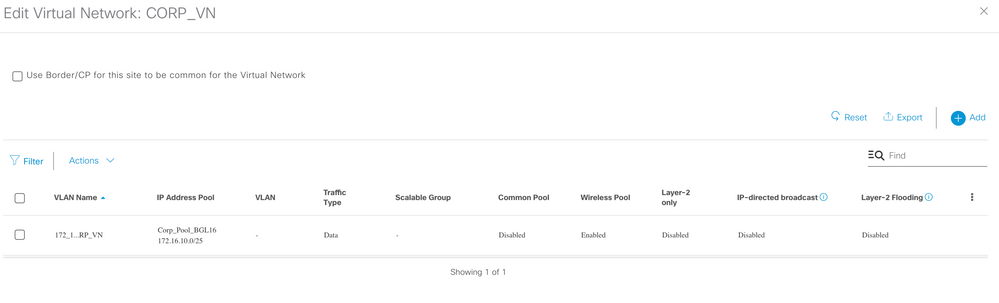

Macro Segmentation: One of the preferred segmentation methods for airport networks is to isolate user endpoints (such as PCs, tablets, and IP phones) from the airport subsystems and enterprise IoT endpoints by placing them in different virtual routing and forwarding instances (VRFs). SD-Access offers the flexibility to macro-segment endpoints into different VRFs, all of which can be provisioned from Cisco DNA Center. The fabric currently supports about 256 VRFs; however, the recommendation is to keep the number of VRFs as small as the design requires.

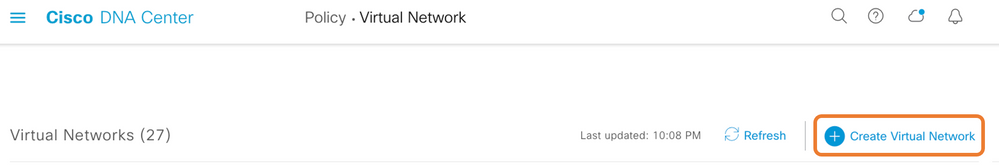

Procedure 2. Create Virtual Network

Step 1. Create the Virtual Network (VN), deploy it using the workflow, and save it.

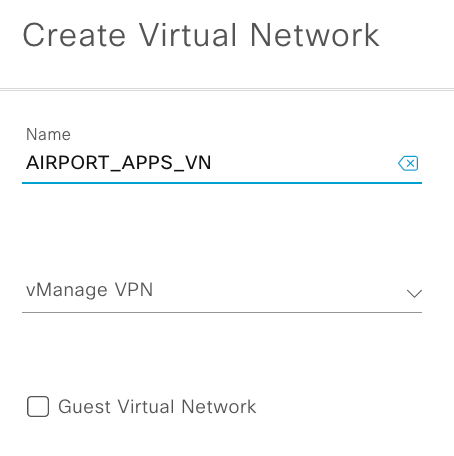

Step 2. Add the VN to the fabric.

Step 3. Add the VN to the fabric under Host Onboarding.

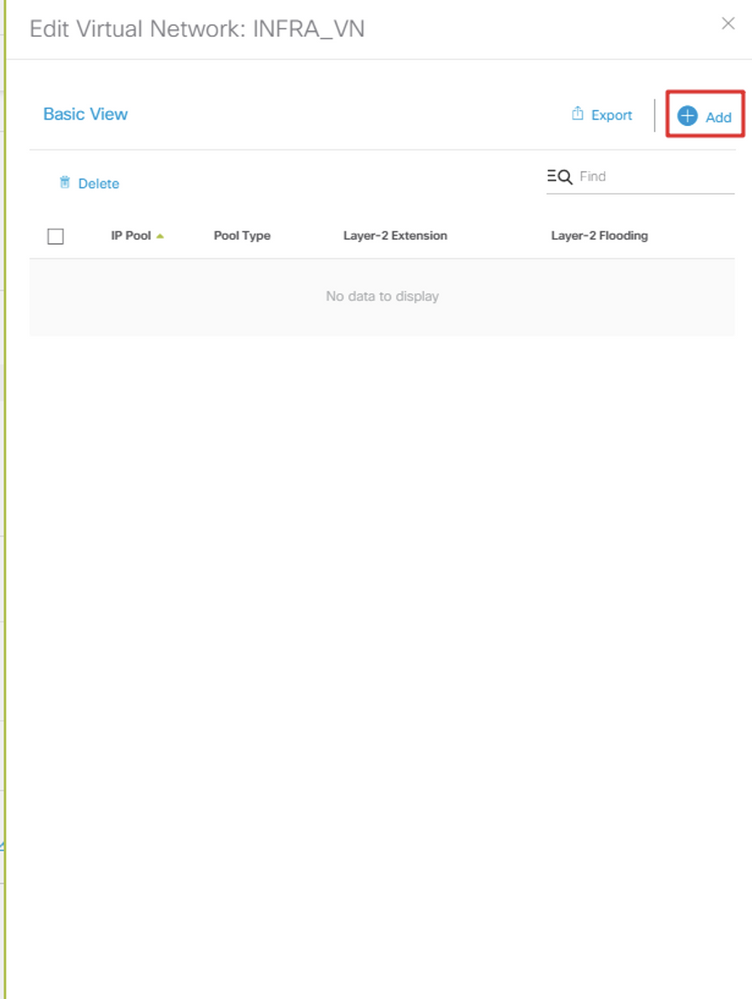

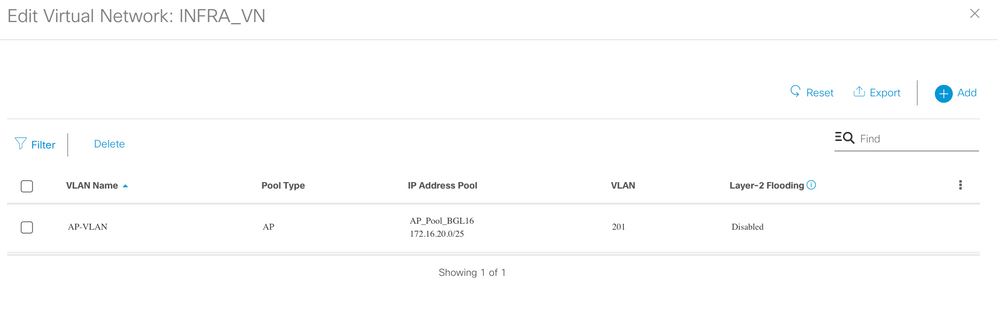

Note: INFRA_VN is used only for Access Points and Extended Nodes and is mapped to the global routing table. Because the WLC sits outside the fabric, the border node is responsible for providing reachability between the WLC management interface subnet and the APs' management IP pool, so that the CAPWAP tunnel can form and the APs can register to the WLC.

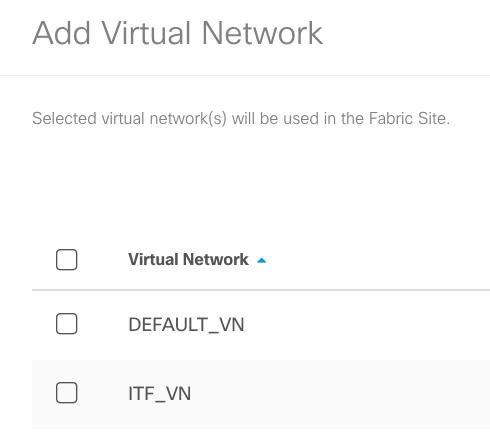

Step 4. Add the necessary IP pools to the VN and deploy them.

Step 5. Border & Edge Configuration

Example Border/Edge Node Configuration

```
! [Border]
vrf definition <vrf name>
 rd 1:<L3 VNI instance-id>
 address-family ipv4
  route-target import 1:<L3 VNI instance-id>
  route-target export 1:<L3 VNI instance-id>
!
interface Loopback<vlan-id>
 description Loopback Border
 vrf forwarding <vrf name>
 ip address <Loopback ip> 255.255.255.255
exit
router bgp <AS number>
 address-family ipv4 vrf <vrf name>
  bgp aggregate-timer 0
  aggregate-address <Subnet> <subnet mask> summary-only
  redistribute lisp metric 10
  network <Loopback ip> mask 255.255.255.255
router lisp
 instance-id <L3 VNI instance-id>
  service ipv4
   eid-table vrf <vrf name>
   route-export site-registrations
   distance site-registrations 250
   map-cache site-registration
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
 site site_uci
  authentication-key ******
  eid-record instance-id <L3 VNI instance-id> 0.0.0.0/0 accept-more-specifics
  eid-record instance-id <L3 VNI instance-id> <Subnet>/<mask> accept-more-specifics
  eid-record instance-id <L2 VNI instance-id> any-mac
!
! [Edge]
vrf definition <vrf name>
 address-family ipv4
!
cts role-based enforcement vlan-list <vlan id>
vlan <vlan id>
 name <vlan name>
!
! Anycast gateway SVI (same IP and MAC on every Edge)
interface Vlan<vlan id>
 no lisp mobility liveness test
 no ip redirects
 mac-address 0000.0c9f.f64d
 description Configured from Cisco DNA-Center
 vrf forwarding <vrf name>
 ip address <L3 Subnet ip> 255.255.255.0
 ip helper-address vrf <vrf name> <DHCP IP>
 ip route-cache same-interface
 lisp mobility <vlan name>-IPV4
!
router lisp
 instance-id-range <L2 VNI instance-id> override
  remote-rloc-probe on-route-change
  service ethernet
   eid-table vlan <vlan id>
   database-mapping mac locator-set <rloc>
 instance-id <L2 VNI instance-id>
  service ethernet
   eid-table vlan <vlan id>
   exit-service-ethernet
  exit-instance-id
 instance-id <L3 VNI instance-id>
  dynamic-eid <vlan name>-IPV4
   database-mapping <Subnet>/<mask> locator-set <rloc>
   exit-dynamic-eid
  service ipv4
   eid-table vrf <vrf name>
   map-cache 0.0.0.0/0 map-request
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
!
ip dhcp snooping vlan <vlan id>
!
```

Example Border/Edge Node Configuration

```
! [Border]
vrf definition AIRPORT_APP_VRF
 rd 1:4104
 address-family ipv4
  route-target import 1:4104
  route-target export 1:4104
!
interface Loopback98
 description Loopback Border
 vrf forwarding AIRPORT_APP_VRF
 ip address 10.9.14.1 255.255.255.255
exit
router bgp 65002
 address-family ipv4 vrf AIRPORT_APP_VRF
  bgp aggregate-timer 0
  aggregate-address 10.9.14.0 255.255.255.0 summary-only
  redistribute lisp metric 10
  network 10.9.14.1 mask 255.255.255.255
router lisp
 instance-id 4104
  service ipv4
   eid-table vrf AIRPORT_APP_VRF
   route-export site-registrations
   distance site-registrations 250
   map-cache site-registration
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
 site site_uci
  authentication-key ******
  eid-record instance-id 4104 0.0.0.0/0 accept-more-specifics
  eid-record instance-id 4104 10.9.14.0/24 accept-more-specifics
  eid-record instance-id 6186 any-mac
!
! [Edge]
vrf definition AIRPORT_APP_VRF
 address-family ipv4
!
cts role-based enforcement vlan-list 98
vlan 98
 name BRS
exit
interface Vlan98
 no lisp mobility liveness test
 no ip redirects
 mac-address 0000.0c9f.f64d
 description Configured from Cisco DNA-Center
 vrf forwarding AIRPORT_APP_VRF
 ip address 10.9.14.1 255.255.255.0
 ip helper-address vrf AIRPORT_APP_VRF 10.16.255.3
 ip route-cache same-interface
 lisp mobility BRS-IPV4
exit
router lisp
 instance-id-range 6186 override
  remote-rloc-probe on-route-change
  service ethernet
   eid-table vlan 98
   database-mapping mac locator-set rloc_b4614cb7-c156-47f1-bc50-01733dbd2c52
 instance-id 6186
  service ethernet
   eid-table vlan 98
   exit-service-ethernet
  exit-instance-id
 instance-id 4104
  dynamic-eid BRS-IPV4
   database-mapping 10.9.14.0/24 locator-set rloc_b4614cb7-c156-47f1-bc50-01733dbd2c52
   exit-dynamic-eid
  service ipv4
   eid-table vrf AIRPORT_APP_VRF
   map-cache 0.0.0.0/0 map-request
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
!
ip dhcp snooping vlan 98
!
```

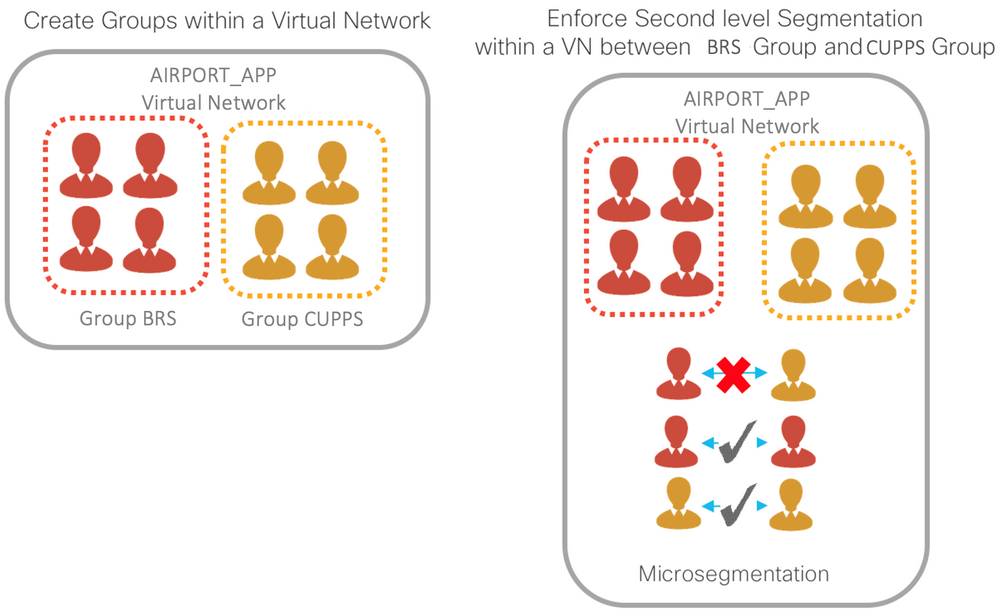

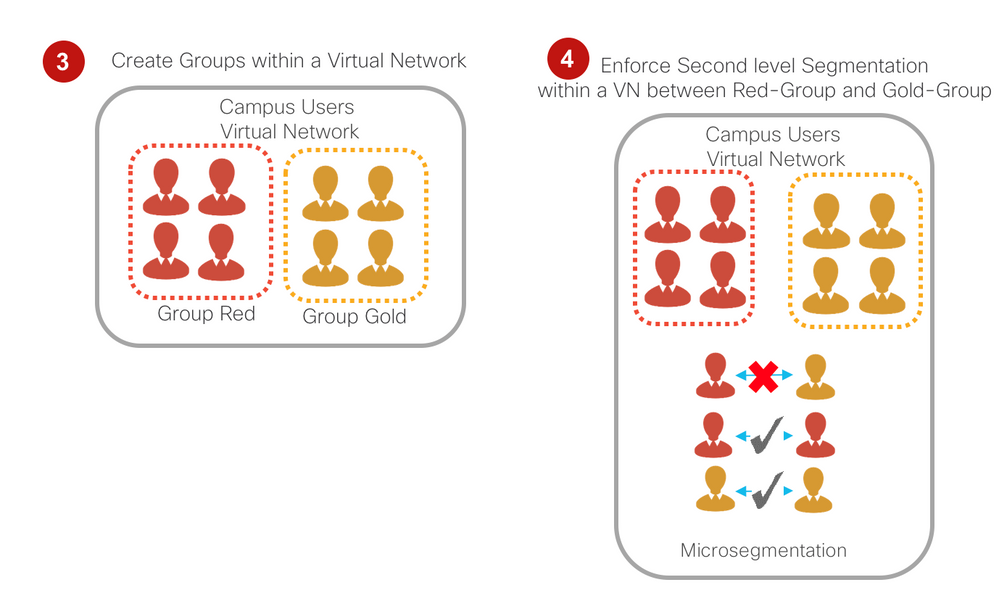

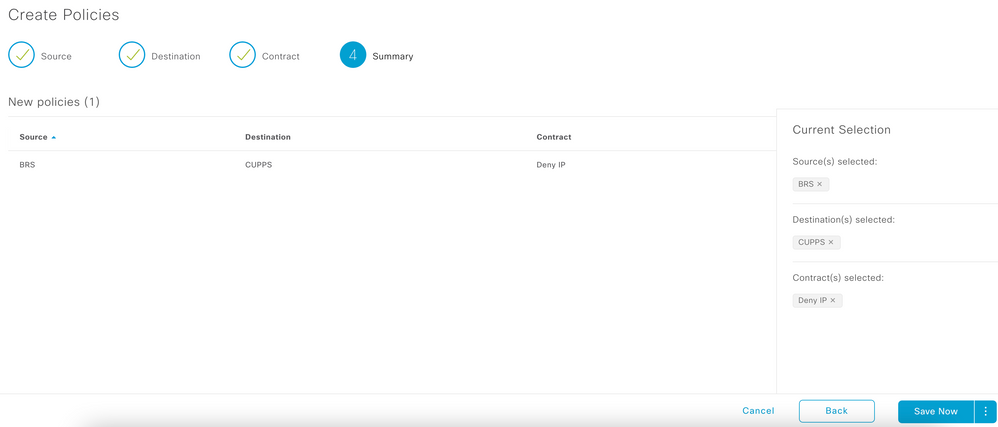

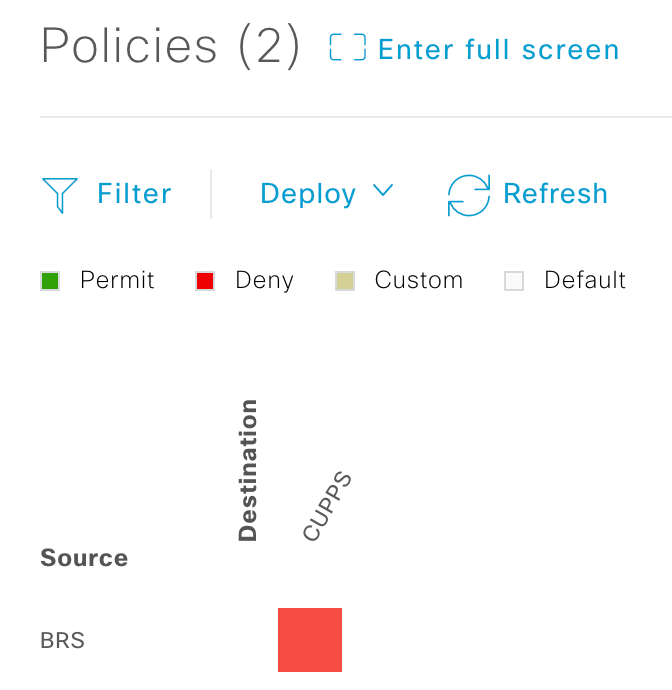

Micro-segmentation: Within the scope of a single VRF, further segmentation is required in most cases, for example:

- Placing various departments in different groups, e.g., within AIRPORT_APP_VN (VRF)
- Placing BRS and CUPPS in different groups within AIRPORT_APP_VRF

In a traditional network architecture, the only way to meet such requirements was to place groups in different subnets and enforce policy with IP ACLs. Cisco SD-Access still allows different subnets, but it also offers micro-segmentation, which separates endpoints within the same subnet using a more user- and endpoint-centric approach.

Taking the BRS and CUPPS example, both groups can still be placed in the same subnet. By leveraging dynamic authorization, however, ISE can assign them different Scalable Group Tags (SGTs) based on their authentication credentials. These SGT-based rules are then enforced by Security Group Access Control Lists (SGACLs).

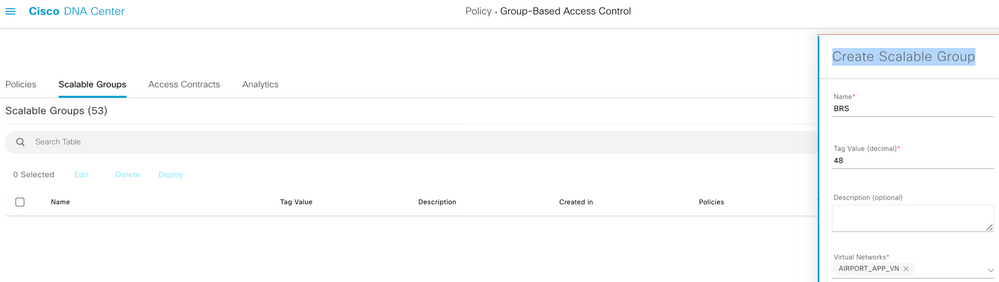

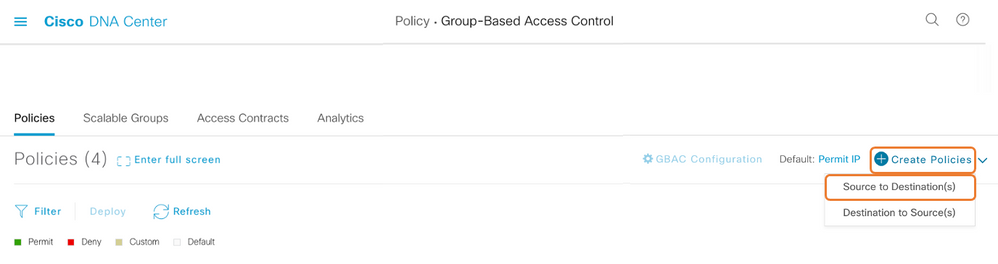

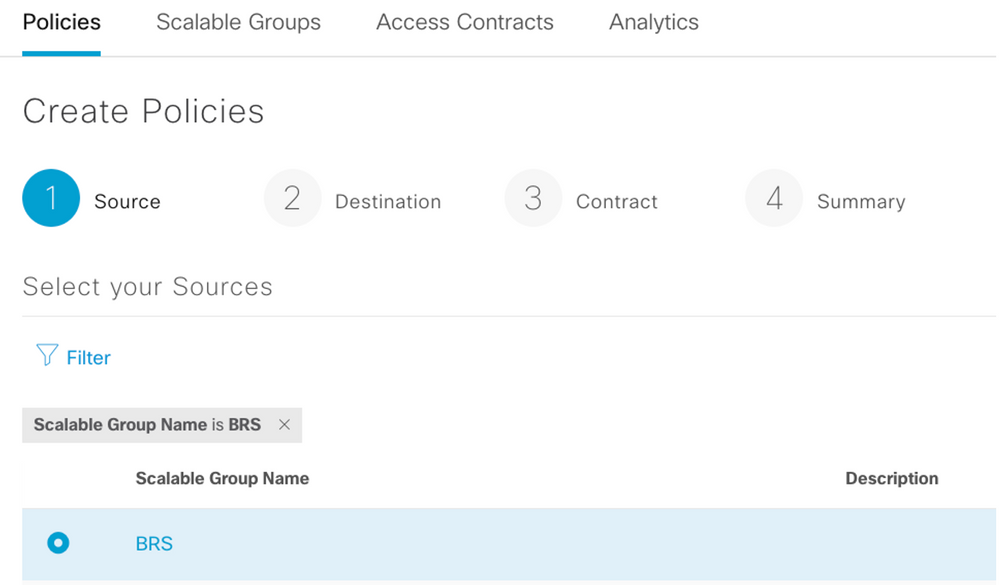

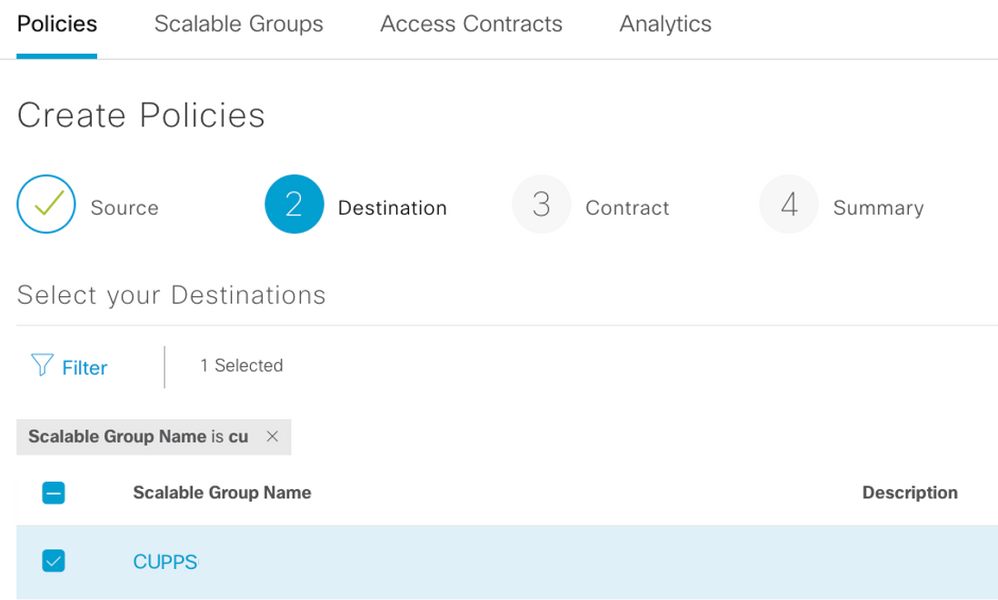

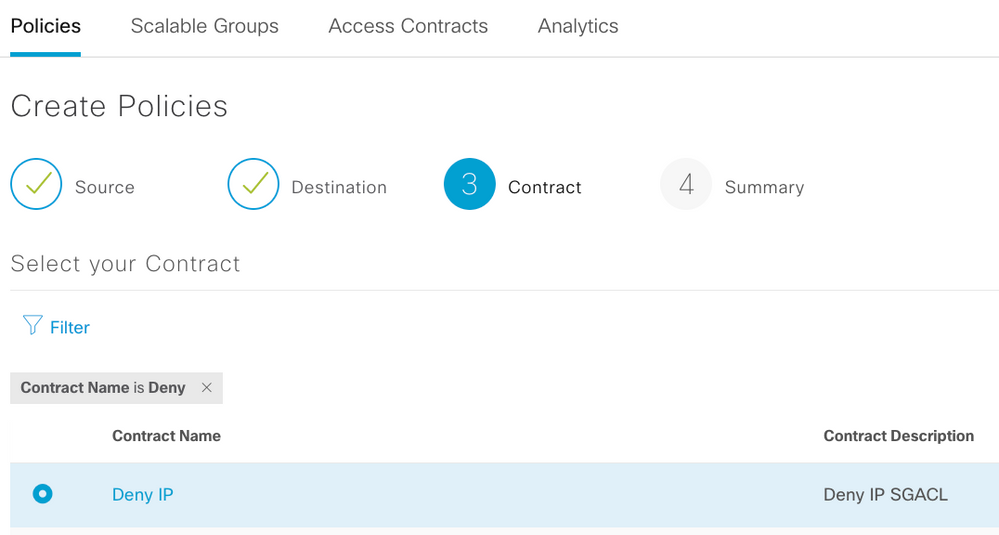

Procedure 3. Create Scalable Group

Step 1. Create the Scalable Group (SG), deploy it using the workflow, and save it.

Step 2. Create policies to control traffic.

Step 3. Select the source Scalable Group for the policy.

Step 4. Select the destination Scalable Group for the policy.

Step 5. Select the contract for the policy.

Step 6. Save the policy.

Step 7. View the created policy.
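The policy created in these steps is effectively a matrix keyed by (source group, destination group). The Python sketch below models that lookup to show why two endpoints in the same subnet can still be treated differently. The group names reuse BRS and CUPPS from this guide, but the contracts, the endpoint addresses, and the permit default for unlisted pairs are assumptions for illustration; actual enforcement is done by SGACLs on the network devices.

```python
import ipaddress

# Hypothetical policy matrix: (source group, destination group) -> contract.
POLICY = {
    ("BRS", "CUPPS"): "deny",
    ("CUPPS", "BRS"): "deny",
}
DEFAULT_CONTRACT = "permit"  # assumption: unlisted pairs fall back to permit

# Hypothetical ISE assignments: all endpoints sit in the same /24 pool.
ENDPOINT_SGT = {
    "10.9.14.21": "BRS",
    "10.9.14.22": "CUPPS",
    "10.9.14.23": "BRS",
}


def contract_for(src_group, dst_group):
    """Contract for traffic from src_group to dst_group (policies are directional)."""
    return POLICY.get((src_group, dst_group), DEFAULT_CONTRACT)


def is_permitted(src_ip, dst_ip):
    """Apply the group policy using the SGTs assigned to the two endpoints."""
    return contract_for(ENDPOINT_SGT[src_ip], ENDPOINT_SGT[dst_ip]) == "permit"


pool = ipaddress.ip_network("10.9.14.0/24")
print(all(ipaddress.ip_address(ip) in pool for ip in ENDPOINT_SGT))  # True: same subnet
print(is_permitted("10.9.14.21", "10.9.14.22"))  # False: BRS -> CUPPS is denied
print(is_permitted("10.9.14.21", "10.9.14.23"))  # True: BRS -> BRS uses the default
```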

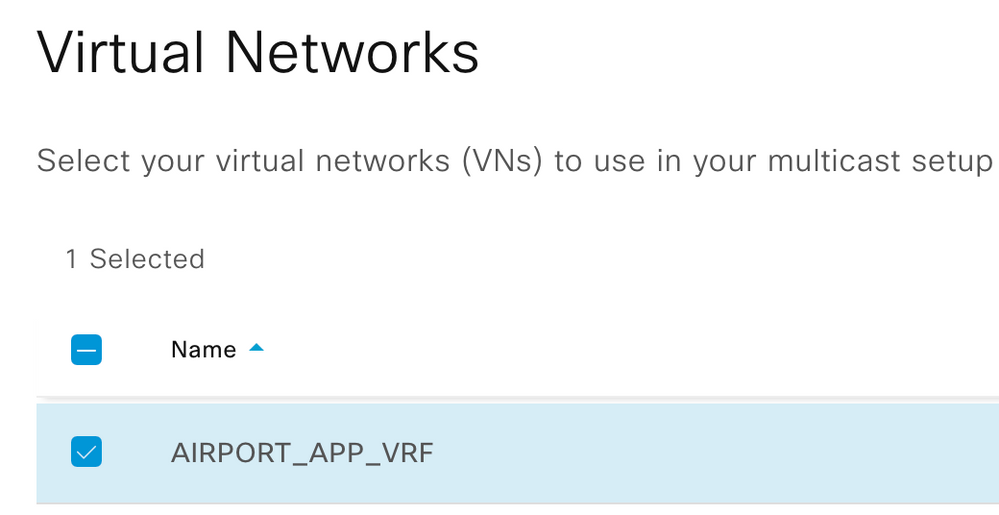

Multicast for CCTV / IPTV

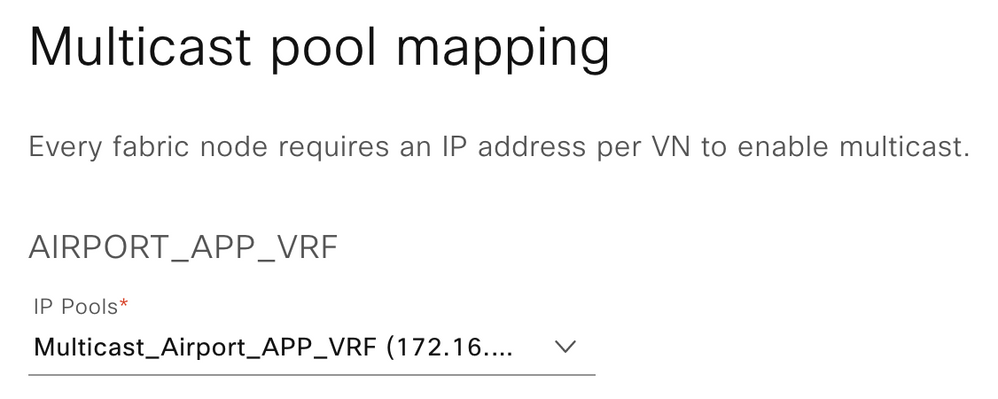

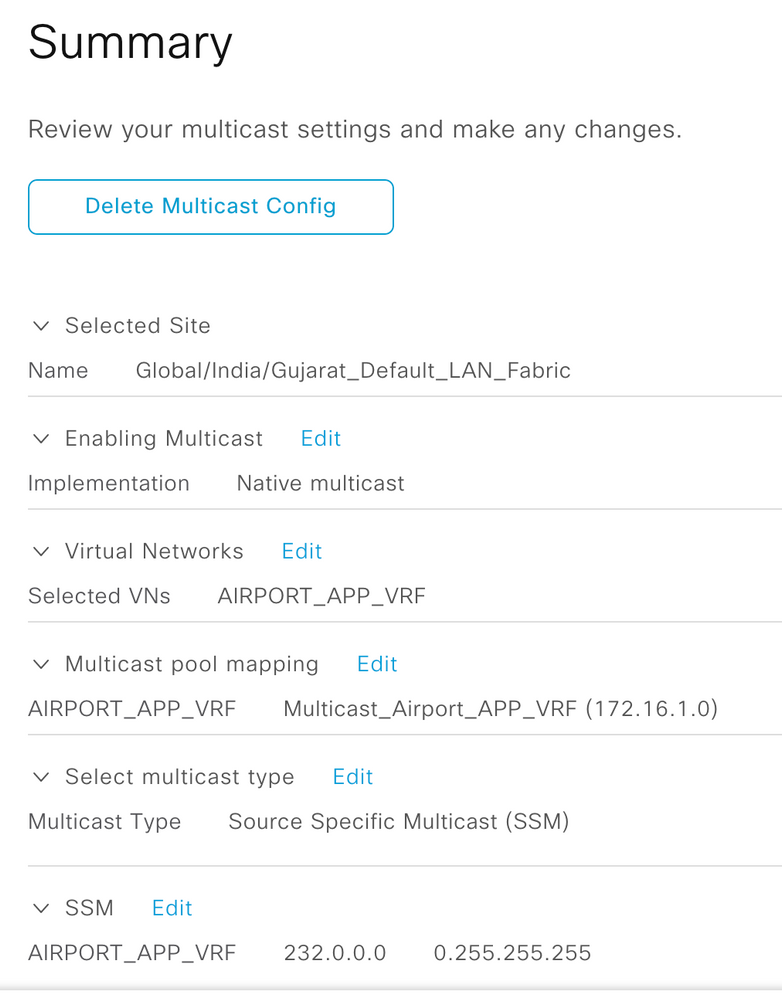

Procedure 4. Configure Multicast

Step 1. Enable Multicast with Native Multicast

Step 2. Select Virtual Networks for which multicast is to be enabled

Step 3. Select the multicast IP pool for the Virtual Network.

Note: A multicast IP pool must be created for each VN as a prerequisite.

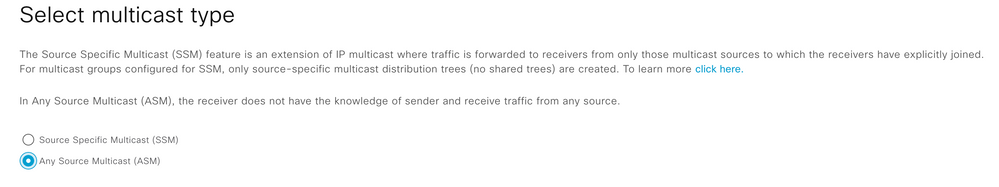

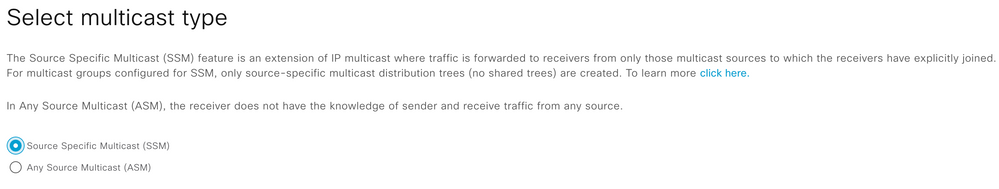

Step 4. Select Multicast deployment type for the Virtual Network

Note: Select the ASM multicast type when one or more multicast endpoints do not support IGMPv3.

Note: Select the SSM multicast type when all multicast endpoints support IGMPv3.
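The selection rule in the notes above can be written as a short check, together with a test of whether a group address falls in an SSM range. The Python sketch below assumes the IANA IPv4 SSM block 232.0.0.0/8 as the default range; if a different range is defined in the SSM_RANGE_<vrf name> access list, pass that network instead. The function names are hypothetical.

```python
import ipaddress

# IANA's IPv4 SSM block; replace with the SSM_RANGE_<vrf name> range if different.
SSM_DEFAULT_RANGE = ipaddress.ip_network("232.0.0.0/8")


def choose_mode(receiver_igmp_versions):
    """Return "SSM" only if every receiver supports IGMPv3, otherwise "ASM"."""
    if receiver_igmp_versions and all(v >= 3 for v in receiver_igmp_versions):
        return "SSM"
    return "ASM"


def in_ssm_range(group, ssm_range=SSM_DEFAULT_RANGE):
    """True if the multicast group address falls inside the SSM range."""
    return ipaddress.ip_address(group) in ssm_range


print(choose_mode([3, 3, 2]))      # ASM: one receiver is IGMPv2 only
print(choose_mode([3, 3, 3]))      # SSM
print(in_ssm_range("232.10.1.1"))  # True
print(in_ssm_range("239.1.1.1"))   # False
```

An empty list of receivers returns ASM, which is the conservative choice when endpoint capabilities are unknown.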

Step 5. Select the RP type for the VN

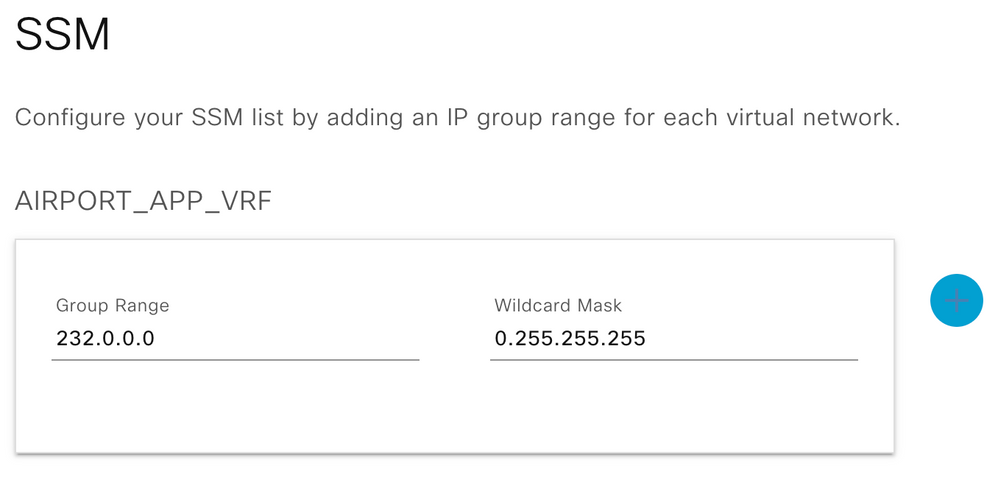

Step 5a. Select SSM Multicast IP group address for the VN

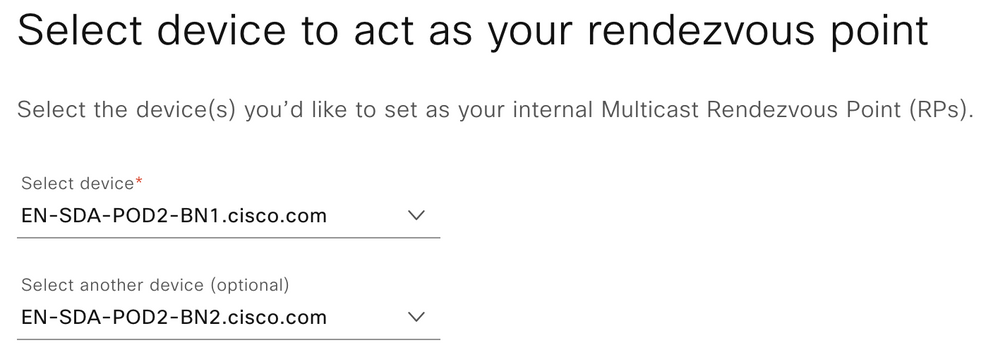

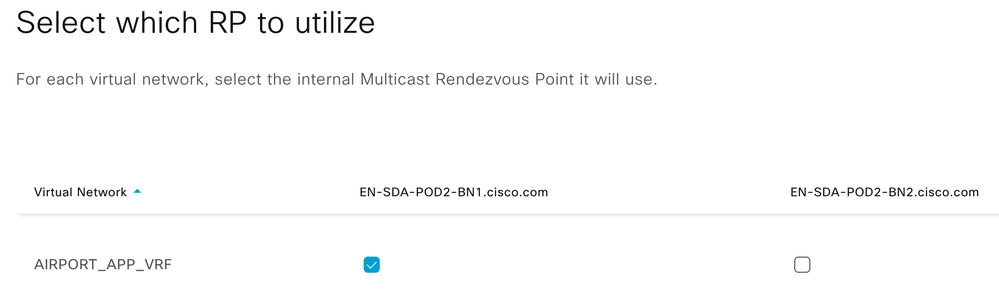

Step 6. Select the RP device for the Virtual Network

Step 7. Select the RP device to utilize for the Virtual Network

Step 8. Save and Deploy Multicast for the Virtual Network

ASM

Example Border/Edge Node Configuration

```
! [Border] ASM template
ip multicast-routing vrf <vrf name>
!
interface Loopback<L3 instance id>
 vrf forwarding <vrf name>
 ip address <Multicast Loopback ip> 255.255.255.255
 ip pim sparse-mode
!
! Native multicast transport in the underlay
interface LISP0.<L3 instance id>
 ip pim lisp transport multicast
 ip pim lisp core-group-range 232.0.0.1 1000
!
ip pim vrf <vrf name> rp-address <Multicast Loopback ip>
ip pim vrf <vrf name> register-source Loopback<L3 instance id>
ip pim vrf <vrf name> ssm default
!
router bgp <BGP AS#>
 address-family ipv4 vrf <vrf name>
  aggregate-address <Multicast Subnet> <Subnet Mask> summary-only
  network <Multicast Loopback ip> mask 255.255.255.255
!
router lisp
 instance-id <L3 instance id>
  service ipv4
   eid-table vrf <vrf name>
   route-export site-registrations
   distance site-registrations 250
   map-cache site-registration
   database-mapping <Multicast Loopback ip>/32 locator-set <rloc>
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
 site site_uci
  authentication-key ******
  eid-record instance-id <L3 instance id> <Multicast subnet>/<Mask> accept-more-specifics
```

```
! [Border] ASM example
ip multicast-routing vrf AIRPORT_APP_VRF
!
interface Loopback4102
 vrf forwarding AIRPORT_APP_VRF
 ip address 172.16.1.1 255.255.255.255
 ip pim sparse-mode
!
interface LISP0.4102
 ip pim lisp transport multicast
 ip pim lisp core-group-range 232.0.0.1 1000
!
ip pim vrf AIRPORT_APP_VRF rp-address 172.16.1.1
ip pim vrf AIRPORT_APP_VRF register-source Loopback4102
ip pim vrf AIRPORT_APP_VRF ssm default
!
router bgp 65001
 address-family ipv4 vrf AIRPORT_APP_VRF
  aggregate-address 172.16.1.0 255.255.255.0 summary-only
  network 172.16.1.1 mask 255.255.255.255
!
router lisp
 instance-id 4102
  service ipv4
   eid-table vrf AIRPORT_APP_VRF
   route-export site-registrations
   distance site-registrations 250
   map-cache site-registration
   database-mapping 172.16.1.1/32 locator-set rloc_6d2284a0-ba3c-469a-99b5-099ce544b7df
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
 site site_uci
  authentication-key ******
  eid-record instance-id 4102 172.16.1.0/24 accept-more-specifics
!
```

SSM

Example Border/Edge Node Configuration

```
! [Border] SSM template
ip access-list standard SSM_RANGE_<vrf name>
 permit <multicast subnet> <wildcard mask>
!
vrf definition <vrf name>
 rd 1:<L3 instance id>
 address-family ipv4
  route-target import 1:<L3 instance id>
  route-target export 1:<L3 instance id>
!
ip multicast-routing vrf <vrf name>
!
interface Loopback<L3 instance id>
 vrf forwarding <vrf name>
 ip address <Multicast Loopback ip> 255.255.255.255
 ip pim sparse-mode
!
interface LISP0.<L3 instance id>
 ip pim lisp transport multicast
 ip pim lisp core-group-range 232.0.0.1 1000
!
ip pim vrf <vrf name> register-source Loopback<L3 instance id>
ip pim vrf <vrf name> ssm range SSM_RANGE_<vrf name>
!
router bgp <BGP AS#>
 address-family ipv4 vrf <vrf name>
  bgp aggregate-timer 0
  aggregate-address <Multicast Subnet> <Subnet mask> summary-only
  redistribute lisp metric 10
  network <Multicast Loopback ip> mask 255.255.255.255
!
router lisp
 instance-id <L3 instance id>
  service ipv4
   eid-table vrf <vrf name>
   route-export site-registrations
   distance site-registrations 250
   map-cache site-registration
   database-mapping <Multicast Loopback ip>/32 locator-set <rloc>
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
 site site_uci
  authentication-key ******
  eid-record instance-id <L3 instance id> <Multicast Subnet>/<mask> accept-more-specifics
!
! [Edge] SSM template
ip access-list standard SSM_RANGE_<vrf name>
 permit <multicast subnet> <wildcard mask>
!
vrf definition <vrf name>
 address-family ipv4
  route-target import 1:<L3 instance id>
  route-target export 1:<L3 instance id>
!
ip multicast-routing vrf <vrf name>
!
interface Loopback<L3 instance id>
 vrf forwarding <vrf name>
 ip address <Multicast Loopback ip> 255.255.255.255
 ip pim sparse-mode
!
interface LISP0.<L3 instance id>
 ip pim lisp transport multicast
 ip pim lisp core-group-range 232.0.0.1 1000
!
ip pim vrf <vrf name> register-source Loopback<L3 instance id>
ip pim vrf <vrf name> ssm range SSM_RANGE_<vrf name>
!
router lisp
 instance-id <L3 instance id>
  service ipv4
   eid-table vrf <vrf name>
   map-cache 0.0.0.0/0 map-request
   database-mapping <Multicast Loopback ip>/32 locator-set <rloc>
   exit-service-ipv4
  remote-rloc-probe on-route-change
  exit-instance-id
```

Example Border/Edge Configuration

Silent Host

Certain IoT endpoints in airport networks exhibit unusual communication behaviour. Based on their communication characteristics, they fall into two categories:

- Endpoints that, once onboarded onto the network, do not send any further packets or frames. These endpoints are essentially hibernating and respond only to specific frames or packets addressed directly to them. They may be DHCP-capable or statically addressed.
- Endpoints that are cabled but have never registered with the Fabric Overlay because they use a static IP address.

Because of this behaviour, such devices are sometimes referred to as Silent Hosts.

For the first category, even though the endpoint is active, the Edge Node to which it is connected may have removed it from its IP Device Tracking (IPDT) host database. Because the endpoint is hibernating, the Edge Node receives no response to its periodic Internet Control Message Protocol (ICMP) probes, so the host is considered no longer present. Any other endpoint that previously communicated with the silent host will continue to send traffic to the silent host's MAC address.

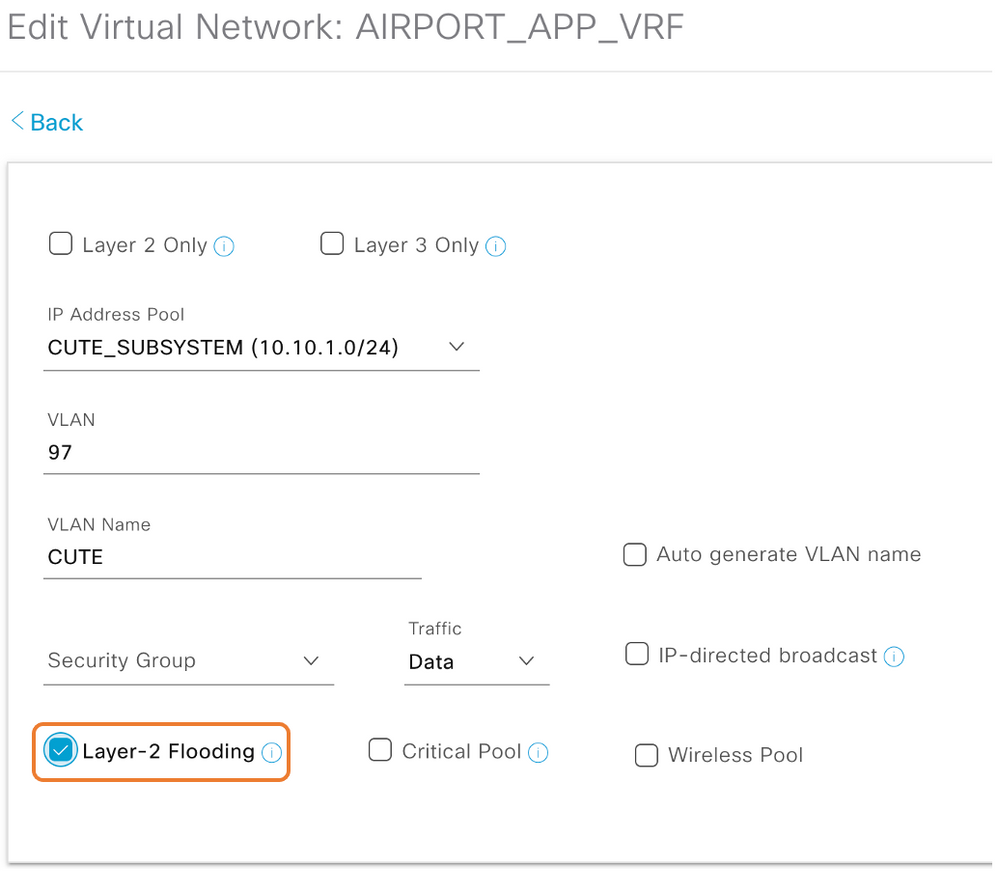

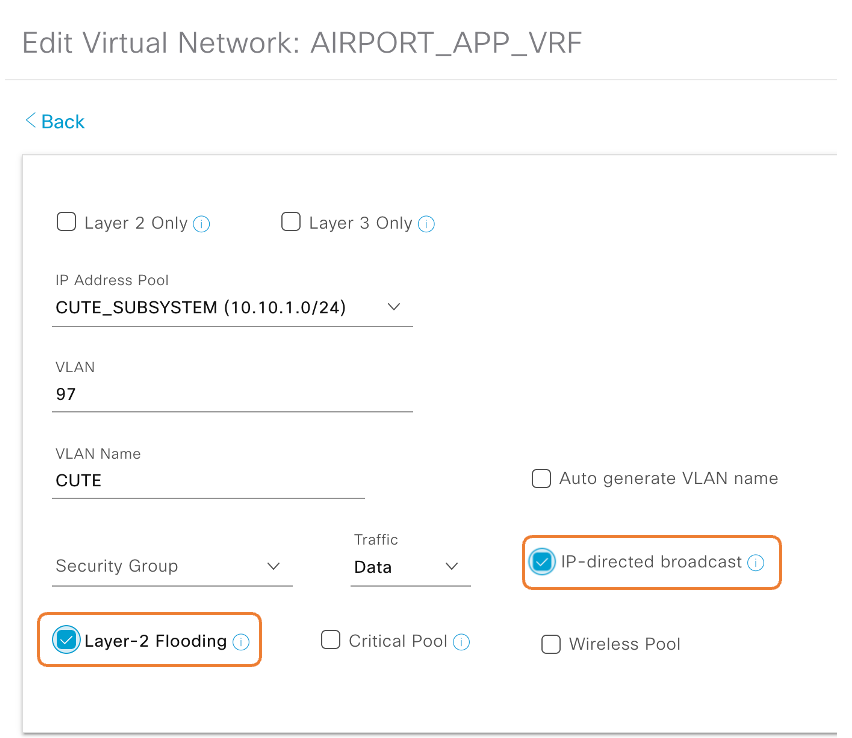

Cisco SD-Access handles unknown unicast frames by flooding them to every Edge Node within an IP address pool that has Layer 2 Flooding enabled in the fabric site. Although hibernating devices do not respond to ICMP, they do respond to specific traffic payloads that are relevant to them. As a result, the silent host responds and re-joins the fabric network when the flooded unknown unicast frames are relevant to it. Flooding to the endpoint also happens if the endpoint is part of a Layer 2 VNI, which requires the Layer 2 Flooding feature to be enabled.

Once the endpoint is registered in the Control Plane Node, any further communication to the endpoint can continue without concern.

Endpoints in the second category have never registered with the Control Plane Node. The only way the fabric can learn of such an endpoint is by configuring a manual IP Device Tracking (IPDT) entry on the Edge Node to which the silent host is connected. This static mapping permanently ties the host's IP and MAC addresses to the device-tracking database. Once configured, such hosts remain known to the Control Plane and continue to attract traffic. It is recommended to push the configuration below using a Cisco DNA Center template.

Example Edge Node Configuration

```
! [Edge] static binding for a silent host: VLAN 10, IP 192.168.1.10, MAC abc.abc.abc on Gi1/0/1
device-tracking binding vlan 10 192.168.1.10 interface gigabitEthernet 1/0/1 abc.abc.abc reachable-lifetime infinite
```
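The behaviour described for both categories of silent hosts can be summarized with a toy model: dynamic entries age out when the host stays quiet, an unknown destination is flooded only if Layer 2 flooding is enabled, and a static binding (like `reachable-lifetime infinite` above) never ages out. The Python sketch below is a simplification for illustration; the class, the lifetime value, and the drop-when-not-flooding behaviour are assumptions, not Cisco's device-tracking or LISP logic.

```python
from dataclasses import dataclass


@dataclass
class HostEntry:
    edge: str             # Fabric Edge the host is attached to
    last_seen: float      # time (seconds) the host was last heard from
    static: bool = False  # True for a manual binding with infinite lifetime


class HostTable:
    """Toy host-tracking table with ageing and optional Layer 2 flooding."""

    def __init__(self, lifetime, l2_flooding):
        self.lifetime = lifetime  # assumed ageing timer, in seconds
        self.l2_flooding = l2_flooding
        self.entries = {}         # MAC address -> HostEntry

    def learn(self, mac, edge, now, static=False):
        self.entries[mac] = HostEntry(edge, now, static)

    def age_out(self, now):
        # Drop dynamic entries not refreshed within the lifetime; keep static ones.
        self.entries = {
            mac: e for mac, e in self.entries.items()
            if e.static or now - e.last_seen <= self.lifetime
        }

    def forward(self, dst_mac, edges):
        """Return the Fabric Edges a frame for dst_mac is sent to."""
        entry = self.entries.get(dst_mac)
        if entry is not None:
            return [entry.edge]  # known host: unicast to its edge
        return list(edges) if self.l2_flooding else []  # unknown: flood or drop


table = HostTable(lifetime=300, l2_flooding=True)
table.learn("0000.0000.0001", "FE1", now=0)               # hibernating host
table.learn("0000.0000.0002", "FE2", now=0, static=True)  # static binding
table.age_out(now=1000)
print(table.forward("0000.0000.0001", ["FE1", "FE2", "FE3"]))  # ['FE1', 'FE2', 'FE3']
print(table.forward("0000.0000.0002", ["FE1", "FE2", "FE3"]))  # ['FE2']
```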

IP Directed Broadcast:

A Catalyst 9000 SD-Access Border switch can convert an IP-directed broadcast into an Ethernet broadcast and flood to all endpoints in the destination VLAN. This feature can be used for silent host challenge.

IP-directed broadcast requires the Layer 2 flooding feature to be enabled, which in turn requires multicast in the underlay.

IP-directed broadcasts are forwarded to wired hosts only, because broadcast packets are not forwarded between the Edge Node and the Fabric AP.
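An IP-directed broadcast is a packet addressed to the broadcast address of a remote subnet, which the Border then converts into an Ethernet broadcast in the destination VLAN. As a small worked example (the addresses are illustrative), Python's standard `ipaddress` module computes the address that a sender, such as a wake-up utility, would target.

```python
import ipaddress


def directed_broadcast(host_with_prefix):
    """Return the directed-broadcast address of the IPv4 subnet containing a host."""
    iface = ipaddress.ip_interface(host_with_prefix)
    return str(iface.network.broadcast_address)


if __name__ == "__main__":
    # A sender outside the VLAN targets this address; the Border floods it
    # as an Ethernet broadcast to the wired hosts in the destination VLAN.
    print(directed_broadcast("192.168.1.10/24"))  # 192.168.1.255
```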

Wireless for Tenants & Passengers

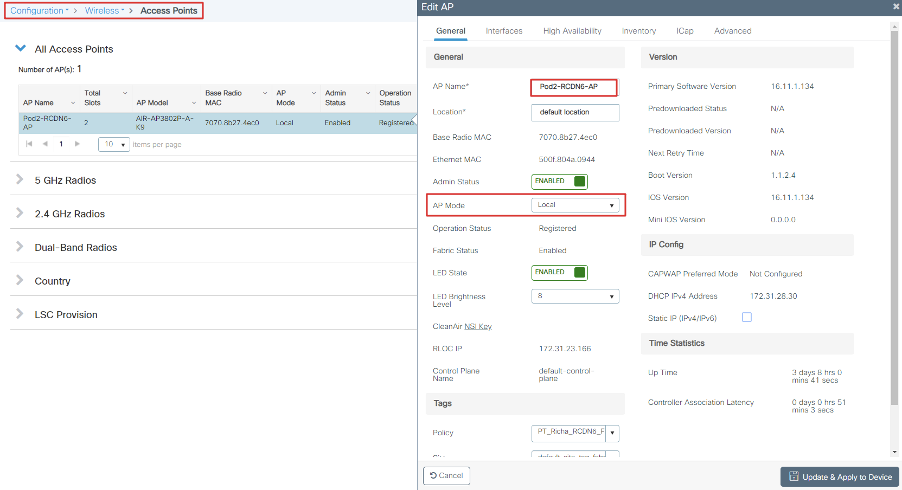

Process 1: Preparing for Wireless

An initial configuration must be applied to the Catalyst 9800 WLCs before discovery and provisioning can occur.

The Cisco Catalyst 9800 Wireless Controller runs Cisco IOS XE and boots like any other IOS XE router or switch, so the initial setup will be familiar to most network engineers.

After the initial setup, upgrade both WLCs to the same SD-Access-compatible software release. The SD-Access compatibility matrix is available at:

https://www.cisco.com/c/dam/en/us/td/docs/Website/enterprise/sda_compatibility_matrix/index.html
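Before discovery, it can help to confirm that the primary and secondary WLCs match. The hypothetical Python check below reports whether they run the same release and whether that release appears in the list you copied from the compatibility matrix. The release strings are placeholders; they are not taken from the matrix.

```python
def check_wlc_versions(inventory, compatible):
    """Return a list of problems; an empty list means the WLCs look ready.

    inventory  : WLC name -> installed IOS XE release string
    compatible : releases listed for your DNAC version in the SD-Access matrix
    """
    problems = []
    releases = set(inventory.values())
    if len(releases) > 1:
        problems.append(f"WLCs run different releases: {sorted(releases)}")
    for name, release in sorted(inventory.items()):
        if release not in compatible:
            problems.append(f"{name} runs {release}, which is not in the compatible list")
    return problems


if __name__ == "__main__":
    # Placeholder release strings for illustration only.
    wlcs = {"WLC-Primary": "17.x.a", "WLC-Secondary": "17.x.b"}
    for problem in check_wlc_versions(wlcs, {"17.x.a"}):
        print(problem)
```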

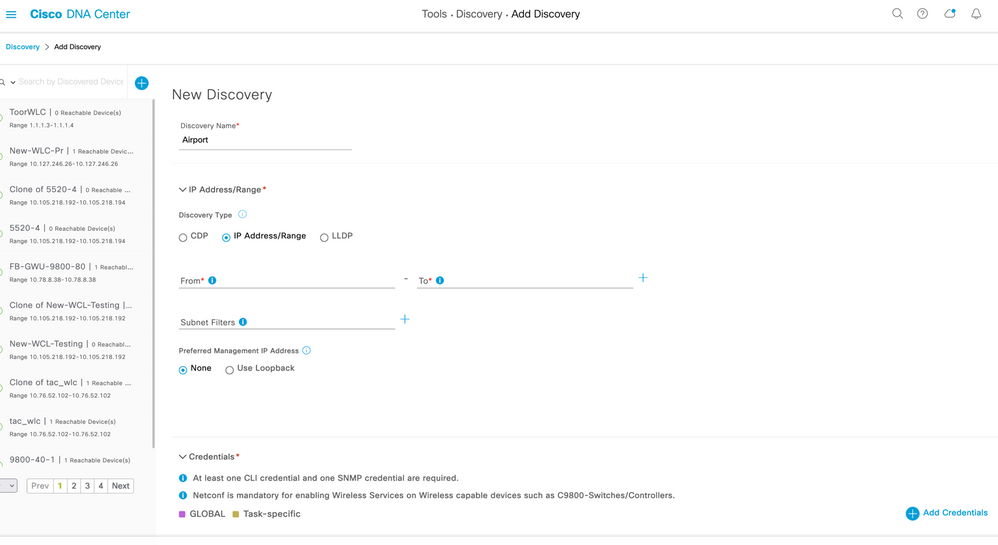

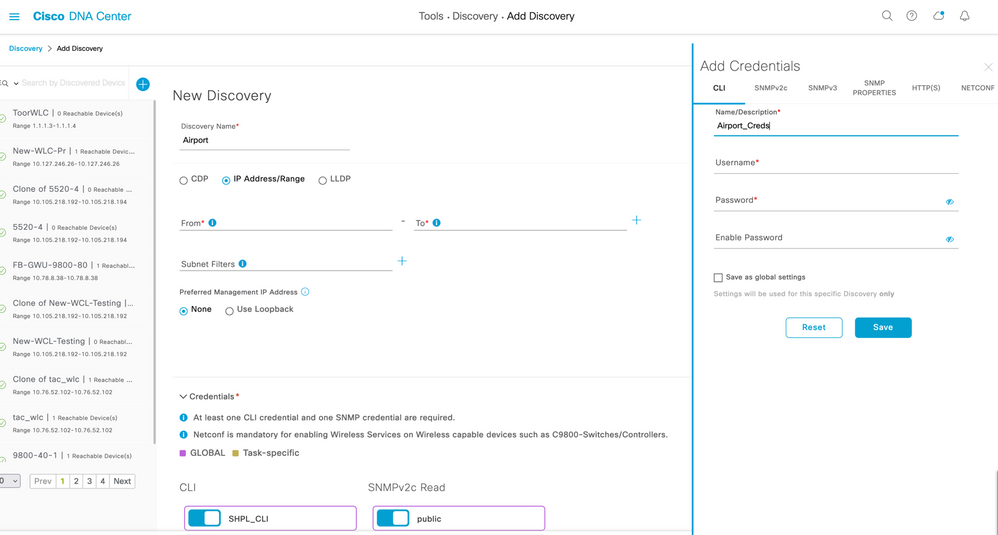

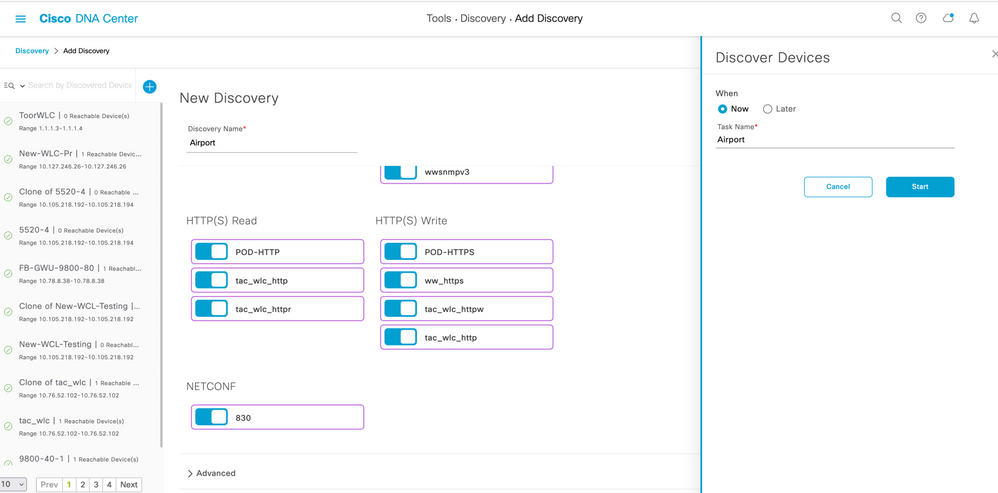

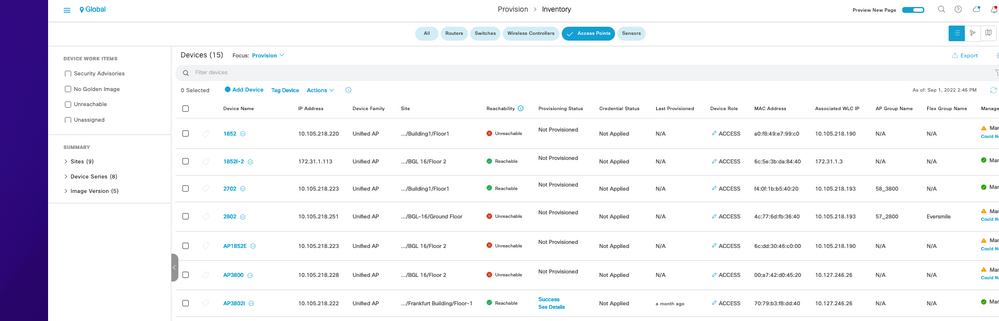

Procedure 1. Discover and Manage Wireless LAN Controllers

The WLCs must be discovered by Cisco DNA Center (DNAC) before they can be fabric-enabled and configured from DNAC. Devices can be discovered using an IP address range, CDP, or LLDP.

This procedure shows how to discover devices using an IP address range. Make sure the range includes the IP addresses of both the primary and the secondary WLC.

Table 1 Discovery

| Discovery Name | Discovery Type | From IP | To IP | CLI User Name | SNMPv2 Read Community | SNMPv2 Write Community | SNMPv3 Username | NETCONF Port |
|---|---|---|---|---|---|---|---|---|
| WLC | IP Address/Range | x.x.x.x | y.y.y.y | dnac | Read Community | Write Community | | 830 |

- Log in to DNAC, click the tiles icon in the upper-right corner of the screen, and choose Tools > Discovery.
- On the next page, click + to create a new discovery.
- Fill in the details as shown in the figures below.
- Click + to add the WLC-specific credentials, and save the configuration for each parameter.
- When all the details are filled in, start the discovery.

Step 1. From the DNA Center home page, click Tools > Discovery.

Figure Add Discovery

Step 2. In the Discovery Name field, enter a name.

Step 3. Expand the IP Address/Ranges area, if it is not already visible, and configure the following fields:

- For Discovery Type, click Range.
- In the IP Ranges field, enter the beginning and ending IP addresses of the range for DNA Center to scan, and add the range.
- You can enter a single IP address range or multiple IP address ranges for the discovery scan.

Note: Use the management IP address for discovery. Cisco wireless controllers must be discovered using the management IP address rather than the service port IP address; otherwise, the Wireless Controller 360 and AP 360 pages will not display any data.
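Before starting the job, a quick offline check can confirm that the range actually includes both WLC management addresses. The Python sketch below uses placeholder addresses in place of x.x.x.x and y.y.y.y and hypothetical management IPs. It only validates the numbers and does not interact with DNAC.

```python
import ipaddress


def check_discovery_range(start, end, required):
    """Validate a discovery range and report required IPs it does not cover.

    Returns (number_of_addresses_scanned, list_of_missing_addresses).
    All addresses are assumed to be the same IP version.
    """
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    # Check the version first: comparing IPv4 and IPv6 addresses raises TypeError.
    if first.version != last.version or first > last:
        raise ValueError("start and end must share an IP version and start <= end")
    size = int(last) - int(first) + 1
    missing = [ip for ip in required if not first <= ipaddress.ip_address(ip) <= last]
    return size, missing


if __name__ == "__main__":
    # Placeholder range and hypothetical WLC management IPs.
    print(check_discovery_range("10.10.10.1", "10.10.10.20", ["10.10.10.5", "10.10.10.6"]))
```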

Step 4. Expand the Credentials area and configure the credentials to use for the Discovery job: CLI, SNMPv2/SNMPv3, and NETCONF (port 830). After saving each credential as Global, expand Credentials and select the CLI, SNMP, and NETCONF credentials you just created.

Figure 1 Discovery Credentials

Step 5. NETCONF is mandatory for enabling wireless services on wireless-capable devices such as Catalyst 9800 wireless controllers. The recommended port is 830.
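A plain TCP connect test is one way to confirm that port 830 is open before you run discovery. The Python sketch below is a generic check that you run from a management host. It rests on two assumptions: it does not prove reachability from DNAC itself, and it does not validate the NETCONF credentials.

```python
import socket


def tcp_port_open(host, port=830, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable networks, and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical WLC management address; replace with your own.
    print(tcp_port_open("192.0.2.10", 830))
```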

- Run Discovery

Step 6. Select the protocol and click Start.

The Discovery window displays the results of your scan.

Figure 2 Run Discovery

Once discovery is complete, go to Inventory and verify that both WLCs are present.

Procedure 2. Configure Fabric Wireless SSIDs

- Configure Corporate SSID

Global wireless network settings include settings for Service Set Identifier (SSID), wireless interfaces, wireless radio frequency (RF), and sensors.

Perform the following steps to configure the SSIDs for an enterprise wireless network.

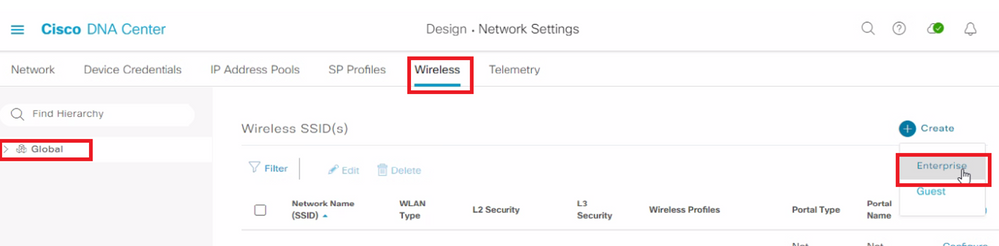

Step 1: Navigate to Design → Network Settings → Wireless.

Step 2: Click +Add and choose Enterprise.

Figure 3 Add SSID

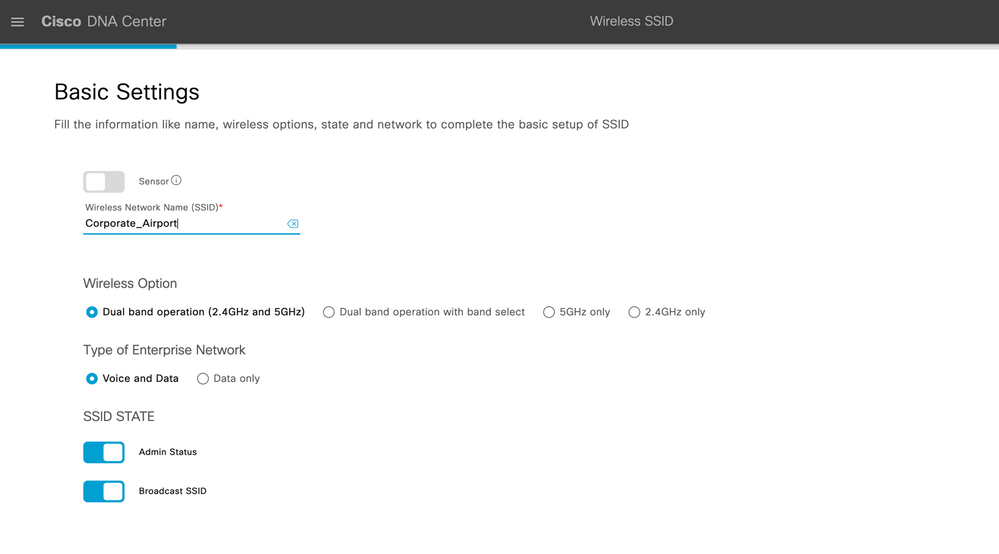

Step 3: In the Wireless Network Name (SSID) text box, enter a unique name “Corporate_Airport” for the wireless network.

Step 4: Under Type of Enterprise Network, click the Voice and Data radio button. This selection defines the quality of service provisioned on the wireless network.

Step 5: Configure wireless band preferences by selecting one of the Wireless Options:

- Dual band operation (2.4 GHz and 5 GHz): The WLAN is created for both 2.4 GHz and 5 GHz. Band select is disabled by default.
- Dual band operation with band select: The WLAN is created for 2.4 GHz and 5 GHz, and band select is enabled.
- 5 GHz only: The WLAN is created for 5 GHz, and band select is disabled.
- 2.4 GHz only: The WLAN is created for 2.4 GHz, and band select is disabled.

Step 6: Check the Fast Lane check box to enable fast lane capabilities on the network.

Step 7: Set the Admin Status button to On to enable the SSID.

Step 8: Set the Broadcast SSID button to Off if you do not want the SSID to be visible to wireless clients in range.

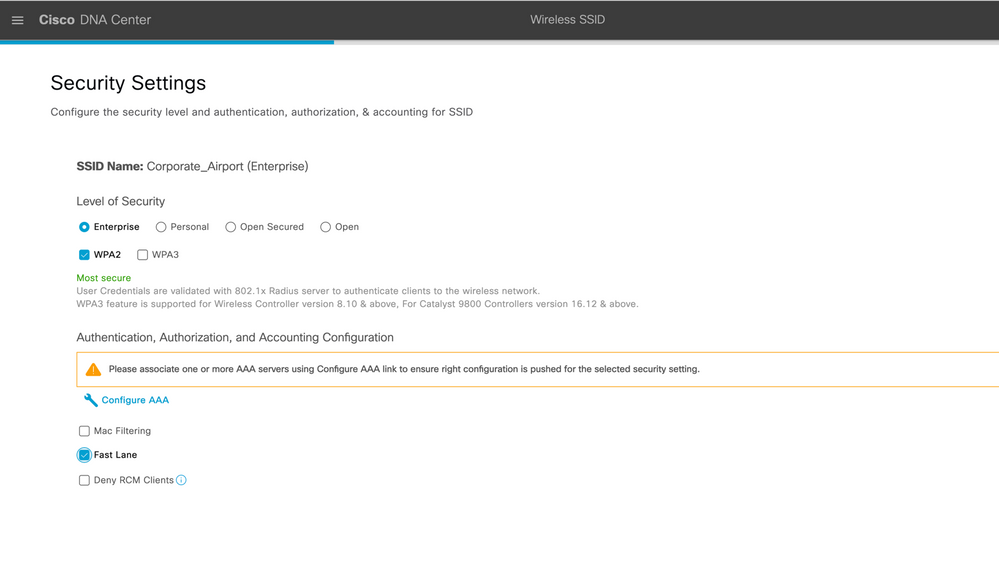

Step 9: Under Level of Security, set the encryption and authentication type for the network. The security options selected are:

- WPA2 Enterprise: uses 802.1X with the Extensible Authentication Protocol (EAP) to authenticate and authorize network users against a RADIUS server, providing a higher level of security.

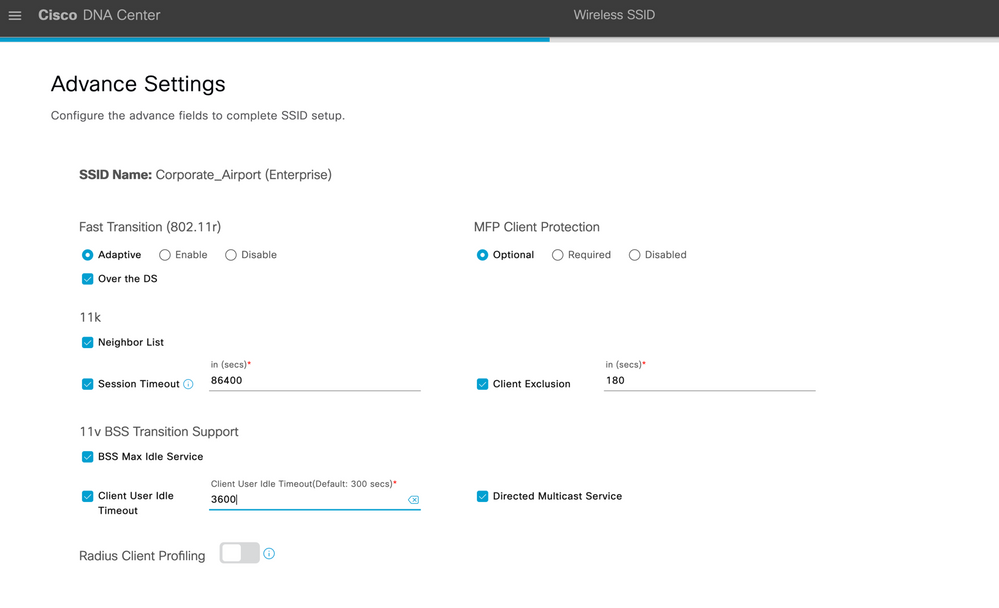

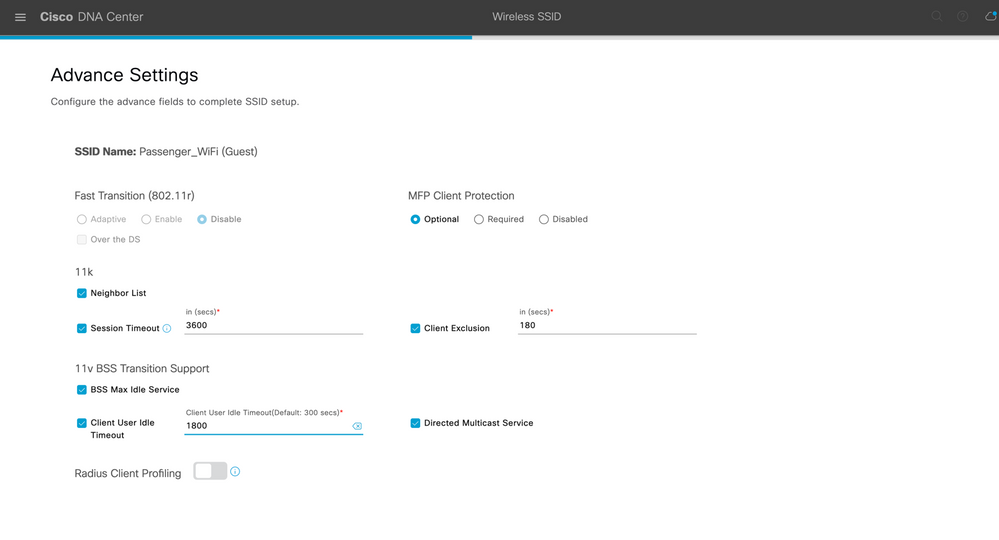

Step 10: Configure the advanced settings using the values listed in Table 2.

Figure 4 Advanced Settings

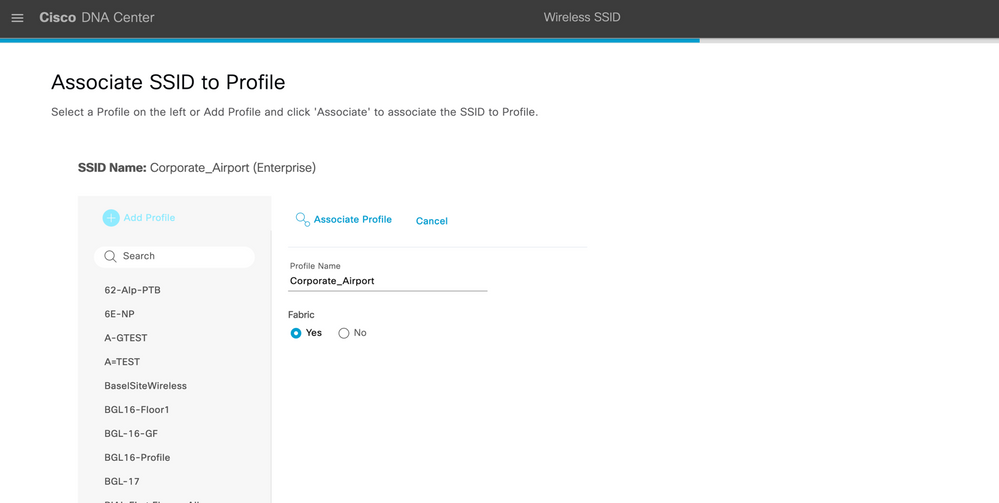

Step 11: Click Next. In the Wireless Profiles window, click +Add to create a new wireless profile.

Step 12: Click Add Profile and enter a new profile name.

Step 13: Click Associate Profile, click Next, and then click Save.

Table 2 SSID Parameters

| Features | Settings |
|---|---|
| Wireless Network Name (SSID) | Corporate_Airport |
| Admin Status | Enabled |
| Broadcast SSID | Enabled |
| Wireless Option | 5 GHz only |
| Level of Security | Encryption: WPA/WPA2-AES; Authentication: 802.1X-FT |
| MAC Filtering | Unchecked |
| Authentication Servers | x.x.x.x |
| Type of Enterprise Network | Voice and Data |
| WMM Policy | Enabled |
| AAA Override | Checked |
| Fastlane | Checked |
| Fast Transition (802.11r) | Enabled |
| Over the DS | Unchecked |
| Peer-to-Peer Blocking | Disabled |
| Coverage Hole Detection | Checked |
| Session Timeout (seconds) | 84600 |
| Client Exclusion | 0 |
| MFP Client Protection | Optional |
| 802.11k Neighbor List | Checked |
| 802.11v BSS Transition Support | BSS Max Idle Service: Checked; Directed Multicast Service: Checked |
| Client Idle User Timeout | Checked, 3600 seconds |
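The timeouts in the SSID tables are expressed in seconds, and converting them is a quick sanity check. By simple arithmetic, the 84600-second session timeout in Table 2 is 23 hours 30 minutes, whereas a full day is 86400 seconds. Confirm which value you intend before deploying. The small Python helper below performs the conversion.

```python
def hours_minutes(seconds):
    """Convert a timeout in seconds to (hours, minutes)."""
    hours, remainder = divmod(seconds, 3600)
    return hours, remainder // 60


if __name__ == "__main__":
    for label, value in [("Session timeout, Table 2", 84600),
                         ("Client idle user timeout", 3600),
                         ("One full day", 86400)]:
        h, m = hours_minutes(value)
        print(f"{label}: {value} s = {h} h {m} min")
```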

- Configure Passenger SSID

Step 1: Navigate to Design → Network Settings → Wireless.

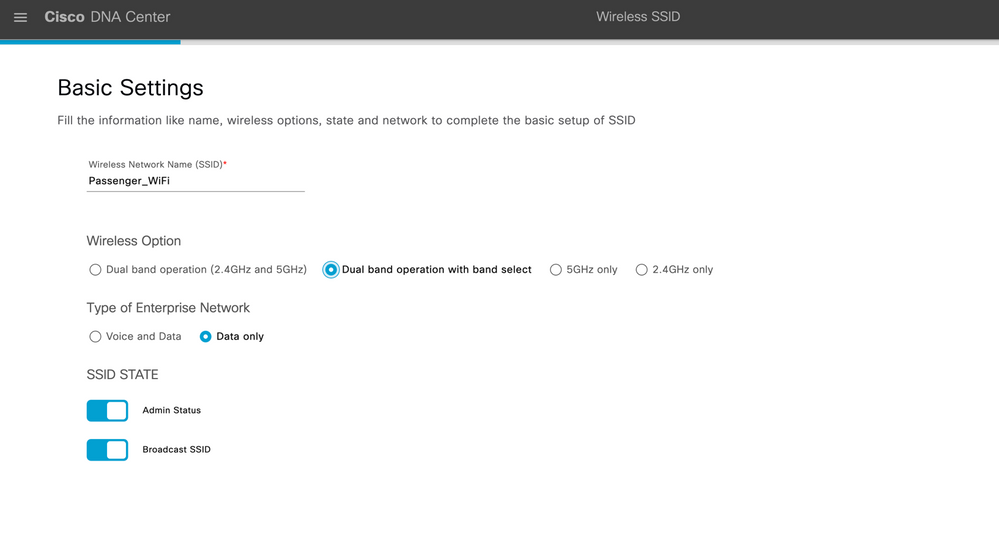

Figure 5 Wireless Settings

Step 2: Under Guest Wireless, click +Add and choose Guest to create a new SSID.

Step 3: In the Wireless Network Name (SSID) text box, enter a unique name for the guest SSID that you are creating.

Figure 6 SSID CREATION

Step 4: Under SSID STATE, configure the following:

-

Click the Admin Status button off, to disable the admin status.

-

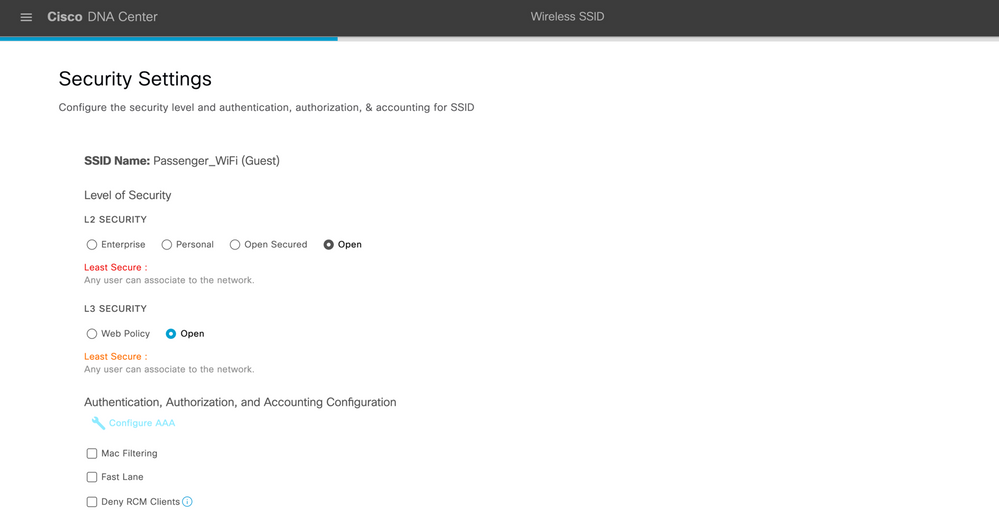

Click the BROADCAST SSID button off, if you do not want the SSID to be visible to all wireless clients within the range.

Step 5: Under Level of Security, select the encryption and authentication type for this guest network: Web Policy and Open. The Open policy type provides no security; it allows any device to connect to the wireless network without authentication.

Step 6: Click Next. In the Wireless Profiles window, click +Add to create a new wireless profile.

Step 7: Click Add Profile and enter a new profile name.

Step 8: Click Associate Profile, click Next, and then click Save.

Note: No authentication is configured on the passenger SSID because authentication is generally handled by the ISP, with clients verified through a one-time password (OTP) sent to their mobile phones. Traffic from this VLAN is passed directly to the ISP router or firewall. Rate limiting can be applied on this SSID if the customer requires it.
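On a WLC, rate limiting is typically applied through QoS policies. Conceptually, a per-client limit behaves like a token bucket: each packet spends tokens, and tokens refill at the configured rate up to a burst size. The Python sketch below is a generic token-bucket model for illustration only. The rate and burst values are hypothetical, and the model does not describe how the Catalyst 9800 implements its policers internally.

```python
class TokenBucket:
    """Generic token-bucket rate limiter (illustrative model)."""

    def __init__(self, rate_bps, burst_bits):
        self.rate = rate_bps          # refill rate in bits per second
        self.capacity = burst_bits    # maximum burst size in bits
        self.tokens = burst_bits      # start with a full bucket
        self.last = 0.0               # timestamp of the previous packet

    def allow(self, size_bits, now):
        """Return True if a packet of size_bits may be sent at time now (seconds)."""
        # Refill for the time elapsed since the last packet, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size_bits:
            self.tokens -= size_bits
            return True
        return False  # packet exceeds the contract and would be dropped


if __name__ == "__main__":
    # Hypothetical 1 Mbps per-client limit with a 12,000-bit burst.
    bucket = TokenBucket(rate_bps=1_000_000, burst_bits=12_000)
    print(bucket.allow(12_000, now=0.0), bucket.allow(12_000, now=0.001))
```

Timestamps are passed in explicitly to keep the model deterministic; a live implementation would read a monotonic clock such as `time.monotonic()`.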

Table 3 SSID Parameters

| Features | Settings |
|---|---|
| Wireless Network Name (SSID) | Passenger_WiFi |
| Admin Status | Enabled |
| Broadcast SSID | Enabled |
| Wireless Option | 2.4 GHz and 5 GHz |
| Level of Security | None |
| MAC Filtering | Unchecked |
| Authentication Servers | x.x.x.x |
| Type of Enterprise Network | Voice and Data |
| WMM Policy | Enabled |
| AAA Override | Checked |
| Fastlane | Checked |
| Fast Transition (802.11r) | Enabled |
| Over the DS | Unchecked |
| Peer-to-Peer Blocking | Disabled |
| Coverage Hole Detection | Checked |
| Session Timeout (seconds) | 3600 |
| Client Exclusion | 0 |
| MFP Client Protection | Optional |
| 802.11k Neighbor List | Checked |
| 802.11v BSS Transition Support | BSS Max Idle Service: Checked; Directed Multicast Service: Checked |
| Client Idle User Timeout | Checked, 3600 seconds |

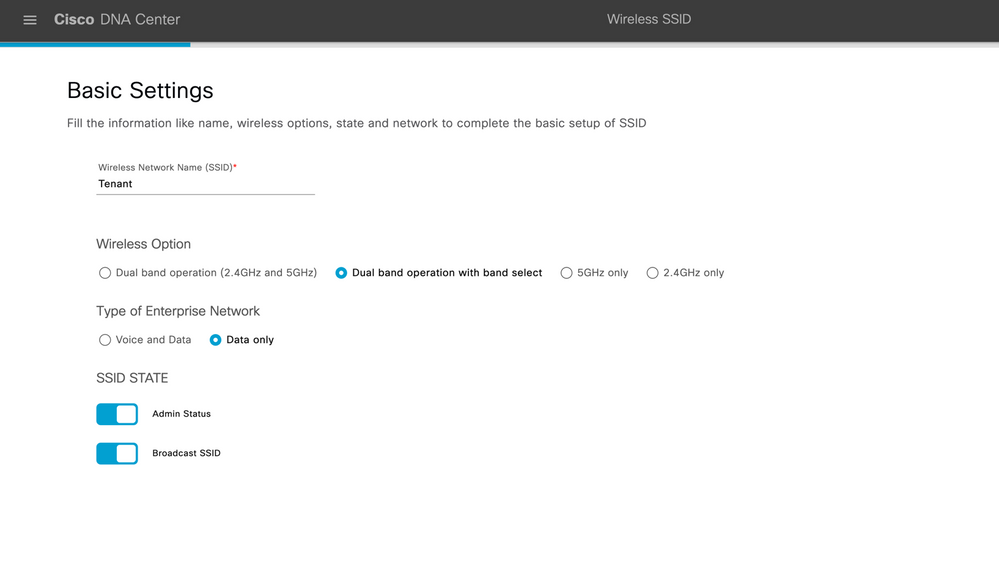

- Configure Tenant/BRS SSID

Step 1: Navigate to Design → Network Settings → Wireless.

Figure 7 Wireless Settings

Step 2: Click +Add and choose Enterprise to create a new SSID.

Step 3: In the Wireless Network Name (SSID) text box, enter a unique name for the enterprise SSID that you are creating.

Step 4: Under SSID STATE, configure the following:

- Set the Admin Status button to On to enable the SSID, as listed in the table below.

- Set the Broadcast SSID button to Off if you do not want the SSID to be visible to wireless clients in range.

Step 5: Under Level of Security, set L2 Security to Personal and enter a pre-shared key (PSK). L3 Security can be set to Open.

Note: Identity PSK (iPSK) can be used when a common SSID is shared across multiple tenants. In that case, the client MAC addresses of each tenant must be present in the AAA server database, so that the server can return the PSK assigned to that tenant.
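The iPSK lookup can be pictured as a MAC-keyed table on the AAA server. When a client associates, the server looks up the client's MAC address and returns the PSK of the tenant that owns it. An unknown MAC address is rejected. The Python sketch below models only that lookup. The tenant names, keys, and data layout are hypothetical, and it does not reproduce any particular AAA product's configuration or RADIUS attributes.

```python
import re


def normalize_mac(mac):
    """Reduce common MAC notations (aa:bb:..., aabb.ccdd.eeff) to 12 lowercase hex digits."""
    digits = re.sub(r"[^0-9a-fA-F]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return digits


def ipsk_lookup(mac, mac_to_tenant, tenant_psk):
    """Return (tenant, psk) for a known client, or None if the MAC is unknown."""
    tenant = mac_to_tenant.get(normalize_mac(mac))
    if tenant is None:
        return None  # unknown MAC: the AAA server would reject the client
    return tenant, tenant_psk[tenant]


# Hypothetical AAA data: client MAC -> tenant, tenant -> PSK.
mac_to_tenant = {"aabbccddeeff": "DutyFree", "001122334455": "Airline_Lounge"}
tenant_psk = {"DutyFree": "psk-placeholder-1", "Airline_Lounge": "psk-placeholder-2"}
```

Because each tenant has its own key, one tenant's devices cannot join with another tenant's PSK, even though all of them see the same SSID name.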

Step 6: Associate the SSID with a wireless profile, as in the previous procedures.

| Features | Settings |
|---|---|
| Wireless Network Name (SSID) | Tenants |
| Admin Status | Enabled |
| Broadcast SSID | Enabled |
| Wireless Option | 2.4 GHz and 5 GHz |
| Level of Security | WPA2/WPA3 Personal (PSK) |
| MAC Filtering | Unchecked |
| Authentication Servers | x.x.x.x |
| Type of Enterprise Network | Voice and Data |
| WMM Policy | Enabled |
| AAA Override | Checked |
| Fastlane | Checked |
| Fast Transition (802.11r) | Enabled |
| Over the DS | Unchecked |
| Peer-to-Peer Blocking | Disabled |
| Coverage Hole Detection | Checked |
| Session Timeout (seconds) | 3600 |
| Client Exclusion | 0 |
| MFP Client Protection | Optional |
| 802.11k Neighbor List | Checked |
| 802.11v BSS Transition Support | BSS Max Idle Service: Checked; Directed Multicast Service: Checked |
| Client Idle User Timeout | Checked, 3600 seconds |

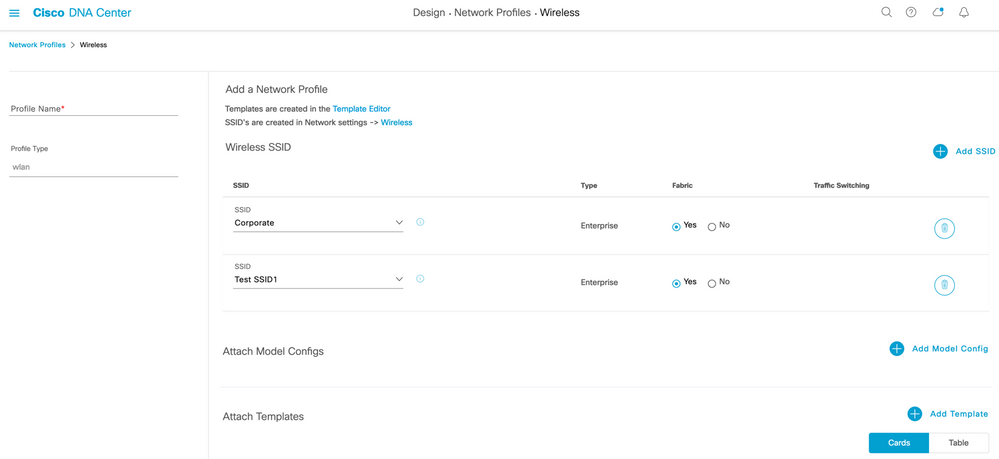

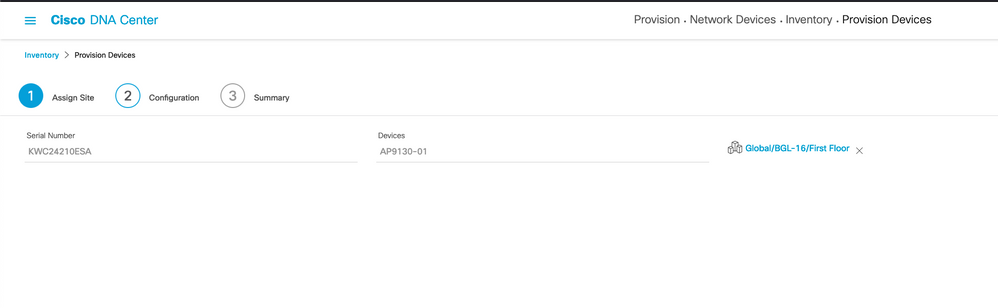

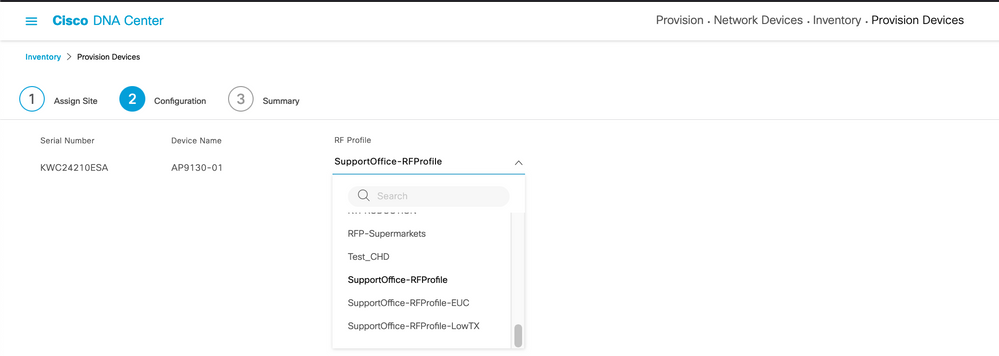

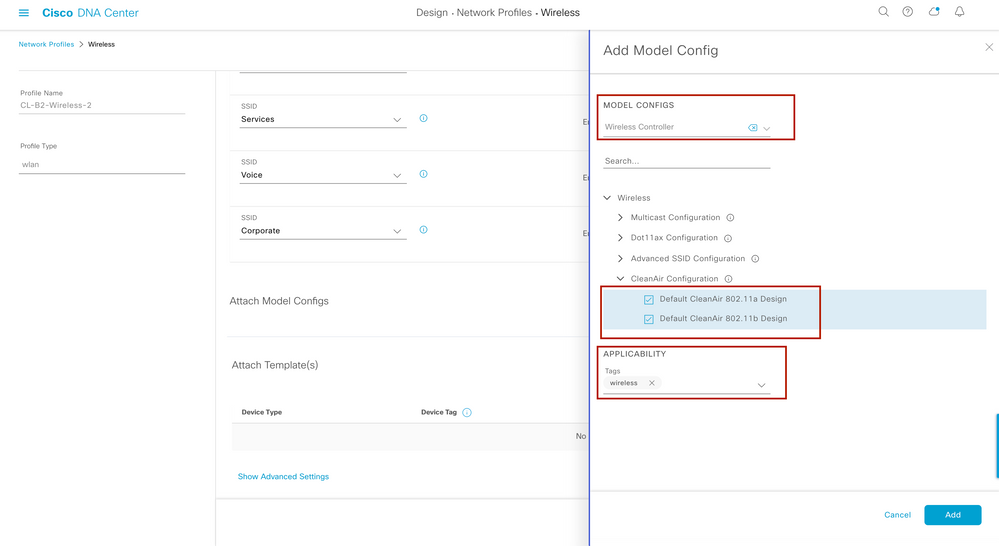

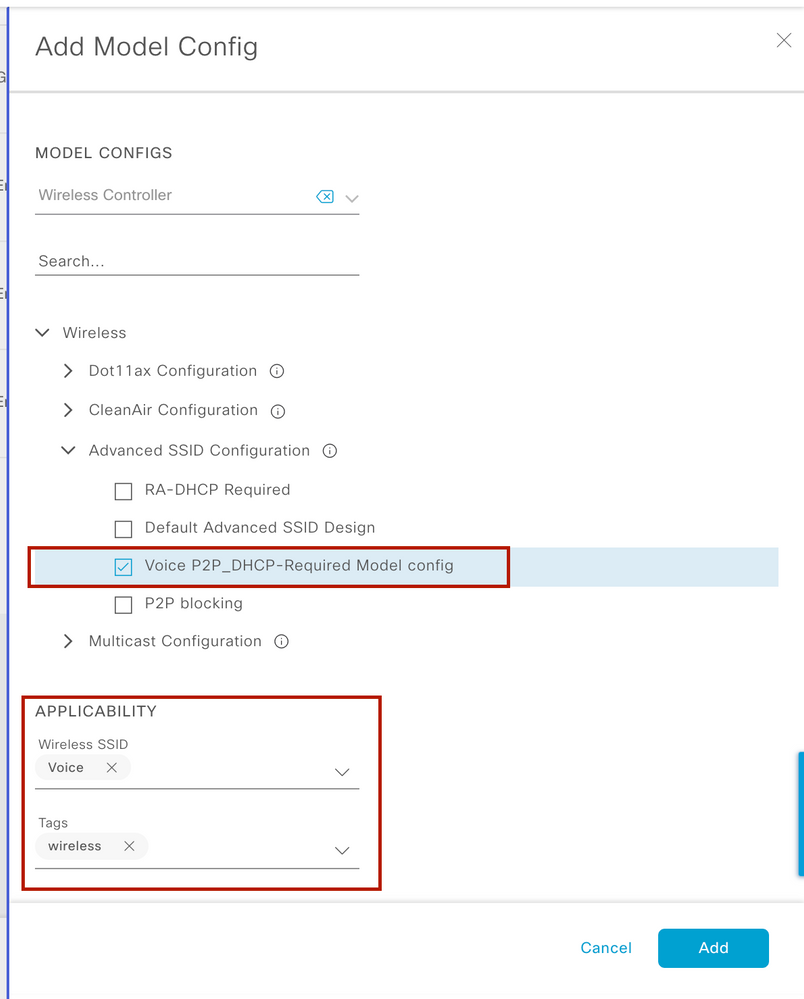

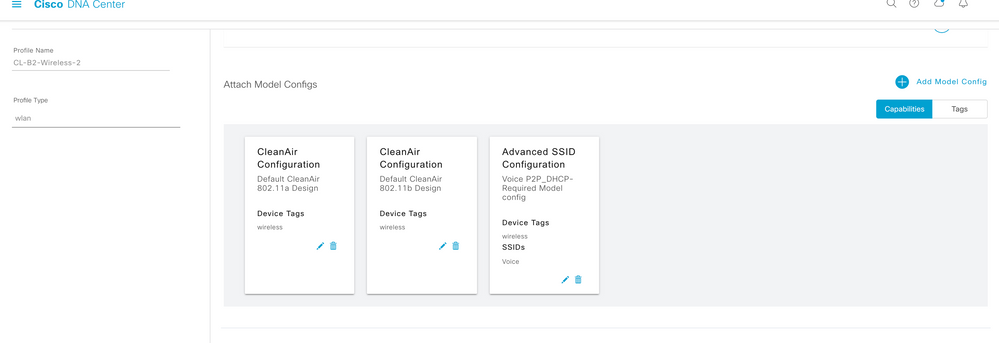

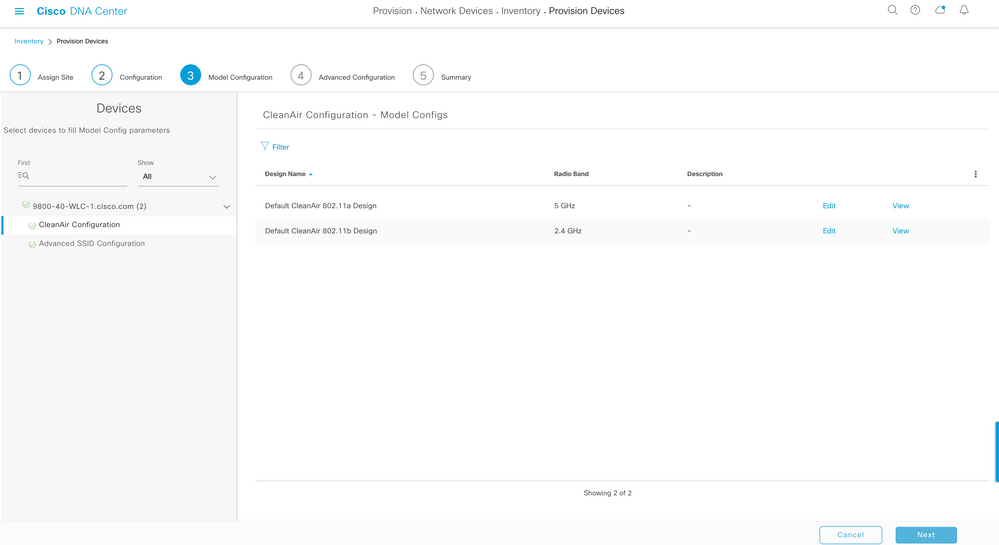

Procedure 3. Create Network Profile

The following procedure describes how to configure a network profile for the wireless network. SSIDs are mapped to the network profile at a later stage, after all the necessary SSIDs have been created.

In the Cisco DNA Center GUI, click the menu icon and choose Design > Network Profiles > Wireless. Add a new profile named "Terminal" and click Save at the bottom right.

Figure 8 shows the final state after all the SSIDs have been created.

Figure 8 Network Profile

The network profile created above can be used for a separate set of SSIDs that may be needed in different sections of the airport.
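The relationship between profiles, SSIDs, and airport areas can be pictured as a simple lookup. A site receives the SSIDs of every profile assigned to that site or to one of its parent sites. The Python sketch below models this mapping under two assumptions: profiles assigned to a building are inherited by its floors, and the site paths and the second profile ("Cargo") are hypothetical names.

```python
def ssids_for_site(site_path, profiles):
    """Collect the SSIDs of every profile assigned to the site or one of its ancestors."""
    result = set()
    for profile in profiles.values():
        for assigned in profile["sites"]:
            # Match the site itself or any child site below it in the hierarchy.
            if site_path == assigned or site_path.startswith(assigned + "/"):
                result.update(profile["ssids"])
    return sorted(result)


# Hypothetical profile assignments.
profiles = {
    "Terminal": {"ssids": ["Corporate_Airport", "Passenger_WiFi", "Tenants"],
                 "sites": ["Global/Airport/Terminal1"]},
    "Cargo": {"ssids": ["Corporate_Airport"],
              "sites": ["Global/Airport/Cargo"]},
}
```

The trailing "/" in the prefix test keeps a profile assigned to "Terminal1" from leaking into a sibling such as "Terminal10".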

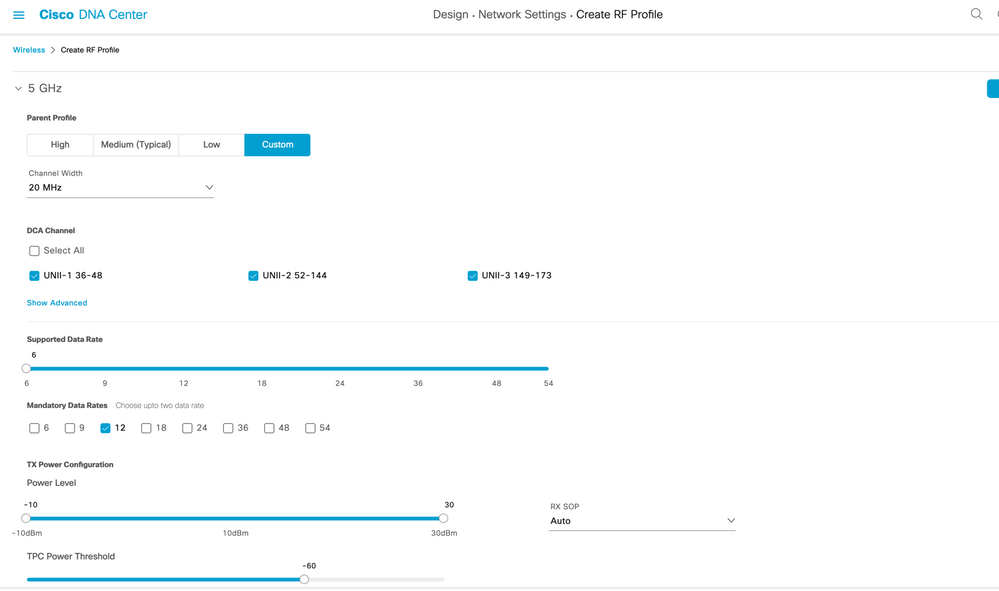

Procedure 4. Create RF Profile

Step 1: Navigate to Design → Network Settings → Wireless.

Step 2: Choose Wireless Radio Frequency Profile → Add

Step 3: In the Profile Name text box, enter a unique name <Terminal_Outdoor_RFProfile>.

Step 4: Under 2.4GHz choose Parent Profile → Custom.

Step 5: Select DCA channels 1, 6, and 11.

Step 6: Set Supported Data Rate → Mandatory Data Rates → <Value>.

Step 7: Set Power Level → Min <Value>dBm and Max <Value>dBm.

Step 8: Set TPC Power Threshold → <Value>dBm.

Step 9: Choose Auto from the RX-SOP drop-down list.

Step 10: Under 5GHz choose Parent Profile → Custom.

Step 11: Select a channel width of <Value> MHz from the drop-down list.

Step 12: Select the DCA channels as <5 GHz channels allowed in that country>.

Step 13: Set Supported Data Rate → Mandatory Data Rates → <Value>.

Step 14: Set Power Level → Min <Value>dBm and Max <Value>dBm.

Step 15: Set TPC Power Threshold → <Value>dBm.

Step 16: Choose Auto from the RX-SOP drop-down list.
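Channels 1, 6, and 11 are chosen for 2.4 GHz because they do not overlap. For channels 1 to 13, the centre frequency is 2407 + 5 × channel MHz, so 1, 6, and 11 sit 25 MHz apart, which is wider than a 20 MHz (or 22 MHz DSSS) channel. The Python sketch below verifies this arithmetic for any candidate channel plan.

```python
def center_mhz_24(channel):
    """Centre frequency in MHz of a 2.4 GHz channel (valid for channels 1-13)."""
    return 2407 + 5 * channel


def non_overlapping(channels, width_mhz=20):
    """True if every pair of channels is at least width_mhz apart."""
    centres = sorted(center_mhz_24(c) for c in channels)
    # After sorting, checking adjacent pairs is enough.
    return all(b - a >= width_mhz for a, b in zip(centres, centres[1:]))


if __name__ == "__main__":
    print([center_mhz_24(c) for c in (1, 6, 11)])  # [2412, 2437, 2462]
    print(non_overlapping([1, 6, 11]), non_overlapping([1, 4, 8]))  # True False
```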

Refer to Table 4 for the values used to configure the remaining RF profiles.

Table 4 RF Profile Parameters

| 5 GHz Band | Corporate | Terminal_Indoor | Terminal_Outdoor |

| DCA Channels | 36,40,44,48,52,56,60,64,100, 104,108,112,116,120,124,149,153,157,161. |

36,40,44,48,52,56,60,64,100, 104,108,112,116,120,124,149,153,157,161. |

36,40,44,48,52,56,60,64,100, 104,108,112,116,120,124,149,153,157,161. |

| Supported Rates (mbps) | 24, 36, 48, 54 | 12,18, 24, 36, 48, 54 | 12, 18, 24, 36, 48, 54 |

| Mandatory Rates (mbps) | 24 | 12 | 12,24 |

| TPC Power - Min, Max (dBm) | 5, 17 | 7, 14 | 5, 11 |

| TPC Power Threshold | -60 | -67 | -60 |

| Rx-SOP | Low | Medium | Medium |

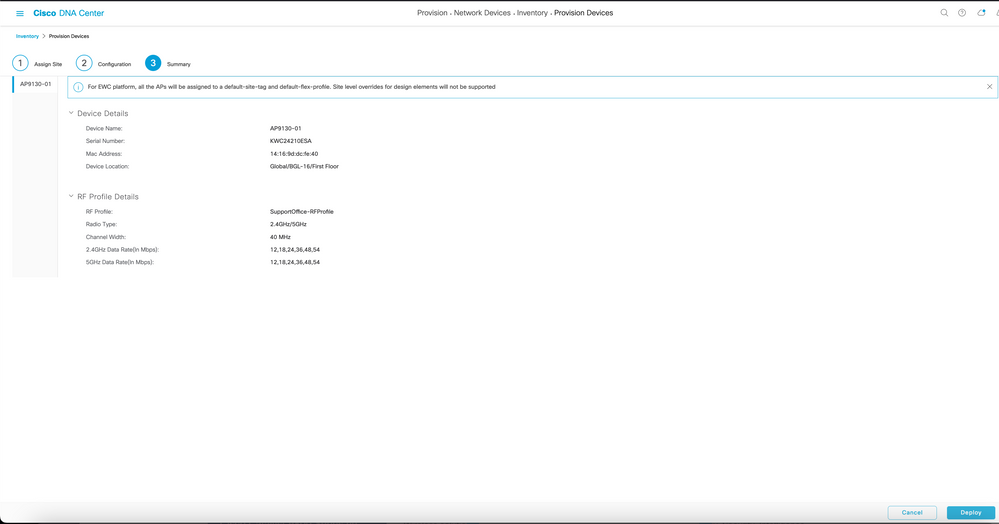

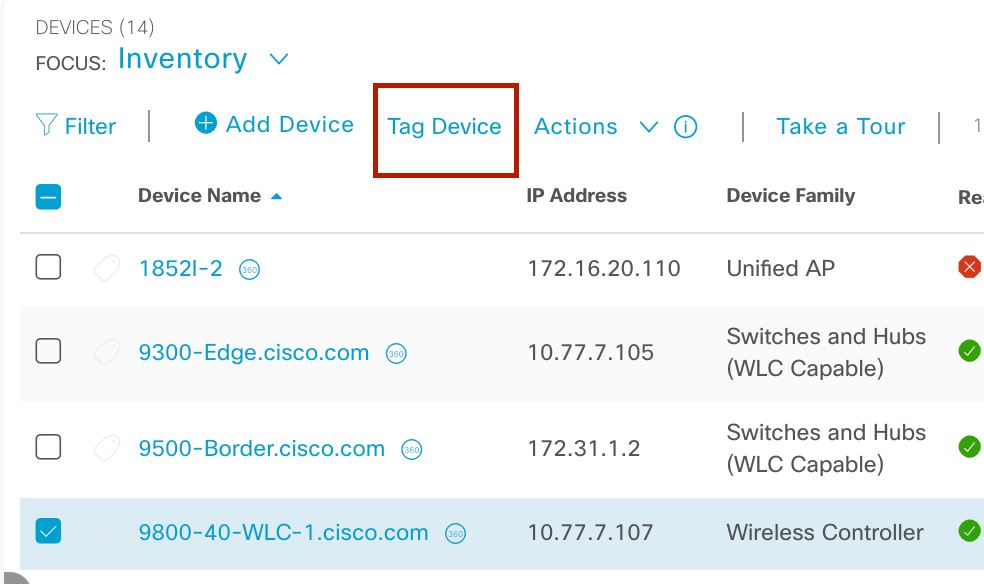

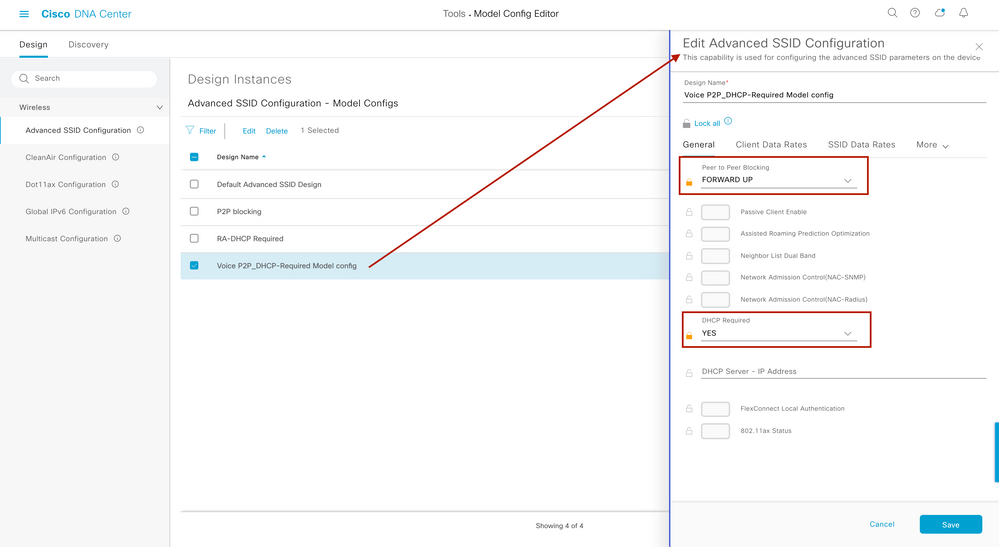

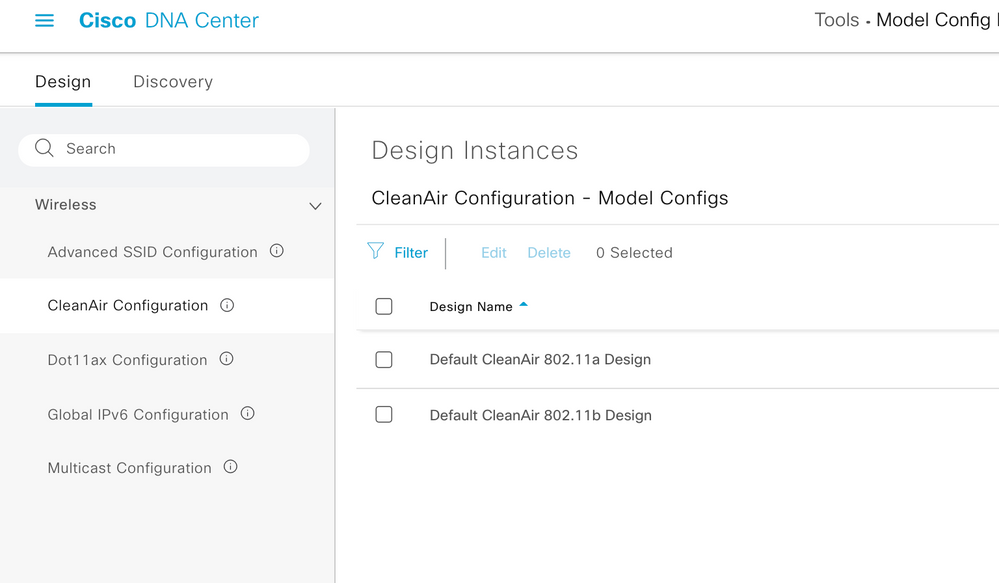

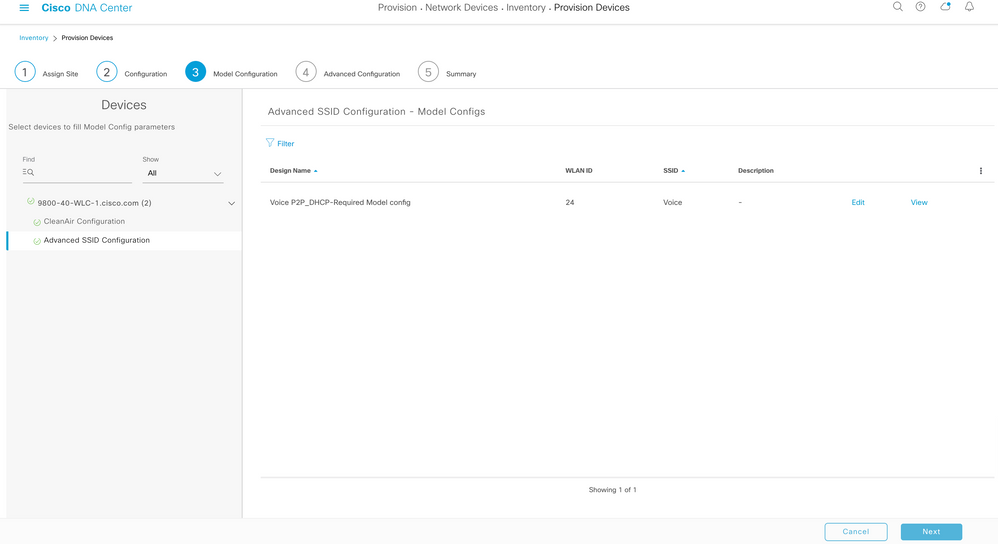

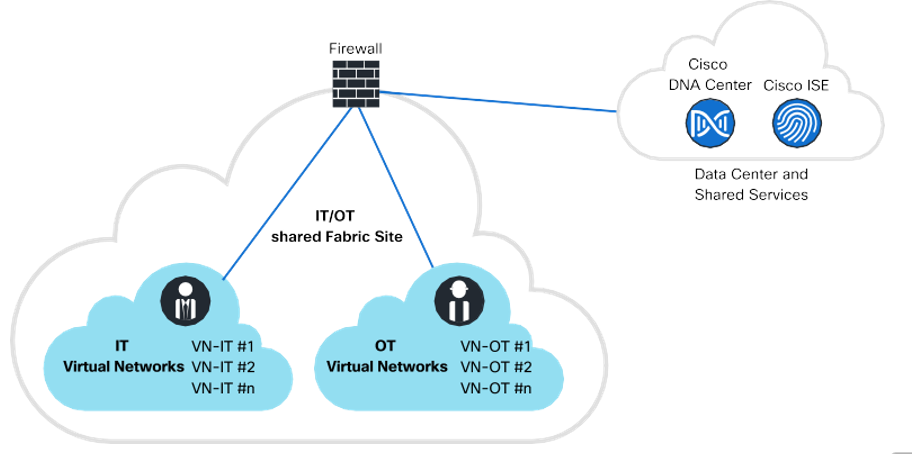

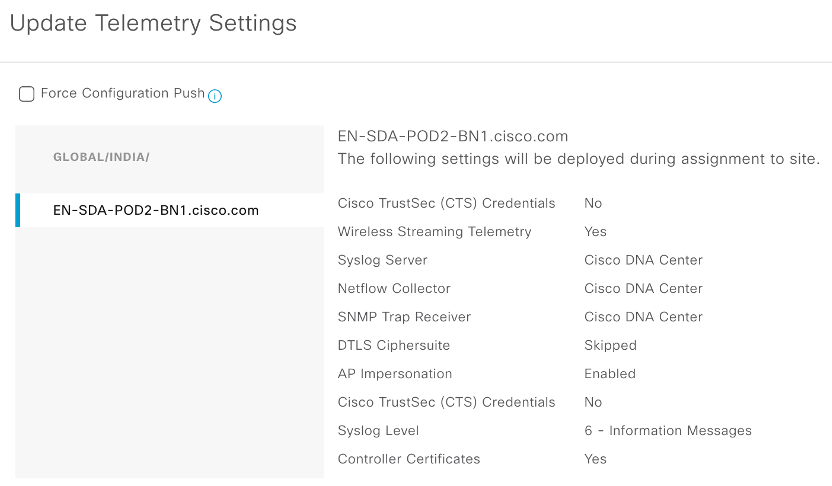

| Channel Width (MHz) | 40 | 40 | 40 |
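With a 40 MHz channel width, adjacent 20 MHz channels are bonded in fixed pairs (36+40, 44+48, and so on). The Python check below applies the standard 5 GHz 40 MHz pairings to the DCA list in Table 4. By this check, channel 124 has no partner in the list, because its 40 MHz partner is channel 128. How DCA handles such a channel may depend on the software release, so treat this as a planning aid and verify the result on the WLC.

```python
# Standard 40 MHz pairings of 20 MHz channels in the 5 GHz band (165 is 20 MHz only).
PAIRS_40 = [(36, 40), (44, 48), (52, 56), (60, 64), (100, 104), (108, 112),
            (116, 120), (124, 128), (132, 136), (140, 144), (149, 153), (157, 161)]


def bonding_report(dca_channels):
    """Return (complete 40 MHz pairs, channels left without a 40 MHz partner)."""
    chans = set(dca_channels)
    full = [pair for pair in PAIRS_40 if set(pair) <= chans]
    paired = {c for pair in full for c in pair}
    unpaired = sorted(chans - paired)
    return full, unpaired


# DCA list from Table 4 (identical for all three RF profiles).
DCA = [36, 40, 44, 48, 52, 56, 60, 64, 100, 104, 108, 112, 116, 120, 124,
       149, 153, 157, 161]

if __name__ == "__main__":
    full, unpaired = bonding_report(DCA)
    print(len(full), "usable 40 MHz channels; unpaired:", unpaired)
```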