ASR9000/XR: Implementing QoS Policy Propagation for BGP (QPPB)

on 01-16-2014 08:00 AM

Introduction

This document describes how to implement and work with QoS Policy Propagation for BGP, or QPPB for short.

QPPB marks prefixes received from BGP with a QoS group assignment. That QoS group can then be used in egress policies (or even in ingress policies after marking) to perform certain actions.

While this sounds simple, and conceptually it is, achieving this functionality in hardware is quite complex.

In this article I hope to unravel some of the gotchas and the operation of QPPB.

Support

QPPB with an egress QoS policy is supported on Trident, Thor and Typhoon. Both IPv4 and IPv6 are supported.

ASR9K is the first XR platform to support IPv6 QPPB.

Based on the interface QPPB settings, the IP precedence and/or QoS group is set on the ingress linecard for the source or destination prefix, and a policy can be attached on the egress linecard to act on the modified IP precedence and/or QoS group.

1. Purpose:

In short the purpose of QPPB is to allow packet classification based on BGP attributes.

- IPv4/IPv6 unicast only feature. IPv6 support was added in 4.2, on the ASR9k platform only.

- Classification based on source or destination IP address

- Classify on BGP IP prefix / AS path / Community

- Utilizes Route Policy Language (RPL) for table policies

- QoS Group ID values 0 – 31 supported

- IOS XR has supported QPPB since 3.6 on CRS and C12K

- CRS-1 only supports setting QoS Group ID

- C12k supports setting IP Precedence and/or QoS Group ID

- ASR9k supports setting IP precedence and/or QoS Group ID; a QoS policy can be configured to match on the modified IP precedence/QoS group on egress only.

Configuration and verification

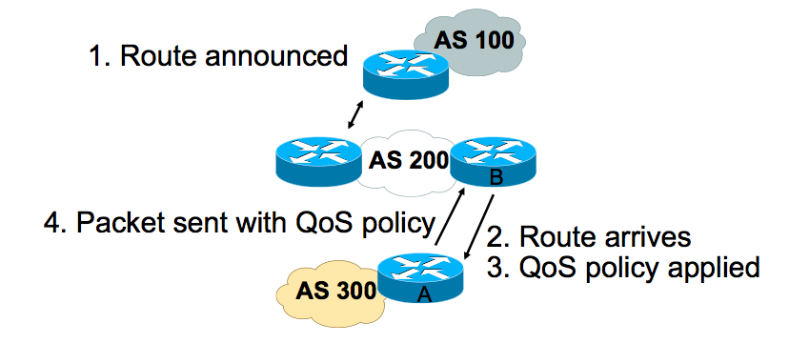

In the following example, Router A learns routes from AS 200 and AS 100. A QoS policy is applied to all packets that match the defined route policy. Any packets from Router A to AS 200 or AS 100 receive the appropriate QoS treatment.

2. Sample Scenario

The show commands below follow the application of QPPB on Router "A", focusing on the interface that connects to Router "B".

3. Configuration steps

- Define Route Policy (routes to match)

- Attach Route Policy to BGP instance

- Apply QPPB to ingress interface

Route policy configuration

RPL configuration

route-policy qppb

  if destination in (60.60.0.0/24) then

    set qos-group 5

  elseif source in (192.1.1.2) then

    set qos-group 10

  else

    set qos-group 1

  endif

  pass

end-policy
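To make the evaluation order of this policy concrete, here is a minimal Python sketch that mimics its if/elseif/else logic. It is only an illustration, not how IOS XR evaluates RPL: the `Route` class and `qppb_qos_group` function are hypothetical names, and `destination in (60.60.0.0/24)` is modelled as an exact prefix match, which is an assumption.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Route:
    prefix: str  # BGP prefix being installed, e.g. "60.60.0.0/24"
    source: str  # address of the BGP speaker the route was learned from


def qppb_qos_group(route: Route) -> int:
    """Mimic the first-match-wins order of 'route-policy qppb'."""
    # "if destination in (60.60.0.0/24)": assumed to be an exact prefix match
    if ipaddress.ip_network(route.prefix) == ipaddress.ip_network("60.60.0.0/24"):
        return 5
    # "elseif source in (192.1.1.2)": the route came from this BGP source
    if ipaddress.ip_address(route.source) == ipaddress.ip_address("192.1.1.2"):
        return 10
    # "else": everything else gets the default group
    return 1


print(qppb_qos_group(Route("60.60.0.0/24", "192.1.1.2")))  # 5, first clause wins
print(qppb_qos_group(Route("70.70.0.0/24", "192.1.1.2")))  # 10
print(qppb_qos_group(Route("70.70.0.0/24", "10.0.0.9")))   # 1
```

Note that a route matching both clauses gets qos-group 5, because the destination test comes first.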

QPPB ...

4. Application to BGP and Interface

After configuring the route policy, apply it as the table policy under the BGP address family, so that the marking is done right when the routes are received.

The interface then also needs the matching QPPB and QoS configuration to make use of this marking and act on it.

BGP

BGP configuration

router bgp 300

  bgp router-id 19.19.19.19

  address-family ipv4 unicast

    table-policy qppb

  !

  neighbor 192.1.1.2

    remote-as 200

    address-family ipv4 unicast

      route-policy pass-all in

      route-policy pass-all out

    !

Interface

Interface level configuration

interface TenG 0/0/0/0

  ipv4 address 192.1.1.1 255.255.255.0

  ipv4 bgp policy propagation input qos-group destination

  keepalive disable

!
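The `source` / `destination` keyword on the interface decides which address of the incoming packet is looked up to find the QoS group. The Python sketch below illustrates that choice with a plain longest-prefix match. The `FIB` table, its default route, and the `classify` function are hypothetical and only serve the illustration.

```python
import ipaddress

# Hypothetical forwarding table: prefix -> QoS group set by the table policy.
FIB = {
    ipaddress.ip_network("60.60.0.0/24"): 5,
    ipaddress.ip_network("0.0.0.0/0"): 1,  # assumed catch-all entry
}


def lookup_qos_group(address: str) -> int:
    """Return the QoS group of the longest prefix covering 'address'."""
    addr = ipaddress.ip_address(address)
    matches = [net for net in FIB if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return FIB[best]


def classify(src: str, dst: str, mode: str) -> int:
    """mode mirrors the interface keyword: 'source' or 'destination'."""
    if mode not in ("source", "destination"):
        raise ValueError("mode must be 'source' or 'destination'")
    return lookup_qos_group(dst if mode == "destination" else src)


# Same packet, different interface setting:
print(classify("10.0.0.1", "60.60.0.9", "destination"))  # 5
print(classify("10.0.0.1", "60.60.0.9", "source"))       # 1
```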

5. Show commands and verification

The following commands show how to verify the QPPB configuration:

Using show cef

RP/0/RP0/CPU0:pacifica#show cef 60.60.0.0/24 detail

60.60.0.0/24, version 0, internal 0x40000001 (0x78419634) [1], 0x0 (0x0), 0x0 (0x0)

Prefix Len 24, traffic index 0, precedence routine (0)

QoS Group: 5, IP Precedence: 0

gateway array (0x0) reference count 100, flags 0x80200, source 3,

<snip>

Using show uidb

A uIDB (micro interface descriptor block) is a data structure that represents the features applied to an interface.

Here the command shows which QPPB-specific features are applied to the interface.

RP/0/RP0/CPU0:pacifica#show uidb data TenG 0/0/0/0 location 0/0/cpu0

<snip>

IPV4 QPPB En 0x1

IPV6 QPPB En 0x1

IPV6 QPPB Dst QOS Grp 0x0

IPV6 QPPB Dst Prec 0x1

IPV6 QPPB Src QOS Grp 0x1

IPV6 QPPB Src Prec 0x0

IPV4 QPPB Dst QOS Grp 0x0

IPV4 QPPB Dst Prec 0x1

IPV4 QPPB Src QOS Grp 0x0

IPV4 QPPB Src Prec 0x0

<snip>

Restrictions and limitations

These are the restrictions to the best of my knowledge:

- In Trident/Typhoon, due to limited space in the prefix leaf, all traffic coming in on that interface undergoes QPPB processing (IP precedence and/or QoS group) based on that interface setting, i.e. there is no per-prefix validity checking for IP precedence or QoS group.

TRIDENT

- The loopback decision is made in TOP Parse, so every packet coming into an interface that has a “QOS group” policy configuration with QPPB enabled will be looped back.

- Due to the loopback and the extra cycles, performance will be impacted.

- The trigger for the loopback is the “QOS group” present in the policy. If the user wants a policy that acts only on the precedence value modified by QPPB, the policy still needs a dummy “QOS group” so that the loopback happens and the new precedence value takes effect.

- Only QoS format 1 and format 2 are supported in an ingress QoS policy.

TYPHOON

- The loopback decision is made in TOP Parse, so every packet coming into an interface that has a “QOS group” policy configuration with QPPB enabled will be (pipeline) looped back.

- Due to the pipeline loopback and the extra cycles, performance may be impacted.

- The trigger for the loopback is the “QOS group” present in the policy. If the user wants a policy that acts only on the precedence value modified by QPPB, the policy still needs a dummy “QOS group” so that the loopback happens and the new precedence value takes effect.

Debugging QPPB

These debugging examples are not necessarily related to the configuration example above.

Check for QPPB values in route

RP/0/RSP0/CPU0:public-router#show route 120.120.120.120 de

Wed Feb 23 15:20:01.263 UTC

Routing entry for 120.120.120.0/24

Known via "bgp 500", distance 200, metric 0, type internal

Installed Feb 20 23:58:13.096 for 2d15h

Routing Descriptor Blocks

10.1.7.2, from 10.1.7.2

Route metric is 0

Label: None

Tunnel ID: None

Extended communities count: 0

Route version is 0x1 (1)

No local label

IP Precedence: 6

QoS Group ID: 5

Route Priority: RIB_PRIORITY_RECURSIVE (7) SVD Type RIB_SVD_TYPE_LOCAL

No advertising protos.

CEF command output for trident/typhoon

RP/0/RSP0/CPU0:public-router#show cef 120.120.120.120 hardware ingress location 0/0/cPU0 | inc QPPB

QPPB Prec: 6

QPPB QOS Group: 5

CEF command output on Thor:

Hardware Extended Leaf Data:

fib_leaf_extension_length: 0 interface_receive: 0x0

traffic_index_valid: 0x0 qos_prec_valid: 0x1

qos_group_valid: 0x1 valid_source: 0x0

traffic_index: 0x0 nat_addr: 0x0

reserved: 0x0 qos_precedence: 0x6

qos_group: 0x5 peer_as_number: 0

path_list_ptr: 0x0

connected_intf_id: 0x0 ipsub_session_uidb: 0xffffffff

Path_list:

urpf loose flag: 0x0

List of interfaces:

Check for QPPB values in uIDB

Trident

RP/0/RSP0/CPU0:public-router# show uidb data location 0/0/CPU0 gigabitEthernet 0/0/0/4 ingress | inc QPPB

Wed Feb 23 15:44:33.154 UTC

IPV6 QPPB Src Lkup 0x0

IPV4 QPPB Src Prec 0x1

IPV4 QPPB Dst Prec 0x0

IPV4 QPPB Src QOS Grp 0x0

IPV4 QPPB Dst QOS Grp 0x0

IPV6 QPPB Src Prec 0x0

IPV6 QPPB Dst Prec 0x1

IPV6 QPPB Src QOS Grp 0x0

IPV6 QPPB Dst QOS Grp 0x0

IPV4 QPPB En 0x1

IPV6 QPPB En 0x1

IPV4 QPPB Src Lkup 0x1

RP/0/RSP0/CPU0:public-router#show run interface gigabitEthernet 0/0/0/4

Wed Feb 23 15:44:52.313 UTC

interface GigabitEthernet0/0/0/4

service-policy output qppp-qos-pol

ipv4 bgp policy propagation input ip-precedence source

ipv4 address 12.12.12.1 255.255.255.0

ipv6 bgp policy propagation input ip-precedence destination

ipv6 address 12:12:12::1/64

!

Typhoon

RP/0/RSP0/CPU0:public-router#show uidb data location 0/2/cpu0 gigabitEthernet 0/2/0/0 ingress | inc QPPB

Wed Feb 23 15:47:32.436 UTC

IPV4 QPPB Src Lookup En 0x0

IPV6 QPPB Src Lookup En 0x1

IPV4 QPPB En 0x1

IPV6 QPPB En 0x1

RP/0/RSP0/CPU0:public-router#show uidb data location 0/2/cpu0 gigabitEthernet 0/2/0/0 ing-ex | inc QPPB

Wed Feb 23 15:49:19.118 UTC

IPV6 QPPB Dst QOS Grp 0x0

IPV6 QPPB Dst Prec 0x1

IPV6 QPPB Src QOS Grp 0x1

IPV6 QPPB Src Prec 0x0

IPV4 QPPB Dst QOS Grp 0x0

IPV4 QPPB Dst Prec 0x1

IPV4 QPPB Src QOS Grp 0x0

IPV4 QPPB Src Prec 0x0

Thor

(This may be a bit too deep for general troubleshooting, but since I had this info I thought it would be nice to share for those interested.)

Another gotcha: the example below uses a Gig interface in a SIP700. While this works, it is officially not supported; it is shown purely for illustration, to show how to bring internal info to the surface and how to interpret it.

/* Flags bit field definitions for BGP_PA and QPPB. */

BF_CREATE(bgp_pa_flag_qppb_enabled, 6, 1); // QPPB

BF_CREATE(bgp_pa_flag_src_qos_group, 5, 1); // QPPB

BF_CREATE(bgp_pa_flag_dst_qos_group, 4, 1); // QPPB

BF_CREATE(bgp_pa_flag_src_prec, 3, 1); // QPPB

BF_CREATE(bgp_pa_flag_dst_prec, 2, 1); // QPPB

BF_CREATE(bgp_pa_flag_src, 1, 1); // BGP_PA

BF_CREATE(bgp_pa_flag_dst, 0, 1); // BGP_PA

uidb offset 780 for v4 and 2000 for v6 qppb:

RP/0/RSP0/CPU0:public-router#show controllers pse qfp infrastructure gigabitEthernet 0/1/1/4 input config raw loc 0/1/CPU0 | inc 780

Wed Feb 23 15:52:21.259 UTC

00000780 00 00 00 48 00 00 00 00

00001780 00 00 00 00 00 00 00 00

00002780 00 00 00 00 89 87 54 00

RP/0/RSP0/CPU0:public-router#show controllers pse qfp infrastructure gigabitEthernet 0/1/1/4 input config raw loc 0/1/CPU0 | inc 2000

Wed Feb 23 15:53:49.236 UTC

00002000 00 00 00 48 e7 ff 36 f0

RP/0/RSP0/CPU0:public-router#show run interface gigabitEthernet 0/1/1/4

Wed Feb 23 16:04:34.892 UTC

interface GigabitEthernet0/1/1/4

description TGN PORT 7 3

service-policy output qppp-qos-pol

ipv4 bgp policy propagation input ip-precedence source

ipv4 address 10.1.7.1 255.255.255.0

ipv6 bgp policy propagation input ip-precedence source

ipv6 address 10:1:7::1/64

ipv6 enable

!
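To tie the raw dump back to the flag definitions, here is a small Python sketch that decodes the `48` byte seen at offsets 780 and 2000. It rests on two assumptions: that the second argument of `BF_CREATE` is the bit position, and that the `0x48` byte in the dump is the flags field. The dictionary and function names are made up for this illustration.

```python
# Bit positions copied from the BF_CREATE definitions (assumed: name, bit, width).
QPPB_FLAG_BITS = {
    "bgp_pa_flag_qppb_enabled": 6,
    "bgp_pa_flag_src_qos_group": 5,
    "bgp_pa_flag_dst_qos_group": 4,
    "bgp_pa_flag_src_prec": 3,
    "bgp_pa_flag_dst_prec": 2,
    "bgp_pa_flag_src": 1,
    "bgp_pa_flag_dst": 0,
}


def decode_flags(value: int) -> list[str]:
    """Return the names of all flags whose bit is set in 'value'."""
    return [name for name, bit in QPPB_FLAG_BITS.items() if (value >> bit) & 1]


# 0x48 = 0b0100_1000 -> bit 6 and bit 3 are set
print(decode_flags(0x48))
# ['bgp_pa_flag_qppb_enabled', 'bgp_pa_flag_src_prec']
```

Under those assumptions the result lines up with the running config, which has `ip-precedence source` for both IPv4 and IPv6: QPPB is enabled and the source precedence bit is set.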

Check whether packets are being looped back due to an ingress QoS policy

(both trident and typhoon)

RP/0/RSP0/CPU0:ASR-PE3#show uidb data location 0/0/cpu0 gigabitEthernet 0/0/0/4.1 ingress | inc QOS

Mon Feb 20 10:57:22.781 UTC

QOS Enable 0x1

QOS Inherit Parent 0x0

QOS ID 0x2004

AFMON QOS ID 0x0

IPV4 QPPB Src QOS Grp 0x1

IPV4 QPPB Dst QOS Grp 0x0

IPV6 QPPB Src QOS Grp 0x0

IPV6 QPPB Dst QOS Grp 0x0

QOS grp present in policy 0x1

QOS Format 0x1
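If you collect this output from many interfaces, a few lines of Python can pull out the field that triggers the loopback. This is a rough sketch: `parse_uidb` is a hypothetical helper, the sample text is a trimmed copy of the output above, and the parsing simply assumes every line ends in a hex value.

```python
UIDB_OUTPUT = """\
QOS Enable 0x1
QOS Inherit Parent 0x0
QOS ID 0x2004
QOS grp present in policy 0x1
QOS Format 0x1
"""


def parse_uidb(text: str) -> dict[str, int]:
    """Split each 'name 0xVALUE' line into a field name and an integer."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, _, value = line.rpartition(" ")
        fields[name.strip()] = int(value, 16)
    return fields


fields = parse_uidb(UIDB_OUTPUT)
# Per the restrictions above, the qos-group match in the policy is the trigger.
loopback_expected = (
    fields["QOS Enable"] == 1 and fields["QOS grp present in policy"] == 1
)
print(loopback_expected)  # True
```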

Verify the NP counters: we need to see loopbacks happening (known as feedback in PXF, or recirculation in 7600 terminology).

RP/0/RSP0/CPU0:ASR-PE3#show controllers np counters np3 location 0/0/cPU0

Mon Feb 20 11:08:49.262 UTC

Node: 0/0/CPU0:

Show global stats counters for NP3, revision v3

Read 33 non-zero NP counters:

Offset Counter FrameValue Rate (pps)

22 PARSE_ENET_RECEIVE_CNT 6861706 45574

23 PARSE_FABRIC_RECEIVE_CNT 6927056 45574

24 PARSE_LOOPBACK_RECEIVE_CNT 6818799 45573

29 MODIFY_FABRIC_TRANSMIT_CNT 6709882 45574

30 MODIFY_ENET_TRANSMIT_CNT 6929272 45574

34 RESOLVE_EGRESS_DROP_CNT 5 0

38 MODIFY_MCAST_FLD_LOOPBACK_CNT 6818940 45574
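A quick way to read these counters is to compare the loopback receive rate with the Ethernet receive rate. The Python sketch below does that with the two rates from the output above. Treating the ratio as "share of ingress packets looped back" is my own simplification and ignores other traffic on the NP.

```python
# Rates (pps) copied from the counter output above.
rates_pps = {
    "PARSE_ENET_RECEIVE_CNT": 45574,
    "PARSE_LOOPBACK_RECEIVE_CNT": 45573,
}

loopback_ratio = (
    rates_pps["PARSE_LOOPBACK_RECEIVE_CNT"] / rates_pps["PARSE_ENET_RECEIVE_CNT"]
)

# A ratio close to 1 suggests practically every received packet is looped back.
print(f"{loopback_ratio:.1%}")  # 100.0%
```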

Extra Q&A

Question:

If the user wants a policy that acts only on the precedence value modified by QPPB, the policy still needs a dummy “QOS group” so that the loopback happens and the new precedence value takes effect. Does that mean I need to configure something like this?

router bgp 1

address-family ipv4 unicast

table-policy QPPB-PREC

route-policy QPPB-PREC

if (community matches-any GOLD) then

set ip-precedence 5

set qos-group 1 <== dummy qos-group

endif

end-policy

!

And at the interface level I should have both ip-precedence and qos-group:

interface TenGigE0/3/0/5

cdp

mtu 9194

ipv4 bgp policy propagation input ip-precedence destination qos-group destination

ipv4 address 192.1.22.14 255.255.255.254

load-interval 30

!

Although in the ingress QoS policy I only match on ip precedence being set by QPPB.

Answer:

You need the “match qos-group” in the input QoS policy, not a qos-group set in the route policy.

See the example below:

router bgp 1

address-family ipv4 unicast

table-policy QPPB-PREC

route-policy QPPB-PREC

if (community matches-any GOLD) then

set ip-precedence 5

endif

end-policy

!

The QoS policy will look like this:

class-map match-any qos-grp-dummy

match qos-group 20

end-class-map

!

class-map match-any qos-prec

match precedence 5

end-class-map

!

policy-map qppb-input-pref

class qos-grp-dummy <--------dummy “qos group”

police rate percent 20

exceed-action drop

!

!

class qos-prec

police rate percent 10

exceed-action drop

!

!

class class-default

!

end-policy-map

!

And at the interface level you don’t need the qos-group QPPB setting:

interface TenGigE0/3/0/5

cdp

mtu 9194

ipv4 bgp policy propagation input ip-precedence destination

service-policy input qppb-input-pref

ipv4 address 192.1.22.14 255.255.255.254

load-interval 30

!

Xander Thuijs CCIE #6775

Principal Engineer IOS-XR; ASR9000, CRS and NCS6K

Thank you.

I have another question, please. The text above shows the following config:

route-policy qppb

if destination in (60.60.0.0/24) then

set qos-group 5

elseif source in (192.1.1.2) then

set qos-group 10

In slide 1/2, one can read:

route-policy qppb

if destination in (60.60.0.0/24) then

set qos-group 5

elseif source in (30.30.30.30) then

set qos-group 10

Can you please tell me which one is correct?

I would also appreciate it if you could put the prefixes/IPs (60.60.0.0/24, 30.30.30.30) on the figure above. Thank you again.

hi adrian,

either example is "fine" really. it merely tries to show the different qos group settings based on classification criteria. the destination in this regard means the prefix we route against, the source refers to the source of the bgp prefix.

the example and show commands here use 192.1.1.2.

xander

Hello Xander,

First of all thank you for the article.

I'm interested in the performance degradation introduced by QPPB.

Are there public figures like those we have for Scaled ACLs (go to page 25)?

Regards

hi marco! qppb would be the cost of "regular" QOS marking (when bgp sets a parameter on the packet), and the "regular" cost of doing policing/q'ing regardless of where the set action came from.

QOS is about a 25%-30% performance hit.

The only catch is when you do ingress QOS that uses the qos-group that qppb is setting. If that is the case, the packet is looped back, so it costs theoretically 40-50% performance, if ALL packets were subject to that.

The reason for this loop is that the ingress match is done early in the pipeline, whereas the qos-group SET is done later on, so if we want to match on the qppb set qos-group, the packet needs a second pass to examine the qos-group now set.
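As a back-of-the-envelope illustration of that second pass, the Python sketch below uses a deliberately simple model: every looped packet costs exactly one extra pass, and the NPU is limited purely by the number of passes. Both are assumptions for the sake of the arithmetic, not measured figures, and `relative_throughput` is a made-up name.

```python
def relative_throughput(looped_fraction: float) -> float:
    """Throughput relative to single-pass forwarding in the simple model.

    A packet that is looped back takes 2 passes instead of 1, so the
    average cost per packet is 1 + looped_fraction passes.
    """
    if not 0.0 <= looped_fraction <= 1.0:
        raise ValueError("looped_fraction must be between 0 and 1")
    return 1.0 / (1.0 + looped_fraction)


for fraction in (0.0, 0.25, 1.0):
    print(f"{fraction:.0%} looped -> {relative_throughput(fraction):.0%} of single-pass rate")
```

With all packets looped back this model gives half the single-pass rate, which is in line with the theoretical worst case mentioned above; with only a quarter of the packets looped it gives 80%.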

cheers

xander

Hello,

If I understand you correctly, you're referring to ASR9000/XR Feature Order of operation.

Is the loopback operation triggered only if one of the classes in the inbound policy-map explicitly refers to ip precedence or qos-group or IOS-XR is smart enough to understand when it needs to loop?

Thank you so much

correct Marco! and if the npu detects that your qos class-map has a match on qos-group with qppb enabled that sets the qos-group the loop is automatically instantiated.

cheers!!

xander

Dear Alexander,

Your article is brilliant, thank you. I have a couple of small questions:

You wrote "In Trident/Typhoon, due to limited space in prefix leaf", what exactly is prefix leaf?

You wrote "The loopback decision is made in TOP Parse", what is TOP Parse?

Beyond IP Prec, can marking and matching be made on DSCP with QPPB?

Thank you,

Mark.

hi mark! thanks! :)

a prefix leaf is the final "end" of a forwarding lookup. In a "standard" 256-256-256-256 lookup model (also known as "mtrie") you can see the lookup as 4 "intersections" on a path to go from start to finish. On each intersection you have 256 choices to make (as opposed to left or right, here you have 256 streets to pick from).

You could simplify this to left/right only, but that would make the tree lookup longer, like: 2-2-2-2-2-2-2-2-2-2 (and possibly longer). the 4 staged model works nicely for a lot of /32's, but for summarized routes, fewer choices (2/2/2/2) and possibly a longer tree works better (this is because on a summarized route, the last octet or two are all part of that summary route already). Now when we hit the final intersection in the lookup, from where we don't have to make any other decisions anymore as this gives us a straight path to the finish line, that is called the leaf.

This leaf holds all kinds of interesting data for the route. For instance the leaf tells us a next hop, egress interface and the like.
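For those who like to see it in code, here is a minimal Python sketch of such a 256-256-256-256 lookup, where the leaf carries the qos-group next to the next hop. It is a teaching model only and says nothing about the actual hardware layout; `Node`, `insert`, `lookup` and the sample next hops are all hypothetical.

```python
import ipaddress


class Node:
    """One 'intersection' with 256 choices (one per octet value)."""

    def __init__(self):
        self.leaf = [None] * 256   # (prefix_len, data) stored per slot
        self.child = [None] * 256  # pointer to the next intersection


def insert(root: Node, prefix: str, data: dict) -> None:
    net = ipaddress.ip_network(prefix)
    octets, plen = net.network_address.packed, net.prefixlen
    node, level = root, 0
    # Walk down one intersection per fully covered octet.
    while plen > (level + 1) * 8:
        octet = octets[level]
        if node.child[octet] is None:
            node.child[octet] = Node()
        node = node.child[octet]
        level += 1
    # Expand the remaining bits over all slots the prefix covers.
    span = 1 << (8 - (plen - level * 8))
    for slot in range(octets[level], octets[level] + span):
        current = node.leaf[slot]
        if current is None or current[0] <= plen:
            node.leaf[slot] = (plen, data)


def lookup(root: Node, address: str):
    """Longest-prefix match: deeper leaves override shallower ones."""
    node, best = root, None
    for octet in ipaddress.ip_address(address).packed:
        if node.leaf[octet] is not None:
            best = node.leaf[octet][1]
        node = node.child[octet]
        if node is None:
            break
    return best


root = Node()
insert(root, "60.60.0.0/24", {"next_hop": "192.1.1.2", "qos_group": 5})
insert(root, "0.0.0.0/0", {"next_hop": "10.0.0.1", "qos_group": 1})

print(lookup(root, "60.60.0.9")["qos_group"])  # 5
print(lookup(root, "8.8.8.8")["qos_group"])    # 1
```

The point to take away is that the qos-group travels with the leaf, so the forwarding lookup that finds the next hop also yields the QPPB marking.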

TOP is Task Optimized Processor. It is not an asic that can only do one thing, and it is not a CPU because it is not that flexible. the nice thing about an asic is that it is designed to do a single thing and is very fast, but it can't be programmed easily after the die is created. a cpu, having to be very flexible, is therefore slow compared to an asic. Now what we did in the NPU is that we separated a few "tasks" out over different stages. Each stage is "optimized" to perform a specific task. they are processors as they can be (micro) coded. PARSE/SEARCH/RESOLVE/MODIFY are the stages we carry. Parse is the first stage that deals with initial packet processing: what (sub)if does this belong to, let's interpret the packet and build a key for the tcam based on the ip header, so later on we can check if an acl or qos class matched etc. More info on stages is here

you can set different things. basically qppb allows the prefix leaf (you know now what that is :) to designate the qos marking for that packet. this marking can be used on ingress (with a recirculation) or on egress to queue, remark or police based on the bgp marked packet on ingress.

cheers!

xander

Hi Xander,

I have configured QPPB on IOS XE, but as a result I noticed that only client upload traffic is matched and policed correctly. For download traffic the policy didn't match and it fell into class class-default instead. Please advise on my configuration and topology as attached.

Best Regards,

Veasna

the config looks correct veasna. could it be that on the downstream the community is not matched and therefore the marking is not done, causing it to drop to the default class?

check the bgp prefix settings to make sure that the prefix coming from that desired community is set properly.

also for testing you could add an extra commlist to match the other community and add that to the qppb policy with a qos group of 2, then we can see if it is one or both having an issue.

regards

xander

Hi Xander,

For the downstream side, I do not set any community, as I just drop in a PC client and its setting is just a static default route. Please advise if there is any technique that could focus on the source (I mean the upstream side) rather than the destination (client PC) for download traffic.

Best Regards,

Veasna

hi veasna,

hmm yeah the only option I see is that in your route policy that is tied to the table policy for BGP, you could do a if source in <your prefix> then set qos-group whatever

on egress towards the client, you can then use that qos-group to police or whatever.

so the trick being to use "if source in".

cheers!

xander
