ASR9000/XR: Using Satellite (Technote)

on 07-14-2013 05:11 AM

- Introduction

- Core Issue

- The basics

- Licensing

- Optics

- Software and hardware requirements

- Feature support

- Oversubscription and QoS

- Loadbalancing on bundle ICL

- Initial system bring up

- Config sequence restrictions

- Advanced Satellite Topologies

- Basic status check

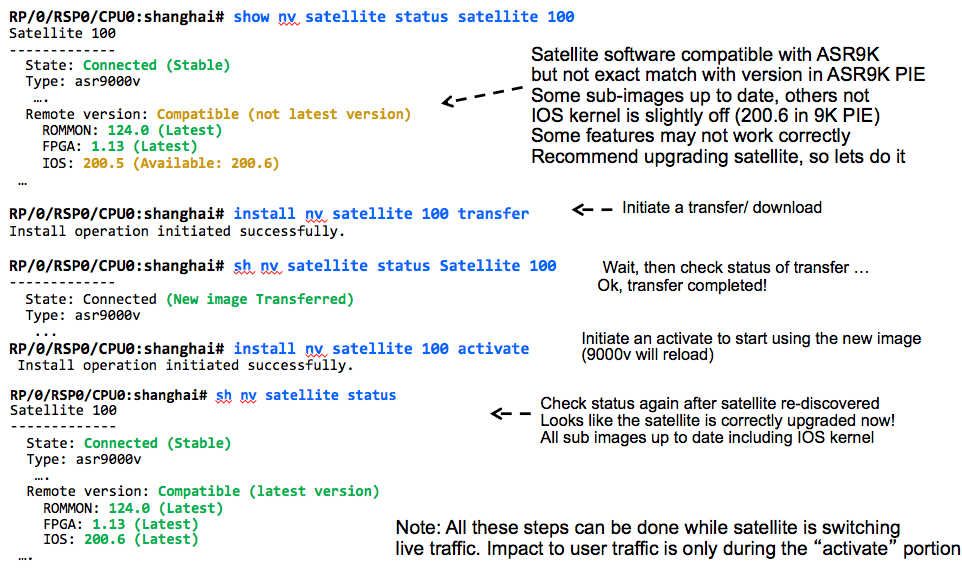

- Upgrade and Software

- Discovery protocol status

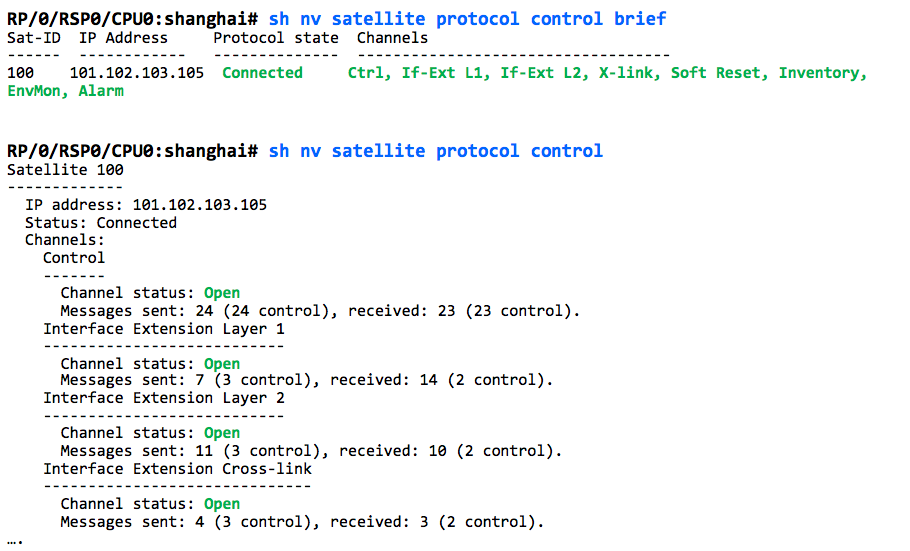

- Control protocol status

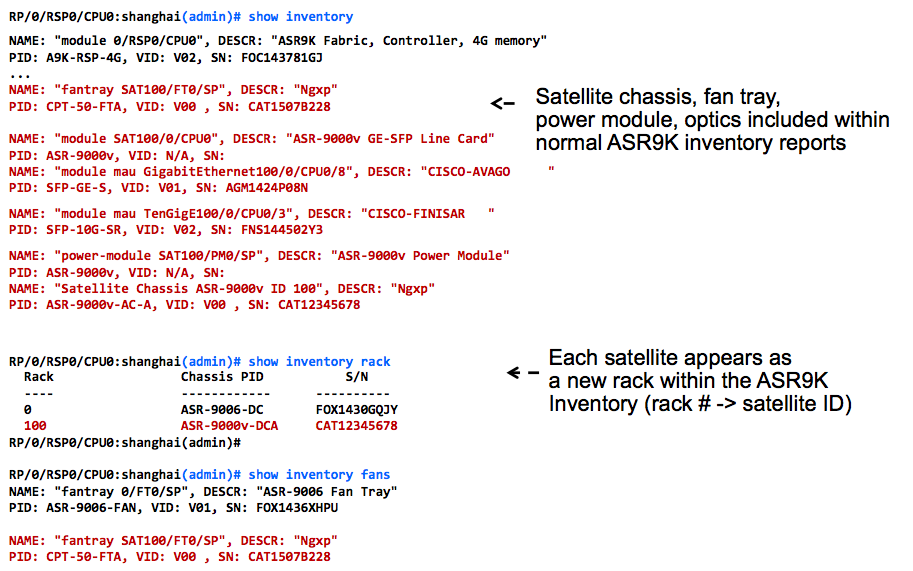

- Satellite inventory

- Common Issues

- Related Information

Introduction

This document provides a guide on how to use the satellite (ASR9000v) with the ASR9000 and ASR9900 series routers. It discusses what you can and cannot do, use cases, and how to verify satellite operation.

This document assumes that XR release 5.1.1 or later is used.

Core Issue

Satellite is a relatively new technology that was introduced in XR 4.2.1. It provides a great and inexpensive way to extend your 1G port count by using a port extender that is managed entirely from the ASR9000. The advantage is that you have two devices but only one entity to manage: all the satellite ports show up in the XR configuration of the ASR9000.

Another great advantage of the satellite is that you can place it at remote locations, miles away from the ASR9000 host!

The basics

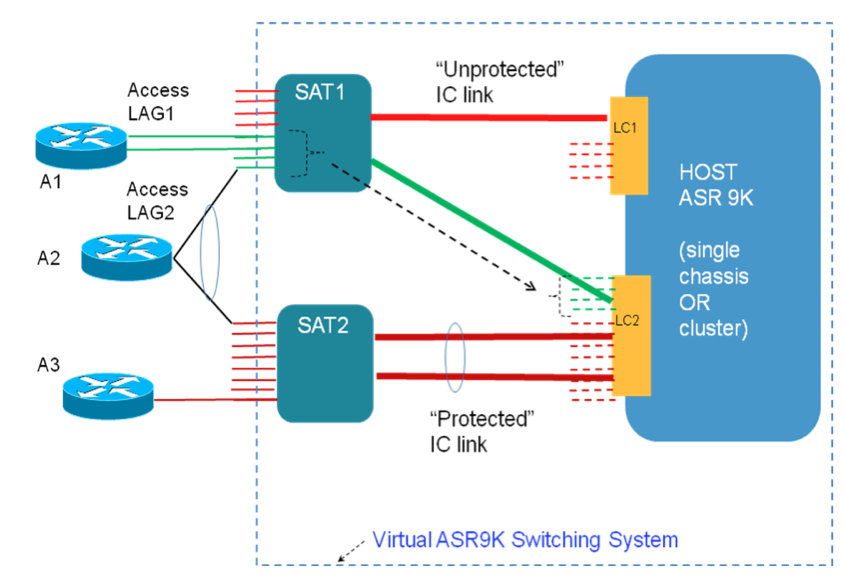

Although there is a limit to the number of satellites you can connect to an ASR9000 (cluster), the Satellite general concept of ASR9000 is shown here in this picture:

The physical connections are very flexible. The link between the satellite and the ASR9000 is called the ICL, or "Inter Chassis Link". This link transports the traffic from the satellite ports to the 9000 host.

In the ASR9000 host configuration you define how the satellite ports are mapped to the ICLs.

You can statically pin ports from the satellite to a single uplink (this means there is no protection: when that uplink fails, those satellite ports become unusable), or you can bundle uplinks together and assign a group of ports to that bundle. This provides redundancy, but with the limitation that satellite ports using an uplink bundle can't be made part of a bundle themselves.

We'll talk about restrictions a bit later.

In the picture below you see Access Device A1 connecting with a bundle that uses an uplink (green) to LC-2 on the 9k host.

A second satellite has all its ports mapped to a bundle ICL.

Note that no bandwidth constraint is enforced, so theoretically you can have a 2-member ICL bundle with 30 satellite ports mapped to it, but that would mean the ICL is oversubscribed.
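To put a number on that example, the ratio of aggregate access bandwidth to aggregate ICL bandwidth can be computed as below. This is a minimal sketch, assuming 1G access ports and 10G ICL members (the 9000v ICL speed); `oversubscription_ratio` is a hypothetical helper, not a Cisco tool or API.

```python
def oversubscription_ratio(access_ports: int, access_speed_gbps: float,
                           icl_members: int, icl_speed_gbps: float) -> float:
    """Return aggregate access bandwidth divided by aggregate ICL bandwidth.

    A result above 1.0 means the ICL is oversubscribed.
    Hypothetical helper for illustration only.
    """
    if icl_members <= 0 or icl_speed_gbps <= 0:
        raise ValueError("need at least one ICL member with a positive speed")
    access_bw = access_ports * access_speed_gbps
    icl_bw = icl_members * icl_speed_gbps
    return access_bw / icl_bw


# Example from the text: 30 x 1G satellite ports on a 2 x 10G bundle ICL.
ratio = oversubscription_ratio(30, 1, 2, 10)
print(f"{ratio:.1f}:1")  # prints 1.5:1
```

Anything above 1:1 relies on the QoS behavior described in the oversubscription section to protect high-priority traffic.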

While the ASR9000v/Satellite is based on the Cisco CPT-50, you cannot convert between the two devices by loading different software images.

You can't use the 9000v as a standalone switch; it needs the ASR9000 host.

Visible differences include that the 9000v starts port numbering at 0, whereas the CPT starts at 1. Also, the CPT has 4 different power options and the ASR9000v only 3: AC-A, DC-A, DC-E (A for ANSI, E for ETSI).

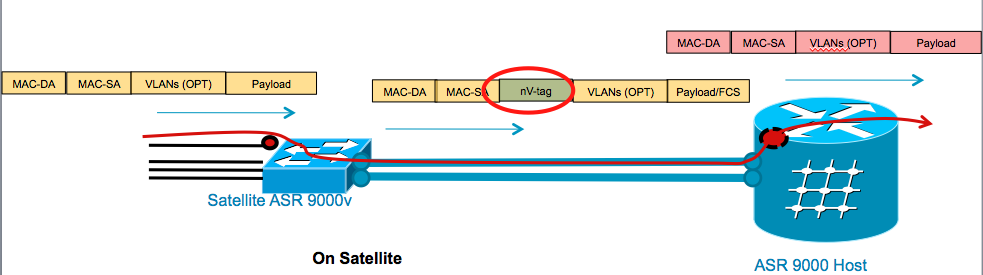

Satellite packet format over L1 topologies looks like this; there is a simple sneaky dot1q tag added which we call the nV tag:

In L2 topologies, such as simple ring, we use dot1ad.

Licensing

A license is required to run the ASR9000v. There are 3 licenses, for 1, 5, or 20 satellites per 9k host, named:

- A9K-NVSAT1-LIC

- A9K-NVSAT5-LIC

- A9K-NVSAT20-LIC

Licenses are not hard-enforced, meaning the system will still work even when a license is not present. However, syslog messages will report the "violation of use", and you are urged to obtain the proper license.

Note: when using simple ring, a host license for each satellite is needed on each host. For example, a simple ring with three satellites requires six A9K-NVSAT1-LIC licenses.
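The simple-ring licensing rule is plain multiplication, sketched below. This assumes the rule "every host needs a license for every satellite it serves" from the note above, and assumes `hosts=1` for a single-host design; `satellite_host_licenses` is a hypothetical helper.

```python
def satellite_host_licenses(satellites: int, hosts: int = 1) -> int:
    """Count satellite host licenses (e.g. A9K-NVSAT1-LIC units).

    Follows the simple-ring note: each host needs one license per satellite.
    hosts=1 is an assumption for a single-host design.
    """
    if satellites < 0 or hosts < 1:
        raise ValueError("satellites must be >= 0 and hosts >= 1")
    return satellites * hosts


# Simple ring with three satellites attached to two hosts.
print(satellite_host_licenses(3, hosts=2))  # prints 6
```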

Optics

A variety of optics are supported on the ASR9000v; they are not always the same as those supported on the ASR9000. Refer to this link for the supported optics for the ASR9000/9000v.

When using tunable optics on the 9000v, pay attention to the following:

(*) For a tunable optic on the ICL, you need to set the wavelength via the 9000v shell the first time the optic is inserted, before shipping the satellite to its destination.

Handling of Unsupported Optics

For the 9000v ports we do not support the 'service unsupported-transceiver' or 'transceiver permit pid all' commands.

The satellite device simply flags an unsupported transceiver without disabling the port or taking any further action. As long as the pluggable is readable by the satellite, the SFP may work, but there are no additional 'hacks', such as hidden commands, beyond what is listed as supported in the tables at the supported optics reference link.

Software and hardware requirements

The following software and hardware requirements exist for the ASR9000v. Although support started in XR 4.2.1, my personal recommendation is to go with XR 4.3 (latest), as many of the initial restrictions of the first release have been lifted:

1. SW requirement:

Minimum version is XR 4.2.1

2. HW requirement – Chassis

- Host ASR9K: ASR 9006 and 9010 supported (4.2.1)

- Support for ASR 9001 and 9922 added (4.3.0)

- Host ASR9K may be a single host, a cluster of two ASR9Ks acting as one logical router, or dual host (5.1.1)

3. HW requirement – Line cards and RSP

- RSP must be RSP440 or RSP2 (only supported with the 9000v)

- Satellite ICL connection must be from a Typhoon based LC

- LC may be -TR or -SE. If -TR, each satellite access GigE port gets 8 TM queues

- Trident-based LCs and the SIP-700 can co-exist in the system but can't be used to connect *directly* to the ICL ports. They can still forward traffic to/from satellite ports.

- ISM/CDS video cards can co-exist in the system, but can't stream video flows to the satellite ports in the 4.2.1 release. Support is planned for a future release.

4. ASR901 as a Satellite

- Supported for nV satellite since 4.3.0 (901-1G)

- Supported for nV satellite since 5.1.1 (901-10G)

- On both the 1G and 10G versions only 1G ICL are supported at this time in ports G0/10 and G0/11. 10G and other ports, such as TDM, are not supported

- Supports satellite interfaces from SatId/0/0/0 to SatId/0/0/9

- Combo ports 4-7 are set to auto-select. When both copper and fiber are connected fiber is selected, removing fiber switches to copper mode, and reconnecting fiber moves back to fiber.

Note: If the wrong port is used for the ICL, the link will stay down on the 901. Once the correct ICL port is used and the 9K is configured, the 901 must be reloaded for the link to come up and for the 901 to be recognized as a satellite.
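The ASR901 port rules above can be captured in a few checks, sketched below. The local port names `GigabitEthernet0/10` and `GigabitEthernet0/11` spell out the "G0/10 and G0/11" from the text, the host-side names follow the `GigabitEthernet100/0/0/9` pattern used elsewhere in this document, and returning `"none"` when no medium is connected is an assumption. All function names are hypothetical.

```python
from typing import List

# Assumed local names for the only two 901 ports usable as 1G ICLs.
ASR901_ICL_PORTS = {"GigabitEthernet0/10", "GigabitEthernet0/11"}


def asr901_icl_port_ok(port: str) -> bool:
    """True if the 901 port is one of the supported 1G ICL ports."""
    return port in ASR901_ICL_PORTS


def asr901_host_interfaces(sat_id: int) -> List[str]:
    """Host-side satellite interfaces SatId/0/0/0 through SatId/0/0/9."""
    return [f"GigabitEthernet{sat_id}/0/0/{port}" for port in range(10)]


def combo_port_media(fiber_connected: bool, copper_connected: bool) -> str:
    """Auto-select on combo ports 4-7: fiber wins whenever it is connected."""
    if fiber_connected:
        return "fiber"
    if copper_connected:
        return "copper"
    return "none"  # assumption: nothing connected


print(asr901_icl_port_ok("GigabitEthernet0/12"))  # prints False
print(asr901_host_interfaces(100)[-1])            # prints GigabitEthernet100/0/0/9
print(combo_port_media(True, True))               # prints fiber
```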

Feature support

Generally speaking, all features supported on physical GigE ports of the ASR9K are also automatically supported on the GigE ports of the satellite switch, such as L2, L3, multicast, MPLS, BVI, OAM … (everything that works on a normal GigE).

- L1 features: applied on the satellite chassis

5. Features running specifically on the 9000v/Satellite

- Admin state (shut/no shut)

- Ethernet MTU

- Ethernet link auto-negotiation, including:

- Half/ full duplex

- Link speed

- Flow control (only RX flow control supported in 4.2.1 on access GigEs)

- Static configuration of auto-neg parameters (speed, duplex, flow ctrl)

- Carrier delay

- L1 packet loopback

- Line loopback (pkt rx on access GigE looped back to tx)

- Internal loopback (pkt about to tx on access GigE looped back to rx)

- TX laser disable

6. Exceptions

1) The following features are not supported on satellite ports in 5.1.1

*Need to update this*

2) When the ICL is a link bundle, there are some restrictions:

- LAG (link bundles) not supported on satellite access GigEs (i.e. no “LAG over LAG” support)

- ICL LAG must be a static EtherChannel (“mode on”); no LACP support on the ICL

Oversubscription and QoS

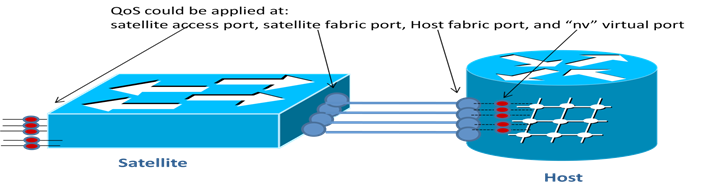

QoS can be applied on the ASR9000 host (it runs on the NP where the satellite ports have their interface descriptors) or offloaded to the satellite.

Oversubscription occurs when the combined speed of the 1G access ports exceeds the total ICL speed, and this could lead to congestion on the ICL. However, there is an implicit trust model for all high-priority traffic.

Automatic packet classification rules determine whether a packet is a control packet (LACP, STP, CDP, CFM, ARP, OSPF, etc.), high-priority data (VLAN CoS 5, 6, 7 or IP precedence 5, 6, 7), or normal-priority data, and queue it accordingly.

For the downstream direction, that is from the 9000 host to the satellite, the "standard" QoS rules and shaping are sufficient to guarantee delivery of high-priority packets to the satellite ports (e.g. inject to wire, etc.).
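The automatic three-way classification can be sketched as follows. This is an illustration, not the satellite's implementation: the protocol set only contains the examples named in the text (the real list is longer), and checking control traffic before data markings is an assumption of this sketch.

```python
from typing import Optional

# Example control protocols named in the text; the real list is longer ("etc.").
CONTROL_PROTOCOLS = {"LACP", "STP", "CDP", "CFM", "ARP", "OSPF"}
# VLAN CoS / IP precedence values treated as high-priority data.
HIGH_PRIORITY_MARKINGS = {5, 6, 7}


def classify(protocol: Optional[str] = None,
             vlan_cos: Optional[int] = None,
             ip_precedence: Optional[int] = None) -> str:
    """Return 'control', 'high' or 'normal' for one packet (sketch only)."""
    if protocol in CONTROL_PROTOCOLS:
        return "control"
    if vlan_cos in HIGH_PRIORITY_MARKINGS or ip_precedence in HIGH_PRIORITY_MARKINGS:
        return "high"
    return "normal"


print(classify(protocol="LACP"))              # prints control
print(classify(vlan_cos=5))                   # prints high
print(classify(vlan_cos=0, ip_precedence=0))  # prints normal
```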

7. QoS Offload

As the ICL between the satellite and host may be oversubscribed by access interfaces, configuring QoS on the satellite itself is the best way to avoid losing high-priority traffic due to congestion. This feature was introduced in 5.1.1.

There are three steps to configuring QoS offload:

- Create a class map

- Create a QoS policy map

- Bind the QoS policy to the satellite (physical access interface, bundle access interface, physical ICL interface, or bundle ICL interface)

Input access interface (CLI config) example:

```
class-map match-any my_class
 match dscp 10
 end-class-map
!
policy-map my_policy
 class my_class
  set precedence 1
 !
 end-policy-map
!
interface GigabitEthernet100/0/0/9
 ipv4 address 10.1.1.1 255.255.255.0
 nv
  service-policy input my_policy
 !
!
```

Loadbalancing on bundle ICL

Traffic is hashed across the members of the ICL LAG based on the satellite access port number. No packet payload information (MAC SA/DA or IP SA/DA) is used in the hash calculation. This ensures that QoS applied on the ASR9K for a particular satellite access port works correctly over the entire packet stream of that access port. The current hash rule is simple: port number modulo the number of active ICLs.
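The port-number-modulo rule can be sketched like this. The ordering of the active member list and the member names are assumptions of the sketch (the text does not say how members are indexed), and `icl_member_for_port` is a hypothetical helper.

```python
from typing import Sequence


def icl_member_for_port(access_port: int, active_icls: Sequence[str]) -> str:
    """Pick the ICL bundle member that carries a satellite access port.

    Rule from the text: port number modulo the number of active ICLs.
    The ordering of active_icls is an assumption of this sketch.
    """
    if not active_icls:
        raise ValueError("no active ICL members")
    return active_icls[access_port % len(active_icls)]


members = ["TenGigE0/2/0/1", "TenGigE0/2/0/2"]  # hypothetical bundle members
for port in range(4):
    print(port, icl_member_for_port(port, members))
# If one member fails, every port re-hashes onto the remaining member.
print(icl_member_for_port(3, members[:1]))  # prints TenGigE0/2/0/1
```

Because only the port number feeds the hash, all packets of one access port stay on one ICL member, which is what keeps per-port host QoS accurate; the trade-off is that a single busy access port cannot spread across several members.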

Initial system bring up

8. Hardware

Plug-and-play installation: No local config on satellite, no need to even telnet in!

- Unpack new ASR 9000v; rack, stack, connect power

- Plug ASR 9000v qualified optics of the correct type into any one or more of the SFP+ slots, and appropriate qualified optics into SFP+ or XFP slots on the host ASR9K. Connect via SMF/MMF fiber

- Note: The actual SFP+ port used on the ASR 9000v does not matter; connect the 10G fibers from the 9K to any of the 10G SFP+ ports on the 9000v in any order. There is no need to line these up => further plug and play.

- Configure the satellite via CLI/XML on the ASR 9K host 10G ports

- Power up ASR 9000v chassis

- ASR 9000v chassis turn up status check based on chassis error LEDs on front face plate

- Critical Error LED comes ON => Critical hardware failure; need to swap 9000v hardware

- Major Error LED comes ON => Hardware okay, unable to connect to ASR9K host

- Check fiber connectivity and L1 path/ optics between ASR9K and 9000v

- Check ICL configuration on ASR9K

- Check chassis serial number on ASR 9000v (rear plate) against any configured S/N on ASR9K

- Both Critical and Major Error LEDs stay OFF => ASR 9000v is up and connected to host ASR9K; now fully manageable via host ASR9K and ready to go.

- Can do satellite ethernet port packet loopback tests (through ASR9K) if needed to check end to end data path

- Note: If satellite software requires upgrade, it will raise notification on host ASR9K. User can then do an inband software upgrade from the ASR9K if needed (use “show nv satellite status” on host ASR9K from here on to check status as described next)

9. Management configuration

It is recommended to use the auto-IP feature; with it, no loopback or VRF needs to be defined. A VRF named `**nVSatellite` is auto-defined and does not count towards the number of VRFs configured (for licensing purposes).

10. Define Satellite device

- router(config)# nv satellite 100

- router(config-satellite)# type asr9000v

- router(config-satellite)# ipv4 address 192.168.0.100

- router(config-satellite)# serial-number cat12345678

- router(config-satellite)# description 1194N Mathilda Sunnyvale

Optionally, configure a secret password for satellite login. Note that the username is 'root'.

- router(config-satellite)# secret [0 | 5 | LINE] password

11. Setting up the ICL

There are two options for the ICL:

- Static pinning: designate some ports from the satellite to use a dedicated uplink.

- Bundle ICL: provides redundancy when one uplink fails.

Static pinning

- router(config)# interface TenGigabitEthernet 0/1/0/0

- router(config-if)# nv satellite-fabric-link satellite 100

- router(config-satellite-fabric-link)# remote-ports GigabitEthernet 0/0/0-5

Bundle ICL

All interfaces mapped to an ICL bundle:

Put the ASR9000 TenGigE interface into the bundle with mode on (no LACP support):

- router(config)# interface TenGigabitEthernet 0/2/0/1

- router(config-if)# bundle id 10 mode on

Define the bundle ethernet on the ASR9000 host, and designate which ports will be mapped to the bundle:

- router(config)# interface Bundle-Ethernet 10

- router(config-if)# nv satellite-fabric-link satellite 200

- router(config-satellite-fabric-link)# remote-ports GigabitEthernet all

12. Note

Config sequence restrictions

Because of the order and batching in which configuration is applied in XR, there are some things you need to know when negating certain configuration in combination with adding other configuration.

Examples of this are:

In such cases, failures are expected; generally speaking, the failures are deterministic and workarounds are available (re-apply the configuration in two commit batches).

The recommendation to users is to commit ICL configuration changes separately from Satellite-Ether configuration changes.

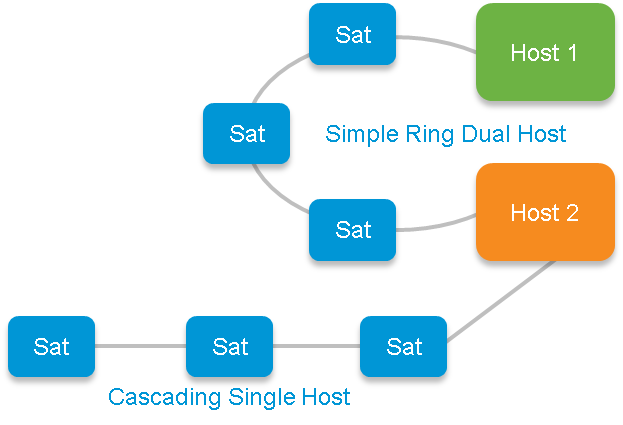

Advanced Satellite Topologies

Starting in 5.1.1, many new features were added that expand upon the basic single-host hub-and-spoke model. These features require more configuration than the basic satellite setup and are discussed below.

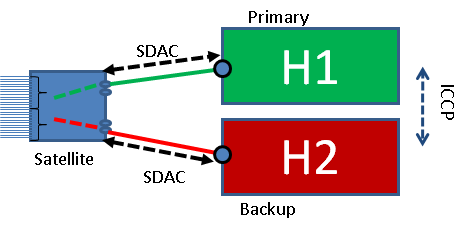

13. Dual Hosts

Starting in 5.1.1, a satellite (hub-and-spoke) or a ring of satellites (simple ring) can be dual-homed to two hosts. (An nV Edge cluster acts as one logical router.)

With this configuration one ASR9K host is the active and the other is standby. Data and control traffic from the satellite will flow to the active host, but both hosts will send and receive management traffic via the SDAC protocol. This is used to determine connectivity, detect failures, sync the configuration, etc.

The two hosts communicate management information via ICCP with a modified version of SDAC called ORBIT.

Supported topologies:

- Hub-and-spoke dual hosts
  - 9000v with 10G ICL or bundle ICL
  - 901 with 1G ICL
  - 9000v (10G) or 901 (1G) using L2 fabric sub-interfaces
  - Satellites may be partitioned

- Simple ring dual hosts
  - 9000v with 10G ICL
  - 901 with 1G ICL
  - Satellites may not be partitioned

Note: Partitioning is when you carve out certain access ports to be used by certain ICL interfaces.

Current limitations:

- Must be two single chassis, no clusters

- Load balancing is active/standby per satellite; per-access-port load balancing is planned

- No user configuration sync between hosts

Configuration Differences:

The most notable changes when coming from a simple hub-and-spoke design are ICCP and adding the satellite serial number.

Example

Router 1

```
redundancy
 iccp
  group 1
   member
    neighbor 172.18.0.2
   !
   nv satellite
    system-mac <mac> (optional)
   !
  !
 !
!
nv
 satellite 100
  type asr9000v
  ipv4 address 10.0.0.100
  redundancy
   host-priority <priority> (optional)
  !
  serial-number <satellite serial>
 !
!
vrf nv_mgmt
!
interface Loopback10
 vrf nv_mgmt
 ipv4 address 10.0.0.1 255.255.255.255
!
interface Loopback1000
 ipv4 address 172.18.0.1 255.255.255.255
!
interface GigabitEthernet0/1/0/4
 ipv4 address 192.168.0.1 255.255.255.0
!
interface TenGigE0/0/0/0
 ipv4 point-to-point
 ipv4 unnumbered Loopback10
 nv
  satellite-fabric-link [network satellite <> | satellite <>]
   redundancy
    iccp-group 1
   !
   remote-ports GigabitEthernet 0/0/0-43
  !
 !
!
mpls ldp
 router-id 172.18.0.1
 discovery targeted-hello accept
 neighbor 172.18.0.2
 !
!
router static
 address-family ipv4 unicast
  172.18.0.2/32 192.168.0.2
 !
!
```

14. Simple Ring Topology

Starting in 5.1.1 we have the ability to support more than just simple hub-and-spoke. The ring topology allows for satellite chaining, cascading, and in general a more advanced satellite network.

Requirements and Limitations:

- Must use 9000v (10G) or 901 (1G) as satellites

- Satellites may not be partitioned

- A satellite must be connected to two hosts

- A host may only be in one dual-home pairing

- Bundle ICLs cannot be used

Configuration:

This is essentially the same as the dual hosts setup, but the network option must be used when entering 'satellite-fabric-link'.

15. Cascading Topology

This is treated as a special ring and works the same way as simple ring.

The biggest difference is that in 5.1.1 cascading supports a single host, while simple ring does not.

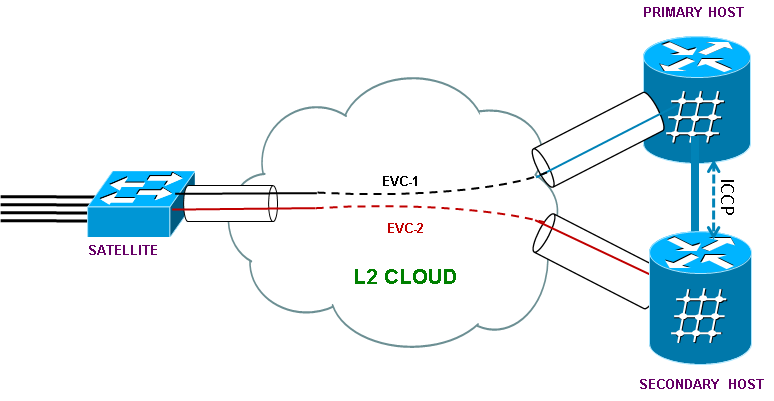

16. L2 Fabric Topology

Starting in 5.1.1, the ICL can be extended across an EVC. Normally an ICL is an L1 connection. Extending it over L2 increases the flexibility of satellite by allowing greater distances between the ASR9K host and the satellite device.

Requirements and limitations:

- Manual and auto-IP mode supported

- Single home and dual home are supported

- Encapsulation type can be dot1q or dot1ad

- Host EVC is provisioned as a sub-interface

- Different VLANs should be used as a satellite cannot support multiple layer 2 fabric connections with the same VLAN on the same ICL

- The same L2 encapsulation must be used by both hosts

- Usable VLAN range is 2 to 4093 for 9000v (4094 for 901)

- Bundle ICLs are not supported

- P2MP L2 cloud is not supported

- When a bundle is configured on the access ports 'bundle wait timer 0' should be configured to improve convergence times

- Service state synchronization is not supported for the following: ANCP, IGMP, ARP, DHCP

- Ethernet CFM is required
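Two of the VLAN rules above (usable range per satellite type, and no duplicate VLAN among L2 fabric connections on one ICL) lend themselves to a pre-check before committing configuration. This is a minimal sketch, assuming you already have the list of fabric VLANs planned for one satellite ICL; the function and type keys are hypothetical.

```python
from collections import Counter
from typing import List, Sequence

# Usable L2 fabric VLAN ranges from the text (inclusive).
USABLE_VLANS = {"asr9000v": (2, 4093), "asr901": (2, 4094)}


def l2_fabric_vlan_problems(satellite_type: str,
                            vlans_on_icl: Sequence[int]) -> List[str]:
    """Return human-readable problems with the fabric VLANs on one satellite ICL."""
    low, high = USABLE_VLANS[satellite_type]
    problems = []
    for vlan in vlans_on_icl:
        if not low <= vlan <= high:
            problems.append(f"VLAN {vlan} is outside {low}-{high} for {satellite_type}")
    for vlan, count in sorted(Counter(vlans_on_icl).items()):
        if count > 1:
            problems.append(f"VLAN {vlan} is used by {count} L2 fabric connections on one ICL")
    return problems


print(l2_fabric_vlan_problems("asr9000v", [200, 4094, 200]))
```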

Configuration:

On Active-Host:

```
interface TenGigE0/1/0/23.200
 encapsulation dot1q 200
 nv
  satellite-fabric-link satellite 200
   redundancy
    iccp-group 1
   !
   remote-ports GigabitEthernet 0/0/0-43
  !
 !
!
```

On Standby-Host:

```
interface TenGigE0/1/1/0.200
 encapsulation dot1q 220
 nv
  satellite-fabric-link satellite 200
   redundancy
    iccp-group 1
   !
   remote-ports GigabitEthernet 0/0/0-43
  !
 !
!
```

Note: L2 cloud configuration not shown

System monitoring and troubleshooting

17. Basic status check

18. Upgrade and Software

'show nv satellite status'

- State: One of the following:

- No configured links: No satellite-fabric-links have been configured for this satellite

- Discovering: The SDACP discovery protocol is running on at least one satellite-fabric-link, but discovery has not completed

- Authenticating: The TCP session to the satellite has been established, and the control protocol is authenticating the satellite

- Authentication Failed: The satellite provided incorrect authentication data

- Checking Version: The version of the software running on the satellite is being checked for compatibility with the running version of IOS-XR on the host

- Incorrect version: The version of the software running on the satellite is incompatible with the host

- Connected: The control protocol sessions to the satellite have been fully established.

- Install State: shown after State, in brackets. One of the following:

- Stable: The satellite has the correct image loaded and it is running

- Transferring: A new image is being transferred to the satellite

- Transferred: A new image has been successfully transferred to the satellite, but not yet installed

- Installing: A new image is being installed on the satellite

- Unknown: The install state of the satellite cannot be determined at this time

- Type: The configured type of the satellite.

- Description: The configured description for the satellite

- MAC Address: The chassis MAC of any discovered satellite

- IPv4 Address: The configured IPv4 address of the satellite

- Received Serial Number: (if the control protocol has reached the authentication state) the serial number the satellite has presented

- Configured Serial Number: (if configured) the serial number configured for the satellite, checked against the one presented by the satellite during control protocol authentication

- Configured Satellite Links: One entry for each of the configured satellite-fabric-links, headed by the interface name. The following information is present for each configured link:

- State: One of:

- Verifying: The configuration of this interface has not yet been accepted

- Inactive: This configured link is currently inactive

- Discovering: The discovery protocol is running over at least one link

- Running discovery protocol: This is a physical satellite-fabric-link, and the discovery protocol is running

- Configuring satellite: This is a physical satellite-fabric-link, and a satellite has been discovered and is currently being configured

- Ready: This is a physical satellite-fabric-link, and the discovery protocol has completed

- Ports: The configured remote ports on this configured link

- Discovered Satellite Fabric Links: This section is only present for redundant satellite-fabric-links. It lists the interfaces that are members of the configured link, and the per-link discovery state.

- Running discovery protocol: The discovery protocol is running

- Configuring satellite: A satellite has been discovered and is currently being configured

- Ready: The discovery protocol has completed

- Conflict: If the configured link is not conflicted, the satellite discovered over the link is presenting data that contradicts that found over a different satellite-fabric-link.


19. Discovery protocol status

'show nv satellite protocol discovery'

- Satellite ID: The satellite ID configured on this satellite-fabric-link

- Status: The current discovery protocol status of the satellite. One of:

- Discovering: Probes are being sent; no satellite yet discovered

- Conflicting: This link conflicts in some way with another link. Detailed reasons are:

- reason unknown: This configured link is in an unknown conflict state

- remote ports overlap: The remote ports configuration on this satellite-fabric-link conflicts with that on another satellite-fabric-link

- satellite not configured: The satellite this satellite-fabric-link is to be attached to isn't fully configured

- Invalid ports: The remote-ports configuration on this satellite-fabric-link is invalid

- multiple IDs: This discovered link is connected to a different satellite to another discovered link

- multiple satellites: Different satellites are connected to member discovered links

- different VRF: The configured satellite-fabric-links are not all in the same VRF

- different IP: The configured satellite-fabric-links do not all have the same IPv4 address

- waiting for VRF: The VRF information has not yet been notified to the GCO. Probably the link is missing IPv4 address configuration.

- Stopped: The discovery protocol is stopped on this satellite-fabric-link. Most likely the link is down.

- Running discovery protocol: The discovery protocol is running, but no satellite has responded.

- Configuring satellite: A satellite has been discovered, and is being configured with an IPv4 address

- Ready: The discovery protocol has completed

- Remote Ports: The configured remote ports on this satellite-fabric-link

- Host IPv4 Address: The IPv4 address used by the host to communicate with this satellite. It should match the IPv4 address on all the satellite-fabric-links

- Satellite IPv4 Address: The IPv4 address configured for the satellite

For Bundle-Ether satellite-fabric-links, there are then 0 or more 'Discovered links' entries; for physical satellite-fabric-links, the same fields are present but just inline.

- Vendor: The vendor string presented by the satellite in its ident packets

- Remote ID: The satellite's ID for the interface that is connected to this satellite-fabric-link

- Remote MAC address: The MAC address for the satellite interface connected to this satellite-fabric-link

- Chassis MAC address: The chassis MAC address presented by the satellite in its ident packets


21. Control protocol status

'show nv satellite protocol control'

- IP Address: The configured IP address for the satellite. This is the address the TCP session will be connected to

- Status: SDACP Control Protocol status. One of the following:

- Connecting: The TCP session has yet to be established

- Authenticating: The TCP session has been established, and the control protocol is checking the authentication information provided by the satellite

- Checking version: The satellite has been successfully authenticated, and the host is checking that it is running a compatible version of software

- Connected: The SDACP control protocol session to the satellite has been successfully brought up, and the feature channels can now be opened.

For each channel, the following fields are present:

- Channel Status: One of:

- Closed: The satellite has sent a close message on this channel, or the control protocol is not up

- Opening: An open message has been sent to the satellite to open this channel

- Opened: An opened response has been received from the satellite

- Open: The feature channel is open

- Open(In Resync - Awaiting Client Resync End): The Feature Channel Owner (FCO) on the host has not finished sending data to the FCO on the satellite. In this state, triage should typically continue on the host, and the owner of the feature channel should be contacted.

- Open(In Resync - Awaiting Satellite Resync End): The FCO on the host is awaiting information from the FCO on the satellite. In this state, triage should typically continue on the satellite.

- Object Messages Sent: The number of object messages sent to the satellite on this channel

- Non-Object Messages Sent: The number of non-object messages sent to the satellite on this channel

- Object Messages Received: The number of object messages received from the satellite on this channel

- Non-Object Messages Received: The number of non-object messages received from the satellite on this channel

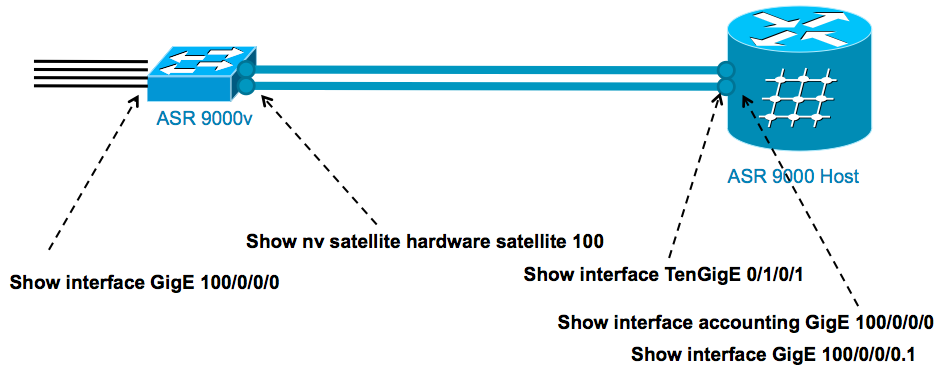

22. Satellite inventory

Dataplane counters and forwarding verification

Notes:

- TX and RX packet drops at the ASR9K are also added into these counters

- Packet drops at the satellite ICL ports are NOT included in “show interface GigabitEthernet”

- Packet drops at the satellite ICL ports are shown under the debug CLI (“show nv satellite hardware …”)

23. Common Issues

- 'SAT9K_IMG_DOWNLOADER-3-TFTP_READ_FAIL: FTP download failure for 4502A1__.FPG with error code:-3'

- Check the MPP configuration

- icpe_gco[1148]: %PKT_INFRA-ICPE_GCO-6-TRANSFER_DONE : Image transfer completed on Satellite 101

- This message indicates that the new image was transferred to the satellite. It is what we expect to see, but sometimes it does not appear.

- A few issues can cause this:

- 1. MPP

- 2. Using the progress keyword. Do not use the progress keyword

- 3. TFTP homedir set with the default VRF, 'tftp vrf default ipv4 server homedir disk0:', (manual IP, not auto-IP) with the directory on disk0:. The TFTP homedir should be removed.

- 4. The transfer may fail on the 901 due to space limitations. Delete non-nV images from the 901 flash and execute 'squeeze flash:' to free space

- Note: Image transfer should take around 5 minutes

Conflict Messages

Examples:

- Conflict, multiple IDs

- Check whether you have connected two interfaces to the same satellite box but given them different satellite IDs

- Conflict, multiple satellites

- Check whether you have connected two interfaces to two different satellite boxes but given them the same satellite ID

- Conflict, different VRF

- Check your VRF config - all satellite-fabric-link interfaces to a particular satellite must be in the same VRF

- Conflict, waiting for VRF

- Check all IP config is present, or if any VRF config is present, check *all* the VRF config is present

- Conflict, different IP

- Check whether you have configured two satellite-fabric-links with different IP addresses (you need to unnumber them to the same loopback interface).

- Conflict, remote ports overlap

- Check whether you have configured overlapping remote port ranges on two or more satellite-fabric-links to the same satellite

- Conflict, satellite not configured

- You need to enter the global satellite configuration

- Conflict, invalid ports

- The specified port range is invalid

- Conflict, satellite has no type configured

- You need to configure 'type asr9000v' in the global nv satellite configuration

- Conflict, satellite has no ipv4 address

- You need to configure an IP address on the satellite

- Conflict, satellite has a conflicting IPv4 address configured

- The IPv4 address configured on the satellite conflicts with another IP address in the system
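The "remote ports overlap" and "invalid ports" conflicts can be caught offline by expanding each link's remote-ports range and intersecting them, as sketched below. This assumes all links in the dictionary go to the same satellite and only handles the simple `GigabitEthernet 0/0/<start>-<end>` form used in this document (not `all` or comma lists); the helpers and interface names are hypothetical, not part of IOS-XR.

```python
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple

# Matches "GigabitEthernet 0/0/0-5" or "GigabitEthernet 0/0/7" (assumed subset).
REMOTE_PORTS_RE = re.compile(r"^GigabitEthernet\s*0/0/(\d+)(?:-(\d+))?$")


def expand_remote_ports(spec: str) -> Set[int]:
    """Turn a remote-ports spec into the set of satellite port numbers."""
    match = REMOTE_PORTS_RE.match(spec.strip())
    if match is None:
        raise ValueError(f"unsupported remote-ports spec: {spec!r}")
    start = int(match.group(1))
    end = int(match.group(2)) if match.group(2) is not None else start
    if end < start:
        # Comparable to "Conflict, invalid ports".
        raise ValueError(f"invalid port range: {spec!r}")
    return set(range(start, end + 1))


def remote_port_overlaps(links: Dict[str, str]) -> List[Tuple[str, str, List[int]]]:
    """Find pairs of fabric links (to one satellite) whose remote ports overlap."""
    expanded = {name: expand_remote_ports(spec) for name, spec in links.items()}
    overlaps = []
    for a, b in combinations(sorted(expanded), 2):
        common = expanded[a] & expanded[b]
        if common:
            overlaps.append((a, b, sorted(common)))
    return overlaps


links = {
    "TenGigE0/1/0/0": "GigabitEthernet 0/0/0-5",
    "TenGigE0/1/0/1": "GigabitEthernet 0/0/5-11",
}
print(remote_port_overlaps(links))  # [('TenGigE0/1/0/0', 'TenGigE0/1/0/1', [5])]
```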

Access/ICL Bundles and stipulations

BNG access over satellite is only qualified over bundle access and isn’t supported over bundle ICLs.

BNG access over ASR9k host and NCS5k satellite specifically is in the process of official qualification in 6.1.x. Please check with PM for exact qualified release.

Access bundles across satellites in an nV dual-head solution are generally not recommended. The point is not to bundle services across satellites in a dual-head system: if the satellites align to different hosts, the solution breaks without an explicit redundant path. An MC-LAG over satellite access is a better solution there.

Bundle access over bundle fabric/ICL requires 5.3.2 and above on the ASR9k. For the NCS5k satellite, bundle ICL, including bundle over bundle, is supported from 6.0.1, and nV dual-head topologies are planned to be supported only from 6.1.1.

MC-LAG over satellite access might be more convergence friendly and feature rich than nV dual head for BNG access from previous case studies. For non BNG access, nV dual head and MC-LAG are both possible options with any combinations of physical or bundle access and fabric.

In an MC-LAG with satellite access, the topology is just a regular MC-LAG system with the hosts syncing over ICCP but with satellite access as well. Note that the individual satellites aren’t dual homed/hosted here so there is no dual-host feature to sync over ICCP beyond just MC-LAG from CE.

As a deployment recommendation, unless ICL links (between satellite and host) are more prone to failure than access links, MC-LAG might be preferable over the nV dual-head solution. However, if ICL links have a higher failure probability, and links going down can reduce bandwidth in bundle ICL cases, then MC-LAG may not switch over unless the whole link goes down or the access goes down.

Related Information

Xander Thuijs CCIE#6775

Principal Engineer, ASR9000

Sam Milstead

Customer Support Engineer, IOS XR


Yeah we need to work on that documentation, but in the interim, let me try and answer those questions.

Because this is ICCP based, it functions the same way as MCLAG.

```
interface TenGigabitEthernet 0/1/0/2
 nv
  satellite-fabric-link network
   satellite 100
    remote-ports GigabitEthernet 0/0/0-43
   !
   satellite 200
    remote-ports GigabitEthernet 0/0/0-43
   !
```

regards

xander


Hi, Xander:

On the note you wrote (HW/SW requirements):

- LC may be -TR or -SE. If -TR, each satellite access GigE port gets 8 TM queues

Can you explain this a little further, please? Will I be able to offer 8 queues to a port-based customer when using a TR host card?

What does TM queue mean?

If time allows, can you please offer any advice or considerations we should take into account when provisioning customers on a 9000v connected to a TR host card? Obviously the cost difference between TR and SE is considerable. Any chance we get an all-copper LC for the 9000?

:D

Thanks,

c.

I saw that exact line in a presentation on nV. TR is supposed to give 8 queues per 9000V access port. I started testing that yesterday. I currently have different port shaper speeds on 17 different ports, and it seems OK. I tested a few of the ports and they seem to be working. I'm going to try child policies next, and it looks like they apply to the interfaces. I still need to push some real traffic across, though. This is 5.1.1 on the 9000Vs.
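Below is a minimal sketch of the kind of two-level policy being tested: a parent port shaper with a child queuing policy, applied outbound on a satellite access port. The policy names, class name, rates and GigabitEthernet100/0/0/1 (satellite 100, port 1) are hypothetical. Whether a particular child policy fits within the 8 queues per port on a -TR host is an assumption to verify on your release.

```
! Hypothetical child queuing policy
class-map match-any VOICE
 match dscp ef
!
policy-map CHILD
 class VOICE
  priority level 1
  police rate percent 10
  !
 !
 class class-default
 !
!
! Parent policy: per-port shaper that carries the child policy
policy-map PORT-SHAPER
 class class-default
  shape average 50 mbps
  service-policy CHILD
 !
!
interface GigabitEthernet100/0/0/1
 service-policy output PORT-SHAPER
!
```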

Hi Xander,

I am looking at the ICL LAG picture, specifically A2 (LAG between two 9000Vs), and I am trying that now on a set of 9000Vs. One 9000V has a bundle ICL and the other has a single non-bundled ICL. I am not able to bundle ports across the two 9000Vs as the diagram suggests. Is there a restriction I am missing? I can bundle both ports on the non-bundled 9000V.

We still have the restriction that we cannot do bundle-on-bundle (ICL and access bundles) at the same time. This and other exceptions are listed in section 6.

You can still partition the satellite so that ports 0-20 are associated with a bundle ICL and ports 21-40 are associated with a non-bundle ICL. If you do this, you cannot have an access bundle on ports 0-20, but you can have one on ports 21-40.
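The partitioning described above could look roughly like this. The sketch assumes satellite 100 and Loopback100 are already configured, and that the members of Bundle-Ether100 use `bundle id 100 mode on` (not shown). Interface names and bundle IDs are hypothetical.

```
! Ports 0-20 ride the bundle ICL: no access bundles on these ports
interface Bundle-Ether100
 ipv4 point-to-point
 ipv4 unnumbered Loopback100
 nv
  satellite-fabric-link satellite 100
   remote-ports GigabitEthernet 0/0/0-20
  !
 !
!
! Ports 21-40 ride a single physical ICL: access bundles allowed here
interface TenGigE0/1/0/2
 ipv4 point-to-point
 ipv4 unnumbered Loopback100
 nv
  satellite-fabric-link satellite 100
   remote-ports GigabitEthernet 0/0/21-40
  !
 !
!
interface GigabitEthernet100/0/0/21
 bundle id 30 mode active
!
interface GigabitEthernet100/0/0/22
 bundle id 30 mode active
!
```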

Thanks,

Sam

So the A2 configuration in the picture above is not possible yet?

Correct.

A2 shows a physical ICL to satellite 1 and only a bundle ICL to satellite 2. LAG on LAG is not yet supported.

Thanks,

Sam

Hello,

Since 5.1.1 has "no user configuration sync between hosts" in the dual host topology, when can we expect that feature?

Kind regards,

Ivan

Hi Ivan,

This was at one time targeted for 5.3.1 but it is now off the roadmap due to other more pressing features. Your account team can work with marketing to tell you what is coming and prioritize what features you and the rest of the satellite community need/want to see.

Thanks,

Sam

Hello

We are considering evaluating the satellite nV units in our network (we already use the ASR9000). Can you help me better understand the dual homed topology for the satellites?

The scenario we are interested in resembles the dual-host one: a satellite connected to two ASR9000 hosts for redundancy. From what I understand, the two ASR9000s must be configured as a cluster (nV Edge, single control plane) for this to work?

Is there any simple dual-home setup for satellite units that does not involve clustering? More specifically, is a common control plane for the two ASR9ks a requirement for the dual homed setup? For example, could the satellite connect directly to both hosts but have only one uplink in the active state?

Thanks Victor

Hi Victor,

You can go about that in two ways. You can dual home the satellite to each rack member of the cluster, which is active/active, with all fabric links used. Or you can dual home it to two separate devices that are not part of a cluster, which gives you active/standby operation, handled via ICCP.

So a common control plane is not necessary for dual homing, but it will affect the load-balancing operation on the fabric uplinks (ICL).

cheers

xander

Thanks for the prompt reply, Xander.

Now that I have taken a closer look at your original post, I see you had already mentioned this in paragraph 13, Current Limitations: "Must be two single chassis, no clusters".

One last clarification for the scenario with two separate devices. Is the ICCP link used just to synchronize management information? Or is it also used to synchronize state for a limited set of control protocols (e.g. ARP entries, MAC addresses received through the satellite unit, PPPoE session state)? I realize the last one especially is a long shot.

Thanks again Victor

No problem, Victor. A single satellite can be dual homed to the racks of a cluster (fully stateful) or to two separate nodes. In the latter case the two nodes need to be linked via ICCP, which is only used for management. PPPoE state is not synced, so if there is a fabric link failover, those sessions need to restart.

The good news is that we are getting a feature called geo redundancy, which leverages ICCP (and then some) to sync session state between the two separate nodes. This is XR 5.2 functionality.

regards

xander

Thank you, Karwan, and thanks also for the detailed description, which makes it easier to assess the situation!

We've had some satellite operational issues in 5.1.1 that have been addressed in 5.1.2. Based on the information you have provided, I primarily suspect CSCuo11506.

XR 5.1.2 is out today, and 5.1.3 is coming in August. If it is easy for you to upgrade, and this issue is a big one for you, then 5.1.2 is the best play right now. Consider 5.1.3 as well at some point.

regards

xander

Hi, ouch, I didn't expect that! Can you share the config of the loop101 you are using? I want to make sure the satellite address is in the same subnet and that the satellite is pingable. It likely is, because the control channel says stable.

Also check whether the commit goes through if you configure only 5 or 10 ports on this fabric link.
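For comparison, below is a minimal single-ICL setup. The satellite address sits in the loopback's subnet and the fabric link carries only 10 ports. The addresses, satellite ID and TenGigE0/0/0/0 are hypothetical placeholders. With this in place, the satellite address should answer a `ping` from the host.

```
! Loopback whose subnet contains the satellite address (hypothetical)
interface Loopback101
 ipv4 address 10.101.0.1 255.255.255.0
!
nv
 satellite 101
  type asr9000v
  ipv4 address 10.101.0.101
 !
!
! Fabric link limited to 10 remote ports for testing
interface TenGigE0/0/0/0
 ipv4 point-to-point
 ipv4 unnumbered Loopback101
 nv
  satellite-fabric-link satellite 101
   remote-ports GigabitEthernet 0/0/0-9
  !
 !
!
```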

If there's still no dice, then I think we need to investigate this more deeply. A TAC case may be best for that, as we will likely need to pull details such as debugs and show techs.

regards!

xander
