Deploying Servers in a SD-Access Fabric

09-19-2020 01:05 PM

I have a customer in the education sector who wishes to deploy SD-Access, essentially for use in a server environment, although there will also be user endpoints such as desktops, laptops, and printers, including wireless clients.

I am concerned about putting servers in the SD-Access fabric in this way. In fact, I would prefer Cisco ACI, but this cannot be justified due to the relatively small number of servers. I am aware that a feature was introduced in DNAC (v1.2.5, I think) that allows a port on an edge switch to be designated as a server port during the host onboarding process. I have a number of questions, listed below, in case you have come across this solution design problem:

(a) - Is this feasible or just a bad idea?

(b) - What exactly does the "Server Port" designation do for servers connected to a fabric edge switch? Can you share any detailed information on this feature?

(c) - What authentication mechanism would you advise for ports that are used for servers in this way? The consideration here is to ensure that the servers are deployed in a manner that ensures 100% uptime and avoids the risk of momentary disconnections due to periodic re-authentication, etc.

(d) - The servers that will be migrated to the fabric have to retain their IP addresses and be moved in a phased manner, i.e. there will be a period where some servers have been moved whilst others are still in their legacy environment along with their legacy default gateway. Is it possible for servers to move to the fabric whilst retaining the legacy default gateway for a transition period?

Thank you

09-20-2020 07:24 AM

The 'Server' option in the SD-Access workflow configures the port as 'trunk + IP device tracking'. IP device tracking limits the number of endpoints (IP addresses) learned on the port to 100. No authentication is configured on server ports.

!

interface GigabitEthernet1/0/23

switchport mode trunk

device-tracking attach-policy IPDT_TRUNK_POLICY

access-session inherit disable interface-template-sticky

access-session inherit disable autoconf

no macro auto processing

!

device-tracking policy IPDT_TRUNK_POLICY

limit address-count 100

no protocol udp

tracking enable

!
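
If you want to check what limit actually ended up on a given port, the configuration above can be inspected with a few lines of Python. This is only a rough sketch, not a Cisco tool: the function name `trunk_policy_limits` and the `SAMPLE_CONFIG` string are made up for illustration, and it assumes the running-config text has the same shape as the snippet in this post (an `interface` section with `device-tracking attach-policy`, and a `device-tracking policy` section with `limit address-count`).

```python
import re

# Sample text copied from the configuration shown in this post.
SAMPLE_CONFIG = """
!
interface GigabitEthernet1/0/23
 switchport mode trunk
 device-tracking attach-policy IPDT_TRUNK_POLICY
 access-session inherit disable interface-template-sticky
 access-session inherit disable autoconf
 no macro auto processing
!
device-tracking policy IPDT_TRUNK_POLICY
 limit address-count 100
 no protocol udp
 tracking enable
!
"""


def trunk_policy_limits(config_text):
    """Map each interface to the address-count limit of its attached
    device-tracking policy (None if the policy sets no limit)."""
    policy_limits = {}   # policy name -> limit (int) or None
    iface_policy = {}    # interface name -> attached policy name
    kind, name = None, None  # section currently being read

    for raw in config_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line == "!":
            # "!" separates sections in the config text.
            kind, name = None, None
            continue
        m = re.match(r"interface (\S+)$", line)
        if m:
            kind, name = "interface", m.group(1)
            continue
        m = re.match(r"device-tracking policy (\S+)$", line)
        if m:
            kind, name = "policy", m.group(1)
            policy_limits.setdefault(name, None)
            continue
        m = re.match(r"device-tracking attach-policy (\S+)$", line)
        if m and kind == "interface":
            iface_policy[name] = m.group(1)
            continue
        m = re.match(r"limit address-count (\d+)$", line)
        if m and kind == "policy":
            policy_limits[name] = int(m.group(1))

    return {iface: policy_limits.get(pol) for iface, pol in iface_policy.items()}


print(trunk_policy_limits(SAMPLE_CONFIG))
```

A value of `None` for an interface would mean the attached policy carries no `limit address-count` line in the text that was parsed.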

Technically you can maintain a legacy default gateway (not recommended), but this will result in inefficient packet flow. In this scenario, the server is part of the underlay infrastructure, so traffic from clients in the SD-Access fabric has to traverse the border and the BGP peer device (fusion device) and then be routed in the underlay to reach the server. The legacy configuration also has to be configured and managed manually.

The recommendation would be to move the server to the SD-Access network for complete automation and optimized routing.

Hope this helps!

09-20-2020 09:39 AM - edited 09-20-2020 09:39 AM

Hello Pdavanag

Thanks so much for the detailed reply. I still have a few queries that would help towards providing sound solution design guidance to my customer if you don't mind.

- If authentication is disabled for servers, as you point out, would it be correct to say that dynamically assigned SGTs are no longer possible, meaning that one of the key features of SD-A, micro-segmentation, will not be as smooth? I'm aware that static IP-to-SGT mappings can be configured manually, but I think this would be sub-optimal from an operations perspective: it involves manual configuration, which is prone to human error and which SD-A sets out to avoid.

- For the default gateway option (not recommended) that you described, would this rely on the Layer 2 handoff feature, in which case only one of the borders can be used, making it less resilient?

- Are there configurations at the fabric edge node to support a port-channel from the fabric edge to a server with dual NICs?

- If the "Server" option configures the port as a trunk, as you point out, can the server be a virtualization host such as VMware ESXi with multiple virtual machines in different subnets/VLANs?

Essentially, I'm trying to get a sense of whether it is recommended, or whether there are any advantages, to use SD-A fabric edge nodes to host servers in this way. Wouldn't it be better to keep servers outside the fabric entirely, using the fusion layer (in this case a pair of Catalyst 9500s) to double as a traditional collapsed core/access layer and leaving the fabric for users only? That is, servers would connect directly to the fusion layer, and if greater port capacity is required, one or more Catalyst 9300 access switches could be dual-connected to the fusion layer. As per my original post, I would prefer Cisco ACI for servers but cannot justify it in this instance.
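
To illustrate the operational concern about static IP-to-SGT mappings raised above, here is a small Python sketch that renders such mappings from an inventory instead of typing them by hand. It is an assumption-laden example, not something taken from DNAC: the helper name `sgt_map_commands`, the VRF name, and the addresses are all hypothetical, and the emitted `cts role-based sgt-map ... sgt ...` lines follow the general IOS-XE TrustSec CLI form, which should be verified against the software release in use before anything is pushed to a switch.

```python
import ipaddress


def sgt_map_commands(mappings, vrf=None):
    """Render {ipv4_address: sgt} as static IP-to-SGT mapping CLI lines.

    Raises ValueError for an invalid IPv4 address or an SGT that does not
    fit in the 16-bit tag field.
    """
    parsed = []
    for address, sgt in mappings.items():
        ip = ipaddress.IPv4Address(address)  # raises ValueError if malformed
        if not 0 < int(sgt) < 65536:
            raise ValueError(f"SGT out of range for {address}: {sgt}")
        parsed.append((ip, int(sgt)))

    prefix = "cts role-based sgt-map"
    if vrf:
        prefix += f" vrf {vrf}"

    # Sort numerically by address so the output is stable and easy to diff.
    return [f"{prefix} {ip} sgt {sgt}" for ip, sgt in sorted(parsed)]


# Hypothetical inventory: server address -> SGT value.
servers = {"10.10.20.11": 20, "10.10.20.5": 30}
for line in sgt_map_commands(servers, vrf="SERVERS"):
    print(line)
```

Generating the lines from one source of truth does not remove the manual step entirely, but it narrows where a typing error can creep in.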

09-20-2020 04:54 PM (Accepted Solution)

1. If you can onboard the subnets that the servers will use in Cisco DNA Center, you can leverage the full SD-Access automation (VLAN configuration, anycast gateway, etc.). On the host onboarding page, you can assign an SGT to the subnet, which gives you all the segmentation benefits (macro and micro) that the solution provides. In this case, you are onboarding the server to the SD-Access overlay network.

2. Layer 2 handoff is mostly for migration, where you need the same subnet inside and outside the fabric.

In this case, you manually configure the VLAN and default gateway and take care of end-to-end routing, and use the SDA workflow only to configure the port as a trunk with the 'server' option.

3. Unfortunately, port-channel is not configurable at this time.

4. Yes, this is possible. You can have VMs hosted on the server in different subnets. Remember the IP device tracking limit of 100 addresses that is configured on the port.

It depends entirely on the use case. If you have a significant number of servers, an ACI deployment outside the fabric would be the better approach, using Layer 3 handoff (BGP peering to the peer device) to provide connectivity.

On the other hand, we do have production deployments where servers are connected to Fabric Edge ports via trunk ports with the complete SD-Access benefits (segmentation and policy).
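
For planning VM placement against the 100-address device tracking limit mentioned in point 4, a quick check like the following may help. It is a minimal sketch under stated assumptions: the function name `ports_over_limit` and the plan format (a list of port and IP address pairs) are invented for this example, and the default of 100 is simply the value quoted in this thread, so adjust it to whatever your release actually configures.

```python
from collections import defaultdict


def ports_over_limit(vm_plan, limit=100):
    """Return {port: distinct_address_count} for every port whose planned
    number of distinct IP addresses is greater than the limit."""
    addresses = defaultdict(set)
    for port, ip in vm_plan:
        addresses[port].add(ip)  # duplicates on the same port count once
    return {
        port: len(ips)
        for port, ips in addresses.items()
        if len(ips) > limit
    }


# Hypothetical plan: 101 VM addresses behind one trunk port, 3 behind another.
plan = [("Gi1/0/23", f"10.20.0.{i}") for i in range(1, 102)]
plan += [("Gi1/0/24", f"10.30.0.{i}") for i in range(1, 4)]
print(ports_over_limit(plan))
```

Keep in mind that the host's own addresses (for example a hypervisor management interface) would also be learned on the port, so they belong in the plan too.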

09-20-2020 05:18 PM

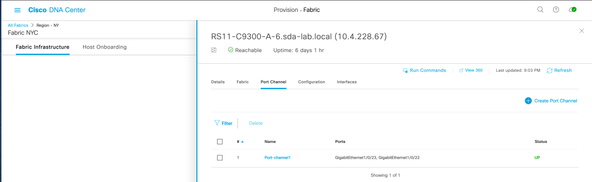

One tiny extra detail: in the DNAC GUI, on a single SDA FE, we can create a port-channel and make that port-channel a server port. This is possible today.

09-20-2020 09:08 PM

Thanks for pointing it out. Yes, we do support port-channel.

09-29-2020 01:12 PM - edited 09-29-2020 01:13 PM

Hi!

Is there any way to have a DHCP and DNS server in the overlay like this and have the clients use it within SDA?

03-23-2023 04:08 AM

Since things change over time...

Could it be that in DNAC 2.3.3.7 and later, the device-tracking policy IPDT_TRUNK_POLICY for "Server" ports was removed, and with it the limit address-count 100?

In our test bed we configured ports as "Trunk" port and "Extended nodes", and no limit address-count was added.

This suggests you could connect a server to a "Trunk" port. The connected servers have some limitations (no servers connected using multi-chassis LACP, etc.).

Any other thoughts or limitations?

Thanks, Paul.

device-tracking policy IPDT_TRUNK_POLICY

limit address-count 100