03-16-2018 08:24 PM - edited 01-22-2021 12:11 PM


This deployment guide provides key details, best practices, and tips and tricks for a smooth upgrade of Cisco Identity Services Engine software.

Authors:

Krishnan Thiruvengadam

Mahesh Nagireddy

- Introduction

- About Cisco Identity Services Engine (ISE)

- About This Guide

- Plan your upgrade

- Upgrade procedure

- Single Step vs Multi-Step Upgrade

- Lifecycle of ISE Releases

- What hardware/software should I upgrade to?

- Do I need a hardware upgrade?

- Do I need to upgrade my VM?

- Key considerations for upgrade

- What is better, Backup/Restore or In-service GUI upgrades?

- Guidelines to minimize upgrade time and maximize efficiency during production upgrade.

- Key Functionalities Added Since ISE 2.x

- Issues, Fixes and Other Considerations

- Pre-Upgrade Steps

- Log retention and sizing MnT hard disks

- Upgrade using Backup/Restore Procedure

- Phase 1: Upgrade PAN and MnT Nodes at New York Campus (Secondary DC) to ISE 2.4 release

- Upgrade to ISE 2.4 with latest patch and create a new ISE 2.4 deployment

- Optional Step: How do you carry over the differential logs to the new deployment?

- Phase 2: Join PSN nodes at New York Campus (Secondary DC) to ISE 2.4 release

- Post-Upgrade Steps

- Phase 3: Upgrade PSN, PAN & MnT Nodes at San Francisco Campus (Primary DC) to ISE 2.4 release

- Post-Upgrade Steps

- Single-Step Upgrade (ISE 2.1 to 2.4) process

- Appendix A - Deployment Fundamentals

- Deployment Fundamentals

- Simple Two Node ISE Deployment

- Basic Distributed Deployment

- Fully Distributed Deployment

- Deployment options

- Appendix B – Approach, Benefits & Drawbacks of Each Upgrade Option

- ISE GUI Upgrade:

- CLI Based Upgrades:

- Appendix C – ISE defects Open and Fixed

- Appendix D - T+/ RADIUS Sessions per ISE Deployment

- Dedicated TACACS+ only deployment:

- Shared deployment (RADIUS + TACACS+):

- Appendix E - ISE VM Sizing and Log retention

- TACACS+ guidance for size of syslogs

- TACACS+ transactions, logs and storage

- TACACS+ log retention (# of days):

- Human Device Admin Model

Introduction

About Cisco Identity Services Engine (ISE)

Cisco Identity Services Engine (ISE) is a market-leading, identity-based network access control and policy enforcement system. It is a common policy engine for controlling endpoint access and network device administration for your enterprise. ISE allows an administrator to centrally control access policies for wired, wireless, and VPN endpoints in the network.

Figure 1: Cisco Identity Services Engine

ISE builds context about endpoints that includes users and groups (who), device type (what), access time (when), access location (where), access type (wired/wireless/VPN) (how), and threats and vulnerabilities. By sharing vital contextual data with technology partner integrations and implementing Cisco TrustSec® policy for software-defined segmentation, Cisco ISE transforms the network from simply a conduit for data into a security enforcer that accelerates the detection and resolution of network threats.

About This Guide

This document provides partners, Cisco field engineers, and TMEs with a guide to designing and migrating from older ISE versions to 2.x. This discussion considers releases that are currently active and not at End of Maintenance. See the ISE End of Life details for further information.

Plan your upgrade

This section covers planning your upgrade and general pre-upgrade tasks. It answers questions about the upgrade path, how to clean up your environment, pre-upgrade best practices, key considerations, and issues to fix before the upgrade, and it provides a checklist of pre-upgrade tasks for a successful upgrade.

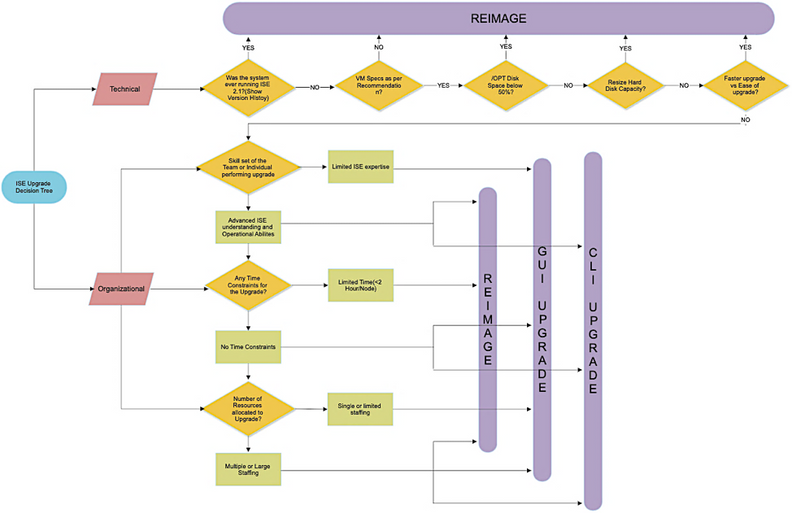

Upgrade procedure

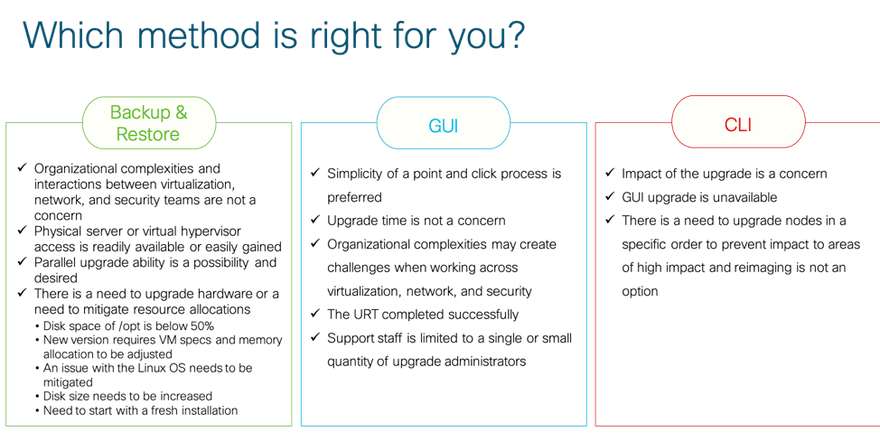

Cisco Identity Services Engine offers multiple upgrade options, depending on downtime tolerance, operational intensity, time to upgrade, and current ISE version. This guide helps you decide which option is best for your ISE upgrade.

There are three options for upgrading a Cisco ISE deployment:

- Backup and Restore / Re-imaging

- ISE GUI Upgrade

- CLI Based Upgrade

This document focuses on the backup and restore/re-imaging method, because it minimizes the overall upgrade time, maintains operational capability, and is less error prone. Refer to Appendix B for the benefits and drawbacks of each supported method.

This guide also covers the operational aspects of the upgrade process and provides a step-by-step procedure for upgrading from one release to another. It also covers the multi-step upgrade required when upgrading from an older ISE release.

Beyond the operational aspects, this document addresses the technical side of the upgrade and the administrative tasks it requires. It assumes that you have enough time in the change window for the upgrade. That said, it points out areas where you can improve efficiency and gives rough time estimates for specific tasks so that you can plan the effort effectively.

This document does not advise on managing change or communicating with users in your (or your customer's) environment, nor does it address user needs and their sensitivity to ISE services. These topics are beyond its scope.

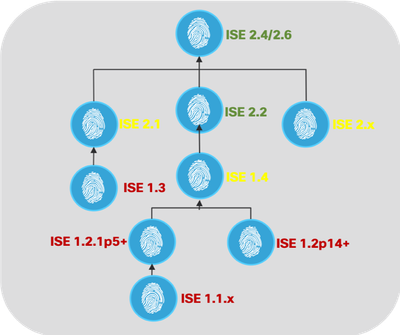

Single Step vs Multi-Step Upgrade

Identity Services Engine provides policy and access services to customers. When this document was last updated, ISE 2.6 was the latest release. The releases after ISE 2.0 are 2.0.1, 2.1, 2.2, 2.3, 2.4, and 2.6; before ISE 2.0 came ISE 1.4, ISE 1.3, and earlier. This document considers ISE 1.3/1.4 and later releases.

Upgrading is a natural part of keeping ISE current. Upgrading a Cisco ISE deployment can be a single-step or multi-step process, depending on the release you start from.

Figure 2: Single-Step vs Multi-Step Upgrade

The figure shows the upgrade paths from earlier ISE releases to the latest recommended version of ISE (ISE 2.4 patch 5+).

Note: ISE 2.4 patch 5+ is the current recommended release. ISE 2.6 is expected to be the next recommended release.

If you are on a release earlier than Cisco ISE 2.0, you must first upgrade to one of the 2.x releases and then upgrade to ISE 2.4 or ISE 2.6, which makes it a two-step upgrade.

Start by identifying the upgrade path from your current release to the target release. In the upgrade path figure, red font indicates ISE releases that are End of Support, and yellow font indicates releases that are End of Sale but still under software maintenance.

Tip: It is recommended to upgrade to the latest patch of the existing release before upgrading to a later release.

Starting with ISE 2.3, Cisco added the Upgrade Readiness Tool (URT) to resolve issues proactively and enable successful upgrades. The URT runs an upgrade simulation on the secondary PAN or on a standalone ISE node, and it supports simulating upgrades from ISE 2.0 and later to whichever release you are upgrading to. It analyzes the configuration database for potential issues and highlights them during the simulation so that administrators can resolve them before the actual upgrade. You run the URT from the CLI of the Cisco ISE node, and it is also supported on earlier ISE releases (2.0, 2.0.1, 2.1, and 2.2).

Lifecycle of ISE Releases

The table below shows the lifecycle of ISE releases for which an End of Life announcement has already been made. Refer to the End of Support and End of Life Statements for complete details.

| Version | End of Sale | End of Maintenance (Sev 1, Vulnerabilities) | End of Support |
|---|---|---|---|
| ISE 1.0 | March 2015 | April 6, 2015 | April 30, 2015 |
| ISE 1.1, 1.1.x | March 2015 | April 6, 2015 | April 30, 2015 |
| ISE 1.2, 1.2.1 | May 31, 2016 | May 31, 2017 | May 31, 2019 |
| ISE 1.3, 1.3.x | December 31, 2016 | December 31, 2017 | - |
| ISE 1.4, 1.4.x | September 30, 2017 | September 30, 2018 | September 30, 2020 |
| ISE 2.1, 2.0.1 | March 17, 2019 | March 17, 2020 | March 17, 2021 |
| ISE 2.1 | March 17, 2019 | September 17, 2019 | March 17, 2020 |
| ISE 2.3 | March 17, 2019 | September 17, 2019 | June 17, 2020 |
| ISE 2.4 | June 26, 2020 | December 26, 2021 | December 26, 2022 |

Starting with ISE 2.4, the release cadence is streamlined, simple to understand, and predictable. Once a release is publicly available on the Cisco software download site, its active life is 24 months (2 years). After those 2 years, the release gets 1 additional year of maintenance, during which Cisco provides fixes for security vulnerabilities and Sev 1 defects. The life of a release from first availability to End of Maintenance is therefore 3 years, followed by 1 additional year of support as entitled by contracts, warranty terms, and so on. For details, see the Cisco Identity Services Engine Software Release Lifecycle Product Bulletin.
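The cadence above is simple date arithmetic. As a sketch, assuming the clock starts on the day a release is first posted for download (the example date is hypothetical), the three milestones can be computed as follows. Published End of Life dates, such as those in the table above, are set by Cisco bulletins and can differ from this calculation.

```python
from datetime import date
import calendar


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the end of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def release_milestones(first_available: date) -> dict:
    """Milestones for one release under the 24 + 12 + 12 month cadence described above."""
    return {
        # 24 months of active life after the release is posted for download
        "active_until": add_months(first_available, 24),
        # +12 months of maintenance (Sev 1 and security-vulnerability fixes)
        "maintenance_until": add_months(first_available, 36),
        # +12 months of support, as entitled by contract and warranty terms
        "support_until": add_months(first_available, 48),
    }


if __name__ == "__main__":
    # Hypothetical first-availability date, for illustration only.
    for name, when in release_milestones(date(2018, 3, 15)).items():
        print(f"{name}: {when.isoformat()}")
```

Clamping the day in `add_months` keeps a release posted on the 31st of a month from producing an invalid date in a shorter month.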

What hardware/software should I upgrade to?

If you are using a legacy appliance, plan to move to the new hardware (35x5 appliances) or to a VM, based on your needs and End of Life considerations. ISE 2.4 added support for large VM appliances (3595) with 256 GB of memory to support monitoring and reporting for large organizations.

ISE 2.6 supports newer hardware (36x5 appliances). The 36x5 series offers three appliances (3615, 3655, and 3695) with more CPU cores and slightly lower CPU clock speed, in different memory and hard disk configurations to suit customer needs.

Do I need a hardware upgrade?

If you are on ISE 2.1 or later and using SNS-35x5 appliances, you can still upgrade your existing 35x5 appliances to ISE 2.4 patch 5+. ISE 2.6 supports both 35x5 and 36x5 appliances. ISE 2.4 is the current recommended release, and ISE 2.4 Patch 9 adds support for 36x5 appliances.

Keep in mind that it makes sense to use the same appliance series (for example, 35x5) on all ISE nodes to avoid support, maintenance, and licensing mismatches later. Use the ISE Performance and Scale community site to determine the scalability needs of the deployment; it helps you choose the right 35x5/36x5 platform.

If you are upgrading both hardware and software, the backup and restore upgrade procedure described in this document applies to you. For installing hardware appliances (35x5/36x5), see the hardware installation guide in the ISE documentation.

Here is a quick summary of the legacy hardware lifecycle:

- SNS-33x5: End of Support and End of Life. ISE 1.4 is the last release that can run on 33x5.

- SNS-34x5: End of Sale (Oct 7, 2016), End of SW Maintenance (Oct 7, 2017) and End of HW Support (Oct 31, 2021). ISE 2.3 is the last release that can run on 34x5.

- SNS-35x5: End of Sale (Mar 15, 2019), End of SW Maintenance (June 15, 2020) and End of HW Support (June 30, 2024)

- Customers using 33x5 appliances must use backup/restore, as must customers using 34x5 appliances who are upgrading to ISE 2.4 or above.

Do I need to upgrade my VM?

VM upgrades are needed from time to time to support the growth of the deployment and to match the performance of the hardware appliances. ISE software keeps pace with chip and appliance capacity, so it is refreshed to support the latest CPU and memory capacity available in UCS hardware. As ISE versions progress, support for older hardware is phased out and newer hardware is introduced. For this reason, and for better performance, it is good practice to upgrade VM capacity. When planning VM upgrades, it is highly recommended to use OVA files to install the ISE software. Each OVA file is a package that contains the files describing a VM and reserves the hardware resources required for the ISE software installation on the appliance.

Warning! ISE VMs need dedicated resources in the VM infrastructure. ISE needs an adequate number of CPU cores, comparable to a hardware appliance, for performance and scale. Resource sharing has been found to cause high CPU, delays in user authentications and registrations, delayed and dropped logs, and poor reporting and dashboard responsiveness. This directly affects the end-user and admin experience in your enterprise. If you use a resource-sharing environment, we highly recommend reserving CPU, memory, and hard disk resources.

If you are upgrading from a VM sized on a 33x5 or 34x5 appliance, the upgraded VM needs more CPU cores (the OVA for the 3515 allocates approximately 6 cores, and the OVA for the 3595 uses 8 cores and 64 GB RAM with hyperthreading enabled). See the OVA requirements for ISE 2.4 for details.

Key considerations for upgrade

What is better, Backup/Restore or In-service GUI upgrades?

Backing up the configuration database on the older ISE version and restoring the backup on a freshly installed newer ISE version is a better option than an in-service upgrade. ISE is built on top of the Red Hat Enterprise Linux (RHEL) OS. ISE 1.3, 1.4, and 2.0 use RHEL 6; later versions of ISE use RHEL 7. In general, ISE upgrades that include an RHEL upgrade take longer per ISE node. Additionally, if the Oracle database version in ISE changes, the new Oracle package is installed during the OS upgrade, which can lengthen the upgrade further.

Note: The Oracle database version changed from 11g in ISE 2.0 and ISE 2.0.1 to 12c in ISE 2.1.

To minimize upgrade time, determine whether the underlying OS is upgraded during the ISE upgrade, and choose the upgrade method accordingly. The table below shows whether an OS upgrade happens for each ISE upgrade path: “Yes” indicates that the underlying OS is upgraded during the ISE upgrade, and “No” indicates that it is not. ISE upgrades that include OS upgrades can also be recognized by the size of the upgrade bundle in Cisco’s software download center.

| Upgrade from / to | ISE 1.3 | ISE 1.4 | ISE 2.0 | ISE 2.0.1 | ISE 2.1 | ISE 2.2 | ISE 2.3 | ISE 2.4 | ISE 2.6 |
|---|---|---|---|---|---|---|---|---|---|
| ISE 1.3 | N/A | No | No | Yes | Yes | N/A | N/A | N/A | N/A |
| ISE 1.4 | N/A | N/A | No | Yes | Yes | Yes | N/A | N/A | N/A |
| ISE 2.0 | N/A | N/A | N/A | N/A | Yes | Yes | Yes | Yes | Yes |
| ISE 2.0.1 | N/A | N/A | N/A | N/A | Yes | Yes | Yes | Yes | Yes |
| ISE 2.1 | N/A | N/A | N/A | N/A | N/A | No | Yes | Yes | Yes |
| ISE 2.2 | N/A | N/A | N/A | N/A | N/A | N/A | Yes | Yes | Yes |
| ISE 2.3 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | Yes | Yes |
| ISE 2.4 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | Yes |
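Finding a path through the table above is a small graph search. The Python sketch below transcribes the table into a dictionary, treating “N/A” cells as “no direct upgrade” (an assumption about what N/A means here), and uses a breadth-first search to find the fewest-hop path, reporting for each hop whether the OS is upgraded. It is a planning aid under those assumptions, not a replacement for the upgrade paths in the official upgrade guide.

```python
from collections import deque
from typing import List, Tuple

# Direct upgrade hops transcribed from the table above:
# True = "Yes" (underlying OS upgraded), False = "No".
# N/A cells are treated as "no direct hop" (an assumption).
OS_UPGRADE = {
    "1.3": {"1.4": False, "2.0": False, "2.0.1": True, "2.1": True},
    "1.4": {"2.0": False, "2.0.1": True, "2.1": True, "2.2": True},
    "2.0": {"2.1": True, "2.2": True, "2.3": True, "2.4": True, "2.6": True},
    "2.0.1": {"2.1": True, "2.2": True, "2.3": True, "2.4": True, "2.6": True},
    "2.1": {"2.2": False, "2.3": True, "2.4": True, "2.6": True},
    "2.2": {"2.3": True, "2.4": True, "2.6": True},
    "2.3": {"2.4": True, "2.6": True},
    "2.4": {"2.6": True},
}

Hop = Tuple[str, str, bool]  # (from_release, to_release, os_upgraded)


def upgrade_plan(src: str, dst: str) -> List[Hop]:
    """Fewest-hop upgrade path from src to dst, found by breadth-first search."""
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        # Visit newer targets first: for a two-hop path this picks the
        # newest intermediate release that can reach dst directly.
        for nxt in reversed(list(OS_UPGRADE.get(node, {}))):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    if dst not in parent:
        raise ValueError(f"no upgrade path from ISE {src} to ISE {dst} in the table")
    hops: List[Hop] = []
    node = dst
    while parent[node] is not None:
        prev = parent[node]
        hops.append((prev, node, OS_UPGRADE[prev][node]))
        node = prev
    hops.reverse()
    return hops


if __name__ == "__main__":
    for frm, to, os_up in upgrade_plan("1.3", "2.4"):
        note = "OS upgraded: consider backup/restore" if os_up else "no OS upgrade"
        print(f"ISE {frm} => ISE {to} ({note})")
```

For ISE 1.3 to ISE 2.4, this proposes ISE 1.3 => ISE 2.1 => ISE 2.4, the same two-step path used in the example later in this document, with an OS upgrade on both hops.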

Guidelines to minimize upgrade time and maximize efficiency during production upgrade.

- Plan your upgrade procedures and test them in a staging environment to avoid common issues. Consider the following recommendations.

- Use the table above as a general reference when choosing between a GUI (in-service) upgrade and backup/restore for a single-step upgrade.

- All nodes in an ISE deployment must be at the same patch level to communicate with each other.

- If you know the number of PSNs in the deployment and have several people helping with the effort, you can install the final ISE version you are upgrading to, apply the latest patch, and keep the nodes ready. You can do this up front, or in parallel batches, based on the number of PSNs and your availability requirements.

- The same applies if you do not want to preserve operational logs in MnT: install the final ISE version, apply patches, and keep the ISE servers ready to join the new deployment as MnT nodes.

- ISE installations are generally faster on a separate appliance or VM with dedicated resources. In a multi-node deployment, these installations can be done in parallel without impacting the production deployment. Once ISE is installed, you can apply the latest patch before joining the node to the deployment. Installing ISE servers in parallel saves time, especially when you are using backup/restore from a previous release.

- Fresh installations are a better option for MnT nodes, whether or not operational logs are to be restored.

- Fresh installations are also a better option for PSNs, to save time. When you add a PSN to the new deployment, it downloads the existing policies from the PAN during the registration process. Use the ISE latency and bandwidth calculator to understand the latency and bandwidth requirements of your ISE deployment.

- MnT upgrades take longer when there are many existing logs. You can bypass this step by re-imaging if you do not need to retain the logs. If retaining these logs is important, take an operational backup of the MnT logs. Here is sample information on the time taken to upgrade.

- It is best to archive the old logs rather than carry them over to the new deployment, for the following reasons:

- Backup/restore of MnT logs takes time. You need to restore the logs on both the primary and secondary MnT of the new deployment to keep them in sync and consistent with each other, which costs more time and effort.

- Operational logs restored on the MnTs are not synchronized to other nodes if you change the MnT roles. To see why this matters, say you start with 2 PAN/MnT nodes in the new deployment as primary and secondary and restore the MnT logs on them. You then expand to 4 nodes, adding 2 nodes to form 2 dedicated PAN nodes and 2 dedicated MnT nodes for a fully distributed deployment.

- If you want to preserve the logs, the 2 nodes you added must take the PAN role, because the first 2 nodes are already MnTs with restored operational logs, and those logs will not synchronize to the newer 2 nodes.

- Switching node roles is a procedural hassle if your new deployment is partly operational. Switching roles requires some downtime, which may impact new user/device registration for guest and BYOD; authentication services are not affected.

- If you restore at the end, you lose the logs generated between the backup and the restore. For a large deployment upgraded in stages as described in this document, that gap might span a few weeks to months.

- If you have two data centers (DCs) with a fully distributed deployment, upgrade the backup DC and test your use cases before upgrading the primary DC.

- Use the URT to simulate the configuration upgrade and identify configuration errors. Remember that the URT does not simulate the upgrade of MnT operational data.

- Purge data that is not needed, including old endpoints and old MnT data (logs). Monitoring logs may be needed for audits or other compliance requirements, so check with your management before deciding, and understand the audit requirements of your country/state and your institution. Use a remote logging target during the upgrade window so that you do not lose logs that an audit may need.

- Take a configuration backup. If the existing MnT logs are important, please take an operational backup as well.

- If you have load balancers in your deployment, it is a good practice to take a backup of the configuration in case you need to change the configuration after upgrade.

- Test out the new deployment with a handful of users for all the use cases to ensure service continuity.
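Because all nodes must be at the same patch level, it is worth checking freshly installed nodes against the PAN before joining them. A minimal Python sketch, assuming you have recorded each node's ISE version and patch number yourself; the hostnames and inventory are hypothetical.

```python
from typing import Dict, Tuple

# (ISE version, patch number), e.g. ("2.4", 5) for ISE 2.4 patch 5
Release = Tuple[str, int]


def nodes_out_of_step(nodes: Dict[str, Release], pan: str) -> Dict[str, Release]:
    """Return every node whose version or patch differs from the primary PAN's."""
    target = nodes[pan]
    return {name: rel for name, rel in nodes.items() if rel != target}


if __name__ == "__main__":
    # Hypothetical inventory of nodes staged for the new deployment.
    staged = {
        "ise-pan-1": ("2.4", 5),
        "ise-mnt-1": ("2.4", 5),
        "ise-psn-1": ("2.4", 5),
        "ise-psn-2": ("2.4", 4),  # still needs the patch before it can join
    }
    for name, (version, patch) in nodes_out_of_step(staged, "ise-pan-1").items():
        print(f"{name}: ISE {version} patch {patch}; patch it to match the PAN before joining")
```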

If you are wondering what ISE 2.x offers, the table below lists the key functionality you gain by upgrading to ISE 2.2 and later. Some of the key functionality customers use has been available since ISE 2.0; however, ISE 2.4 with the latest patch is the recommended upgrade target. See the release notes for release-specific features and details.

Key Functionalities Added Since ISE 2.x

| ISE Version | Release Status | Major features introduced per release (cumulative) |
|---|---|---|
| 2.6 | Active | IPv6 support phase 3; MUD for IoT; PSN light session directory; Syslog over ISE messaging (WAN survivability); USB dongle support; New appliance support |
| 2.4 | Active | RADIUS over IPv6; Posture (end-user posture failure recovery and management); Profiler (650 new profiles, ISE and IND integration); TrustSec enhancements |
| 2.3 | Severity 1 and Security Vulnerability | New Policy UI; DNA Center integration; Social login for Guest; ACS parity complete |
| 2.2 | Active | Secure Access Wizard; RADIUS over IPSec/DTLS; TACACS+ IPv6 support; Hyper-V support; TrustSec DEFCON matrices; Application visibility and hardware inventory; AnyConnect stealth mode |
| 2.1 | Severity 1 and Security Vulnerability | Context Visibility; Easy Connect; Google Chrome BYOD; KVM support; Threat-Centric NAC; SCCM, USB, and anti-malware support; Higher scalability (1.5M endpoints, 100K network devices, dynamic log storage); TrustSec workflow, policy staging, SXP scalability; SAML v2.0; 35xx appliance support |
| 2.0 | Severity 1 and Security Vulnerability | TACACS+ (Device Administration); 3rd-party network device support; New UI; Work Centers |
| 1.4 | End of SW Maintenance | SAML SSO for portals; Multiple certificate templates; Automatic PAN failover |
| 1.3 | EOL | Major Guest enhancements; pxGrid; Internal Certificate Authority; AD multi-forest support |
| 1.2 | EOL | MDM integration; Policy Sets; 2012 AD integration |
| 1.1 | EOL | Guest portals; TrustSec; EAP chaining; CoA; Native Supplicant Provisioning |
| 1.0 | EOL | Install and upgrade; AAA (RADIUS); Profiling |

Choosing the ISE version to upgrade to is the first step in planning a successful upgrade. Base the choice on need, maturity, End of Life considerations, and the fixes needed to support the ISE deployment and its users. It is also a good rule of thumb to stay within two versions of the latest release. The reason is simple: these releases receive more stability fixes, and those fixes may not make it into older releases. Always look for Patch 1 or Patch 2 of the target release when upgrading, since it improves reliability and fixes upgrade issues, if any. The Policy Set view changed in ISE 2.3 and later; see the blog on changes to ISE 2.3 policy sets and the related technical details.

Note: It is highly recommended to upgrade to ISE 2.4 patch 5 or later. See the release notes for details.

Issues, Fixes and Other Considerations

Apply the latest patch for your current release before upgrading, since the latest patches fix known upgrade failures. The release notes for your current ISE version list the upgrade defects that later patches resolve.

Always download and use the latest upgrade bundle, since it includes the latest known fixes.

Refer to Appendix C for some recently observed and fixed issues.

Pre-Upgrade Steps

Planning your upgrade requires knowing and cleaning up your existing ISE deployment configuration. The general pre-upgrade steps are:

- Save the ISE running-config from the command line of the node being upgraded.

- Check certificate validity. Delete expired certificates, because they cause failures when upgrading or restoring to a later release.

- Export the certificates and private keys; for the PAN, do this via the CLI (see the references below for details). In the ISE GUI, go to Administration->System->Certificates->System Certificates, select the certificate, and click Export (select Export Certificates and Private Keys). If you have installed new trusted certificates, export those certificates as well.

- Adjust the local log settings and/or purge logs as needed. This frees up local disk space, which helps in general and especially if you have had an issue or an outage before. You can also set up remote logging targets to forward logs to a remote syslog server.

- Obtain the configuration and operational backups from the Cisco ISE Admin Portal. Ensure that you have created repositories for storing the backup files. See the note below for additional information.

Note: ISE 2.0 and above support both CLI- and GUI-based backup and restore. Before ISE 2.0, ISE supported CLI-based backup and restore. The GUI option does not support backups from older releases; use the CLI for those. Follow the instructions in the Cisco documentation (ISE 1.3, ISE 1.4, ISE 2.0, ISE 2.1) and read through the guidelines to understand the options.

- If you use the Profiler service, record the profiler configuration of each PSN from the Admin Portal, along with the services enabled and the certificate configuration of each ISE node. In the ISE GUI, go to Administration->System->Deployment, choose the PSN, open the profiler configuration tab, and record the configuration.

- As a best practice, purge operational logs that are not needed, based on how long you need to keep operational logs (this can vary by customer and region).

- Purge endpoints based on your policy.

- Set the VM Network adapter to E1000 or VMXNET3.

- If upgrading to Release 2.0.1+ on an ESXi 5.x server (5.1 U2 minimum), you must upgrade the VMware hardware version to 9 before you can select Red Hat Enterprise Linux (RHEL 7) as the guest OS. RHEL 7 is supported from ISE 2.0.1 onwards; ISE 1.4 and ISE 2.0 install on RHEL 6.0.

- Allocate dedicated VM resources based on the hardware requirements and the node type. For the minimum VM requirements, see the installation guide.

- Disable automatic PAN failover (if configured) and disable the heartbeat between PANs. Do this when upgrading the production deployment, not during testing.

- If using load balancers, back up the load balancer configuration before changing it; see the instructions in the reference below. You may temporarily remove the PSNs from the load balancers during the upgrade window and add them back after the full upgrade.

References: See the ISE 2.4 pre-upgrade checklist for full details.

- Licensing: Starting with Cisco ISE release 2.4, the number of Device Administration licenses must equal the number of device administration nodes in the deployment. For Release 2.4 and above, it is recommended that you install appropriate VM licenses for the VM nodes in your deployment, based on the number of VM nodes and each VM node's resources, such as CPU and memory. Otherwise, you will receive warnings and notifications to procure and install the VM license keys.

- Outdated, redundant, or stale policy or rule cleanup (optional but recommended): As part of the upgrade effort, review and clean up the existing policies and rule sets in ISE. Some policies might be inactive, obsolete, or no longer relevant; the same is true for users and network devices. ISE 2.3 introduced Hit Counts to identify the policies being used. Consolidating and optimizing several policies into one makes the configuration more manageable, more scalable, and less prone to mistakes.

- Cleanup from previous upgrades: If you ran a CLI upgrade before and are not sure whether cleanup was done, run “application upgrade cleanup” before upgrading. This removes upgrade bundles left on ISE by previous upgrades.

Here is the related defect: https://bst.cloudapps.cisco.com/bugsearch/bug/CSCvm47317

- Staging environment for upgrade testing: Create separate ISE instances for staging the upgrade, and run tests in the lab to ensure a smooth upgrade and identify necessary configuration changes. All changes are made in the staging environment and do not affect the production environment.
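Expired certificates are called out above as a cause of upgrade/restore failures. If you inspect exported certificates with OpenSSL, a short script can list the expired ones before you delete them in the GUI. This Python sketch assumes the input is the `notAfter=` line printed by `openssl x509 -noout -enddate` for each certificate; it relies on the standard-library `ssl.cert_time_to_seconds` parser for that date format. The labels and dates are hypothetical, and the script reads dates only; it does not connect to ISE.

```python
import ssl
import time
from typing import Dict, List, Optional


def expired_certificates(end_dates: Dict[str, str], now: Optional[float] = None) -> List[str]:
    """Names of certificates whose notAfter time is already in the past.

    end_dates maps a certificate label to the line printed by
    `openssl x509 -noout -enddate -in <cert.pem>`,
    e.g. "notAfter=Jan  5 09:34:43 2018 GMT".
    """
    now = time.time() if now is None else now
    expired = []
    for name, line in end_dates.items():
        stamp = line.split("=", 1)[-1].strip()       # drop the "notAfter=" prefix
        if ssl.cert_time_to_seconds(stamp) <= now:  # parses the OpenSSL GMT date format
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    # Hypothetical labels and dates for certificates exported from ISE.
    exported = {
        "admin-cert": "notAfter=Jan  5 09:34:43 2018 GMT",
        "eap-cert": "notAfter=Jun 30 12:00:00 2030 GMT",
    }
    print(expired_certificates(exported))
```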

Tip: It is highly recommended to test the upgrade using the URT and to test the MnT upgrade before upgrading production. The URT helps find issues when upgrading from ISE 2.0+ to ISE 2.4, and you can also use it for upgrades to ISE 2.2. For upgrades from ISE 1.x releases, which need a multi-hop upgrade, it is important to test the PAN and MnT upgrades before the production upgrade.

Log retention and sizing MnT hard disks

An upgrade does not require changes to MnT disk capacity. However, if you are consistently filling up the logs and need more hardware capacity, you can plan the MnT hard disk size around your customer's log retention needs. Note that log retention capacity in ISE 2.2 increased many-fold compared with previous releases. You can also set up collection filters in ISE to filter out unnecessary logs from devices that overwhelm your ISE MnT (in the ISE UI, go to Administration->System->Logging->Collection Filters).

See the ISE storage requirements on the ISE performance and scalability community page. The table there lists log retention based on the number of endpoints for RADIUS and the number of network devices for TACACS+. Calculate log retention for TACACS+ and RADIUS separately.
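To a first approximation, retention is the log capacity divided by the daily log volume, worked out per stream. A back-of-the-envelope Python sketch, assuming a steady event rate and a fixed average record size; every number in the example is a placeholder rather than an ISE sizing figure, and giving RADIUS and TACACS+ separate capacity shares is also an assumption.

```python
def retention_days(log_capacity_gb: float, events_per_day: float, bytes_per_event: float) -> float:
    """Days of logs that fit in log_capacity_gb at a steady daily event rate."""
    daily_gb = events_per_day * bytes_per_event / 1e9  # decimal GB written per day
    return log_capacity_gb / daily_gb


if __name__ == "__main__":
    # All figures are hypothetical placeholders; take real event rates, record
    # sizes and usable log capacity from the ISE storage requirements page.
    radius = retention_days(log_capacity_gb=300, events_per_day=20_000 * 10, bytes_per_event=4_000)
    tacacs = retention_days(log_capacity_gb=100, events_per_day=500 * 200, bytes_per_event=2_000)
    print(f"RADIUS: ~{radius:.0f} days, TACACS+: ~{tacacs:.0f} days")
```

Running it once for RADIUS (driven by endpoints) and once for TACACS+ (driven by network devices and administrators) follows the advice above to size the two separately.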

Upgrade using Backup/Restore Procedure

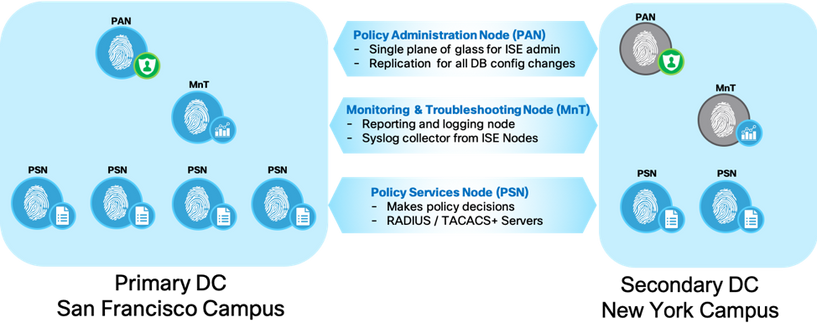

The upgrade process can be a single-step or a two-step upgrade, depending on the release you are upgrading from and the release you are upgrading to. In this section, we take an example of a two-step upgrade and provide the steps for completing it successfully. Consider a customer with two data centers, in San Francisco and New York, connected by a high-speed link. Say the customer runs ISE 1.3 patch 6 and wants to upgrade to ISE 2.4 (latest patch).

This requires a two-step upgrade, from ISE 1.3 => ISE 2.1 => ISE 2.4.

Let us walk through the phases of the upgrade for a sample fully distributed ISE deployment, with the primary Policy Administration Node (PAN) and Monitoring and Troubleshooting (MnT) node in the primary DC, and the secondary PAN and MnT in the secondary DC. The primary DC in San Francisco has 4 PSNs, and the secondary DC in New York has 2 PSNs.

Your deployment may have many more PSNs, located in data centers or in branch/remote locations; this example uses only a handful of PSNs.

We separate the upgrade into three phases. Each phase consists of a few steps, with a handful of tasks under each step. Here is an overview of the phases and their steps; afterwards we dive into each phase and the tasks needed to complete the upgrade.

Phase 1: Upgrade PAN and MnT Nodes at Secondary Datacenter (New York Campus) to ISE 2.4 release.

- De-register Secondary PAN Node at New York Campus

- Re-image the deregistered Secondary PAN Node (Node 1) to ISE 2.1 release and restore ISE 1.3 configuration backup.

- De-register Secondary MnT Node at New York Campus

- Re-Image the deregistered Secondary MnT node (Node 2) to ISE 2.1 release and restore ISE 1.3 operational backup

- Re-image Node 1 to ISE 2.4 release.

- Re-image Node 2 to ISE 2.4 release and join it to Node 1 as Primary MnT

Phase 2: Join PSN nodes at New York Campus (Secondary DC) to ISE 2.4 release.

- De-register PSNs at the New York Campus

- Re-image the PSNs at New York Campus to ISE 2.4 Patch 5 and join them to the new ISE 2.4 deployment

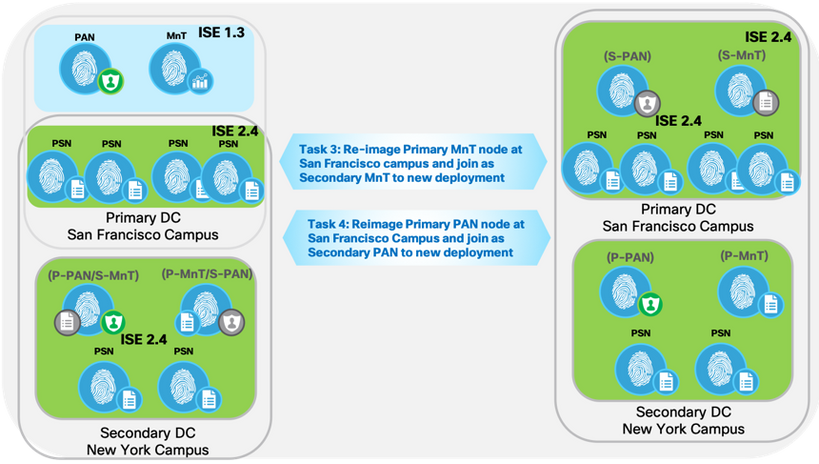

Phase 3: Upgrade PSN, PAN & MnT Nodes at San Francisco Campus (Primary DC) to ISE 2.4 release.

- De-register PSNs at the San Francisco Campus

- Re-image PSN in San Francisco Campus to ISE 2.4 Patch 5 and add PSN to new deployment (ISE 2.4 Patch 5 cluster)

- Re-image Primary MnT node at San Francisco Campus and Join as Secondary MnT to new deployment

- Re-image Primary PAN node at San Francisco Campus and Join as Secondary PAN to new deployment

Next, let us walk through the multi-step upgrade from ISE 1.3 => ISE 2.1 => ISE 2.4 in more detail, covering the phases and steps outlined above.

Phase 1: Upgrade PAN and MnT Nodes at New York Campus (Secondary DC) to ISE 2.4 release

Restore the ISE 1.3 backup on ISE 2.1 Patch 6 or the latest patch.

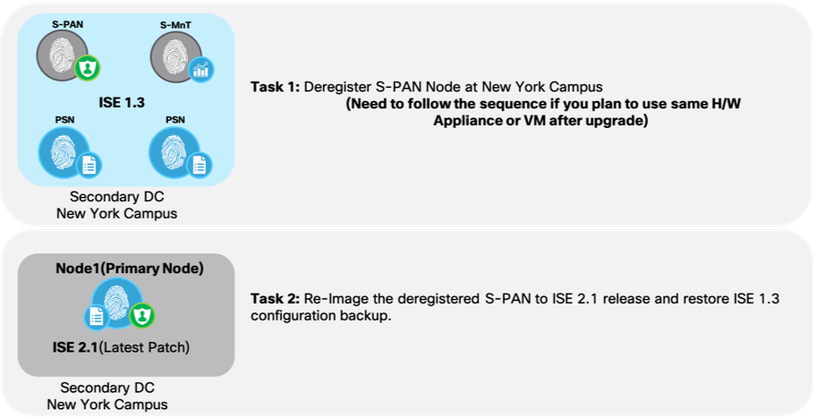

Task 1: De-register the Secondary PAN node at New York Campus. (Perform these tasks in sequence if you plan to reuse the same hardware appliance after the upgrade.)

- Choose Administration -> System -> Deployment

- Check the check box next to the secondary node that you want to remove, click Deregister, and then click OK.

Task 2: Re-image the deregistered Secondary PAN Node (Node 1) to ISE 2.1 release and restore ISE 1.3 configuration backup.

Figure 5: Cisco ISE S-PAN re-image

- Fresh install ISE 2.1 on the deregistered Secondary PAN node (appliance or VM) as a standalone node. Let us call it Node 1.

- Apply the latest ISE 2.1 patch to Node 1.

- Promote Node 1 as the Primary node for the ISE 2.1 deployment.

- Restore the ISE 1.3 configuration backup onto Node 1.

- Import the ise-https-admin CA certificates from the Secondary PAN unless you are using wildcard certificates.

- Take a new ISE 2.1 configuration backup from Node 1.

Task 3: De-register Secondary MnT Node at New York Campus

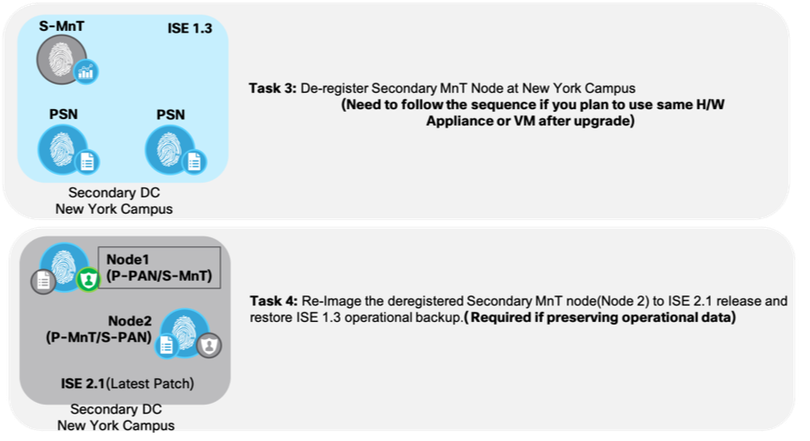

Figure 6: Cisco ISE S-MNT upgrade

Task 4: Re-image the deregistered Secondary MnT node (Node 2) to ISE 2.1

This task is needed only if you are preserving operational data. Skip it if you do not need to preserve the operational backup. If you continue, remember that the ISE installation can be done up front or in parallel to save time.

- Fresh install ISE 2.1 on the deregistered S-MnT node (appliance or VM) as a standalone node (Node 2).

- Apply the latest ISE 2.1 patch to Node 2.

- Join Node 2 as the Primary MnT/Secondary PAN node for the ISE 2.1 deployment.

- Restore the ISE 1.3 operational backup onto Node 2.

- Import the ise-https-admin CA certificates from the ISE 1.3 backup repository.

- Take a new ISE 2.1 operational backup.

Upgrade to ISE 2.4 with latest patch and create a new ISE 2.4 deployment

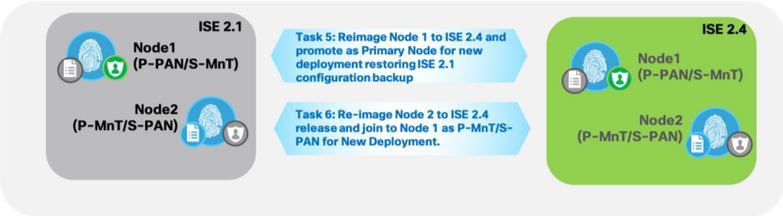

Figure 7: Cisco ISE 2.1 to ISE 2.4

Task 5: Re-image Node 1 to ISE 2.4 release

- Fresh install ISE 2.4 on Node 1 as a standalone node.

- Apply the latest ISE 2.4 patch to Node 1.

- Promote the node as the Primary node for the new ISE 2.4 deployment.

- Restore the ISE 2.1 configuration backup onto Node 1.

- Import the ise-https-admin CA certificates from the backup repository unless you are using wildcard certificates.

At this point you have a Primary Node in ISE 2.4. Now you need a Primary MnT in the deployment.

Task 6: Re-image Node 2 to ISE 2.4 release and Join to Node 1 as Primary MnT node.

- Fresh install ISE 2.4 on Node 2 as a standalone node.

- Apply the latest ISE 2.4 patch to Node 2.

- Import the ise-https-admin CA certificates from the ISE 1.3 backup repository unless you are using wildcard certificates.

- Join the node as the Primary MnT/Secondary PAN node for the new ISE 2.4 deployment.

To keep the operational data in sync, restore the ISE 2.1 operational backup onto Node 2. Perform this step only if you need to preserve the operational data.

Alternatively, you can speed up the upgrade (Tasks 5 and 6). Install ISE 2.4 on a spare appliance or VM and apply the latest patch. Then restore the configuration and operational backups from ISE 2.1 Patch 6 or later (Node 2), and join to the ISE 2.4 deployment as Primary PAN and MnT. This requires an appliance or VM that is available, bootstrapped, and ready.

Optional Step: How do you carry over the differential logs to the new deployment?

Optionally, you can carry over the differential logs to the new deployment. These are the logs generated from the time you took the ISE 1.3 backup of the old deployment until your new deployment is ready. To do this, forward the logs from the Primary MnT in the old deployment to the Primary MnT in the new deployment.

In the ISE UI of the Primary PAN in the old deployment (ISE 1.3), go to Administration -> System -> Logging -> Remote Logging Targets. Create a remote logging target that points to the Primary MnT in the new deployment (Syslog: UDP/20514, Secure Syslog: TCP/6514, Facility code: Local6). Then go to Logging Categories, another item under the Logging menu, and assign the remote logging target to the categories you want to forward. This last step is key: it is what forwards newer logs to the new deployment.

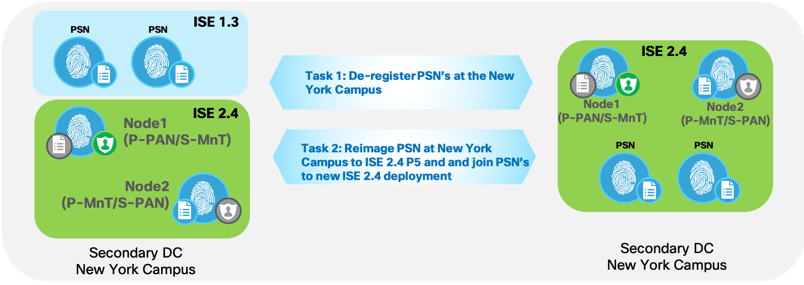

Phase 2: Join PSN nodes at New York Campus (Secondary DC) to ISE 2.4 release

Task 1: De-register PSNs at the New York Campus.

Note: If a load balancer is in use, you can deactivate and reactivate the nodes on the load balancer to minimize failover times and reduce any impact on the network.

Figure 8: Cisco ISE PSN re-image at Secondary DC

Task 2: Re-image the PSNs at New York Campus to ISE 2.4 Patch 5 and join them to the ISE 2.4 deployment

- Fresh install ISE 2.4 on the deregistered PSNs as standalone nodes.

- Apply the latest ISE 2.4 patch to each PSN.

- Import the ise-https-admin CA certificates of the PSNs.

- Join (register) each ISE 2.4 PSN with the Primary PAN of the new ISE 2.4 deployment. Once a PSN is registered, data is synchronized to it and its application server restarts.

Note: You can upgrade several PSNs in parallel, but if you upgrade all the PSNs concurrently, your network will experience downtime.
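The note above suggests a simple batching rule: never take more PSNs at a site out of service than you can afford. The Python sketch below is a hypothetical planning aid, not an ISE feature. `plan_psn_batches`, the node names and the `min_in_service` threshold are assumptions. It also assumes that a PSN already re-imaged and joined to the new deployment is back in service, for example after being re-activated on the load balancer.

```python
from typing import List, Optional


def plan_psn_batches(psns: List[str], min_in_service: int = 1,
                     max_parallel: Optional[int] = None) -> List[List[str]]:
    """Split one site's PSNs into re-image batches.

    While any batch is being re-imaged, at least `min_in_service` PSNs at the
    site remain available (PSNs from earlier batches count as back in service).
    `max_parallel` optionally caps the batch size, e.g. to match staffing.
    """
    if min_in_service < 1:
        raise ValueError("keep at least one PSN in service to avoid downtime")
    if len(psns) <= min_in_service:
        raise ValueError("not enough PSNs at this site to upgrade without downtime")
    if max_parallel is not None and max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    batch_size = len(psns) - min_in_service  # largest batch that leaves enough PSNs up
    if max_parallel is not None:
        batch_size = min(batch_size, max_parallel)
    return [psns[i:i + batch_size] for i in range(0, len(psns), batch_size)]


if __name__ == "__main__":
    # Hypothetical names for the four San Francisco PSNs in the example.
    sf_psns = ["sf-psn1", "sf-psn2", "sf-psn3", "sf-psn4"]
    for number, batch in enumerate(plan_psn_batches(sf_psns, min_in_service=2), start=1):
        print(f"batch {number}: {', '.join(batch)}")
```

With `min_in_service=2`, the four San Francisco PSNs are re-imaged in two batches of two, so two PSNs are always available at that site.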

Warning! It is important to test the upgrade before doing a production upgrade.

Post-Upgrade Steps

Here are a few post-upgrade tasks. See the complete list for ISE 2.4 here.

- Connect to the Active Directory identity store used for authentication.

- Import the EAP certificate on all ISE nodes.

- Import the licenses.

Finally, test your ISE 2.4 deployment for all use cases with a few real users before proceeding further.

Phase 3: Upgrade PSN, PAN & MnT Nodes at San Francisco Campus (Primary DC) to ISE 2.4 release.

Figure 9: Cisco ISE PSN re-image at Primary DC

Task 1: De-register the PSNs at San Francisco Campus.

Note: If traffic was shifted using a load balancer, make sure to shift the traffic to the newly upgraded nodes before starting this phase.

Task 2: Re-image the PSNs at San Francisco Campus to ISE 2.4 Patch 5 and join them to the ISE 2.4 deployment

- Fresh install ISE 2.4 on the four deregistered PSNs as standalone nodes.

- Apply the latest ISE 2.4 patch to each PSN.

- Import the ise-https-admin CA certificates.

- Join (register) each ISE 2.4 PSN with the Primary PAN of the new ISE 2.4 deployment. Data from the Primary PAN is then replicated to all PSNs.

Task 3: Reimage Primary MnT node at San Francisco Campus and Join as Secondary MnT to new deployment.

- Fresh install ISE 2.4 on the Primary MnT node as a standalone node.

- Apply the latest ISE 2.4 patch.

- Import the ise-https-admin CA certificates from the ISE 1.3 backup repository unless you are using wildcard certificates.

- Restore the ISE 2.1 operational backup.

- Promote/join the node as the Secondary MnT node for the new ISE 2.4 deployment.

Task 4: Reimage Primary PAN node at San Francisco Campus and Join as Secondary PAN to new deployment

- Fresh install ISE 2.4 as a standalone node on the Primary PAN node at the San Francisco DC. This node becomes the Secondary PAN in the new deployment.

- Apply ISE 2.4 Patch 5 or the latest patch.

- Import the ise-https-admin CA certificates from the backup repository unless you are using wildcard certificates.

- Register the node as the Secondary PAN node for the new ISE 2.4 deployment.

- After the two tasks above, the ISE servers that held these roles give up their respective roles. This allows you to add dedicated, separate nodes for the primary and secondary PAN and MnT roles.

Note: You do not need to move your Primary MnT to the new deployment to complete the upgrade. In an ISE deployment, the Primary and Secondary MnT nodes are an active-active pair, and logs are sent to both nodes. If you do not need to preserve the logs, this is not a problem. If you do want to preserve them, you can forward the differential logs to the new deployment, as described in the optional step on differential logs at the end of Phase 1. These are the delta logs from the time you backed up the MnT until now. Once the upgrade is complete, you can archive the logs and shut down or repurpose the old Primary MnT.

Note: At the end of the upgrade, the ISE PAN and MnT in the primary DC (San Francisco campus) become the Secondary PAN and MnT in the new deployment. Reverse these roles afterward if needed.

Post-Upgrade Steps

Once you complete the upgrade of the entire deployment, run through all the post-upgrade steps listed after Phase 2 and test the deployment thoroughly. Finally, take a backup of the configuration database and the operational database as needed.

Cleanup from previous upgrades: If you ran an upgrade using an upgrade bundle during any of your steps, run “application upgrade cleanup” from the CLI. This removes upgrade bundles left on ISE from previous upgrades.

Single-Step Upgrade (ISE 2.1 to 2.4) process

A single-step upgrade has the same number of phases as described above, but Step 1 and Step 2 of Phase 1 are merged. Here is a simple list of tasks for an upgrade from ISE 2.1 to ISE 2.4, using the same example network as the two-step upgrade above.

Phase 1: Upgrade PAN and MnT Nodes at Secondary Datacenter (New York Campus) to ISE 2.4 release.

- De-register Secondary PAN Node at New York Campus

- Re-image the deregistered Secondary PAN node (Node 1) to ISE 2.4, restore the ISE 2.1 configuration backup, and promote the node as the Primary node for the new deployment

- De-register Secondary MnT Node at New York Campus

- Re-image the deregistered Secondary MnT node (Node 2) to ISE 2.4, restore the ISE 2.1 operational backup, and join the node as Primary MnT for the new deployment.

Phase 2: Join PSN nodes at Secondary DC (New York Campus) to ISE 2.4 release.

- De-register PSNs at the Secondary DC

- Re-image PSNs at Secondary DC to ISE 2.4 Patch 5 and join them to the new ISE 2.4 deployment

Phase 3: Upgrade PSN, PAN & MnT Nodes at Primary DC (San Francisco Campus) to ISE 2.4 release.

- De-register PSNs at the Primary DC

- Re-image PSNs in Primary DC to ISE 2.4 Patch 5 and join them to the new deployment (ISE 2.4 Patch 5 cluster)

- Re-image Primary MnT node at Primary DC and join as Secondary MnT to the new deployment

- Re-image Primary PAN node at Primary DC and join as Secondary PAN to the new deployment

Appendix A - Deployment Fundamentals

Deployment Fundamentals

This is a refresher for administrators, deployment specialists and pre-sales personnel. It covers the basics of deployment design and how to scale the number of ISE servers. Scaling depends on endpoints, network devices, locations, sites and other factors characteristic of your business.

This section is not required for the upgrade. It serves as a good refresher if you are new to ISE and need to understand the fundamentals of deployment design and the deployment options. Here is a quick summary of ISE deployment types. ISE supports a standalone deployment for smaller customers and a distributed deployment for medium to large customers. All deployment types support services including network access, profiling, BYOD, guest, posture compliance, TrustSec, etc.

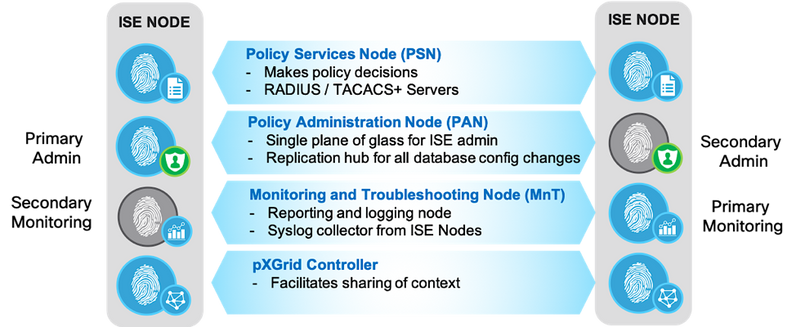

A Cisco ISE node is a dedicated appliance or virtual machine that supports different functional roles, or personas, such as Administration, Policy Service, Monitoring and pxGrid. Details about the functional roles are described here. These roles can be combined on a node or separated onto dedicated nodes. This optimizes the distribution of endpoint connections, for example by geography or by the type of services used. Each persona can be part of a standalone or a distributed deployment.

Simple Two Node ISE Deployment

In a simple two-node ISE deployment with redundancy, the nodes form an active/standby pair for the Administration persona and an active/active pair for the Monitoring persona. As in a standalone deployment, the Policy Service persona also runs on the same nodes. The Policy Service persona is the workhorse of ISE. It provides network access, posture, BYOD, guest access, client provisioning and profiling services.

Figure 11: Cisco ISE Personas

In an ISE deployment, each persona can run on a dedicated node, with separate Administration, Monitoring and Policy Service nodes. Personas can also be combined, as shown in the figure. Policy Service Nodes provide AAA services, including RADIUS and TACACS+ services. The Policy Service persona evaluates policies, gathers profiling information and makes all the decisions in an ISE deployment. More than one node can assume this persona.

Basic Distributed Deployment

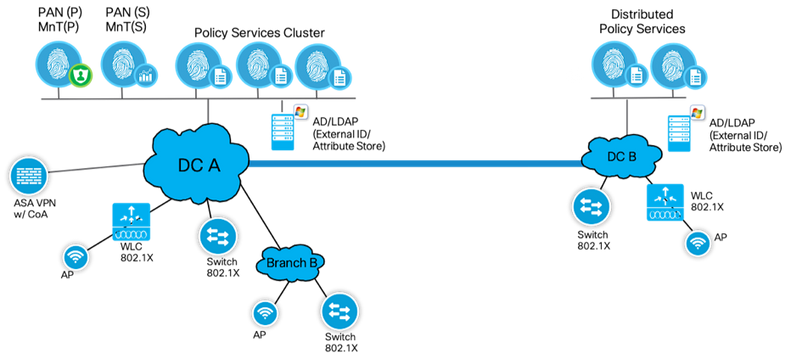

In ISE, the simple two-node deployment above can be expanded into a basic distributed deployment for a network that spans different locations. In a basic distributed deployment, you can add up to 5 dedicated PSNs to the two-node setup shown in the figure. In this topology, the two PAN/MnT nodes act as primary and secondary in a redundant setup in the same data center. This setup supports a maximum of 20,000 endpoints across PSNs in different geographies.

Figure 12: Cisco ISE Basic Distributed Deployment

Note: In a distributed deployment, the latency between ISE nodes must be within a limit for node communication and replication to succeed. The limit is 200 ms or less for ISE 2.0 and earlier, and 300 ms for ISE 2.1 and later. For TACACS+, the latency requirement may be relaxed. For bandwidth requirements, use the calculator here.
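Before joining nodes across data centers, you may want to check measured inter-node latency against the limits in the note. This Python sketch encodes only those two thresholds. The measurement values and node names are hypothetical. The sketch does not measure latency itself, and it does not model the relaxed TACACS+ requirement.

```python
from typing import Dict, List, Tuple


def max_latency_ms(version: str) -> int:
    """Limit from the note: 200 ms up to ISE 2.0, 300 ms for ISE 2.1 and later."""
    major, minor = (int(part) for part in version.split(".")[:2])  # "2.0.1" -> (2, 0)
    return 300 if (major, minor) >= (2, 1) else 200


def latency_violations(version: str,
                       measured_ms: Dict[Tuple[str, str], float]) -> List[Tuple[str, str]]:
    """Return the node pairs whose measured latency exceeds the limit for `version`."""
    limit = max_latency_ms(version)
    return [pair for pair, ms in measured_ms.items() if ms > limit]


if __name__ == "__main__":
    samples = {
        ("sf-pan", "ny-pan"): 70.0,       # hypothetical measurements
        ("sf-pan", "branch-psn"): 320.0,
    }
    print(latency_violations("2.4", samples))
```

Running it prints `[('sf-pan', 'branch-psn')]`, because 320 ms exceeds the 300 ms limit for ISE 2.4.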

Fully Distributed Deployment

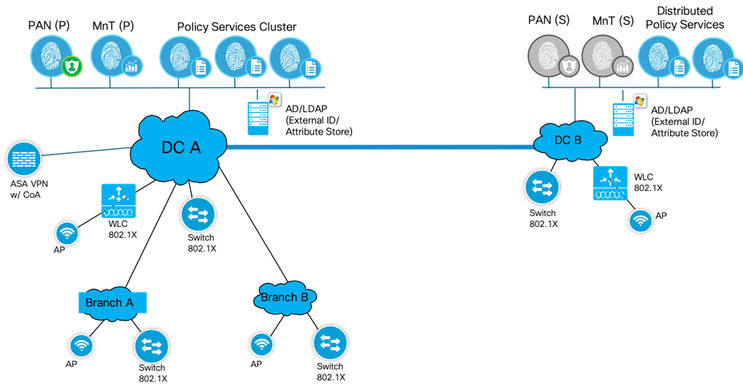

In a fully distributed deployment, the Administration and Monitoring personas are separated onto different nodes, with 2 Admin nodes and 2 MnT nodes. In a large network, the primary and secondary Admin and Monitoring nodes are dedicated nodes that can be in different data centers, as shown below.

Figure 13: Cisco ISE Fully Distributed Deployment

The fully distributed topology requires data centers to be connected with high-speed, low-latency links. With ISE 2.1 and later, the recommended maximum is 50 PSNs with dedicated PAN and MnT nodes. This supports up to 500,000 endpoints across PSNs (250,000 endpoints for ISE 2.0). Policy Service nodes in the same location can be part of a node group, or cluster. A node group helps with high availability if a PSN fails, and with replication, which is useful for services such as profiling. The ISE Performance and Scale community page compares the deployment limits of ISE. Use it as a reference when scaling your deployment.
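The deployment-wide limits quoted above can be checked against a planned design with a few lines of Python. This is a sketch under stated assumptions. `LIMITS` and `check_scale` are hypothetical names. The basic distributed figures (5 PSNs, 20,000 endpoints) are the guide's maximums, even though the Appendix D tables give lower figures for smaller appliances. The guide states no PSN limit for ISE 2.0 fully distributed deployments, so that case checks endpoints only.

```python
from typing import Dict, List, Optional, Tuple

# Limits quoted in this appendix. Key: (deployment type, running ISE 2.1 or later).
# Value: (max PSNs, max endpoints); None means the guide states no limit for that case.
LIMITS: Dict[Tuple[str, bool], Tuple[Optional[int], int]] = {
    ("basic", True): (5, 20_000),
    ("basic", False): (5, 20_000),    # assumption: no separate ISE 2.0 figure is given
    ("full", True): (50, 500_000),
    ("full", False): (None, 250_000),
}


def check_scale(deployment: str, version: str, psns: int, endpoints: int) -> List[str]:
    """Return human-readable problems; an empty list means the design fits."""
    major, minor = (int(part) for part in version.split(".")[:2])
    max_psns, max_endpoints = LIMITS[(deployment, (major, minor) >= (2, 1))]
    problems = []
    if max_psns is not None and psns > max_psns:
        problems.append(f"{psns} PSNs exceeds the limit of {max_psns}")
    if endpoints > max_endpoints:
        problems.append(f"{endpoints} endpoints exceeds the limit of {max_endpoints}")
    return problems


if __name__ == "__main__":
    # The six-PSN example deployment, with a hypothetical endpoint count.
    print(check_scale("full", "2.4", psns=6, endpoints=100_000))
```

For the six-PSN example deployment with a hypothetical 100,000 endpoints, the check returns an empty list.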

Deployment options

There are three ways to deploy multiple services, such as TACACS+, in ISE: a dedicated deployment, dedicated PSNs, and an integrated deployment. Each has its own pros, cons and use cases, as mentioned here. Choose the deployment option that fits your needs.

If you use load balancers such as F5, refer to the ISE deployment best practices with load balancers for additional information.

Once you have chosen the release to upgrade to, test the upgrade in your lab setup. Planning and running your upgrade involves several steps. This document guides you through the steps needed for a medium or large-scale deployment.

Appendix B – Approach, Benefits & Drawbacks with each upgrade options.

Cisco Identity Services Engine offers multiple upgrade options, which can be aligned with your organization's capabilities and technical expertise. No single upgrade path fits every deployment. This section is meant to help you decide which option is best for your ISE upgrade. The three upgrade options are as follows:

Figure 14: Cisco ISE upgrade options

Figure 15: Cisco ISE upgrade consideration flow chart

Backup/Restore or Re-imaging:

While re-imaging of the ISE node is typically seen as a troubleshooting or initial deployment step, it can also be used to upgrade a deployment while providing for restoration of the policy onto the new deployment once the new version is reached.

- Approach: Assume resources are limited and a parallel ISE node cannot be spun up for the new deployment. The Secondary PAN and MnT are removed from the production deployment and upgraded first. Nodes are moved into the new deployment, and configuration and operational backups are restored on the respective nodes. This creates a parallel deployment in which only the configuration from the previous deployment is restored. Policy sets, custom profiles, network access devices and endpoints are restored into the new deployment without manual intervention.

- Benefits:

- Less Error Prone: When re-imaging nodes to create a new ISE deployment, customers typically find the process as straightforward as the original deployment of the nodes. Each node is cleanly deployed and the configuration is restored from backup.

- Maintains Operational Abilities: You choose which nodes to reuse for the new deployment, so under-utilized nodes can be upgraded first. This gives more control over when, and for how long, a given area of the network is impacted. This approach also lends itself to upgrading several ISE nodes in parallel, as opposed to the serial approach of a GUI-based upgrade.

- Parallel upgrade of nodes can reduce downtime: Because PSNs can be deployed in parallel, this approach can reduce downtime, especially in a virtual environment. Only one node can be joined to the ISE deployment at a time, which limits parallel deployment of new PSNs. Even so, it can reduce the upgrade time compared with upgrading nodes individually.

- Staging abilities of the upgrade ISE deployment: A deployment may be able to withstand fewer operational nodes, or may have additional VMware resources to stand up a parallel deployment. In that case, nodes can be staged outside of maintenance windows. This reduces the time needed to complete the upgrade during the production-impacting event. The event then requires only a switchover to the new nodes, or reconfiguration of the RADIUS servers on network access devices.

- Drawbacks

- Require additional personnel: Depending on the organizational structure, many customers find that this type of upgrade requires multiple business units. These can include network administration, security administration, data center, and virtualization teams. Where teams work closely together, this may be less impactful to the business. However, the number of staff required can increase the operational cost of the upgrade.

- Require additional resources: Parallel deployments require resources that can be reserved for the ISE deployment before being released. When existing hardware is reused, additional load must be shifted to the nodes that remain online. Evaluate current load and latency limits before the deployment begins to ensure the rest of the deployment can handle more users per node.

- Result in downtime: If the deployment has limited ability to expand, you have two choices. You can account for downtime and fallback methods on network access devices. Or you can touch each network access device and change which deployment it sends RADIUS requests to, so the network rebalances between the two ISE deployments. In addition, several steps can lead to multiple reloads and take about as long as a net-new deployment. These include rejoining the node to the new deployment, restoring certificates, rejoining Active Directory and waiting for policy synchronization.

- Limited abilities to rollback: Re-imaging erases all information on the node, so rolling back means re-imaging it a second time.

- Recommendation: Re-imaging is the better approach when additional resources are available and you have flexibility. That means being able to create an isolated environment, or to use load balancers to switch the virtual IP address for RADIUS requests. Additional staff may be required, but for some deployments the ability to deploy in parallel and/or stage the deployment is worth it. Many customers prefer to stage the new deployment well before the maintenance window and use a load balancer to point to it.

ISE GUI Upgrade:

The ISE GUI upgrade is typically the next recommended way to upgrade an ISE deployment. It is a single-click upgrade. A few customizable options let you accurately plan the sequence of events needed to complete the upgrade.

- Approach: A GUI upgrade is run from the ISE Administration > Upgrade menu and requires a repository from which to pull the image. The Secondary PAN is automatically moved into the upgraded deployment and upgraded first, followed by the Primary MnT. This creates the future-state ISE deployment. If either of these upgrades fails, the node is rolled back to the previous version and rejoined to the ISE deployment. PSNs are then moved to the new deployment and upgraded one by one. You can choose to continue upgrades on failure. Or you can stop upgrades on failure and remain in a dual-version deployment, so troubleshooting can occur before the upgrade continues. The Secondary MnT and Primary PAN are upgraded and joined to the new ISE deployment only after all PSNs have completed their upgrades.

- Benefits:

- Single Click: After doing minimal customizations and pointing to a repository where the ISE upgrade image will reside, the upgrade is automated with minimal intervention.

- Automatic: Minimal intervention is needed; once upgraded, nodes automatically rejoin the deployment.

- Flexible: The administrator can choose the order of PSN upgrades to ensure continuity whenever possible, especially when redundancy is available between data centers.

- Operationally Inexpensive: A single administrator can execute the upgrade without need for additional personnel to touch each device or interact with third party hypervisors or network access devices.

- Drawbacks

- Continuation in Failure Scenarios: You can choose whether the upgrade script continues or stops when a PSN fails to upgrade. The Cisco Upgrade Readiness Tool should flag any incompatibilities or misconfigurations. However, if the proceed option is checked and due diligence was not done before the upgrade, additional errors may be encountered.

- Rollback Limitations: If an upgrade fails on a PAN or MnT node, the node is automatically rolled back. If a PSN fails to upgrade, it remains on its current version of ISE. The failed node can then be troubleshot, although redundancy is impaired. ISE is still operational during this time, so rollback abilities are limited without re-imaging.

- Artifact Maintenance: In past software versions, settings such as the Java heap size were carried over between versions. This impacted operation and required TAC intervention to resolve. These issues are actively monitored and cleaned up in newer upgrade scripts, and can be detected with the Upgrade Readiness Tool.

- Time to Completion: Because of the single-click nature of the upgrade, all nodes must finish before the ISE deployment upgrade is complete. Each PSN typically takes 90 to 120 minutes to upgrade. This makes the procedure longer, with less control, for larger ISE deployments.

- Recommendation: The GUI upgrade is also worth considering if you want a straightforward upgrade with a limited number of clicks and can accommodate the upgrade time. It requires limited ISE knowledge. A single administrator can start the upgrade and rely on NOC or SOC engineers to monitor and report on its status, or to open a TAC case.
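The GUI upgrade sequence and its serial PSN phase can be modeled to set expectations for a maintenance window. The Python sketch below is a simplified model, not how the ISE upgrade orchestrator is implemented. `gui_upgrade_order`, `simulate` and `serial_psn_hours` are hypothetical names. The failure handling is an assumption: a PAN or MnT failure, or any PSN failure with stop-on-failure selected, ends the run. The time estimate covers only the PSNs and uses the 90 to 120 minutes per PSN quoted above.

```python
from typing import List, Set, Tuple

# Typical per-PSN GUI upgrade time quoted in this guide, in minutes.
PER_PSN_MINUTES = (90, 120)


def gui_upgrade_order(psn_order: List[str]) -> List[str]:
    """Sequence described above: S-PAN, P-MnT, PSNs in the chosen order, S-MnT, P-PAN."""
    return ["Secondary PAN", "Primary MnT", *psn_order, "Secondary MnT", "Primary PAN"]


def simulate(psn_order: List[str], failing: Set[str],
             continue_on_failure: bool) -> Tuple[List[str], List[str]]:
    """Simplified model of the continue/stop-on-failure option.

    Assumption: a PAN or MnT failure, or any PSN failure when continuing is
    not selected, ends the run and leaves a dual-version deployment.
    """
    upgraded: List[str] = []
    failed: List[str] = []
    for node in gui_upgrade_order(psn_order):
        if node in failing:
            failed.append(node)
            if node in psn_order and continue_on_failure:
                continue  # skip the failed PSN and carry on with the next node
            break
        upgraded.append(node)
    return upgraded, failed


def serial_psn_hours(psn_count: int) -> Tuple[float, float]:
    """Best- and worst-case hours for the PSN phase only (PSNs go one at a time)."""
    low, high = PER_PSN_MINUTES
    return psn_count * low / 60, psn_count * high / 60


if __name__ == "__main__":
    print(gui_upgrade_order(["ny-psn1", "ny-psn2", "sf-psn1"]))  # hypothetical names
    print(serial_psn_hours(6))  # the six-PSN example deployment
```

For the six PSNs in the example deployment, `serial_psn_hours(6)` returns `(9.0, 12.0)`, which is 9 to 12 hours for the PSN phase alone.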

CLI Based Upgrades:

ISE also offers the ability to upgrade strictly via the CLI. Because of the level of effort required, this is recommended for troubleshooting purposes only.

- Approach: The administrator must touch each ISE node individually, download the upgrade image to the local node, run the upgrade, and monitor each node throughout the upgrade process. The upgrade sequence is similar to that of the GUI upgrade. However, this approach is operationally intensive, both for monitoring and for the actions required.

- Benefits:

- Granular ability to monitor the upgrade: The upgrade is run from the CLI with the image stored locally, so you can monitor the node via the CLI instead of only via the GUI. The CLI typically presents additional logging messages while the upgrade runs.

- Operational Continuity: As with re-imaging, you have more control over which nodes are upgraded, and nodes can be upgraded in parallel. You must evaluate load and latency requirements to ensure the nodes not being upgraded can handle the extra load as endpoints are rebalanced across the deployment.

- Easier Rollback Abilities: Rolling back at the CLI is much easier than re-imaging, which requires an additional re-image, or than a GUI upgrade. This is because the scripts can be instructed to undo previous changes.

- Easier Preparation: Because the image resides locally on the node, any errors from copying it between the PAN and the PSNs are eliminated.

- Less staff-intensive compared to re-imaging: The image is copied locally to each node and only CLI access per node is needed, rather than a redeployment. As a result, fewer staff are generally needed than for re-imaging.

Appendix C – ISE defects Open and Fixed

If upgrading, download and use the latest upgrade bundle, since it contains the latest known fixes. Use the Cisco Bug Search Tool with the following query to find open and fixed ISE upgrade defects:

Here are a few common issues documented in the ISE 2.4 upgrade documentation.

If you are upgrading to ISE 2.2, be aware of the following defects that affect the upgrade: CSCvc38488, CSCvc34766. Cisco recommends that you apply the latest patch to your current Cisco ISE version before upgrading.

Here are a few key high-severity issues.

Upgrade Issue 1

ISE 2.4 - EST Service not running after upgrade from 2.3

Solution: This is fixed in ISE 2.4 Patch 2. The workaround is to regenerate the ISE Root CA chain.

Upgrade Issue 2:

Solution: This is fixed in ISE 2.4 Patch 4 and in the latest ISE 2.2 patch.

Workaround: Disable the CDP conditions in Cisco-Device to prevent the PC from going down that profiling path.

Re-profile the device so that it gets profiled correctly.

Upgrade Issue 3:

Re-imaging a 35x5 appliance with ISE 2.4 fails; ISE 2.3 works fine.

"HTML based KVM" had known issues with CIMC 3.0.3(S2) that are resolved in 4.0(1c).

Solution: Workaround: Use HTML based KVM.

Upgrade Issue 4:

Solution: This is fixed in ISE 2.4 Patch 2. All other releases require hotfixes.

Upgrade Issue 5:

ISE 2.4 keeps the old DNAC client certificate, which causes pxGrid integration between the new DNAC and ISE to fail.

Solution: This is fixed in ISE 2.4 Patch 2. The workaround is to delete the old DNAC client certificate.

Upgrade Issue 6:

On December 11, 2017, an upgrade failure issue was seen after the profiler feed update. It affects upgrades as well as backup/restore from ISE 1.3, ISE 1.4, ISE 2.0 or ISE 2.0.1 to ISE 2.1, ISE 2.2 or ISE 2.3. Following the procedure in tech zone to fix it is highly recommended.

Solution:

Note: The above upgrade issue does impact 34xx and 35xx appliances if you updated the profiling feed after December 2017. Resource allocation is important during installs, especially in VM environments. Make sure all the nodes are above 16GB before installing. Run the Upgrade Readiness Tool (URT), which can be downloaded from Cisco.com, and always download the latest URT to test the upgrade. The URT is supported from ISE 2.3.

Upgrade Issue 7:

Upgrade to ISE 2.3: In a multi-node deployment with Primary and Secondary PANs, monitoring dashboards and reports might fail after upgrade because of a caveat in data replication. See CSCvd79546 for details.

Solution:

Workaround: Perform a manual synchronization from the Primary PAN to the Secondary PAN before initiating upgrade.

Appendix D - T+/ RADIUS Sessions per ISE Deployment

Dedicated TACACS+ only deployment:

Max Concurrent TACACS+ Sessions/TPS by Deployment Model and Platform

| Deployment Model | Platform | Max # Dedicated PSNs (# recommended) | Max RADIUS Endpoints per Deployment | Max T+ Sessions/TPS (TPS for recommended PSNs) |
|---|---|---|---|---|
| Standalone (all personas on same node) | 3415 | 0 | N/A | 500 |
| | 3495 | 0 | N/A | 1,000 |
| | 3515 | 0 | N/A | 1,000 |
| | 3595 | 0 | N/A | 1,500 |
| Basic Distributed: Admin + MnT on same node; Dedicated PSN (Minimum 4 nodes redundant) | 3415 as Admin+MnT | **5 (2 rec.) | N/A | 2,500 (1,000) |
| | 3495 as Admin+MnT | **5 (2 rec.) | N/A | 5,000 (2,000) |
| | 3515 as Admin+MnT | **5 (2 rec.) | N/A | *5,000 (2,000) |
| | 3595 as Admin+MnT | **5 (2 rec.) | N/A | *7,500 (3,000) |
| Fully Distributed: Dedicated Admin and MnT nodes (Minimum 6 nodes redundant) | 3495 as Admin and MnT | **40 (2 rec.) | N/A | 20,000 (2,000) |
| | 3595 as Admin and MnT | **50 (2 rec.) | N/A | *25,000 (3,000) |

* Under ISE 2.0.x, scaling for the small and large 35x5 appliances is the same as for the small and large 34x5 appliances.

** Device Admin service can be enabled on each PSN; a minimum of 2 is needed for redundancy, and 2 are often sufficient.

Red indicates TPS that will cause performance hits on MnT and is not recommended.

| Scaling per PSN | Platform | Max RADIUS Endpoints per PSN | Max T+ Sessions/TPS |
|---|---|---|---|
| Dedicated Policy nodes | SNS-3415 | N/A | 500 |
| | SNS-3495 | N/A | 1,000 |
| | SNS-3515 | N/A | *1,000 |
| | SNS-3595 | N/A | *1,500 |

Shared deployment (RADIUS + TACACS+):

Integrated PSNs: PSNs that share RADIUS and TACACS+ service and sharing same Admin + MnT node.

Dedicated PSNs: PSNs that are dedicated for RADIUS only or TACACS+ only service sharing same Admin + MnT.

Max Concurrent RADIUS Sessions / TACACS+ TPS by Deployment Model and Platform

| Deployment Model | Platform | Max # PSNs: Integrated / Dedicated PSNs (RADIUS + TACACS+) | Max RADIUS Endpoints per Deployment | Max TACACS+ TPS (Integrated / Dedicated T+ PSNs) |
|---|---|---|---|---|
| Standalone (all personas on same node) | 3415 | 0 | 5,000 | 50 |
| | 3495 | 0 | 10,000 | 50 |
| | 3515 | 0 | 7,500 | 50 |
| | 3595 | 0 | 20,000 | 50 |
| Basic Distributed: Admin + MnT on same node; Integrated / Dedicated PSNs (Minimum 4 nodes redundant) | 3415 as Admin+MnT | **5 / 3+2 | 5,000 | 100 / 500 |
| | 3495 as Admin+MnT | **5 / 3+2 | 10,000 | 100 / 1,000 |
| | 3515 as Admin+MnT | **5 / 3+2 | 7,500 | *100 / 1,000 |
| | 3595 as Admin+MnT | **5 / 3+2 | 20,000 | *100 / 1,500 |
| Fully Distributed: Dedicated Admin and MnT nodes; Integrated / Dedicated PSNs (Minimum 6 nodes redundant) | 3495 as Admin and MnT | **40 / 38+2 | 250,000 | 1,000 / 2,000 |
| | 3595 as Admin and MnT | **50 / 48+2 | 500,000 | *1,000 / 3,000 |

** Device Admin service is enabled either on the same PSNs that are also used for RADIUS, or on dedicated RADIUS and T+ PSNs.

* Under ISE 2.0.x, scaling for the Small and Large 35x5 appliances is the same as for the Small and Large 34x5 appliances.

| Scaling per PSN | Platform | Max RADIUS Endpoints per PSN | Max TACACS+ TPS |
|---|---|---|---|
| Dedicated Policy nodes | SNS-3415 | 5,000 | 500 |
| | SNS-3495 | 20,000 | 1,000 |
| | SNS-3515 | 7,500 | 1,000 |
| | SNS-3595 | 40,000 (ISE 2.1+) | 1,500 |
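A minimal sketch of using the per-PSN figures above to estimate how many dedicated PSNs a given endpoint count needs. `psns_needed` and its `spare` parameter are hypothetical: the spare count is a planning knob you choose, not a Cisco-defined rule, and the result should still be checked against the per-deployment limits in the previous table.

```python
import math

# Max RADIUS endpoints per dedicated PSN, from the "Scaling per PSN" table above.
# The SNS-3595 figure applies to ISE 2.1 and later.
MAX_ENDPOINTS_PER_PSN = {
    "SNS-3415": 5_000,
    "SNS-3495": 20_000,
    "SNS-3515": 7_500,
    "SNS-3595": 40_000,
}


def psns_needed(endpoints, platform, spare=0):
    """Smallest PSN count whose combined capacity covers `endpoints`,
    plus `spare` extra nodes (an illustrative redundancy knob)."""
    per_psn = MAX_ENDPOINTS_PER_PSN[platform]
    return math.ceil(endpoints / per_psn) + spare


if __name__ == "__main__":
    print(psns_needed(100_000, "SNS-3595", spare=1))
```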

Appendix E - ISE VM Sizing and Log retention

Note: ISE 2.2 allows up to 60% of the MnT disk to be allocated to logging, covering both RADIUS and TACACS+. ISE MnT purges logs when the disk reaches 80% capacity; for example, on a 600G hard disk, 480G is the effective space available before purging. In ISE 2.1, the total logging allocation is 50%: 20% for TACACS+ and 30% for RADIUS logs.
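The note's arithmetic can be written out as follows. This is a sketch assuming the 80% purge threshold applies to the whole disk (as in the 600G example) and that each log type's share is then taken from that effective space; the function names are illustrative.

```python
PURGE_THRESHOLD = 0.80  # MnT purges logs when the disk reaches 80% capacity


def effective_disk_gb(disk_gb):
    """Disk space usable before purging starts (600G -> 480G in the note)."""
    return disk_gb * PURGE_THRESHOLD


def log_space_gb(disk_gb, allocation):
    """Space for one log type, given its share of the disk.

    Allocation examples from the note: 0.20 (TACACS+, ISE 2.1),
    0.30 (RADIUS, ISE 2.1), 0.60 (logging in ISE 2.2).
    """
    return effective_disk_gb(disk_gb) * allocation


if __name__ == "__main__":
    print(effective_disk_gb(600))
    print(log_space_gb(600, 0.20))
```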

TACACS+ guidance for size of syslogs

| Message size per TACACS+ session | Message size per command authorization (per session) |
|---|---|
| Authentication: 2kB | Command Authorization: 2kB |
| Session Authorization: 2kB | Command Accounting: 1kB |
| Session Accounting: 1kB | |
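Summing the two columns gives the fixed per-session cost and the per-command cost used in the retention formulas later in this appendix (5 kB per session plus 3 kB per command). A small sketch, with illustrative names:

```python
# Syslog message sizes in kB, taken from the TACACS+ guidance table above.
PER_SESSION_KB = {
    "Authentication": 2,
    "Session Authorization": 2,
    "Session Accounting": 1,
}
PER_COMMAND_KB = {
    "Command Authorization": 2,
    "Command Accounting": 1,
}


def message_size_per_session_kb(commands_per_session):
    """Fixed per-session messages plus one set of command messages per command."""
    fixed = sum(PER_SESSION_KB.values())        # 2 + 2 + 1 = 5 kB
    per_command = sum(PER_COMMAND_KB.values())  # 2 + 1 = 3 kB
    return fixed + commands_per_session * per_command


if __name__ == "__main__":
    print(message_size_per_session_kb(10))  # 35
```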

TACACS+ transactions, logs and storage

Human Administrators and Scripted device administrator (Robot) model

Column groups: A = Session Authentication and Accounting Only; B = Command Accounting Only; C = Auth. + Session + Command Authorization + Accounting.

Human Admin model (# Admins, based on 50 admin sessions per day):

| # Admins | A: Avg TPS | A: Peak TPS | A: Logs/Day | A: Storage/Day | B: Avg TPS | B: Peak TPS | B: Logs/Day | B: Storage/Day | C: Avg TPS | C: Peak TPS | C: Logs/Day | C: Storage/Day |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | < 1 | < 1 | 150 | < 1MB | < 1 | < 1 | 650 | 1MB | < 1 | < 1 | 1.2k | 2MB |
| 5 | < 1 | < 1 | 750 | 1MB | < 1 | < 1 | 3.3k | 4MB | < 1 | < 1 | 5.8k | 9MB |
| 10 | < 1 | < 1 | 1.5k | 3MB | < 1 | < 1 | 6.5k | 8MB | < 1 | 1 | 11.5k | 17MB |
| 25 | < 1 | < 1 | 3.8k | 7MB | < 1 | 1 | 16.3k | 19MB | < 1 | 2 | 28.8k | 43MB |
| 50 | < 1 | 1 | 7.5k | 13MB | < 1 | 2 | 32.5k | 37MB | 1 | 4 | 57.5k | 86MB |
| 100 | < 1 | 1 | 15k | 25MB | 1 | 4 | 65k | 73MB | 2 | 8 | 115k | 171MB |

Scripted Device Admin model (# NADs, based on 4 scripted sessions per day):

| # NADs | A: Avg TPS | A: Peak TPS | A: Logs/Day | A: Storage/Day | B: Avg TPS | B: Peak TPS | B: Logs/Day | B: Storage/Day | C: Avg TPS | C: Peak TPS | C: Logs/Day | C: Storage/Day |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 500 | < 1 | 5 | 6k | 10MB | < 1 | 22 | 26k | 30MB | 1 | 38 | 46k | 70MB |
| 1,000 | < 1 | 10 | 12k | 20MB | 1 | 43 | 52k | 60MB | 1 | 77 | 92k | 140MB |
| 5,000 | < 1 | 50 | 60k | 100MB | 3 | 217 | 260k | 300MB | 5 | 383 | 460k | 700MB |
| 10,000 | 1 | 100 | 120k | 200MB | 6 | 433 | 520k | 600MB | 11 | 767 | 920k | 1.4GB |
| 20,000 | 3 | 200 | 240k | 400MB | 12 | 867 | 1.04M | 1.2GB | 21 | 1.5k | 1.84M | 2.7GB |
| 30,000 | 5 | 300 | 360k | 600MB | 18 | 1.3k | 1.56M | 1.7GB | 32 | 2.3k | 2.76M | 4.0GB |
| 50,000 | 7 | 500 | 600k | 1GB | 30 | 2.2k | 2.6M | 2.9GB | 53 | 3.8k | *4.6M | 6.7GB |

* Red (marked with an asterisk) indicates logs/day volumes that will cause performance hits and slowness in log processing.
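The log counts in the table appear to follow directly from the message types listed earlier: each session produces 3 log messages, and each command adds 1 (command accounting only) or 2 (command authorization plus accounting). The sketch below reproduces the Logs/Day column under that reading, assuming 10 commands per session as in the sizing examples that follow; average TPS is then simply logs per day divided by 86,400 seconds. Names are illustrative, and the Peak TPS and Storage/Day columns are not modeled.

```python
SECONDS_PER_DAY = 86_400
SESSION_LOGS = 3  # authentication, session authorization, session accounting

# Extra log messages generated per command under each column group.
LOGS_PER_COMMAND = {
    "session_only": 0,        # A: Session Authentication and Accounting Only
    "command_accounting": 1,  # B: adds Command Accounting
    "full": 2,                # C: adds Command Authorization and Command Accounting
}


def logs_per_day(sources, sessions_per_day, commands_per_session, model):
    """sources = admins (human model) or NADs (scripted model)."""
    per_session = SESSION_LOGS + commands_per_session * LOGS_PER_COMMAND[model]
    return sources * sessions_per_day * per_session


def average_tps(daily_logs):
    """Logs spread evenly over a day."""
    return daily_logs / SECONDS_PER_DAY


if __name__ == "__main__":
    human = logs_per_day(50, 50, 10, "full")        # 50 admins, 50 sessions/day
    scripted = logs_per_day(50_000, 4, 10, "full")  # 50,000 NADs, 4 sessions/day
    print(human, scripted, round(average_tps(scripted)))  # 57500 4600000 53
```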

TACACS+ log retention (# of days):

The static tables below cover common cases. Advanced users who want to customize the number of commands, sessions, etc. can use the ISE MnT Log Sizing Calculator for TACACS+ and RADIUS.

Scripted Device Admin Model

- Number of sessions per day: 4

- Number of commands per session: 10

- Message size/session (kB) = 5kB + Number of commands/session * 3kB

- Automated access (single script) log size/day = Number of devices * 4 sessions * Message size

- E.g.: Log size for 30k network devices = 4GB/day
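The scripted-model formula above, as a short Python sketch (function names are illustrative). It treats 1 GB as 1024 × 1024 kB, which is consistent with the 4GB/day example.

```python
def message_size_kb(commands_per_session):
    """Message size per session: 5 kB plus 3 kB per command."""
    return 5 + 3 * commands_per_session


def scripted_kb_per_day(devices, sessions_per_day=4, commands_per_session=10):
    """Automated access (single script): devices * sessions * message size."""
    return devices * sessions_per_day * message_size_kb(commands_per_session)


if __name__ == "__main__":
    kb = scripted_kb_per_day(30_000)
    print(f"{kb / 1024 ** 2:.1f} GB/day")  # 4.0 GB/day (1 GB taken as 1024 * 1024 kB)
```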

ISE 2.1 (20% disk allocation):

| # of Network Devices in the deployment | 200 GB | 400 GB | 600 GB | 1024 GB | 2048 GB |
|---|---|---|---|---|---|
| 500 | 480 | 959 | 1439 | 2455 | 4909 |
| 1000 | 240 | 480 | 720 | 1228 | 2455 |
| 5000 | 48 | 96 | 144 | 246 | 491 |
| 10000 | 24 | 48 | 72 | 123 | 246 |
| 20000 | 12 | 24 | 36 | 62 | 123 |
| 30000 | 8 | 16 | 24 | 41 | 82 |
| 50000 | 5 | 10 | 15 | 25 | 50 |
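The retention values in this table appear to be the allocated share of the disk, reduced by the 80% purge threshold, divided by the daily log size and rounded up. A minimal sketch under that assumption (the rounding rule and the 1 GB = 1024 × 1024 kB conversion are inferred from the table, not documented; `retention_days` is an illustrative name). Passing an allocation of 0.60 instead gives the corresponding ISE 2.2 figures for most rows of the next table.

```python
import math

KB_PER_GB = 1024 ** 2   # assumption: 1 GB = 1024 * 1024 kB
PURGE_THRESHOLD = 0.80  # MnT purges at 80% disk capacity


def retention_days(disk_gb, allocation, log_kb_per_day):
    """Days of logs that fit in the allocated share of the disk, rounded up."""
    usable_kb = disk_gb * KB_PER_GB * allocation * PURGE_THRESHOLD
    return math.ceil(usable_kb / log_kb_per_day)


if __name__ == "__main__":
    daily_kb = 500 * 4 * 35  # 500 devices, 4 sessions/day, 35 kB per session
    row = [retention_days(d, 0.20, daily_kb) for d in (200, 400, 600, 1024, 2048)]
    print(row)  # [480, 959, 1439, 2455, 4909]
```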

ISE 2.2 (60% disk allocation):

| # Network Devices | 200 GB | 400 GB | 600 GB | 1024 GB | 2048 GB |
|---|---|---|---|---|---|
| 500 | 1439 | 2877 | 4315 | 7363 | 14726 |
| 1,000 | 720 | 1439 | 2158 | 3682 | 7363 |
| 5,000 | 144 | 288 | 432 | 737 | 1473 |
| 10,000 | 72 | 144 | 216 | 369 | 737 |
| 25,000 | 29 | 58 | 87 | 148 | 293 |
| 50,000 | 15 | 29 | 44 | 74 | 147 |
| 75,000 | 9 | 19 | 29 | 49 | 91 |
| 100,000 | 7 | 14 | 22 | 27 | 73 |

Human Device Admin Model

Device admin sizing uses the sample numbers of sessions and commands shown below.

- Number of sessions per admin per day: 50

- Number of commands/session: 10

- Message size/session (kB) = 5kB + Number of commands/session * 3kB

- Manual access log size/day = 50 sessions * N admins * Message size

- E.g.: Log size for 50 admins = 85.4MB/day
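The human-model formula, sketched in Python. The retention table below appears to assume the ISE 2.1 TACACS+ share of 20% together with the 80% purge threshold; that allocation is an inference from the numbers, so it is kept as a named constant. Function names are illustrative.

```python
import math

KB_PER_GB = 1024 ** 2     # assumption: 1 GB = 1024 * 1024 kB
PURGE_THRESHOLD = 0.80    # MnT purges at 80% disk capacity
TACACS_ALLOCATION = 0.20  # inferred: the table below appears to use the ISE 2.1 share
DISK_SIZES_GB = (200, 400, 600, 1024, 2048)


def human_kb_per_day(admins, sessions_per_day=50, commands_per_session=10):
    """Manual access: sessions/day * admins * (5 kB + commands * 3 kB)."""
    return sessions_per_day * admins * (5 + 3 * commands_per_session)


def retention_row(admins):
    """Retention in days for each disk size, rounded up."""
    daily_kb = human_kb_per_day(admins)
    return [
        math.ceil(disk * KB_PER_GB * TACACS_ALLOCATION * PURGE_THRESHOLD / daily_kb)
        for disk in DISK_SIZES_GB
    ]


if __name__ == "__main__":
    print(f"{human_kb_per_day(50) / 1024:.1f} MB/day")  # 85.4 MB/day
    print(retention_row(50))  # [384, 767, 1151, 1964, 3927]
```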

| Number of Admins | 200 GB | 400 GB | 600 GB | 1024 GB | 2048 GB |
|---|---|---|---|---|---|
| 5 | 3835 | 7670 | 11505 | 19635 | 39269 |
| 10 | 1918 | 3835 | 5753 | 9818 | 19635 |
| 20 | 959 | 1918 | 2877 | 4909 | 9818 |
| 30 | 640 | 1279 | 1918 | 3273 | 6545 |
| 40 | 480 | 959 | 1439 | 2455 | 4909 |
| 50 | 384 | 767 | 1151 | 1964 | 3927 |

RADIUS Log retention (# of days)

- Number of authentications per day per endpoint: 10

- Custom disk size (GB): 500

- Allocated MnT table space (GB): 120

- Message size per auth. (kB): 4

- Log size/day = Number of endpoints * 10 auth./day * Message size
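The RADIUS formula works the same way. In this sketch (names illustrative), the 120 GB table space is the 500 GB custom disk × 30% (ISE 2.1 RADIUS share) × 80% purge threshold, which appears to be how the Custom column in the table below was derived; retention is rounded up, a rule inferred from the table values rather than documented.

```python
import math

KB_PER_GB = 1024 ** 2   # assumption: 1 GB = 1024 * 1024 kB
PURGE_THRESHOLD = 0.80  # MnT purges at 80% disk capacity


def radius_kb_per_day(endpoints, auths_per_day=10, message_kb=4):
    """Log size/day = endpoints * auths/day * message size."""
    return endpoints * auths_per_day * message_kb


def retention_days(disk_gb, allocation, log_kb_per_day):
    """Days of logs that fit in the allocated share of the disk, rounded up."""
    usable_kb = disk_gb * KB_PER_GB * allocation * PURGE_THRESHOLD
    return math.ceil(usable_kb / log_kb_per_day)


if __name__ == "__main__":
    daily_kb = radius_kb_per_day(10_000)           # 400,000 kB/day
    table_space_gb = 500 * 0.30 * PURGE_THRESHOLD  # table space on the custom disk
    print(round(table_space_gb), retention_days(500, 0.30, daily_kb))  # 120 315
```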

ISE 2.1 (30% disk allocation):

| Number of Endpoints | 200 GB | 400 GB | 600 GB | 1024 GB | 2048 GB | Custom (500 GB) |
|---|---|---|---|---|---|---|
| 10,000 | 126 | 252 | 378 | 645 | 1,289 | 315 |
| 20,000 | 63 | 126 | 189 | 323 | 645 | 158 |
| 30,000 | 42 | 84 | 126 | 215 | 430 | 105 |
| 40,000 | 32 | 63 | 95 | 162 | 323 | 79 |
| 50,000 | 26 | 51 | 76 | 129 | 258 | 63 |
| 100,000 | 13 | 26 | 38 | 65 | 129 | 32 |
| 150,000 | 9 | 17 | 26 | 43 | 86 | 21 |
| 200,000 | 7 | 13 | 19 | 33 | 65 | 16 |
| 250,000 | 6 | 11 | 16 | 26 | 52 | 13 |

Note: The values above are based on controlled criteria, including event suppression, duplicate detection, message size, and re-authentication interval; results may vary depending on the environment.

ISE 2.2 (60% disk allocation):

| Total Endpoints | 200 GB (days) | 400 GB (days) | 600 GB (days) | 1024 GB (days) | 2048 GB (days) |
|---|---|---|---|---|---|
| 5,000 | 504 | 1007 | 1510 | 2577 | 5154 |
| 10,000 | 252 | 504 | 755 | 1289 | 2577 |
| 25,000 | 101 | 202 | 302 | 516 | 1031 |
| 50,000 | 51 | 101 | 151 | 258 | 516 |
| 100,000 | 26 | 51 | 76 | 129 | 258 |
| 150,000 | 17 | 34 | 51 | 86 | 172 |
| 200,000 | 13 | 26 | 38 | 65 | 129 |
| 250,000 | 11 | 21 | 31 | 52 | 104 |
| 500,000 | 6 | 11 | 16 | 26 | 52 |


Could you please update this paper to include ISE 2.4? Thanks //ping


You can view the ISE 2.4 upgrade guide at the following link:

https://www.cisco.com/c/en/us/td/docs/security/ise/2-4/upgrade_guide/b_ise_upgrade_guide_24.html

If you have a question, please post it on the ISE community page: http://cs.co/ise-community


While this is a great document, I do have some follow-up questions:

Upgrades (Dumb question but seeking validation)

- Customer is running 2.2P5 and will be upgrading to 2.2P9. Could we still use URT for this process?

Backup

- Based on the size of the DB, I understand the backup process can take multiple hours. Is the best practice here to run the backup the night before your scheduled upgrade/maintenance window? If so, should the customer have a change freeze in place for 24 hours?

- What impact does the backup process have during production hours? Is the best practice here to run the backup during off-hours?

Restore

- Does a restore take the same amount of time as the backup?


Hi Tony,

Thanks for your initial comments. As discussed over Jabber, URT is a tool that verifies upgrade sanity for the PAN between two releases by simulating an upgrade using your configuration database.

In your situation, you are applying patches. Please make sure you do this during a downtime window. Patches have to be applied to all ISE nodes in the deployment.

The admin backup may take some time, depending on the configuration, policies, endpoints, etc.

It is the operations backup that takes time; again, this depends on the number of endpoints, the services you are running, etc.

Customers need to take a backup whenever there is a configuration change, or at regular intervals.