ACI L3OUT over vPC with A LOT OF SVIs

01-14-2021 06:40 PM - edited 01-14-2021 06:43 PM

Hello Everyone,

I am preparing to migrate a data center with 10 VRFs, 121 VLANs, and 93 SVIs. The brownfield is pretty straightforward: I have two 7Ks at the core, where all the VRFs, VLANs, and SVIs are defined, and six 5548 switches with a lot of 2248 FEXes connected. The FEXes are nearing end-of-life, so I need to migrate everything to the ACI fabric in a network-centric model. Once I am off the FEXes, I plan to start moving to an application-centric configuration model.

I have the L2 figured out. I plan to connect the two Nexus 7Ks to two border leaf switches and trunk all 121 VLANs to the ACI fabric through two port channels. What I am having trouble wrapping my mind around is setting up the L3OUT. I am routing with OSPF on the 7Ks. Everything I have read, and every example I have seen, shows a single VLAN configured on a single L3OUT. What is the best approach to moving 93 SVIs from a legacy network to ACI?

The 93 SVIs are currently split across 10 different VRFs, so I still need to keep those routing processes separate. I know I could set up each one as a separate tenant or a separate VRF. For now, I plan on setting each one up as a separate VRF, but I was wondering if there was a way to use a single VRF and split it into separate L3OUTs within that VRF.

If I were migrating from two 7Ks to another pair of traditional switches, I would just trunk the VLANs and move the SVIs. I know there are a couple of ways to move these to ACI, but some of the methods seem either complex or cumbersome to manage. I've been thinking about scripting some of the configuration through the REST API if manually configuring each VLAN SVI is going to be a complex process.

Thanks in advance for any help.


Accepted Solutions


01-20-2021 01:20 AM

Hi @bburns2

The best way to migrate a large number of SVIs to ACI is definitely automation. I have used Ansible in the past, which has both good and bad sides. Python or simply Postman might be good alternatives.

Below is an example of the Ansible playbook I presented at CiscoConnect. It shuts down the SVI on NX-OS, then enables routing on the BD:

```yaml
- name: Perform Layer-3 Migration (NXOS)
  hosts: nxos
  gather_facts: False
  tasks:
    - name: Shutdown VLAN in existing Network
      nxos_config:
        lines:
          - shutdown
        parents: interface Vlan150

- name: Perform Layer-3 Migration (ACI)
  hosts: aci
  gather_facts: False
  tasks:
    - name: Change BD VLAN150 to L3 Mode
      aci_bd:
        host: '{{ inventory_hostname }}'
        username: '{{ apic_username }}'
        password: '{{ apic_password }}'
        validate_certs: false
        tenant: CiscoConnect2020
        vrf: DEV
        bd: VLAN150
        enable_routing: yes
        arp_flooding: no
        l2_unknown_unicast: proxy
        l3_unknown_multicast: flood
        multi_dest: bd-flood
        state: present
      delegate_to: localhost

    - name: Change subnet on BD VLAN150 to advertise externally
      aci_bd_subnet:
        host: '{{ inventory_hostname }}'
        username: '{{ apic_username }}'
        password: '{{ apic_password }}'
        validate_certs: false
        tenant: CiscoConnect2020
        bd: VLAN150
        gateway: 10.0.150.254
        mask: 24
        scope: public
        state: present
      # Run the APIC REST call from the control node, like the task above
      delegate_to: localhost
```

To make it practical, you just need to run each task through a list (with_items).
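The list for that loop has to come from somewhere. A minimal sketch, assuming the legacy SVIs are taken from `show running-config` on the 7Ks in the usual `interface VlanN` / `vrf member` / `ip address a.b.c.d/n` form, and assuming the `VLAN<id>` BD naming used in the playbook; `parse_svis` is a hypothetical helper, not part of any Cisco or Ansible tool. Its output could be written to a vars file and looped over with `with_items`.

```python
import ipaddress
import re


def parse_svis(running_config: str) -> list[dict]:
    """Extract VLAN, VRF, gateway and mask from NX-OS 'interface VlanN' blocks.

    Hypothetical helper: assumes CIDR-form 'ip address a.b.c.d/n' lines and
    the 'VLAN<id>' bridge-domain naming used in the example playbook.
    """
    items = []
    current = None
    for raw in running_config.splitlines():
        line = raw.strip()
        match = re.fullmatch(r"interface Vlan(\d+)", line)
        if match:
            current = {"vlan": int(match.group(1)), "vrf": "default"}
            items.append(current)
            continue
        if not raw.startswith((" ", "\t")):
            # Any other top-level (or blank) line ends the current SVI block.
            current = None
            continue
        if current is None:
            continue
        if line.startswith("vrf member "):
            current["vrf"] = line.split()[-1]
        elif line.startswith("ip address ") and "secondary" not in line:
            iface = ipaddress.ip_interface(line.split()[2])
            current["gateway"] = str(iface.ip)
            current["mask"] = iface.network.prefixlen
    # Keep only SVIs that had a primary IPv4 address, and add the BD name.
    return [{**item, "bd": f"VLAN{item['vlan']}"} for item in items if "gateway" in item]


sample = """\
interface Vlan150
  no shutdown
  vrf member DEV
  ip address 10.0.150.254/24

interface Vlan200
  ip address 10.0.200.1/24
"""
print(parse_svis(sample))
```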

Stay safe,

Sergiu


01-20-2021 05:42 AM - edited 01-20-2021 05:45 AM

Another easy way to create multiple objects is to use the Postman Collection Runner with a CSV file as input. Create the first SVI manually, save it as JSON, and replace whatever needs to change with {{VARIABLE}}. The script is ready.

Here is the JSON for the SVI:

```json
{
  "totalCount": "1",
  "imdata": [
    {
      "fvSubnet": {
        "attributes": {
          "ctrl": "",
          "descr": "",
          "dn": "uni/tn-{{TENANT}}/BD-{{BD_NAME}}/subnet-[{{SUBNET}}{{NETMASK}}]",
          "ip": "{{SUBNET}}{{NETMASK}}",
          "name": "",
          "nameAlias": "",
          "preferred": "{{PRIMARY}}",
          "virtual": "{{VIRTUAL}}",
          "scope": "{{SCOPE}}",
          "status": "created,modified"
        }
      }
    }
  ]
}
```
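What the runner does with the CSV file can be reproduced in a few lines, which also lets you check the rendered payloads before sending anything. A minimal sketch, assuming CSV column headers that match the template variables (`TENANT`, `BD_NAME`, `SUBNET`, `NETMASK`, `PRIMARY`, `VIRTUAL`, `SCOPE`) and a `NETMASK` column that includes the leading slash (for example `/24`), since the template concatenates `{{SUBNET}}{{NETMASK}}` directly. The template is shortened to the attributes that carry variables, and `render_rows` is a hypothetical helper.

```python
import csv
import io
import json
import re

# Shortened copy of the fvSubnet template; attributes without variables omitted.
TEMPLATE = """{
  "fvSubnet": {
    "attributes": {
      "dn": "uni/tn-{{TENANT}}/BD-{{BD_NAME}}/subnet-[{{SUBNET}}{{NETMASK}}]",
      "ip": "{{SUBNET}}{{NETMASK}}",
      "preferred": "{{PRIMARY}}",
      "virtual": "{{VIRTUAL}}",
      "scope": "{{SCOPE}}",
      "status": "created,modified"
    }
  }
}"""


def render_rows(template: str, csv_text: str) -> list[dict]:
    """Substitute {{VARIABLE}} placeholders once per CSV row, like one runner iteration."""
    payloads = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # A placeholder without a matching CSV column raises KeyError.
        body = re.sub(r"\{\{(\w+)\}\}", lambda m: row[m.group(1)], template)
        payloads.append(json.loads(body))  # fails loudly on malformed JSON
    return payloads


rows = """TENANT,BD_NAME,SUBNET,NETMASK,PRIMARY,VIRTUAL,SCOPE
Prod,VLAN150,10.0.150.254,/24,no,no,public
Prod,VLAN151,10.0.151.254,/24,no,no,"public,shared"
"""
for payload in render_rows(TEMPLATE, rows):
    print(payload["fvSubnet"]["attributes"]["dn"])
```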


01-19-2021 06:37 PM

In general, with the migration link extending all VLANs from your 7K core, you can migrate all connected endpoints to ACI while still doing L3 routing on the 7Ks. Afterwards, you shut down each SVI on the 7K and enable routing on the corresponding BD. How you will route between VRFs in ACI is a different topic.
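The final cutover (shut the SVI on the 7K, enable routing on the BD) is often easier to control in small batches with a rollback ready for each one. A minimal sketch that only generates the NX-OS configuration lines per batch and pushes nothing; the batch size and the `cutover_batches` helper are hypothetical. Each batch would be paired with the matching BD changes, as in the Ansible playbook above.

```python
def cutover_batches(vlans: list[int], batch_size: int = 5):
    """Yield (vlans, apply_lines, rollback_lines) for each batch of SVIs.

    Hypothetical helper: the lines are plain NX-OS config-mode commands.
    """
    ordered = sorted(set(vlans))  # de-duplicate and migrate in VLAN order
    for start in range(0, len(ordered), batch_size):
        batch = ordered[start:start + batch_size]
        apply_lines, rollback_lines = [], []
        for vlan in batch:
            apply_lines += [f"interface Vlan{vlan}", "  shutdown"]
            rollback_lines += [f"interface Vlan{vlan}", "  no shutdown"]
        yield batch, apply_lines, rollback_lines


for batch, apply_cfg, rollback_cfg in cutover_batches([150, 151, 152, 160], batch_size=3):
    print("batch", batch)
    print("\n".join(apply_cfg))
```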


01-20-2021 06:54 AM

Thanks for the response. We currently route between VRFs through a couple of next-gen firewalls. We also have a couple of inline taps for a packet aggregator, which forwards traffic to other security tools for packet inspection. Our plan is to move all the SVIs into ACI so we can sunset the legacy network. During this process, we would move the existing firewall connections to the ACI border leaf and continue to route inter-VRF traffic through the physical next-gen firewall until we can move to an application-centric model. Our goal is to eventually move to an application-centric deployment model and introduce micro-segmentation. This is obviously going to take a significant amount of time to fully migrate, so we need to be able to support the legacy environment during this process.


01-20-2021 07:26 AM

Thanks Sergiu,

We currently use Ansible Tower in our CI/CD pipeline, so I was planning to use Ansible to manage the ACI object creation. I have a playbook I have been testing with https://sandboxapicdc.cisco.com/.

I assume I can add multiple SVIs to an L3OUT logical interface profile, or do I need to create a separate L3OUT for each SVI?

Thanks


01-20-2021 07:35 AM

For the L3Out you only need one SVI (or even a routed port). This is basically an L3 connection for routing to external subnets.

The 93 SVIs which are currently configured on the Nexus switches, and correct me if I am wrong, are the gateways for your servers. These will be configured as subnets on the BDs, which you mark as "Advertised Externally" so they are advertised as prefixes to the L3Out router.
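In REST terms, each server gateway then becomes a subnet on its BD. A minimal sketch of one such payload, with placeholder tenant, VRF, BD, and L3Out names; it uses the APIC classes `fvBD` (with `unicastRoute` enabled), `fvRsCtx`, `fvSubnet` with `scope` set to `public` (Advertised Externally), and `fvRsBDToOut`, which associates the BD with the L3Out so the subnet can be advertised. It only builds the JSON body (an `fvTenant` tree that could be posted to `/api/mo/uni.json`); authentication and posting are left out.

```python
import ipaddress
import json


def bd_payload(tenant: str, vrf: str, bd: str, gateway_cidr: str, l3out: str) -> dict:
    """fvTenant tree with one routed BD whose gateway subnet is advertised externally."""
    iface = ipaddress.ip_interface(gateway_cidr)  # e.g. "10.0.150.254/24"
    if iface.ip == iface.network.network_address:
        raise ValueError("gateway must be a host address, not the network address")
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{
                "fvBD": {
                    "attributes": {"name": bd, "unicastRoute": "yes"},
                    "children": [
                        {"fvRsCtx": {"attributes": {"tnFvCtxName": vrf}}},
                        {"fvSubnet": {"attributes": {"ip": str(iface), "scope": "public"}}},
                        {"fvRsBDToOut": {"attributes": {"tnL3extOutName": l3out}}},
                    ],
                }
            }],
        }
    }


print(json.dumps(bd_payload("Prod", "DEV", "VLAN150", "10.0.150.254/24", "L3OUT-DEV"), indent=2))
```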

Stay safe,

Sergiu


01-20-2021 07:59 AM

Thanks Sergiu!

That makes sense, but I do have a couple of SVIs that peer with our F5 load balancers and VPN concentrators. Those are currently physical, so I assume I would need an additional L3OUT SVI for those. For example, in my server VRF, would I have one L3OUT to peer with the firewall and the 7K (currently a Layer 2 VLAN), and another L3OUT SVI to peer with the F5 load balancer? The rest of the SVIs would be defined as subnet gateways for an external bridged network, and I would define an external bridged network for each of the VLANs?

Thanks for any help.


01-20-2021 09:50 AM - edited 01-20-2021 10:07 AM

Actually, an L3OUT can use an SVI, a routed interface, or a routed sub-interface. In each case it acts as a kind of "routed port", on which you can run static routes, BGP, or OSPF. For security (the zoning table), the L3OUT has one or more "network EPGs" (external EPGs) that define what is on the other side of the L3OUT, and you need to take care of the contracts, use a preferred group, or make the VRF unenforced. If you have a transit VRF (two L3OUTs with networks that need to talk to each other), the network EPG subnets must be configured with the proper settings for transit routing.
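To illustrate the network EPG part, here is a sketch of an external EPG (`l3extInstP`) under an existing L3OUT that classifies all external prefixes with a `0.0.0.0/0` subnet (`import-security` scope) and consumes a contract, plus the VRF-wide alternative of setting `pcEnfPref` to `unenforced`. The tenant, L3OUT, EPG, and contract names are placeholders, and the catch-all `0.0.0.0/0` is an assumption that fits a simple single-L3OUT VRF, not necessarily a transit-routing design.

```python
def external_epg_payload(tenant: str, l3out: str, ext_epg: str, contract: str,
                         prefixes=("0.0.0.0/0",)) -> dict:
    """fvTenant tree adding one external (network) EPG to an existing L3OUT."""
    # import-security: classify traffic from these prefixes into this external EPG
    subnets = [
        {"l3extSubnet": {"attributes": {"ip": p, "scope": "import-security"}}}
        for p in prefixes
    ]
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{
                "l3extOut": {
                    "attributes": {"name": l3out},
                    "children": [{
                        "l3extInstP": {
                            "attributes": {"name": ext_epg},
                            "children": subnets + [
                                {"fvRsCons": {"attributes": {"tnVzBrCPName": contract}}}
                            ],
                        }
                    }],
                }
            }],
        }
    }


def unenforced_vrf_payload(tenant: str, vrf: str) -> dict:
    """Alternative: disable contract enforcement for the whole VRF."""
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{"fvCtx": {"attributes": {"name": vrf, "pcEnfPref": "unenforced"}}}],
        }
    }
```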


01-24-2021 07:08 AM - edited 01-24-2021 07:11 AM

Thanks for all the great information. I think I understand a little better now. For gateways that need to peer with external OSPF neighbors, I need to add the gateway to an L3OUT logical interface profile. If the gateway IP does not need to peer with an external OSPF router, I would add the gateway to the Bridge Domain, then bind it to the L3OUT EPG with a contract. The only other question I have is: how do I assign the tenant Bridge Domain gateway to a specific VLAN?

For example, If I have a gateway IP of 10.10.100.1/24 in a Bridge Domain, how would I bind or map this to VLAN 100 that is in the VLAN pool assigned to a physical domain or external bridged network?

Since I have external clients that need to use this IP as a default gateway, does this mean I need to assign the gateway IP to an L3OUT logical interface profile instead of a Bridge Domain subnet gateway? If that is the case, then when I have migrated all of my endpoints to ACI, do I need to move this gateway from an L3OUT to a Bridge Domain subnet?


01-24-2021 09:14 AM

Hi @bburns2

You "assign" VLANs to EPGs, not BDs, and you do that in one of two ways: through a static binding, or through the AAEP. For both options, though, the configuration involves more than just the specific thing I highlighted.
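For the VLAN 100 example, the VLAN becomes the encapsulation of an EPG's static path binding, and the EPG points to the BD that holds 10.10.100.1/24. A minimal sketch of that payload, assuming a vPC between leaves 101 and 102 in pod 1 with a vPC interface policy group named `vpc-to-7k`, a physical domain named `legacy-phys` whose VLAN pool contains VLAN 100, and an application profile named `legacy`; all of these names are hypothetical. The `tDn` follows the APIC `topology/pod-X/protpaths-A-B/pathep-[name]` format for vPC paths.

```python
def epg_static_binding_payload(tenant: str, app: str, epg: str, bd: str, vlan: int,
                               pod: int, leaf_a: int, leaf_b: int,
                               vpc_policy_group: str, phys_domain: str) -> dict:
    """fvTenant tree: one EPG tied to a BD, a physical domain and a vPC static binding."""
    if not 1 <= vlan <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    path = f"topology/pod-{pod}/protpaths-{leaf_a}-{leaf_b}/pathep-[{vpc_policy_group}]"
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{
                "fvAp": {
                    "attributes": {"name": app},
                    "children": [{
                        "fvAEPg": {
                            "attributes": {"name": epg},
                            "children": [
                                {"fvRsBd": {"attributes": {"tnFvBDName": bd}}},
                                {"fvRsDomAtt": {"attributes": {"tDn": f"uni/phys-{phys_domain}"}}},
                                # mode "regular" = tagged (trunk) on the vPC toward the 7Ks
                                {"fvRsPathAtt": {"attributes": {
                                    "tDn": path, "encap": f"vlan-{vlan}", "mode": "regular"}}},
                            ],
                        }
                    }],
                }
            }],
        }
    }
```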

And because of that, I think there are some knowledge gaps you need to cover first, before starting any migration to ACI, knowledge which cannot be learned through comments here on Cisco Community. Of course, you can always ask questions to clarify things which are not clear, but I would not suggest trying to learn the basics here on the community.

As a very good starting point for learning ACI, I would suggest the following free resources:

1. Cisco Live - https://www.ciscolive.com, where there are a lot of on-demand videos from previous Cisco Live events.

One which I recommend starting with is: Your First 7 Days of ACI - DGTL-BRKACI-1001

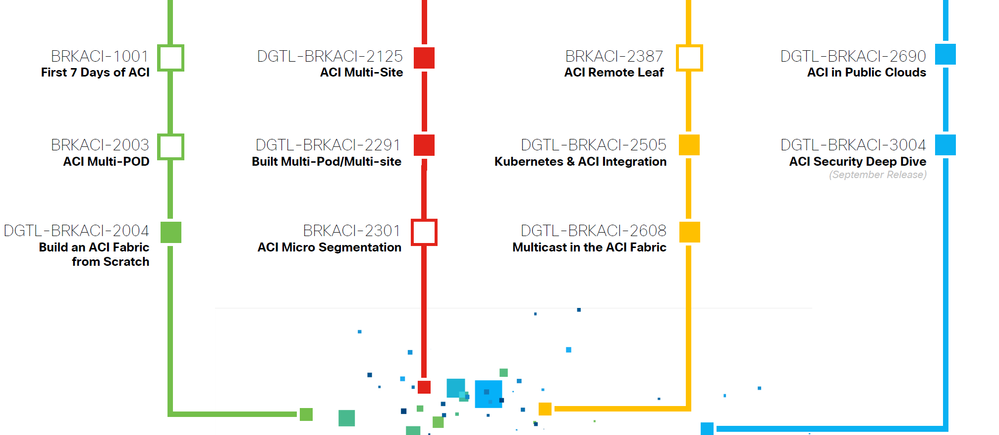

Here is a learning map for ACI:

Start from left to right. But I guess Green and Red might be more than sufficient for most deployments. Yellow and Blue are very niche.

2. ACI White Papers and Configuration Guides - https://www.cisco.com/c/en/us/support/cloud-systems-management/application-policy-infrastructure-controller-apic/tsd-products-support-series-home.html

Start with the Fundamentals and Getting Started guides.

Trust me, in the long run it will be more useful for you to go through these materials first, rather than trying to configure small pieces here and there based on community suggestions and hoping it will work.

Stay safe,

Sergiu


01-25-2021 10:45 AM

@Sergiu.Daniluk, thanks for the information. I've actually read through a lot of that content already, along with the different ACI design guides and the ACI dCloud and DevNet sandboxes and labs. We have a lab environment that mirrors our production environment, and I am trying to put this information into practice to evaluate different migration strategies. Like @6askorobogatov mentioned, I think I am making things more complicated than they have to be. I was hoping to find a way to work around the 1 VLAN = 1 EPG = 1 BD mapping. This seems time-consuming and cumbersome to configure manually for 93 VLANs, so I will more than likely use Ansible to deploy configurations from JSON templates.
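Because the network-centric model is a strict 1 VLAN = 1 EPG = 1 BD mapping, the per-VLAN objects can be planned from a single list before any template is rendered. A minimal sketch of that planning step, assuming a `VLAN<id>` naming convention for both BD and EPG and a VLAN pool described as a list of inclusive ranges; it fails early if a VLAN to migrate is not covered by the pool of the physical domain, which would otherwise typically surface as a fault on the EPG binding. `plan_network_centric` is a hypothetical helper.

```python
def plan_network_centric(vlans, pool_ranges):
    """Map each VLAN to a BD and EPG of the same name; reject VLANs outside the pool.

    pool_ranges: list of inclusive (start, end) tuples, e.g. [(100, 199), (300, 350)].
    """
    missing = [v for v in vlans if not any(lo <= v <= hi for lo, hi in pool_ranges)]
    if missing:
        raise ValueError(f"VLANs not in pool: {sorted(missing)}")
    return [
        {"vlan": v, "bd": f"VLAN{v}", "epg": f"VLAN{v}", "encap": f"vlan-{v}"}
        for v in sorted(set(vlans))
    ]


plan = plan_network_centric([150, 100, 151], pool_ranges=[(100, 199)])
print(len(plan), plan[0])
```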


01-24-2021 11:51 AM - edited 01-24-2021 03:41 PM

I think you are overcomplicating this. Forget about ACI for a minute. You have a VRF, OK? You have L2 VLANs (BDs) with SVIs (the BD L3 interfaces).

Everything in that VRF can talk to each other over L3 (all subnets are directly connected to the VRF), and now you need to get outside of the VRF. That is when you create a routed port and configure static or dynamic routing (the L3OUT).

If you need to communicate with other VRFs on the fabric, you may have an L3OUT on VRF1 connected to an L3 device outside of the fabric (FW, router, etc.) and an L3OUT on VRF2 connected to the other side of that FW/router.

Another mechanism is to use inter-VRF contracts to leak routes between VRFs inside the fabric. Between tenants, there will be exported contracts and contract interfaces.
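For the inter-VRF contract option inside one tenant, two pieces are usually involved: a contract whose scope is wider than a single VRF (`tenant` or `global` instead of the default `context`), and the subnets to be leaked flagged as shared between VRFs (`shared` in the subnet scope). A minimal sketch of those two pieces with placeholder names; it leaves out the provider and consumer relations on the EPGs, and the inter-tenant case would additionally need the exported contract and contract interface objects.

```python
def inter_vrf_contract(tenant: str, name: str, scope: str = "tenant") -> dict:
    """Contract usable across VRFs; the default APIC contract scope is 'context' (one VRF)."""
    if scope not in ("tenant", "global"):
        raise ValueError("inter-VRF contracts need scope 'tenant' or 'global'")
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{
                "vzBrCP": {
                    "attributes": {"name": name, "scope": scope},
                    "children": [{
                        "vzSubj": {
                            "attributes": {"name": "any"},
                            # 'default' resolves to the permit-all filter in tenant common
                            "children": [{"vzRsSubjFiltAtt": {"attributes": {"tnVzFilterName": "default"}}}],
                        }
                    }],
                }
            }],
        }
    }


def mark_shared(scope: str) -> str:
    """Add 'shared' to an fvSubnet scope string, e.g. 'public' -> 'public,shared'."""
    flags = [f for f in scope.split(",") if f]
    if "shared" not in flags:
        flags.append("shared")
    return ",".join(flags)
```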
