- High Availability and Scalability Design and Deployment of Cisco Firepower Threat Defense Virtual in...

06-25-2020 03:12 PM - edited 06-29-2020 11:13 PM

- Introduction

- Prerequisites

- FTDv in Azure Design Considerations

- FTDv in Azure Design Scenarios

- Single Group of FTDv firewalls

- Inbound from Internet

- Outbound from the workloads to the internet

- E/W traffic inside Azure, or Between Azure and On-prem

- Azure Load Balancer Health Probes

- Two Groups of FTDv firewalls, Separating Internet and E/W traffic

- Multiple IP Address Assignment to Allow One to One Static NAT

- FTDv in Azure Deployment Example

- Step 1. Create a Resource Group

- Step 2. Create Availability Set

- Step 3. Create Virtual Network

- Step 4. Deploy ARM Template

- Step 5. Create and Configure Internal Load Balancer

- Step 6. Create and Configure Public Load Balancer

- Step 7. Create Internal and External NSGs

- Step 8. Create and Configure UDRs for Web, App & DB Subnets

- Step 9. First login and Initial Configuration of FTDv firewalls

- Step 10. Associate a Public IP on the Outside Interfaces for Outbound Traffic

- Step 11. Configure the FTDv Firewalls from FMC

Introduction

Cisco Firepower Threat Defense Virtual (FTDv) brings Cisco's Firepower Next-Generation Firewall functionality to virtualized environments, enabling consistent security policies to follow workloads across your physical, virtual, and cloud environments, and between clouds.

The cloud is changing the way infrastructure is designed, including the design of firewalls, because the network is no longer physical or built on virtual LANs. Not all features of a physical network are available in Microsoft Azure Virtual Networks (VNets). For example, floating IP addresses and broadcast traffic are not available, which influences how HA architectures are implemented. Because of these limitations, the usual FTD High Availability (HA) and clustering setups are not possible in Azure. Instead, Load Balancers (LBs) can be used to achieve an HA architecture within a VNet.

This document explains a couple of design scenarios and walks through the deployment of two FTDv appliances in high availability, and in a setup that will be easy and simple to scale in the future.

Prerequisites

- A Microsoft Azure account. You can create one at https://azure.microsoft.com/en-us/. Note that the Microsoft trial account does not allow deploying VM sizes that are large enough to run FTDv, so you have to upgrade to a paid account.

- Basic knowledge of Azure concepts, such as Subscription, Resource Group, Virtual Networks (VNet), Subnets, Network Security Groups (NSG), User Defined Routes (UDR), Availability Sets and Network Virtual Appliances (NVAs).

- A Firepower Management Center (FMC) deployed and licensed to manage the new FTDv. You can use an on-prem physical or virtual appliance, or you can deploy a new one in Azure (refer to Deploy the Firepower Management Center Virtual On the Microsoft Azure Cloud). Note that if you are deploying a new FMC, you can leverage the evaluation period before registering it to a Cisco Smart Account. If you don't have a Cisco Smart Account yet, you can visit Cisco Software Central and go to Smart Software Licensing.

- Knowledge of the basic steps to register FTD to FMC, and of device configuration, Access Control Policy, NAT and Routing configuration for FTD in FMC.

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, make sure that you understand the potential impact of any command.

FTDv in Azure Design Considerations

Before going through design scenarios, it is important to take the below points into consideration to set a basic understanding of Azure networking concepts relevant here:

- By default, in Azure, all resources in the same VNet can communicate with each other, whether they are in the same subnet or in different subnets. Also, by default, all subnets in a VNet have a default route outbound to the internet. UDRs can be used to override Azure default system routes, and direct traffic through firewalls when desired.

- You can communicate inbound to a resource by attaching a public IP address to it or to a public Load Balancer. Traffic can be controlled up to layer 4 using NSGs to limit the IPs that can access specific ports on a resource. Public IP mapping and routing to/from the internet is handled by Azure.

- You can connect your on-premises networks to a VNet using a VPN connection or an Azure ExpressRoute. Setting up such connections is not explained in this document.

- Only internal Standard Azure Load Balancers maintain a mapping of inbound and outbound requests so that they can forward the response to the original requestor (public LBs do not have this capability). This capability allows the return traffic to be sent back to the firewall that originally handled the request, which achieves symmetric routing. However, if the traffic passes through two or more Load Balancers, the return traffic may be sent to a different firewall, causing asymmetric routing. This happens because Load Balancers in Azure do not have access to each other's mappings. It can be solved by performing inbound source network address translation (SNAT), which replaces the original source IP of the requestor with one of the IP addresses of the FTDv that handled the inbound flow. This lets you use multiple FTDv appliances at the same time while preserving route symmetry.

- Azure Load Balancer rules are usually configured to load-balance incoming traffic for a specific TCP or UDP port. A high availability (HA) ports load-balancing rule is a variant of a load-balancing rule, configured only on an internal Standard Load Balancer, that load-balances TCP and UDP flows on all ports simultaneously. This allows us to load-balance all incoming traffic to the firewalls.

- Azure Load Balancers use health probes to verify the availability of the NVAs in the backend pools. A health probe is basically a TCP connection sent to a port of choice. The source of the probe is always the virtual public IP address 168.63.129.16. NVAs must be properly configured to reply to the probes.

- The default distribution mode of Azure Load Balancer is a five-tuple hash. The tuple is composed of the source IP and port, the destination IP and port, and the protocol type. This provides stickiness only within a transport session: packets that are in the same session are directed to the same backend pool member. When the client starts a new session from the same source IP, the source port changes, which may cause the traffic to go to a different backend pool IP.
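The five-tuple behaviour described in the last point can be illustrated with a small sketch. Azure's actual hash function is not documented here, so SHA-256 is used purely as a stand-in, and the client and frontend addresses are hypothetical; only the backend IPs come from the deployment example later in this document.

```python
import hashlib

# Backend pool: the outside IPs of the two FTDv firewalls in the example.
BACKENDS = ["10.1.2.11", "10.1.2.21"]


def pick_backend(src_ip, src_port, dst_ip, dst_port, protocol, backends=BACKENDS):
    """Map a five-tuple to one backend. SHA-256 is only a stand-in for
    Azure's real hash; it gives a stable, deterministic choice."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


# Every packet of one session carries the same five-tuple, so it always
# lands on the same firewall.
first = pick_backend("203.0.113.7", 50000, "198.51.100.10", 80, "tcp")
again = pick_backend("203.0.113.7", 50000, "198.51.100.10", 80, "tcp")

# New sessions from the same client use new source ports, so they may be
# spread over different members of the pool.
spread = {pick_backend("203.0.113.7", port, "198.51.100.10", 80, "tcp")
          for port in range(50000, 50064)}
```

Here `first` and `again` are always equal, while `spread` collects whichever firewalls the 64 sessions happened to be mapped to.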

FTDv in Azure Design Scenarios

A single FTDv firewall needs one interface for Management, one interface for Diagnostics, and two or more interfaces for data. When designing your Azure network to include FTDv firewalls, you have to prepare one separate subnet for each of the interfaces mentioned above.

Note: As of version 6.5, FTDv can support up to 8 vNICs when using VM sizes Standard D4_v2 or Standard D5_v2. Smaller VM sizes only support 4 vNICs. Keep in mind that 2 vNICs must be used for the Management and Diagnostics interfaces.

Single Group of FTDv firewalls

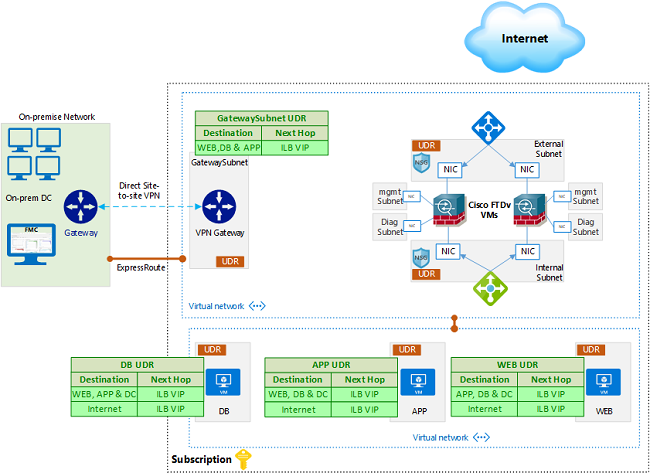

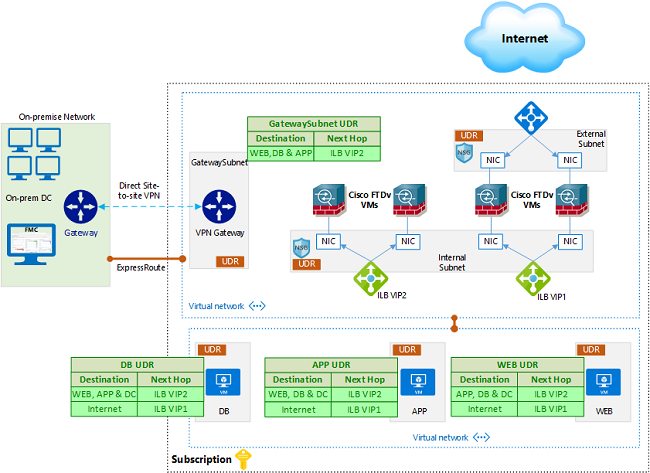

In this scenario, we will use a single group of firewalls to handle all traffic: Inbound, Outbound, and East/West (E/W).

An FMC already deployed in the on-prem network is shown in this design. However, as explained earlier, FMCv can be deployed in Azure or even in another cloud. Once the ExpressRoute or site-to-site VPN is set up, the FMC can directly reach the FTDv management interfaces and vice versa.

The diagram below shows the components used. You can see two FTDv firewalls, but more firewalls can be added if higher throughput is required.

For the workloads, it is common to have 3-tier applications in their own VNets, but they can be in the same VNet as the firewalls or in different VNets. Also, load balancers can be used to distribute traffic if your application has more than one web server and so on.

Traffic that flows through these firewalls can be one of the following:

- Inbound from the internet

- Outbound from the workloads to the internet

- E/W traffic between the workloads in Azure, or on-prem

Also, the firewalls will handle the health probes sent from the Azure LBs. We’ll go through them one by one.

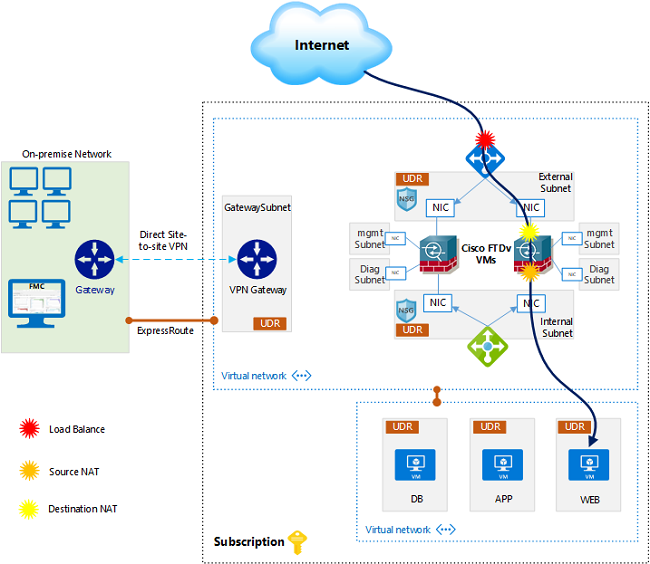

Inbound from Internet

For Inbound traffic, in this example, we need external internet users to access the web server on port 80. The diagram below shows the forward traffic flow, as well as where load balancing and NAT happen.

Here, the traffic source will be some random IP address on the internet, and the destination will be the public IP assigned to the frontend of the external LB. The LB is configured with a rule to load-balance incoming traffic on port 80 to the external IP addresses of the FTDv firewalls. Once the traffic hits the LB frontend public IP address, the LB will change the destination IP to the external IP of one of the firewalls and pass the traffic to it.

The firewall will first perform destination NAT, changing the destination IP from its own external IP address to the web server IP address. Also, it will perform source NAT, changing the original internet user public IP address, to its own internal interface IP address. Then, the traffic is finally sent to the web server. The source NAT is done to ensure that the return traffic is sent back to the same firewall that handled the forward traffic.

For the return path, the web server receives the traffic sourced from the internal IP address of one of the firewalls. When it replies, it can send the traffic directly to that firewall. A couple of notes here:

- If the web server is in a different VNet, peering has to be configured in Azure to allow the two VNets to communicate with each other.

- If the source NAT was NOT done, the web server would have received the traffic with the original user public IP address, and it would have sent the return traffic to the internal load balancer. The internal load balancer, in turn, does not know which firewall handled the forward traffic, so it could send the traffic to any firewall, causing asymmetric routing and traffic drops.

When the firewall receives the return traffic, it will match it to the session it created earlier, undo the NAT, and send the traffic back to the internet.
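The inbound translation steps can be sketched as a toy model. It is not how FTD implements NAT internally; it only tracks addresses to show why the reply returns to the same firewall. The firewall IPs follow the deployment example later in this document, while the client and web server addresses are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


FW_OUTSIDE = "10.1.2.11"    # ftdv1 external interface
FW_INSIDE = "10.1.3.11"     # ftdv1 internal interface
WEB_SERVER = "10.1.101.4"   # hypothetical web server address
CLIENT = "203.0.113.7"      # hypothetical internet user


def nat_inbound(pkt, sessions):
    """Destination NAT to the web server plus source NAT to the firewall's
    inside IP. The original packet is stored so the reply can be undone."""
    translated = replace(pkt, src=FW_INSIDE, dst=WEB_SERVER)
    sessions[(translated.dst, translated.src)] = pkt
    return translated


def nat_return(reply, sessions):
    """Match the reply against the stored session and reverse both NATs."""
    original = sessions[(reply.src, reply.dst)]
    return Packet(src=original.dst, dst=original.src)


sessions = {}
# Packet as it arrives from the public LB, already addressed to the firewall.
forward = nat_inbound(Packet(CLIENT, FW_OUTSIDE), sessions)
# The web server replies to the source it saw, which is the firewall itself.
reply = Packet(src=forward.dst, dst=forward.src)
back = nat_return(reply, sessions)
```

Because the web server only ever sees `FW_INSIDE` as the source, its reply never needs to pass through the internal load balancer.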

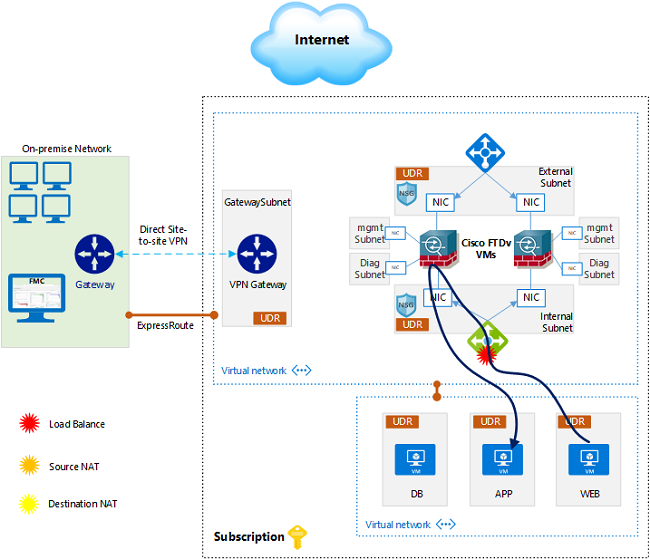

Outbound from the workloads to the internet

For example, your App servers need to reach out to the internet to get an update. The diagram below shows the forward traffic flow.

First, we need to apply a UDR on the App subnet, with a default route pointing to the internal load balancer frontend IP address. The internal load balancer is configured with an HA ports rule to load-balance all incoming traffic on all ports to the firewalls.

The Load Balancer will send the traffic to one of the firewalls. Each firewall has its own external IP address attached to a public IP address, and can reach the internet directly. It will source NAT the traffic and send it to the internet.

The return traffic is sent back to the public IP, and Azure takes care of passing it to the respective private IP (firewall external interface) attached to it. When the firewall receives the return traffic, it will undo the NAT and send it back to the app server.

E/W traffic inside Azure, or Between Azure and On-prem

For E/W traffic, we need to apply UDRs to the app, web and db subnets that point to the internal load balancer IP address when they want to communicate with each other. The routes will be as simple as the tables in the initial design diagram above. The traffic flow is shown in the diagram below.

As an example, when the web server wants to reach the app server, it sends the forward traffic to the internal load balancer frontend IP, which load-balances it to one of the firewalls. The firewall that receives the traffic handles it according to the security policies and sends it directly to the app server without any NAT.

When the app server replies, the return traffic is sent to the load balancer frontend IP. Since this is the same load balancer that handled the forward flow, and it is an Internal Standard LB, it maintains the session affinity and sends the return flow to the same firewall that handled the forward flow. The firewall then matches the traffic to the session it created and sends it back to the web server directly.

For the traffic coming from on-prem, we need to apply a UDR on the GatewaySubnet. The destination in the route will be the App, Web and DB subnets, while the next hop will be the Internal Load Balancer IP. We also need to add a route to the App, Web and DB UDRs pointing to the ILB IP in order to reach the on-prem IP addresses. The traffic flow will be similar to the E/W flow discussed above.

Note that for traffic to/from on-prem, it’s highly likely that it will be inspected by a physical FTD deployed on-prem, thus, rules can be relaxed on the FTDv firewalls in Azure, as there is no need to inspect the traffic twice.

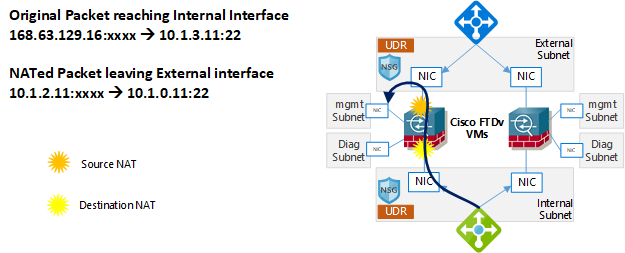

Azure Load Balancer Health Probes

As mentioned earlier, Azure Load Balancers use health probes to verify the availability of the NVAs in the backend pools. The source address for the health probes will always be the virtual public IP 168.63.129.16.

For the external LB, the backend pool IPs are the firewalls' external IPs, while for the internal LB, the backend pool IPs are the internal IPs. The firewall therefore receives probes sourced from the same IP address on both the internal and external interfaces. This creates an issue when the firewall replies, because only one effective route to 168.63.129.16 can be configured on the firewall, through either the internal interface or the external interface.

The workaround for this issue is achieved using twice NAT, where the destination is changed from the internal/external firewall interfaces to the management interface, while the source is switched to the other interface. Traffic flow is explained in the below diagram.

In this example, we have the internal interface IP as 10.1.3.11/24, external interface IP is 10.1.2.11/24, and the management is 10.1.0.11/24.

For the internal load balancer probes, Azure will send a packet sourced from 168.63.129.16 and a random port to 10.1.3.11 port 22 (the health probe destination port is configurable). Once received, the firewall will do Twice NAT, changing the destination from 10.1.3.11 to the management IP, 10.1.0.11, and changing the source from 168.63.129.16 to the external IP address, 10.1.2.11. The firewall is also configured with a route for the destination management IP 10.1.0.11, with the external subnet gateway 10.1.2.1 as the next hop. The packet will reach the management interface of the firewall sourced from the firewall external IP.

The firewall management interface replies to 10.1.2.11. When the reply arrives at the external IP, the firewall will match the session, undo all the NAT, and send the traffic back to 168.63.129.16 from the original probe destination, the internal IP address 10.1.3.11. The firewall is also configured with a route for destination 168.63.129.16 whose next hop is the internal subnet gateway IP, 10.1.3.1.

For the external load balancer probes, the traffic is handled in the same way. The firewall is also configured with routes of different metrics for both 168.63.129.16 and the management IP on the respective interfaces.

Keep in mind the following notes:

- The firewall has separate management and data route tables.

- Azure will take care of routing the traffic between the external and management subnets, and between the internal and management subnets, as they are in the same VNet.

- The routes configured on the firewall for 168.63.129.16 and its own management IP address are only for session verification and finding the next hop. The egress interface is determined using NAT rules. Routes are added with different metrics so that the firewall configuration is valid.
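The address rewriting performed by the twice NAT workaround can be traced with a short sketch that uses the example addresses above. This is only an address-level model, not FTD configuration, and the function names are hypothetical.

```python
from dataclasses import dataclass

PROBE_SRC = "168.63.129.16"   # Azure health probe source
MGMT_IP = "10.1.0.11"
INSIDE_IP = "10.1.3.11"
OUTSIDE_IP = "10.1.2.11"


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


# Twice NAT rules keyed by the interface IP the probe was sent to. Each rule
# rewrites the source to the *other* data interface and the destination to
# the management IP.
TWICE_NAT = {
    INSIDE_IP: Packet(src=OUTSIDE_IP, dst=MGMT_IP),
    OUTSIDE_IP: Packet(src=INSIDE_IP, dst=MGMT_IP),
}


def translate_probe(probe):
    """Forward direction: a probe arriving on a data interface."""
    if probe.src != PROBE_SRC or probe.dst not in TWICE_NAT:
        raise ValueError("not a health probe for this firewall")
    return TWICE_NAT[probe.dst]


def untranslate_reply(reply, probed_ip):
    """Reverse direction: the management interface answered the translated
    source; restore the addresses the load balancer expects."""
    expected = TWICE_NAT[probed_ip]
    if (reply.src, reply.dst) != (expected.dst, expected.src):
        raise ValueError("reply does not match the probe session")
    return Packet(src=probed_ip, dst=PROBE_SRC)


inside_probe = translate_probe(Packet(PROBE_SRC, INSIDE_IP))
inside_reply = untranslate_reply(Packet(MGMT_IP, OUTSIDE_IP), INSIDE_IP)
outside_probe = translate_probe(Packet(PROBE_SRC, OUTSIDE_IP))
```

The reply to an internal LB probe leaves from 10.1.3.11 and the reply to an external LB probe leaves from 10.1.2.11, even though both probes share the same source address.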

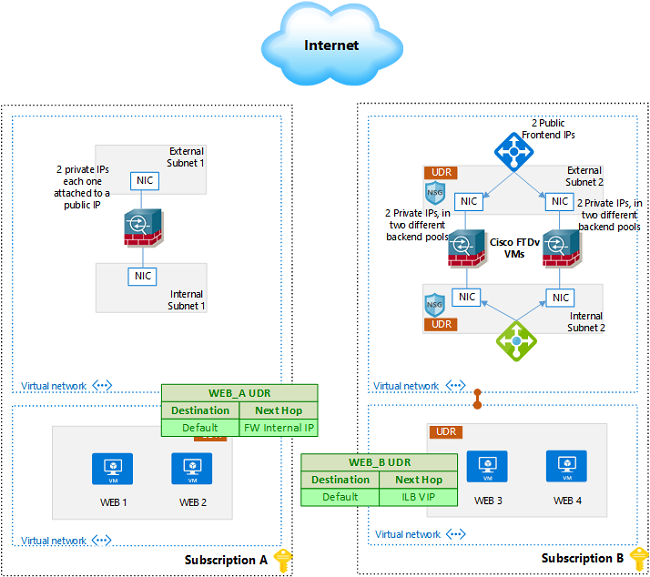

Two Groups of FTDv firewalls, Separating Internet and E/W traffic

In some situations, it is preferred to totally separate Internet and E/W traffic. We can deploy one group of firewalls to handle internet traffic, and another group to handle E/W traffic.

Another benefit of this design is that it saves on license consumption. Threat, Malware and URL licenses are needed for each firewall, and the higher the required throughput, the more firewalls we add. In some situations, Internet traffic would not require more than two firewalls to satisfy the throughput requirements, while E/W traffic would need more. Firewalls that handle internet traffic require a URL license, while firewalls that handle E/W traffic usually do not. With that said, we can have two firewalls with Threat, Malware and URL licenses for Internet traffic, while deploying another group of firewalls, scaled to the number required, with only Threat and Malware licenses.
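As a rough illustration of the license arithmetic, the sketch below compares the two designs. The firewall counts are hypothetical, and it assumes that a single shared group of the same total size would need a URL license on every firewall.

```python
def license_counts(internet_fws, ew_fws):
    """Licenses needed when Internet and E/W traffic use separate groups.
    Assumes, as in the text, that only the Internet group needs URL."""
    total = internet_fws + ew_fws
    return {"Threat": total, "Malware": total, "URL": internet_fws}


# Hypothetical sizing: 2 firewalls for Internet traffic, 4 for E/W traffic.
separate = license_counts(internet_fws=2, ew_fws=4)
# A single group of the same total size carries Internet traffic on every
# firewall, so every firewall needs a URL license.
single = license_counts(internet_fws=6, ew_fws=0)
url_saved = single["URL"] - separate["URL"]
```

With these example numbers, the split design needs four fewer URL licenses than a single group of six firewalls.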

Also, this design makes administration and operation easier, as we will not have NAT complications on the E/W traffic.

Below is a diagram of the components used in this design. Management and diagnostic interfaces are not shown, to simplify the diagram. Traffic flow scenarios are not explained in detail, as they are similar to the ones discussed above. Keep in mind that LBs, UDRs and NSGs must be configured with care to satisfy the desired traffic flow.

Multiple IP Address Assignment to Allow One to One Static NAT

It is quite common to host more than one service on the same TCP port. To achieve this, multiple IP addresses can be assigned to the firewall. In a scalable design, you can configure more than one frontend IP address on the public load balancer and have more than one backend pool, consisting of the additional IP addresses assigned to the firewalls. The diagram below describes the setup required. Subscription A shows a single firewall design, while Subscription B shows a scalable design. Note that multiple designs can be in the same subscription.

On a physical FTD, once you configure a static NAT rule for a published service, the firewall starts doing proxy ARP in order to receive the traffic for the translated IP address. That is not the case in Azure. Instead, we need to add an IP configuration to the external network interface, configure it with the required private IP, and attach it to a public IP address if needed. Remember that Azure takes care of mapping the public IP to your private IP and routing the traffic to it.

Once the IP configuration is added to the network interface of your firewall in Azure, it will start receiving traffic destined to that IP, and you can configure the required NAT and ACL rules to process the traffic.

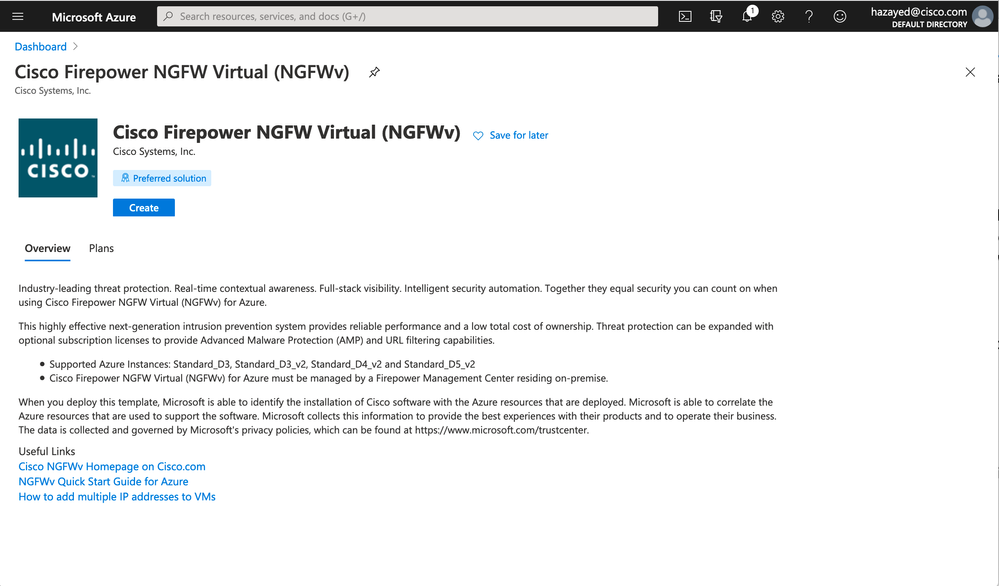

FTDv in Azure Deployment Example

You can deploy FTDv firewalls in Azure from the marketplace: search for Cisco Firepower NGFW Virtual, click on Create, and follow the wizard to complete the deployment.

Alternatively, you can deploy FTDv firewalls using ARM templates. In Azure, the Azure Resource Manager (ARM) is the management layer (API). ARM templates offer several benefits, to mention a few:

- You can automate deployments and use the practice of infrastructure as code.

- An ARM template defines the infrastructure that needs to be deployed. Just like application code, you store the infrastructure code in a source repository and version it.

- An ARM template is a JavaScript Object Notation (JSON) file that defines the infrastructure and configuration for your resources. It uses declarative syntax, which lets you state what you intend to deploy without having to write the sequence of programming commands to create it.

- It allows you to deploy resources into an existing resource group.

- Templates are validated before publishing and can be reused. Future deployments can be scripted to avoid human error.

In this example, you will find a step-by-step guide to deploy a single group of FTDv firewalls in high availability and scalability using an ARM template. The template used is attached to this document, and you can download it from the link at the end. Also, you can find more templates in this GitHub repository. The example deploys the design described above in the section Single Group of FTDv firewalls. Go to the Azure Portal, log in, and follow the steps below.

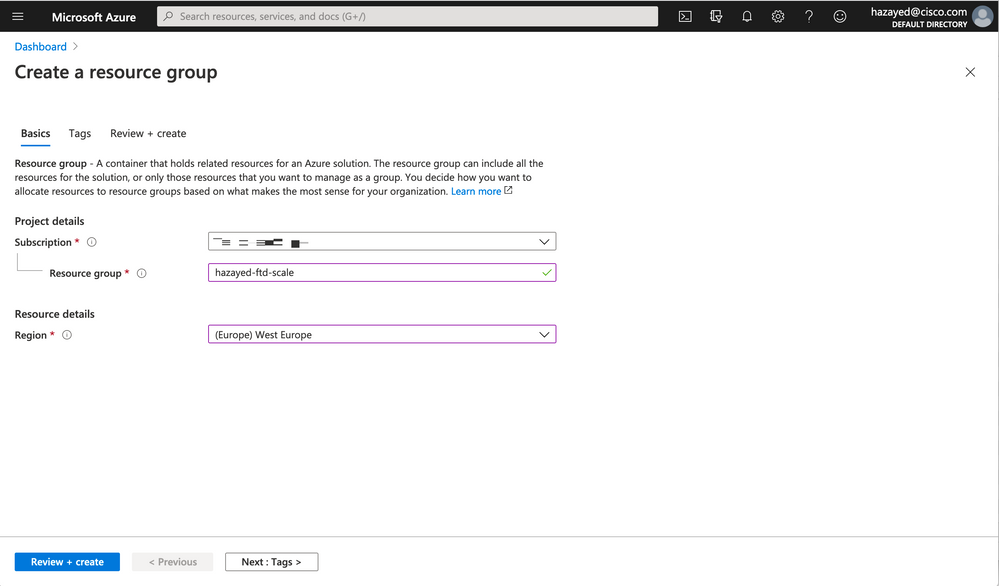

Step 1. Create a Resource Group

Search for Resource Groups, and click on Add. Choose the Subscription, Region and Resource group name, and click on Review+create, review the details and then click on Create.

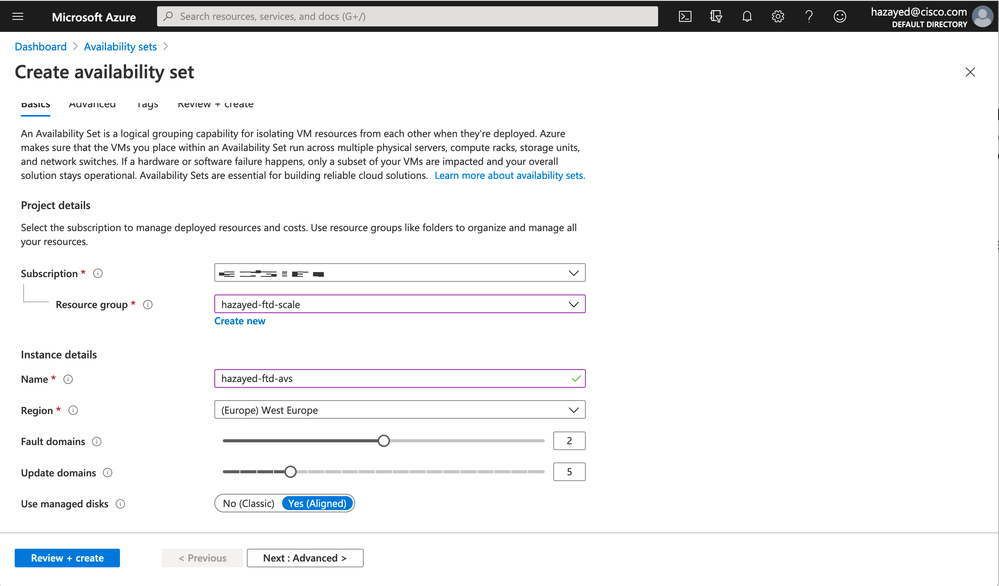

Step 2. Create Availability Set

Search for Availability Sets, and click on Add. Choose the Subscription, Region and Resource group created earlier, Fault and Update Domains desired, and click on Review+create, review the details and then click on Create.

Step 3. Create Virtual Network

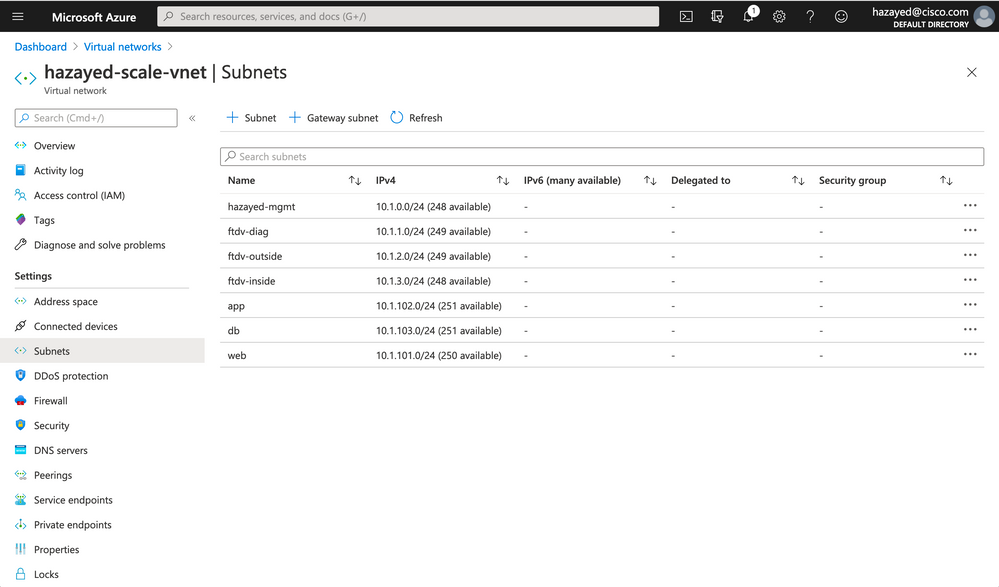

Search for Virtual Networks and click on Add. Choose the Subscription, Resource Group, Region and the name of the virtual network, and click on Next to go to IP Addresses. Here, define the address space of this VNet and the subnets. We will choose the range 10.1.0.0/16, and the following subnets:

| Subnet Name | Range |
| --- | --- |
| hazayed-mgmt | 10.1.0.0/24 |
| ftdv-diag | 10.1.1.0/24 |
| ftdv-outside | 10.1.2.0/24 |
| ftdv-inside | 10.1.3.0/24 |
| web | 10.1.101.0/24 |
| app | 10.1.102.0/24 |
| db | 10.1.103.0/24 |

Then, click on Review+create, review the details entered and click on Create. After the job is successful, go to the VNet and view the subnets to verify.
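Before creating the VNet, the address plan can be sanity-checked offline. The following sketch uses Python's standard `ipaddress` module to confirm that every subnet in the table sits inside 10.1.0.0/16 and that no two subnets overlap. The helper name `check_plan` is hypothetical.

```python
import ipaddress
from itertools import combinations

VNET = ipaddress.ip_network("10.1.0.0/16")
SUBNETS = {
    "hazayed-mgmt": "10.1.0.0/24",
    "ftdv-diag": "10.1.1.0/24",
    "ftdv-outside": "10.1.2.0/24",
    "ftdv-inside": "10.1.3.0/24",
    "web": "10.1.101.0/24",
    "app": "10.1.102.0/24",
    "db": "10.1.103.0/24",
}


def check_plan(vnet, subnets):
    """Return a list of problems: subnets outside the VNet or overlapping."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems = [f"{name} is outside {vnet}"
                for name, net in nets.items() if not net.subnet_of(vnet)]
    problems += [f"{a} overlaps {b}"
                 for (a, na), (b, nb) in combinations(nets.items(), 2)
                 if na.overlaps(nb)]
    return problems


problems = check_plan(VNET, SUBNETS)   # empty list for the plan above
```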

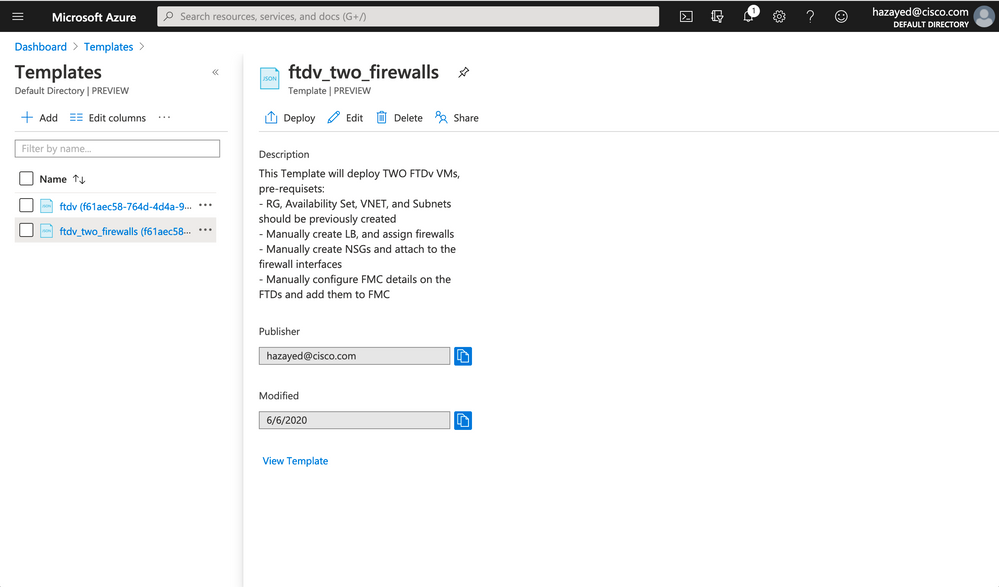

Step 4. Deploy ARM Template

Search for Templates and click on Add. Enter a descriptive name and description, and on the next page, paste the full contents of the JSON file. After the Template is created, click on it and choose Deploy.

Choose the Subscription, Resource Group and the location to deploy the new VMs in. The table below lists the parameters to fill in. Some of them refer to resources created previously (RG, VNet, Availability Set, Subnet names), while others will be created by the template (Storage Accounts):

| Parameter | Value |
| --- | --- |
| Vm1Name | hazayed-ftdv1 |
| Vm2Name | hazayed-ftdv2 |
| Admin Username | <admin_name> |
| Admin Password | <password> |
| Vm1Storage Account | hazayedftdvstr1 |
| Vm2Storage Account | hazayedftdvstr2 |
| Availability Set Resource Group | hazayed-ftdv-scale |
| Availability Set Name | hazayed-ftdv-avs |
| Virtual Network Resource Group | hazayed-ftdv-scale |
| Virtual Network Name | hazayed-scale-vnet |
| Mgmt Subnet Name | hazayed-mgmt |
| Mgmt Subnet IP1 | 10.1.0.11 |
| Mgmt Subnet IP2 | 10.1.0.21 |
| Diag Subnet Name | ftdv-diag |
| Diag Subnet IP1 | 10.1.1.11 |
| Diag Subnet IP2 | 10.1.1.21 |
| Gig00Subnet Name | ftdv-outside |
| Gig00Subnet IP1 | 10.1.2.11 |
| Gig00Subnet IP2 | 10.1.2.21 |
| Gig01Subnet Name | ftdv-inside |
| Gig01Subnet IP1 | 10.1.3.11 |
| Gig01Subnet IP2 | 10.1.3.21 |
| Vm Size | <choose_per_requirements> |

Check the box to agree to the terms and conditions, and click on Purchase. It may take around 10 minutes to set up the VMs.
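If you prefer to script the deployment instead of filling in the portal form, the same values can be written to an ARM deployment parameters file. The sketch below builds such a file for a subset of the table. The parameter keys (`vm1Name`, `mgmtSubnetIP1`, and so on) are assumptions; use the exact names declared in the `parameters` section of the attached template. The lowercase check reflects the "Invalid Domain Name Label" error reported in the comments below for upper-case VM names.

```python
import json

# Values from the table above. The keys are hypothetical: use the exact
# parameter names declared in the "parameters" section of your template.
VALUES = {
    "vm1Name": "hazayed-ftdv1",
    "vm2Name": "hazayed-ftdv2",
    "virtualNetworkName": "hazayed-scale-vnet",
    "mgmtSubnetName": "hazayed-mgmt",
    "mgmtSubnetIP1": "10.1.0.11",
    "mgmtSubnetIP2": "10.1.0.21",
}


def build_parameters(values):
    """Wrap plain values in the ARM deployment parameters file layout."""
    for key in ("vm1Name", "vm2Name"):
        # The VM name is reused as a DNS label, which must be lower case.
        if values[key] != values[key].lower():
            raise ValueError(f"{key} must be lower case: {values[key]}")
    return {
        "$schema": "https://schema.management.azure.com/schemas/"
                   "2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


parameters_json = json.dumps(build_parameters(VALUES), indent=2)
```

The resulting JSON could then be saved to a file and supplied alongside the template, for example with the Azure CLI `az deployment group create` command and its `--template-file` and `--parameters` options.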

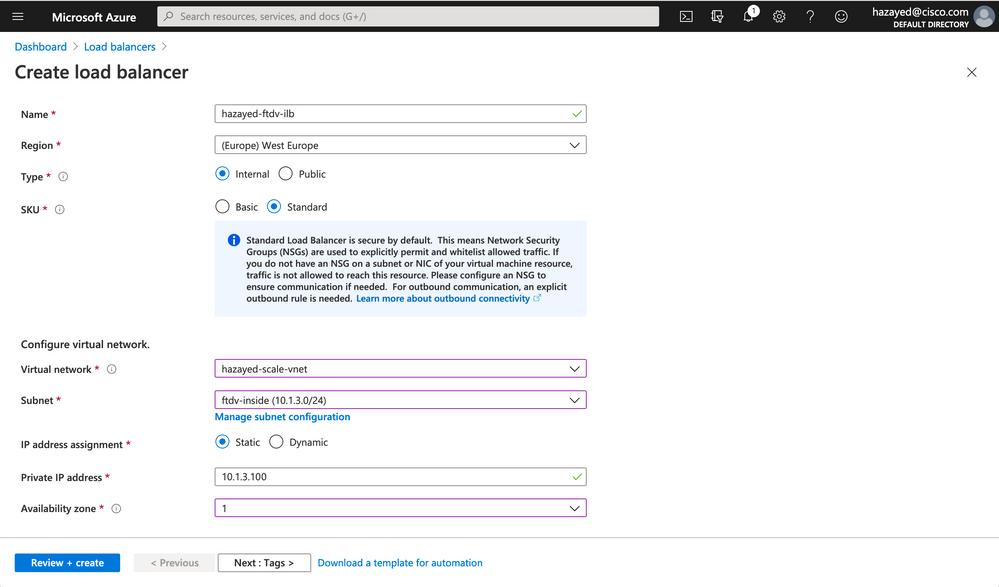

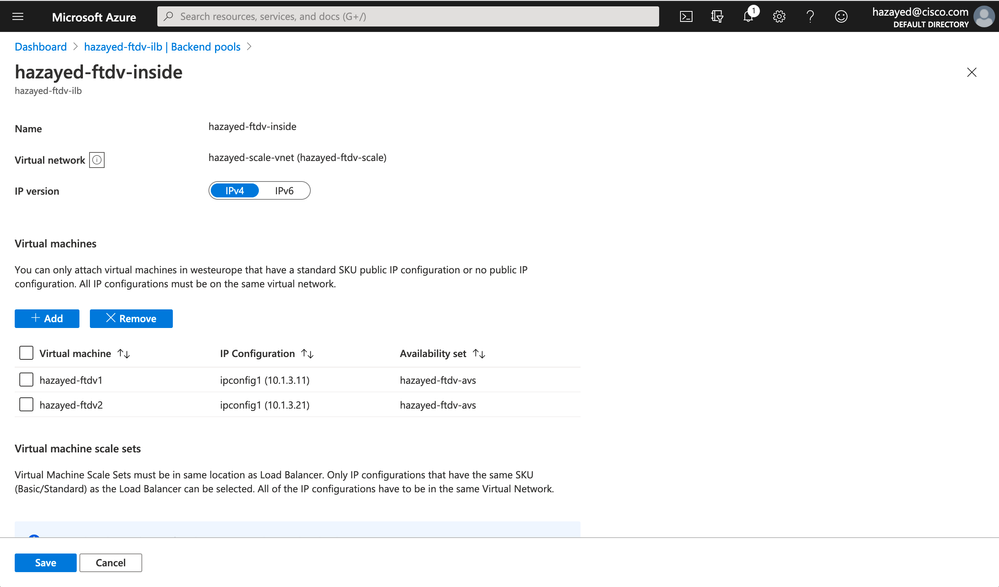

Step 5. Create and Configure Internal Load Balancer

Search for Load Balancers, click on Add. Choose the Subscription, Resource Group and the rest of the parameters. It’s important to make sure to choose Internal and Standard to have the HA port rule functionality.

After the deployment succeeds, go to the LB created. Verify that you can see 10.1.3.100 in the Frontend IP Configuration. Next, we configure the backend pool, adding the firewalls inside IP addresses.

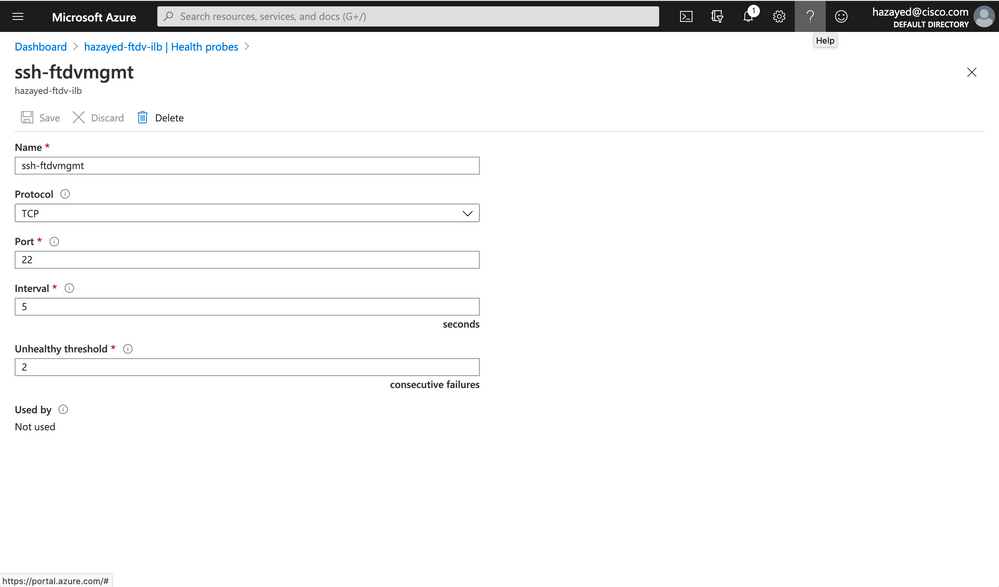

We need to configure a Heath probe before we configure the load balancing rule

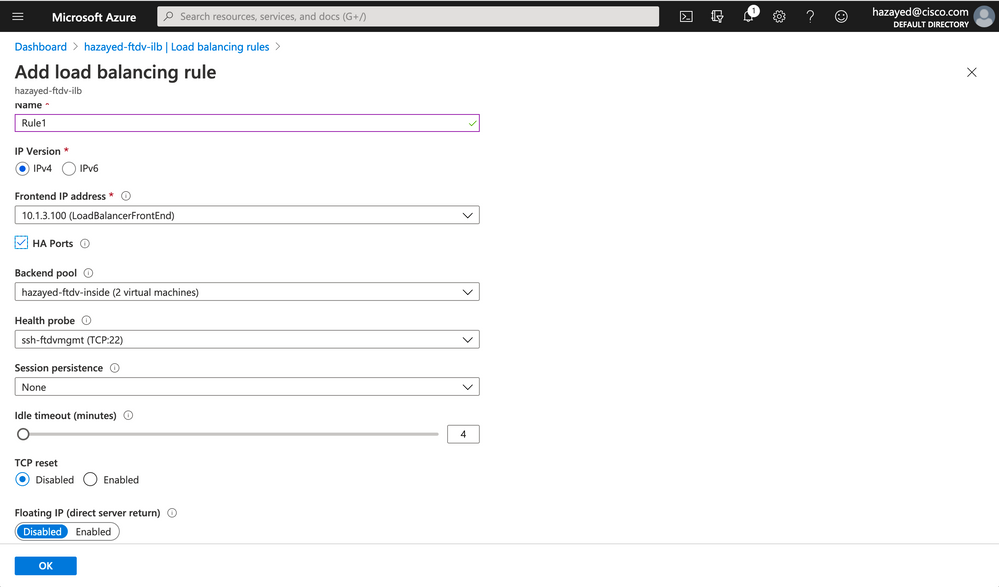

Now, with the Frontend IP, backend pool and health probe configured, we can add the load balancing rule

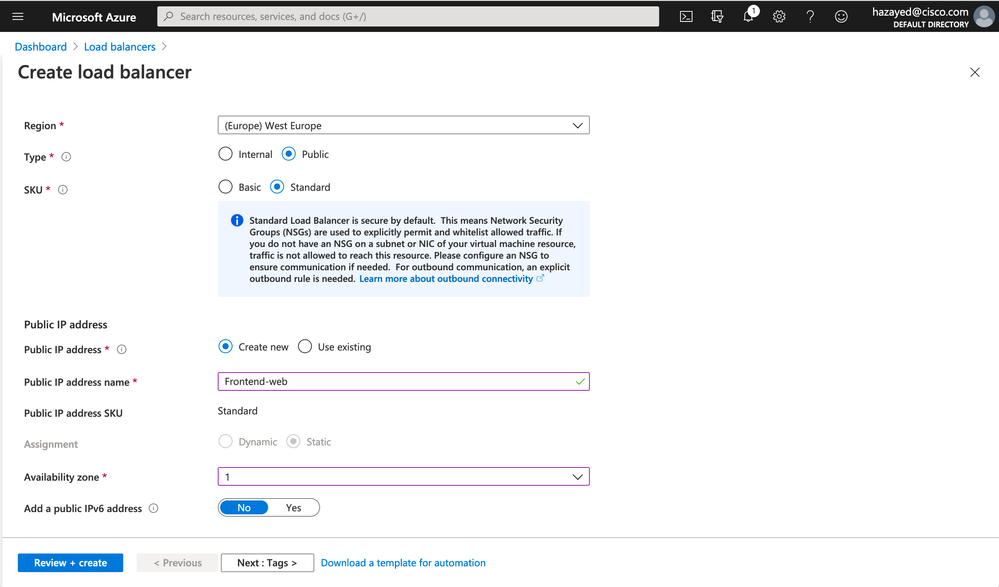
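For readers who automate this step, an HA ports rule differs from an ordinary load-balancing rule only in a few properties: the protocol is `All` and both the frontend and backend ports are `0`. The sketch below checks a rule definition for that shape. The property names follow the Azure load-balancing rule resource as I understand it, so verify them against the current Azure documentation before relying on them.

```python
def is_ha_ports_rule(rule):
    """An HA ports rule matches all protocols and uses port 0 on both sides."""
    return (rule["protocol"] == "All"
            and rule["frontendPort"] == 0
            and rule["backendPort"] == 0)


# Rule for the internal LB: all ports, all protocols, to the inside IPs.
ha_rule = {"protocol": "All", "frontendPort": 0, "backendPort": 0}
# Ordinary rule, such as the port 80 rule used on the public LB in Step 6.
http_rule = {"protocol": "Tcp", "frontendPort": 80, "backendPort": 80}

ha_ok = is_ha_ports_rule(ha_rule)
http_ok = is_ha_ports_rule(http_rule)
```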

Step 6. Create and Configure Public Load Balancer

Similarly to the previous step, proceed with creating and configuring another load balancer. This time, the Type will be Public and we’ll create and Public IP address to use

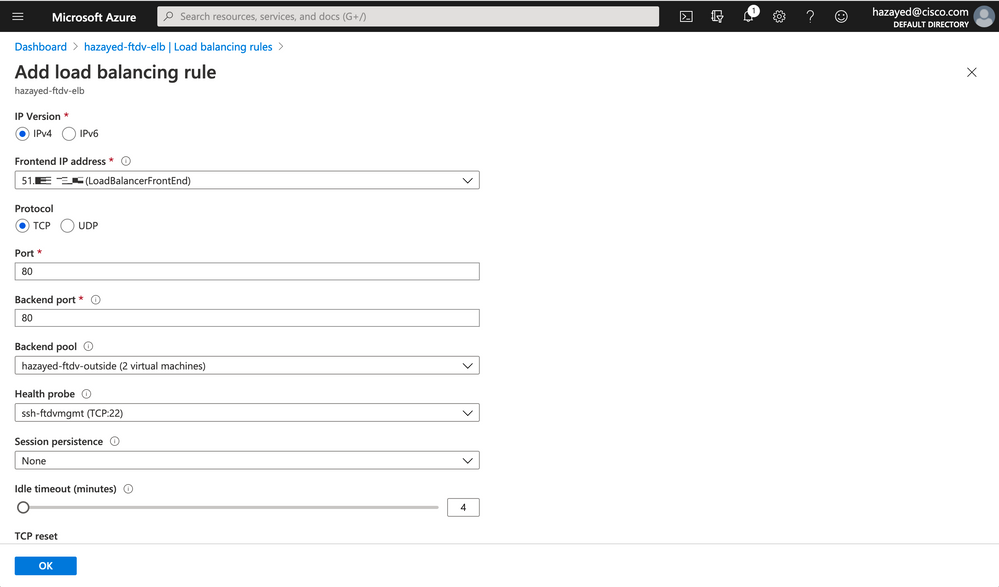

Create backend pools consisting of the outside interfaces of the firewalls, and a similar health probe done in the previous step. After that proceed with creating the load balancing rule to receive traffic on port 80

Step 7. Create Internal and External NSGs

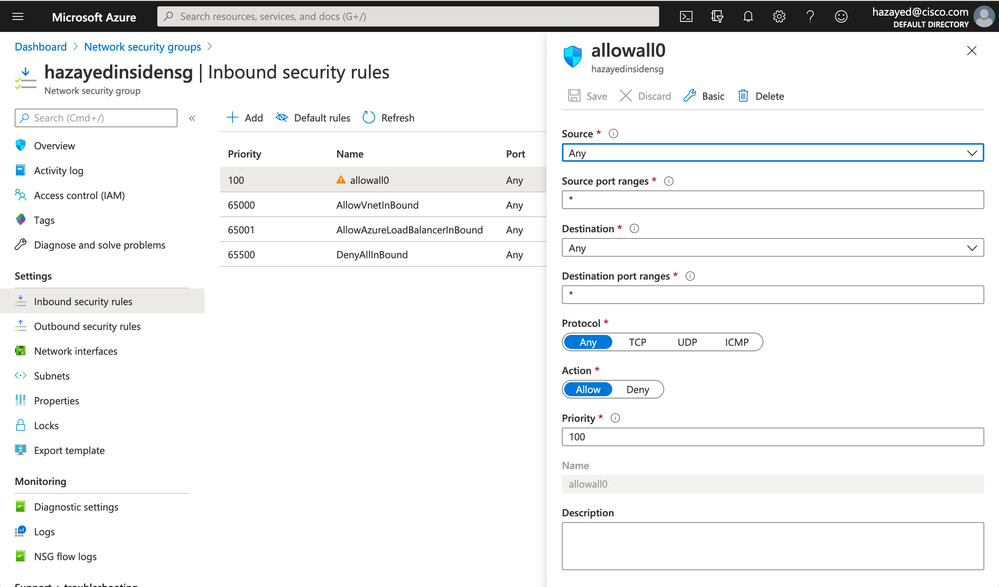

In order to allow the traffic to reach the firewall inside and outside interfaces, we need to create NSGs and attach them to the respective subnets, and configure rules to allow the traffic. You can allow specific traffic to reach the firewall or allow everything, however, in order to have full visibility on the firewall and have a single point of traffic control, we choose to allow all traffic on the NSGs. Consult your organization security policy on what to choose here.

Search for NSGs, then create one for the inside subnet and another one for the outside subnet. Navigate to the NSGs and configure a rule to allow traffic in both the Inbound and Outbound security rules, in both NSGs. Below is an example of one of these rules.

Step 8. Create and Configure UDRs for Web, App & DB Subnets

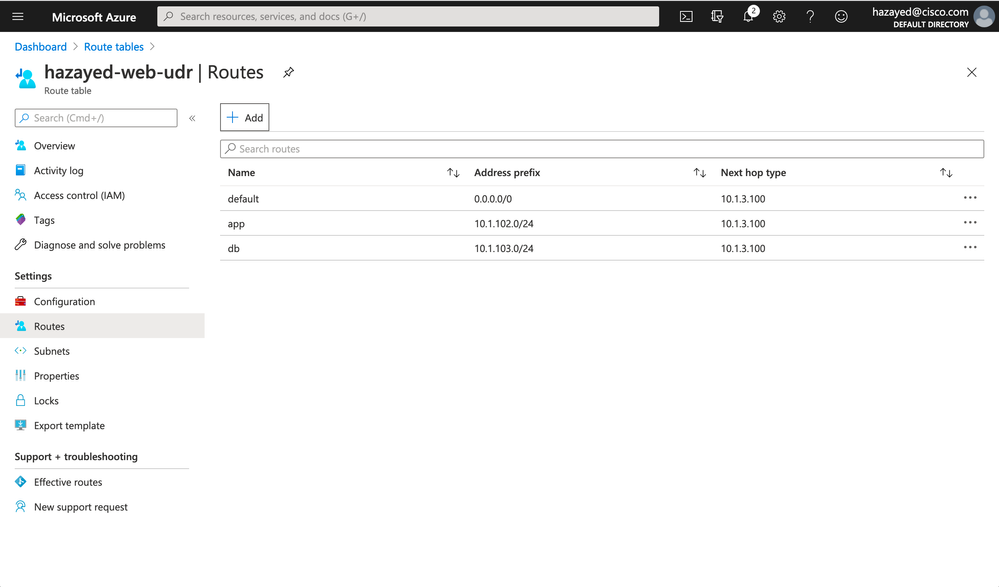

As explained in the design discussion earlier, in order to steer the traffic to go through the firewalls for both N/S and E/W traffic, we need to configure UDRs on the workloads subnets to point to the Internal Load Balancer Frontend IP.

Search for UDR, Add a new one for each subnet; web, app and db. Navigate to the newly created UDRs, and configure the routes. The route to point to the Internal Load Balancer will have a next hop type of Virtual Appliance. Below is an example of the web subnet UDR

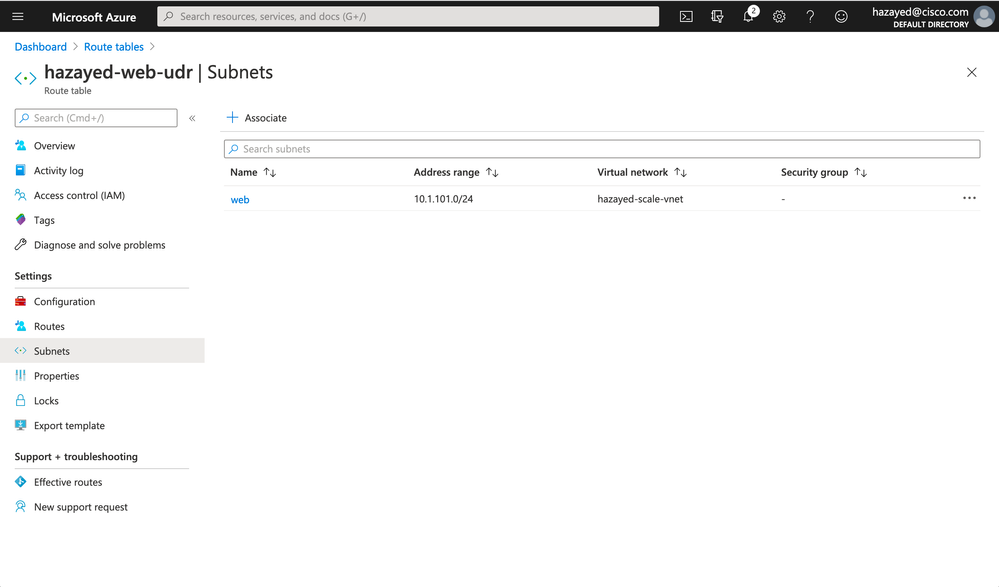

Don’t forget to associate the UDR to the intended subnet
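Azure selects the route with the longest matching prefix, which is why a UDR can override the default system routes. The sketch below models that selection for the web subnet. The route entries are assumptions modelled on the design (the actual routes are in the screenshot), and `10.1.3.100` is the internal load balancer frontend IP from Step 5.

```python
import ipaddress

ILB_FRONTEND = "10.1.3.100"

# Hypothetical effective routes for the web subnet.
WEB_ROUTES = [
    ("10.1.101.0/24", "VnetLocal", None),                # own subnet stays direct
    ("10.1.0.0/16", "VirtualAppliance", ILB_FRONTEND),   # E/W via the firewalls
    ("0.0.0.0/0", "VirtualAppliance", ILB_FRONTEND),     # internet via the firewalls
]


def next_hop(dest_ip, routes):
    """Pick the route with the longest prefix that contains dest_ip."""
    dest = ipaddress.ip_address(dest_ip)
    matches = [(ipaddress.ip_network(prefix), hop_type, hop)
               for prefix, hop_type, hop in routes
               if dest in ipaddress.ip_network(prefix)]
    _, hop_type, hop = max(matches, key=lambda m: m[0].prefixlen)
    return hop_type, hop


to_app = next_hop("10.1.102.5", WEB_ROUTES)       # another tier, via the ILB
to_internet = next_hop("8.8.8.8", WEB_ROUTES)     # default route, via the ILB
to_neighbor = next_hop("10.1.101.9", WEB_ROUTES)  # same subnet, direct
```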

If you don't have a web server ready in the web subnet, for testing purposes, you can follow this guide to Create a Linux virtual machine in the Azure portal, and install a web server.

Step 9. First login and Initial Configuration of FTDv firewalls

As part of the ARM template used, the firewalls' management interfaces will be configured with public IP addresses so that you can access them for the first time. If you have a site-to-site tunnel, ExpressRoute, or a jump server configured, and you can access the private IPs of the management interfaces and the FMC can reach them as well, then public IPs are not needed.

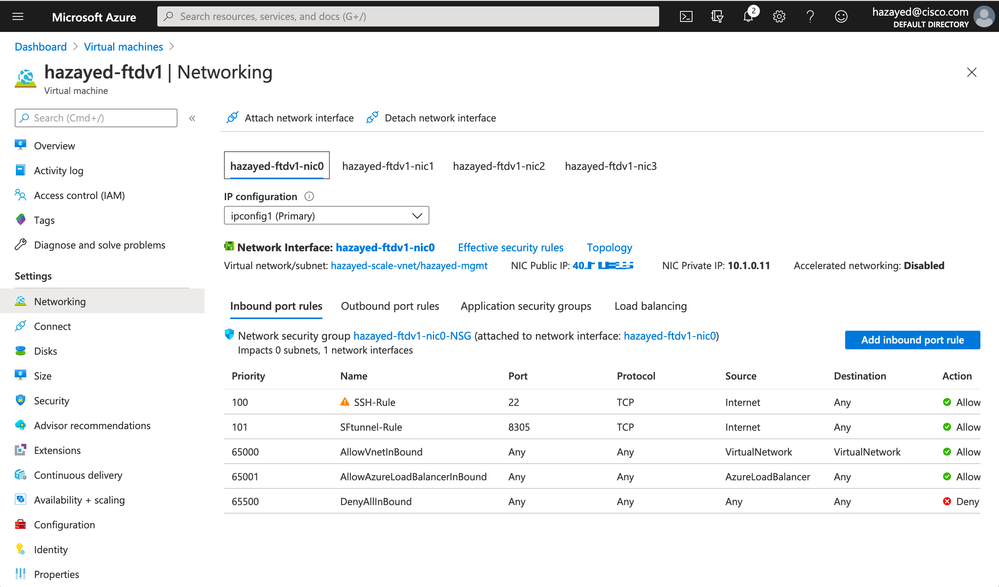

Also, the template will deploy an NSG for the management interfaces, allowing any IP address to reach the firewalls on port 22 for SSH and port 8305 for sftunnel (FMC communication). Modify it as needed for your environment.
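NSG rules are evaluated in priority order, with the lowest number first, and the first match decides. The following simplified sketch models the management NSG described above. The priorities 100 and 110 are hypothetical, only the destination port is matched, and of Azure's default rules only the final deny-all inbound rule is modelled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int          # lower number is evaluated first
    port: Optional[int]    # None matches any destination port
    allow: bool


MGMT_NSG = [
    Rule(priority=100, port=22, allow=True),        # SSH
    Rule(priority=110, port=8305, allow=True),      # sftunnel to the FMC
    Rule(priority=65500, port=None, allow=False),   # default deny-all inbound
]


def is_allowed(dst_port, rules):
    """Return the action of the first rule, in priority order, that matches."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port is None or rule.port == dst_port:
            return rule.allow
    return False


ssh_ok = is_allowed(22, MGMT_NSG)
sftunnel_ok = is_allowed(8305, MGMT_NSG)
https_ok = is_allowed(443, MGMT_NSG)
```

Restricting the source to known admin and FMC addresses would be a natural next step, which this sketch leaves out for brevity.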

To find the public IP address associated with the firewalls, navigate to ftdv1 VM, click on Networking, choose the first NIC (nic0) which is the management interface, and you will see the public IP assigned to it.

SSH to the public IP from your PC. The username and password for the first login are admin/Admin123.

After you go through the initial dialogue, configure the FTD to register to the FMC. This document does not go through the registration steps. Please refer to the FMC configuration guide, Chapter: Device Management Basics.

Repeat the steps for the second firewall as well.

Step 10. Associate a Public IP on the Outside Interfaces for Outbound Traffic

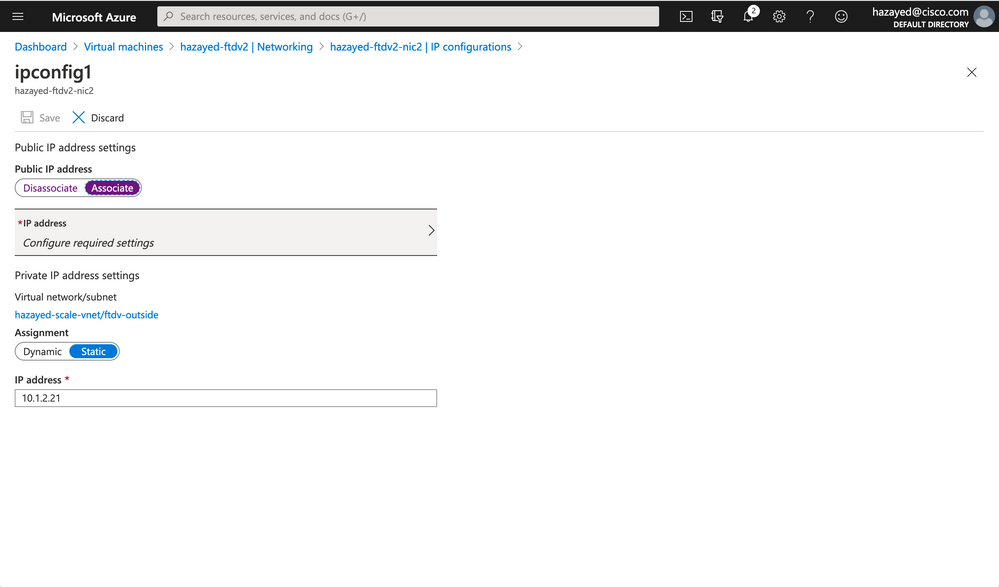

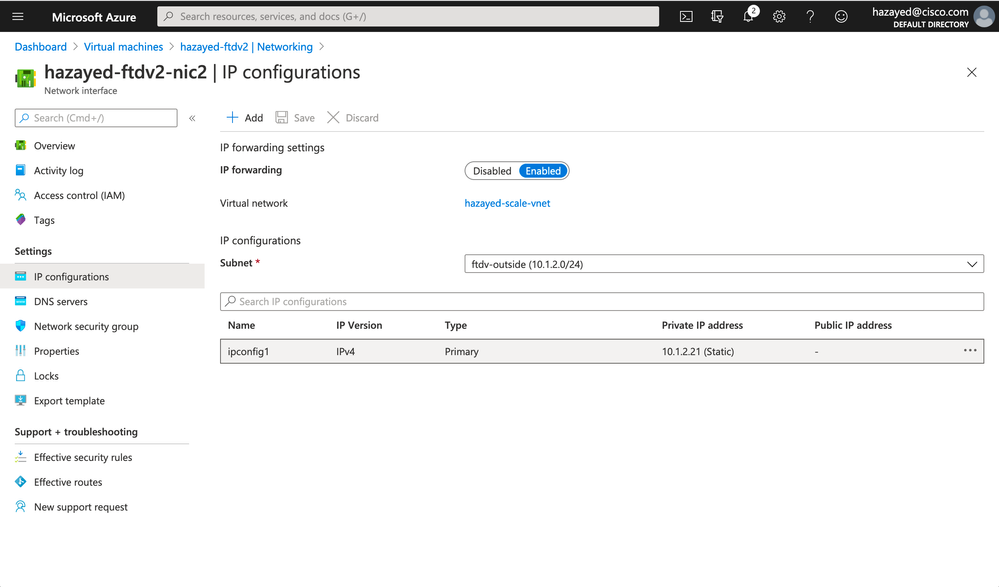

In order for the firewalls to serve outbound traffic initiated from the workloads going to the internet, they need to have public IPs assigned on the outside interfaces. Navigate to the outside NIC of ftdv1 and click on IP configurations.

Repeat the steps for the other firewall too. No more configuration is required on the Azure portal. Next, we go to the FMC.

Step 11. Configure the FTDv Firewalls from FMC

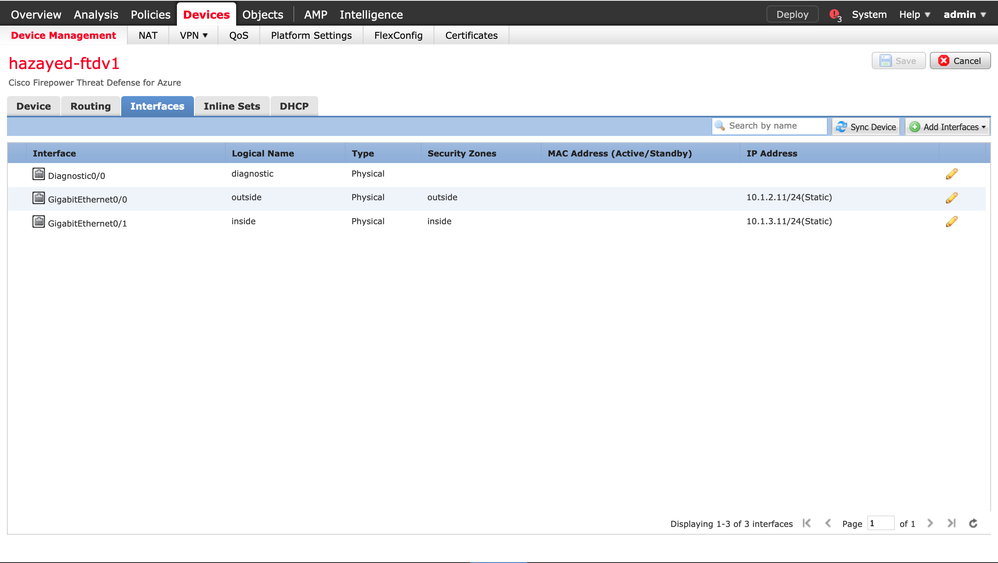

After registering the FTDs with the FMC, proceed with configuring the FTD zones and interfaces. Configure both firewalls, below is ftdv1 configuration

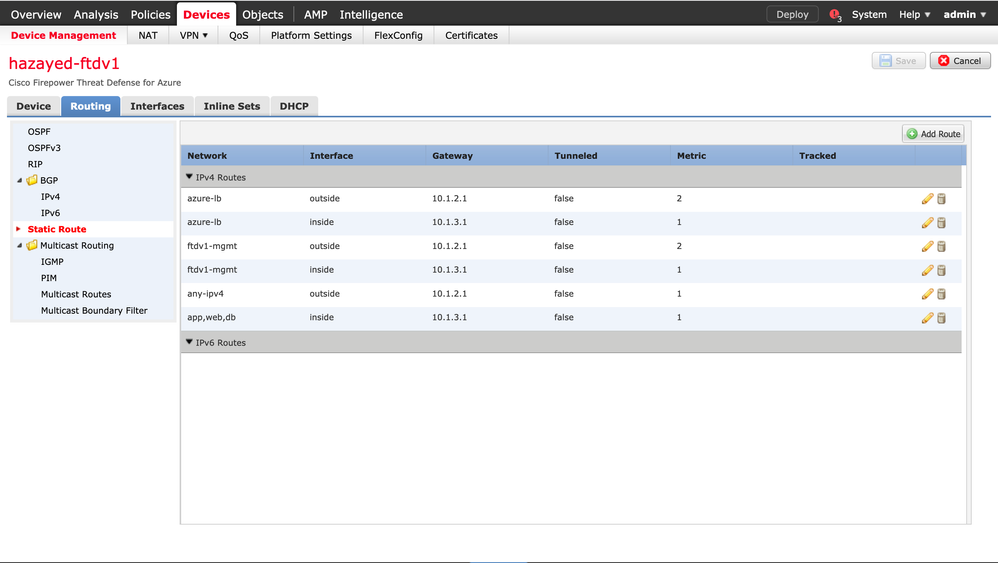

Next, static routes are configured to take care of data traffic as well as the health probes. Configure both firewalls, below is ftdv1 configuration. The routes are explained in the design sections discussed earlier.

We will use two separate NAT policies for the two firewalls, the below screenshot shows the NAT configuration of ftdv1, configure ftdv2 similarly. The first and second NAT rules are configured to send the health probes through the firewall. The third one is for inbound traffic coming from the public load balancer going to the web server, while the last one is for outbound traffic going to the internet.

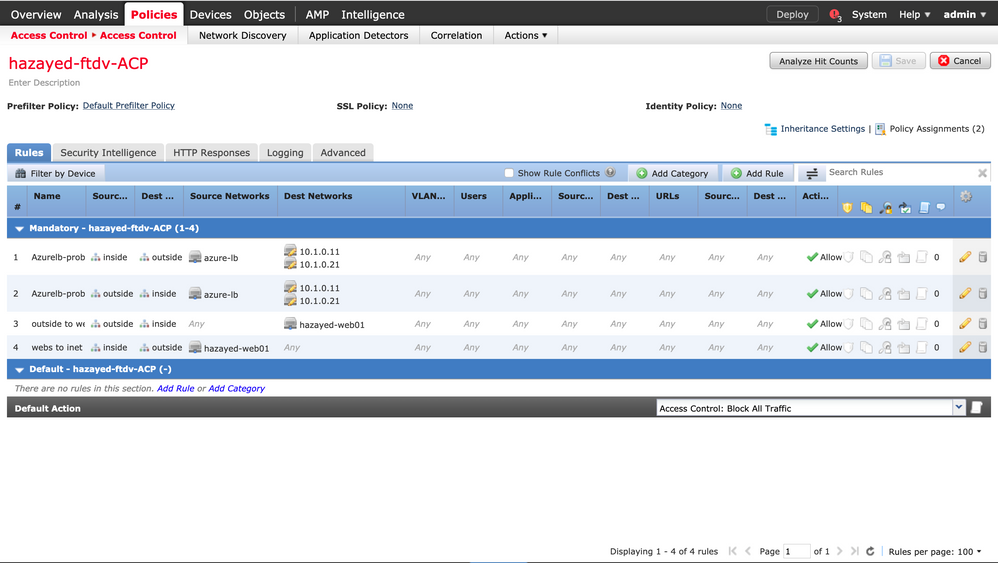

Both firewalls are assigned to the same Access Control Policy. Rules are configured to allow the traffic mentioned above. The first two rules are for the health probes, so it's recommended to keep logging off for them. Rule 3 is for Inbound traffic destined for the web server, while the last rule allows the web server itself to reach the internet and get the patches it needs.

With all the configuration done, all the traffic scenarios discussed in the design section should now work.

Learn More:

ARM yourself using NGFWv and ASAv (Azure) - BRKSEC-3093

Deploy NGFWv & ASAv in Public Cloud (AWS & Azure) - LTRSEC-3052


Great work here but it's worth adding a note about a horrible Azure limitation - if your VM name is in upper case then you will get the following error "Invalid Domain Name Label" when the deployment validates. Changing it to lower case fixed the problem. Hope this comment saves someone some time (spent about 4 hours bashing my head against that...)


@mhmservice Thanks for your comment, glad that it worked for you after all :), I'll add a note about this in the steps!

The VM name will be used for the DNS label when you attach a public IP to it. While VM name itself should allow upper and lower case, the DNS label in Azure only accepts lower case letters. I guess that was the issue you faced.


Hi this is a great document. Is this TAC supported? I was reading


@thomuff Thank you!

As of now, FTD Active/Passive failover is not supported for public cloud firewalls, it's mentioned in the document you referenced:

"The Firepower Threat Defense Virtual on public cloud networks such as Microsoft Azure and Amazon Web Services are not supported with High Availability because Layer 2 connectivity is required."

Firewall failover in general relies on Layer 2 connectivity and visibility between the two units. As this is not available in the public clouds, A/P failover is not supported.

It's being investigated to rely on other methods to support failover (like an agent for ex., which is available for ASA firewalls, check link below), but it's not yet committed.


When you created your "Outside NSG" are you following the same rule as the Inside NSG where you allow ALL traffic in both the Inbound and Outbound rule sets?

Wouldn't the "allow all" traffic rule in the outbound rule set of the outside subnet's NSG allow the firewall external interfaces or anything spun up in that outside subnet to directly route to the internal vnets? This would bypass the firewall completely, correct?

If so, what would be the appropriate rules to configure for the outside subnet's NSG outbound rules?

Routing is the piece that actually controls where the traffic goes; NSGs only allow or deny it. By default, in a single Azure VNet, all IPs can reach each other directly. However, we can steer the traffic path using UDRs and LBs. In the design above, we use LBs and UDRs to route the traffic through the firewalls. Whatever you configure in the NSG cannot affect whether the traffic bypasses or goes through the firewall.

For traffic coming from internet, flow will be:

Internet -> Public IP (front end IP) on LB -> backend pool (FW external interfaces) -> FW inspects traffic -> inside

Note that once the traffic reaches a FW interface, it's up to the firewall routing and policies configuration itself (not Azure) to control where to send the traffic next.

The NSG is just a Layer 4 ACL that can allow or deny traffic; it does not decide where the traffic is sent next. It is applied to packets just before they reach the external FW interface (or the subnet, depending on where you apply the NSG). In the configuration above, the NSG is configured with "allow any" so that all traffic reaches the firewall external interface and is controlled on the firewall. If you prefer to allow only specific traffic to reach the firewall, you can configure any policy that suits you on the NSG, but every time you modify security policies you then have to do it twice: on the firewalls and on the NSG. It's really a personal/corporate preference.
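The split between routing and filtering can be sketched with a toy model. This is not an Azure API; the route table, next-hop names, and both functions are hypothetical and only illustrate that the path comes from a longest-prefix route lookup, while the NSG check is a separate allow/deny decision.

```python
import ipaddress
from typing import Optional


def next_hop(dst_ip: str, routes: list[tuple[str, str]]) -> Optional[str]:
    """Pick the next hop by longest-prefix match over (cidr, next_hop) pairs."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [
        (ipaddress.ip_network(cidr), hop)
        for cidr, hop in routes
        if dst in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The most specific (longest) prefix wins.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def nsg_allows(dst_port: int, allowed_ports: Optional[set[int]]) -> bool:
    """Toy Layer 4 filter: None means "allow any"."""
    return allowed_ports is None or dst_port in allowed_ports


# Hypothetical route table: a UDR sends one subnet's traffic to the internal LB.
routes = [
    ("10.0.0.0/16", "vnet-local"),
    ("10.0.20.0/24", "internal-lb"),
    ("0.0.0.0/0", "internet"),
]
```

In this model, `next_hop("10.0.20.5", routes)` is decided entirely by the route table. Changing the set passed to `nsg_allows` only changes whether a packet is permitted, never which hop it takes.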

Hope that clears it for you

Can you skip the NAT and static routes for azure-lb and fw-mgmt if you use the method described in the AutoScale documentation to give the LB health probes direct access to the outside and inside interfaces (configured via Platform settings)?

@Justin Walker

Yes, you can use either the NAT and static routes as explained in this document, or deal with the probes using the Platform settings. It's up to you.

Using NAT, you will be able to monitor if the FTD is actually passing the traffic, and monitor the management interface too. You can use one NAT policy for both firewalls if you utilize object override, making NAT configuration easier (one policy for all firewalls).

Using Platform settings, configuration of NAT and static routes will be simpler, but you won't be able to monitor if the traffic is actually passing through the firewall.

Awesome, thank you for the explanation of the pros and cons of each method. Nice work on the documentation above as well, it's very helpful as a reference.

@Justin Walker Thank you, glad to help

Thx a lot !, Great document.

Hello @hazayed,

Really great document. I only have two questions about the health probe configuration on FTD (section "Azure Load Balancer Health Probes"):

1 - Why do we need twice NAT rather than only source NAT?

2 - Why do we need a route for directly connected interfaces?

Thanks

@Simone Stellato Thank you Simone, glad that you found it helpful!

1- The LB probe sent from Azure to the firewall has the outside/inside interface as its destination. We need to change the destination to the management IP so that the probe is handled by management. Source NAT is done so that the reply is routed back through the firewall too. Thus twice NAT is needed.

The comment by Justin above and my reply to it explains why we do this, and explains the other option to handle the probes without any NAT.
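The two translations can be sketched as a toy packet rewrite. This is only an illustration, not FTD configuration. 168.63.129.16 is the address Azure health probes come from; every other address, the `Packet` type, and both functions are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


PROBE_SRC = "168.63.129.16"   # Azure health probe source address
IFACE_IP = "10.0.1.4"         # hypothetical outside interface IP being probed
MGMT_IP = "10.0.0.4"          # hypothetical FTDv management IP
TRANSLATED_SRC = "10.0.2.4"   # hypothetical firewall-owned source after NAT


def twice_nat(pkt: Packet) -> Packet:
    """Rewrite a probe: destination -> management IP, source -> firewall address."""
    if pkt.src == PROBE_SRC and pkt.dst == IFACE_IP:
        return replace(pkt, src=TRANSLATED_SRC, dst=MGMT_IP)
    return pkt


def untranslate_reply(reply: Packet) -> Packet:
    """Reverse both translations so the LB sees a reply from the probed IP."""
    if reply.src == MGMT_IP and reply.dst == TRANSLATED_SRC:
        return replace(reply, src=IFACE_IP, dst=PROBE_SRC)
    return reply
```

With destination NAT alone, the management interface would answer 168.63.129.16 directly from its own address, so the reply would not pass back through the translation. Rewriting the source as well forces the reply to return to the firewall, where it is untranslated.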

2- I'm afraid I'm not getting your question. Can you please clarify which route exactly you are referring to?

Keep in mind that the FW does not know anything about Azure routing. You need to configure routes that send the traffic to the Azure GW (the first IP in each subnet), and Azure will handle it from there. Hope this helps!
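As a small sketch, the gateway address to use as the next hop in those routes can be derived from the subnet prefix. The function name and the example subnets are hypothetical.

```python
import ipaddress


def azure_gateway_ip(subnet_cidr: str) -> str:
    """Return the first IP after the network address, which Azure uses as the subnet gateway."""
    network = ipaddress.ip_network(subnet_cidr, strict=True)
    return str(network.network_address + 1)
```

For example, `azure_gateway_ip("10.0.1.0/24")` gives `10.0.1.1`, which would be the next hop for a static route out of an interface in that subnet.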

thanks for the answers @hazayed !

What I'm trying to understand is why we need a route to reach the management interface. Being directly connected, it shouldn't need one. What am I missing?

Hope my question is clearer now.

@Simone Stellato Apologies for not replying earlier!

The FTD uses separate routing tables for data traffic (through-the-device) and for management traffic (from-the-device). So from the data routing table's point of view, the management subnet is not directly connected, and a route is needed to reach it.
