Cisco Network Visibility (NVM) Collector


on 03-18-2021 10:08 AM - edited on 09-20-2021 03:39 AM by sathishr


This document covers the purpose, details and installation of the AnyConnect Network Visibility Module (NVM) Collector.

- CESA for Splunk

- Support

- NVM Collector

- Install

- Upgrade

- Configuration Options

- Collector Enhancements

- Docker Installation

- Detailed Configuration and Operation

- Concurrency

- Filtering Flows

- Additional Exporters

- Implementing Your Own Exporter as a Plugin

- Data Model and Correlation Index

- NVM Collector Syslog Fields

CESA for Splunk

The Cisco AnyConnect Network Visibility Module (NVM) App for Splunk consists of three components:

* The Splunk App with pre-designed Dashboards to visualize and view the data.

* The Splunk Add-on which provides data indexing and formatting inside Splunk Enterprise.

* The NVM Collector Component which is responsible for collecting and translating all IPFIX (nvzFlow) data from the endpoints and forwarding it to the Splunk Add-on.

The collector is a manually installed component that runs on 64-bit Linux.

You can install it on an existing system (a guide is posted at the bottom of the CESA POV page), on a separate Linux system, or in a Docker container.

This document also covers the collector installation and configuration options.

Support

For more information on the solution access the following links:

Proof of Value - this page goes over everything to know about CESA, solution, sales, licensing, design, etc - http://cs.co/cesa-pov

CESA guide - this page goes through all the dashboard elements of CESA-splunk and the necessary - http://cs.co/cesa-guide

- For a first-time engagement or a POV/trial, please reach out to your account team before installation.

- For first-line technical support on the CESA solution (AnyConnect, NVM module, NVM collector and CESA dashboard), please reach out to Cisco TAC and/or your Cisco account team. If the account team is new to the product, they can reach out to cesa-pov@cisco.com for help with the deployment.

- Account teams with specific questions about CESA can email cesa-pov@cisco.com.

- For specific questions about the collector, design enhancements and technical details, please reach out to nvzFlow@cisco.com.

NVM Collector

Install

The collector runs on 64-bit Linux. For compatibility with other platforms, see the Docker Installation section below. CentOS and Ubuntu configuration scripts are included. The CentOS install scripts and configuration files can also be used on Fedora and Red Hat distributions.

The collector should be run on either a standalone 64-bit Linux system or on a 64-bit Splunk Forwarder.

The collector is packaged with the Cisco NVM Technology Add-On for Splunk. To install the collector, copy the acnvmcollector.zip file, located in the $APP_DIR$/appserver/addon/ directory, to the system you plan to install it on.

Extract the archive on the system where you plan to install the collector and execute the install.sh script with superuser privileges.

It is recommended to read the $PLATFORM$_README file in the .zip bundle before executing the install.sh script. The $PLATFORM$_README file provides information on the relevant configuration settings that need to be verified and modified (if necessary) before the install.sh script is executed. Failing to do so can cause the collector to not function correctly.

NOTE: After installation, check that the collector is running.

On a properly sized system, a collector can handle at least 5000 flows per second.

Upgrade

Follow the same steps as for installation; the installer overwrites the existing collector. After upgrading, validate the version.

To find the collector version, run the collector with the -v flag:

- /opt/acnvm/bin/acnvmcollector -v

/opt/acnvm/bin

[root@splunk-virtual-machine bin]# ./acnvmcollector -v

Cisco AnyConnect Network Visibility Module Collector (version 4.10.02086 release)

Copyright (C) 2004-2021 All Rights Reserved.
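If the version check needs to be scripted after an upgrade, the banner line shown above can be parsed. The following is a minimal sketch that assumes the banner keeps the "(version X.Y.Z release)" shape seen in this sample output; the function name parse_collector_version is hypothetical and is not part of the collector.

```python
import re
from typing import Optional, Tuple

# Banner line copied from the sample output above.
SAMPLE_OUTPUT = (
    "Cisco AnyConnect Network Visibility Module Collector "
    "(version 4.10.02086 release)"
)


def parse_collector_version(output: str) -> Optional[Tuple[int, ...]]:
    """Extract the dotted version from the -v banner as a tuple of ints.

    Assumption: the banner contains "(version <digits.digits...>".
    Leading zeros in a component (e.g. "02086") are dropped by int().
    Returns None when no version string is found.
    """
    match = re.search(r"\(version\s+(\d+(?:\.\d+)*)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


print(parse_collector_version(SAMPLE_OUTPUT))
```

Tuples compare element by element, so a parsed version can be compared directly against an expected minimum such as (4, 10).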

Configuration Options

The collector needs to be configured and running before the Splunk App can be used. By default, the collector receives flows from AnyConnect NVM endpoints on UDP port 2055.

Additionally, the collector produces three data feeds for Splunk, Per Flow Data, Endpoint Identity Data and Endpoint Interface Data, on UDP ports 20519, 20520 and 20521 respectively.

Both the receive and data feed ports can be changed by altering the acnvm.conf file and restarting the collector instance.

Make sure that any network firewalls between endpoints and the collector or between the collector and Splunk system(s) are open for the configured UDP ports.

Also ensure that your AnyConnect NVM configuration matches your collector configuration. Refer to the AnyConnect Administration Guide for more information.
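To confirm that datagrams actually reach a configured port through any firewalls, a throwaway UDP listener can help. This is a rough sketch using only the Python standard library; the helper names open_listener and read_datagram are hypothetical, and it assumes nothing else (such as Splunk) is already bound to the port being tested.

```python
import socket
from typing import Optional


def open_listener(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Bind a UDP socket on host:port (port 0 lets the OS choose one)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def read_datagram(sock: socket.socket, timeout: float = 5.0) -> Optional[bytes]:
    """Wait up to `timeout` seconds for one datagram; None if nothing arrives."""
    sock.settimeout(timeout)
    try:
        data, _sender = sock.recvfrom(65535)
    except socket.timeout:
        return None
    return data


# Example (not executed here): check the default Per Flow Data feed port.
#   listener = open_listener(20519, host="0.0.0.0")
#   print(read_datagram(listener, timeout=30.0))
#   listener.close()
```

A None result only shows that nothing arrived within the timeout; it does not by itself distinguish a blocked port from an idle collector.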

The Splunk App is NOT configured by default to receive data. However, the inputs.conf file contains an example of three disabled data feeds, Per Flow Data, Endpoint Identity Data and Endpoint Interface Data, on UDP ports 20519, 20520 and 20521 respectively.

To enable these feeds, edit the inputs.conf file and uncomment the default connections.

Collector Enhancements

In addition to Ubuntu and CentOS install scripts, we have added Docker support so you can run the NVM Collector in a Docker Container.

We've also added the ability to tune the multi-core behavior of the NVM Collector, as well as include/exclude filtering. Refer to the collector configuration help file in the Splunk App dashboard for more information.

The NVM Collector daemon now runs under a low-privilege user and group (acnvm) instead of as a root process.

Finally, there is an SDK so that you can write your own streaming format. In addition to the built-in syslog format, we include an Apache Kafka C++ sample plugin to get you started.

Docker Installation

CiscoNVMCollector_TA.zip includes a Dockerfile that can be used to build a Docker image for the collector. Before building, first make any configuration adjustments (as needed) to acnvm.conf.

Build docker image from the directory containing Dockerfile:

docker build -t nvmcollector .

Parameters for running the Docker image depend on your acnvm.conf file. For the default config, where the collector listens on port 2055 and the syslog server is on the same host:

docker run -t -p 2055:2055/udp --net="host" nvmcollector

Detailed Configuration and Operation

Concurrency

By default, when running on a host with multiple cores, the collector takes advantage of them by creating a separate server process per core. This parallelizes IPFIX processing. This configuration can be tuned using the "multiprocess" dictionary inside the main collector config file.

To disable multiprocessing (force a single process):

{

"multiprocess":

{"enabled": false}

}

To force 2 server processes:

{

"multiprocess":

{

"enabled": true,

"numProcesses": 2

}

}
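When the collector config is generated by a deployment script, the two variants above can be produced from one helper. The sketch below only builds the "multiprocess" dictionary with the keys shown in the samples ("enabled", "numProcesses"); the function name multiprocess_block is hypothetical, and merging the result into the main config file is left to the caller.

```python
import json
from typing import Any, Dict, Optional


def multiprocess_block(enabled: bool,
                       num_processes: Optional[int] = None) -> Dict[str, Any]:
    """Build the "multiprocess" section as shown in the samples above.

    num_processes is only emitted when multiprocessing is enabled;
    leaving it out keeps the default of one process per core.
    """
    if num_processes is not None and num_processes < 1:
        raise ValueError("num_processes must be at least 1")
    block: Dict[str, Any] = {"enabled": enabled}
    if enabled and num_processes is not None:
        block["numProcesses"] = num_processes
    return {"multiprocess": block}


# Print the "force 2 server processes" variant as JSON.
print(json.dumps(multiprocess_block(True, 2), indent=2))
```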

Filtering Flows

The collector supports three optional flow filtering modes: inclusion (whitelist), exclusion (blacklist), and hybrid. Filtering is defined in a separate JSON policy file. The policy file path can be specified when starting the collector. By default, the collector looks for the policy in this file:

/etc/acnvmfilters.conf

If no policy exists, filtering is disabled and all flows are processed and exported. The three filtering modes operate as follows:

- Include Only (whitelist) - By default, a flow is dropped unless it matches an include rule.

- Exclude Only (blacklist) - By default, a flow is collected unless it matches an exclude rule.

- Include + Exclude (hybrid) - By default, a flow is dropped unless it matches an include rule AND it does not match an exclude rule.

Each rule is specified as a JSON dictionary, with each key/value pair specifying a match criterion on a flow field (whose name matches the key). Suffix wildcards are supported for string field types and are denoted by a *.

Sample: Exclude all DNS flows:

{

"rules":

{

"exclude":

[

{"dp": 53, "pr": 17}

]

}

}

Sample: Exclude flows to certain DNS servers:

{

"rules":

{

"exclude":

[

{"dp": 53, "pr": 17, "da": "1.2.*"},

{"dp": 53, "pr": 17, "da": "8.8.8.8"}

]

}

}

Sample: Only collect flows from Angry Birds (Android app), but ignore DNS flows to certain DNS servers:

{

"rules":

{

"include":

[

{"pname": "com.rovio.angrybirds"}

],

"exclude":

[

{"dp": 53, "pr": 17, "da": "1.2.*"},

{"dp": 53, "pr": 17, "da": "8.8.8.8"}

]

}

}
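The three modes can be reasoned about offline before deploying a policy. The following is a sketch of the semantics as described in this section, not the collector's actual implementation; the function names and the sample flow records are hypothetical, and flows are assumed to be plain dictionaries keyed by the same field names used in the rules.

```python
from typing import Any, Dict


def field_matches(expected: Any, actual: Any) -> bool:
    """Compare one rule value to one flow value; a trailing * is a wildcard."""
    if isinstance(expected, str) and expected.endswith("*"):
        return isinstance(actual, str) and actual.startswith(expected[:-1])
    return expected == actual


def rule_matches(rule: Dict[str, Any], flow: Dict[str, Any]) -> bool:
    """A rule matches when every key/value pair in it matches the flow."""
    return all(k in flow and field_matches(v, flow[k]) for k, v in rule.items())


def keep_flow(policy: Dict[str, Any], flow: Dict[str, Any]) -> bool:
    """Apply include-only, exclude-only, or hybrid semantics to one flow."""
    rules = policy.get("rules", {})
    include = rules.get("include", [])
    exclude = rules.get("exclude", [])
    # With include rules present, a flow must match at least one of them.
    if include and not any(rule_matches(r, flow) for r in include):
        return False
    # Any matching exclude rule drops the flow.
    if any(rule_matches(r, flow) for r in exclude):
        return False
    return True


# The hybrid sample policy from above.
POLICY = {
    "rules": {
        "include": [{"pname": "com.rovio.angrybirds"}],
        "exclude": [
            {"dp": 53, "pr": 17, "da": "1.2.*"},
            {"dp": 53, "pr": 17, "da": "8.8.8.8"},
        ],
    }
}

# Hypothetical flow records for illustration only.
web = {"pname": "com.rovio.angrybirds", "dp": 443, "pr": 6, "da": "10.0.0.1"}
dns = {"pname": "com.rovio.angrybirds", "dp": 53, "pr": 17, "da": "8.8.8.8"}
print(keep_flow(POLICY, web), keep_flow(POLICY, dns))
```

An empty policy keeps every flow, which mirrors the statement that filtering is disabled when no policy exists.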

Additional Exporters

The collector exporter currently supports Splunk (syslog). There is also limited support for Kafka. To request additional support, please contact: nvzflow@cisco.com

Export behavior can be configured in the main configuration file.

Sample Splunk (syslog) Export Configuration

{

"exporter": {

"type": "syslog",

"syslog_server": "localhost",

"flow_port": 20519,

"endpoint_port": 20520,

"interface_port": 20521

}

}

Sample Kafka Export Configuration

{

"exporter": {

"type": "kafka",

"bootstrap_server": "localhost:9092",

"flow_port": "flow",

"endpoint_port": "endpoint",

"interface_port": "interface"

}

}

Implementing Your Own Exporter as a Plugin

The collector's export capability can be extended using native C++ code by building a shared library against the plugin API. The SDK bundle (including headers and sample) is located in the Splunk app bundle in the addon directory: CiscoNVM/appserver/addon. For questions, please contact: nvzflow@cisco.com

To use your custom plugin, a special configuration is required in the main collector config:

{

"exporter": {

"type": "plugin"

}

}

Data Model and Correlation Index

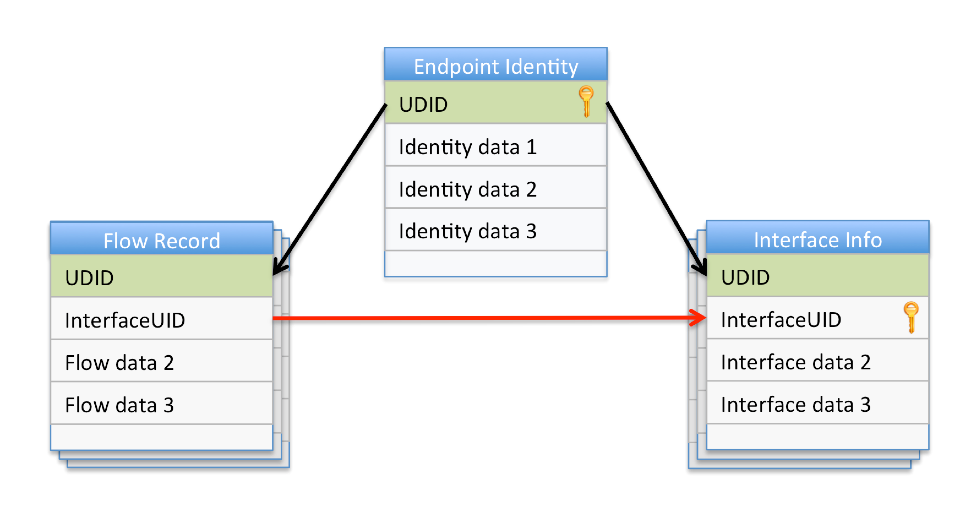

The diagram above depicts the relationship between the three data sources: Per Flow, Endpoint Identity, and Interface Info. The UDID field is used to correlate records between these sources.
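The correlation can be illustrated outside Splunk with plain dictionaries. This sketch assumes each record has already been parsed into a dict keyed by the search keys listed in the field table (udid, iid, and so on); the function name correlate and all sample values are hypothetical. Keying interfaces on the (udid, iid) pair is a design choice made here for illustration, not something this document specifies.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def correlate(flows: List[Record],
              endpoints: List[Record],
              interfaces: List[Record]) -> List[Record]:
    """Attach endpoint identity and interface info to each flow record."""
    endpoint_by_udid = {e["udid"]: e for e in endpoints}
    interface_by_key = {(i["udid"], i["iid"]): i for i in interfaces}
    enriched = []
    for flow in flows:
        row = dict(flow)  # copy so the input records stay untouched
        row["endpoint"] = endpoint_by_udid.get(flow.get("udid"))
        row["interface"] = interface_by_key.get((flow.get("udid"), flow.get("iid")))
        enriched.append(row)
    return enriched


# Hypothetical sample records, one per sourcetype.
flows = [{"udid": "ABC123", "iid": 7, "pn": "chrome.exe", "dp": 443}]
endpoints = [{"udid": "ABC123", "osn": "WinNT", "vsn": "CESA-WIN10-1.mydomain.com"}]
interfaces = [{"udid": "ABC123", "iid": 7, "in": "Wi-Fi", "ssid": "internet"}]

for row in correlate(flows, endpoints, interfaces):
    print(row["pn"], row["endpoint"]["vsn"], row["interface"]["in"])
```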

NVM Collector Syslog Fields

NOTE: The "Source" entry below indicates which Splunk sourcetype(s) it is found in:

cisco:nvm:flowdata, cisco:nvm:sysdata, cisco:nvm:ifdata

| Search Key | Key Name | Source | Key Description | |

| udid | UDID | cisco:nvm:flowdata cisco:nvm:sysdata cisco:nvm:ifdata | Unique identifier for each endpoint in the network. This is derived and recreated from hardware attributes. It is used to correlate records back to a single source. Note that this matches the same value sent by AnyConnect VPN to an ASA. | |

| osn | OSName | cisco:nvm:sysdata | Name of the Operating System on the endpoint (e.g. WinNT). Note that this matches the same value sent by AnyConnect VPN to an ASA. | |

| osv | OSVersion | cisco:nvm:sysdata | Version of the operating system on the endpoint (e.g. 6.1.7601). Note that this matches the same value sent by AnyConnect VPN to an ASA. | |

| vsn | VirtualStationName | cisco:nvm:sysdata | Device name configured on the endpoint (e.g. Boris-Macbook). Domain joined machines will be in the form [machinename].[domainname].[com] (e.g. CESA-WIN10-1.mydomain.com) | |

| sm | SystemManufacturer | cisco:nvm:sysdata | Endpoint Manufacturer (e.g. Lenovo, Apple, etc.). | |

| st | SystemType | cisco:nvm:sysdata | Endpoint Type (e.g. x86, x64). | |

| agv | AgentVersion | cisco:nvm:sysdata | Software version of the agent/client software. Typically of the form major_v.minor_v.build_no. [AnyConnect NVM 4.9 or later] | |

| liuid | LoggedInUser | cisco:nvm:flowdata | The logged in username on the physical device, in the form Authority\Principal, on the endpoint from which the network flow is generated. Note that a domain user is in the same form as a user locally logged in to a machine, e.g. MYDOMAIN\aduser or SOMEMACHINE\localuseracct. NOTE: If this field is empty, it indicates that either no user was logged into the device, or a user remotely logged in to the device via RDP, SSH, etc. The liuida and liuidp fields would also be empty as a result. User information can still be derived from the process account (pa) information (see below for more information). | |

| liuida | LoggedInUserAuthority | cisco:nvm:flowdata | The Authority portion of the logged in username on the physical device from which the network flow is generated. | |

| liuidp | LoggedInUserPrincipal | cisco:nvm:flowdata | The Principal portion of the logged in username on the physical device from which the network flow is generated. | |

| fss | StartSeconds | cisco:nvm:flowdata | Time stamp of when the network flow was initiated by the endpoint. See the timestamp notes below for details. | |

| fes | EndSeconds | cisco:nvm:flowdata | Time stamp of when the network flow was completed by the endpoint. See the timestamp notes below for details. | |

| sa | SourceIPv4Address | cisco:nvm:flowdata | IPv4 address of the interface from where the flow was generated on the endpoint. | |

| da | DestinationIPv4Address | cisco:nvm:flowdata | IPv4 address of the destination to where the flow was generated from the endpoint. | |

| sa6 | SourceIPv6Address | cisco:nvm:flowdata | IPv6 address of the interface from where the flow was generated on the endpoint. | |

| da6 | DestinationIPv6Address | cisco:nvm:flowdata | IPv6 address of the destination to where the flow was generated from the endpoint. | |

| sp | SourceTransportPort | cisco:nvm:flowdata | Source port number from where the flow was generated on the endpoint. | |

| dp | DestinationTransportPort | cisco:nvm:flowdata | Destination Port number to where the flow was generated from the endpoint. | |

| ibc | InBytesCount | cisco:nvm:flowdata | The total number of bytes downloaded during a given flow on the endpoint at layer 4, not including L4 headers. | |

| obc | OutBytesCount | cisco:nvm:flowdata | The total number of Bytes uploaded during a given flow on the endpoint at layer 4, not including L4 headers. | |

| pr | ProtocolIdentifier | cisco:nvm:flowdata | Network protocol number associated with each flow. Currently only TCP (6) and UDP (17) are supported. | |

| ph | ProcessHash | cisco:nvm:flowdata | Unique SHA256 hash for the executable generating the Network Flow on the endpoint. | |

| pa | ProcessAccount | cisco:nvm:flowdata | The fully qualified account, in the form Authority\Principal, under whose context the application generating the Network Flow on the endpoint was executed. | |

| pap | ProcessAccountPrincipal | cisco:nvm:flowdata | The Principal portion of the fully qualified account under whose context the application generating the Network Flow on the endpoint was executed. | |

| paa | ProcessAccountAuthority | cisco:nvm:flowdata | The Authority portion of the fully qualified account under whose context the application generating the Network Flow on the endpoint was executed. | |

| pn | ProcessName | cisco:nvm:flowdata | Name of the executable generating the Network Flow on the endpoint. | |

| pph | ParentProcessHash | cisco:nvm:flowdata | Unique SHA256 hash for the executable of the parent process of the application generating the Network Flow on the endpoint. | |

| ppa | ParentProcessAccount | cisco:nvm:flowdata | The fully qualified account, in the form Authority\Principal, under whose context the parent process of the application generating the Network Flow on the endpoint was executed. | |

| ppap | ParentProcessAccountPrincipal | cisco:nvm:flowdata | The Principal portion of the fully qualified account under whose context the parent process of the application generating the Network Flow on the endpoint was executed. | |

| ppaa | ParentProcessAccountAuthority | cisco:nvm:flowdata | The Authority portion of the fully qualified account under whose context the parent process of the application generating the Network Flow on the endpoint was executed. | |

| ppn | ParentProcessName | cisco:nvm:flowdata | Name of the parent process of the application generating the Network Flow on the endpoint. | |

| ds | FlowDNSSuffix | cisco:nvm:flowdata | The DNS suffix configured on the network to which the user was connected when the network flow was generated on the endpoint. | |

| dh | FlowDestinationHostName | cisco:nvm:flowdata | The destination domain of the destination address to which the network flow was sent from the endpoint. | |

| fv | FlowVersion | cisco:nvm:flowdata | Network Visibility Flow (nvzFlow) version sent by the client. | |

| mnl | ModuleNameList | cisco:nvm:flowdata | List of 0 or more names of the modules hosted by the process that generated the flow. This can include the main DLLs in common containers such as dllhost, svchost, rundll32, etc. It can also contain other hosted components such as the name of the jar file in a JVM. | |

| mhl | ModuleHashList | cisco:nvm:flowdata | List of 0 or more SHA256 hashes of the modules associated with the nvzFlowModuleNameList | |

| luat | LoggedInUserAccountType | cisco:nvm:flowdata | Account type of the logged in user. As per the enumeration of AccountType, defined by this spec. | |

| puat | ProcessAccountType | cisco:nvm:flowdata | Account type of the process account. As per the enumeration of AccountType, defined by this spec. | |

| ppuat | ParentProcessAccountType | cisco:nvm:flowdata | Account type of the parent-process account. As per the enumeration of AccountType, defined by this spec. | |

| fsms | FlowStartMsec | cisco:nvm:flowdata | Time stamp (in msec) of when the network flow was initiated by the endpoint. [AnyConnect NVM 4.9 or later] See the timestamp notes below for details. | |

| fems | FlowEndMsec | cisco:nvm:flowdata | Time stamp (in msec) of when the network flow was completed by the endpoint. [AnyConnect NVM 4.9 or later] See the timestamp notes below for details. | |

| pid | ProcessId | cisco:nvm:flowdata | Process Id of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| ppath | ProcessPath | cisco:nvm:flowdata | Filesystem path of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| parg | ProcessArgs | cisco:nvm:flowdata | Command Line Arguments of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| ppid | ParentProcessId | cisco:nvm:flowdata | Process Id of the parent of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| pppath | ParentProcessPath | cisco:nvm:flowdata | Filesystem path of the parent of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| pparg | ParentProcessArgs | cisco:nvm:flowdata | Command Line Arguments of the parent of the process that initiated the network flow. [AnyConnect NVM 4.9 or later] | |

| iid | InterfaceInfoUID | cisco:nvm:flowdata cisco:nvm:ifdata | Unique ID for an interface's metadata. Should be used to look up the interface metadata from the InterfaceInfo records. | |

| ii | InterfaceIndex | cisco:nvm:ifdata | The index of the Network interface as reported by the OS. | |

| it | InterfaceType | cisco:nvm:ifdata | Interface Type, such as Wired, Wireless, Cellular, VPN, Tunneled, Bluetooth, etc. Enumeration of network types, defined by this spec. | |

| in | InterfaceName | cisco:nvm:ifdata | Network Interface/Adapter name as reported by the OS | |

| ssid | SSID | cisco:nvm:ifdata | Parsed from InterfaceDetailsList, this is the SSID portion, e.g. SSID=internet | |

| ist | Trust State | cisco:nvm:ifdata | Parsed from InterfaceDetailsList, this is the STATE portion, e.g. STATE=Trusted. This means either AnyConnect VPN is active or has determined the device is on a Trusted Network based on TND | |

| im | InterfaceMacAddress | cisco:nvm:ifdata | MAC address of the interface. | |

TIMESTAMP NOTES:

fss & fes provide seconds-accuracy, while fsms & fems provide milliseconds-accuracy. Both timestamp formats are sent to the NVM collector starting with version 4.9 of the client. If you are not interested in higher resolution timestamps and want to save bandwidth or storage, you can drop nvzFlowFlowStartMsec and nvzFlowFlowEndMsec in the AnyConnect NVM Data Collection Policy settings. Any of these fields can also be dropped at the NVM Collector using Filtering.
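When consuming both timestamp formats, a reader will usually prefer the millisecond field and fall back to the seconds field for older clients. The sketch below makes one loud assumption that this document does not state: that fss/fes are Unix epoch seconds and fsms/fems are Unix epoch milliseconds, both in UTC. The function name flow_time and the sample values are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Optional


def flow_time(record: Dict[str, Any], sec_key: str, msec_key: str) -> Optional[datetime]:
    """Return the best available timestamp for a flow record.

    ASSUMPTION: values are Unix epoch seconds / milliseconds in UTC.
    Prefers the millisecond field (sent by 4.9+ clients), then falls
    back to the seconds field; returns None when neither is present.
    """
    msec = record.get(msec_key)
    if msec is not None:
        msec = int(msec)
        # Integer arithmetic avoids float rounding on the millisecond part.
        base = datetime.fromtimestamp(msec // 1000, tz=timezone.utc)
        return base + timedelta(milliseconds=msec % 1000)
    sec = record.get(sec_key)
    if sec is not None:
        return datetime.fromtimestamp(int(sec), tz=timezone.utc)
    return None


# Hypothetical records: one from a 4.9+ client, one from an older client.
new_client = {"fss": 1616062080, "fsms": 1616062080123}
old_client = {"fss": 1616062080}
print(flow_time(new_client, "fss", "fsms"))
print(flow_time(old_client, "fss", "fsms"))
```

The same helper covers flow end times by passing "fes" and "fems" as the key names.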

Enumerations

- Interface Types

- 1 - Wired Ethernet

- 2 - Wireless (802.11)

- 3 - Bluetooth

- 4 - Token Ring

- 5 - ATM (Slip)

- 6 - PPP

- 7 - Tunnel (generic)

- 8 - VPN

- 9 - Loopback

- 10 - NFC

- 15 - Unknown/Unspecified

- Account Types

- All Platforms

- Unknown - 0x0000

- Domain User - 0x8000 (Mask. The 16th bit is set for a domain user, e.g. a domain-user administrator will have 0x8002 as the type).

- Standard User - 0x0001

- Admin User - 0x0002

- Guest User - 0x0003

- Standard User - 0x0001

- Root user - 0x0004

- Bit Representation

- Bit 16 - Domain bit

- Bit 15 - Reserved but not implemented yet

- Bit 14 - Reserved but not implemented yet

- Bits 13 through 9 - Reserved for future bit-masks

- Bits 8 through 1 - Specific AccountType values
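The bit layout above can be decoded with two masks. This is a minimal sketch built only from the values listed in this section; it assumes the account type field (luat, puat or ppuat) has already been converted to an integer, and the function name decode_account_type is hypothetical.

```python
from typing import Tuple

DOMAIN_BIT = 0x8000   # bit 16: set for domain users
VALUE_MASK = 0x00FF   # bits 8 through 1: the specific AccountType value

# Values taken from the Account Types list above.
ACCOUNT_TYPES = {
    0x0000: "Unknown",
    0x0001: "Standard User",
    0x0002: "Admin User",
    0x0003: "Guest User",
    0x0004: "Root user",
}


def decode_account_type(raw: int) -> Tuple[bool, str]:
    """Split a raw account type into (is_domain_user, type_name)."""
    is_domain = bool(raw & DOMAIN_BIT)
    name = ACCOUNT_TYPES.get(raw & VALUE_MASK, "Unrecognized")
    return is_domain, name


# The domain-user administrator example from the list above.
print(decode_account_type(0x8002))
```

Bits 9 through 15 are ignored here because the list above marks them as reserved.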


Hello @Jason Kunst. How long can the remote NVM AnyConnect module buffer and store Cisco nvzFlow data while the endpoint is not connected to the VPN?

What is the best practice for NVM collectors? Would you recommend exposing the collector to the internet or keeping it inside the perimeter?

The Install and Configure AnyConnect NVM 4.7.x or Later and Related Splunk Enterprise Components for CESA guide suggests that the NVM collector has an RFC 1918 IP address.
