How To: Cisco & F5 Deployment Guide: ISE Load Balancing Using BIG-IP


Posted on 06-20-2016 11:09 AM; edited on 07-30-2025 05:27 AM by jcedrone


Author: Craig Hyps


Table of Contents

- Introduction

- What is Cisco Identity Services Engine?

- What are F5 BIG-IP Local Traffic Manager and Global Traffic Manager?

- About This Document

- Scenario Overview

- Topology

- Components

- Topology and Traffic Flow

- Deployment Model

- Physically Inline Traffic Flow

- Logically Inline Traffic Flow

- Topology and Network Addressing

- Configuration Prerequisites

- F5 Configuration Prerequisites

- Verify Basic F5 Network Interfaces Assignments, VLANs, IP Addressing, and Routing

- Verify Self IP address and interface settings

- Optional: Verify LTM High Availability

- ISE Configuration Prerequisites

- Configure Node Groups for Policy Service Nodes in a Load-Balanced Cluster

- Define a Node Group

- Add Load-Balanced PSNs to the Node Group

- Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring

- Configure BIG-IP LTM as a Network Device in ISE

- Optional: Define an Internal User for F5 RADIUS Health Monitor

- Configure DNS to Support PSN Load Balancing

- Configure DNS Entries for Sponsor and My Devices Portals

- Configure Certificates to Support PSN Load Balancing

- Generate a CSR for an ISE Server Certificate

- IP Forwarding for Non-LB traffic

- LTM Forwarding IP Configuration - Inbound

- LTM Forwarding IP Configuration - Outbound

- Load Balancing RADIUS

- NAT Restrictions for RADIUS Load Balancing

- RADIUS Persistence

- Sticky Methods for RADIUS

- Sticky Attributes for RADIUS

- Example F5 BIG-IP LTM iRules for RADIUS Persistence

- Fragmentation and Reassembly for RADIUS

- Persistence Timeout for RADIUS

- NAD Requirements for RADIUS Persistence

- RADIUS Load Balancing Data Flow

- RADIUS Health Monitoring

- F5 Monitor for RADIUS

- RADIUS Monitor Timers

- User Account Selection for RADIUS Probes

- ISE Filtering and Log Suppression

- RADIUS Load Balancing: F5 Configuration Details

- RADIUS CoA Handling

- Network Access Device Configuration for CoA

- Source NAT for RADIUS CoA

- RADIUS CoA SNAT: F5 Configuration Details

- Load Balancing ISE Profiling

- Introduction

- What is ISE Profiling?

- Why Should I Load Balance Profiling Traffic?

- Load Balancing Requirements for Profiling Data

- Load Balancing RADIUS Profiling Data

- Health Monitors for Profiling Services: DHCP, SNMP, and NetFlow

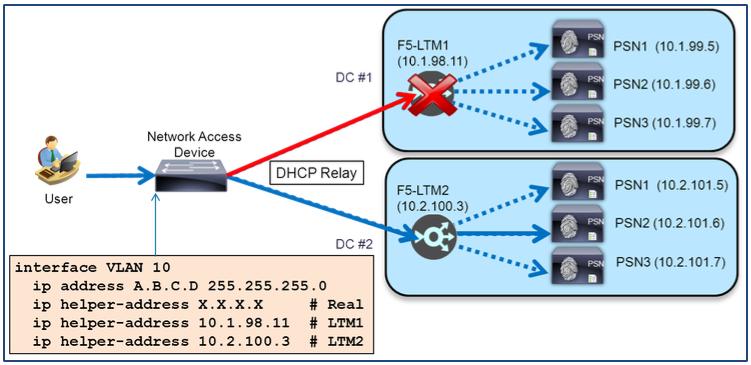

- Load Balancing DHCP Profiling Data

- Load Balancing SNMP Trap Profiling Data

- Load Balancing Netflow Profiling Data

- Profiling Load Balancing: F5 Configuration Details

- Load Balancing ISE Web Services

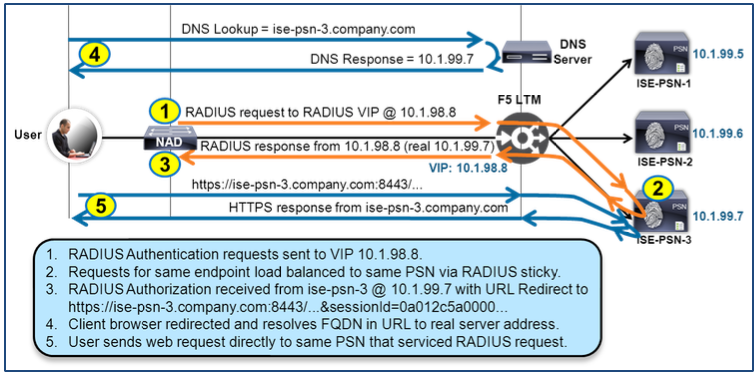

- URL-Redirection Traffic Flow

- Shared PSN Portal Interface for URL-Redirected Portals

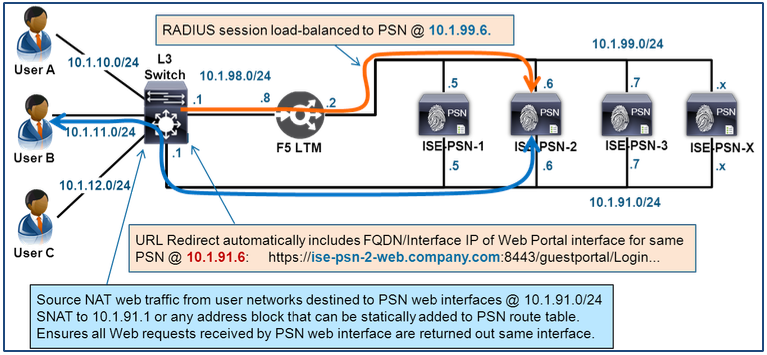

- Dedicated PSN Portal Interface for URL-Redirected Portals

- Direct-Access Web Services

- Web Portal Load Balancing Traffic Flow

- Load Balancing Sponsor, My Devices, and LWA Portals

- Shared PSN Portal Interface for Direct-Access Portals

- Dedicated PSN Portal Interface for Direct-Access Portals

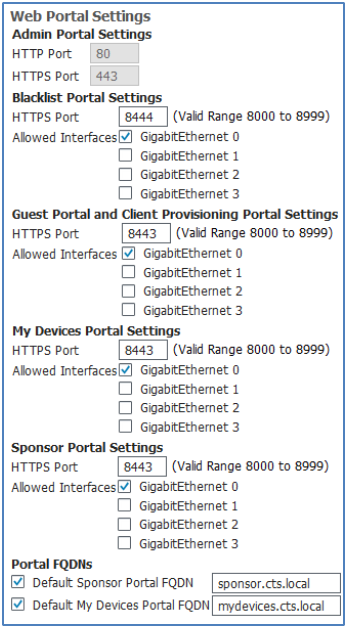

- ISE Web Portal Interfaces and Service Ports

- Virtual Servers and Pools to Support Portal FQDNs and Redirection (Sponsor and My Devices Only)

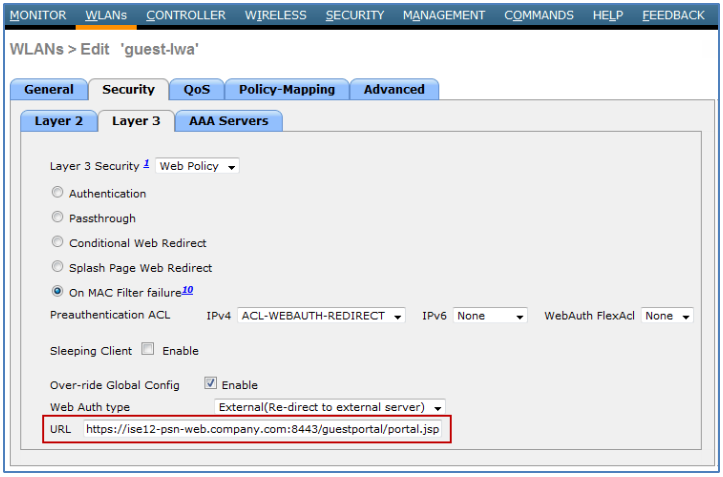

- LWA Configuration Example for Cisco Wireless Controller

- HTTPS Persistence for Direct-Access Portals

- HTTPS Health Monitoring

- F5 Monitor for HTTPS

- HTTPS Monitor Timers

- User Account Selection for HTTPS Probes (No longer required)

- HTTPS Load Balancing: F5 Configuration Details

- Global ISE Load Balancing Considerations

- General Monitoring and Troubleshooting

- Cisco ISE Monitoring and Troubleshooting

- Verify Operational Status of Cisco Components

- ISE Authentications Live Log

- ISE Reports

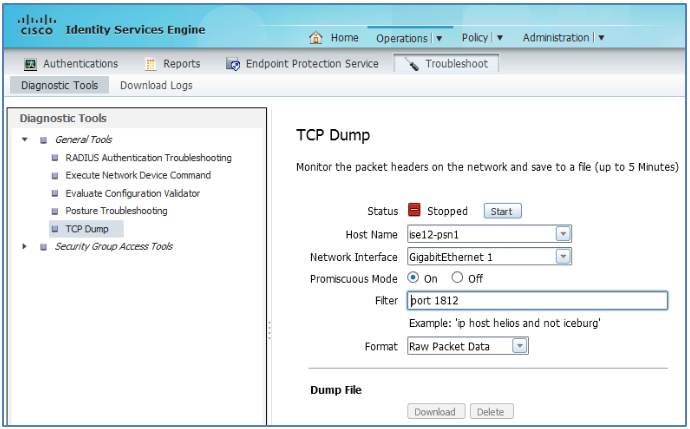

- ISE Packet Capture using TCP Dump

- Logging Suppression and Collection Filters

- F5 BIG-IP LTM Monitoring and Troubleshooting

- Verify Operational Status of F5 Components

- Health Monitors

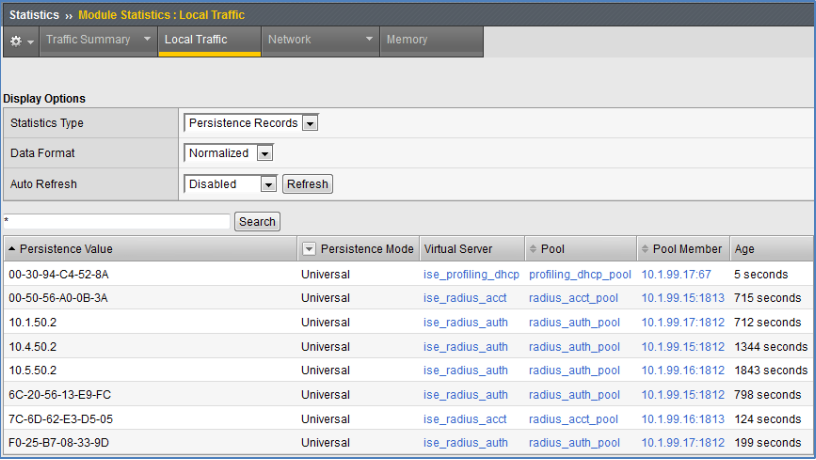

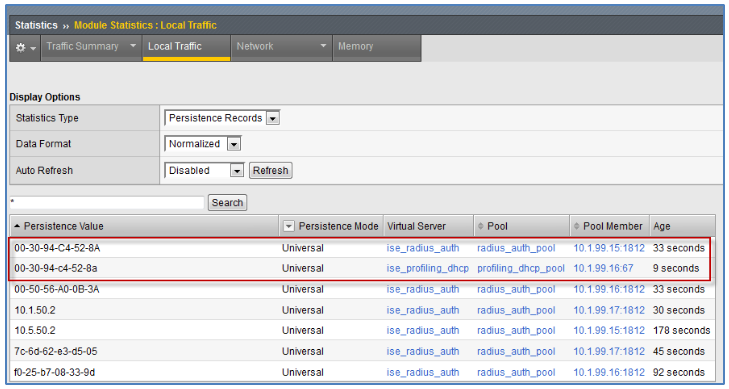

- Persistence Records

- iRule Debug and View Local Traffic Logs

- Packet Capture using TCP Dump

- Network Topology, Routing, and Addressing Review

- Appendix A: F5 Configuration Examples

- Example F5 BIG-IP LTM Configurations

- Full F5 Configuration

- Example F5 iRules for DHCP Persistence

- DHCP Persistence iRule Example: dhcp_mac_sticky

- Appendix B: Configuration Checklists

Introduction

What is Cisco Identity Services Engine?

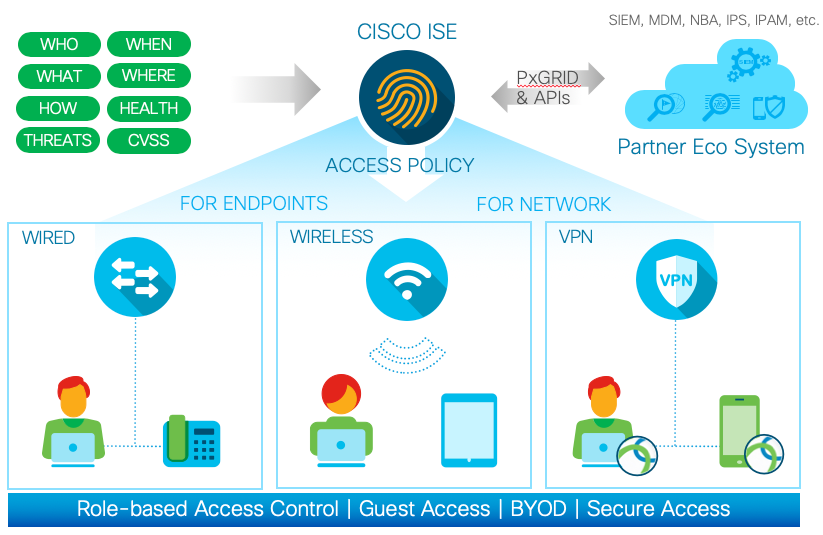

Cisco Identity Services Engine (ISE) is an all-in-one enterprise policy control product that enables comprehensive secure wired, wireless, and Virtual Private Networking (VPN) access.

Cisco ISE offers a centralized control point for comprehensive policy management and enforcement in a single RADIUS-based product. The unique architecture of Cisco ISE allows enterprises to gather real-time contextual information from networks, users, and devices. The administrator can then use that information to make proactive governance decisions. Cisco ISE is an integral component of Cisco Secure Access.

Cisco Secure Access is an advanced Network Access Control and Identity Solution that is integrated into the Network Infrastructure. It is a fully tested, validated solution where all the components within the solution are thoroughly vetted and rigorously tested as an integrated system.

Unlike overlay Network Access Control solutions, Cisco Secure Access uses the access-layer devices (switches, wireless controllers, and so on) for enforcement. Functions that were commonly handled by appliances and other overlay devices, such as URL redirection for web authentication, are now handled by the access device itself.

Cisco Secure Access not only combines standards-based identity and enforcement models, such as IEEE 802.1X and VLAN control, but also offers more advanced identity and enforcement capabilities. These include flexible authentication, Downloadable Access Control Lists (dACLs), Security Group Tagging (SGT), device profiling, guest and web authentication services, and posture assessment. They also include integration with leading Mobile Device Management (MDM) vendors to validate the compliance of mobile devices before and during network access.

What are F5 BIG-IP Local Traffic Manager and Global Traffic Manager?

F5 Local Traffic Manager (LTM) and Global Traffic Manager (GTM) are part of F5’s industry-leading BIG-IP Application Delivery Solutions.

BIG-IP Local Traffic Manager provides intelligent traffic management for rapid application deployment, optimization, load balancing, and offloading. LTM increases operational efficiency and ensures peak network performance by providing a flexible, high-performance application delivery system. With its application-centric perspective, BIG-IP LTM optimizes your network infrastructure to deliver availability, security, and performance for critical business applications.

BIG-IP Global Traffic Manager is a global load balancing solution that improves access to applications by securing and accelerating Domain Name resolution. Using high-performance DNS services, BIG-IP GTM scales and secures your DNS infrastructure during high query volumes and DDoS attacks. It delivers a complete, real-time DNSSEC solution that protects against hijacking attacks. BIG-IP GTM improves the performance and availability of your applications by intelligently directing users to the closest or best-performing physical, virtual, or cloud environment. In addition, it enables mitigation of complex threats from malware and viruses by blocking access to malicious IP domains.

About This Document

This document is the result of a joint effort by Cisco and F5 to detail best-practice design and configuration for deploying BIG-IP Local Traffic Manager with Cisco Identity Services Engine. It describes a validated solution that has undergone thorough design review and lab testing by both Cisco and F5. The document is intended as a deployment aid for customers and support personnel alike, to help ensure a successful deployment when integrating these vendor solutions.

Many features may exist that could benefit your deployment, but if they are not part of the tested solution they may not be included in this document. Other configurations are possible and may be working successfully in your specific deployment, but may not be covered in this guide due to insufficient testing or confidence for a stable deployment. Additionally, many features and scenarios have been tested, but are not considered a best practice, and therefore may not be included in this document (Example: Transparent mode load balancing).

Note: Within this document, we describe the recommended method of deployment, and a few different options depending on the level of security and flexibility needed in your environment. These methods are illustrated by examples and include step-by-step instructions for deploying an F5 BIG-IP LTM-Cisco ISE deployment as prescribed by best practices to ensure a successful project deployment.

Scenario Overview

Topology

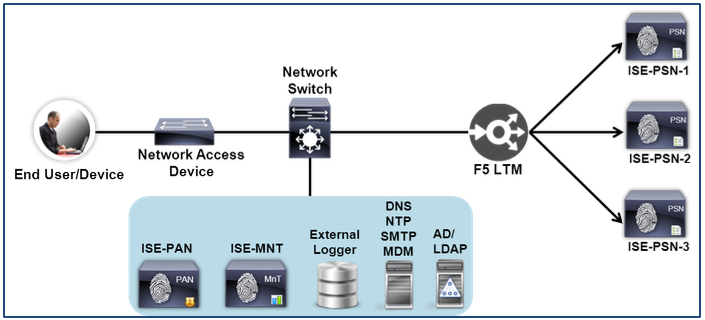

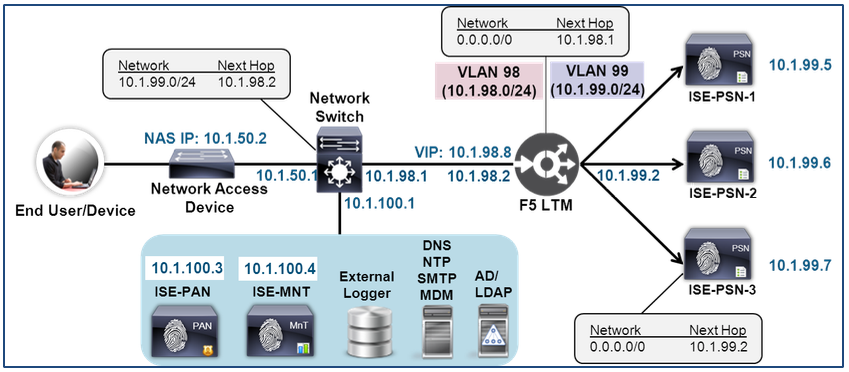

The figure depicts a basic end-to-end Cisco ISE deployment integrated with an F5 BIG-IP load balancer. It includes key components of the deployment even though they may not be directly involved in the load-balancing process. These components include other ISE nodes, such as the Policy Administration Node (PAN) and the Monitoring and Troubleshooting Node (MnT). They also include supporting servers and services such as Microsoft Active Directory (AD), Lightweight Directory Access Protocol (LDAP), Domain Name System (DNS), Network Time Protocol (NTP), Simple Mail Transfer Protocol (SMTP), and external syslog servers.

This document focuses on the load balancing of the following ISE Policy Service Node (PSN) services:

- RADIUS Authentication, Authorization, and Accounting (AAA) requests from network access devices (NADs) as well as RADIUS Change of Authorization (CoA) from ISE PSNs to NADs.

- Profiling data sent by NADs and other network infrastructure and security devices.

- Web services for Sponsors (ISE Guest Services) and My Devices (ISE Device Registration Services)

Figure 1: Topology Overview

For simplicity, the sample topology shows three ISE PSNs, although a load-balanced group of PSNs can consist of two or more appliances.

Components

The table lists the supported hardware and the software versions tested in this guide. Other platforms and versions may also work but can be subject to specific limitations. Platform- and version-specific caveats and issues are noted in the guide where known.

Table 1: F5 and Cisco Components

| Component | Supported Hardware Platforms | Recommended Software Releases |
|---|---|---|
| F5 BIG-IP Local Traffic Manager | Hardware or Virtual Appliance – Details required | F5 BIG-IP LTM 11.4.0 hotfix HF6; F5 BIG-IP LTM 11.4.1 hotfix HF5 |
| Cisco Identity Services Engine (ISE) | Any supported appliance: SNS-3595, SNS-36x5, VMware | Cisco ISE 3.1 |
| Cisco Catalyst Series Switch | Refer to the Cisco Identity Services Engine Network Component Compatibility Guide for the current list of supported Cisco switch platforms. | Refer to the same guide for recommended software versions for ISE. |
| Cisco Wireless LAN Controller (WLC); Wireless Services Module (WiSM) | Refer to the Cisco Identity Services Engine Network Component Compatibility Guide for the current list of supported Cisco wireless platforms. | Refer to the same guide for recommended software versions for ISE. |

The configurations in this guide should work with many switch and wireless platforms. Actual support depends on the capabilities of the network access device and on its support for specific RADIUS features and attributes.

Note: For F5 BIG-IP LTM, the minimum recommended software release is 11.4.1 hotfix HF5 or 11.4.0 hotfix HF6. Additionally, 11.6.0 HF2 incorporates performance enhancements that can improve RADIUS load balancing performance.

Topology and Traffic Flow

Deployment Model

There are many ways to insert the F5 BIG-IP LTM load balancer (LB) into the traffic flow for ISE PSN services. The actual traffic flow will depend on the service being load balanced and the configuration of the core components including the NAD, F5 BIG-IP LTM, ISE PSNs, and the connecting infrastructure. The method that has been most tested and validated in successful customer deployments is a fully inline deployment.

In a fully inline deployment, the F5 BIG-IP LTM is either physically or logically inline for all traffic between endpoints/access devices and the PSNs. This includes RADIUS, direct and URL-redirected web services, profiling data, and other communications to supporting services.

Physically Inline Traffic Flow

The figure below depicts the “physically inline” scenario. The F5 BIG-IP LTM uses different physical adapters for the internal and external interfaces to separate the PSNs from the rest of the network; all traffic to/from the PSNs must pass through the load balancer on different physical interfaces.

Figure 2: Physically Inline Traffic Flow

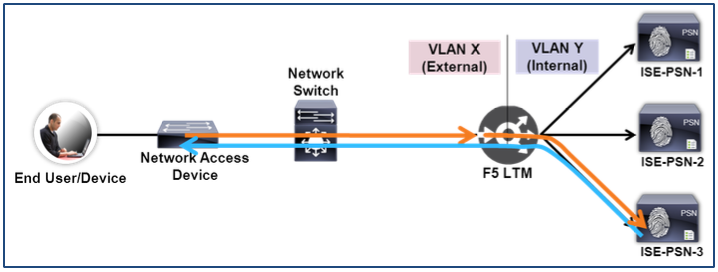

Logically Inline Traffic Flow

The figure below depicts the “logically inline” or “on-a-stick” deployment scenario. Like the physically inline case, the PSNs are on a separate network from the rest of the network and all traffic to/from the PSNs must pass through the load balancer. The difference is that only a single physical adapter is configured with VLAN trunking; network separation for the PSNs is provided using logical internal and external interfaces.

Figure 3: Logically Inline Traffic Flow

In both cases, routes must be configured to point to the F5 external interface to reach the PSNs on the internal interface. Additionally, the PSNs must have their default gateway set to the F5 internal interface.
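The Python sketch below illustrates this routing requirement by modeling the two route tables as longest-prefix-match lookups. It is a hypothetical model, not device configuration. The route entries are assumptions derived from the sample addressing in Table 2, and `next_hop` is an illustrative helper. The model shows two things: RADIUS from the NAD reaches the PSN subnet through the F5 external self IP, and PSN replies return through the F5 internal self IP rather than bypassing the load balancer.

```python
import ipaddress

# Hypothetical route tables based on the sample addressing (Table 2).
# Each entry is (destination prefix, next hop or "connected").
ROUTER_ROUTES = [
    ("10.1.50.0/24", "connected"),   # NAD-facing VLAN 50
    ("10.1.98.0/24", "connected"),   # F5-facing VLAN 98
    ("10.1.100.0/24", "connected"),  # server-facing VLAN 100
    ("10.1.99.0/24", "10.1.98.2"),   # PSN subnet via the F5 external self IP
]
PSN_ROUTES = [
    ("10.1.99.0/24", "connected"),
    ("0.0.0.0/0", "10.1.99.2"),      # default gateway = F5 internal self IP
]


def next_hop(routes, destination):
    """Return the next hop of the longest matching prefix, or None if no route matches."""
    dst = ipaddress.ip_address(destination)
    best = None
    for prefix, hop in routes:
        net = ipaddress.ip_network(prefix)
        if dst in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None


# RADIUS from the NAD (10.1.50.2) to ISE-PSN-1 (10.1.99.5) is sent to the F5...
print(next_hop(ROUTER_ROUTES, "10.1.99.5"))  # 10.1.98.2
# ...and ISE-PSN-1's reply to the NAD goes back through the F5 internal interface.
print(next_hop(PSN_ROUTES, "10.1.50.2"))     # 10.1.99.2
```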

Both inline deployment models are valid, and the choice is primarily a matter of customer preference. Some customers prefer physical separation and the more intuitive traffic paths of separate network adapters. Others opt for the simplicity of a single interface connection.

Note: For very high traffic volumes, the single trunked connection used in the logically inline model incurs higher per-interface utilization, because traffic to and from the PSNs crosses the same physical link on both VLANs. Separate physical interfaces can be used to increase interface bandwidth capacity.
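A back-of-the-envelope model makes this note concrete. It rests on several assumptions: links are full duplex, all client-to-PSN traffic crosses the F5 exactly once in each direction, and no other traffic is present. `per_interface_load` is a hypothetical helper, and the interface names 1.1 and 1.2 follow the VLAN examples in this guide. On a single trunk, every request is received on VLAN 98 and re-sent on VLAN 99 over the same link, and responses take the reverse path. Each direction of that link therefore carries requests plus responses.

```python
def per_interface_load(request_mbps, response_mbps, single_trunk):
    """Return {interface: (rx_mbps, tx_mbps)} under the simplified model above."""
    if single_trunk:
        # Interface 1.1 carries both VLAN 98 and VLAN 99: requests arrive on 98
        # and leave on 99, while responses arrive on 99 and leave on 98.
        total = request_mbps + response_mbps
        return {"1.1": (total, total)}
    # Physically inline: 1.2 (external) receives requests and sends responses;
    # 1.1 (internal) receives responses and sends requests.
    return {
        "1.2": (request_mbps, response_mbps),
        "1.1": (response_mbps, request_mbps),
    }


print(per_interface_load(300, 200, single_trunk=False))
# {'1.2': (300, 200), '1.1': (200, 300)}
print(per_interface_load(300, 200, single_trunk=True))
# {'1.1': (500, 500)}
```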

Topology and Network Addressing

The sample topology below will be used to illustrate the load-balancing configuration of ISE PSN services using an F5 BIG-IP appliance. The diagram includes the network-addressing scheme used in the detailed configuration steps. Notice in the following illustration that the F5 BIG-IP LTM is deployed fully inline between the ISE PSNs and the rest of the network.

Figure 4: Sample Topology Network Addressing Scheme

Table 2: Network Addressing Scheme

| Device | Interface | VLAN | Subnet | IP Address | Routing (DFG = default gateway) |
|---|---|---|---|---|---|
| ISE-PAN | GE0 | 100 | 10.1.100.0/24 | 10.1.100.3 | DFG: 10.1.100.1 |
| ISE-MNT | GE0 | 100 | 10.1.100.0/24 | 10.1.100.4 | DFG: 10.1.100.1 |
| ISE-PSN-1 | GE0 | 99 | 10.1.99.0/24 | 10.1.99.5 | DFG: 10.1.99.2 |
| ISE-PSN-2 | GE0 | 99 | 10.1.99.0/24 | 10.1.99.6 | DFG: 10.1.99.2 |
| ISE-PSN-3 | GE0 | 99 | 10.1.99.0/24 | 10.1.99.7 | DFG: 10.1.99.2 |
| F5-BIG-IP | Internal | 99 | 10.1.99.0/24 | 10.1.99.2 | |
| F5-BIG-IP | External | 98 | 10.1.98.0/24 | 10.1.98.2 | DFG: 10.1.98.1 |
| Network Switch/Router | F5-Facing | 98 | 10.1.98.0/24 | 10.1.98.1 | 10.1.99.0/24: Next Hop 10.1.98.2 |
| Network Switch/Router | NAD-Facing | 50 | 10.1.50.0/24 | 10.1.50.1 | |
| Network Switch/Router | Server-Facing | 100 | 10.1.100.0/24 | 10.1.100.1 | |
| Network Access Device | RADIUS Source | 50 | 10.1.50.0/24 | 10.1.50.2 | DFG: 10.1.50.1 |

Best Practice: A well-documented topology with network addressing and routing information is critical to a successful ISE deployment using load balancers. One of the most common causes of load-balancing problems is failure to understand the path traffic takes through or around the load balancer. Document the ingress and egress interfaces/VLANs for all devices. Also document the route next hops and the source/destination IP addresses and port numbers for all traffic in the end-to-end flow.
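One way to apply this best practice is to keep the addressing plan in a machine-checkable form. The sketch below is a hypothetical example. `PLAN` transcribes Table 2, and `check_plan` is an illustrative helper that flags three kinds of problem:

- interfaces whose subnet disagrees with their VLAN
- gateways that are not on-link
- PSNs whose default gateway is not the F5 internal self IP (10.1.99.2), which would let return traffic bypass the load balancer

```python
import ipaddress

# Rows from Table 2: (device, interface, VLAN, address/prefix, default gateway or None)
PLAN = [
    ("ISE-PAN", "GE0", 100, "10.1.100.3/24", "10.1.100.1"),
    ("ISE-MNT", "GE0", 100, "10.1.100.4/24", "10.1.100.1"),
    ("ISE-PSN-1", "GE0", 99, "10.1.99.5/24", "10.1.99.2"),
    ("ISE-PSN-2", "GE0", 99, "10.1.99.6/24", "10.1.99.2"),
    ("ISE-PSN-3", "GE0", 99, "10.1.99.7/24", "10.1.99.2"),
    ("F5-BIG-IP", "Internal", 99, "10.1.99.2/24", None),
    ("F5-BIG-IP", "External", 98, "10.1.98.2/24", "10.1.98.1"),
    ("Network Switch/Router", "F5-Facing", 98, "10.1.98.1/24", None),
    ("Network Switch/Router", "NAD-Facing", 50, "10.1.50.1/24", None),
    ("Network Switch/Router", "Server-Facing", 100, "10.1.100.1/24", None),
    ("Network Access Device", "RADIUS Source", 50, "10.1.50.2/24", "10.1.50.1"),
]

F5_INTERNAL_SELF_IP = "10.1.99.2"


def check_plan(plan):
    """Return a list of human-readable problems found in the addressing plan."""
    problems = []
    vlan_subnets = {}
    for device, ifname, vlan, cidr, gateway in plan:
        iface = ipaddress.ip_interface(cidr)
        # All interfaces on one VLAN should share that VLAN's subnet.
        subnet = vlan_subnets.setdefault(vlan, iface.network)
        if iface.network != subnet:
            problems.append(f"{device} {ifname}: {iface.network} is not VLAN {vlan}'s {subnet}")
        # A default gateway must be on the interface's own subnet.
        if gateway and ipaddress.ip_address(gateway) not in iface.network:
            problems.append(f"{device} {ifname}: gateway {gateway} is off-link")
        # Inline model: PSNs must route through the F5 internal self IP.
        if device.startswith("ISE-PSN") and gateway != F5_INTERNAL_SELF_IP:
            problems.append(f"{device}: gateway {gateway} bypasses the F5")
    return problems


print(check_plan(PLAN))  # []
```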

Configuration Prerequisites

F5 Configuration Prerequisites

This section covers items that are assumed to be configured before the primary load-balancing configuration. These include:

- Validate IP addressing for Internal and External interfaces

- Validate correct VLAN assignments

- Verify routes are properly configured to forward traffic

- Optional: Verify LTM High Availability

Verify Basic F5 Network Interfaces Assignments, VLANs, IP Addressing, and Routing

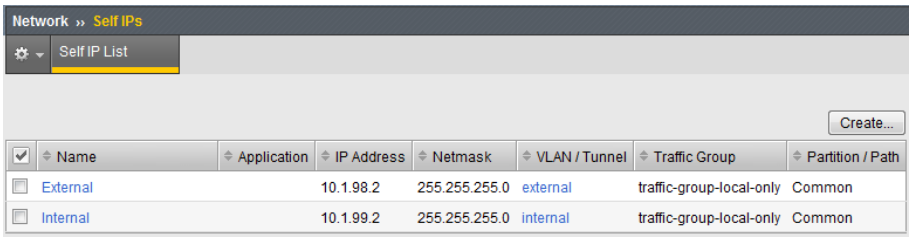

Verify Self IP address and interface settings

- From the F5 web-based admin interface, navigate to Main > Network > Self IPs and check the IP address and interface assignments as shown:

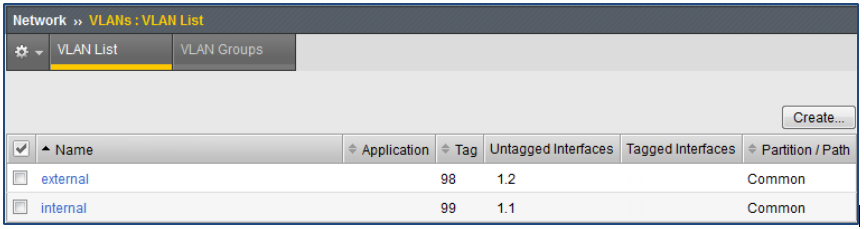

- Navigate to Main > Network > VLANs > VLAN List and check interfaces for correct VLAN assignment, tagging and physical interface mapping as shown:

In the above example, the first available interface, 1.1, is mapped to internal and associated with VLAN 99. Interface 1.2 is mapped to external and associated with VLAN 98. Since both interfaces are dedicated network connections (no trunking), each is configured as “Untagged”.

The diagram below shows an example of how to configure a single trunked interface for virtual network separation. Only one interface (1.1) is used, and separate VLANs are assigned using tagged interfaces. The connecting switch must be configured for 802.1Q trunking for the specified VLANs (example: 98 and 99).

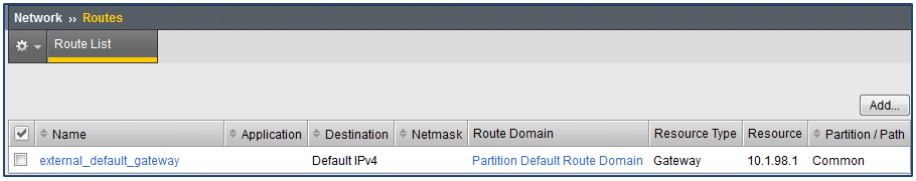

- Navigate to Main > Network > Routes and confirm that a default route exists for the upstream Layer 3 gateway.

In the example, the default gateway for the F5 appliance is the upstream network switch at 10.1.98.1 off the external interface.
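With the single trunked interface described above, the connecting switch and the F5 exchange IEEE 802.1Q tagged frames. Dedicated untagged interfaces carry ordinary Ethernet frames instead. The minimal Python sketch below shows the 4-byte tag, TPID 0x8100 followed by the priority bits and a 12-bit VLAN ID, inserted after the source MAC address. `tag_frame` and `vlan_of` are illustrative helpers only. The sketch works on frames without the trailing frame check sequence, which real hardware recomputes.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def tag_frame(frame: bytes, vlan_id: int, priority: int = 0) -> bytes:
    """Insert an 802.1Q tag after the destination and source MAC addresses."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be between 0 and 7")
    tci = (priority << 13) | vlan_id  # PCP (3 bits), DEI (1 bit, 0), VID (12 bits)
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def vlan_of(frame: bytes):
    """Return the VLAN ID of a tagged frame, or None if the frame is untagged."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    return tci & 0x0FFF if tpid == TPID_8021Q else None


# An untagged IPv4 frame: zeroed MACs, EtherType 0x0800, short payload.
untagged = bytes(12) + b"\x08\x00" + b"payload"
print(vlan_of(untagged))                 # None
print(vlan_of(tag_frame(untagged, 98)))  # 98 (external VLAN in this guide)
```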

Note: For simplicity, the example configuration uses the Common partition for load-balanced virtual servers and services. Whether a separate partition is needed for the ISE load-balancing configuration is at the customer's discretion.

Optional: Verify LTM High Availability

F5 BIG-IP LTM may be configured in Active-Standby and Active-Active high availability modes to prevent single points of failure with the load balancing appliance. Configuration of LTM high availability is beyond the scope of this guide. For additional details on Active-Standby configuration, refer to F5 product documentation on Creating an Active-Standby Configuration Using the Setup Utility. For additional details on Active-Active configuration, refer to Creating an Active-Active Configuration Using the Setup Utility.

When configured for high availability, default gateways and next hop routes will point to the floating IP address on the F5 appliance, but health monitors will be sourced from the locally-assigned IP addresses.

ISE Configuration Prerequisites

This section covers items that are assumed to be configured before the primary load-balancing configuration. These include:

- Node groups configured for any LB cluster

- Adding the BIG-IP LTM(s) as a NAD for RADIUS health monitoring

- DNS properly configured

- Certificates properly installed

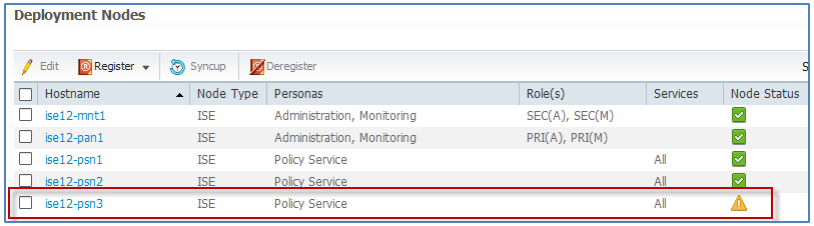

Configure Node Groups for Policy Service Nodes in a Load-Balanced Cluster

When multiple Policy Service nodes are connected through high-speed LAN connections, it is a general best practice to add them to the same ISE Node Group. ISE Node Groups optimize the replication of endpoint profiling data amongst PSNs and also offer recovery of Posture Pending sessions in the event of a node failure. Although node groups do not require members to be part of a load-balanced group, it makes sense that if multiple PSNs are part of a locally load-balanced server group, they most likely satisfy requirements for node group membership.

Define a Node Group

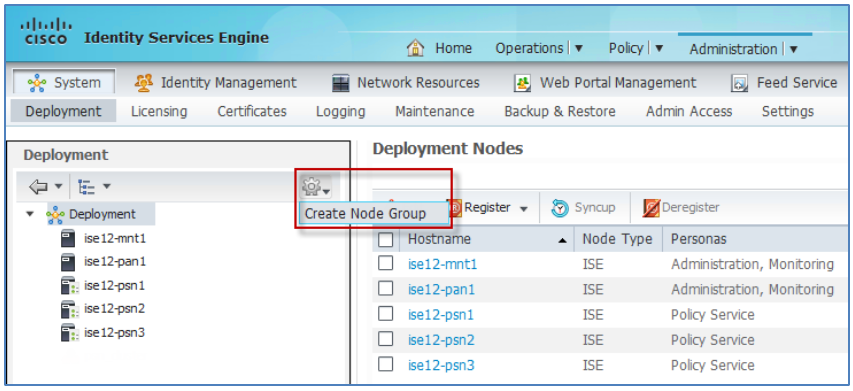

- From the ISE admin interface, navigate to Administration > System > Deployment. From the left panel, click the gear icon in the upper right corner as shown to display the Create Node Group option:

- Click Create Node Group, complete the form, and click Submit when finished. The figure below shows an example node group configuration.

- Verify the node group now appears in the list of nodes in the left panel.

Add Load-Balanced PSNs to the Node Group

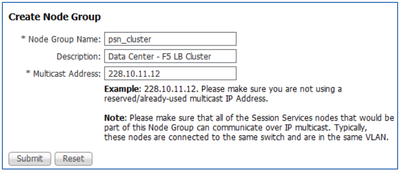

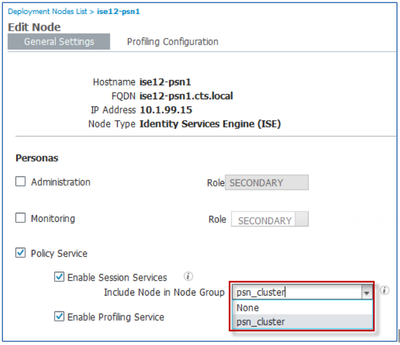

Add all PSNs that are part of the same local load-balanced server farm to the same node group. If there are multiple load-balanced PSN server groups, such as in separate data centers, add each group to its own node group. A key criterion for node group membership is LAN proximity.

- Add a PSN to a node group by clicking the name of the PSN from either the left or right panel:

- Under the Policy Services section, click the drop down next to Include Node in Node Group box and select the name of the node group created for the load-balanced PSNs. Click Save to commit the changes:

- Repeat the steps for each PSN to be added to the node group.

- When complete, all selected PSNs should appear under the node group:

Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring

When load balancing services to one of many candidate servers, it is critical to ensure the health of each server before forwarding requests to that server. In the case of RADIUS, the F5 BIG-IP LTM includes a health monitor to periodically verify that the RADIUS service is active and correctly responding. It performs this check by simulating a RADIUS client and sending authentication requests to each PSN (the RADIUS Server) with a username and password. Based on the response or lack of response, the BIG-IP LTM can determine the current status of the RADIUS auth service. Therefore, ISE must be configured to accept these requests from the BIG-IP LTM. This section covers the steps to add the BIG-IP LTM as a Network Device (RADIUS client) to the ISE deployment.

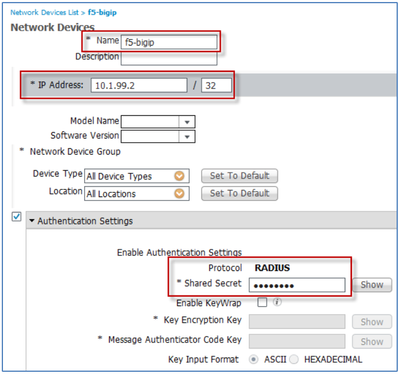

Configure BIG-IP LTM as a Network Device in ISE

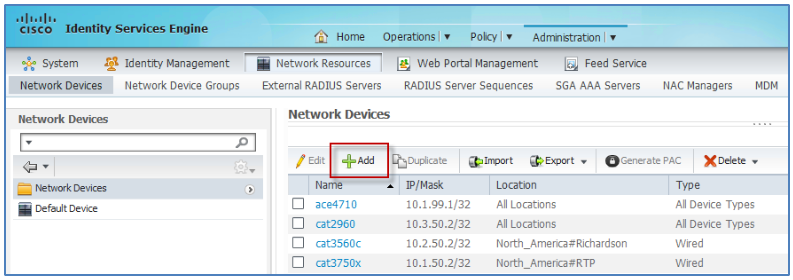

- From the ISE admin interface, navigate to Administration > Network Resources > Network Devices and click Add from the right panel menu.

- Complete the form and click Submit when finished.

Key fields that need to be completed include the following:

- Enter a name (such as the hostname) of the F5 BIG-IP LTM.

- Enter the forwarding IP address (Self IP) of the BIG-IP LTM’s Internal interface. This is the source IP address of RADIUS requests as seen by the ISE PSN.

- Click the checkbox for the Authentication Settings section and enter a shared secret. This is the password used to secure RADIUS communications between the BIG-IP LTM and the ISE PSN.

- Write down and save the shared secret in a safe place, because it will be needed later for the F5 Monitor configuration.

Optional: Define an Internal User for F5 RADIUS Health Monitor

F5 BIG-IP LTM can treat a failed authentication (RADIUS Access-Reject) as a valid response to the RADIUS health monitor. The fact that ISE responds at all indicates that the service is running. If the monitor deliberately sends incorrect user credentials, an Access-Reject is the expected response, and the server is treated as healthy.
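The Python sketch below shows roughly what a RADIUS PAP health probe sends and how a reply can be classified. It is an illustration under stated assumptions, not the F5 monitor implementation. The helper names, the identifier, and the example credentials are hypothetical, and optional attributes such as NAS-IP-Address and Message-Authenticator are omitted. The username, password, and shared secret correspond to the values created in the ISE configuration steps. Password hiding and the Response Authenticator check follow RFC 2865. With `reject_is_up=True`, a correctly signed Access-Reject still marks the PSN up, which mirrors the behavior described above.

```python
import hashlib
import hmac
import os
import struct

# RADIUS codes and attribute types from RFC 2865.
ACCESS_REQUEST, ACCESS_ACCEPT, ACCESS_REJECT = 1, 2, 3
ATTR_USER_NAME, ATTR_USER_PASSWORD = 1, 2


def _attr(attr_type: int, value: bytes) -> bytes:
    """Encode one attribute as Type, Length, Value."""
    return struct.pack("!BB", attr_type, len(value) + 2) + value


def hide_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """Hide User-Password per RFC 2865 section 5.2 (chained MD5 XOR)."""
    size = max(16, -(-len(password) // 16) * 16)  # pad to a multiple of 16 bytes
    padded = password.ljust(size, b"\x00")
    hidden, prev = b"", authenticator
    for i in range(0, size, 16):
        key = hashlib.md5(secret + prev).digest()
        block = bytes(p ^ k for p, k in zip(padded[i:i + 16], key))
        hidden += block
        prev = block  # the next key is derived from this ciphertext block
    return hidden


def build_access_request(identifier: int, username: bytes, password: bytes, secret: bytes):
    """Return (packet, request_authenticator) for a PAP Access-Request probe."""
    authenticator = os.urandom(16)
    attrs = _attr(ATTR_USER_NAME, username) + _attr(
        ATTR_USER_PASSWORD, hide_password(password, secret, authenticator))
    header = struct.pack("!BBH", ACCESS_REQUEST, identifier, 20 + len(attrs))
    return header + authenticator + attrs, authenticator


def probe_marks_up(response: bytes, identifier: int, request_auth: bytes,
                   secret: bytes, reject_is_up: bool = True) -> bool:
    """Decide whether a reply to the probe should mark the PSN up."""
    if len(response) < 20:
        return False
    code, ident, length = struct.unpack("!BBH", response[:4])
    if ident != identifier or not 20 <= length <= len(response):
        return False
    # Response Authenticator = MD5(Code+ID+Length+RequestAuth+Attributes+Secret)
    expected = hashlib.md5(response[:4] + request_auth
                           + response[20:length] + secret).digest()
    if not hmac.compare_digest(expected, response[4:20]):
        return False  # wrong shared secret or forged reply
    if code == ACCESS_ACCEPT:
        return True
    return reject_is_up and code == ACCESS_REJECT


# Hypothetical values; use the shared secret and monitor account configured in ISE.
packet, req_auth = build_access_request(7, b"f5-probe", b"ExamplePass", b"ExampleSecret")
```

In this sketch, a reply signed with a different shared secret fails the authenticator check and is treated as a failed probe.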

If it is desired to have F5 send valid credentials and receive a successful authentication response (RADIUS Access-Accept), then it is necessary to configure the appropriate Identity Store—either internal or external to ISE—with the username and password sent by the BIG-IP LTM.

The procedure shows an example of creating an ISE Internal User account for this purpose.

Note: See the RADIUS Health Monitoring section of this guide for a detailed discussion of RADIUS monitor considerations and F5 configuration. This procedure has been included here to streamline the ISE configuration steps that support RADIUS monitoring.

- From the ISE admin interface, navigate to Administration > Identity Management > Identities > Users and click Add from the right panel menu.

- Complete the form and click Submit when finished. Required information includes Name and Password:

- Write down and save the user credentials in a safe place, as they will be needed later for the F5 Monitor configuration.

Note: Be sure to include the identity store used to validate F5 RADIUS credentials in the Authentication Policy rule that authenticates the F5 monitor. In this example, the Internal Users identity store must be included as the ID store for matching load balancer requests.

Best Practice: Make sure the F5 monitor account cannot be used for other purposes that might grant unauthorized access. Lock down the ability to authenticate with this account and restrict the access it is granted. As a health probe, it needs no real network access. For example, create an ISE authorization policy rule with two match conditions: the F5 IP address (or parent ISE Network Device Group) and the specific F5 test username. Have the rule return a policy that denies network access, such as ACL=deny ip any any.
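The lockdown described in this best practice can be modeled as an ordered rule list. The Python below is a hypothetical model, not ISE configuration syntax, and it assumes top-down, first-match evaluation. The monitor username `f5-probe` is invented for illustration. `F5_SELF_IPS` should list each address the monitors are actually sourced from; in an HA pair, that means the locally assigned self IPs rather than the floating address. The monitor account is accepted with a deny-all ACL only when the request arrives from the F5, and it is rejected from anywhere else.

```python
import ipaddress
from dataclasses import dataclass
from typing import Callable

F5_SELF_IPS = {ipaddress.ip_address("10.1.99.2")}  # assumed F5 monitor source address(es)
MONITOR_USER = "f5-probe"                          # hypothetical monitor username


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]
    result: str


RULES = [
    # Monitor requests from the F5 authenticate but receive no real access.
    Rule("F5 Monitor",
         lambda r: r["username"] == MONITOR_USER
         and ipaddress.ip_address(r["nas_ip"]) in F5_SELF_IPS,
         "Access-Accept with ACL=deny ip any any"),
    # The same account presented by any other device is rejected outright.
    Rule("Block Monitor Account Elsewhere",
         lambda r: r["username"] == MONITOR_USER,
         "DenyAccess"),
    Rule("Employees", lambda r: r.get("group") == "Employees", "PermitAccess"),
]


def authorize(request: dict, rules=RULES, default: str = "DenyAccess"):
    """Return (rule name, result) for the first matching rule, evaluated top-down."""
    for rule in rules:
        if rule.matches(request):
            return rule.name, rule.result
    return "Default", default


print(authorize({"nas_ip": "10.1.99.2", "username": "f5-probe"}))
print(authorize({"nas_ip": "10.1.50.2", "username": "f5-probe", "group": "Employees"}))
```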

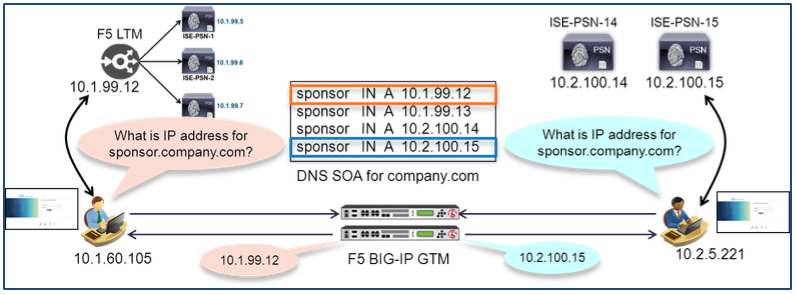

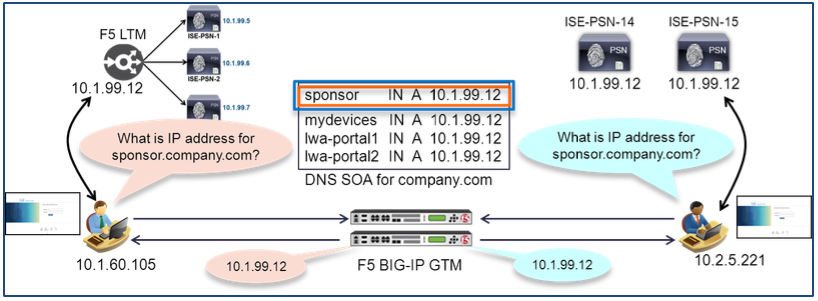

Configure DNS to Support PSN Load Balancing

DNS plays an important role in load balancing ISE web portal services such as the Sponsor Portal and My Devices Portal. Each of these portals can run on every PSN. To provide high availability and scaling for these portals, F5 can load balance requests across multiple PSNs behind a single fully qualified domain name (FQDN). End users can then be given a simple, intuitive server name, such as sponsor.company.com or guest.company.com, that resolves to an F5 Virtual Server IP address. The first step is to create entries in the organization’s DNS service for these load-balanced portals.

Configure DNS Entries for Sponsor and My Devices Portals

- If ISE Guest Services or My Devices are deployed and will be load balanced, add entries similar to the following in DNS.

DNS SERVER: DOMAIN = COMPANY.COM

SPONSOR IN A 10.1.98.8

MYDEVICES IN A 10.1.98.8

ISE-PAN-1 IN A 10.1.100.3

ISE-PAN-2 IN A 10.1.101.3

ISE-MNT-1 IN A 10.1.100.4

ISE-MNT-2 IN A 10.1.101.4

ISE-PSN-1 IN A 10.1.99.5

ISE-PSN-2 IN A 10.1.99.6

ISE-PSN-3 IN A 10.1.99.7
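The DNS entries above can be sanity-checked before testing the portals. The Python sketch below assumes the records are kept in the simple `NAME IN A ADDRESS` form shown. `parse_a_records` and `check_records` are hypothetical helpers. They confirm that the load-balanced portal names resolve to the F5 virtual server IP (10.1.98.8, on the external VLAN 98 subnet) and that PSN records fall within the PSN subnet behind the F5.

```python
import ipaddress

# The COMPANY.COM A records listed above, one "NAME IN A ADDRESS" per line.
ZONE = """\
SPONSOR IN A 10.1.98.8
MYDEVICES IN A 10.1.98.8
ISE-PAN-1 IN A 10.1.100.3
ISE-PAN-2 IN A 10.1.101.3
ISE-MNT-1 IN A 10.1.100.4
ISE-MNT-2 IN A 10.1.101.4
ISE-PSN-1 IN A 10.1.99.5
ISE-PSN-2 IN A 10.1.99.6
ISE-PSN-3 IN A 10.1.99.7
"""

DOMAIN = "company.com"
VIP = ipaddress.ip_address("10.1.98.8")            # assumed F5 virtual server IP
PSN_SUBNET = ipaddress.ip_network("10.1.99.0/24")  # F5 internal VLAN 99
LB_NAMES = ("sponsor", "mydevices")                # load-balanced portal hostnames


def parse_a_records(text: str) -> dict:
    """Map lowercase FQDN -> IP address for each 'NAME IN A ADDRESS' line."""
    records = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 4 and fields[1:3] == ["IN", "A"]:
            records[f"{fields[0].lower()}.{DOMAIN}"] = ipaddress.ip_address(fields[3])
    return records


def check_records(records: dict) -> list:
    """Return problems: portal names not on the VIP, PSNs outside the PSN subnet."""
    problems = []
    for name in LB_NAMES:
        addr = records.get(f"{name}.{DOMAIN}")
        if addr != VIP:
            problems.append(f"{name}.{DOMAIN} resolves to {addr}, expected VIP {VIP}")
    for fqdn, addr in records.items():
        if fqdn.startswith("ise-psn") and addr not in PSN_SUBNET:
            problems.append(f"{fqdn} ({addr}) is outside {PSN_SUBNET}")
    return problems


print(check_records(parse_a_records(ZONE)))  # []
```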

Configure Certificates to Support PSN Load Balancing

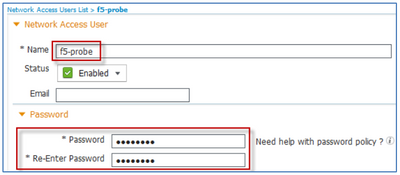

ISE Policy Service nodes use digital certificates to authenticate users via various Extensible Authentication Protocol (EAP) methods as well as to establish trust for secure web portals. When PSN load balancing is deployed, client supplicant requests may be directed to one of many PSNs for authentication and session establishment, or for web-based services including web authentication, device registration, guest and sponsor portals, and client provisioning. Therefore, it is critical that ISE nodes have certificates that will be trusted by the clients regardless of the PSN servicing the request.

To this end, clients will need to explicitly trust every PSN certificate presented or else trust the Certificate Authority (CA) chain that signed the PSN certificate. Additionally, for secure web requests, the client browser typically requires that the identity listed in the certificate matches the name of the requested server. Otherwise, the user may be warned of a name mismatch and manually accept the risk if security policy allows this exception.

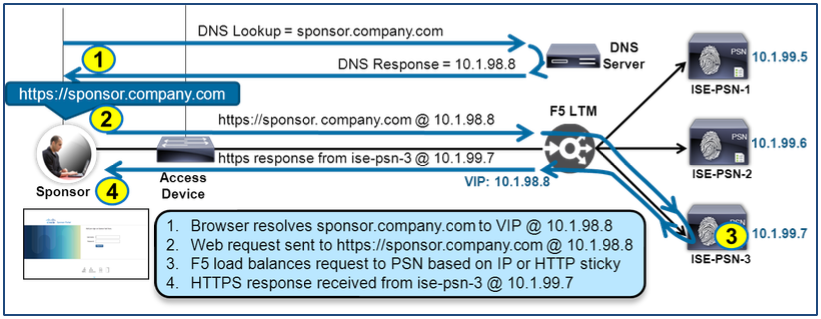

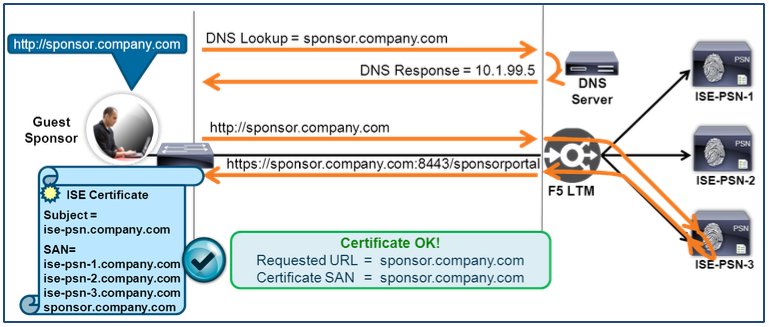

To illustrate this point, consider the example below that depicts load balancing for the ISE Sponsor Portal, a secure web service.

Figure 20: PSN Load Balancing Certificate Mismatch Example

The employee is given the URL https://sponsor.company.com for creating new guest accounts. When the URL is entered into the employee’s browser, DNS resolves the FQDN to the Virtual Server IP on the BIG-IP LTM. The web request is directed to ISE-PSN-3, and the PSN presents its HTTPS certificate to the employee’s browser. The server certificate contains the PSN’s own identity, which differs from the name the employee attempted to access. The browser therefore displays a certificate warning about the discrepancy.

To avoid certificate failures and warnings, it is important to configure the ISE PSN nodes with certificates that will be trusted. Customers can pre-provision clients with the individual certificates needed for trust based on the service, but this is often management intensive. An alternative is to deploy a PSN server certificate that is universal; in other words, a certificate that can be deployed to multiple PSNs and be trusted by each client for EAP or HTTPS access.

The diagram below shows the same scenario with the PSNs sharing a single server certificate. That certificate lists the FQDNs of all required servers and services in its Subject Alternative Name (SAN) field.

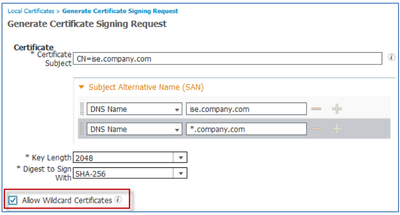

The certificate shown in the diagram is an example of a Unified Communications Certificate (UCC), or multi-SAN certificate. This type of “universal” certificate can be deployed to each PSN in the cluster and contains the FQDN of each node. However, it must be reissued whenever new nodes or services are added. An alternative to a UCC is a wildcard certificate. Traditionally, wildcard certificates have a Subject CN value that uses an asterisk (*) followed by the company domain/subdomain name, as in *.company.com. Some SSL providers offer the option of placing this wildcard domain name in the SAN field and a static entry such as ise.company.com in the Subject CN. This combination has been found to offer the most flexibility and compatibility across different client operating systems and use cases.
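The sketch below shows why the "wildcard SAN" combination covers the portal names. It applies a simplified version of the RFC 6125 matching rules, in which a `*` may replace exactly one whole left-most label. Under those rules, `*.company.com` matches `sponsor.company.com` but matches neither `company.com` nor `a.b.company.com`. The helper names are hypothetical, and real clients differ in the details. Partial-label wildcards such as `f*.company.com`, for example, are simply treated as non-matching here.

```python
def name_matches(hostname: str, pattern: str) -> bool:
    """Simplified RFC 6125-style match of a hostname against one certificate name."""
    host = hostname.lower().rstrip(".").split(".")
    pat = pattern.lower().rstrip(".").split(".")
    if len(host) != len(pat):
        return False  # a wildcard never spans more or fewer labels
    if pat[0] == "*":
        # "*" replaces exactly one whole left-most label; require at least
        # two fixed labels after it so that "*.com" is never accepted.
        return len(pat) >= 3 and host[0] != "" and host[1:] == pat[1:]
    return host == pat


def certificate_covers(hostname: str, san_dns_names) -> bool:
    """True if any SAN DNS name matches (many clients ignore the CN when SANs exist)."""
    return any(name_matches(hostname, name) for name in san_dns_names)


SANS = ["ise.company.com", "*.company.com"]
print(certificate_covers("sponsor.company.com", SANS))  # True
print(certificate_covers("company.com", SANS))          # False
```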

Note: Each customer should carefully evaluate the use of UCC or wildcard certificates for their appropriateness and applicability. There are many factors that need to be considered when choosing digital certificates for production use. This will often be a balance between corporate security policy, risk, cost, supportability, productivity, and end user experience.

Be sure that you include DNS entries for all FQDNs referenced in the server certificate. In the case of load-balanced FQDNs, these entries should resolve to the Virtual Server IP address on the F5 BIG-IP LTM.
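Following that guidance, the hypothetical helper below compares the SAN list of a planned certificate with the organization's A records. It assumes the example VIP of 10.1.98.8 and skips wildcard entries, which have no A record of their own. The data in the usage lines is illustrative only. It deliberately omits a record for the generic CN so the check has something to report.

```python
import ipaddress

VIP = ipaddress.ip_address("10.1.98.8")  # assumed F5 virtual server IP


def dns_gaps(san_names, a_records: dict, load_balanced: set) -> list:
    """Report SAN FQDNs with no A record, and load-balanced FQDNs not on the VIP."""
    problems = []
    for name in (n.lower() for n in san_names):
        if name.startswith("*."):
            continue  # a wildcard SAN has no A record of its own
        addr = a_records.get(name)
        if addr is None:
            problems.append(f"{name}: no DNS entry")
        elif name in load_balanced and ipaddress.ip_address(addr) != VIP:
            problems.append(f"{name}: resolves to {addr}, not VIP {VIP}")
    return problems


sans = ["ise.company.com", "sponsor.company.com", "mydevices.company.com",
        "ise-psn-1.company.com"]
records = {"sponsor.company.com": "10.1.98.8", "mydevices.company.com": "10.1.98.8",
           "ise-psn-1.company.com": "10.1.99.5"}
print(dns_gaps(sans, records, {"sponsor.company.com", "mydevices.company.com"}))
# ['ise.company.com: no DNS entry']
```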

The following procedures are intended to serve as a brief overview of the steps required to generate a Certificate Signing Request (CSR) for either a UCC or “Wildcard SAN” certificate. Although the use of self-signed certificates may be appropriate for lab and proof of concept testing, they are often not suitable for production use. Furthermore, it is common for customers to have ISE certificates signed by a public CA to avoid certificate trust warnings for non-employees while using a private CA to sign ISE and client certificates for client certificate provisioning and authentication using EAP-TLS.

ISE 1.2 supports one certificate for all HTTPS authentications to the ISE node and another certificate for all EAP authentications. ISE 1.3 will support unique HTTPS certificates per portal.

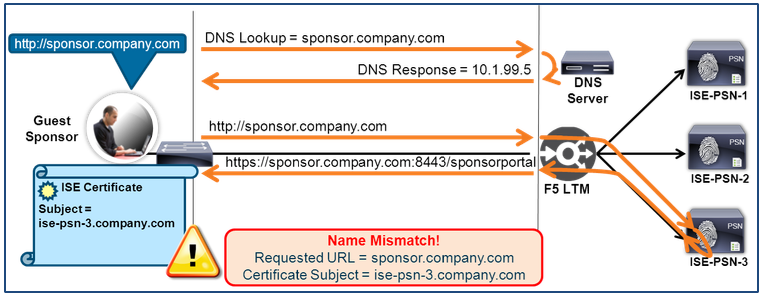

Generate a CSR for an ISE Server Certificate

- From the ISE admin interface, navigate to Administration > System > Certificates > Local Certificates and click Add in the right panel to generate a CSR for the ISE server certificate:

- Select Generate Certificate Signing Request from the drop-down menu:

- Complete the CSR form. To create a universal certificate for the ISE PSNs, you can enter a generic FQDN as the Subject CN using your specific domain (example: ise.company.com).

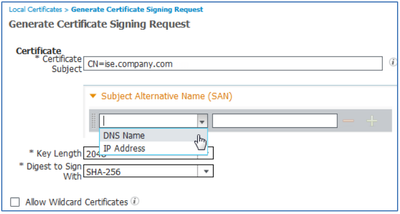

- Add SAN entries by expanding the Subject Alternative Name (SAN) field. Select DNS Name as the field type.

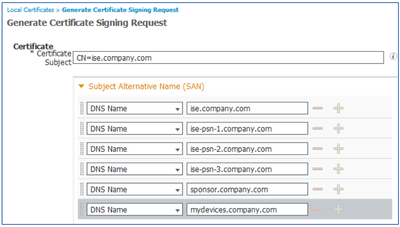

UCC Certificate: For a UCC certificate, add the same FQDN as the Subject CN to the SAN field. Use the + icon to the right of the new entry to create additional entries. Continue to add SAN entries for all PSN nodes and services such as the Sponsor Portal and My Devices Portal. The FQDN of each PSN node is included in this list, as shown in the example.

Depending on policy and certificate usage, the Administration and Monitoring node FQDNs may also be included in the SAN list.

Wildcard SAN Certificate: For a certificate with a wildcard in the SAN, start by adding the same FQDN as the Subject CN to the SAN field. Use the + icon to the right of the new entry to create an additional entry. Next, add a wildcard entry in the SAN field as shown in the example. Be sure to check the Allow Wildcard Certificates box.

- Set the Key Length and Digest to Sign With values per your security requirements and click Submit to complete the form.

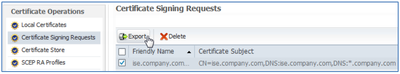

- Navigate to the Certificate Signing Request section in the left panel, select the newly created CSR, and click Export.

A private or public CA can now sign the exported CSR.

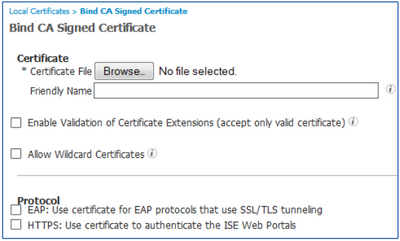

Note: Be sure to import the signing CA certificate or certificate chain into the Certificate Store on each ISE node that will use this certificate. For certificate chains, import each certificate in the chain individually rather than as a single file.

- To bind the signed certificate, select the Bind CA Signed Certificate option from the Add drop-down menu under Local Certificates (the same menu used to generate the CSR).

- Complete the form:

- Browse to the CA-signed certificate and upload it to the ISE node.

- Check the Enable Validation of Certificate Extensions checkbox if policy requires RFC conformance.

- Check the Allow Wildcard Certificates option as appropriate.

- Check the required protocols for certificate usage:

- EAP will select the certificate for use in all EAP authentications.

- HTTPS will select the certificate for use in all HTTPS communications including web portals and inter-node communications.

- Click Submit to install the certificate.

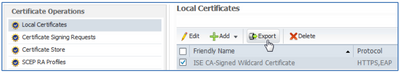

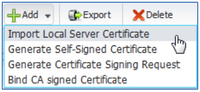

- To apply the same certificate to other nodes, select the new universal certificate under Local Certificates and click Export to export the certificate and private key. Be sure to secure the certificate and private key pair and password!

- Go to the admin interface of each of the other ISE nodes that will use this certificate, navigate to Administration > System > Certificates > Local Certificates, and select the Import Local Server Certificate option from the Add drop-down menu as shown.

- Complete the form as described above for binding the CA-signed certificate.

IP Forwarding for Non-LB traffic

In addition to specific PSN traffic that must be sent directly to the Virtual Server IP address(es) for load balancing, there are a number of flows that do not require load balancing and simply need to be forwarded by the inline F5 appliance. This traffic includes:

- Node communications between PSNs and each Admin and Monitoring node.

- All management traffic to/from the PSN real IP addresses such as HTTPS, SSH, SNMP, NTP, DNS, SMTP, and Syslog.

- Repository and file management access initiated from PSN including FTP, SCP, SFTP, TFTP, NFS, HTTP, and HTTPS.

- All external AAA-related traffic to/from the PSN real IP addresses such as AD, LDAP, RSA, external RADIUS servers (token or foreign proxy), and external CA communications (CRL downloads, OCSP checks, SCEP proxy).

- All service-related traffic to/from the PSN real IP addresses such as Posture and Profiler Feed Services, partner MDM integration, pxGrid, and REST/ERS API communications.

- Client traffic direct to PSN real IP addresses resulting from ISE Profiler (NMAP, SNMP queries) and URL-Redirection such as CWA, DRW/Hotspot, MDM, Posture, and Client Provisioning.

- RADIUS CoA from PSNs to network access devices.

As you can see, many flows are not subject to load balancing. To accommodate this traffic in a fully inline deployment, it is necessary to create a Virtual Server on the F5 appliance that serves as a catch-all for these traffic flows and performs IP forwarding.

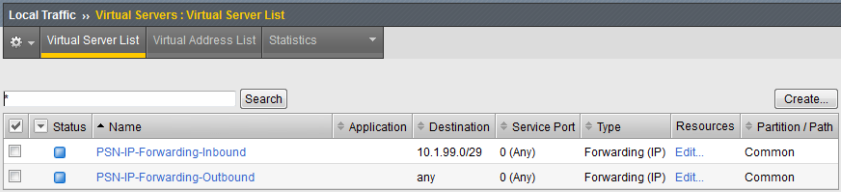

Follow the configuration settings in the following table to define two separate IP forwarding servers for inbound and outbound traffic.

Note: Although it is possible to create a single IP Forwarding Virtual Server for both inbound and outbound traffic, the recommended configuration outlined below establishes separate inbound and outbound virtual servers so that traffic can be limited to the PSN nodes connected to the Internal interface.

LTM Forwarding IP Configuration - Inbound

Virtual Server (Inbound) (Main tab > Local Traffic > Virtual Servers > Virtual Server List)

Note: Create two virtual servers for IP Forwarding—one for Inbound traffic (to PSNs) and one for Outbound traffic (from PSNs)

| BIG-IP LTM Object | Recommended Setting | Notes |
|---|---|---|
| Name | <IP Forwarding Server Name> (Example: PSN-IP-Forwarding-Inbound) | Type the name of the virtual server for IP forwarding of non-load-balanced traffic from external hosts to the PSNs. |
| Type | Forwarding (IP) | Forwarding (IP) allows traffic that does not require load balancing to be forwarded by F5 to the PSNs. |
| Source | <Source Network Address/Mask> (Example: 10.0.0.0/8) | Type the network address with bit mask for the external network addresses that need to communicate with the ISE PSNs. |
| Destination | Type: Network | |
| | Address: <Dst Network> (Example: 10.1.99.0) | Enter the PSN network address appropriate to your environment. |
| | Mask: <Dst Mask> (Example: 255.255.255.224) | Enter the PSN network mask appropriate to your environment. For added security, make the address range as restrictive as possible. |
| Service Port | * / * All Ports | Select the wildcard service port to match all ports by default. |
| Protocol | * All Protocols | Select the wildcard protocol to match all protocols by default. |
| VLAN and Tunnel Traffic | Enabled On… | Optional: Restrict inbound IP forwarding to specific VLANs. |
| VLANs and Tunnels | <External VLANs> (Example: External) | Select the ingress VLAN(s) used by external hosts to communicate with the PSNs. |

LTM Forwarding IP Configuration - Outbound

Virtual Server (Outbound) (Main tab > Local Traffic > Virtual Servers > Virtual Server List)

Note: Create two virtual servers for IP Forwarding—one for Inbound traffic (to PSNs) and one for Outbound traffic (from PSNs)

| BIG-IP LTM Object | Recommended Setting | Notes |
|---|---|---|
| Name | <IP Forwarding Server Name> (Example: PSN-IP-Forwarding-Outbound) | Type the name of the virtual server for IP forwarding of non-load-balanced traffic from the PSN servers to external hosts. |
| Type | Forwarding (IP) | Forwarding (IP) allows traffic that does not require load balancing to be forwarded by F5 from the PSNs. |
| Source | <Source Network Address/Mask Bits> (Example: 10.1.99.0/28) | Enter the PSN network address with bit mask. |
| Destination | Type: Network | |
| | Address: <Dst Network> (Example: 0.0.0.0) | Enter the destination network address appropriate to your environment. Make the destination as restrictive as possible without omitting hosts that need to communicate directly with the PSNs. |
| | Mask: <Dst Mask> (Example: 0.0.0.0) | Enter the destination network mask appropriate to your environment. |
| Service Port | * / * All Ports | Select the wildcard service port to match all ports by default. |
| Protocol | * All Protocols | Select the wildcard protocol to match all protocols by default. |
| VLAN and Tunnel Traffic | Enabled On… | Optional: Restrict outbound IP forwarding to a specific VLAN. |
| VLANs and Tunnels | <Internal VLAN> (Example: Internal) | Select the PSN server VLAN used to communicate with external hosts. |

Verify the two new Virtual Server IP Forwarding entries. If all protocols were allowed, then it should be possible to ping each of the PSN nodes from an external management network.
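When you check which forwarding virtual server will pick up a given flow, the address and VLAN matching from the two tables can be modeled roughly in Python with the standard `ipaddress` module. This is a simplified sketch that uses the example values from the tables. Because ports and protocols are wildcarded, only addresses and the ingress VLAN are compared. The model does not capture how BIG-IP chooses among overlapping virtual servers, such as the RADIUS load-balancing VIPs.

```python
import ipaddress

# Hypothetical model of the two Forwarding (IP) virtual servers, using the
# example settings from the tables above (not a recommendation).
FORWARDING_SERVERS = {
    "PSN-IP-Forwarding-Inbound": {
        "source": ipaddress.ip_network("10.0.0.0/8"),
        # Address 10.1.99.0 with mask 255.255.255.224 (a /27)
        "destination": ipaddress.ip_network("10.1.99.0/255.255.255.224"),
        "vlan": "External",
    },
    "PSN-IP-Forwarding-Outbound": {
        "source": ipaddress.ip_network("10.1.99.0/28"),
        # Address 0.0.0.0 with mask 0.0.0.0 matches any destination
        "destination": ipaddress.ip_network("0.0.0.0/0.0.0.0"),
        "vlan": "Internal",
    },
}


def match_forwarding_server(src, dst, ingress_vlan):
    """Return the name of the forwarding virtual server whose source,
    destination, and VLAN settings all match the flow, or None."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for name, vs in FORWARDING_SERVERS.items():
        if (src_ip in vs["source"] and dst_ip in vs["destination"]
                and ingress_vlan == vs["vlan"]):
            return name
    return None


print(match_forwarding_server("10.2.3.4", "10.1.99.6", "External"))      # PSN-IP-Forwarding-Inbound
print(match_forwarding_server("10.1.99.6", "8.8.8.8", "Internal"))       # PSN-IP-Forwarding-Outbound
print(match_forwarding_server("192.168.1.10", "10.1.99.6", "External"))  # None
```

The third flow is not forwarded because its source lies outside the inbound Source network. This is the restrictive behavior the tables recommend.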

Load Balancing RADIUS

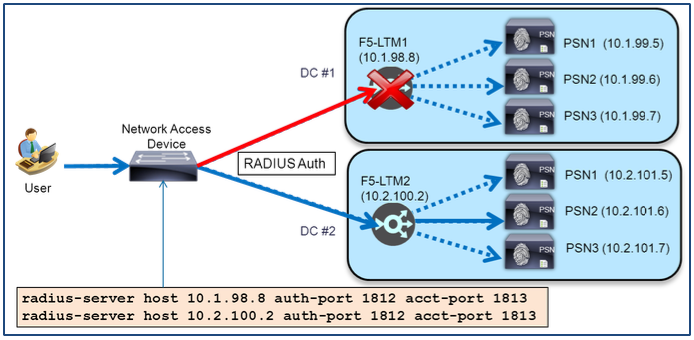

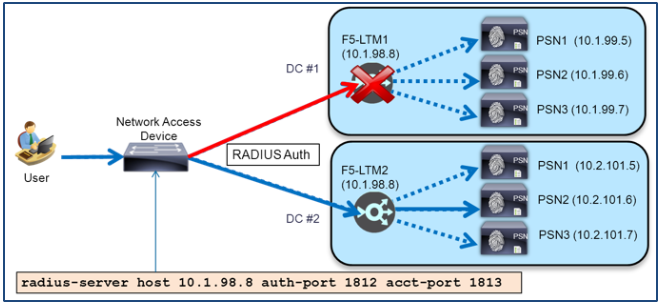

NAT Restrictions for RADIUS Load Balancing

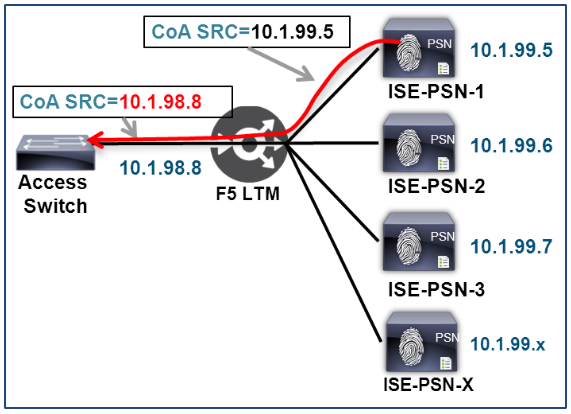

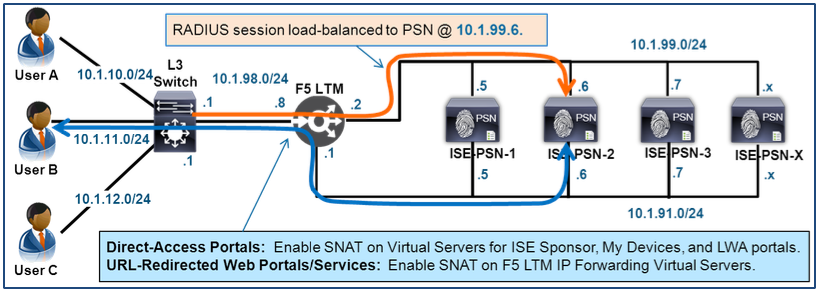

A common load balancing option is to have the F5 appliance perform source network address translation, also known as source NAT, or SNAT, on traffic sent to the Virtual IP address. This method can simplify routing since the real servers see the load balancer as the source of all traffic and consequently reply directly to the load balancer’s IP address whether the appliance is fully or partially inline to the traffic flow. Unfortunately, this option is not suitable for use with ISE RADIUS AAA services when Change of Authorization (CoA) is required.

RADIUS CoA is used to allow the RADIUS server to trigger a policy server action including reauthentication, termination or updated authorization against an active session. In an ISE deployment, CoA is initiated by the PSN and sent to the NAD to which the authenticated user/device is connected. The NAD IP address is determined by the source IP address of RADIUS authentication requests, a field in the IP packet header, not a RADIUS attribute such as NAS-IP-Address.

For this reason, SNAT of RADIUS traffic from the NAD is not compatible with RADIUS CoA in an ISE deployment. With SNAT of RADIUS AAA traffic, PSNs see the load balancer IP address as the source of RADIUS requests and treat it as the NAD that manages the client session. As a result, RADIUS CoA requests are sent directly to the BIG-IP LTM and dropped.
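A toy model makes the reasoning above explicit. SNAT rewrites only the IP header, and ISE addresses CoA to the IP source of the request, so the CoA target moves from the NAD to the load balancer. The NAD address is the NAS IP that appears in this guide's sample iRule log. The LTM SNAT address is hypothetical, and the data class is an illustration only, not an ISE or F5 data structure.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RadiusRequest:
    src_ip: str          # source address in the IP header
    nas_ip_address: str  # RADIUS attribute 4, inside the UDP payload


def apply_snat(request: RadiusRequest, snat_ip: str) -> RadiusRequest:
    """SNAT rewrites the IP header only; RADIUS attributes are unchanged."""
    return replace(request, src_ip=snat_ip)


def coa_target(request_seen_by_psn: RadiusRequest) -> str:
    """As described above, the PSN sends CoA to the IP source of the request,
    not to the NAS-IP-Address attribute."""
    return request_seen_by_psn.src_ip


NAD_IP = "10.1.50.2"       # NAS IP taken from the sample iRule log in this guide
LTM_SNAT_IP = "10.1.99.2"  # hypothetical LTM self IP used as the SNAT address

request = RadiusRequest(src_ip=NAD_IP, nas_ip_address=NAD_IP)
print(coa_target(request))                           # 10.1.50.2: CoA reaches the NAD
print(coa_target(apply_snat(request, LTM_SNAT_IP)))  # 10.1.99.2: CoA goes to the LTM and is dropped
```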

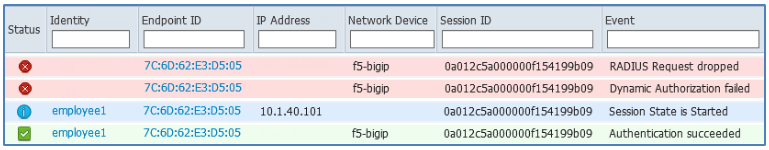

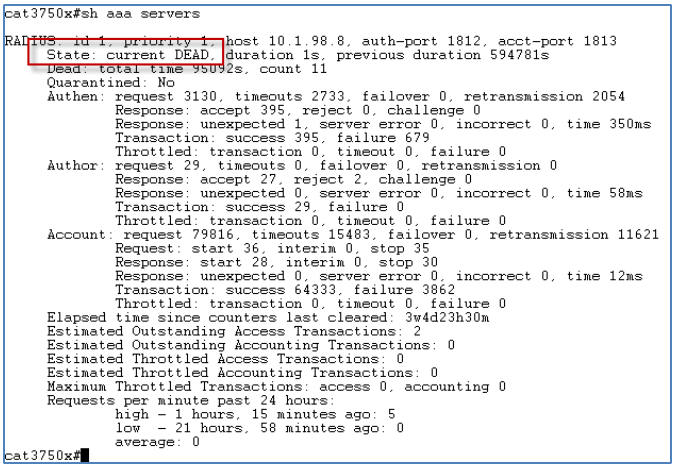

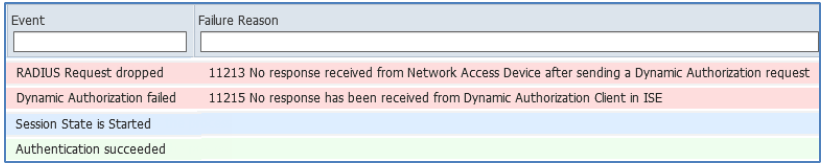

The ISE Live Authentication log below shows an example of this situation.

Figure: Failed RADIUS CoA Due to SNAT – Part 1

Note that the F5 BIG-IP LTM appears as the source of RADIUS requests instead of the true network access device, a Cisco WLC. After the user was successfully authenticated, a CoA (a Dynamic Authorization request) was initiated and sent to the NAD, which in this case is the BIG-IP LTM appliance. The more detailed failure reason in the log below reveals that the error is due to the lack of a response from the LTM appliance to the CoA request.

Note: A later section in this guide covers a different scenario whereby RADIUS CoA traffic initiated from PSNs to the NAD may be Source NATted. This particular scenario should not be confused with the above restriction, which specifically applies to SNAT of NAD-initiated RADIUS traffic.

RADIUS Persistence

Persistence, also known as "sticky" or "stickiness," allows traffic matching specific criteria to be load balanced to (or "stick" to) the same real server for processing.

To allow the PSN to properly manage the lifecycle of a user/device session, ISE requires that RADIUS Authentication and Authorization traffic for a given session be established to a single PSN. This includes additional RADIUS transactions that may occur during the initial connection phase such as reauthentication following CoA.

It is advantageous for this persistence to continue after initial session establishment to allow reauthentications to leverage EAP Session Resume and Fast Reconnect cache on the PSN. Database replication due to profiling is also optimized by reducing the number of PSN ownership changes for a given endpoint.

RADIUS Accounting for a given session should also be sent to the same PSN for proper session maintenance, cleanup and resource optimization. Therefore, it is critical that persistence be configured to ensure RADIUS Authentication, Authorization, and Accounting traffic for a given endpoint session be load balanced to the same PSN.

Sticky Methods for RADIUS

F5 supports different methods to configure persistence including:

- Persist Attribute (configured under the RADIUS Service Profile).

- Default Persistence Profile and Fallback Persistence Profile (specified under the Virtual Server Resources). Persistence Profiles further allow the specification of an iRule that defines persistence logic.

The recommended method for ISE RADIUS load balancing is the iRule option. Although the Persist Attribute option is simple and may be sufficient in some deployments, the iRule method is recommended as it offers superior processing capabilities based on multiple attributes, fallback logic, and options to log events for advanced troubleshooting.

Note: If persistence is configured under both the RADIUS Service Profile and Default Persistence Profile, then the RADIUS Service Profile takes precedence. If the RADIUS Service Profile references an iRule for persistence, the iRule takes precedence.

Sticky Attributes for RADIUS

There are numerous attributes that F5 can use for persistence including, but not limited to, RADIUS attributes (Calling-Station-ID, Framed-IP-Address, NAS-IP-Address, IETF or Cisco Session ID) or Source IP Address. The source IP address is typically the same as the IP address of the RADIUS client (the NAD) or RADIUS NAS-IP-Address.

Cisco's Audit Session ID (also known as CPM Session ID) is a unique value that is calculated by the NAD based on its NAS-IP-Address, an incrementing counter value, and the session start timestamp. Unlike the RFC 2866 Acct-Session-Id, which may change over reauthentications, the Audit Session ID can be carried over multiple RADIUS reauthentications, each of which needs to be sent to the same PSN for processing. Although it is a reasonable choice for most Cisco access devices, it is not suitable for all devices. Additionally, in some scenarios the NAD may renegotiate the Audit Session ID during client reauthentication attempts, for example during a failed wireless roam to a new access point or controller.

NAS-IP-Address or Source IP address may be reasonable choices where there are numerous network access devices (RADIUS clients) but only a few clients per NAD. Under these conditions, one may expect reasonable load distribution. However, where many clients connect to a single NAD, persistence on the NAD IP address will likely overload specific PSNs.
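To show why NAD-based persistence skews load, here is a deliberately simplified simulation. Each new persistence key goes to the member with the fewest sessions (a least-connections-style choice), and later sessions with the same key stick to that member. The pool members, NAD addresses, MAC values, and session counts are all hypothetical. Real BIG-IP connection accounting for UDP is more involved, so treat this only as an illustration of the skew.

```python
from collections import Counter

PSNS = ["psn1", "psn2", "psn3", "psn4"]  # hypothetical pool members


def assign(sessions, key_fn):
    """Sticky load balancing sketch: a new persistence key goes to the least
    loaded member; later sessions with the same key stick to that member."""
    table = {}
    load = Counter({psn: 0 for psn in PSNS})
    for session in sessions:
        key = key_fn(session)
        if key not in table:
            table[key] = min(PSNS, key=lambda p: load[p])
        load[table[key]] += 1
    return load


# Hypothetical workload: 2 NADs (for example, two WLCs) with 500 clients each.
sessions = [
    {"nas_ip": f"10.1.50.{nad}",
     "calling_station_id": f"AA-BB-CC-{nad:02X}-{i // 256:02X}-{i % 256:02X}"}
    for nad in (1, 2)
    for i in range(500)
]

by_nad = assign(sessions, lambda s: s["nas_ip"])
by_mac = assign(sessions, lambda s: s["calling_station_id"])
print(dict(by_nad))  # {'psn1': 500, 'psn2': 500, 'psn3': 0, 'psn4': 0}
print(dict(by_mac))  # {'psn1': 250, 'psn2': 250, 'psn3': 250, 'psn4': 250}
```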

Calling-Station-ID is a common attribute across many RADIUS authentication methods and is based on a unique endpoint. This value does not change across multiple connection attempts using the same network adapter. Therefore, this attribute is the recommended persistence attribute.

There are some cases where the Calling-Station-ID value is not populated such as certain 3rd-party NADs, so it is recommended to have a fallback persistence method defined in such cases. NAS-IP-Address/Source IP Address is a suitable choice.

The recommended persistence attribute for ISE RADIUS load balancing is Calling-Station-ID. Source IP address or NAS-IP-Address are the recommended fallback methods.

Example F5 BIG-IP LTM iRules for RADIUS Persistence

This section highlights working iRule examples for RADIUS Persistence.

RADIUS Persistence iRule Example #1: radius_mac_sticky

This is the generally recommended iRule and is based on RADIUS Calling-Station-Id as the primary persistence attribute. If the Calling-Station-Id attribute is not populated, then the persistence falls back to the RADIUS NAS-IP-Address attribute.

```tcl
# ISE persistence iRule based on Calling-Station-Id (MAC Address) with fallback to NAS-IP-Address as persistence identifier
when CLIENT_DATA {
    # 0: No Debug Logging 1: Debug Logging
    set debug 0

    # Persist timeout (seconds), chosen by NAS-Port-Type (19 = Wireless - IEEE 802.11)
    set nas_port_type [RADIUS::avp 61 "integer"]
    if {$nas_port_type equals "19"} {
        set persist_ttl 3600
        if {$debug} {set access_media "Wireless"}
    } else {
        set persist_ttl 28800
        if {$debug} {set access_media "Wired"}
    }

    # If MAC address is present - use it as persistent identifier
    # See Radius AV Pair documentation on https://devcentral.f5.com/wiki/irules.RADIUS__avp.ashx
    if {[RADIUS::avp 31] ne ""} {
        set mac [RADIUS::avp 31 "string"]
        # Normalize MAC address to upper case
        set mac_up [string toupper $mac]
        persist uie $mac_up $persist_ttl
        if {$debug} {
            set target [persist lookup uie $mac_up]
            log local0.alert "Username=[RADIUS::avp 1] MAC=$mac Normal MAC=$mac_up MEDIA=$access_media TARGET=$target"
        }
    } else {
        # Fallback: persist on NAS-IP-Address
        set nas_ip [RADIUS::avp 4 ip4]
        persist uie $nas_ip $persist_ttl
        if {$debug} {
            set target [persist lookup uie $nas_ip]
            log local0.alert "No MAC Address found - Using NAS IP as persist id. Username=[RADIUS::avp 1] NAS IP=$nas_ip MEDIA=$access_media TARGET=$target"
        }
    }
}
```

Because the iRule sets the persistence TTL explicitly, that value takes precedence over the Persistence Profile timeout setting. The iRule also includes a logging option to assist with debugging: set the debug variable to "1" to enable debug logging, or to "0" to disable it.

Here is sample output with debug logging enabled (most recent entries first):

```
Sat Sep 27 13:55:44 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=6C-20-56-13-E9-FC MAC=6C-20-56-13-E9-FC Normal MAC=6C-20-56-13-E9-FC MEDIA=Wired TARGET=
Sat Sep 27 13:55:43 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=6c205613e9fc MAC=6C-20-56-13-E9-FC Normal MAC=6C-20-56-13-E9-FC MEDIA=Wired TARGET=/Common/radius_auth_pool 10.1.99.6 1812
Sat Sep 27 13:55:40 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=employee1 MAC=7c-6d-62-e3-d5-05 Normal MAC=7C-6D-62-E3-D5-05 MEDIA=Wireless TARGET=/Common/radius_acct_pool 10.1.99.7 1813
Sat Sep 27 13:55:40 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=employee1 MAC=7c-6d-62-e3-d5-05 Normal MAC=7C-6D-62-E3-D5-05 MEDIA=Wireless TARGET=/Common/radius_acct_pool 10.1.99.7 1813
Sat Sep 27 13:55:39 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=employee1 MAC=7c-6d-62-e3-d5-05 Normal MAC=7C-6D-62-E3-D5-05 MEDIA=Wireless TARGET=
Sat Sep 27 13:55:39 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=employee1 MAC=7c-6d-62-e3-d5-05 Normal MAC=7C-6D-62-E3-D5-05 MEDIA=Wireless TARGET=
Sat Sep 27 13:55:39 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=employee1 MAC=7c-6d-62-e3-d5-05 Normal MAC=7C-6D-62-E3-D5-05 MEDIA=Wireless TARGET=
Sat Sep 27 13:55:38 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=00-50-56-A0-0B-3A MAC=00-50-56-A0-0B-3A Normal MAC=00-50-56-A0-0B-3A MEDIA=Wired TARGET=
Sat Sep 27 13:55:37 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: No MAC Address found - Using NAS IP as persist id. Username=#ACSACL#-IP-CENTRAL_WEB_AUTH-5334c9a5 NAS IP=10.1.50.2 MEDIA=Wired TARGET=
Sat Sep 27 13:55:37 EDT 2014 alert f5 tmm[9443] Rule /Common/radius_mac_sticky <CLIENT_DATA>: Username=005056a00b3a MAC=00-50-56-A0-0B-3A Normal MAC=00-50-56-A0-0B-3A MEDIA=Wired TARGET=
```

Fragmentation and Reassembly for RADIUS

When persistence is based on a RADIUS attribute within the UDP packet, it is critical that the load balancer reassembles the IP fragments in order for the load balancer to make the correct PSN forwarding decision. Otherwise, large RADIUS packets such as those containing certificates for EAP-TLS may be fragmented and load balanced using a fallback mechanism. This can cause fragments to be sent to different PSNs and result in client authentication failures.
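Some rough arithmetic shows why reassembly matters. An IPv4 datagram larger than the MTU is split into fragments. Only the first fragment carries the UDP header and the start of the RADIUS payload, so later fragments offer nothing to persist on. The sketch below assumes a 1500-byte MTU, IPv4 headers without options, and a hypothetical 3,000-byte EAP-TLS Access-Request.

```python
IP_HEADER = 20   # bytes, IPv4 header without options (assumption)
UDP_HEADER = 8   # bytes


def fragment_payloads(radius_len: int, mtu: int = 1500):
    """Split a UDP/RADIUS datagram into IPv4 fragment payload sizes.

    Every fragment except the last carries a multiple of 8 bytes, because the
    IPv4 fragment offset field counts in 8-byte units.
    """
    remaining = UDP_HEADER + radius_len
    max_payload = (mtu - IP_HEADER) // 8 * 8  # 1480 bytes for a 1500-byte MTU
    sizes = []
    while remaining > 0:
        size = min(remaining, max_payload)
        sizes.append(size)
        remaining -= size
    return sizes


# Hypothetical EAP-TLS Access-Request carrying a certificate chain.
sizes = fragment_payloads(3000)
print(sizes)  # [1480, 1480, 48]
print(f"{len(sizes)} fragments; only the first carries the UDP header and RADIUS code/attributes start")
```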

Fortunately, “When a BIG-IP virtual server receives an IP fragment, the Traffic Management Microkernel (TMM) queues the packet and waits to collect and reassemble the remaining fragments into the original message. TMM does not generate a flow for the fragment until TMM reassembles and processes the entire message. If part of the message is lost, the BIG-IP system discards the fragment.” For more information, see F5 support article SOL9012: The BIG-IP LTM IP fragment processing.

Load balancing using type FastL4 is an exception to the above behavior: IP fragment reassembly must be explicitly enabled under the FastL4 protocol profile. The current recommendation is to use Standard as the virtual server type, so fragmentation and reassembly of UDP RADIUS packets should not be an issue.

Persistence Timeout for RADIUS

F5 defines a Persistence Timeout, or Time to Live (TTL), that controls the duration that a given persistence entry should be cached. Once expired, a new packet without a matching entry can be load balanced to a different server.
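The TTL concept can be sketched as a small persistence table. An entry maps a key (for example, a normalized MAC address) to a pool member until its timeout expires, and a packet that finds no live entry may be balanced elsewhere. This is a conceptual model only, not how BIG-IP stores persistence records. As a simplifying assumption, every matching packet refreshes the TTL here, similar to calling `persist uie <key> <ttl>` on each packet in the iRule above. The clock can be injected for testing. The MAC and PSN addresses come from the sample log.

```python
import time


class PersistenceTable:
    """Minimal sticky table with a per-entry TTL (conceptual sketch)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}  # key -> (pool member, expiry time)

    def lookup(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        member, expires = entry
        if self._clock() >= expires:
            del self._entries[key]  # expired: next packet may go to any member
            return None
        return member

    def persist(self, key, member, ttl):
        self._entries[key] = (member, self._clock() + ttl)

    def route(self, key, choose_member, ttl):
        """Return the sticky member for key, choosing a new one if needed,
        and restart the entry's TTL (an assumption of this sketch)."""
        member = self.lookup(key)
        if member is None:
            member = choose_member()
        self.persist(key, member, ttl)
        return member


fake_now = [0.0]
table = PersistenceTable(clock=lambda: fake_now[0])
mac = "7C-6D-62-E3-D5-05"
print(table.route(mac, lambda: "10.1.99.7", ttl=3600))  # 10.1.99.7 (new entry)
fake_now[0] = 1800
print(table.route(mac, lambda: "10.1.99.6", ttl=3600))  # 10.1.99.7 (still sticky)
fake_now[0] = 1800 + 3600
print(table.route(mac, lambda: "10.1.99.6", ttl=3600))  # 10.1.99.6 (expired, re-balanced)
```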

In an ISE deployment, it is recommended that RADIUS for a given session be load balanced to the same PSN even after initial session establishment to optimize session maintenance and profiling database replication. Therefore, a longer persistence timeout is generally recommended for RADIUS. A value of five minutes (300 seconds) should be adequate for most deployments to cover the initial session establishment. If ISE services like posture and onboarding are deployed, then 10 or 15 minutes may be necessary to cover the initial assessment, provisioning, and remediation phase. If EAP Session Resume or Profiling Services are enabled, then even longer timeouts are recommended, for example one hour (3600 seconds) for wireless and eight hours (28800 seconds) for wired deployments. These longer persistence intervals optimize authentication performance, general session lifecycle maintenance, and profiling data replication.

Note: A side effect of longer persistence timeouts is that it may take longer for existing sessions to be load balanced to a newly added server. The persistence timers for existing sessions will need to expire or be cleared before they can be load balanced to the new PSN. Depending on the load balancing method (for example, Least Connections), a majority of new sessions will likely be sent to the new PSN until its load is commensurate with that of the other PSNs.

The persistence timeout setting ultimately depends on the network environment. For highly mobile environments where the average connect time to the network is much lower, a lower persistence setting that more closely matches the expected connect time may be more appropriate. In more static environments, like a wired LAN that includes mostly immobile endpoints, much longer persistence timeouts can be set.

Note: It is possible to configure separate Virtual Servers with different persistence timeouts. It is also possible to leverage F5 iRules to change the persistence TTL based on a RADIUS attribute like Network Device Group Type or Location.

The persistence timeout can be set directly under the Persistence Profile or through an iRule named under the Persistence Profile. If both are configured, the iRule takes precedence.

NAD Requirements for RADIUS Persistence

In order to apply persistence based on specific attributes in a RADIUS packet, it is necessary that each NAD properly populates these attributes with the expected data and format.

Cisco Catalyst Switches

By default, Cisco Catalyst switches populate the RADIUS Calling-Station-ID attribute with the MAC address of the wired hosts connected to its switchports. There are some cases where it may be desirable to supplement the information sent in RADIUS requests. The following are some examples:

Table 3: RADIUS Attributes for Cisco Catalyst Switches

| Cisco Catalyst IOS Command | Description |
|---|---|
| radius-server attribute 8 include-in-access-req | Include Framed-IP-Address (if available) in RADIUS Access Requests |
| radius-server attribute 31 send nas-port-detail | Include client IP address for remote console (vty) connections to the switch |
| radius-server attribute 31 mac format ietf upper-case | Set the MAC address format to 00-00-40-96-3E-4A (all upper case letters) |
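The example iRule normalizes only letter case. If different NADs send the same MAC in different formats (hyphenated, bare, or dotted), case-only normalization would produce different persistence keys. Configuring a consistent format on the NADs, as in the table, avoids this. The Python sketch below shows a stricter alternative, which is an assumption and not the iRule's behavior. It converts the common MAC formats to the IETF upper-case format and returns None when the Calling-Station-Id is not a MAC at all, such as the client IP address a WLC can send in some LWA configurations. In that case the caller would fall back to NAS-IP-Address. Apart from the sample-log values, the inputs are hypothetical.

```python
import re
from typing import Optional

HEX = "[0-9A-Fa-f]"
MAC_PATTERNS = [
    re.compile(rf"{HEX}{{2}}([-:]){HEX}{{2}}(?:\1{HEX}{{2}}){{4}}"),  # 00-00-40-96-3E-4A or 00:00:...
    re.compile(rf"{HEX}{{4}}\.{HEX}{{4}}\.{HEX}{{4}}"),               # 0000.4096.3e4a
    re.compile(rf"{HEX}{{12}}"),                                       # 000040963e4a
]


def normalize_mac(calling_station_id: str) -> Optional[str]:
    """Return the MAC in IETF upper-case format, or None if the value does
    not look like a MAC address (for example, an IP address)."""
    value = calling_station_id.strip()
    if not any(p.fullmatch(value) for p in MAC_PATTERNS):
        return None
    digits = re.sub(r"[^0-9A-Fa-f]", "", value).upper()
    return "-".join(digits[i:i + 2] for i in range(0, 12, 2))


for value in ["7c-6d-62-e3-d5-05", "6c205613e9fc", "0050.56a0.0b3a", "10.1.50.2"]:
    print(repr(value), "->", normalize_mac(value))
```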

Cisco Wireless LAN Controllers

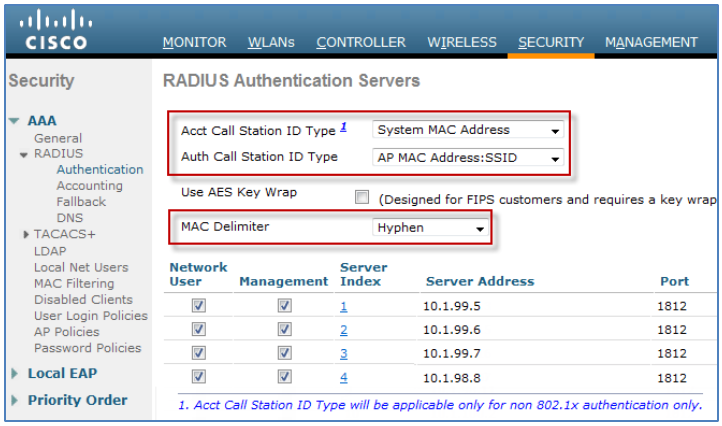

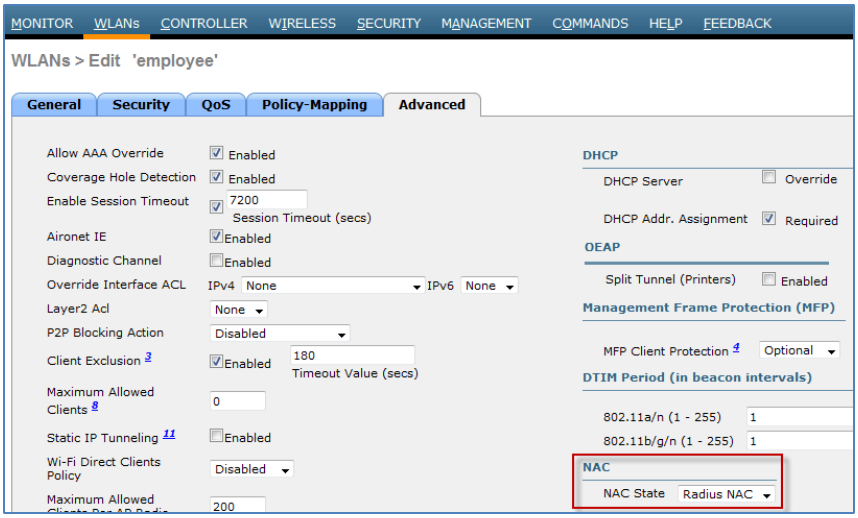

By default, Cisco Wireless LAN Controllers populate the RADIUS Calling-Station-ID attribute with the MAC address of wireless clients when RADIUS NAC is enabled on the WLAN. Some deployments use Local Web Authentication (LWA), where RADIUS NAC is not enabled. In these cases, if the Auth Call Station ID Type is set to IP Address, the Calling-Station-Id contains the client IP address rather than the MAC address, which can break RADIUS persistence. The diagram depicts typical settings that ensure the client MAC address is populated in the Calling-Station-Id in most cases.

Figure: RADIUS Attributes for Cisco Wireless Controllers

Note: WLC versions prior to 7.6 may not display separate entries for Auth and Accounting Call Station ID. In those versions, the single entry will specify the format for Authentication and Authorization requests. For reference, the RADIUS NAC setting is required to ensure that the Cisco Audit Session ID is presented in the RADIUS request. It is configured under the WLAN settings in the Advanced tab as shown in the figure.

Figure: RADIUS Attributes for Cisco Wireless Controllers

Cisco ASA Remote Access VPN Servers

By default, Cisco Adaptive Security Appliances (ASAs) populate the RADIUS Calling-Station-ID attribute with the public IP address of the full-tunnel remote access VPN client. The real client MAC address is not available to the ASA since the connection is over an L3 IP connection.

For other Cisco and non-Cisco RADIUS NADs, you can view the contents of the various RADIUS attributes from the NAD logs, ISE authentication detail logs, or packet captures.

RADIUS Load Balancing Data Flow

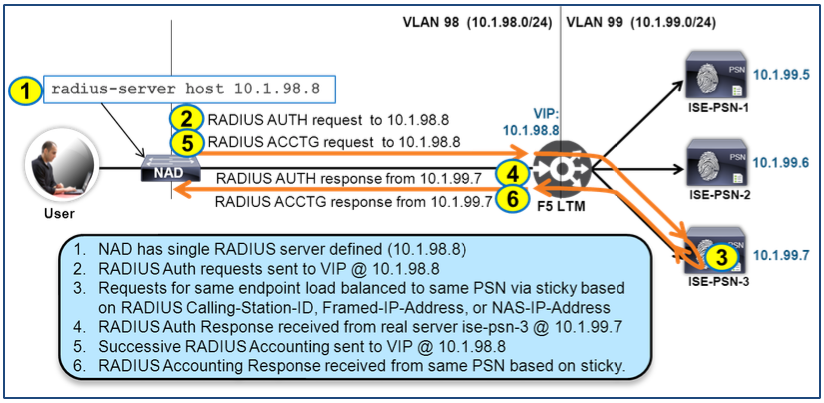

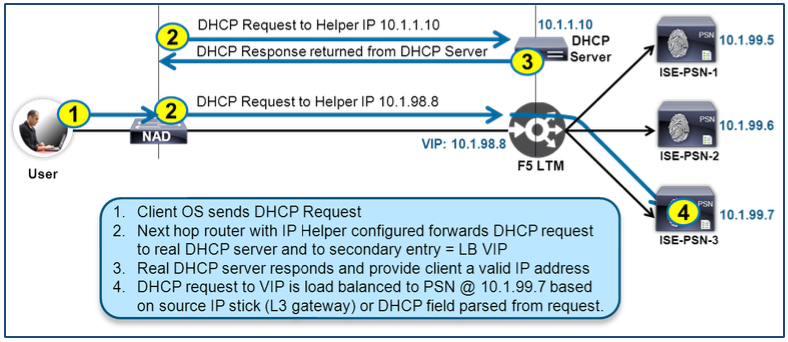

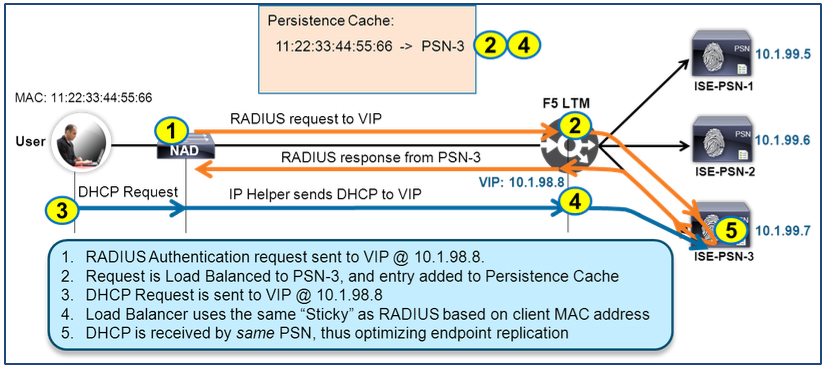

The diagram depicts the expected traffic flow for load-balancing RADIUS Authentication, Authorization, and Accounting.

Figure: RADIUS Load Balancing Traffic Flow

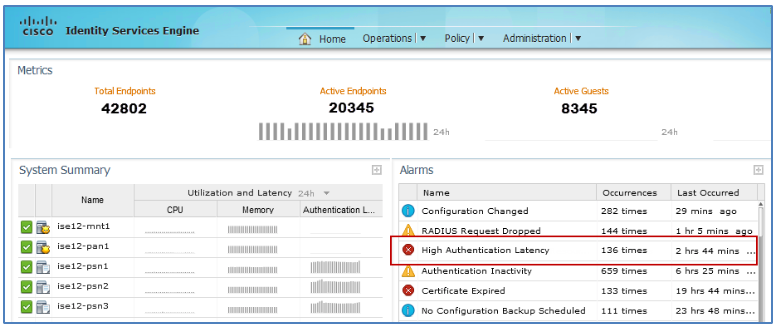

RADIUS Health Monitoring

F5 Monitors are used to perform periodic health checks against node members in a load-balanced pool. These monitors, or probes, validate that a real server is healthy before sending it requests. The term healthy is a relative term which can range in meaning from “Server is IP reachable using ICMP ping” to “Server is actively and successfully responding to simulated application requests; additionally, out-of-band checks validate resources are sufficient to support additional load”.

F5 Monitor for RADIUS

F5 includes a RADIUS Authentication monitor that will be used for monitoring the health of the ISE PSN servers. It will periodically send a simulated RADIUS Authentication request to each PSN in the load-balanced pool and verify that a valid response is received. A valid response can be either an Access-Accept or an Access-Reject. As covered under the ISE prerequisite configuration section, it is critical that the following be configured on the ISE deployment for the F5 health monitor to succeed:

- F5 BIG-IP LTM internal IP address is configured as a RADIUS Network Device

- Matching RADIUS secret key configured under the Network Device definition

- A valid user account in an applicable identity store, if you want the RADIUS monitor request to return an Access-Accept

This same probe will be used to monitor the members of the RADIUS Accounting pool to reduce the number of RADIUS requests sent to each PSN.
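As an illustration of what such a probe carries on the wire, here is a sketch that builds an RFC 2865 Access-Request with User-Name, a hidden User-Password, and NAS-IP-Address. It also applies the health rule described above, under which Access-Accept or Access-Reject both count as healthy. This is not F5's monitor implementation. The password, secret, and NAS IP are hypothetical, the username matches the sample monitor configuration, sending the packet to UDP 1812 is omitted, and a real client should also verify the Response Authenticator.

```python
import hashlib
import os
import socket
import struct

ACCESS_REQUEST, ACCESS_ACCEPT, ACCESS_REJECT = 1, 2, 3
USER_NAME, USER_PASSWORD, NAS_IP_ADDRESS = 1, 2, 4


def hide_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """User-Password hiding per RFC 2865 section 5.2 (chained MD5 over 16-byte blocks)."""
    padded = password.ljust(max(16, -(-len(password) // 16) * 16), b"\x00")
    hidden, prev = b"", authenticator
    for i in range(0, len(padded), 16):
        digest = hashlib.md5(secret + prev).digest()
        block = bytes(p ^ d for p, d in zip(padded[i:i + 16], digest))
        hidden += block
        prev = block  # the next block is keyed on the previous hidden block
    return hidden


def attribute(attr_type: int, value: bytes) -> bytes:
    """Encode one RADIUS attribute as Type, Length, Value."""
    return struct.pack("!BB", attr_type, len(value) + 2) + value


def build_access_request(identifier: int, username: str, password: str,
                         secret: str, nas_ip: str) -> bytes:
    authenticator = os.urandom(16)  # random Request Authenticator
    attrs = (attribute(USER_NAME, username.encode())
             + attribute(USER_PASSWORD,
                         hide_password(password.encode(), secret.encode(), authenticator))
             + attribute(NAS_IP_ADDRESS, socket.inet_aton(nas_ip)))
    header = struct.pack("!BBH", ACCESS_REQUEST, identifier, 20 + len(attrs))
    return header + authenticator + attrs


def is_healthy_response(code: int) -> bool:
    """Either Access-Accept or Access-Reject shows the PSN processed the request."""
    return code in (ACCESS_ACCEPT, ACCESS_REJECT)


# Hypothetical probe values; never reuse real credentials in examples.
packet = build_access_request(1, "f5-probe", "example-password",
                              "example-secret", "10.1.99.2")
print(len(packet))  # 54
```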

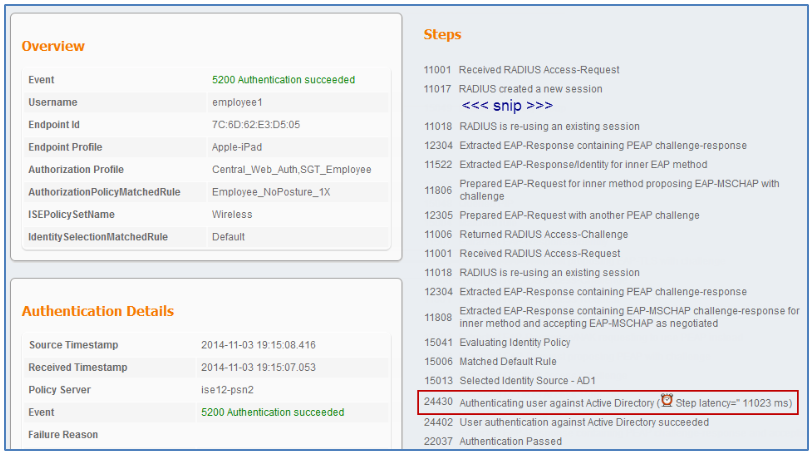

RADIUS Monitor Timers

The Monitor timer determines how frequently the health status probes are sent to each member of a load-balanced pool. Timers should be set short enough to allow failover before a RADIUS request from an access device times out and long enough to prevent excessive and unnecessary load on the ISE PSNs.

The optimal values ultimately depend on the network environment and the RADIUS server configuration on each access device. A reasonable starting value for the RADIUS timeout on a Cisco Wireless LAN Controller (WLC) ranges from 5-10 seconds with three retries, for a total of 20-40 seconds. A typical RADIUS timeout for a Cisco Catalyst switch is approximately 10-15 seconds with two to three retries, for a total of 30-60 seconds.
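The totals above follow from a simple model: the initial attempt plus each retry, each waiting the full timeout. This sketch reproduces the quoted ranges. It ignores vendor-specific details such as dead-server timers.

```python
def total_radius_timeout(timeout_s: int, retries: int) -> int:
    """Worst-case wait on one RADIUS server: the initial attempt plus each
    retry, each waiting the full timeout (simplified model)."""
    return timeout_s * (retries + 1)


print("WLC:", total_radius_timeout(5, 3), "-", total_radius_timeout(10, 3))         # WLC: 20 - 40
print("Catalyst:", total_radius_timeout(10, 2), "-", total_radius_timeout(15, 3))   # Catalyst: 30 - 60
```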

Longer timeouts and retries may be required for switches/routers connected across slower or less reliable WAN links. Other potential causes for RADIUS delays include slow connections to backend identity stores or extensive attribute retrieval from remote directories. Under the authentication log details you may see a Step Latency counter that reveals excessive delays in identity store responses. Slow or misconfigured DNS services can also lead to delayed responses from backend stores. Ideally these issues are addressed to reduce overall RADIUS latency, but it may be necessary to set higher values on network access devices as an interim solution.

So how does all of this relate to F5 RADIUS Health Monitors? The goal is to set the F5 monitor timers so that they detect a PSN failure and try another PSN before the NAD's RADIUS request times out. Otherwise, the F5 BIG-IP LTM will continue to send requests to the same failed PSN until the configured monitor timeout is exceeded. For example, if the total NAD RADIUS timeout is 40 seconds, the monitor timeout may need to be set to 31 seconds. At the same time, a timeout of 11 seconds (which implies a probe interval of roughly three seconds) will likely be excessive and simply cause unnecessary RADIUS authentication load on each of the PSNs. If RADIUS timers differ significantly between groups of NADs or NAD types, it is recommended to use the lower settings to accommodate all NADs using the same RADIUS Virtual Servers.

Note: Be sure to take into consideration whether the test RADIUS account used by the F5 monitor is an internal ISE user account, or one that must be authenticated to an external identity store as this will impact total RADIUS response time. For more details on user account selection, refer to the section User Account Selection for RADIUS Probes.

The BIG-IP LTM RADIUS monitor has two key timer settings:

- Interval = probe frequency (default = 10 sec)

- Timeout = total time before monitor fails (default = 31 seconds)

Therefore, we can deduce that four health checks are attempted before declaring a node failure:

- Timeout = (3 * Interval) + 1
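The relationship can be checked numerically. This sketch computes the monitor timeout from the interval, counts the probes sent before the node is marked down, and compares the timeout with a NAD's total RADIUS timeout. The comparison is a simplifying assumption: it ignores when within a probe cycle the PSN fails and the extra time needed to retry on another PSN.

```python
def monitor_timeout(interval_s: int) -> int:
    """Timeout = (3 * Interval) + 1, per the relationship above."""
    return 3 * interval_s + 1


def probes_before_failure(interval_s: int, timeout_s: int) -> int:
    """Probes sent at t = 0, interval, 2*interval, ... before the timeout expires."""
    return (timeout_s - 1) // interval_s + 1


def fails_over_in_time(interval_s: int, nad_total_timeout_s: int) -> bool:
    """True if the monitor marks a dead PSN down before the NAD gives up."""
    return monitor_timeout(interval_s) < nad_total_timeout_s


print(monitor_timeout(10), probes_before_failure(10, 31))  # 31 4
print(fails_over_in_time(10, 40))  # True: 31 s is less than a 40 s NAD total
print(fails_over_in_time(10, 20))  # False: a 20 s NAD total needs a shorter interval
```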

Sample LTM Monitor configuration for RADIUS:

```
ltm monitor radius /Common/radius_1812 {
    debug no
    defaults-from /Common/radius
    destination *:1812
    interval 10
    password P@$$w0rd
    secret P@$$w0rd
    time-until-up 0
    timeout 31
    username f5-probe
}
```

User Account Selection for RADIUS Probes

The RADIUS Monitor requires that a user account be configured to send in the periodic RADIUS authentication request. This raises the question of which account to use—A user defined in the ISE Internal User database? An external user in Microsoft AD, LDAP directory, or other identity store? Or simply a non-existent account?!

Another decision is based on the security of the user account. It is important that this user account only be used to validate the PSNs are successfully processing RADIUS requests. No access should be available to this account in the event the credentials are leaked and used for access to secured resources. This may be a reason to choose an invalid user account so that an Access-Reject is returned. As noted, the BIG-IP LTM will deem this response as valid in determining health status since it must have been processed by the PSN’s RADIUS server.

An alternative is to use a valid account but ensure that no access privileges are granted to the authenticated user. ISE controls these permissions using the Authorization Policy. The PSN can authenticate the probe user (Access-Accept) while returning a RADIUS authorization result that explicitly denies access. For example, the result could assign an Access Control List (ACL) containing ‘deny ip any any’, or an unused or quarantine VLAN. Further, the Authentication and Authorization Policy rules should restrict the probe user account to the F5 IP address. They can also use other conditions (authentication protocol, service type, network device group, etc.) that limit where the account is expected to be used.

General guidance is to use an ISE internal user database account and configure the monitor with a different password to force an Access-Reject. The exception is when validating an external user account is required to verify backend database operation. This requirement may stem from the premise that “If the PSN cannot authenticate to my identity store, then it is as good as down even if RADIUS is functioning”.

If AD/LDAP account validation is required to determine RADIUS status, return Access-Accept when the identity store is available and lock down authorization for the probe account as noted above. Consider the implications for RADIUS failover when the backend store fails. These include whether the PSN returns a process error or an Access-Reject, and the potential impact on the load balancing cluster as a whole. If AD/LDAP is down for all PSNs, then the NADs need to fail over to a different cluster, since the VIP will be declared down.
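The failover implication can be illustrated with a small Python model. It assumes a valid external (AD/LDAP) probe account. It also assumes that when the identity store fails, a PSN either drops the request (no reply) or returns an Access-Reject; the hypothetical flag `drop_on_store_failure` selects between the two. `Psn` and its fields are illustrative names only, not ISE or F5 objects.

```python
from dataclasses import dataclass


@dataclass
class Psn:
    name: str
    radius_running: bool   # RADIUS service on the PSN is answering at all
    store_reachable: bool  # external identity store (AD/LDAP) is reachable from this PSN


def probe_answered(psn: Psn, drop_on_store_failure: bool) -> bool:
    """Would the F5 monitor see a reply from this PSN?"""
    if not psn.radius_running:
        return False
    if psn.store_reachable:
        return True  # Access-Accept for the locked-down probe account
    # Identity store failed: an Access-Reject is still a reply, a dropped request is not.
    return not drop_on_store_failure


def vip_available(psns: list[Psn], drop_on_store_failure: bool) -> bool:
    """The VIP stays up while at least one pool member passes its health check."""
    return any(probe_answered(p, drop_on_store_failure) for p in psns)


cluster = [Psn("psn1", True, False), Psn("psn2", True, False)]
print(vip_available(cluster, drop_on_store_failure=True))   # False: NADs must fail over
print(vip_available(cluster, drop_on_store_failure=False))  # True: rejects keep the VIP up
```

The second case shows the trade-off. If backend failures produce an Access-Reject, the monitor still sees healthy PSNs, so validating the external account adds nothing to failover.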

ISE Filtering and Log Suppression

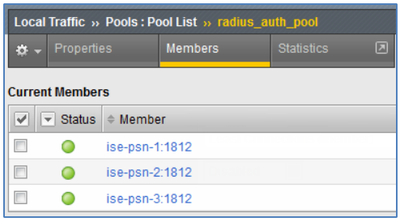

After properly configuring the RADIUS Monitor checks, you are overjoyed by the friendly green icons under the F5 Pool List or Pool Member List that signify your success and a healthy RADIUS server farm…

Figure: Validating Health Monitors for RADIUS Load Balancing

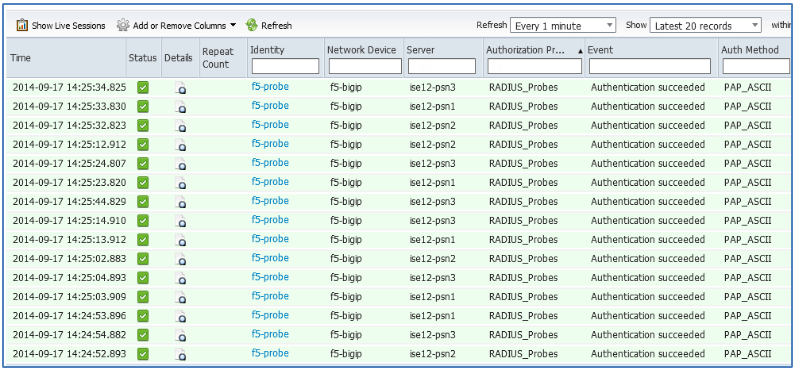

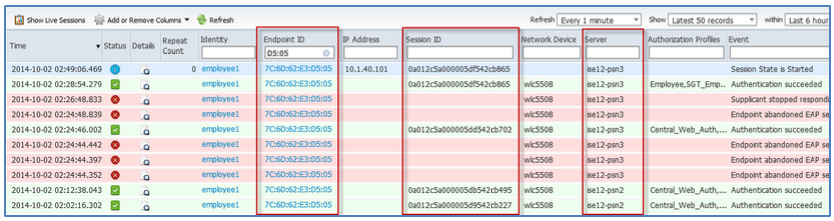

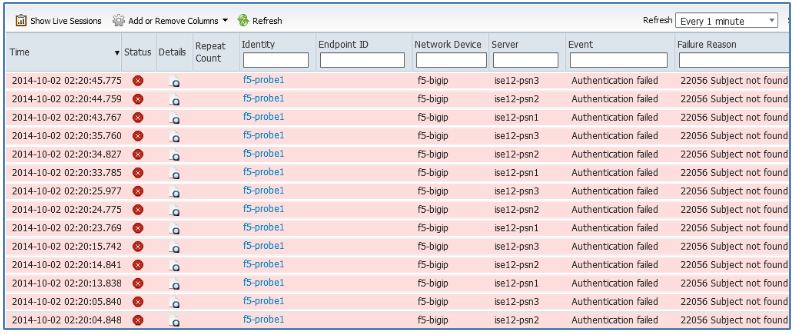

Your joy is diminished once you return to the ISE Live Authentications dashboard to find the consequence of your success…

Figure: Authentication Logging for RADIUS Health Monitors

If an invalid user account was used, the above would instead be filled with red events for every probe authentication attempt. In either case, these numerous and repetitive log entries can make it difficult to focus on the items of interest like access attempts by network users and devices.
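To estimate how much noise the probes add, multiply the probe rate by the number of PSNs. This Python sketch assumes one logged authentication per probe. If more than one monitor or BIG-IP unit probes each PSN, scale the result with the hypothetical `probers` parameter.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def probe_logs_per_day(interval_seconds: int, psn_count: int, probers: int = 1) -> int:
    """Authentication log entries generated by health probes in one day.

    probers: number of monitors or BIG-IP units probing each PSN (an assumption to set per design).
    """
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    per_psn = SECONDS_PER_DAY // interval_seconds
    return per_psn * psn_count * probers


print(probe_logs_per_day(10, 1))  # 8640 entries for one PSN per day
print(probe_logs_per_day(10, 4))  # 34560 across a hypothetical 4-PSN cluster
```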

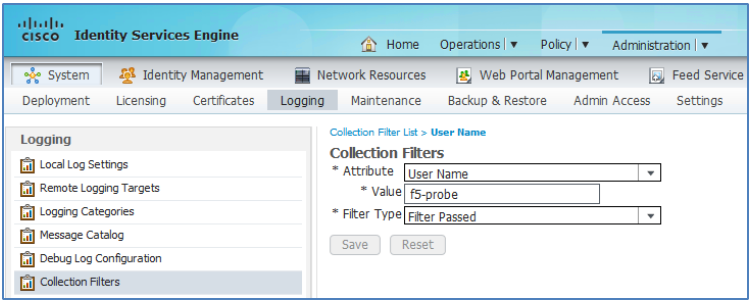

To filter out the “noise”, it is recommended to enable PSN Collection Filters. Collection Filters let you suppress logging of events based on pass/failure status and other conditions, including:

- User Name

- Policy Set Name

- NAS-IP-Address

- Device-IP-Address

- MAC (Calling-Station-ID)

For F5 monitor checks, a simple Collection Filter can be configured based on Device-IP-Address or NAS-IP-Address, which is typically the F5’s internal interface IP. Alternatively, use the User Name of the probe account, as shown in the example.

Figure: ISE Collection Filters for RADIUS Health Monitors

If the probe user account is expected to fail authentication, then Filter Type should be set to “Filter Failed”. (Collection Filters are configured under Administration > System > Logging > Collection Filters).
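The matching rule can be modeled in a few lines of Python. Collection Filters are applied by ISE itself; this sketch only illustrates how an attribute match combined with a filter type decides whether an event is suppressed. "Filter Failed" comes from the text above. "Filter All" and "Filter Passed" follow the other ISE filter-type options. The event fields and IP addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AuthEvent:
    user_name: str
    device_ip: str
    passed: bool


@dataclass
class CollectionFilter:
    attribute: str    # "user_name" or "device_ip" in this sketch
    value: str
    filter_type: str  # "Filter All", "Filter Passed", or "Filter Failed"

    def suppresses(self, event: AuthEvent) -> bool:
        if getattr(event, self.attribute) != self.value:
            return False
        if self.filter_type == "Filter All":
            return True
        if self.filter_type == "Filter Passed":
            return event.passed
        if self.filter_type == "Filter Failed":
            return not event.passed
        raise ValueError(f"unknown filter type: {self.filter_type}")


def logged_events(events: list[AuthEvent], filters: list[CollectionFilter]) -> list[AuthEvent]:
    """Return only the events that no filter suppresses."""
    return [e for e in events if not any(f.suppresses(e) for f in filters)]


events = [
    AuthEvent("f5-probe", "10.1.98.2", passed=False),
    AuthEvent("alice", "10.1.50.10", passed=False),
    AuthEvent("f5-probe", "10.1.98.2", passed=True),
]
probe_filter = CollectionFilter("user_name", "f5-probe", "Filter Failed")
print([e.user_name for e in logged_events(events, [probe_filter])])  # ['alice', 'f5-probe']
```

Note that the passing probe is still logged under "Filter Failed". This is why the filter type should match the expected outcome of the probe account.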

RADIUS Load Balancing: F5 Configuration Details

This section provides the detailed F5 configuration for RADIUS load balancing of ISE PSN servers including the recommended settings and considerations for each component.

The RADIUS Load Balancing configuration is broken down into the following major components:

- RADIUS Health Monitor

- RADIUS Profiles

- UDP

- RADIUS Service

- RADIUS Persistence

- Pool Lists for Authentication/Authorization and Accounting

- Virtual Servers for RADIUS Authentication/Authorization and Accounting

Use the settings outlined in the table to configure F5 for RADIUS load balancing with ISE PSNs.

Table 4: LTM RADIUS Load Balancing Configuration

| BIG-IP LTM Object | Recommended Setting | Notes |
|---|---|---|
| Health Monitor | Main tab > Local Traffic > Monitors | |
| Name | <RADIUS Monitor Name> Example: radius_1812 | Enter a name for the RADIUS Authentication and Authorization health monitor. |
| Type | RADIUS | F5 provides a native monitor for RADIUS Authentication. |
| Timers: Interval and Timeout | Wireless: Wired: | Set timers short enough to allow failover before the RADIUS request from the access device times out, and long enough to avoid excessive logging on the ISE PSNs. The recommended Cisco WLC RADIUS timeout ranges from 5 to 10 seconds with 3 retries. A common total RADIUS timeout for a Cisco Catalyst switch is approximately 30 to 45 seconds. Longer timeouts and more retries may be required for switches and routers connected across slower or less reliable WAN links. See the “RADIUS Monitor Timers” section for additional details. |
| User Name | <ISE User Name> Example: | Type the user name of the ISE user account. The name should match the value configured under “Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring” in the “ISE Configuration Prerequisites” section. See the “User Account Selection for RADIUS Probes” section for more details on account selection. |
| Password | <ISE User Password> | Type the password of the ISE user account. The password should match the value configured under “Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring” in the “ISE Configuration Prerequisites” section. |
| Secret | <RADIUS Secret> | Type the RADIUS shared secret used to communicate with the PSNs. The RADIUS secret should match the value configured under “Add F5 BIG-IP LTM as a NAD for RADIUS Health Monitoring” in the “ISE Configuration Prerequisites” section. |
| NAS IP Address | <NAS IP Address> | Optional. By default, BIG-IP LTM uses the interface (Self IP) address. To identify the monitor’s RADIUS requests by a different NAS-IP-Address, enter the new value here. |
| Alias Service Port | 1645 or 1812 | Set the service port to the same port used for the RADIUS Authentication/Authorization Virtual Server and Pool. |
| iRules | Main tab > Local Traffic > iRules | |
| Name | <RADIUS Persistence iRule Name> Example: radius_mac_sticky | Enter a name for the iRule used to persist RADIUS Authentication, Authorization, and Accounting traffic. |
| Definition | <iRule Definition> | Enter the iRule details. Refer to “Example F5 BIG-IP LTM iRules for RADIUS Persistence” under the “RADIUS Persistence” section for more details. |
| Profiles | Main tab > Local Traffic > Profiles | |
| UDP (Protocol > UDP) | Name: <Profile Name> Example: ise_radius_udp | Type a unique name for the UDP profile. |
| | Parent Profile: udp | |
| | Idle Timeout: 60 | Set the UDP idle timeout based on the RADIUS environment and load balancer resources. High RADIUS activity consumes more F5 appliance resources to maintain connection states. This setting can be increased in networks with lower authentication activity or sufficient appliance capacity. Conversely, the value may need to be tuned lower with higher authentication activity or lower appliance capacity. For initial deployment, start with the default setting of 60 seconds. |
| | Datagram LB: Disabled | ISE requires that multiple RADIUS packets for a given host be sent to the same PSN. Enabling Datagram LB would load balance each UDP datagram independently. |
| RADIUS (Services > RADIUS) | Name: <Profile Name> Example: ise_radiusLB | Type a unique name for the RADIUS Service profile. |
| | Parent Profile: radiusLB | Defining a RADIUS profile allows F5 to process RADIUS Attribute-Value Pairs (AVPs) in iRules. |
| | Persist Attribute: Not Configured | The recommendation is to use an iRule to define the persistence attribute. The Persist Attribute option is simple and may be sufficient in some deployments. The iRule method is recommended for its support of advanced rule processing, multiple attributes, fallback logic, and event logging to assist in troubleshooting. If an iRule is not used, the recommended persist attribute value is “31” (Calling-Station-ID). If Calling-Station-ID (or Framed-IP-Address) is not present, the source IP address or RADIUS NAS-IP-Address are suitable fallback persistence options. |
| Persistence | Name: <Profile Name> Example: radius_sticky | Type a unique name for the RADIUS persistence profile. |
| | Parent Profile: Universal | The Universal profile allows specification of an iRule for advanced persistence logic. |
| | Match Across Services: Enabled | When separate Virtual Servers share the same IP address but use different service ports, this setting allows load balancing to persist across the RADIUS Auth and Accounting services. Match Across Virtual Servers is not required when the Virtual Servers share the same IP address. For more information, see F5 support article SOL5837: Match Across options for session persistence. |
| | iRule: <iRule Name> Example: radius_mac_sticky | Specify the iRule used to set RADIUS persistence. See the “RADIUS Persistence” section for more details on recommended iRules for persistence. |
| | Timeout: <Persist Timeout> Wireless: 3600 seconds (1 hour) Wired: 28800 seconds (8 hours) | The recommendation is to use the iRule to define the persistence timeout; a timeout configured in the iRule overrides this profile setting. If an iRule is not used, set the persistence timeout based on the environment, including access method, device types, and average connection times. Different persistence TTLs can be set in F5 through separate Virtual Servers or through iRules. See the “RADIUS Persistence” section for more details on recommended timeout values. |
| Pool List | Main tab > Local Traffic > Pools > Pool List | The Pool List contains the real servers that service load-balanced requests for a given Virtual Server. Note: Create two server pools, one for RADIUS Authentication and Authorization and another for RADIUS Accounting. |
| Name | <Pool Name> Examples: | Type a unique name for each RADIUS pool: one pool for RADIUS Authentication and Authorization and another for RADIUS Accounting. |
| Health Monitors | <RADIUS Health Monitor> Example: radius_1812 | Enter the RADIUS Health Monitor configured in the previous step. Note: The same monitor is used to verify both the RADIUS Auth and Accounting services on the ISE PSN appliances, to minimize resource consumption on both the F5 and ISE appliances. |
| Allow SNAT | No | RADIUS CoA support requires that SNAT be disabled. This setting reinforces the SNAT setting in the Virtual Server definition. |
| Action on Service Down | Reselect | The Reselect option ensures established connections are moved to an alternate pool member when a target pool member becomes unavailable. For additional details on failed node handling, refer to the following F5 Support articles: |
| Member IP Addresses | <ISE PSN addresses in the LB cluster> Examples: | These are the real IP addresses of the PSN appliances. The PSNs are configured as nodes under the Node List. These entries can be created automatically when defined within the Pool List configuration page. |
| Member Service Port | <Separate, Unique Ports for RADIUS Authentication/Authorization and Accounting> Examples: | For each pool, define the appropriate RADIUS Authentication or Accounting port used to connect to the PSNs. |
| Load Balancing Method | Least Connections (node) | Least Connections (member) is also viable, but the ‘node’ option allows F5 to take into account all connections across pools. |
| Virtual Server | Main tab > Local Traffic > Virtual Servers > Virtual Server List | Note: Create two virtual servers, one for RADIUS Authentication and Authorization and another for RADIUS Accounting. |
| Name | <RADIUS Virtual Server Name> Examples: | Enter the name that identifies each virtual server, one for RADIUS Auth and one for RADIUS Accounting. |
| Type | Standard | Standard allows specification of profiles for the UDP protocol and RADIUS. |
| Source | <Source Network Address/Mask> Example: 10.0.0.0/8 | Type the network address with bit mask for the external network addresses that need to communicate with the ISE PSNs. Make the source as restrictive as possible without omitting RADIUS clients (network access devices) that need to reach the PSNs for RADIUS AAA. |
| Destination (VIP Address) | Type: Host | |
| | Address: <Single IP Address for RADIUS Authentication, Authorization, and Accounting> Example: 10.1.98.8 | Enter the Virtual IP Address for RADIUS AAA services. A single IP address (versus separate IP addresses for Authentication/Authorization and Accounting) is recommended because it simplifies both the load balancer configuration and the access device (RADIUS client) configuration. |
| Service Port | <Separate, Unique Ports for RADIUS Authentication/Authorization and Accounting> Examples: | A wildcard port (all UDP ports) is an option. The general recommendation, however, is to define separate Virtual Servers that share a single VIP, each with a distinct port for RADIUS Authentication/Authorization and Accounting. This offers additional load balancing control and management by Virtual Server and service. |
| Protocol | UDP | The UDP Protocol Profile and RADIUS Profile must be defined. See the Profiles rows in this table for more details. |
| Protocol Profile (Client) | <UDP Protocol Profile> Example: ise_radius_udp | Enter the name of the UDP Protocol Profile defined earlier. |
| RADIUS Profile | <RADIUS Service Profile> Example: ise_radiusLB | Enter the name of the RADIUS Service Profile defined earlier. |
| VLAN and Tunnel Traffic | Enabled On… | Optional: Restrict inbound RADIUS requests to specific VLANs. |
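The persistence fallback described in the table (Calling-Station-ID first, then Framed-IP-Address, then NAS-IP-Address or source IP) can be sketched in Python. This is not the iRule referenced in the “RADIUS Persistence” section. It only illustrates one possible fallback order using the RFC 2865 attribute numbers 4 (NAS-IP-Address), 8 (Framed-IP-Address), and 31 (Calling-Station-Id).

```python
import ipaddress
import struct

NAS_IP_ADDRESS = 4      # RFC 2865 attribute types
FRAMED_IP_ADDRESS = 8
CALLING_STATION_ID = 31


def parse_attributes(packet: bytes) -> dict[int, bytes]:
    """Return the first value of each attribute type in a RADIUS packet."""
    if len(packet) < 20:
        raise ValueError("packet shorter than RADIUS header")
    length = struct.unpack("!H", packet[2:4])[0]
    if length < 20 or length > len(packet):
        raise ValueError("invalid RADIUS length field")
    attrs: dict[int, bytes] = {}
    offset = 20  # attributes start after the 20-byte header
    while offset + 2 <= length:
        attr_type, attr_len = packet[offset], packet[offset + 1]
        if attr_len < 2 or offset + attr_len > length:
            raise ValueError("malformed attribute")
        attrs.setdefault(attr_type, packet[offset + 2:offset + attr_len])
        offset += attr_len
    return attrs


def persistence_key(packet: bytes, source_ip: str) -> str:
    """Pick a sticky key: Calling-Station-Id, then Framed-IP, then NAS-IP, then source IP."""
    attrs = parse_attributes(packet)
    if CALLING_STATION_ID in attrs:
        return attrs[CALLING_STATION_ID].decode(errors="replace")
    for attr_type in (FRAMED_IP_ADDRESS, NAS_IP_ADDRESS):
        value = attrs.get(attr_type)
        if value is not None and len(value) == 4:
            return str(ipaddress.IPv4Address(value))
    return source_ip
```

Because authentication and accounting packets for the same endpoint normally carry the same Calling-Station-Id, keying on it lets both land on the same PSN when Match Across Services is enabled. NADs format MAC addresses differently, so a production rule may also need to normalize the value before using it as a key.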