- ASR9000/XR: Troubleshooting packet drops and understanding NP drop counters

on 03-04-2011 08:06 AM

Introduction

In this article we'll discuss how to troubleshoot packet loss on the ASR9000, and specifically how to read the NP drop counters: what they mean and what you can do to mitigate the drops.

This document is an ongoing effort to improve troubleshooting for the ASR9000. If things are still not clear after reading it, please comment on the article and I'll add details where my current descriptions fall short.

Core Issue

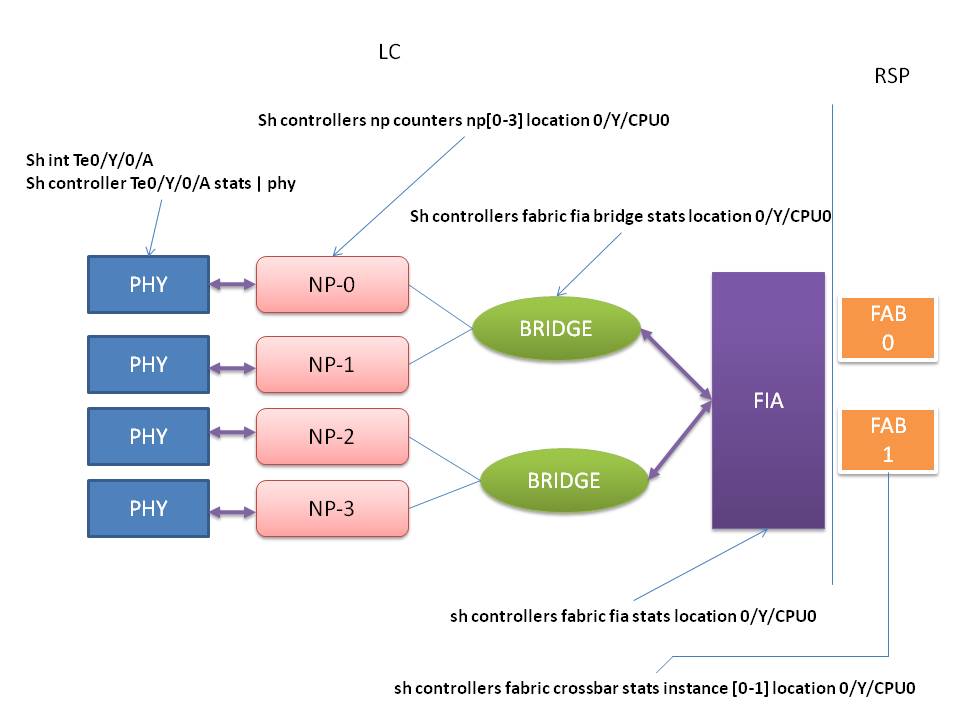

Before we dive into packet troubleshooting on the ASR9000, it is important to understand a bit of the system architecture.

Packets are received by the MAC/PHY and forwarded to the NPU, which processes the features. The NPU hands them to the bridge, which in turn delivers the packets to the FIA (Fabric Interfacing ASIC). From there they are sent over the fabric (which physically resides on the RSP) and handed to the egress linecard, where they follow the FIA->bridge->NP->MAC path.

The NP processes packets for both ingress and egress direction.
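The path described above can be written down as a short sketch. It is only an illustration of the stage order given in this article; the stage labels and the `packet_path` helper are names made up for this example, not anything the router exposes.

```python
# Stage order taken from the description above; names are illustrative only.
INGRESS_PATH = ["MAC/PHY", "NP", "Bridge", "FIA"]
# The egress linecard walks the same ASICs in the opposite order.
EGRESS_PATH = list(reversed(INGRESS_PATH))


def packet_path():
    """Return the ordered stages a transit packet crosses between two linecards."""
    ingress = [f"ingress LC: {stage}" for stage in INGRESS_PATH]
    fabric = ["fabric (on the RSP)"]
    egress = [f"egress LC: {stage}" for stage in EGRESS_PATH]
    return ingress + fabric + egress


if __name__ == "__main__":
    for hop, stage in enumerate(packet_path(), start=1):
        print(hop, stage)
```

Each of these stages is a place where statistics can be collected, which is why the NP appears twice: once on the ingress linecard and once on the egress linecard.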

The bridge is just a memory interface converter and is non-blocking; unless there is QOS back pressure, there is generally no reason for the bridge to drop packets. This article does not focus on the bridge and FIA drop counters, as drops there are uncommon.

The NP, bridge, FIA and fabric are all priority aware and have separate queues for high- and low-priority traffic as well as for unicast and multicast. So when you look at the bridge you see UC/MC (unicast/multicast) as well as LP/HP queues.

A different article will focus on that.

Resolution

Here is a graphical overview of how the individual ASICs interconnect, along with the commands that show statistics for each ASIC.

In this picture, Y is the slot in which the linecard is inserted and A identifies the individual interface.

The first place to check for drops is the interface, using the well-known show interface command.

Drops reported on the interface are an aggregate of physical layer drops as well as certain (not all!) drops that were experienced in the NP.

When reading the show controller np counters output, you may not know which interface connects to which NP. Use "show controller np ports all location 0/Y/CPU0" to find out which interface maps to which NP.

RP/0/RSP1/CPU0:A9K-BOTTOM#sh controllers np ports all loc 0/7/CPU0

Fri Mar 4 12:09:41.132 EST

Node: 0/7/CPU0:

----------------------------------------------------------------

NP Bridge Fia Ports

-- ------ --- ---------------------------------------------------

0 0 0 TenGigE0/7/0/3

1 0 0 TenGigE0/7/0/2

2 1 0 TenGigE0/7/0/1

3 1 0 TenGigE0/7/0/0

On an oversubscribed linecard, two TenGig interfaces can be connected to the same NP.

For 80G linecards such as the A9K-8T, you'll see 8 NPs listed.

For the 40-port 1GE linecard, you'll see ten 1-Gig interfaces connected to each NP; note that this is not oversubscription.
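If you collect this output in a script, the interface-to-NP mapping can be pulled out with a few lines of Python. This is a minimal sketch that assumes the row layout of the sample above (NP, Bridge, Fia, then the port name); the `interface_to_np` helper is a hypothetical name, and the comma handling for several ports on one row is an assumption, since the sample only shows one port per NP.

```python
import re

# Sample rows copied from the output shown above.
SAMPLE = """\
NP Bridge Fia Ports
-- ------ --- ---------------------------------------------------
0 0 0 TenGigE0/7/0/3
1 0 0 TenGigE0/7/0/2
2 1 0 TenGigE0/7/0/1
3 1 0 TenGigE0/7/0/0
"""

# Three numeric columns (NP, Bridge, Fia) followed by the port text.
ROW = re.compile(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s+(\S.*)$")


def interface_to_np(text):
    """Map each interface name to the NP number listed on its row."""
    mapping = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if not match:
            continue  # header, separator, timestamp or blank line
        np_id = int(match.group(1))
        # Assumption: several ports on one row are comma separated.
        for port in match.group(4).split(","):
            mapping[port.strip()] = np_id
    return mapping


if __name__ == "__main__":
    for port, np_id in sorted(interface_to_np(SAMPLE).items()):
        print(f"{port} -> np{np_id}")
```

The NP number found this way is the one to pass to the show controller np counters command.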

With that info you can now view the controller statistics and some information as to what the controller is doing.

Note that not all counters in this command indicate a problem! Regular traffic counters are included as well.

A simple rate mechanism keeps shadow counts from the previous invocation of the command. The next time the command is run, deltas are taken and divided by the elapsed time to provide rate counts.

To get accurate rate counts right away, run the command twice the first time, with a 2 to 3 second interval in between.
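The rate calculation described here is plain delta-over-time arithmetic. The sketch below reproduces it off-box for two counter snapshots; `rates_pps` is a hypothetical helper, the second snapshot value is invented for the example, and counter wrap is deliberately not handled.

```python
def rates_pps(previous, current, elapsed_seconds):
    """Return packets per second for each counter between two snapshots.

    previous/current map counter names to frame counts; a counter missing
    from the previous snapshot is treated as having been zero.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return {
        name: (value - previous.get(name, 0)) / elapsed_seconds
        for name, value in current.items()
    }


if __name__ == "__main__":
    # First value is from the sample output in this article;
    # the second value, 3 seconds later, is made up for illustration.
    first = {"PARSE_ENET_RECEIVE_CNT": 51047}
    second = {"PARSE_ENET_RECEIVE_CNT": 51053}
    print(rates_pps(first, second, elapsed_seconds=3))
```

This also shows why the very first run cannot give a meaningful rate: without an earlier snapshot there is nothing to take a delta against.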

The command output may look similar to this:

RP/0/RSP1/CPU0:A9K-BOTTOM#show controller np count np1 loc 0/7/CPU0

Node: 0/7/CPU0:

----------------------------------------------------------------

Show global stats counters for NP1, revision v3

Read 30 non-zero NP counters:

Offset Counter FrameValue Rate (pps)

-------------------------------------------------------------------------------

22 PARSE_ENET_RECEIVE_CNT 51047 1

23 PARSE_FABRIC_RECEIVE_CNT 35826 0

30 MODIFY_ENET_TRANSMIT_CNT 36677 0

31 PARSE_INGRESS_DROP_CNT 1 0

34 RESOLVE_EGRESS_DROP_CNT 628 0

40 PARSE_INGRESS_PUNT_CNT 3015 0

41 PARSE_EGRESS_PUNT_CNT 222 0

....
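For offline analysis it can help to turn this output into a dictionary keyed by counter name. The following is a minimal sketch that assumes the four-column layout shown above (offset, counter name, frame value, rate); `parse_np_counters` is a hypothetical helper, not a Cisco tool.

```python
import re

# Counter rows copied from the sample output above.
SAMPLE = """\
Show global stats counters for NP1, revision v3
Read 30 non-zero NP counters:
Offset Counter FrameValue Rate (pps)
-------------------------------------------------------------------------------
22 PARSE_ENET_RECEIVE_CNT 51047 1
23 PARSE_FABRIC_RECEIVE_CNT 35826 0
30 MODIFY_ENET_TRANSMIT_CNT 36677 0
31 PARSE_INGRESS_DROP_CNT 1 0
34 RESOLVE_EGRESS_DROP_CNT 628 0
40 PARSE_INGRESS_PUNT_CNT 3015 0
41 PARSE_EGRESS_PUNT_CNT 222 0
"""

# offset, counter name (no spaces), frame value, rate in pps
ROW = re.compile(r"^\s*(\d+)\s+(\S+)\s+(\d+)\s+(\d+)\s*$")


def parse_np_counters(text):
    """Return {counter_name: {"offset": int, "frames": int, "pps": int}}."""
    counters = {}
    for line in text.splitlines():
        match = ROW.match(line)
        if not match:
            continue  # banner, header, separator or blank line
        offset, name, frames, pps = match.groups()
        counters[name] = {
            "offset": int(offset),
            "frames": int(frames),
            "pps": int(pps),
        }
    return counters


if __name__ == "__main__":
    for name, values in parse_np_counters(SAMPLE).items():
        print(name, values["frames"], values["pps"])
```

Only rows that match the four-column pattern are kept, so banner and header lines are skipped without special handling.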

The meaning of these mnemonics is listed in the tables below:

1. Common Global Counters

| Counter Name | Counter Description |

| IPV4_SANITY_ADDR_CHK_FAILURES | Ingress IPV4 address martian check failure. Counted for 127.0.0.0/8 and 224.0.0.0/8 (not the whole of 224.0.0.0/4: 225.0.0.0/8 and 239.0.0.0/8 don't increment the error count). When the source address is in one of the following ranges, the ASR9K forwards the packet without any error: 0.0.0.0/8, 10.0.0.0/8, 128.0.0.0/16, 169.254.0.0/16, 172.16.0.0/12, 191.255.0.0/16, 192.0.0.0/24, 223.255.255.0/24, 240.0.0.0/4 |

| DROP_IPV4_LENGTH_ERROR | Ingress IPV4 header length invalid or frame length incompatible with fragment length |

| DROP_IPV4_CHECKSUM_ERROR | Ingress IPV4 checksum invalid |

| IPV6_SANITY_ADDR_CHK_FAILURES | Ingress IPV6 frame source or destination address invalid |

| DROP_IPV6_LENGTH_ERROR | Ingress IPV6 frame length invalid |

| MPLS_TTL_ERROR | Ingress MPLS frame outer label TTL is 0 |

| MPLS_EXCEEDS_MTU | MPLS TTL exceeded – punt frame to host |

| DROP_IPV4_NOT_ENABLED | Ingress IPV4 frame and IPV4 not enabled in L3 ingress intf struct |

| DROP_IPV4_NEXT_HOP_DOWN | Drop bit set in rx adjacency (ingress) or tx adjacency (egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – drop bit in non-recursive adjacency or adjacency result |

| IPV4_PLU_DROP_PKT | Drop bit set in leaf or non-recursive adjacency lookup miss (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface. |

| IPV4_RP_DEST_DROP | IPV4 RP drop bit set in non-recursive adjacency or adjacency result and ICMP punt disabled in intf struct (ingress/egress) for the interface |

| IPV4_NULL_RTE_DROP_PKT | No IPV4 route (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – null route set in leaf or recursive adjacency |

| DROP_IPV6_NOT_ENABLED | Ingress IPV6 frame and IPV6 not enabled in L3 ingress intf struct |

| DROP_IPV6_NEXT_HOP_DOWN | Drop bit set in rx adjacency (ingress) or tx adjacency (egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – drop bit in non-recursive adjacency or adjacency result |

| DROP_IPV6_PLU | Drop bit set in leaf or non-recursive adjacency lookup miss (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface. |

| IPV6_RP_DEST_DROP | RP drop bit set in non-recursive adjacency or adjacency result and ICMP punt disabled in intf struct (ingress/egress) for the interface |

| IPV6_NULL_RTE_DROP_PKT | No IPV6 route (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – null route set in leaf or recursive adjacency |

| MPLS_NOT_ENABLED_DROP | Ingress MPLS frame and MPLS not enabled in L3 ingress intf struct |

| MPLS_PLU_NO_MATCH | Ingress MPLS frame and outer label lookup miss and not a VCCV frame associated with a pseudo-wire |

| MPLS_PLU_DROP_PKT | Ingress MPLS frame and label lookup results return a NULL route, or have no forwarding control bits set, OR one of the following: 1) Next hop down – Drop bit set in rx adjacency (ingress) or tx adjacency (egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – drop bit in non-recursive adjacency or adjacency result 2) Leaf drop - Drop bit set in leaf or non-recursive adjacency lookup miss (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface. 3) RP drop - RP drop bit set in non-recursive adjacency or adjacency result and ICMP punt disabled in intf struct (ingress/egress) for the interface 4) No route - No route (ingress/egress) and ICMP punt disabled in intf struct (ingress/egress) for the interface – null route set in leaf or recursive adjacency 5) Punt bit set in leaf results or recursive adjacency results – egress mpls punt not supported |

| MPLS_UNSUPP_PLU_LABEL_TYPE | Ingress MPLS frame outer label is 15 or less and not a router alert or null tag. |

| DEAGG_PKT_NON_IPV4 | Ingress MPLS frame outer label lookup control bits are L3VPN deagg. And the payload is not IPV4. |
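As a worked example of the IPV4_SANITY_ADDR_CHK_FAILURES row in the table above, the sketch below encodes only what that row states: addresses in 127.0.0.0/8 and 224.0.0.0/8 are counted, everything else is not. It is an illustration of the table text, not of the actual microcode, and treating the checked field as the source address is an assumption. `increments_sanity_counter` is a hypothetical name.

```python
import ipaddress

# Ranges the table row lists as incrementing the counter.
COUNTED_RANGES = [
    ipaddress.ip_network("127.0.0.0/8"),
    ipaddress.ip_network("224.0.0.0/8"),
]


def increments_sanity_counter(source):
    """Return True if the address falls in a range the table row lists as counted."""
    address = ipaddress.ip_address(source)
    return any(address in network for network in COUNTED_RANGES)


if __name__ == "__main__":
    for candidate in ("127.0.0.1", "224.0.0.5", "239.1.1.1", "10.1.1.1"):
        print(candidate, increments_sanity_counter(candidate))
```

Note that 239.1.1.1 is not counted in this sketch, matching the row's remark that the check covers 224.0.0.0/8 rather than the whole 224.0.0.0/4 multicast range.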

2. NP debug and drop counters

- Bulk Forwarding Counts

- Inject/Punt type counts

- Main Drop counts

- QOS/ACL drop counts

| Counter Name | Counter Description |

| PARSE_ENET_RECEIVE_CNT | Total frames received from Ethernet on an ingress NP |

| PARSE_FABRIC_RECEIVE_CNT | Total frames received by an egress NP from the fabric – Note that this currently includes loopback frames |

| MODIFY_FABRIC_TRANSMIT_CNT | Total frames sent to fabric by an ingress NP |

| MODIFY_ENET_TRANSMIT_CNT | Total frames sent to Ethernet by an egress NP |

| PARSE_LOOPBACK_RECEIVE_CNT | Total loopback frames received by an NP – Note that these are egress loopback frames only and do not include ingress loopback frames such as Interflex |

| PARSE_INTERFLEX_RECEIVE_CNT | Total frames received by an NP from the interflex loopback port – these are ingress loopback frames |

| MODIFY_FRAMES_PADDED_CNT | Total number of frames sent to fabric that were undersized and required padding to 60 bytes. |

| MODIFY_RX_SPAN_CNT | Ingress frames sent to fabric on a SPAN link – these frames are replicated |

| MODIFY_TX_SPAN_CNT | Egress frames sent to Ethernet on a SPAN link – these frames are replicated |

| MODIFY_LC_PUNT_CNT | Total frames punted to LC host (all punts are handled by the modify engine) |

| MODIFY_RP_PUNT_CNT | Total frames punted to RP host (all punts are handled by the modify engine) |

| MODIFY_PUNT_DROP_CNT | Total punt packets dropped by ingress modify engine due to punt policing, OR due to punt reason lookup fail. |

| MODIFY_LC_PUNT_EXCD_CNT | Punt frames to LC dropped due to punt policing |

| MODIFY_RP_PUNT_EXCD_CNT | Punt frames to RP dropped due to punt policing |

| PARSE_INGRESS_DROP_CNT | Total packets dropped by ingress NP in the parse engine |

| PARSE_EGRESS_DROP_CNT | Total packets dropped by egress NP in the parse engine |

| RESOLVE_INGRESS_DROP_CNT | Total packets dropped by ingress NP in the resolve engine |

| RESOLVE_EGRESS_DROP_CNT | Total packets dropped by egress NP in the resolve engine |

| MODIFY_INGRESS_DROP_CNT | Total packets dropped by ingress NP in the modify engine |

| MODIFY_EGRESS_DROP_CNT | Total packets dropped by egress NP in the modify engine |

| PARSE_INGRESS_PUNT_CNT | Total packets flagged for punting by the ingress NP in the parse engine |

| PARSE_EGRESS_PUNT_CNT | Total packets flagged for punting by the egress NP in the parse engine |

| RESOLVE_INGRESS_L3_PUNT_CNT | Total Layer3 packets flagged for punting by an ingress NP in the resolve engine due to lookup results or other cases such as ICMP unreachable |

| RESOLVE_INGRESS_IFIB_PUNT_CNT | Total Layer3 packets flagged for punting due to an ingress IFIB lookup match |

| RESOLVE_INGRESS_L2_PUNT_CNT | Total Layer2 packets flagged for punting by the ingress NP in the resolve engine due to cases such as IGMP/DHCP snooping or punting of ingress L2 protocol such as LAG or MSTP or CFM |

| RESOLVE_EGRESS_L3_PUNT_CNT | Total Layer3 packets flagged for punting by an egress NP in the resolve engine due to lookup results or other cases such as ICMP unreachable |

| RESOLVE_EGRESS_L2_PUNT_CNT | Total Layer2 packets flagged for punting by the egress NP in the resolve engine due to cases such as IGMP/DHCP snooping or punting of ingress L2 protocol such as MSTP or CFM |

| PARSE_LC_INJECT_TO_FAB_CNT | Frames injected from LC host that are intended to go directly to fabric to an egress LC without modification or protocol handling (inject header is stripped by microcode) |

| PARSE_LC_INJECT_TO_PORT_CNT | Frames injected from LC host that are intended to go directly to an Ethernet port without modification or protocol handling (inject header is stripped by microcode) |

| PARSE_FAB_INJECT_IPV4_CNT | Total number of IPV4 inject frames received by the egress NP from the fabric (sent from RP) |

| PARSE_FAB_INJECT_PRTE_CNT | Total number of layer 3 preroute inject frames received by the egress NP from the fabric (sent from RP) |

| PARSE_FAB_INJECT_UNKN_CNT | Total number of unknown inject frames received by the egress NP from the fabric (sent from RP). These are punted to the LC host. |

| PARSE_FAB_INJECT_SNOOP_CNT | Total number of IGMP/DHCP snoop inject frames received by the egress NP from the fabric (sent from RP). For DHCP, these are always re-injects from the RP. For IGMP, these can be re-injects or originated injects from the RP. |

| PARSE_FAB_INJECT_MPLS_CNT | Total number of mpls inject frames received by the egress NP from the fabric (sent from RP). |

| PARSE_FAB_INJECT_IPV6_CNT | Total number of IPV6 inject frames received by the egress NP from the fabric (sent from RP) |

| INJECT_EGR_PARSE_PRRT_NEXT_HOP | Inject preroute frames received from the fabric that are next hop type. |

| INJECT_EGR_PARSE_PRRT_PIT | Inject preroute frames received from the fabric that are PIT type . |

| L3_EGR_RSV_PRRT_PUNT_TO_LC_CPU | Inject preroute frames that have a PIT table lookup miss. These are punted to the LC host. |

| L2_INJECT_ING_PARSE_INWARD | Total number of ingress CFM injects received from the LC host that are intended to be sent to the fabric. These can either be bridged or pre-routed to a specific NP. |

| CFM_L2_ING_PARSE_BRIDGED | Total number of ingress CFM injects received from the LC host that are intended to be bridged and sent to the fabric. The bridge lookups are done for these inject frames resulting in unicast or flood traffic. |

| L2_INJECT_ING_PARSE_PREROUTE | Total number of ingress CFM injects received from the LC host that are intended to be pre-routed to a specific output port on an egress NP and sent to the fabric. The bridge lookups are not done for these inject frames. |

| L2_INJECT_ING_PARSE_EGRESS | Total number of ingress CFM injects received from the LC host that are intended to be sent to a specific output port on an egress NP. |

| INJ_UNKNOWN_TYPE_DROP | Unknown ingress inject received from the LC host – these are dropped. |

| STATS_STATIC_PARSE_INTR_AGE_CNT | Total Number of Parse Interrupt frames associated with learned MAC address aging. |

| STATS_STATIC_PARSE_INTR_STATS_CNT | Total Number of Parse Interrupt frames associated with statistics gathering. |

| PARSE_VIDMON_FLOW_AGING_SCAN_CNT | Total Number of Parse Interrupt frames associated with video monitoring flow scanning. |

| UNSUPPORTED_INTERRUPT_FRAME | Unsupported interrupt frame type received on NP. Supported types are for aging and statistics only. |

| RESOLVE_BAD_EGR_PRS_RSV_MSG_DROP_CNT | Invalid parse engine to resolve engine message received for an egress frame – this is likely a software implementation error. |

| RESOLVE_BAD_ING_PRS_RSV_MSG_DROP_CNT | Invalid parse engine to resolve engine message received for an ingress frame – this is likely a software implementation error. |

| EGR_UNKNOWN_PAK_TYPE | A frame received from the fabric by an egress NP has an unknown NPH header type – these are dropped. This is likely a software implementation error. |

| EGR_LB_UNKNOWN_PAK_TYPE | A frame received from the egress loopback queue by an egress NP has an unknown NPH header type – these are dropped. This is likely a software implementation error. |

| XAUI_TRAINING_PKT_DISCARD | These are diag packets sent to the egress NP at startup from the fabric FPGA. These are ignored and dropped by software. |

| PRS_DEBUGTRACE_EVENT | This counter increments whenever a preconfigured debug trace event matches on an ingress or egress frame. Currently the debug trace is only configurable for all drop events. |

| IN_INTF STRUCT_TCAM_MISS | Ingress frame with a pre-parse TCAM lookup miss – these are dropped. This is likely due to a TCAM setup problem. |

| IN_INTF STRUCT_NO_ENTRY | Ingress frame with a pre-parse TCAM index that is invalid – these are dropped. This is likely due to a TCAM setup problem |

| IN_INTF STRUCT_DOWN | Ingress frame with an intf struct entry with down status – these are dropped, with the exception of ingress LACP frames on a subinterface. |

| OUT_INTF STRUCT_INTF STRUCT_DOWN | Egress Layer3 frame with an egress INTF STRUCT that has line status down, and ICMP punt is disabled in the intf struct. |

| IN_L2_INTF STRUCT_DROP | Ingress Layer2 frame on a subinterface with the L2 intf struct drop bit set. |

| ING_VLAN_FILTER_DISCARD | A VLAN tagged packet has an intf struct index that points to a default layer3 intf struct – these are dropped. Only untagged packets can be set up on a default layer3 intf struct. |

| IN_FAST_DISC | An ingress frame was dropped due to the early discard feature. This can occur at a high ingress traffic rate, depending on the configuration. |

| UNKNOWN_L2_ON_L3_DISCARD | Unknown Layer2 frame (non-IPV4/V6/MPLS) on a Layer3 interface. These are dropped. Known layer2 protocols are punted on a layer3 interface such as ARP/LACP/CDP/ISIS/etc. |

| L3_NOT_MYMAC | A layer3 unicast IPV4/IPV6 frame does not have the destination mac address of the router, or any mac address defined in the VRRP table. These are dropped. |

| IPV4_UNICAST_RPF_DROP_PKT | With RPF enabled, an ingress IPV4 frame has a source IP address that is not permitted, or not permitted on the ingress interface – these are dropped. |

| RESOLVE_L3_ING_PUNT_IFIB_DROP | Ingress frame has TTL of 0, or discard BFD frame if BFD not enabled |

| MPLS_VPN_SEC_CHECK_FAIL | An ingress MPLS frame does not have the proper VPN security code, and IPV4 is not using the global routing table. |

| MPLS_EGR_PLU_NO_MATCH | MPLS frame outer label lookup on egress NP does not match – these are dropped. |

| MPLS_EGR_PLU_DROP_PKT | MPLS frame outer label lookup on egress NP has a result with the drop bit set. |

| RESOLVE_EGR_LAG_NOT_LOCAL_DROP_CNT | An egress frame hashed to a bundle interface selects a bundle member that is not local to the NP – these are dropped. This can be due to a bad hash, or a bad bundle member index. It can also occur normally for floods or multicast – non-local members are dropped for each copy that is a bundle output. |

| RESOLVE_L3_ING_ERROR_DROP_CNT | L3 ingress protocol handling in the resolve engine falls through to an invalid case – these are dropped. This would occur due to a software implementation error. |

| RESOLVE_L3_EGR_ERROR_DROP_CNT | L3 egress protocol handling in the resolve engine falls through to an invalid case – these are dropped. This would occur due to a software implementation error. |

| ING_QOS_INVALID_FORMAT | An ingress packet has a QOS format value in the ingress intf struct that is not valid. Only checked if QOS is enabled in the ingress intf struct. Valid formats are 20/3/2/1/0. |

| RESOLVE_L3_ING_ACL_DENY_DROP_CNT | Total number of ingress Layer3 frames dropped due to an ACL deny policy. |

| RESOLVE_L3_EGR_ACL_DENY_DROP_CNT | Total number of egress Layer3 frames dropped due to an ACL deny policy. |

| RESOLVE_L2_ING_ACL_DENY_DROP_CNT | Total number of ingress Layer2 frames dropped due to an ACL deny policy. |

| RESOLVE_L2_EGR_ACL_DENY_DROP_CNT | Total number of egress Layer2 frames dropped due to an ACL deny policy. |

| RESOLVE_L2L3_QOS_C_DROP_CNT | Total number of ingress/egress frames dropped due to a child policing policy. |

| RESOLVE_L2L3_QOS_P_DROP_CNT | Total number of ingress/egress frames dropped due to a parent policing policy. |
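The six main drop counts in the table above follow an ENGINE_DIRECTION_DROP_CNT naming pattern, which makes it easy to see at a glance in which direction and engine packets are being dropped. The sketch below groups them that way; `drops_by_stage` is a hypothetical helper and the input is simply a mapping of counter names to frame counts, here filled with values from the sample output earlier in this article.

```python
import re

# Matches only the six per-engine totals listed under "Main Drop counts".
STAGE_DROP = re.compile(r"^(PARSE|RESOLVE|MODIFY)_(INGRESS|EGRESS)_DROP_CNT$")


def drops_by_stage(counters):
    """Return {(direction, engine): frames} for non-zero main drop counters."""
    summary = {}
    for name, frames in counters.items():
        match = STAGE_DROP.match(name)
        if match and frames > 0:
            engine, direction = match.groups()
            summary[(direction.lower(), engine.lower())] = frames
    return summary


if __name__ == "__main__":
    sample = {
        "PARSE_ENET_RECEIVE_CNT": 51047,
        "PARSE_INGRESS_DROP_CNT": 1,
        "RESOLVE_EGRESS_DROP_CNT": 628,
        "PARSE_INGRESS_PUNT_CNT": 3015,
    }
    for (direction, engine), frames in drops_by_stage(sample).items():
        print(f"{direction} {engine} engine: {frames} frames dropped")
```

These totals only tell you where drops happen; the specific reason is given by the more detailed counters in the other tables.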

Layer3 Multicast related counts

These include:

- IP mcast forwarding counts

- Video Monitoring counts

- Multicast Fast Reroute counts

| Counter Name | Counter Description |

| IPV4_MCAST_NOT_ENABLED | Ingress IPV4 frames dropped due to ipv4 multicast not enabled. The ipv4 multicast enable check may be ignored for non-routable IGMP frames. Also, the ipv4 multicast enable check does not apply to well known multicast frames. |

| IPV6_MCAST_UNSUPPORTED_DROP | Ingress IPV6 multicast frame and IPV6 multicast not supported in the current release |

| RESOLVE_IPM4_ING_RTE_DROP_CNT | Ingress IPV4 multicast frame route lookup fails with no match. |

| RESOLVE_IPM4_EGR_RTE_DROP_CNT | Egress IPV4 multicast frame route lookup fails with no match. |

| RESOLVE_IPM4_NO_OLIST_DROP_CNT | Egress IPV4 multicast frame has zero olist members – no copies are sent to output ports. |

| RESOLVE_IPM4_OLIST_DROP_CNT | Egress IPV4 multicast frame has olist drop bit set (no copies are sent to output ports), OR egress IPV4 multicast frame olist lookup fails. Olist lookup failure could be due to a bad copy number, or a missing entry for that copy. |

| RESOLVE_IPM4_FILTER_DROP_CNT | Egress IPV4 multicast frame copy is reflection filtered – copies to the original source port are dropped. |

| RESOLVE_IPM4_TTL_DROP_CNT | Egress IPV4 multicast frame copy fails TTL check. The frame TTL is beyond the programmed threshold. |

| MODIFY_RPF_FAIL_DROP_CNT | Ingress IPV4 multicast frames dropped due to reverse-path-forwarding check failed (to prevent looping or duplicated packets); these multicast frames came in from wrong interface. |

| RESOLVE_VIDMON_FLOWS_DELETED_BY_AGING | Inactive Video Monitoring flows deleted by hardware aging mechanism. |

| RESOLVE_VIDMON_CLASS_FLOW_LIMIT_REACHED | Indicates that the Video Monitoring Maximum flows per class limit reached. |

| RESOLVE_VIDMON_FLOW_START_INTRVL_RESET | Video Monitoring flow start interval reset to current Real Time Clock Tick (instead of updating with actual next packet arrival time) because the flow has been inactive for more than 3 interval timeouts. |

| VIDMON_PUNT_FAILED_NO_TXBUF_CNT | Punt frames dropped by Video Monitoring due to no buffer |

| PARSE_MOFRR_WATCHDOG_INTR_RCVD | L3 Multicast Fast Reroute Watchdog interrupts received by nP. The count is summation of both Activity and Loss interrupt counts. |

| PARSE_MOFRR_SWITCH_MSG_RCVD_FROM_FAB | Number of L3 Multicast Fast Reroute Switch (Flood) messages received by nP from Fabric. |

| RESOLVE_MOFRR_HRT_LKUP_FAIL_DROP | Number of watchdog interrupts dropped because of an error in the MoFRR Hash Table lookup. The watchdog interrupt (Activity or Loss) was received, but microcode could not process it further because the MoFRR hash lookup failed. This counter indicates an error. |

| RESOLVE_MOFRR_WDT_INVALID_RESULTS_DROP_CNT | Number of watchdog interrupts dropped because of an error in the Watch Dog counter hash table lookup. The watchdog interrupt (Activity or Loss) was received, but microcode could not process it further because the lookup failed. This counter indicates an error. |

| RESOLVE_MOFRR_SWITCH_MSG_HRT_LKUP_FAIL_DROP | Number of MoFRR switch messages dropped because of a MoFRR Hash Table lookup failure. The switch message was received from the fabric, but microcode could not process it further because the MoFRR hash lookup failed. This counter indicates an error and could be traffic disruptive. |

| RESOLVE_MOFRR_SWITCH_MSG_SEQNUM_MISMATCH_DROP | Number of MoFRR switch messages dropped because of a sequence number mismatch. The switch message was received from the fabric, but microcode could not process it further because the sequence number received from the ingress LC does not match the sequence number for the same route on the egress LC. This counter indicates a programming error, race condition or hash result corruption in microcode. This is unusual and could be traffic disruptive. |

| RESOLVE_MOFRR_HASH_UPDATE_CNT | Number of times MoFRR active flag is updated in MoFRR hash table. This counter indicates the switch message is processed successfully, i.e. RDT/Hash/Direct table lookups are successful and MoFRR Active flag is updated in MoFRR hash table. |

| RESOLVE_MOFRR_SWITCH_MSG_INGNORED | Total number of MoFRR switch (flood) messages filtered without being punted to the LC CPU. The switch message was processed successfully (i.e. lookups succeeded and the MoFRR flag was updated in the hardware hash table), but it was not punted to the LC CPU because the RPF Id is either not primary or not local to the nP. Note that this counter is informative and does not necessarily mean an error. |

| RESOLVE_MOFRR_SWITCH_MSG_TO_FAB | Number of MoFRR switch (flood) messages generated by nP as a result of Loss Activity interrupts. This counter indicates that the Loss Activity interrupt is processed successfully by ingress LC and MoFRR switch message is flooded to all LCs towards fabric. |

| RESOLVE_MOFRR_EGR_RPF_FAIL_DROP | IP Multicast MoFRR enabled packets dropped at egress LC because of RPF check failure. |

Layer 2 protocol Debug Counters

These include:

- VPLS counts

- VPWS counts

- DHCP/IGMP snooping counts

- CFM protocol counts

- VPLS Learning/Aging related counts

| Counter Name | Counter Description |

| IN_L2_BLOCKED | Ingress L2 frames dropped and not forwarded due to ingress interface in spanning tree blocked state. |

| OUT_L2_BLOCKED | Egress L2 frames dropped and not forwarded due to egress interface in spanning tree blocked state. |

| IN_DOT1Q_VTP_FILTERED | Ingress VTP frames dropped due to dot1q filtering enabled. |

| IN_DOT1Q_DROP | Ingress 802.1q frames dropped due to dot1q filtering enabled. |

| IN_DOT1AD_DROP | Ingress 802.1ad frames dropped due to dot1ad filtering enabled. |

| ING_SLOW_PROTO_UNKNOWN_SUBTYPE | Ingress slow protocol frame on an L3 interface that is not recognized (not LACP/EFM/SYNCE). |

| IN_ROUTER_GUARD_DROP | Ingress Layer2 IGMP or PIM dropped due to Router Guard with IGMP snooping enabled. |

| IN_DHCP_UNTRUSTED_DROP | Ingress DHCP frame dropped due to the untrusted DHCP bit set in the ingress L2 intf struct. |

| RESOLVE_L2_DHCP_SNOOP_UNTRUSTED_DROP_CNT | Egress DHCP frame dropped due to the untrusted DHCP bit set in the egress L2 intf struct. |

| ING_DHCP_PW_DROP | Ingress DHCP frame from AC that is destined for a PW and is eligible for snooping due to the DHCP snoop enabled bit set in the L2 ingress INTF STRUCT. This is dropped on ingress due to an L2 unicast to PW. DHCP snooping not supported for AC to PW frames. |

| EGR_DHCP_PW_DROP | Ingress DHCP frame from AC that is destined for a PW and is eligible for snooping due to the DHCP snoop enabled bit set in the L2 ingress INTF STRUCT. This is dropped on egress due to an L2 flood with a PW member. DHCP snooping not supported for AC to PW frames. |

| L2VPN_DEAGG_NON_VPWS_VPLS_DROP | Invalid setting for L2VPN deaggregation set in Label UFIB lookup result for ingress MPLS frame. This is most likely due to a programming error in the Label UFIB entry. |

| XID_ZERO_DISCARD | Ingress VPWS XC to AC frame has a zero destination XID stored in the L2 ingress INTF STRUCT – zero XID index is invalid. |

| XC2AC_NOMATCH_DROP | Egress VPWS XC to AC frame XID lookup fails. This is caused by an invalid XID index or missing XID entry. |

| L2UNIC_NOMATCH_DROP | Egress VPLS AC to AC frame XID lookup fails. This is caused by an invalid XID index or missing XID entry. This can also occur on an IGMP snoop originated inject to an AC/PW/Bundle with invalid XID in the inject feature header. |

| L2FLOOD_NOMATCH_DROP | Egress VPLS flood copy lookup in the flood member table fails. This could be caused by an invalid copy number or missing entry in the flood member table for that copy. |

| L2FLOOD_SRCDST_DROP | Egress VPLS flood copy is reflection filtered – copies to the original source port are dropped. |

| L2FLOOD_INACTIVE_MEMBER_DROP | Egress VPLS flood copy lookup in the flood member table returns an entry that’s not active (active bit in result is cleared). |

| L2FLOOD_EGR_XID_NO_MATCH | Egress VPLS flood copy XID lookup fails – XID stored in the OLIST result is invalid or entry is missing. This can occur if the copy is intended to go over a PW and the PW is down. |

| EGR_AC_PW_MISMATCH | Egress VPWS XC to AC XID lookup returns a PW type. For VPWS, PW entry is always on ingress. Note that for VPLS AC to AC, a PW type XID is allowed in order to support IGMP injects to a PW. |

| RESOLVE_VPWS_LAG_BIT_DROP_CNT | An ingress VPWS XC to Bundle frame with a LAG table lookup fail. This is due to programming error where the LAG table pointer is invalid or no LAG table entry. |

| RESOLVE_L2_SXID_MISS_DROP_CNT | An ingress VPLS frame with a source XID lookup fail. This can be due to an invalid source XID programmed in the L2 ingress intf struct, or a missing source XID entry. |

| RESOLVE_VPLS_SPLIT_HORIZON_DROP_CNT | An ingress or egress VPLS frame that is dropped due to a split horizon group mismatch. Note that the split horizon group check is skipped for zero split horizon group indexes. |

| RESOLVE_VPLS_REFLECTION_FILTER_DROP_CNT | An ingress unicast VPLS frame that has a source XID that matches its destination XID. These are dropped since it cannot be sent back to the source port. |

| RESOLVE_VPLS_MAC_FILTER_DROP_CNT | An ingress VPLS frame has a source or destination MAC with the filter bit set in the L2FIB result – these are dropped. |

| RESOLVE_VPLS_ING_BD_STR_MISS_DROP_CNT | An ingress VPLS frame that is dropped due to a bridge domain lookup fail. |

| RESOLVE_VPLS_EGR_BD_STR_MISS_DROP_CNT | An egress VPLS flood frame or mac notify frame that is dropped due to a BD structure lookup miss. |

| RESOLVE_VPLS_FLOOD_BLOCK_DROP_CNT | An Ingress VPLS frame that is dropped due to its associated flood block bit being set: Unknown Unicast or Multicast. |

| RESOLVE_VPLS_STORM_DROP_CNT | An ingress VPLS frame that is dropped due to rate exceed for its associated flood storm control – Unknown Unicast or Multicast or Broadcast. |

| RESOLVE_VPLS_EGR_FLD_NO_MEMBERS_DROP_CNT | Egress L2 flood frame has zero members – no copies are sent to output ports. |

| RESOLVE_L2_EGR_INTF STRUCT_MISS_DROP_CNT | An egress VPLS unicast or flood copy frame to an AC or Bundle that is dropped due to an egress L2 INTF STRUCT lookup miss. This is due to an invalid INTF STRUCT index in the XID or a missing egress L2 INTF STRUCT entry. This can also occur with L2 multicast forwarded frames or L2 multicast inject frames. |

| RESOLVE_L2_EGR_PW_INTF STRUCT_MISS_DROP_CNT | An egress VPLS unicast or flood copy frame to a PW that is dropped due to an egress L2 INTF STRUCT lookup miss. This is due to an invalid INTF STRUCT index in the XID or a missing egress L2 INTF STRUCT entry. This can also occur if the PW is down, or with L2 multicast forwarded frames or L2 multicast inject frames to a PW. |

| RESOLVE_VPWS_L2VPN_LDI_MISS_DROP_CNT | An ingress VPWS or VPLS unicast frame to a PW with an L2VPN LDI table lookup fail. This can be due to an invalid L2VPN LDI index in the XID, or a missing entry in the L2VPN LDI table. |

| RESOLVE_VPWS_RP_DROP_CNT | An ingress VPWS or VPLS unicast frame to a PW with the RP drop bit or RP dest. bit set in the NON-RECURSIVE ADJACENCY or RX-ADJ lookup results. Punts are not supported for an L2 PW adjacency. |

| RESOLVE_VPWS_NULL_ROUTE_DROP_CNT | An ingress VPWS or VPLS unicast frame to a PW with the Null Route bit or IFIB punt bit set in the Leaf lookup or RECURSIVE ADJACENCY lookup results. Punts are not supported for an L2 PW adjacency. |

| RESOLVE_VPWS_LEAF_DROP_CNT | An ingress VPWS or VPLS unicast frame to a PW with the Drop bit or Punt bit set in the Leaf lookup or RECURSIVE ADJACENCY lookup results. Punts are not supported for an L2 PW adjacency. |

| RESOLVE_VPLS_EGR_NULL_RTE_DROP_CNT | An egress VPLS flood frame copy to a PW with the Null Route bit set in the Leaf lookup or RECURSIVE ADJACENCY lookup results. Also includes IGMP snoop injects to a PW. Due to a software problem, this may also get incremented due to a BD structure lookup miss on egress flood. |

| RESOLVE_VPLS_EGR_LEAF_IFIB_DROP_CNT | An egress VPLS flood frame copy to a PW with the IFIB punt bit set in the Leaf lookup or RECURSIVE ADJACENCY lookup results. Punts are not supported for an L2 PW adjacency. Also includes IGMP snoop injects to a PW. |

| RESOLVE_VPLS_EGR_LEAF_PUNT_DROP_CNT | An egress VPLS flood frame copy to a PW with the Drop bit or Punt bit or Next Hop Down bit set in the Leaf lookup or RECURSIVE ADJACENCY or NON-RECURSIVE ADJACENCY lookup results. Punts are not supported for an L2 PW adjacency. Also includes IGMP snoop injects to a PW. |

| RESOLVE_VPLS_EGR_TE_LABEL_DROP_CNT | An egress VPLS flood frame copy to a PW with the Drop bit or Punt bit or Incomplete Adjacency bit set in the Tx adjacency or Backup Adjacency or NH Adjacency lookup results. Punts are not supported for an L2 PW adjacency. Also includes IGMP snoop injects to a PW. |

| RESOLVE_VPLS_EGR_PW_FLOOD_MTU_DROP_CNT | An egress VPLS flood frame copy to a PW that fails L3 MTU check. Punts are not supported for an L2 PW adjacency with MTU fail. Also includes IGMP snoop injects to a PW. |

| RESOLVE_VPLS_EGR_PW_FLOOD_INTF STRUCT_DOWN_DROP_CNT | An egress VPLS flood frame copy to a PW with egress INTF STRUCT in down state. Punts are not supported for an L2 PW adjacency with line status down. Also includes IGMP snoop injects to a PW. |

| RESOLVE_VPLS_EGR_PW_FLOOD_NO_NRLDI_DROP_CNT | An egress VPLS flood frame copy to a PW with a NON-RECURSIVE ADJACENCY lookup miss. Also includes IGMP snoop injects to a PW. |

| RESOLVE_INGRESS_LEARN_CNT | Total number of VPLS Source MAC learns on an ingress frame – learned locals. |

| PARSE_FAB_MACN_W_FLD_RECEIVE_CNT | Count of all frames received from fabric on egress LC with NPH L2 flood type and mac notify included (mac notify for ingress SA learn included with L2 flood) |

| PARSE_FAB_MACN_RECEIVE_CNT | Count of all frames received from fabric on egress LC with NPH mac notify flood type (mac notify for ingress SA learn). This includes: 1) forward mac notifies (from ingress LC), 2) reverse mac notifies (from egress LC in response to flood), 3) mac deletes (due to a mac move or port flush). |

| PARSE_FAB_DEST_MACN_RECEIVE_CNT | Count of all mac notify frames received from fabric on egress LC that are reverse mac notify type |

| PARSE_FAB_MAC_DELETE_RECEIVE_CNT | Count of all mac notify frames received from fabric on egress LC that are mac delete type |

| RESOLVE_LEARN_FROM_NOTIFY_CNT | Total number of VPLS Source MAC learns from mac notify received. |

| RESOLVE_VPLS_MAC_MOVE_CNT | Total number of source MAC moves detected as a result of an ingress learn local or mac notify receive learn. |

| RESOLVE_BD_FLUSH_DELETE_CNT | Total number of learned entries deleted due to a Bridge Domain Flush |

| RESOLVE_INGRESS_SMAC_MISS_CNT | Total number of ingress frames with source MACs that did not have a learned entry. These source MACs will be relearned if learned limits have not been exceeded, learning is enabled, and the address is unicast. |

| RESOLVE_VPLS_MCAST_SRC_MAC_DROP_CNT | Total number of ingress frames with source MACs that were not unicast. These frames are invalid and are dropped. Non-unicast source MACs cannot be learned. |

| RESOLVE_HALF_AGE_RELEARN_CNT | Total mac address from ingress frames that were relearned due to exceeding the half age period. These entries are refreshed to update the age timeout. |

| STATS_STATIC_PARSE_PORTFLUSH_CNT | Egress VPLS frame that has been detected as port flushed. Not valid if port based mac accounting is disabled. The frame will be dropped and mac delete indication sent to the bridge domain. |

| RESOLVE_PORT_FLUSH_DROP_CNT | Egress unicast frames dropped because the destination port has been flushed. For each such frame, a MAC delete frame is sent to the bridge domain to remove the destination address. |

| RESOLVE_PORT_FLUSH_DELETE_CNT | Count of learned MACs deleted due to their source port being flushed. Does not include MACs deleted due to MAC Delete message. |

| RESOLVE_PORT_FLUSH_AGE_OUT_CNT | VPLS MAC entries that have been port flushed as detected by the MAC aging scan. These frames are dropped. Also can occur if the frame XID lookup fails and the XID is global or learned local – XID has been removed. |

| RESOLVE_VPLS_LEARN_LIMIT_ACTION_DROP_CNT | Ingress VPLS frames dropped because a learn limit was exceeded – port based or bridge domain based learn limits. |

| RESOLVE_VPLS_STATIC_MAC_MOVE_DROP_CNT | Static MAC entries that are deleted because a MAC move was detected – these should never move. |

| RESOLVE_MAC_NOTIFY_CTRL_DROP_CNT | Total number of processed mac notify frames – forward or reverse mac notify – dropped after processing. |

| RESOLVE_MAC_DELETE_CTRL_DROP_CNT | Total number of processed mac delete frames – forward or reverse mac notify – dropped after processing. |

| RESOLVE_AGE_NOMAC_DROP_CNT | MAC scan aging message dropped due to an L2FIB lookup fail for the scanned mac entry. |

| RESOLVE_AGE_MAC_STATIC_DROP_CNT | MAC scan aging message dropped due to static MAC address for the scanned mac entry. |

| EGR_VLANOPS_DROP | Egress L2 frame without the proper number of vlans to do egress VLAN ops – the frame from the fabric needs at least one vlan to do a pop operation or replace operation. |

| EGR_PREFILTER_VLAN_DROP | Egress L2 frame that fails EFP pre-filter check – only valid if EFP filtering enabled – attached VLAN mismatch. |

| RESOLVE_EFP_FILTER_TCAM_MISS_DROP | Egress L2 frame that fails EFP filter TCAM – only valid if EFP filtering enabled – likely due to a TCAM missing entry due to a programming error. |

| RESOLVE_EFP_FILTER_MISS_MATCH_DROP | Egress L2 frame that fails EFP filter check – only valid if EFP filtering enabled – attached VLAN mismatch. |

| CFM_ING_PUNT | Ingress CFM frame punt count. |

| CFM_EGR_PUNT | Egress CFM frame punt count. |

| CFM_EGR_PARSE | Total egress Layer2 CFM frames processed by the NP – including egress CFM inject and egress CFM forwarded frames. |

| INJ_NO_XID_MATCH_DROP | Ingress CFM frame injects with an XID lookup fail – source XID for bridged injects, and dest. XID for pre-route injects – invalid XID index or missing entry. |

| EGR_CFM_XID_NOMATCH | Egress CFM frame inject with a dest. XID lookup fail – invalid XID index or missing entry. |

| EGR_CFM_PW_DROP | Egress CFM frame injected to a PW – not supported. |

| PARSE_INGRESS_L3_CFM_DROP_CNT | Ingress CFM frame on a layer 3 interface dropped due to CFM not enabled. |

| RESOLVE_VPLS_MC_ING_RTE_DROP_CNT | Ingress L2 multicast frame route lookup fails with no match. |

| RESOLVE_VPLS_MC_EGR_RTE_DROP_CNT | Egress L2 multicast frame route lookup fails with no match. |

| RESOLVE_VPLS_MC_NO_OLIST_DROP_CNT | Egress L2 multicast frame has zero olist members – no copies are sent to output ports. |

| EGR_L2MC_OLIST_NOMATCH | Egress L2 multicast frame has olist drop bit set (no copies are sent to output ports), OR egress L2 multicast frame olist lookup fails. Olist lookup failure could be due to a bad copy number, or a missing entry for that copy. |

| EGR_L2MC_SRCDST | Egress L2 multicast frame copy is reflection filtered – copies to the original source port are dropped. |

| EGR_L2MC_XID_NOMATCH | Egress L2 multicast frame copy XID lookup fails – XID stored in the OLIST result is invalid or entry is missing. This can occur if the copy is intended to go over a PW and the PW is down. |

| RESOLVE_L2_PROT_BYTE_ERROR_DROP_CNT | Invalid L2 protocol action set – this is an internal software programming error on an ingress or egress L2 frame if incremented. |

| RESOLVE_VPLS_ING_ERR_DROP_CNT | An ingress VPLS frame has an invalid processing type. This is likely an internal error in software. |

| RESOLVE_VPLS_EGR_STRUCT_MISS_DROP_CNT | Egress VPLS frame protocol handling falls through to an invalid case. This is likely a software implementation error. |

3. Punt Reason Counters

For each punt reason, there are 2 counters. The total number of frames attempted to be punted by microcode is the sum of these 2 counters.

- Actual punted

- Punt drops due to policing

If the punt reason lookup fails, software drops the packet and increments MODIFY_PUNT_DROP_CNT. A layer3 interface is either a router gateway or a default interface for untagged frames (or both).
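As a minimal sketch of how the two counters per punt reason combine, the snippet below adds the "actual punted" and "policed" (`_EXCD`) values to get the total attempted punts and the share that was policed. The function name `punt_summary` and the sample values are hypothetical and only illustrate the arithmetic; they are not taken from any router output.

```python
def punt_summary(punted: int, policed: int) -> dict:
    """Combine the two per-reason punt counters.

    punted  -- frames actually punted (e.g. the ARP counter)
    policed -- punt drops due to policing (e.g. the ARP_EXCD counter)
    """
    attempted = punted + policed  # total frames microcode tried to punt
    policed_pct = 100.0 * policed / attempted if attempted else 0.0
    return {
        "attempted": attempted,
        "punted": punted,
        "policed": policed,
        "policed_pct": policed_pct,
    }


# Hypothetical sample values for the ARP / ARP_EXCD counter pair.
summary = punt_summary(punted=9000, policed=1000)
print(summary["attempted"], summary["policed_pct"])
```

A non-zero policed percentage for a given reason suggests that the punt policer for that reason is being hit.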

| Counter Name | Counter Description |

| PUNT_INVALID PUNT_INVALID_DROP | Invalid Punt Reason – this should not occur. |

| PUNT_ALL PUNT_ALL_EXCD | Punt All Set – not implemented by software. |

| CDP CDP_EXCD | Punt Ingress CDP protocol frames – layer3 interface only. |

| ARP ARP_EXCD | Punt Ingress ARP protocol frames – layer3 interface only. |

| RARP RARP_EXCD | Punt Ingress Reverse ARP protocol frames – layer3 interface only. |

| CGMP CGMP_EXCD | Punt Ingress CGMP protocol frames – layer3 interface only. |

| LOOP LOOP_EXCD | Punt Ingress ELOOP protocol frames – layer3 interface only. |

| CFM_OTHER CFM_OTHER_EXCD | Ingress NP Punt or Egress NP Punt of CFM other packets (link trace or perf. mon.). Ingress punts can occur on a Layer2 or Layer3 interface. Egress punts occur on a Layer2 interface only. |

| CFM_CC CFM_CC_EXCD | Ingress NP Punt or Egress NP Punt of CFM CC packets (continuity check). Ingress punts can occur on a Layer2 or Layer3 interface. Egress punts occur on a Layer2 interface only. |

| DHCP_SNOOP_REQ DHCP_SNOOP_REQ_EXCD | Ingress Punt of DHCP snoop request frames – layer2 interface only and DHCP snooping enabled. |

| DHCP_SNOOP_REPLY DHCP_SNOOP_REPLY_EXCD | Ingress Punt of DHCP snoop reply frames – layer2 interface only and DHCP snooping enabled. |

| MSTP MSTP_EXCD | Ingress NP Punt or Egress NP Punt of MSTP frames. For Layer2 VPLS or Layer3, punt is on ingress frames only. For Layer3 VPWS, punt is on egress frames only. |

| MSTP_PB MSTP_PB_EXCD | Ingress NP Punt or Egress NP Punt of MSTP Provider Bridge frames. For Layer2 VPLS or Layer3, punt is on ingress frames only. For Layer3 VPWS, punt is on egress frames only. |

| IGMP_SNOOP IGMP_SNOOP_EXCD | Ingress punt of snooped IGMP or PIM frames – layer2 interface only and IGMP snooping enabled. |

| EFM EFM_EXCD | Ingress Punt of EFM Protocol frames – layer3 interface only. |

| IPv4_OPTIONS IPv4_OPTIONS_EXCD | Ingress Punt of IP frames with options – layer3 interface only – IPV4, IPV6. |

| IPV4_PLU_PUNT (IPV4_FIB_PUNT) IPV4_PLU_PUNT_EXCD | Ingress or Egress punt of IPV4 frames with punt flag set in Leaf results or RECURSIVE ADJACENCY results – layer3 interface only. |

| IPV4MC_DO_ALL IPV4MC_DO_ALL_EXCD | Ingress punt of IPV4 Mcast frames due to punt only set for route, OR Egress punt of IPV4 Mcast frames due to punt and forward bit set for mcast group member – layer3 interface only. IPV4 Mcast punts are not supported for egress route. |

| IPV4MC_DO_ALL_BUT_FWD IPV4MC_DO_ALL_BUT_FWD_EXCD | Ingress punt and forward of IPV4 Mcast frames due to punt only set for route, OR Egress punt and forward of IPV4 Mcast frames due to punt and forward bit set for mcast group member – layer3 interface only. IPV4 Mcast punts are not supported for egress route. This can also occur as a result of the RPF source interface check. |

| PUNT_NO_MATCH (PUNT_FOR_ICMP) PUNT_NO_MATCH_EXCD | An Ingress or Egress IPV4/IPV6 unicast frame punt due to ICMP Unreachable (i.e., next hop down). |

| IPV4_TTL_ERROR (TTL_EXCEEDED) IPV4_TTL_ERROR_EXCD | An Ingress IPV4 frame with a TTL of 1 is punted (TTL of 0 is dropped). |

| IPV4_DF_SET_FRAG_NEEDED_PUNT IPV4_DF_SET_FRAG_NEEDED_PUNT_EXCD | An egress IPV4 or MPLS frame with MTU exceeded is punted. |

| RP_PUNT RP_PUNT_EXCD | An ingress IPV4 or MPLS frame has the RP punt bit set in the NON-RECURSIVE ADJACENCY or RX adjacency. |

| PUNT_IFIB PUNT_IFIB_EXCD | An ingress IPV4 ucast or IPV6 frame has an IFIB lookup match resulting in an IFIB punt. |

| PUNT_ADJ PUNT_ADJ_EXCD | An ingress IPV4 ucast or MPLS frame has the adjacency punt bit set in the NON-RECURSIVE ADJACENCY or RX adjacency. An egress IPV4 ucast or MPLS frame has the adjacency punt bit set in the NON-RECURSIVE ADJACENCY or TX adjacency. |

| PUNT_UNKNOWN_IFIB PUNT_UNKNOWN_IFIB_EXCD | An ingress IPV4 ucast frame has the IFIB bit set in the LEAF or RECURSIVE ADJACENCY and the IFIB lookup does not get a match, OR an ingress MPLS frame has the IFIB bit set in the LEAF or RECURSIVE ADJACENCY. |

| PUNT_ACL_DENY PUNT_ACL_DENY_EXCD | Ingress or Egress frame has a deny action set in the ACL policy and ACL punt (instead of drop) is enabled. |

| PUNT_ACL_LOG PUNT_ACL_LOG_EXCD | Ingress or Egress frame has an ACL policy match and ACL logging is enabled. Layer3 frames only. |

| PUNT_ACL_LOG_L2 PUNT_ACL_LOG_L2_EXCD | Ingress or Egress frame has an ACL policy match and ACL logging is enabled. Layer2 frames only. |

| IPV6_LINK_LOCAL IPV6_LINK_LOCAL_EXCD | Ingress IPV6 frames received that are link local packets – these are punted – layer3 interface only. |

| IPV6_HOP_BY_HOP IPV6_HOP_BY_HOP_EXCD | Ingress IPV6 frames received that are hop by hop packets - these are punted – layer3 interface only. |

| IPV6_TTL_ERROR IPV6_TTL_ERROR_EXCD | Ingress IPV6 frames that have a TTL error – these are punted – layer3 interface only. |

| IPV6_PLU_PUNT IPV6_PLU_PUNT_EXCD | Ingress or Egress punt of IPV6 frames with punt flag set in Leaf results or RECURSIVE ADJACENCY results – layer3 interface only. |

| IPV6_PLU_RCV IPV6_PLU_RCV_EXCD | Not implemented by software. |

| IPV6_ROUT_HEAD IPV6_ROUT_HEAD_EXCD | Ingress IPV6 frames received that are routing header packets – these are punted – layer3 interface only. |

| IPV6_TOO_BIG IPV6_TOO_BIG_EXCD | Egress IPV6 frames that are too big – exceed MTU – these are punted – layer3 interface only. |

| IPV6_MISS_SRC IPV6_MISS_SRC_EXCD | Not implemented by software. |

| IPV6MC_DC_CHECK IPV6MC_DC_CHECK_EXCD | Not implemented by software. |

| IPV6MC_DO_ALL IPV6MC_DO_ALL_EXCD | Not implemented by software. |

| IPV6MC_DO_ALL_BUT_FWD IPV6MC_DO_ALL_BUT_FWD_EXCD | Not implemented by software. |

| MPLS_PLU_PUNT (MPLS_FIB_PUNT) MPLS_PLU_PUNT_EXCD | Ingress MPLS frame punted due to Punt set in the Leaf or RECURSIVE ADJACENCY, or an Ingress MPLS router alert frame – these are punted. |

| MPLS_FOR_US MPLS_FOR_US_EXCD | Ingress MPLS frame punted due to IFIB (for-us) set in the Leaf or RECURSIVE ADJACENCY. |

| PUNT_VCCV_PKT PUNT_VCCV_PKT_EXCD | Ingress MPLS frame that is a PW VCCV frame – must be a PWtoAC de-aggregation flow. |

| PUNT_STATISTICS PUNT_STATISTICS_EXCD | Statistics gathering frame punted to LC host – generated by statistics scanning machine. |

| NETIO_RP_TO_LC_CPU_PUNT NETIO_RP_TO_LC_CPU_PUNT_EXCD | Preroute egress inject frame received from the RP that is sent to the LC host. |

| MOFRR_PUNT MOFRR_PUNT_EXCD | IPV4 multicast-only fast reroute (MoFRR) punt frames. |

| VIDMON_PUNT VIDMON_PUNT_EXCD | IPV4 multicast video monitoring punt frames. |

| VIDMON_PUNT_FLOW_ADD VIDMON_PUNT_FLOW_ADD_EXCD | IPV4 multicast video monitoring new flow added punt frames. |

| PUNT_DBGTRACE | Debug Trace frames that are punted. |

| PUNT_SYNCE | Punt Ingress SYNCE protocol frames – layer3 interface only. |

Related Information

n/a

Xander Thuijs - CCIE #6775

Sr Tech Lead ASR9000


hi Xiufeng,

if you are running 5.3.3 or later check the two new NP drop troubleshooting features described in

/Aleksandar


Hello Xander,

I have been observing packet drops on the ASR platforms and the only details regarding these drops are stated in your write-up above. Is there any detailed writeup on these drops that I can refer to for better understanding?

Also I've noticed that on Ethernet bundle links (LAG), the aggregate of all bundle links for "Input Drops" is sometimes 4 times more than the aggregate of the individual TenGig links within the bundles. Is this a bug or is there an explanation for the difference?

Thank you

Faz


Faz,

see also https://supportforums.cisco.com/document/12927596/asr9000xr-troubleshooting-packet-drops-drops-np-microcode

regards,

Aleksandar


In the context of ICFDQ, what is considered network control and what is HP?

BRKSPG-2904 2017 eur says Network control, High (ToS/Exp/Vlan Cos/DSCP >= 6). Does it imply control = 7 and HP = 6? Or is network control based on protocol (isis, lacp and what else)?

Thanks.


In this context, the control protocols are:

L3: ICMP, IGMP, RSVP, OSPF, PIM, VRRP, etc.

L2: STP, LACP, LLDP, pause, ARP, PPPoE control, PTP, CFM, CLNS, etc.

Everything else with CoS, IP Prec, MPLS Exp of value 6 and 7 is considered high priority.
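The rule described above can be sketched roughly as follows. This is only an illustration of the decision order (protocol match first, then priority marking), not the actual microcode. The set below contains only the protocols named in this reply (the "etc." entries are not filled in), the names `CONTROL_PROTOCOLS` and `classify` are hypothetical, and the "low" result for everything else is an assumption.

```python
# Protocols named in the reply above; the real list is longer ("etc.").
CONTROL_PROTOCOLS = {
    # L3
    "ICMP", "IGMP", "RSVP", "OSPF", "PIM", "VRRP",
    # L2
    "STP", "LACP", "LLDP", "PAUSE", "ARP", "PPPOE_CONTROL", "PTP", "CFM", "CLNS",
}


def classify(protocol: str, priority_marking: int) -> str:
    """Return the assumed priority class for a frame.

    protocol         -- protocol label (hypothetical naming)
    priority_marking -- CoS, IP Precedence or MPLS Exp value (0..7)
    """
    if protocol.upper() in CONTROL_PROTOCOLS:
        return "network-control"
    if priority_marking >= 6:  # values 6 and 7
        return "high"
    return "low"  # assumption: everything else is low priority


print(classify("icmp", 0))  # control by protocol, regardless of marking
print(classify("tcp", 6))   # not a control protocol, but marked 6
print(classify("tcp", 0))
```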

hope this helps,

/Aleksandar


Hi Aleksandar,

This actually poses a problem, and I’ve been pointing this out with other vendors too: ICMP should not be considered NC. Ideally I’d like to be able to modify what ICU considers as NC, so that I can protect my border routers from any DDoS vectors.

Is there a command I can use please?

Thank you

adam

netconsultings.com

::carrier-class solutions for the telecommunications industry::


hi Adam,

there is no CLI to change the network control.

/Aleksandar


Hi Aleksandar,

Hmm that’s unfortunate, and I see other vendors struggling with this as well, can such a functionality be added please, so that you can stay ahead of the competition?

I appreciate the instruction set is very limited on these components but having a fully configurable “pre-classification” is a must in order to be able to protect what customer deems as an important traffic/packets in events when NPU is congested and ICFDQs are getting clogged.

Thank you

adam

netconsultings.com

::carrier-class solutions for the telecommunications industry::


hi Adam,

some ICMP packets are more important than others. It would be nice to differentiate between them, but in this critical part of the microcode we have to conserve the ucode cycles and can't take in new commands to fine tune the EFD further. It's something we should definitely look into in the new LC generations.

Btw, on Tomahawk the EFD happens in hardware. You need to run "sh controllers np fast-drop" to see whether EFD has dropped any packet (vs looking for PARSE_FAST_DISCARD_LOW_PRIORITY_DROP NP counters on Typhoon).

regards,

/Aleksandar


Hi Aleksandar,

Sorry for the late response,

Regarding the ICMP importance let’s just agree to disagree :)

It would be excellent to have a simple on/off switch next to each of these control protocol comparators, or to be able to define which of the protocols supported should fall into Control feeder queue, whichever eats less cycles.

Our testing suggests that on platforms with this problem, when enough ICMP traffic is sent to the NPU from the WAN side, it may overload the NPU and starve out all other traffic passing through, including traffic arriving at the NPU from Fabric. Traffic coming from Fabric to the NPU feeder queues will be marked as high priority at most (there are no control feeder queues in the from-fabric-to-NPU direction), while in this case the DoS traffic coming from WAN is Control, meaning it will always be prioritized even over traffic coming from Fabric. As a result, the Fabric side is going to be backpressured severely.

adam

netconsultings.com

::carrier-class solutions for the telecommunications industry::


adam, mind you this, when we are in EFD mode we need to make decisions quickly to free up the pipeline. if we customize that behavior with too many knobs and checks it slows down the pipeline's baseline performance as well as the ability to quickly determine whether to keep or drop the packet.

it does make sense to have icmp classified as low priority to help indicate with packet loss that there is an issue. however this behavior has been like this forever and it may be that folks have become reliant on this existing behavior. so to change it there needs to be a sound justification. not to mention the education and documentation effort it requires for understanding etc.

I find it somewhat unlikely that one could have 200Mpps of ICMP to starve the NPU. In addition, if "for-me", lpts will assist here also.

anyways, things are always possible, but certain things need a strong use case/justification making it worth the effort. I don't happen to see it right now.

xander


Hi Xander,

Looking at the headlines of some of the security related articles it seems that there’s an uptake in high Mpps DDoS attacks (some as large as 300Mpps).

And the actual Mpps budget of the NPU depends on average packet size and features enabled, all that combined and the DDoS attack doesn’t need to be in high hundreds Mpps to cause problems.

I take it that the overall pps budget of Tomahawk NPU is actually 2x200Mpps TX+RX (i.e. 200Mpps for WAN to Fabric & 200Mpps for Fabric to WAN).

One possible solution would be to divide the feeder queues (64 groups x 4 CoS queues) into separate/dedicated WAN and Fabric feeder queues, then WAN feeder queues getting full could trigger different flow-control/backpressure than Fabric feeder queues getting full. So that in case of DDoS coming from WAN, WAN side could be backpressured independently –not affecting traffic coming from Fabric to the NPU (scoping the impact).

Or even better setting the depth of these feeder queues right could limit the impact

(e.g. sum of WAN Control-priority feeder queues couldn’t take over the whole Mpps budget of the NPU –causing problems in the from-Fabric direction).

You could set the queue numbers and depths so, that for example:

SUM of WAN feeder queues can take max 70% of the overall Mpps budget.

SUM of Fabric feeder queues can take max 70% of the overall Mpps budget.

SUM of (WAN) Control-priority feeder queues can take max 10% of the overall Mpps budget of the NPU. –this can be done even without the WAN/Fabric split. If limited to X% -then fullness of these queues doesn’t even need to trigger flow-control/backpressure.

adam

netconsultings.com

::carrier-class solutions for the telecommunications industry::


hi Adam,

it's 200 Mpps total. There is already a limit on the number of RFDs per NP port. "Ports" from the NP point of view are all physical network interfaces controlled by the NP, port towards FIA, port towards LC CPU, loopback port, etc. This way a DDoS stream from one network port will never be able to bring down the NP.

/Aleksandar


Hi Aleksandar,

It’s not so much about the number of ICFD/RFD queues per port, but about what proportion of these is dedicated or can be used by Network Control priority/traffic (if NC traffic can fill all these queues up that wouldn’t be desirable).

Maximum I can generate from a single 100GE port, 100Gbps@L2(64B)@L1(84B), is ~149Mpps (wouldn’t make the NPU happy but survivable). But from two ports I can actually do some damage.

- I guess my question is: if I throw 200Gbps/~300Mpps worth of control traffic onto the NPU, will all of that actually end up in the NPU’s pipeline for processing, or will the ICFD/RFD queues for control traffic overflow and start dropping far sooner? If the latter is the case, then I’d like to understand at what rate it starts dropping (if the control is above x? Mpps).

If the NPU’s budget was 200Mpps for both directions, then the card would be able to transport at line-rate only if packet size is >230B, I’m pretty sure that’s not the case.
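The figures in this post can be reproduced with a short calculation. The sketch below assumes 20 bytes of per-frame Ethernet overhead on the wire (preamble, start-of-frame delimiter and inter-frame gap, which is what turns a 64B L2 frame into 84B at L1). The 400 Gbps aggregate used for the second figure is an assumption about how the ~230B number was reached (two 100GE ports, both directions counted against one 200 Mpps budget); the function names are hypothetical.

```python
ETH_OVERHEAD_BYTES = 20  # preamble + SFD (8 bytes) + inter-frame gap (12 bytes)


def line_rate_pps(link_bps: float, l2_frame_bytes: int) -> float:
    """Packets per second at line rate for a given L2 frame size."""
    return link_bps / ((l2_frame_bytes + ETH_OVERHEAD_BYTES) * 8)


def min_l2_frame_for_line_rate(aggregate_bps: float, pps_budget: float) -> float:
    """Smallest L2 frame size at which the pps budget still covers line rate."""
    wire_bytes = aggregate_bps / (pps_budget * 8)  # bytes per packet on the wire
    return wire_bytes - ETH_OVERHEAD_BYTES


# One 100GE port with 64B frames: roughly 149 Mpps.
print(round(line_rate_pps(100e9, 64) / 1e6, 1))   # 148.8

# Assumed 2 x 100GE in both directions (400 Gbps) against a 200 Mpps budget.
print(min_l2_frame_for_line_rate(400e9, 200e6))   # 230.0
```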

adam

netconsultings.com

::carrier-class solutions for the telecommunications industry::


hi Adam,

pps rate at which the NP starts dropping packets depends very much on the features that need to be executed. The nominal performance is for plain simple ipv4 forwarding or mpls label swap.

/Aleksandar
