
ASR9000/XR: Understanding QOS, default marking behavior and troubleshooting


03-07-2011 01:43 PM - edited 12-18-2018 05:19 AM

Introduction

This document provides details on how QOS is implemented in the ASR9000 and how to interpret and troubleshoot QOS-related issues.

Core Issue

QOS is always a complex topic; with this article I'll try to describe the QOS architecture and provide some tips for troubleshooting.

Based on feedback on this document, I'll keep enhancing it to cover more topics.

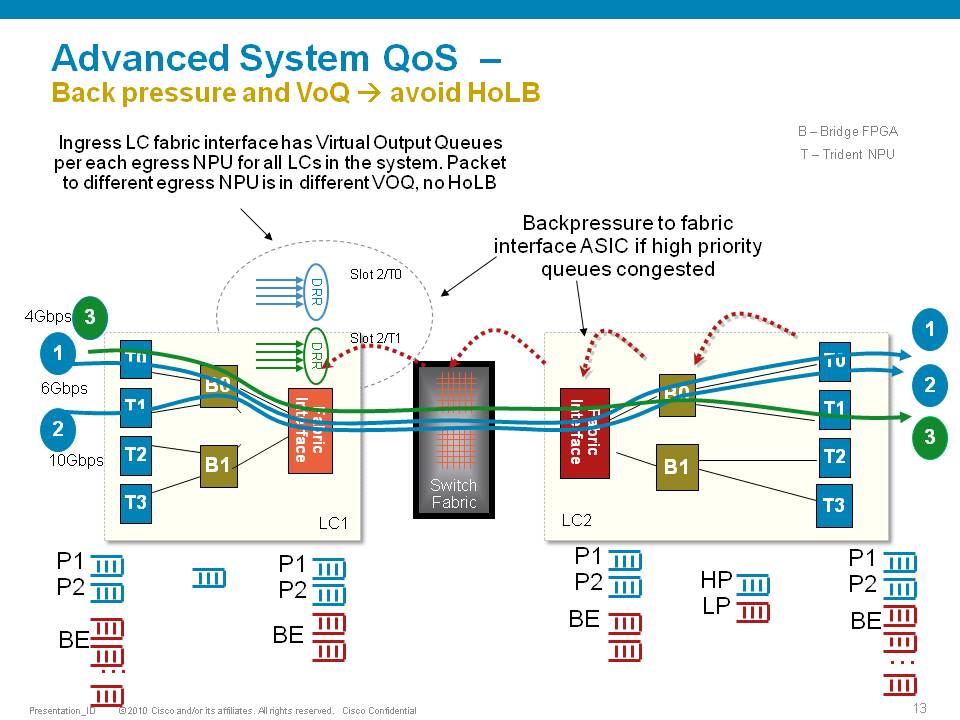

The ASR9000 employs an end-to-end QOS architecture throughout the whole system, which means that priority is propagated throughout the system's forwarding ASICs. This is done via backpressure between the different forwarding ASICs.

One key aspect of the A9K's QOS implementation is the concept of VOQs (virtual output queues). Each network processor, or in fact every 10G entity in the system, is represented in the Fabric Interfacing ASIC (FIA) by a VOQ on each linecard.

That means that in a fully loaded system with, say, 24x10G linecards in all 8 slots (each linecard having 8 NPUs and 4 FIAs), a total of 192 VOQs (24 ports times 8 slots) are represented at each FIA of each linecard.

The VOQs have 4 different priority levels: Priority 1, Priority 2, default priority and multicast.
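The VOQ arithmetic above can be sketched in Python. This is only an illustration, assuming one VOQ per 10G destination port, 24 ports per linecard and 8 populated linecard slots as in the example; the function names are hypothetical, and counting each of the 4 priority levels as a separate queue is an assumption made for the illustration.

```python
# Illustrative VOQ arithmetic for the example above (not a hardware model).
# Assumptions: one VOQ per 10G destination port, every linecard slot populated.
PRIORITY_LEVELS = ("priority 1", "priority 2", "default", "multicast")


def voq_count(ports_per_linecard: int, linecard_slots: int) -> int:
    """VOQs each FIA has to represent: one per 10G destination in the chassis."""
    return ports_per_linecard * linecard_slots


def per_priority_queue_count(ports_per_linecard: int, linecard_slots: int) -> int:
    """Same count if each of the 4 priority levels is treated as its own queue."""
    return voq_count(ports_per_linecard, linecard_slots) * len(PRIORITY_LEVELS)


if __name__ == "__main__":
    print(voq_count(24, 8))                 # 192
    print(per_priority_queue_count(24, 8))  # 768
```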

The priority level is carried in the packet's fabric header (internal header) and can be set via QOS policy-maps (MQC, Modular QoS CLI).

When you define a policy-map, apply it to a (sub)interface and mark certain traffic in it as priority level 1 or 2, the fabric headers reflect that too, so that this traffic is put in the higher-priority queues of the forwarding ASICs as it traverses the FIA and fabric components.

If you don't apply any QOS configuration, all traffic is considered "default" in the fabric queues. To leverage the ASR9000's ASIC priority levels, you need to configure (ingress) QOS on the ports to apply the desired priority level.

In this example T0 and T1 are receiving a total of 16G of traffic destined for T0 on the egress linecard. For a 10G port that is obviously too much.

T0 will flow off some of the traffic, depending on the queue, eventually signaling the backpressure back to the ingress linecard. While T0 on the ingress linecard also has some traffic for T1 on the egress LC (green), this traffic is not affected and continues to be sent to its destination port.
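A toy Python model may help visualize why the VOQs keep the green T1 traffic flowing while T0 is flowed off. It is a sketch under simplifying assumptions (one queue per destination, one packet per destination per service round, backpressure as a simple on/off flag), not a description of the actual FIA or arbiter logic; the class and method names are hypothetical.

```python
from collections import deque


class IngressVoqs:
    """Toy model of an ingress FIA: one queue per egress destination (a VOQ)."""

    def __init__(self, destinations):
        self.queues = {dest: deque() for dest in destinations}
        self.flowed_off = set()  # destinations currently signalling backpressure

    def enqueue(self, dest, packet):
        self.queues[dest].append(packet)

    def set_backpressure(self, dest, active):
        if active:
            self.flowed_off.add(dest)
        else:
            self.flowed_off.discard(dest)

    def service_round(self):
        """Send at most one packet per destination that is not flowed off."""
        sent = []
        for dest, queue in self.queues.items():
            if queue and dest not in self.flowed_off:
                sent.append((dest, queue.popleft()))
        return sent


if __name__ == "__main__":
    fia = IngressVoqs(["T0", "T1"])
    fia.enqueue("T0", "red-1")
    fia.enqueue("T0", "red-2")
    fia.enqueue("T1", "green-1")
    fia.set_backpressure("T0", True)  # egress T0 is oversubscribed
    print(fia.service_round())        # [('T1', 'green-1')]
```

With a single shared FIFO instead, the queued T0 packets at the head would block the T1 packet behind them; separate per-destination queues avoid that head-of-line blocking.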

Resolution

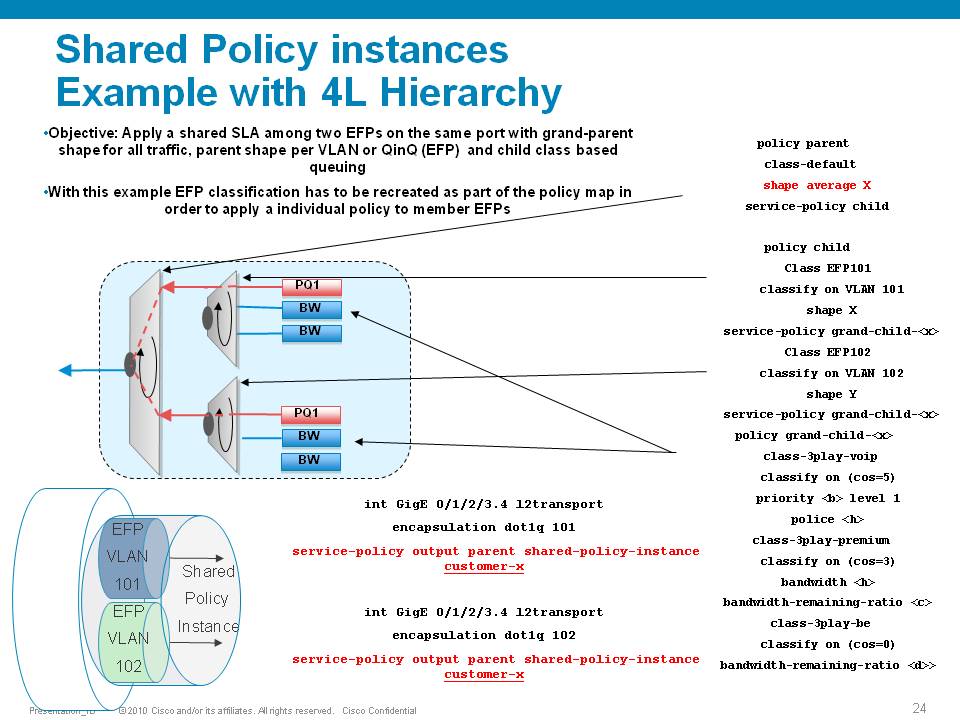

The ASR9000 supports 4 levels of QOS; a sample configuration and implementation details are presented in the picture above.

Policer showing exceed drops and not reaching the configured rate

Set the Bc to CIR [bps] × (1 byte / 8 bits) × 1.5 seconds, and Be = 2 × Bc.
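As a quick sanity check of the formula, here is a small Python helper. The 100 Mbps CIR in the usage line is an arbitrary example value, and the helper only does the arithmetic; it does not check any platform burst limits.

```python
def recommended_bursts(cir_bps: int) -> tuple[int, int]:
    """Return (Bc, Be) in bytes using the rule of thumb:
    Bc = CIR [bps] / 8 bits per byte * 1.5 s, Be = 2 * Bc.
    Integer math: CIR * 1.5 / 8 == CIR * 3 / 16."""
    bc = cir_bps * 3 // 16
    be = 2 * bc
    return bc, be


if __name__ == "__main__":
    bc, be = recommended_bursts(100_000_000)  # 100 Mbps CIR
    print(bc, be)                              # 18750000 37500000
```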

Default burst values are not optimal

To visualize the problem: say you are allowing 1 pps, and in one second you don't send anything, but in the next second you want to send 2. In that second you will see an exceed, because the idle second cannot fill the bucket beyond its configured burst size.
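The 1 pps example can be reproduced with a simplified token-bucket model in Python. This is a teaching sketch, assuming packet-based tokens and a once-per-second refill (real policers count bytes and refill continuously); it shows that a bucket one packet deep produces an exceed in the second second, while a deeper bucket lets the 2-packet burst conform.

```python
def police_per_second(arrivals, rate_pps, burst_pkts):
    """Single-rate token bucket counted in whole packets, refilled once per second.

    Returns one (conformed, exceeded) tuple per second of `arrivals`.
    """
    tokens = burst_pkts  # the bucket starts full
    results = []
    for arriving in arrivals:
        # Refill, capped at the bucket depth: an idle second banks no extra credit.
        tokens = min(burst_pkts, tokens + rate_pps)
        conformed = min(arriving, tokens)
        tokens -= conformed
        results.append((conformed, arriving - conformed))
    return results


if __name__ == "__main__":
    print(police_per_second([0, 2], rate_pps=1, burst_pkts=1))  # [(0, 0), (1, 1)]
    print(police_per_second([0, 2], rate_pps=1, burst_pkts=2))  # [(0, 0), (2, 0)]
```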

Alternatively, Bc and Be can be configured in time units, e.g.:

policy-map OUT

class EF

police rate percent 25 burst 250 ms peak-burst 500 ms
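To see what the time-based values translate to, the following Python sketch converts them to bytes. It assumes the policy is attached to a 10GE interface (the example does not say which interface it is applied to), so 25 percent is 2.5 Gbps; the function names are illustrative only.

```python
def police_rate_bps(link_bps: int, percent: int) -> int:
    """Policer rate for 'police rate percent <percent>' on a link of link_bps."""
    return link_bps * percent // 100


def burst_bytes(rate_bps: int, burst_ms: int) -> int:
    """Bytes corresponding to burst_ms milliseconds at rate_bps.
    bits/s * ms / 1000 = bits; bits / 8 = bytes, hence the / 8000."""
    return rate_bps * burst_ms // 8000


if __name__ == "__main__":
    rate = police_rate_bps(10_000_000_000, 25)  # hypothetical 10GE interface
    print(rate)                    # 2500000000
    print(burst_bytes(rate, 250))  # 78125000  (burst, Bc)
    print(burst_bytes(rate, 500))  # 156250000 (peak-burst, Be)
```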

To view the Bc and Be applied in hardware, run "show qos interface <interface> [input|output]".

Why do I see non-zero values for Queue(conform) and Queue(exceed) in show policy-map commands?

On the ASR9k, every HW queue has a configured CIR and PIR value. These correspond to the "guaranteed" bandwidth for the queue, and the "maximum" bandwidth (aka shape rate) for the queue.

In some cases, the user-defined QoS policy does NOT explicitly use both of these. However, depending on the exact QoS config, the queueing hardware may require a non-zero value for these fields. In that case, the system chooses a default value for the queue CIR. The "conform" counter in show policy-map is the number of packets/bytes that were transmitted within this CIR value, and the "exceed" counter is the number of packets/bytes that were transmitted above the CIR but within the PIR value.

Note that "exceed" in this case does NOT equate to a packet drop, but rather a packet that is above the CIR rate on that queue.

You could change this behavior by explicitly configuring a bandwidth and/or a shape rate on each queue, but in general it's just easier to recognize that these counters don't apply to your specific situation and ignore them.
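A conceptual Python sketch of how a queue's transmitted rate splits between the two queue counters. It works on steady-state rates rather than per-packet decisions, and the CIR/PIR values in the usage line are arbitrary examples, not platform defaults.

```python
def queue_conform_exceed(tx_kbps: int, cir_kbps: int, pir_kbps: int) -> tuple[int, int]:
    """Split a queue's transmit rate into the part counted as Queue(conform)
    (up to the CIR) and the part counted as Queue(exceed) (between CIR and PIR).
    Neither part is a drop."""
    if cir_kbps > pir_kbps:
        raise ValueError("CIR must not exceed PIR")
    sent = min(tx_kbps, pir_kbps)  # the queue never transmits above its PIR (shape rate)
    conform = min(sent, cir_kbps)
    return conform, sent - conform


if __name__ == "__main__":
    print(queue_conform_exceed(800, cir_kbps=500, pir_kbps=1000))  # (500, 300)
```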

What is counted in QOS policers and shapers?

When we define a shaper in a QOS policy-map, the shaper takes the L2 header into consideration.

With a shape rate of, say, 1 Mbps, an interface without dot1q or QinQ tags can technically carry more IP traffic than a QinQ interface, which has more L2 overhead. The same applies when you define a bandwidth statement in a class: the L2 header is taken into consideration.

A policer also takes the L2 header into account.

In Ingress, for both policer & shaper, we use the incoming packet size (including the L2 header).

To account for the L2 header in the ingress shaper case, we have to use a TM (traffic manager) overhead accounting feature, which only lets us add overhead in 4-byte granularity; this can cause a small inaccuracy.

In egress, for both policer & shaper we use the outgoing packet size (including the L2 header).

The ASR9K policer implementation supports 64 kbps granularity. When the specified rate is not a multiple of 64 kbps, it is rounded down to the next lower multiple of 64 kbps.
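The rounding rule can be expressed as a one-line Python helper. It is a sketch of the rule as stated above only; how the hardware handles rates below 64 kbps is not covered here and is not modeled.

```python
POLICER_GRANULARITY_KBPS = 64


def programmed_policer_rate_kbps(configured_kbps: int) -> int:
    """Round a configured policer rate down to the next lower multiple of 64 kbps."""
    return (configured_kbps // POLICER_GRANULARITY_KBPS) * POLICER_GRANULARITY_KBPS


if __name__ == "__main__":
    for rate in (1000, 2000, 1024):
        print(rate, "->", programmed_policer_rate_kbps(rate))
    # 1000 -> 960, 2000 -> 1984, 1024 -> 1024
```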

For the police, shape and bandwidth commands, in both the ingress and egress directions, the following fields are included in the accounting:

| MAC DA | MAC SA | EtherType | VLANs | L3 headers/payload | CRC |
|---|---|---|---|---|---|
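The effect of these L2 fields on a shaper can be estimated with a short Python sketch. It assumes standard Ethernet field sizes (6-byte MAC addresses, 2-byte EtherType, 4-byte CRC, 4 bytes per VLAN tag) and a fixed 1000-byte IP packet in the usage line; preamble and inter-frame gap are not counted because they are not in the field list above. With the 1 Mbps shaper from the earlier example, the QinQ case carries less IP traffic than the untagged case.

```python
# Bytes counted per frame, following the field list above.
MAC_DA, MAC_SA, ETHERTYPE, CRC = 6, 6, 2, 4
VLAN_TAG = 4  # one 802.1Q / 802.1ad tag (TPID + TCI)


def accounted_frame_bytes(ip_packet_bytes: int, vlan_tags: int) -> int:
    """Frame size the policer/shaper counts for one IP packet."""
    return MAC_DA + MAC_SA + ETHERTYPE + vlan_tags * VLAN_TAG + ip_packet_bytes + CRC


def max_ip_rate_bps(shape_bps: int, ip_packet_bytes: int, vlan_tags: int) -> float:
    """IP-level throughput that fits under a shaper that counts L2 bytes."""
    frame = accounted_frame_bytes(ip_packet_bytes, vlan_tags)
    return shape_bps * ip_packet_bytes / frame


if __name__ == "__main__":
    for tags, name in ((0, "untagged"), (1, "dot1q"), (2, "QinQ")):
        frame = accounted_frame_bytes(1000, tags)
        print(name, frame, round(max_ip_rate_bps(1_000_000, 1000, tags)))
```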

Port level shaping

Shaping action requires a queue on which the shaping is applied. This queue must be created by a child-level policy. Typically the shaper is applied at the parent or grandparent level, to allow for differentiation between traffic classes within the shaper. If there is a need to apply a flat port-level shaper, a child policy should be configured with 100% bandwidth explicitly allocated to class-default.

Understanding show policy-map counters

QOS counters and show interface drops:

Policer drops are counted directly against the (sub)interface and are reported in the "show interface" drops count.

The drop counts you see are an aggregate of what the NP has dropped (in most cases) as well as policer drops.

Packets that get dropped before the policer is aware of them are not accounted for in the policy-map policer drops, but may show up in the show interface drops and can be seen via the show controllers np counters command.

Policy-map queue drops are not reported on the subinterface drop counts.

The reason is that subinterfaces may share queues with each other or with the main interface, and therefore we don't have subinterface granularity for queue-related drops.

Counters come from the show policy-map interface command

| Meaning | show policy-map interface output |
|---|---|
| Class name as per configuration | Class precedence6 |
| Statistics for this class | Classification statistics (packets/bytes) (rate - kbps) |
| Packets that were matched | Matched : 31583572/2021348608 764652 |
| Packets that were sent to the wire | Transmitted : Un-determined |
| Packets that were dropped for any reason in this class | Total Dropped : Un-determined |
| Policing stats | Policing statistics (packets/bytes) (rate - kbps) |
| Packets that were below the CIR rate | Policed(conform) : 31583572/2021348608 764652 |
| Packets that fell into the 2nd bucket, above CIR but below PIR | Policed(exceed) : 0/0 0 |
| Packets that fell into the 3rd bucket, above PIR | Policed(violate) : 0/0 0 |
| Total packets that the policer dropped | Policed and dropped : 0/0 |
| Statistics for queueing | Queueing statistics |
| Internal unique queue reference | Queue ID : 136 |
| How many packets were queued/held at most at one time (value not supported by HW) | High watermark (Unknown) |
| Number of 512-byte particles currently waiting in the queue | Inst-queue-len (packets) : 4096 |
| How many packets on average we have to buffer (value not supported by HW) | Avg-queue-len (Unknown) |
| Packets that could not be buffered because the queue already held the maximum length | Taildropped(packets/bytes) : 31581615/2021223360 |
| See description above (queue exceed section) | Queue(conform) : 31581358/2021206912 764652 |
| See description above (queue exceed section) | Queue(exceed) : 0/0 0 |
| Packets subject to Random Early Detection that were dropped | RED random drops(packets/bytes) : 0/0 |
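If you collect these counters from many interfaces, a small Python parser can help. The regular expression below is an assumption based only on the sample lines shown in the table (name, colon, packets/bytes, optional rate in kbps), not on a documented output format, so verify it against your release. As a side note, dividing bytes by packets for the Matched and Taildropped counters in the example gives exactly 64 bytes per packet.

```python
import re

# Assumed line shape: "<name> : <packets>/<bytes> [<rate kbps>]"
COUNTER_RE = re.compile(
    r"^\s*(?P<name>.+?)\s*:\s*(?P<pkts>\d+)/(?P<bytes>\d+)(?:\s+(?P<kbps>\d+))?\s*$"
)


def parse_counter(line: str):
    """Return a dict for a packets/bytes counter line, or None if it doesn't match."""
    m = COUNTER_RE.match(line)
    if m is None:
        return None
    kbps = m.group("kbps")
    return {
        "name": m.group("name"),
        "packets": int(m.group("pkts")),
        "bytes": int(m.group("bytes")),
        "rate_kbps": int(kbps) if kbps is not None else None,
    }


def avg_packet_size(counter) -> float:
    """Average bytes per packet, 0.0 when no packets were counted."""
    return counter["bytes"] / counter["packets"] if counter["packets"] else 0.0


if __name__ == "__main__":
    matched = parse_counter("Matched : 31583572/2021348608 764652")
    print(matched["rate_kbps"], avg_packet_size(matched))  # 764652 64.0
```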

Understanding the hardware qos output

RP/0/RSP0/CPU0:A9K-TOP#show qos interface g0/0/0/0 output

With this command, the actual hardware programming of the QOS policy on the interface can be verified (the output below is not related to the previous example):

Tue Mar 8 16:46:21.167 UTC

Interface: GigabitEthernet0_0_0_0 output

Bandwidth configured: 1000000 kbps Bandwidth programed: 1000000

ANCP user configured: 0 kbps ANCP programed in HW: 0 kbps

Port Shaper programed in HW: 0 kbps

Policy: Egress102 Total number of classes: 2

----------------------------------------------------------------------

Level: 0 Policy: Egress102 Class: Qos-Group7

QueueID: 2 (Port Default)

Policer Profile: 31 (Single)

Conform: 100000 kbps (10 percent) Burst: 1248460 bytes (0 Default)

Child Policer Conform: TX

Child Policer Exceed: DROP

Child Policer Violate: DROP

----------------------------------------------------------------------

Level: 0 Policy: Egress102 Class: class-default

QueueID: 2 (Port Default)

----------------------------------------------------------------------
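To relate the programmed burst to time, the following Python helper converts bytes at the conform rate into milliseconds. Applied to the Qos-Group7 policer above, the 1248460-byte default burst at 100000 kbps works out to roughly 100 ms; this is only an arithmetic reading of this one output, not a statement about how default bursts are derived in general.

```python
def burst_ms(burst_bytes: int, rate_kbps: int) -> float:
    """Milliseconds of traffic at rate_kbps that fit in burst_bytes.
    bytes * 8 = bits, and bits / (kbit/s) = ms."""
    return burst_bytes * 8 / rate_kbps


if __name__ == "__main__":
    print(round(burst_ms(1_248_460, 100_000), 2))  # 99.88
```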

Default Marking behavior of the ASR9000

If you don't configure any service policies for QOS, the ASR9000 sets an internal CoS value based on the IP precedence, the 802.1p priority field or the MPLS EXP bits.

Depending on the routing or switching scenario, this internal cos value will be used to do potential marking on newly imposed headers on egress.

Scenario 1

Scenario 2

Scenario 3

Scenario 4

Scenario 5

Scenario 6

Special consideration:

If the node is L3 forwarding, then there is no L2 CoS propagation or preservation as the L2 domain stops at the incoming interface and restarts at the outgoing interface.

Default marking PHB on L3 retains no L2 CoS information even if the incoming interface happened to be an 802.1q or 802.1ad/q-in-q sub interface.

CoS may appear to be propagated if the corresponding L3 field (precedence/DSCP) used for default marking happens to match the incoming CoS value, since that value is then used as-is for the L2 headers imposed at egress.

If the node is L2 switching, then the incoming L2 header will be preserved unless the node has ingress or egress rewrites configured on the EFPs.

If an L2 rewrite results in new header imposition, then the default marking derived from the 3-bit PCP (as specified in 802.1p) on the incoming EFP is used to mark the new headers.

An exception to the above is that the DEI bit value from incoming 802.1ad / 802.1ah headers is propagated to imposed or topmost 802.1ad / 802.1ah headers for both L3 and L2 forwarding.
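The special considerations above can be summarized as a small Python decision function. This is a simplified sketch covering only the IP L3-forwarding and L2-switching cases described in this section; MPLS EXP handling and the individual scenarios are not modeled, and the types and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IncomingFrame:
    pcp: Optional[int]            # 3-bit 802.1p PCP of the incoming tag, None if untagged
    dei: Optional[int]            # DEI bit from an incoming 802.1ad/802.1ah header, if any
    ip_precedence: Optional[int]  # None for non-IP payloads


def default_imposed_pcp(l3_forwarded: bool, frame: IncomingFrame,
                        rewrite_imposes_header: bool) -> Optional[int]:
    """PCP written into a newly imposed egress L2 header under default marking.

    For L3 forwarding the rewrite flag is ignored. Returns None for L2 switching
    without a header-imposing rewrite, where the incoming header is preserved.
    """
    if l3_forwarded:
        # The L2 domain ends at the ingress interface: incoming CoS is not used.
        return frame.ip_precedence
    if not rewrite_imposes_header:
        return None
    return frame.pcp


def default_imposed_dei(frame: IncomingFrame) -> Optional[int]:
    """DEI is the stated exception: propagated for both L3 and L2 forwarding."""
    return frame.dei


if __name__ == "__main__":
    f = IncomingFrame(pcp=5, dei=1, ip_precedence=3)
    print(default_imposed_pcp(True, f, True))   # 3: L3 uses precedence, not CoS 5
    print(default_imposed_pcp(False, f, True))  # 5: L2 rewrite uses incoming PCP
```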

Related Information

ASR9000 Quality of Service configuration guide

Xander Thuijs, CCIE #6775

---

Hi Catalin, thanks! :) So I see why you think scenario 6, but in fact you are hitting scenario 5. In that case the IPP is used for the internal cos and set on the newly imposed EXP or dot1q headers.

If you'd like to leverage the EXP instead in your case, you will need to make a policy-map that sets a qos-group derived from the EXP, and on egress use that qos-group to set the dot1p.

cheers

xander

---

hi Xander ,

Not sure why it's scenario 5, to be honest, as the packet has a label. Also, if I apply a policy on the outbound interface to match cos (internal, I assume), I get hits on that.

Worth mentioning the packet is a p2mp/mldp multicast.

edit: a VPN unicast ping with IPP=0, marked to EXP 4 on the ingress PE, gets sent out on egress with dot1q 4 and IPP=0, so it looks like scenario 6 applies to unicast; not sure why multicast is not the same.

Thx,

Catalin

ingress:

RP/0/RSP1/CPU0:#sh policy-map interface Te0/0/1/1 | b Class EXP_4

Wed Nov 18 12:52:09.989 GMT

Class EXP_4_CE

Classification statistics (packets/bytes) (rate - kbps)

Matched : 50326200/66430584000 52630

class-map match-any EXP_4_CE

match mpls experimental topmost 4

end-class-map

!

egress:

TenGigE0/0/0/2.401 output: PE-CE

Class IPP_4_CE1

Classification statistics (packets/bytes) (rate - kbps)

Matched : 48021699/63388642680 44866

policy-map PE-CE

class IPP_4_CE1

priority level 1

police rate percent 10

!

!

class class-default

!

end-policy-map

!

class-map match-any IPP_4_CE1

match cos 4

end-class-map

!

---

Hi Xander,

Thank you very much for these articles

1) Regarding Backpressure:

egress NP -> egress FIA -> fabric Arbiter -> ingress FIA -> VoQ

- the granularity of backpressure is per VoQ, but BE packets are dropped first (WRED), then P2, then P1 - is that right please?

On what basis does the fabric arbiter issue access grants, please?

- Is it based on the sum of egress port capacity of an Egress NP

- or based on actual Egress NP capability to process traffic.

E.G. if Egress NP has to process high PPS rate on input

and in addition a lot of features are enabled on the Egress NP

and as a result it is unable to process Egress traffic at full line rate

- Will it issue backpressure towards FIA, Fabric and ingress NPs?

- Also will it issue pause frames in attempt to slow down the high PPS WAN input?

If NP does not initiate backpressure or pause frames based on its ability to process traffic

- If in overload mode:

- On fabric input, is it guaranteed that it will start dropping low-priority traffic first, trying to protect the CP and P1 traffic or possibly P2 traffic?

- On WAN input, is it guaranteed that it will start dropping low-priority traffic first, trying to protect the P1 or possibly P2 traffic?

(CP traffic has to be manually matched to P1 on input right?)

- Will it start dropping WAN input and Fabric input equally please?

- Is WAN input and Fabric input serviced 1:1 by the NP

- Is there a strict priority on NP

or is it more like HP1:MP2:MP3:LP = 16:4:2:1 or something like that?

2) Regarding the Fabric arbitration

-what is “credit return” please?

3) Regarding the VOQ.

Just to confirm the nomenclature:

-VOQ consist of 4 VQIs i.e. P1, P2, BE, M-CAST please?

On Trident LCs:

VOQ per NP, basically 4 VQI per 10GE port?

On Typhoon LCs:

10GE port has 4 VQIs: P1, P2, BE, M-CAST

40GE port has 8 VQIs: 2xP1, 2xP2, 2xBE, 2xM-CAST

100GE port has 16 VQIs: 4xP1, 4xP2, 4xBE, 4xM-CAST

Is CP = HP1

Is P1 = MP2

Is P2 = MP3

Is LP = LP

How it translates to Typhoon Egress: P1,P2,P3,LP

thank you very much

adam

---

Hi Alexander

When implementing QoS on ASR9010/ASR9006, I encountered the following issue:

interface TenGigE0/x/x/x

service-policy output Parent_FA_Egress-GRP

!!% 'qos-ea' detected the 'warning' condition 'Queue related actions on a class require parent class to have queue'

Parent_FA_Egress-GRP is a H-QoS policy map.

Would you please give some comments about the issue ?

---

Hi Alexander

when implementing the H-QoS on ASR9010/ASR9006 with 5.3.2 , I encountered an issue

interface TenGigE0/3/0/x

service-policy output Parent_FA_Egress-GRP

!!% 'qos-ea' detected the 'warning' condition 'Queue related actions on a class require parent class to have queue'

!

Would you please give some comments or suggestion about this troubleshooting?

Br

Vincent

---

hi Vincent,

you are very likely missing a shaper at the parent level. Without parent shaper the child policy doesn't have a reference bandwidth.

Aleksandar

---

Hi Aleksandar

Thank you for your reply. It works when adding shaper under parent level.

1. If I want to police the flow rather than shape it, how do I configure it?

2. If the configuration is listed as below:

interface Te0/0/0/0

service-policy output Parent_FA_Egress

!

policy-map Parent_FA_Egress

class class-default

service-policy Child_FA_Egress

police rate 1000 mbps

!

shape average percent 90

!

So policing will be at 1000 mbps, and shaping will kick in when the flow exceeds 90% of 10G, right?

---

hi Vincent,

if you have a policer at the parent level then you want to deploy the conform-aware hierarchical policer. There is a good example at:

For #2, your understanding is correct. I have to say that the example is good for theoretical discussion but in practice this config would be rejected because the flat shaper rate on an interface can't exceed 4Gbps. Also, the shaper would never kick in because policer is applied first and the policer rate is much lower than the shaper rate.

regards,

Aleksandar

---

Limitations on using IPv4 ACL to define class-map on an MPLS enabled link.

Xander - we are trying to use the following config to prioritize some management type traffic (stuff like TACACS, SSH, SNMP, etc.) We configure an ACL to define our class-map to match TACACS traffic for example. This works fine on the router that is generating the traffic, but not on the next hop router. I believe we are having some sort of issue matching the ACL due to the MPLS header since our ACL doesn't see any packets matched.

Can you please confirm that ACL matching is not supported on 5.2.2 for an MPLS LDP interface?

Here's my configs:

Router A te0/0/2/1 connects to Router B te0/0/2/0. We see packets matching on egress on Router A (and forwarded appropriately), but not on ingress to Router B (which 'breaks' our QoS design).

Router A:

policy-map PMAP-QOS-CORE

< OUTPUT OMITTED >

class CMAP-QOS-TACACS

priority level 1

police rate percent 1

< OUTPUT OMITTED >

end-policy-map

class-map match-any CMAP-QOS-TACACS

description QOS CMAP - MATCH TACACS TRAFFIC

match access-group ipv4 ACL-QOS-TACACS

end-class-map

ipv4 access-list ACL-QOS-TACACS

10 remark QOS ACL - MATCH TACACS TRAFFIC

20 permit tcp any any eq tacacs

30 permit tcp any eq tacacs any

40 permit udp any any eq tacacs

50 permit udp any eq tacacs any

interface TenGigE0/0/2/1

description TE0/0/2/1->CORE-02_TE0/0/2/0

cdp

mtu 9100

service-policy output PMAP-QOS-CORE

ipv4 point-to-point

ipv4 address 10.0.0.10 255.255.255.252

flow-control bidirectional

carrier-delay up 100 down 0

load-interval 30

ethernet oam

profile OAM_Core

!

transceiver permit pid all

ipv4 access-group TEST ingress hardware-count interface-statistics

ipv4 access-group TEST egress hardware-count interface-statistics

!

mpls ldp

log

neighbor

nsr

graceful-restart

session-protection

!

nsr

graceful-restart

igp sync delay on-session-up 30

router-id 4.4.4.4

address-family ipv4

label

local

advertise

explicit-null

!

!

!

!

interface TenGigE0/0/2/1

Router A - sees TACACS packets egress

RP/0/RSP0/CPU0:AGG-02#sh access-lists TEST hardware egress int te0/0/2/1 loc 0/0/cpu0

Tue Jan 26 13:30:46.493 UTC

ipv4 access-list TEST

10 permit tcp any any eq tacacs (1087 hw matches)

20 permit tcp any eq tacacs any

30 permit udp any any eq tacacs

40 permit udp any eq tacacs any

50 permit ipv4 any any (5772583 hw matches)

Router B:

interface TenGigE0/0/2/0

description TE0/0/2/0->AGG-02_TE0/0/2/1

cdp

mtu 9100

service-policy output PMAP-QOS-CORE

ipv4 point-to-point

ipv4 address 10.0.0.9 255.255.255.252

monitor-session SNIFF ethernet

!

flow-control bidirectional

carrier-delay up 100 down 0

load-interval 30

ethernet oam

profile OAM_Core

!

transceiver permit pid all

ipv4 access-group TEST ingress hardware-count interface-statistics

!

mpls ldp

log

neighbor

nsr

graceful-restart

session-protection

!

nsr

graceful-restart

igp sync delay on-session-up 30

router-id 3.3.3.3

interface TenGigE0/0/2/0

!

interface TenGigE0/0/2/1

!

!

Router B - does not see TACACS packets ingress, hence the packets are not classified correctly on egress to the next router, which 'breaks' our QoS.

RP/0/RSP0/CPU0:CORE-02#sh access-lists TEST hardware ingress int te0/0/2/0 loc 0/0/cpu0

Tue Jan 26 13:31:29.504 UTC

ipv4 access-list TEST

10 permit tcp any any eq tacacs

20 permit tcp any eq tacacs any

30 permit udp any any eq tacacs

40 permit udp any eq tacacs any

50 permit ipv4 any any (11662343 hw matches)

I think this is some sort of limitation of the code we are running. The packet captures look fine - they are just IPv4 packets with an MPLS header on them.

Any ideas? If we can't match on an IPv4 ACL, we'd like to do EXP marking, but I don't believe that is supported for TACACS the way DSCP is. The same goes for other services like Telnet, SSH, SNMP, etc. (you can set DSCP, but not EXP, per service).

TAC SR 637839463

RP/0/RSP0/CPU0:CORE-02#admin sh install active summ

Tue Jan 26 13:43:56.097 UTC

Default Profile:

SDRs:

Owner

Active Packages:

disk0:asr9k-9000v-nV-px-5.2.2

disk0:asr9k-asr901-nV-px-5.2.2

disk0:asr9k-doc-px-5.2.2

disk0:asr9k-fpd-px-5.2.2

disk0:asr9k-k9sec-px-5.2.2

disk0:asr9k-mcast-px-5.2.2

disk0:asr9k-mgbl-px-5.2.2

disk0:asr9k-mini-px-5.2.2

disk0:asr9k-mpls-px-5.2.2

disk0:asr9k-services-px-5.2.2

disk0:asr9k-px-5.2.2.CSCuq87630-1.0.0

disk0:asr9k-px-5.2.2.CSCur13869-1.0.0

disk0:asr9k-px-5.2.2.CSCur17985-1.0.0

disk0:asr9k-px-5.2.2.CSCur46358-1.0.0

disk0:asr9k-px-5.2.2.CSCur62957-1.0.0

disk0:asr9k-px-5.2.2.CSCur69192-1.0.0

disk0:asr9k-px-5.2.2.CSCur76163-1.0.0

disk0:asr9k-px-5.2.2.CSCur83223-1.0.0

disk0:asr9k-px-5.2.2.CSCur87691-1.0.0

disk0:asr9k-px-5.2.2.CSCus21011-1.0.0

disk0:asr9k-px-5.2.2.CSCus40073-1.0.0

disk0:asr9k-px-5.2.2.CSCus45891-1.0.0

disk0:asr9k-px-5.2.2.CSCus54586-1.0.0

---

Just got an email from TAC - see bug ID CSCud94957

---

Hello, Alexander. Really nice article!

I have a question: what happened to the counters shown on regular IOS with the show service-policy interface command? I'm looking for a command that shows the number of matches per classification criterion but have not been able to find one.

I'm guessing there is only one counter in the IOS-XR implementation that aggregates all the match numbers into one but would like your comments.

Thank you very much and keep on the good work!

Class-map: DataPlus (match-any)

18995418 packets, 4643254933 bytes

30 second offered rate 4000 bps, drop rate 0000 bps

Match: ip dscp af22 (20)

Match: ip dscp cs3 (24)

Match: ip precedence 3

Match: ip dscp af21 (18)

Queueing

queue limit 19 packets

(queue depth/total drops/no-buffer drops) 0/126973/0

(pkts output/bytes output) 18868446/4602190045

bandwidth 20% (76 kbps)

---

hi! and thank you :) yeah the way the classification works is that a packet received is sent with a set of keys (ip fields) to the tcam for a match, we get the match back that reports the class ID.

the class-id or map keeps the rate for QOS.

for regular ACL we can keep track of per ACE counters, but I am not sure if they would apply also when they are aggregated for a class match

so to find out rates/matches per match criterion in your case, I don't think there is another way than to put the different matches in different classes to get the per-class (hence per-match) counters.

cheers!

xander
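The suggestion above can be illustrated with a small Python model: the lookup returns only a class, so counters exist per class, and splitting the match criteria into separate classes is what yields per-criterion counts. The class names and DSCP values are taken loosely from the IOS output in the question (af21 = 18, af22 = 20, cs3 = 24; the precedence 3 match is left out), and the model is a simplification, not the TCAM implementation.

```python
from collections import Counter

# Hypothetical classes: (name, DSCP values matched), evaluated in order, first match wins.
SINGLE_CLASS = [("DataPlus", {18, 20, 24}), ("class-default", None)]
SPLIT_CLASSES = [
    ("DataPlus-af21", {18}),
    ("DataPlus-af22", {20}),
    ("DataPlus-cs3", {24}),
    ("class-default", None),
]


def classify(dscp, classes):
    """Return the name of the first class whose match set contains dscp."""
    for name, values in classes:
        if values is None or dscp in values:  # None acts as match-all (class-default)
            return name
    raise LookupError("no class matched; class-default should be last")


def count_per_class(dscps, classes):
    """Only a per-class counter is kept, like a class ID returned by the lookup."""
    return Counter(classify(d, classes) for d in dscps)


if __name__ == "__main__":
    traffic = [20, 24, 18, 20, 0]
    print(count_per_class(traffic, SINGLE_CLASS))   # one DataPlus total
    print(count_per_class(traffic, SPLIT_CLASSES))  # one counter per DSCP value
```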

---

Is there a way to configure the ASR9K to ignore the L2 header and only take the L3 header into consideration? If so, where is it documented and what is the minimum XR version supported?

---

Yes, you can do that:

RP/0/RSP0/CPU0:IM1(config)#int te 0/0/1/1

RP/0/RSP0/CPU0:IM1(config-if)#service-policy input test account user-defined ?

<-63,+63> Overhead accounting value

This is quite an old functionality, you should be able to use it in all supported releases. Our recommendation is to always go with the latest Extended Maintenance Release.
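As a sketch of what the user-defined value does to the accounted length, in Python: a negative value subtracts bytes per packet. Choosing -22 to cancel the L2 fields of a single-tagged frame (MAC DA, MAC SA, one VLAN tag, EtherType and CRC) is an illustrative assumption, not a documented recommendation; keep in mind the 4-byte granularity mentioned earlier for ingress shaper overhead accounting, and verify the exact behavior on your release.

```python
MIN_OVERHEAD, MAX_OVERHEAD = -63, 63  # range shown by the CLI help above


def accounted_bytes(frame_bytes: int, overhead: int) -> int:
    """Packet length used by QoS accounting after a user-defined overhead is applied."""
    if not MIN_OVERHEAD <= overhead <= MAX_OVERHEAD:
        raise ValueError("overhead must be within <-63,+63>")
    return frame_bytes + overhead


if __name__ == "__main__":
    # 1000-byte IP packet in a single-tagged frame: 6+6+4+2 header bytes + 4 CRC = 22 L2 bytes.
    print(accounted_bytes(1022, -22))  # 1000
```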

regards,

Aleksandar

---

What about the following command available under GSR routers (would like to apply the same command whether the frames are single vlan tagged, or double tagged).

service-policy (interface)

To attach a policy map to an input interface or output interface to be used as the service policy for that interface, use the service-policy command in the appropriate configuration mode. To remove a service policy from an input or output interface, use the no form of the command.

service-policy { input | output } policy-map [ account nolayer2 ]

no service-policy { input | output } policy-map [ account nolayer2 ]

Syntax Description

| Keyword / argument | Description |
|---|---|
| input | Attaches the specified policy map to the input interface. |
| output | Attaches the specified policy map to the output interface. |
| policy-map | Name of a service policy map (created using the policy-map command) to be attached. |
| account nolayer2 | (Optional) Turns off layer 2 QoS-specific accounting and enables Layer 3 QoS accounting. |
