Introduction

In an EVPN All-Active (A/A) + IRB deployment, both PEs in the same EVI have a BVI that acts as the default gateway. Shutting down the BVI on only one of the PEs is not supported. If traffic reaches that PE with a DMAC equal to the BVI custom MAC, the PE drops the traffic because the L3 interface is down.

Problem

In EVPN A/A + IRB, if the BVI is shut down on one PE of the pair, incoming traffic on the same BD is dropped on that PE when the DMAC equals the BVI MAC.

Config:

l2vpn

bridge group BG-1501

bridge-domain BD-1501

interface Bundle-Ether1029.1501

!

routed interface BVI1501

!

evi 6362

interface BVI1501

interface BVI1501 host-routing

interface BVI1501 mtu 1514

interface BVI1501 vrf ATT_SDB

interface BVI1501 ipv4 address 10.33.75.17 255.255.255.240

interface BVI1501 mac-address 1000.1111.1111

interface BVI1501 shutdown

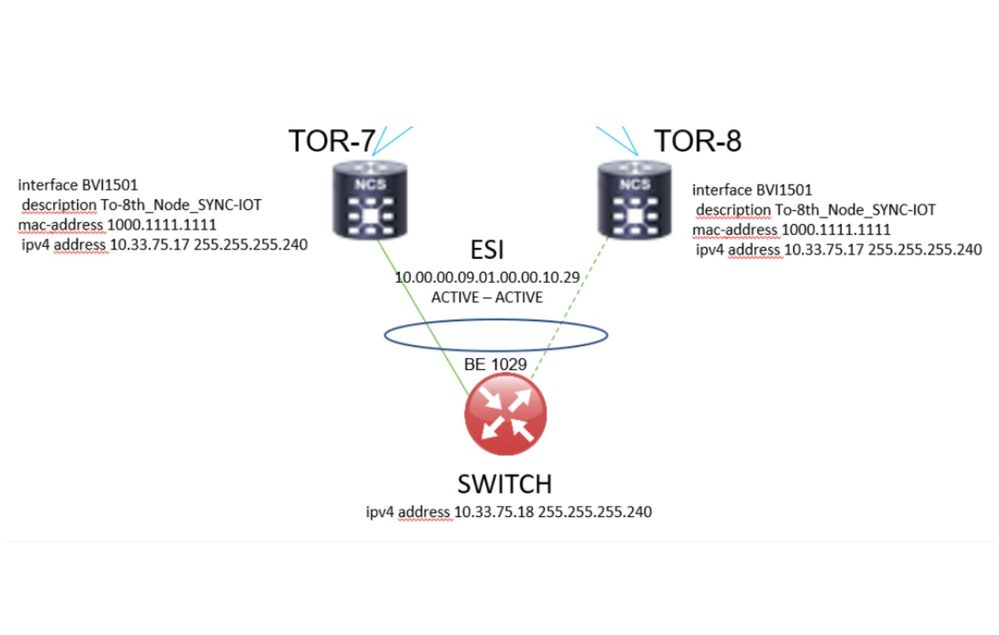

Topology:

Drops seen:

NCS5500:

RP/0/RP0/CPU0:ROUTER#sh captured packets in loc 0/0/CPU0

Tue Oct 13 06:42:27.012 CDT

-------------------------------------------------------

packets dropped in hardware in the ingress direction

buffer overflow pkt drops:17885, current: 200, maximum: 200

-------------------------------------------------------

Wrapping entries

-------------------------------------------------------

[1] Oct 13 06:42:21.391, len: 118, hits: 1, buffhdr type: 1

i/p i/f: BVI1501

punt reason: DROP_PACKET

Ingress Headers:

port_ifh: 0x800020c, sub_ifh: 0x0, bundle_ifh: 0x0

logical_port: 0x450, pd_pkt_type: 1

punt_reason: DROP_PACKET (0)

payload_offset: 29, l3_offset: 47

FTMH:

pkt_size: 0x95, tc: 1, tm_act_type: 0, ssp: 0xc00d

PPH:

pph_fwd_code: CPU Trap (7), fwd_hdr_offset: 0

inlif: 0x3003, vrf: 0x1a, rif: 0x50

FHEI:

trap_code: VTT_MY_MAC_AND_IP_DISABLE (52), trap_qual: 0

ASR9k:

show controller np counters:

[np:NP0] PARSE_DROP_IN_UIDB_DOWN: +1529

Explanation

When the BVI is removed from the BD and packets still arrive with a BVI custom MAC as the DMAC (say, there are other BVIs in the system), they are forwarded from one multihoming (MH) peer to the other by L2 flooding in the bridging context. No L3 forwarding of these packets takes place.

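A MY_MAC_ENABLE bit is associated with the L3 interface ID (VSI) in the BCM SDK. The SDK sets this bit to TRUE when the interface is created and to FALSE when the interface is deleted. An admin shut on the BVI does not delete the interface, so the bit stays TRUE: packets whose DMAC matches the BVI MAC are still treated as addressed to the router, not bridged, and are then dropped because the L3 interface is down. Hence the observed behavior.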

RP/0/RP0/CPU0:ROUTER#show controllers fia diagshell 0 "dump IHP_VRID_MY_MAC_TCAM" location 0/0/CPU0

Fri Oct 16 10:55:13.880 IST

Node ID: 0/0/CPU0

R/S/I: 0/0/0

IHP_VRID_MY_MAC_TCAM.IHP0[0]: <VALID=1,MASK=0x00000000000000,KEY=0x03100110021003,IP_VERSION_MASK=0,IP_VERSION=3,INDEX=0,DA_MASK=0x000000000000,DA=0x100110021003>

IHP_VRID_MY_MAC_TCAM.IHP0[1]: <VALID=1,MASK=0x01000000000000,KEY=0x00100110021003,IP_VERSION_MASK=1,IP_VERSION=0,INDEX=1,DA_MASK=0x000000000000,DA=0x100110021003>

In topologies without redundancy, the typical (most common) reason to admin-shut any (virtual) interface is to drop the incoming traffic.

The current behavior is the same on NCS5500 and ASR9k.

Recommendation

To take a BVI, BD, or node out of service, shut down the corresponding attachment circuits (ACs). Bringing the ACs down automatically brings the BVI down and prevents packets from the CE side from reaching this PE. All traffic then flows through the redundant PE.
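
As a minimal sketch of this recommendation, the following assumes that Bundle-Ether1029.1501 is the only AC in bridge-domain BD-1501, as in the configuration shown in the Problem section. If the bridge domain contains additional ACs, each of them would need the same treatment. Interface names are taken from that example and must be adapted to the actual deployment.

```
configure
 ! Shut down the AC instead of the BVI (AC name taken from the example config)
 interface Bundle-Ether1029.1501
  shutdown
 !
 ! Leave BVI1501 without an explicit "shutdown"; per the recommendation,
 ! it goes down once the AC is down
 commit
end
```

With the AC down, the CE no longer sends traffic toward this PE, so no packets with DMAC equal to the BVI MAC arrive on it.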