
UCSD - Export Workflows with a scheduled workflow


01-12-2016 08:18 AM - edited 11-13-2018 03:47 AM

| Task Name | Export workflows with a workflow which can be scheduled |
|---|---|
| Description | |
| Prerequisites | Minimum UCSD version: 5.4 |
| Category | Custom task |
| Components | |
| User Inputs | |
| User Output | OutputString |

Instructions for Regular Workflow Use:

- Download the attached .ZIP file below to your computer, and remember where you saved it.

- Unzip the file on your computer. You should end up with a .WFD file.

- Log in to UCS Director as a user that has "system-admin" privileges.

- Navigate to "Policies-->Orchestration" and click on "Import".

- Click "Browse" and navigate to the location on your computer where the .WFD file resides. Choose the .WFD file and click "Open".

- Click "Upload" and then "OK" once the file upload is completed. Then click "Next".

- Click the "Select" button next to "Import Workflows". Click the "Check All" button to check all checkboxes and then the "Select" button.

- Click "Submit".

- A new folder should appear in "Policies-->Orchestration" that contains the imported workflow. You will now need to update the included tasks with information about the specific environment.

Thanks go out to: Girisha Puttaswamy

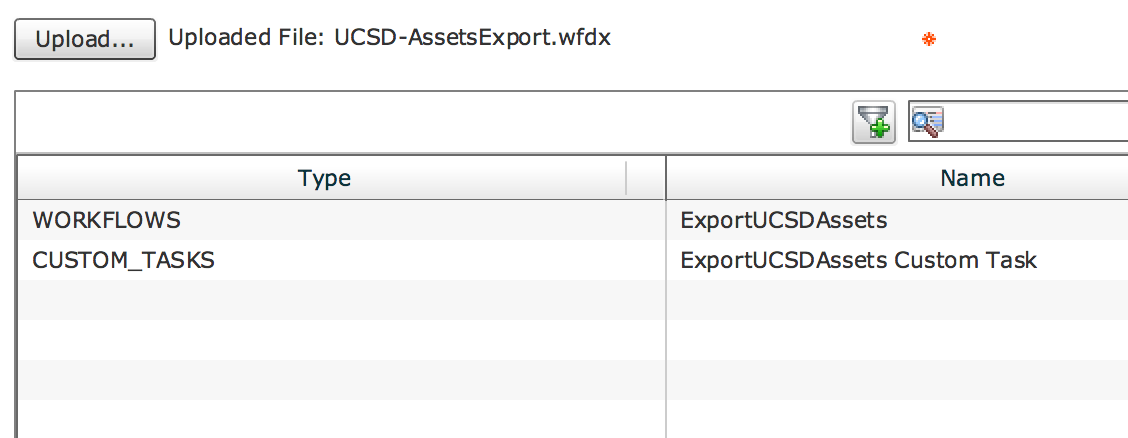

The workflow and custom task import:

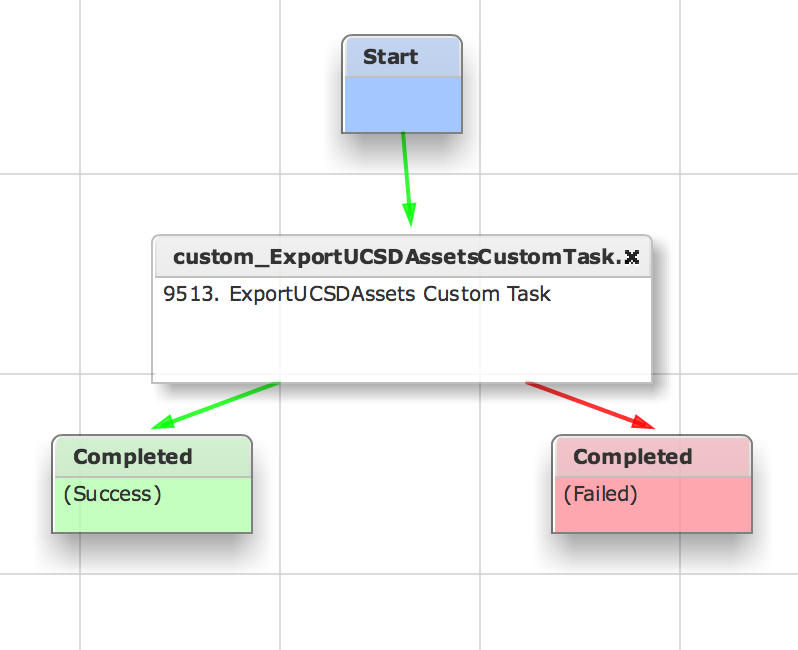

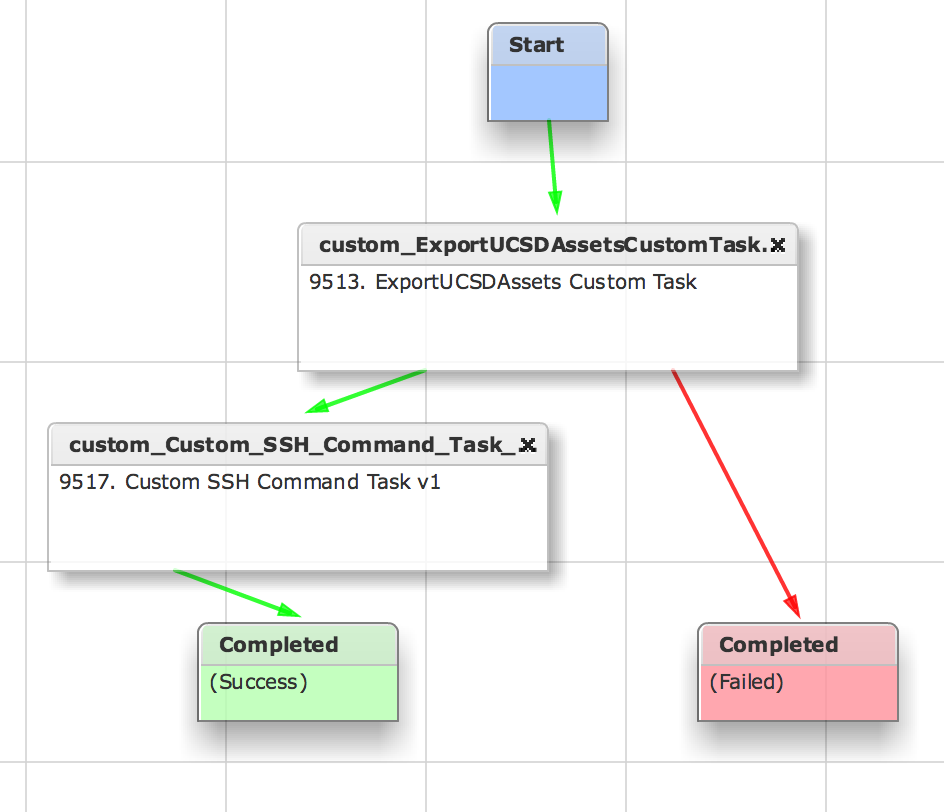

The workflow:

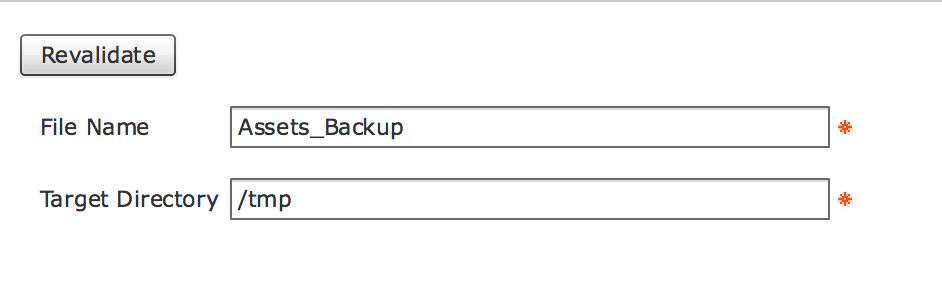

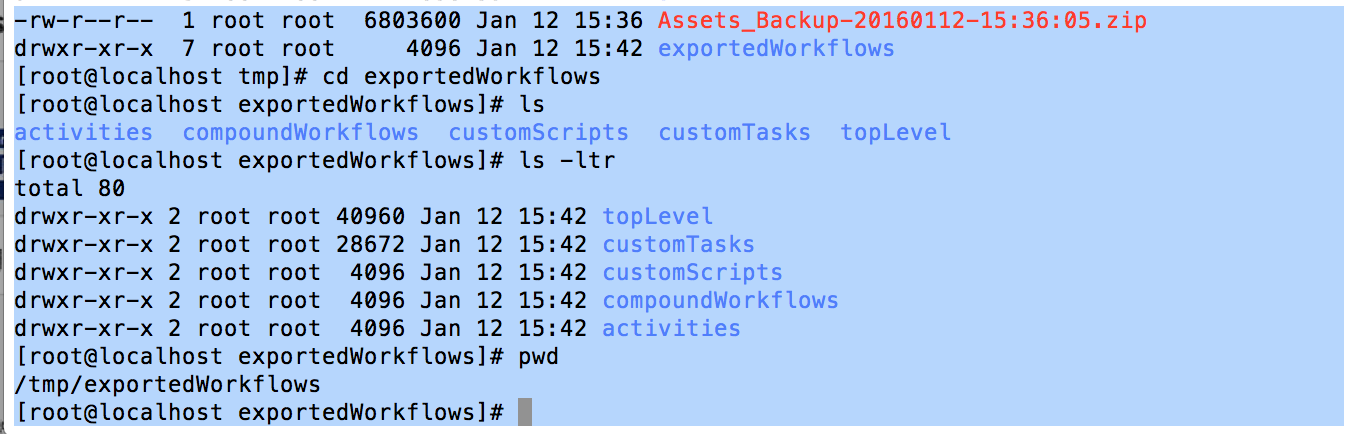

The default location of the workflow zip file:

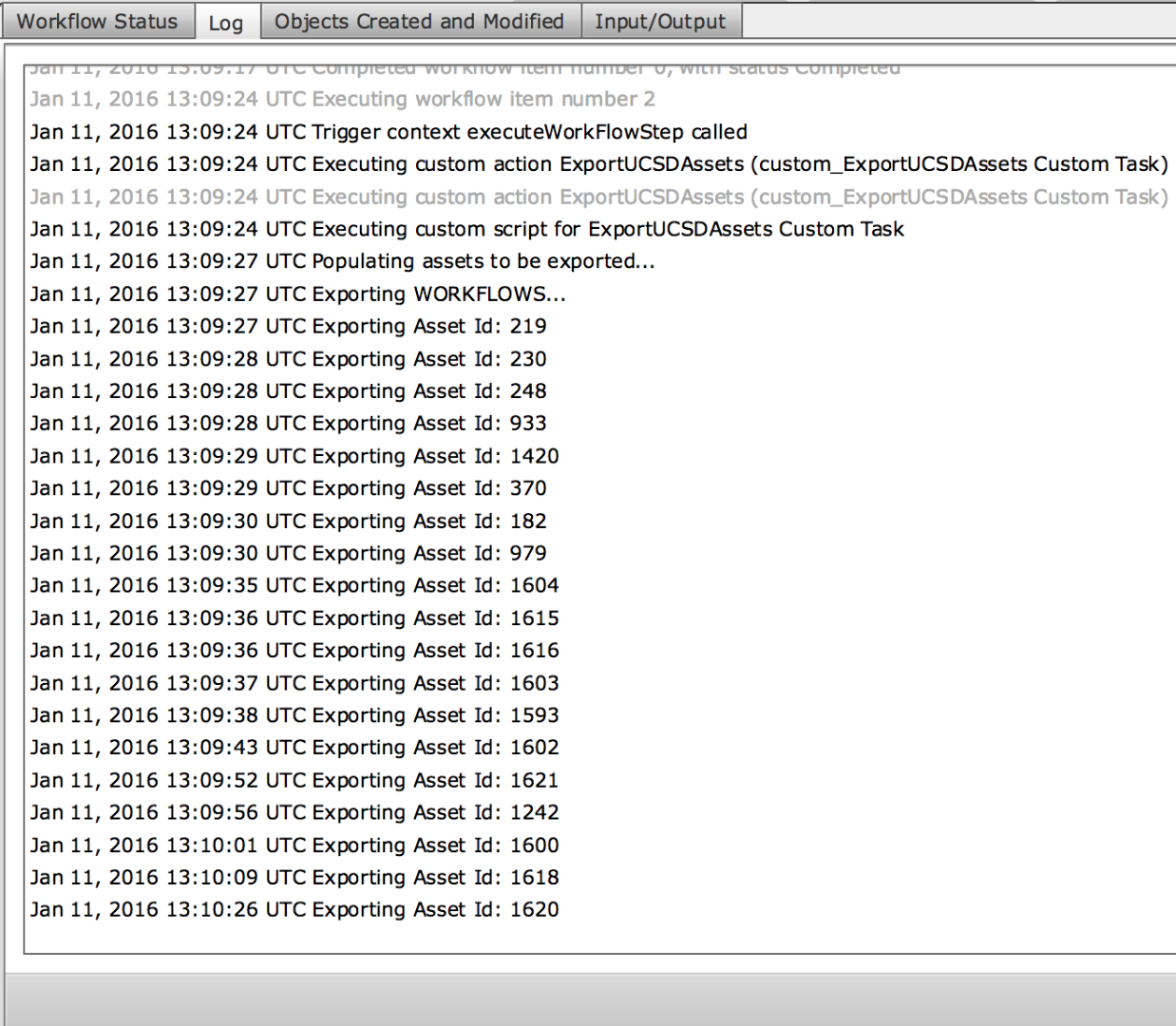

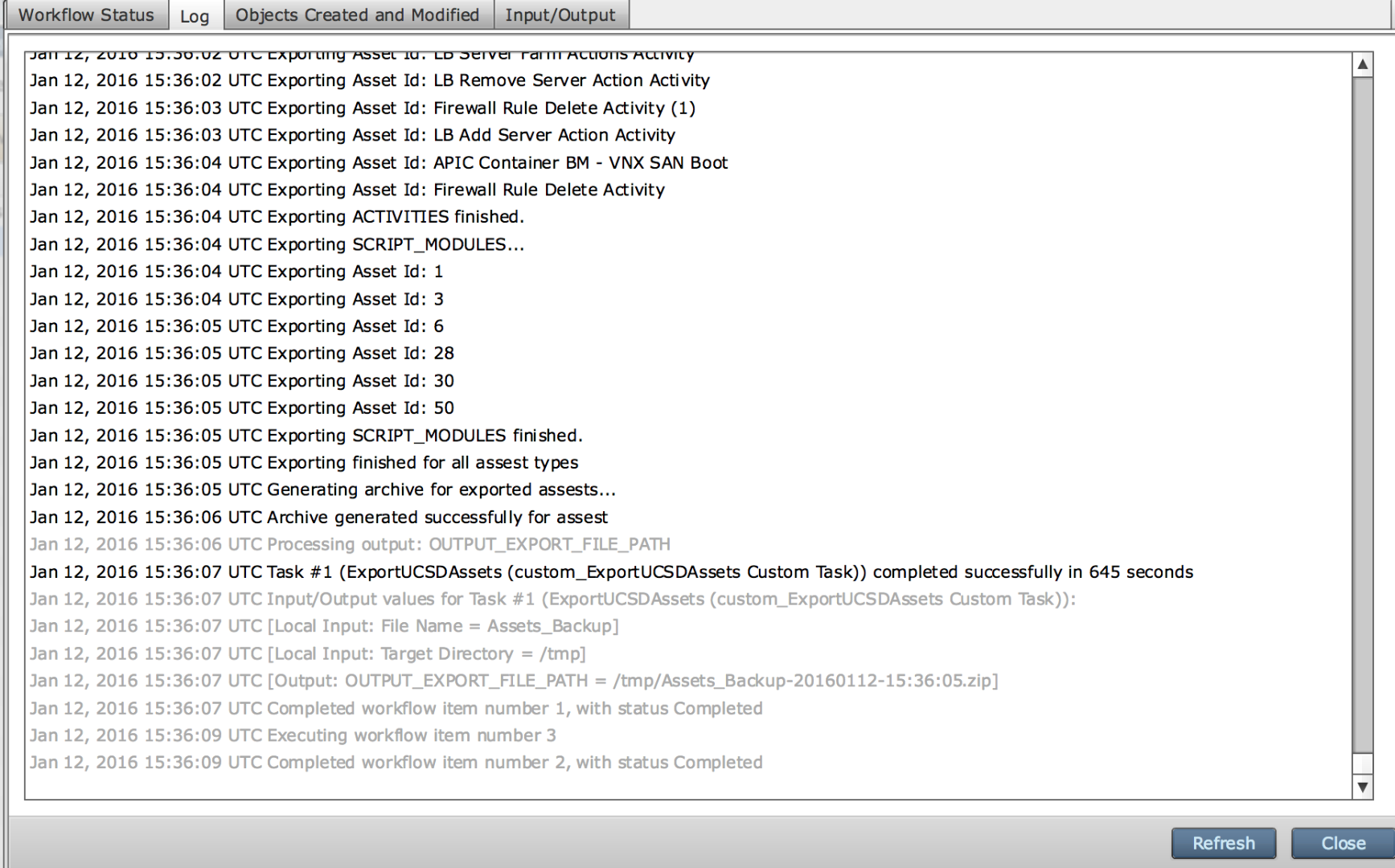

Example run:

Note:

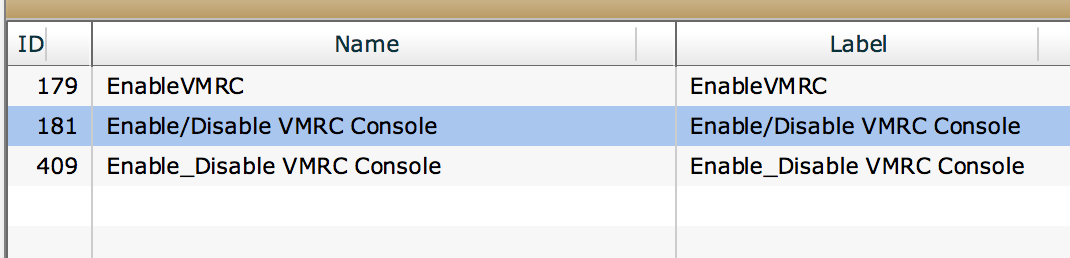

Workflows or custom tasks with a "/" in the name can cause problems, and such tasks or workflows may have to be renamed:

The directory of the zip file on the UCSD appliance:

To get the file off the appliance, a cron job could copy it to an FTP server, a file share on UCSD could work as well, or you could set up an SSH task after this task that sends the file to an FTP server (or copies it with scp).
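As a minimal sketch of the cron-job option, assuming all exported zip files land in a single directory on the appliance, a script run from cron could pick the newest zip and copy it off with scp. The function name newest_export, the EXPORT_DIR path and the DEST target are hypothetical placeholders (the actual directory is only shown in the screenshot), so adjust them to your environment.

```shell
#!/bin/sh
# Print the most recently modified .zip file in the given directory.
# Prints nothing (and returns non-zero) if the directory has no .zip files.
newest_export() {
  dir=$1
  # ls -t sorts by modification time, newest first.
  newest=$(ls -1t "$dir"/*.zip 2>/dev/null | head -n 1)
  [ -n "$newest" ] && printf '%s\n' "$newest"
}

# Hypothetical placeholders: set these for your appliance and backup host.
EXPORT_DIR=${EXPORT_DIR:-/path/to/export/dir}
DEST=${DEST:-backup@backuphost:/backups/ucsd/}

# Only copy when invoked as "<script> run", so the function can be tested alone.
if [ "${1:-}" = "run" ]; then
  file=$(newest_export "$EXPORT_DIR") || { echo "No export found" >&2; exit 1; }
  scp "$file" "$DEST"
fi
```

A crontab entry such as `30 2 * * * /usr/local/bin/copy_export.sh run` (hypothetical script path) would run it every night at 02:30; pick a time after the scheduled export workflow has finished. Non-interactive scp assumes key-based SSH authentication is set up for the backup host.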

Example when the workflow execution finishes:

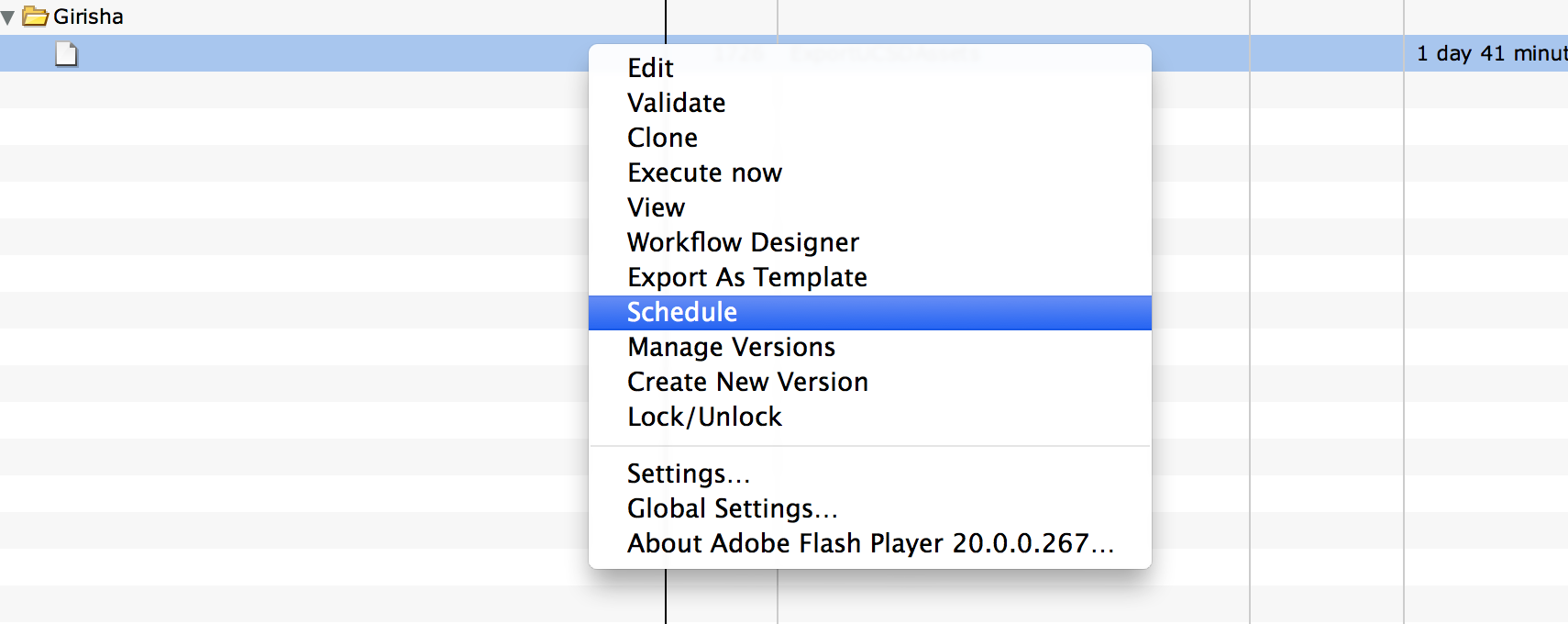

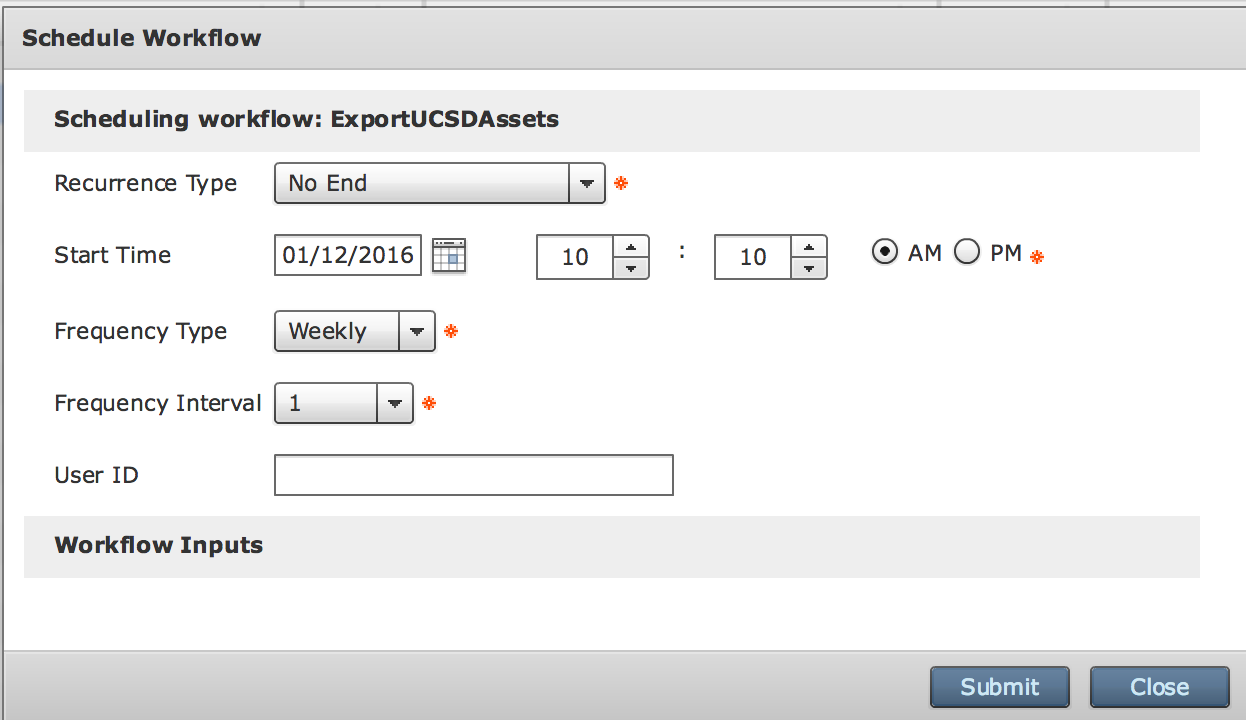

Scheduling the workflow:

The schedule I set up in my lab:

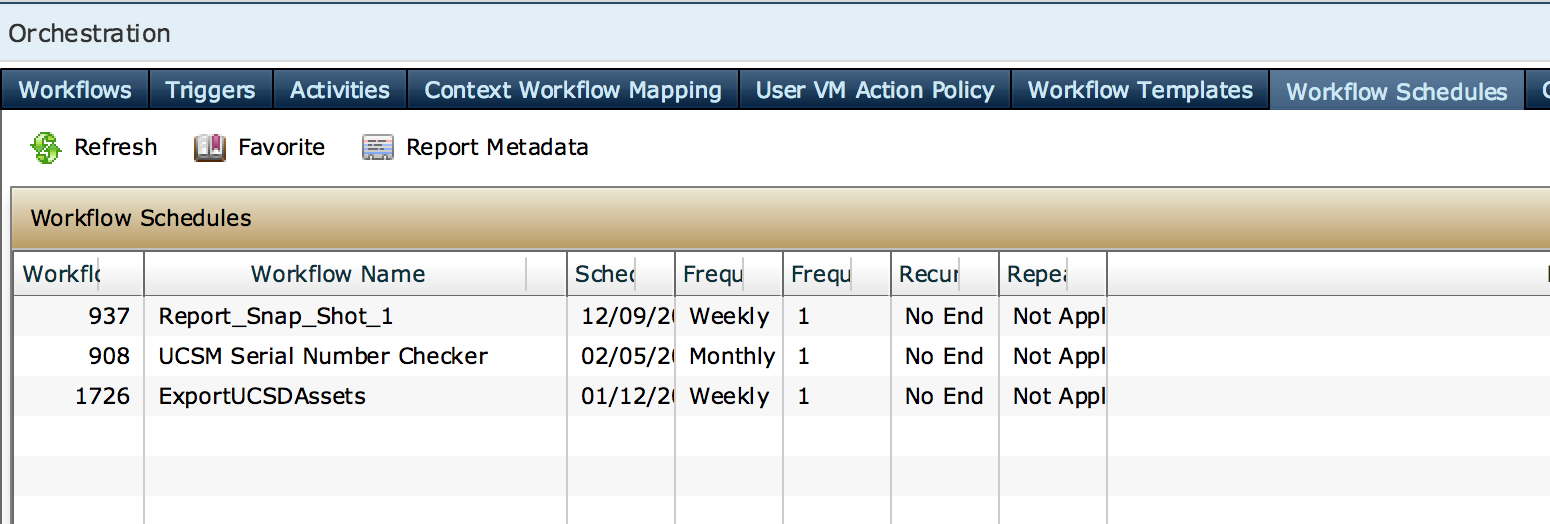

The resulting schedule:

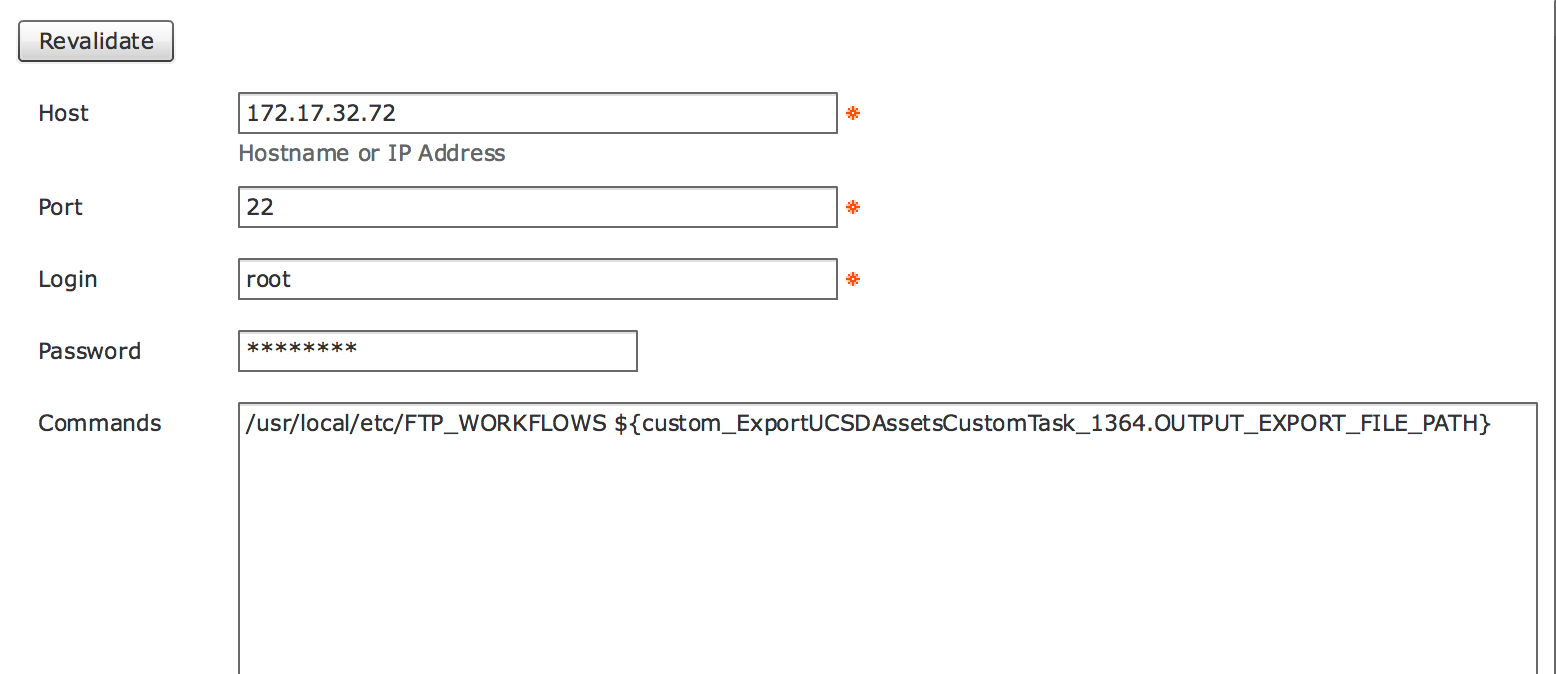

Update to the original workflow: an example with an FTP option:

The SSH content:

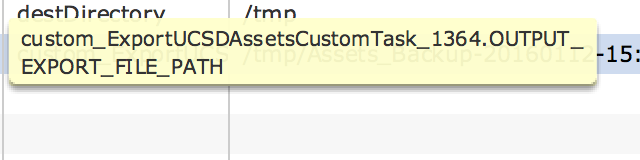

The output file variable:

FTP script on the UCSD server:

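#!/bin/sh
# Upload an exported workflow file to an FTP server under a timestamped name.
# Usage: <script> <local-file>   (the output file variable is passed as $1)

# Print the commands for the ftp client on stdout; the sleeps give the
# server time to answer before the next command is sent.
# Arguments: server login password ftp-command [args...]
upload_script(){
  echo "verbose"
  echo "open $1"
  sleep 2
  echo "user $2 $3"
  sleep 3
  shift 3
  echo "bin"
  echo "$*"
  sleep 10
  echo "quit"
}

# Arguments: server login password local-file remote-file
doftpput(){
  upload_script "$1" "$2" "$3" put "$4" "$5" | /usr/bin/ftp -i -n -p
}

# The file to upload is the first argument.
BKFILE="$1"

if [ -z "$BKFILE" ] || [ ! -f "$BKFILE" ]
then
  echo "Backup failed: file '$BKFILE' not found."
  # "return" is only valid inside a function; use "exit" at top level.
  exit 1
fi

NEWFILE="workflow_backup_$(date '+%m-%d-%Y-%H-%M-%S').zip"
FTPSERVER=172.17.32.110
FTPLOGIN=cisco
FTPPASS=cisco

doftpput "$FTPSERVER" "$FTPLOGIN" "$FTPPASS" "$BKFILE" "$NEWFILE"

exit 0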

New API to export workflows:

Request

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIUnifiedExport&opData={param0:{%22workflowIds%22:[%22450%22,%22442%22],%22customTaskIds%22:[],%22scriptModuleId%22:[],%22activityNames%22:[]},param1:%22TestExport%22}'

Attached Document printed out:

Guide to Using Orchestration REST APIs

Here is a simple guide, with examples, to the Export/Import workflow APIs introduced or enhanced in 6.6. The examples below use the curl tool to illustrate all the APIs. The commands shown can be copied and used directly (after updating the parameters to match your needs).

General Precautions

- Any special characters (for example, the double quote ") must be URL encoded. In the examples below, we use %22 for the double quote. Notice that the URL is enclosed in single quotes to prevent the shell from parsing it.

- The Cloupia-Request-Key must be copied from the UCSD UI. Please read the UCSD REST API Getting Started Guide.

- The REST API to download a file will return Base64 encoded data. That data must be saved into a file. See example below.
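The URL-encoding precaution above can be handled by a small helper. This is a minimal sketch, assuming the URL layout used throughout this guide (`https://HOST/app/api/rest?formatType=json&opName=...&opData=...`); the function name ucsd_url and the KEY variable are hypothetical. It only encodes double quotes as %22, so any other special characters still need manual %XX encoding.

```shell
#!/bin/sh
# Build a UCSD REST API URL:
#   https://HOST/app/api/rest?formatType=json&opName=OPNAME&opData=OPDATA
# Double quotes in OPDATA are encoded as %22; nothing else is encoded.
ucsd_url() {
  host=$1
  op_name=$2
  # Replace every double quote with %22.
  op_data=$(printf '%s' "$3" | sed 's/"/%22/g')
  printf 'https://%s/app/api/rest?formatType=json&opName=%s&opData=%s\n' \
    "$host" "$op_name" "$op_data"
}

# Example (KEY is a hypothetical variable holding your X-Cloupia-Request-Key):
# curl -g --insecure -X POST --header "X-Cloupia-Request-Key:$KEY" \
#   -H "Content-Type:application/json" \
#   "$(ucsd_url 172.39.119.140 version:userAPIUnifiedExportStatus '{param0:"TestExport.wfdx"}')"
```

The -g option matters here: without it, curl would try to interpret the `{}` and `[]` characters in opData as its own URL globbing syntax.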

Export workflows

To export UCSD elements (e.g., workflows, tasks) to a file, use the API: userAPIUnifiedExport

In the command below:

workflowIds: This is an array of workflow ids that you see in the UCSD UI.

customTaskIds: This is an array of ids of all custom tasks to export

…

param1: This is the name of the file into which the contents are exported. No pathnames are allowed, just a simple filename. If the filename has any special characters, encode them as %XX.

In the example below, we are exporting workflows with ids [450, 442] into a file TestExport.

In all the examples below, the address of UCSD is 172.39.119.140
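To export a different set of workflows, the opData for userAPIUnifiedExport can be generated rather than edited by hand. This is a minimal sketch; the function name export_opdata is hypothetical, and it leaves customTaskIds, scriptModuleId and activityNames empty, exactly as in the request below. The output is already %22-encoded and can be placed after `opData=` in the URL.

```shell
#!/bin/sh
# Print the %22-encoded opData for userAPIUnifiedExport.
# Usage: export_opdata FILENAME WORKFLOW_ID...
# customTaskIds, scriptModuleId and activityNames are left empty.
export_opdata() {
  file=$1
  shift
  ids=""
  for id in "$@"; do
    # Each workflow ID is sent as a quoted string; join them with commas.
    ids="${ids:+$ids,}%22$id%22"
  done
  # %% in the printf format produces a literal %, so %%22 prints %22.
  printf '{param0:{%%22workflowIds%%22:[%s],%%22customTaskIds%%22:[],%%22scriptModuleId%%22:[],%%22activityNames%%22:[]},param1:%%22%s%%22}\n' \
    "$ids" "$file"
}
```

For example, `export_opdata TestExport 450 442` prints the same opData string that appears in the request below.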

Request

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIUnifiedExport&opData={param0:{%22workflowIds%22:[%22450%22,%22442%22],%22customTaskIds%22:[],%22scriptModuleId%22:[],%22activityNames%22:[]},param1:%22TestExport%22}'

* About to connect() to 172.39.119.140 port 443 (#0)

* Trying 172.39.119.140... connected

* Connected to 172.39.119.140 (172.39.119.140) port 443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* warning: ignoring value of ssl.verifyhost

* skipping SSL peer certificate verification

* SSL connection using TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA

* Server certificate:

* subject: CN="Cisco Systems, Inc.",OU=CSPG,O="Cisco Systems, Inc.",L=San Jose,ST=California,C=US

* start date: Nov 10 04:46:29 2017 GMT

* expire date: Nov 09 04:46:29 2022 GMT

* common name: Cisco Systems, Inc.

* issuer: CN="Cisco Systems, Inc.",OU=CSPG,O="Cisco Systems, Inc.",L=San Jose,ST=California,C=US

> POST /app/api/rest?formatType=json&opName=version:userAPIUnifiedExport&opData={param0:{%22workflowIds%22:[%22450%22,%22442%22],%22customTaskIds%22:[],%22scriptModuleId%22:[],%22activityNames%22:[]},param1:%22TestExport%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.140

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Type:application/json

>

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=9CF7C252198C3E0B43C236949488042D5B58E9098151AA7AE4EDFE374812645F; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 125

< Date: Thu, 15 Mar 2018 16:37:41 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.140 left intact

* Closing connection #0

Response

{ "serviceResult":"TestExport.wfdx", "serviceError":null, "serviceName":"InfraMgr", "opName":"version:userAPIUnifiedExport" }

The response indicates that the UCSD will create the export file TestExport.wfdx with the specified contents.
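The file name in serviceResult is the input to the next call, so a script needs to pull it out of the response. This is a minimal sketch; the function name service_result is hypothetical, and a sed pattern stands in for a real JSON parser. It assumes serviceResult is a plain string without escaped quotes, as in the responses shown in this guide. When serviceResult is an object (as in the upload response), it prints nothing.

```shell
#!/bin/sh
# Read a UCSD JSON response on stdin and print the string value of
# "serviceResult". Prints nothing if serviceResult is not a plain string.
service_result() {
  sed -n 's/.*"serviceResult":"\([^"]*\)".*/\1/p'
}

# Example: capture the export file name (-s keeps curl quiet; the other
# options and the URL are the same as in the export request above).
# file=$(curl -s -g --insecure -X POST ... | service_result)
```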

Check status

Once you request UCSD to export some elements to a file, you can check progress with the API: userAPIUnifiedExportStatus. The input is the name of the file you received in the response to your export request above (TestExport.wfdx).

Request

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIUnifiedExportStatus&opData={param0:%22KDTestExport.wfdx%22}'

> POST /app/api/rest?formatType=json&opName=version:userAPIUnifiedExportStatus&opData={param0:%22KDTestExport.wfdx%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.140

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Type:application/json

>

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=6B4B227C82DA691C8685391C08D46F0A445D1EC7D131A2D0E47B763187A3DF31; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 125

< Date: Thu, 15 Mar 2018 16:42:46 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.140 left intact

* Closing connection #0

Response

{ "serviceResult":"Completed", "serviceError":null, "serviceName":"InfraMgr", "opName":"version:userAPIUnifiedExportStatus" }

The response indicates that the export operation has been completed.
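For a scheduled or scripted export, the status check can be repeated until it reports Completed. This is a minimal sketch; the function name wait_for_completed is hypothetical. Only the value "Completed" appears in this guide, so the sketch treats any other value as still in progress (an assumption), and it gives up after a fixed number of tries.

```shell
#!/bin/sh
# Run a status command until its serviceResult is "Completed".
# Usage: wait_for_completed MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# COMMAND must print the UCSD JSON status response on stdout.
wait_for_completed() {
  tries=$1
  delay=$2
  shift 2
  status=""
  while [ "$tries" -gt 0 ]; do
    # Extract the plain-string serviceResult value from the response.
    status=$("$@" | sed -n 's/.*"serviceResult":"\([^"]*\)".*/\1/p')
    if [ "$status" = "Completed" ]; then
      return 0
    fi
    tries=$((tries - 1))
    # Wait before the next attempt, but not after the last one.
    [ "$tries" -gt 0 ] && sleep "$delay"
  done
  echo "Not completed; last status: ${status:-unknown}" >&2
  return 1
}

# Example (KEY is a hypothetical variable holding your request key):
# wait_for_completed 30 10 curl -s -g --insecure -X POST \
#   --header "X-Cloupia-Request-Key:$KEY" -H "Content-Type:application/json" \
#   'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIUnifiedExportStatus&opData={param0:%22TestExport.wfdx%22}'
```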

Download the file with the exported workflows

To download the file with the exported contents, use the API: userAPIDownloadFile. The value of the parameter param0 is the name of the file to be downloaded. The filename cannot contain any paths or drive names etc.

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIDownloadFile&opData={param0:%22TestExport.wfdx%22}'

> POST /app/api/rest?formatType=json&opName=version:userAPIDownloadFile&opData={param0:%22TestExport.wfdx%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.140

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Type: application/

> json

>

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=F655E001EB85392518E8D797EE6C650F82C4A3CDF89341FAA44E4533A6F3B3DB; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Disposition: attachment; filename=TestExport.wfdx

< Content-Type: application/x-download

< Transfer-Encoding: chunked

< Date: Thu, 15 Mar 2018 16:45:18 GMT

< Server: Web

Response

In this case, the response contains a file as a multipart attachment. The data is in XML format, and a snippet of the file is shown below. The proper way to save the file is to extract the content from the <?xml version="1.0" ?> declaration through the closing </OrchExportInfo> tag.

<?xml version="1.0" ?><OrchExportInfo><Time>Thu Mar 15 09:37:42 PDT 2018</Time><User></User><Comments></Comments><UnifiedFeatureAssetInfo><addiInfo></addiInfo><featureAssetEntry

The easiest way is to use a tool such as grep or sed (on Linux systems) and filter the essential data. For example:

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=version:userAPIDownloadFile&opData={param0:%22TestExport.wfdx%22}' | grep -e '<?xml version=.*</OrchExportInfo>' > MyDownloadFile.wfdx
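A variant of this filter keeps only the matched XML, even if other text shares the same line. This is a minimal sketch; the function name extract_wfdx is hypothetical. It relies on grep -o, which GNU and BSD grep support but POSIX does not require, and like the command above it assumes the XML is on a single line.

```shell
#!/bin/sh
# Read a download response on stdin and print only the exported XML,
# from "<?xml version=" through the closing </OrchExportInfo> tag.
# Returns non-zero (grep's status) if no export data is found.
extract_wfdx() {
  # In a basic regular expression, "?" and "<" are literal characters.
  grep -o '<?xml version=.*</OrchExportInfo>'
}

# Example: save the export and report a failed download.
# curl -s -g --insecure -X POST ... | extract_wfdx > MyDownloadFile.wfdx \
#   || echo "No export data found" >&2
```

Checking the exit status is useful in scheduled runs: an empty MyDownloadFile.wfdx otherwise looks like a successful backup.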

Upload file to UCSD

The API for uploading a file (as a multipart attachment) is: userAPIUploadFile. Notice that the Content-Type for this API is no longer application/json. The local file to be uploaded to UCSD is specified with the -F argument. The opData parameters have no real significance here, because the multipart attachment carries all the details.

If you need to upload and also import the contents of an export file into UCSD, a simpler API is userAPIUnifiedImport, illustrated later in this note. The userAPIUnifiedImport handles uploading as well as importing in one call.

curl -v -F "File=@./TestWorkflows.wfdx" -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:multipart/form-data" 'https://172.39.119.140/app/api/rest?formatType=json&opName=servicerequest:userAPIUploadFile&opData={param0:{%22uploadPolicy%22:%22replace%22,%22description%22%22:%22TestWorkflows.wfdx%22}}'

> POST /app/api/rest?formatType=json&opName=servicerequest:userAPIUploadFile&opData={param0:{%22uploadPolicy%22:%22replace%22,%22description%22%22:%22TestWorkflows.wfdx%22}} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.140

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Length: 36289

> Expect: 100-continue

> Content-Type:multipart/form-data; boundary=----------------------------5f04f0ca472f

>

< HTTP/1.1 100 Continue

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=337ED13DBEDC7FBCB20DD719A1FC349552E33F258DA04135BAD1E8C33A62082A; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 299

< Date: Thu, 15 Mar 2018 17:15:18 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.140 left intact

* Closing connection #0

Response

The response contains the size of the file uploaded and the current state of the upload. It also indicates if a file with that name already exists.

{ "serviceResult":{"fileName":"TestWorkflows.wfdx","description":"","comment":null,"uploadPolicy":"","fileSize":36079,"fileUploadStatus":"Started","failureReason":"","userName":"admin","isFileExist":false}, "serviceError":null, "serviceName":"InfraMgr", "opName":"servicerequest:userAPIUploadFile" }
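The upload call can be wrapped in a function for reuse. This is a minimal sketch; the function name ucsd_upload and the CURL override are hypothetical. Instead of setting Content-Type by hand, it lets curl's -F option generate the multipart/form-data header with its boundary. Its opData uses well-formed JSON with the file name as the description, since the guide notes these parameters have no real significance for this call. Setting CURL=echo prints the command instead of running it.

```shell
#!/bin/sh
# Upload a local .wfdx file to UCSD with userAPIUploadFile.
# Usage: ucsd_upload HOST API_KEY LOCAL_FILE
# CURL defaults to curl; set CURL=echo to print the command instead.
ucsd_upload() {
  host=$1
  key=$2
  file=$3
  name=$(basename "$file")
  # -F attaches the file and makes curl send multipart/form-data.
  ${CURL:-curl} -s -g --insecure -X POST \
    -F "File=@$file" \
    --header "X-Cloupia-Request-Key:$key" \
    "https://$host/app/api/rest?formatType=json&opName=servicerequest:userAPIUploadFile&opData={param0:{%22uploadPolicy%22:%22replace%22,%22description%22:%22$name%22}}"
}
```

The JSON response can then be checked for fileUploadStatus and isFileExist, as in the response shown above.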

Upload Status

When uploading large files, you may wish to check the status or progress of the upload using the API: userAPIGetFileUploadStatus. The param0 argument value is the name of the file that you just uploaded.

Request

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.140/app/api/rest?formatType=json&opName=servicerequest:userAPIGetFileUploadStatus&opData={param0:%22TestWorkflows.wfdx%22}'

> POST /app/api/rest?formatType=json&opName=servicerequest:userAPIGetFileUploadStatus&opData={param0:%22TestWorkflows.wfdx%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.140

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Type:application/json

>

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=E99938A769745540AAE5903DFD9019AE72C4607151CDF3D99652971067BA2222; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 199

< Date: Thu, 15 Mar 2018 17:20:41 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.140 left intact

* Closing connection #0

Response

{ "serviceResult":{"fileName":"TestWorkflows.wfdx","uploadStatus":"Completed","failureCause":""}, "serviceError":null, "serviceName":"InfraMgr", "opName":"servicerequest:userAPIGetFileUploadStatus" }

Notice that the upload was completed successfully.

Import elements from a file

You can upload a file containing workflows, tasks, etc. and also import the contents of the uploaded file into UCSD using a single API: userAPIUnifiedImport.

Here, param1 contains various attributes for the import operation, such as the import policy for workflows, custom tasks, script modules, and activities. In param0, uploadPolicy holds the name of the file. The argument param2 provides the name of the folder into which the file contents (workflows, etc.) will be imported.

In the sample below, we upload the file TestWorkflows.wfdx to the UCSD at 172.39.119.141 and indicate that the contents of the file should be imported into the folder “NewImports”.

Notice that the multipart attachment must be specified with the -F argument.

Request

curl -v -g --insecure -X POST -F "File=@./TestWorkflows.wfdx" --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:multipart/form-data" 'https://172.39.119.141//app/api/rest?formatType=json&opName=version:userAPIUnifiedImport&opData={param0:{%22uploadPolicy%22:%22TestWorkflows.wfdx%22,%22description%22:%22sample%22},param1:{%22workflowImportPolicy%22:%22replace%22,%22customTaskImportP...

2ScriptModuleImportPolicy%22:%22replace%22,%22activityImportPolicy%22:%22replace%22},param2:%22sNewImports%22}'

> POST //app/api/rest?formatType=json&opName=version:userAPIUnifiedImport&opData={param0:{%22uploadPolicy%22:%22TestWorkflows.wfdx%22,%22description%22:%22sample%22},param1:{%22workflowImportPolicy%22:%22replace%22,%22customTaskImportPolicy%22:%22replace%22,%22ScriptModuleImportPolicy%22:%22replace%22,%22activityImportPolicy%22:%22replace%22},param2:%22sNewImports%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.141

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Length: 36289

> Expect: 100-continue

> Content-Type:multipart/form-data; boundary=----------------------------68b99d333031

>

< HTTP/1.1 100 Continue

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=156302B86B4B10504FF8EDA7D8123DE99807E319F9798E6698F8D5DD7D484339; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 128

< Date: Thu, 15 Mar 2018 17:53:40 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.141 left intact

* Closing connection #0

Response

{ "serviceResult":"TestWorkflows.wfdx", "serviceError":null, "serviceName":"InfraMgr", "opName":"version:userAPIUnifiedImport" }

The response just provides the name of the file uploaded for importing.
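The long opData for userAPIUnifiedImport is easy to mistype by hand. This is a minimal sketch that generates it; the function name import_opdata is hypothetical. It applies one policy value to all four import policies, as the request above does; "replace" is the only policy value shown in this guide. Only double quotes are encoded, so file, description or folder names with other special characters still need %XX encoding. Called with the folder sNewImports (the name used in the logged request), it reproduces the opData in that log.

```shell
#!/bin/sh
# Print the %22-encoded opData for userAPIUnifiedImport.
# Usage: import_opdata FILENAME DESCRIPTION POLICY FOLDER
# POLICY is used for workflows, custom tasks, script modules and activities.
import_opdata() {
  file=$1 desc=$2 policy=$3 folder=$4
  # %% in the printf format produces a literal %, so %%22 prints %22.
  printf '{param0:{%%22uploadPolicy%%22:%%22%s%%22,%%22description%%22:%%22%s%%22},param1:{%%22workflowImportPolicy%%22:%%22%s%%22,%%22customTaskImportPolicy%%22:%%22%s%%22,%%22ScriptModuleImportPolicy%%22:%%22%s%%22,%%22activityImportPolicy%%22:%%22%s%%22},param2:%%22%s%%22}\n' \
    "$file" "$desc" "$policy" "$policy" "$policy" "$policy" "$folder"
}
```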

Import Status

Once an import operation has been initiated, use the API userAPIUnifiedImportStatus to check its status.

The only argument needed is the filename that you sent in the previous userAPIUnifiedImport call.

curl -v -g --insecure -X POST --header "X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976" -H "Content-Type:application/json" 'https://172.39.119.141/app/api/rest?formatType=json&opName=version:userAPIUnifiedImportStatus&opData={param0:%22TestWorkflows.wfdx%22}'

> POST /app/api/rest?formatType=json&opName=version:userAPIUnifiedImportStatus&opData={param0:%22TestWorkflows.wfdx%22} HTTP/1.1

> User-Agent: curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.27.1 zlib/1.2.3 libidn/1.18 libssh2/1.4.2

> Host: 172.39.119.141

> Accept: */*

> X-Cloupia-Request-Key:DAFEFD6CB27B4D779D25EDF5E9BEB976

> Content-Type:application/json

>

< HTTP/1.1 200 OK

< Set-Cookie: JSESSIONID=A8A04A6C3D5BF060C8CB055C470165C5D4827F59A5063B7CA06EFEA651CE331E; Path=/app; Secure; HttpOnly

< Content-Security-Policy: child-src 'self'

< Strict-Transport-Security: max-age=31536000

< X-Frame-Options: SAMEORIGIN

< X-Content-Type-Options: nosniff

< X-XSS-Protection: 1; mode=block

< Cache-Control: max-age=0,must-revalidate

< Expires: -1

< Content-Type: application/json;charset=UTF-8

< Content-Length: 219

< Date: Thu, 15 Mar 2018 17:56:54 GMT

< Server: Web

<

* Connection #0 to host 172.39.119.141 left intact

* Closing connection #0

Response

{ "serviceResult":"number - these custom input(s) skipped since they already exist import completed", "serviceError":null, "serviceName":"InfraMgr", "opName":"version:userAPIUnifiedImportStatus" }

The response indicates a successful import of the file contents. It also indicates that some elements were skipped because they already exist in the UCSD.

--------------------------------

Working with PostMan (and other tools)

With Postman, it is a bit easier to manage the REST API interaction. Here are some examples.

Import Workflows: userAPIUnifiedImport

Here is the URL to be entered:

This will place the imported workflows/tasks in the directory "Import" once UCSD successfully imports the contents of the file.

The file from which the elements are imported is attached using the Postman interface (see screenshot below).

The attached file is part of the Body, and Postman handles the encoding.

In the Body tab, the Key:Value pair can be Text or a File. From the pull-down, select "File", then click "Choose Files" and select a file to upload and import. In the screenshot above, the file kdExport4.wfdx is selected for upload. The Key can be any name you like.

You also need to add the header X-Cloupia-Request-Key with the appropriate value, as well as the correct Content-Type header.

--------------------------------

Hey Orf - is there an equivalent to import the workflows too? It would be good to dump them out to a Git repo so that we can move workflows from Dev to Prod without having to do it manually.

Thanks

Regards

Phil

--------------------------------

Sorry, no one has written one.

--------------------------------

Aloha Orf!

We've been enjoying these exports for a while now - thanks!

Last week, we had an OS problem on a UCSD appliance, and while attempting to restore workflows, we noticed that these workflow exports do not include all associated custom tasks, activities, etc., the way a manual export seems to by default - no doubt to reduce duplication, which is understandable. Here are 2 quick questions around this:

1. Is there a way to tweak writeWFDX() to include all associated custom tasks, activities, etc. with each export? We have ample space, and the workflows would be faster to import that way.

2. Is there a best way to import everything from this export-all (the way writeWFDX() works now)? I think we imported the workflows first, forgetting that they were imported without their associated custom tasks, and things started behaving very strangely: disconnected task links, etc.

Thanks!

--------------------------------

So I am re-testing this right now, but I am looking into the code:

// Temporary export layout under the current user's home directory.
var currentUsersHomeDir = java.lang.System.getProperty("user.home");
// A timestamp suffix makes the temporary directory unique per run.
var tempExportDir = currentUsersHomeDir + "/WorkflowExport_temp" + java.lang.System.currentTimeMillis();
var wfsExportDir = tempExportDir + "/exportedWorkflows";
var topLevelWfsExportDir = wfsExportDir + "/topLevel";
var customTasksExportDir = wfsExportDir + "/customTasks";
var activitiesExportDir = wfsExportDir + "/activities";
var customScriptsExportDir = wfsExportDir + "/customScripts";
var compoundWfsExportDir = wfsExportDir + "/compoundWorkflows";
var exportedWfdxFile = null;
// Name of the resulting zip file, taken from the task input.
var exportedZipFileName = input.exportedFileName;

And you are saying that, in the end, a certain asset ID does not contain all of its associated custom tasks and variables?

--------------------------------

From what we've seen, none of the workflow exports include the custom tasks.

--------------------------------

Only checked 4 or 5 so far...

--------------------------------

I see the issue now…

inflating: exportedWorkflows/customTasks/SetConcreteDeviceIdentity .wfdx

inflating: exportedWorkflows/customTasks/VARIABLE_CONVERTER_Gen2VLANID.wfdx

inflating: exportedWorkflows/customTasks/Trigger_APIC_Container_DR_Site.wfdx

inflating: exportedWorkflows/customTasks/Select Locale .wfdx

inflating: exportedWorkflows/customTasks/Common_Service_Graph_Node_Identity.wfdx

inflating: exportedWorkflows/compoundWorkflows/APICContainerResizeVPXVM.wfdx

inflating: exportedWorkflows/compoundWorkflows/ACI - Create Blank Interface Selectors.wfdx

inflating: exportedWorkflows/compoundWorkflows/APICContainerSRMSettings.wfdx

You would have to import all the custom tasks first, then the compound workflows, and then the workflows to get them all aligned correctly…
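The import order described above can be scripted against the unzipped export. This is a minimal sketch; the function name list_in_import_order is hypothetical, and it only lists the files in order (custom tasks, then compound workflows, then top-level workflows). Each file would still be imported through the UI or userAPIUnifiedImport. The activities and customScripts folders from the export code are deliberately left out, because the thread does not say where they belong in the order.

```shell
#!/bin/sh
# List the .wfdx files in an unzipped exportedWorkflows directory in the
# order: customTasks, compoundWorkflows, topLevel.
# Usage: list_in_import_order EXPORTED_WORKFLOWS_DIR
list_in_import_order() {
  dir=$1
  for sub in customTasks compoundWorkflows topLevel; do
    # The glob handles names with spaces, e.g. "Select Locale .wfdx".
    for f in "$dir/$sub"/*.wfdx; do
      # Skip the unexpanded pattern when a folder has no .wfdx files.
      [ -e "$f" ] && printf '%s\n' "$f"
    done
  done
  return 0
}
```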

asking BU now…

--------------------------------

Yeah, I see it is all separate.

We may have to rewrite this to use this API call:

userAPIExportWorkflows

Look it up in the REST API browser.

--------------------------------

Ok, thanks - checking out userAPIExportWorkflows...
