Policy Group Best Practice

07-07-2021 12:09 PM

Hey guys, I would like you to help me with a question.

Let's say we have several bare-metal servers, each with five or more interfaces. One of those interfaces may be used for management and the others for production. They are in different VLANs, as access ports or trunk ports on traditional Nexus switches. No port channels are configured.

My question is: should I create only one Policy Group per server, because all the interfaces will share the same properties (same speed, CDP, LLDP, etc.), or should I create one Policy Group per interface?

We are using a network-centric approach, and I would be doing static binding for each interface to its respective EPG (one EPG per VLAN, since the design is network centric).

I was thinking that if one of those interfaces needed a different speed later, after the migration to ACI, I would have to create another Policy Group just for that interface. But creating Policy Groups per interface may be a lot of work.

I don't know if there is anything else I should take into consideration before deciding to use Policy Groups per interface.

Accepted Solutions

07-07-2021 03:39 PM

Hi @Fernando Hernández ,

This is an interesting question, and at the heart of it is an understanding of how Access Policy Chains work, and how the anchor point of such chains is the AAEP to Domain mapping.

But the KEY point you have to consider is this:

A physical port can ONLY be linked to ONE Interface Policy Group (either an Access Port Policy Group (APPG, for single-cable attachments), a Port Channel Interface Policy Group or a Virtual Port Channel Interface Policy Group).

Now for Port Channel Interface Policy Groups (PCIPGs) and Virtual Port Channel Interface Policy Groups (VPCIPGs), I would always recommend a new V/PCIPG for every PC/VPC because of the risk of ACI seeing two different PCs/VPCs as a single unit, and to give more flexibility in the future.
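As a rough illustration of the "one V/PCIPG per PC/VPC" recommendation, the sketch below checks a planned design for bundle policy groups that are referenced by more than one bundle. It is plain Python over made-up data; the bundle and policy group names are hypothetical and nothing here talks to an APIC.

```python
from collections import defaultdict


def find_shared_bundle_groups(bindings):
    """Return bundle policy groups that are referenced by more than one bundle.

    `bindings` is a list of (bundle_name, policy_group_name) pairs.
    Both names are hypothetical labels used only in this sketch.
    """
    bundles_by_group = defaultdict(set)
    for bundle, group in bindings:
        bundles_by_group[group].add(bundle)
    # Keep only the groups shared by two or more bundles.
    return {g: sorted(b) for g, b in bundles_by_group.items() if len(b) > 1}


bindings = [
    ("srv01_vpc", "VPC_srv01"),
    ("srv02_vpc", "VPC_srv02"),
    ("srv03_vpc", "VPC_srv02"),  # reused group: the case the advice above warns about
]
shared = find_shared_bundle_groups(bindings)
print(shared)
```

An empty result would mean every PC/VPC in the plan has its own policy group.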

But in your case, where you are using single-attachment Access Port Policy Groups, there is no such risk, so now it comes down to how you want to organise the rest of the chain. And the one question I would ask if I were to configure your system is:

Do you want to keep the management interface in a separate Access Policy Chain (i.e. different VLAN, different Physical Domain, different AAEP) to the rest of the user-data interfaces?

And IF you were using a VMM Domain for your user-data interfaces, I'd be suggesting the answer might be "yes". If not... then it might depend on just how separated you like your management VLANs to be.

Bottom Line

- For the four interfaces carrying user data, use the same APPG on all servers. This means they all get linked to the same AAEP/Domain (Phys and/or VMM) and VLAN Pool.

- For the vmk0 management interface (assuming vmk0 is your mgmt interface) you MIGHT consider putting it in a separate APPG, especially if your organisation has concerns about keeping Mgmt completely separate, but use the same APPG for all servers.

If at some time in the future a policy change is needed for one or more of the interfaces, such as a link speed change, create a new APPG, link it to the same AAEP, then re-map the interface selector to that new APPG.
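The re-mapping step can be pictured with a small in-memory model. This is only a sketch of the idea, assuming hypothetical names (`BareMetal_1G`, `AAEP_Servers`, `srv01_eth2`) and plain dictionaries rather than real APIC objects: a new APPG is created with the same AAEP, and only the one selector is pointed at it.

```python
# Hypothetical starting state: one APPG shared by two single-port selectors.
policy_groups = {
    "BareMetal_1G": {"aaep": "AAEP_Servers", "speed": "1G"},
}
selectors = {
    "srv01_eth1": {"port": "1/1", "policy_group": "BareMetal_1G"},
    "srv01_eth2": {"port": "1/2", "policy_group": "BareMetal_1G"},
}


def change_speed(selector_name, new_group, new_speed):
    """Point one selector at a new APPG that keeps the old APPG's AAEP."""
    old_group = selectors[selector_name]["policy_group"]
    # Create the new APPG only if it does not exist yet; reuse the same AAEP
    # so the Domain and VLAN Pool behind it stay unchanged.
    policy_groups.setdefault(
        new_group,
        {"aaep": policy_groups[old_group]["aaep"], "speed": new_speed},
    )
    selectors[selector_name]["policy_group"] = new_group


change_speed("srv01_eth2", "BareMetal_10G", "10G")
print(selectors)
```

Because each port has its own selector in this model, `srv01_eth1` stays on the original APPG.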

BUT ONE MORE THING

The question that is possibly MORE important is the one you didn't ask.

Should each physical interface have its own Interface Selector, in an Interface Profile linked to a single switch (via a Leaf Profile)?

And the answer to this question is an emphatic YES.

You see, if you are tempted to use a range of interfaces (say 1/1-4) in a single Interface Selector, then you will NEVER have the option of changing the link speed of just ONE of those ports without changing the others at the same time.

So make sure you go through the tedious process of adding 5 interface selectors for EVERY host.
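Scripting takes some of the tedium out of this. The sketch below builds one interface selector per port as APIC-style JSON. The class and attribute names (`infraHPortS`, `infraPortBlk`, `infraRsAccBaseGrp`, `tDn`) are written from memory of the APIC object model and should be checked against your own APIC (for example with the API Inspector) before use; the host, selector and policy group names are hypothetical.

```python
import json


def selector_per_port(host, ports, policy_group):
    """Build one interface selector per (card, port), each with a single-port block."""
    selectors = []
    for index, (card, port) in enumerate(ports, start=1):
        selectors.append({
            "infraHPortS": {
                "attributes": {"name": f"{host}_eth{index}", "type": "range"},
                "children": [
                    # The port block covers exactly one port (from == to).
                    {"infraPortBlk": {"attributes": {
                        "name": "block1",
                        "fromCard": str(card), "toCard": str(card),
                        "fromPort": str(port), "toPort": str(port),
                    }}},
                    # Link the selector to the Access Port Policy Group.
                    {"infraRsAccBaseGrp": {"attributes": {
                        "tDn": f"uni/infra/funcprof/accportgrp-{policy_group}",
                    }}},
                ],
            }
        })
    return selectors


# Five selectors for one host on ports 1/1 to 1/5.
srv01 = selector_per_port("srv01", [(1, p) for p in range(1, 6)], "BareMetal_10G")
print(json.dumps(srv01[0], indent=2))
```

Each selector would still need to be placed in the Interface Profile for the relevant leaf; that part is left out here.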

I hope this helps.

07-08-2021 08:29 AM

Thank you so much for your answer Chris! It was really helpful and clear.

About the interface selector: yes, I knew in advance that I should create one per physical interface, so I wasn't worried about that and accepted my destiny.

The only time I used an interface selector with a range was on a port channel, because its member ports must always have the same speed.

07-07-2021 10:52 PM

I couldn't agree more with Chris, especially about the Interface Selector.

In my projects, I configure a single Policy Group for each type of server. For example, all ESX servers will have one PG, regardless of whether the interface is mgmt or data.

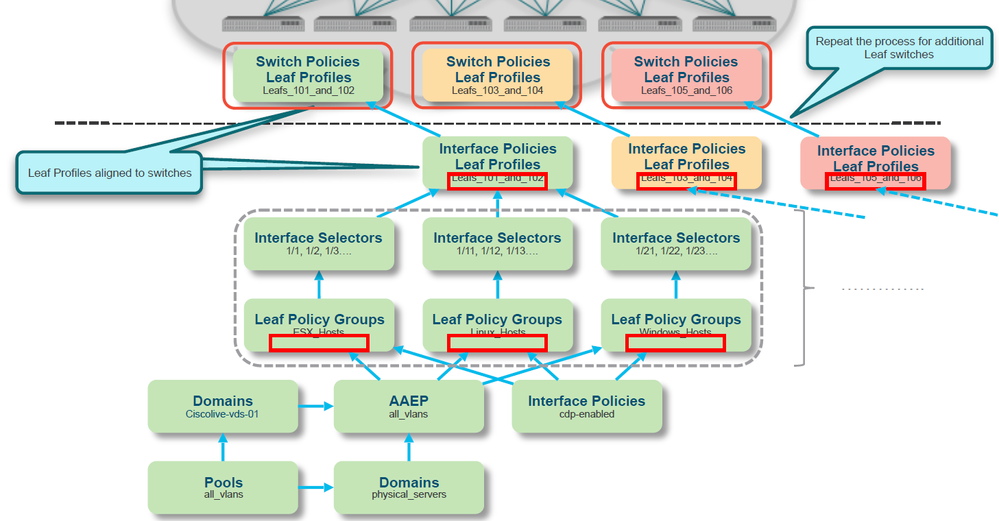

My config looks similar to this:

BTW, if you leave the speed on "inherit" you do not have to worry to much about static speed configuration, because speed will be inherited based on transceiver connected on the port. Also you can use auto-negotiation for RJ45 ports.

Stay safe,

Sergiu

07-08-2021 08:32 AM

Hey Sergiu, thanks a lot for your answer as well!

In my case, the customer has most of the server ports set to manual speed on the traditional Nexus switches, and they want a transparent migration with minimal changes (or no changes at all, if possible). So I'm just translating the port configuration from the traditional switches to ACI with the same speed and so on.

07-08-2021 10:13 AM

Then you can configure policy groups like this:

- ESXi_1G

- ESXi_10G

- ESXi_40G

- MS_1G

- MS_10G

- BareMetal_1G

- etc
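If the existing port speeds are already documented, a list like the one above can be derived from the inventory rather than typed by hand. A minimal sketch, assuming a hypothetical inventory of (server type, speed) pairs and a `<type>_<speed>` naming convention:

```python
def policy_group_name(server_type, speed):
    """Name a policy group by server type and link speed, e.g. ESXi_10G."""
    return f"{server_type}_{speed}"


# Hypothetical inventory: one entry per server port to be migrated.
inventory = [
    ("ESXi", "1G"),
    ("ESXi", "10G"),
    ("ESXi", "10G"),
    ("MS", "1G"),
    ("BareMetal", "1G"),
]

# A set removes duplicates, so each type/speed combination yields one policy group.
needed_groups = {policy_group_name(t, s) for t, s in inventory}
print(needed_groups)
```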
