- Re: CSCvi65059 - Catalyst 9k Switch - Last reload reason is not updated correctly under "show versio...

05-09-2020 04:25 PM

I had the same problem in a stack of six C9200L switches running version 16.9.3. Cisco has not yet determined a solution, since the same behavior occurs with the currently recommended version (16.9.5).

Labels: Cisco Bugs, Switching or Switches

Accepted Solutions

07-30-2020 10:16 AM - edited 07-30-2020 10:31 AM

Hi Chris, yes I was able to solve the problem, although it was a combination of many steps.

Originally my stacks had 6 switches and version 16.9.5, the one recommended at that time by Cisco, however, they started with the behavior. The first activity before raising a case was:

- I made a downgrade to version 16.9.3, which I have in other 4-switch stacks and I have never had any problems.

- When downgrade the behavior was the same.

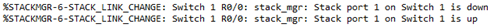

- I raised the case with the TAC and I could also see in the logs in a syslog server, that there was flapping in the stack ports (stack modules).

- The TAC recommended me to upgrade to 16.9.5 (recommended at the time) since the show tech observed the following:

Dup CSCvq48005 bug.which caused the stack to restart.

- I did the upgrade and also on my own I changed the stack modules where the flapping was seen first, if later I continued with the behavior I also changed the stack cables until the flapping stopped being seen with the recommended version.

- After all that parts and software change the stacks have remained stable.

The change of parts was not recommended by the TAC, however it was told of the actions carried out in addition to the upgrade. I don't know if they have updated the Workaround adding the change of physical parts but that made the stacks stay stable.

"I believe that the final solution and the one that solved the problem was the change of stack modules and stack cables where flapping was observed since originally the stack had version 16.9.5, which was recommended by TAC."

I hope the information can help you, Regards.
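The stack-port flapping seen on the syslog server is what pointed to the stack modules and cables. Below is a minimal sketch of how such flaps could be tallied per port from collected syslog lines. The message shape (`STACK_LINK_CHANGE ... Stack port N on Switch M is up/down`) and the sample lines are assumptions for illustration only; the thread does not show the actual log entries, so adjust the pattern to whatever your syslog server records.

```python
import re
from collections import Counter

# Assumed message shape; not taken from the thread. Adjust to your own logs.
LINK_CHANGE = re.compile(
    r"STACK_LINK_CHANGE.*?Stack port (?P<port>\d+) on Switch (?P<switch>\d+) "
    r"is (?P<state>up|down)",
    re.IGNORECASE,
)

def count_stack_port_flaps(lines):
    """Count 'down' transitions per (switch, stack port)."""
    flaps = Counter()
    for line in lines:
        match = LINK_CHANGE.search(line)
        if match and match.group("state").lower() == "down":
            key = (int(match.group("switch")), int(match.group("port")))
            flaps[key] += 1
    return flaps

# Hypothetical sample lines, for illustration only.
sample_log = [
    "%STACKMGR-6-STACK_LINK_CHANGE: Switch 3 R0/0: stack_mgr: Stack port 1 on Switch 3 is down",
    "%STACKMGR-6-STACK_LINK_CHANGE: Switch 3 R0/0: stack_mgr: Stack port 1 on Switch 3 is up",
    "%STACKMGR-6-STACK_LINK_CHANGE: Switch 3 R0/0: stack_mgr: Stack port 1 on Switch 3 is down",
    "%STACKMGR-6-STACK_LINK_CHANGE: Switch 4 R0/0: stack_mgr: Stack port 2 on Switch 4 is down",
    "%SYS-5-CONFIG_I: Configured from console by admin on vty0",
]

flap_counts = count_stack_port_flaps(sample_log)
for (switch, port), count in flap_counts.most_common():
    print(f"Switch {switch} stack port {port}: {count} down event(s)")
```

Ports with the highest counts would be the first candidates for the module and cable swaps described in this post.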

05-09-2020 05:26 PM

@OscarPineda11 wrote:

the currently recommended version (16.9.5)

Cisco's "recommended version" does not always mean it is stable.

Post the complete output of the following command:

sh log on switch active uptime detail

05-09-2020 05:34 PM

I use "archive" to log all changes made on the switch. At the time of the reboot, the log buffer filled up with entries for the commands executed from the startup configuration, so the reason could not be displayed. However, this symptom keeps recurring for no apparent reason.

The "show version" output shows the following:

STACK1-MDF-P1 uptime is 2 hours, 13 minutes

Uptime for this control processor is 2 hours, 16 minutes

System returned to ROM by unknown reload cause - reason ptr 0xF, PC 0x0,address 0x0 at 12:02:16 CDT Thu May 7 2020

System restarted at 17:18:30 CDT Sat May 9 2020

System image file is "flash:packages.conf"

Last reload reason: unknown reload cause - reason ptr 0xF, PC 0x0,address 0x0

In addition, I have already raised a case with TAC, since I have only had this problem on stacks of more than 4 switches. I have 4-switch stacks running version 16.9.3 that have never had a similar event. It appears that the problem is inherited from previous switch families.
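To check several stacks for the symptom shown in the `show version` output above, the relevant lines can be pulled out with a short script. This is a minimal sketch that only parses text already collected from the switch; the function name and the choice to flag "unknown reload cause" are illustrative, not a Cisco tool.

```python
import re

# Output copied from this post.
SHOW_VERSION = """\
STACK1-MDF-P1 uptime is 2 hours, 13 minutes
Uptime for this control processor is 2 hours, 16 minutes
System returned to ROM by unknown reload cause - reason ptr 0xF, PC 0x0,address 0x0 at 12:02:16 CDT Thu May 7 2020
System restarted at 17:18:30 CDT Sat May 9 2020
System image file is "flash:packages.conf"
Last reload reason: unknown reload cause - reason ptr 0xF, PC 0x0,address 0x0
"""

def parse_reload_info(show_version_text):
    """Extract hostname, uptime and last reload reason from 'show version' text."""
    info = {"hostname": None, "uptime": None, "last_reload_reason": None}
    for line in show_version_text.splitlines():
        line = line.strip()
        uptime = re.match(r"^(\S+) uptime is (.+)$", line)
        if uptime:
            info["hostname"], info["uptime"] = uptime.group(1), uptime.group(2)
        elif line.startswith("Last reload reason:"):
            info["last_reload_reason"] = line.split(":", 1)[1].strip()
    reason = info["last_reload_reason"] or ""
    # Flag the symptom discussed in this thread.
    info["reason_unknown"] = "unknown reload cause" in reason.lower()
    return info

reload_info = parse_reload_info(SHOW_VERSION)
print(reload_info)
```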

05-09-2020 05:43 PM

Post the complete output of the following command:

dir crashinfo:

05-09-2020 05:58 PM

The last file the switch created was:

STACK1-MDF-P1#dir crashinfo:

Directory of crashinfo:/

7329 drwx 90112 May 9 2020 19:45:05 -05:00 tracelogs

18 -rw- 1083052 May 6 2020 12:42:07 -05:00 system-report_1_20200506-124204-CDT.tar.gz

20 -rw- 0 Jan 13 2019 23:10:59 -06:00 koops.dat

12 -rw- 272631 Apr 30 2020 16:23:06 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_0-20200430-162305.tar.gz

13 -rw- 974943 Apr 30 2020 16:23:09 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_1-20200430-162306.tar.gz

14 -rw- 916460 Apr 30 2020 16:23:11 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_2-20200430-162308.tar.gz

16 -rw- 540098 Apr 30 2020 17:03:54 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_11-20200430-170352.tar.gz

15 -rw- 486014 Apr 30 2020 16:43:46 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_6-20200430-164343.tar.gz

11 -rw- 384337 Apr 30 2020 16:44:02 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_8-20200430-164401.tar.gz

17 -rw- 572657 Apr 30 2020 17:03:57 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_12-20200430-170354.tar.gz

19 -rw- 2822637 May 7 2020 13:04:17 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_0-20200507-130409.tar.gz

21 -rw- 2852829 May 8 2020 05:02:05 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_1-20200508-050153.tar.gz

22 -rw- 600326 May 9 2020 17:12:54 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_2-20200509-171248.tar.gz

825638912 bytes total (763711488 bytes free)

05-09-2020 09:03 PM - edited 05-09-2020 09:04 PM

18 -rw- 1083052 May 6 2020 12:42:07 -05:00 system-report_1_20200506-124204-CDT.tar.gz

I want this file.

This is the crash log. The other files are just auto-generated when the switch gets rebooted.

And I still want to see the output of the following command:

sh log on switch active uptime detail
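The distinction drawn above, with `system-report_*` archives being the crash reports and the `*_trace_archive_*` files being routine, can be applied to a saved `dir crashinfo:` listing with a few lines of Python. This is a sketch under the assumption that each entry follows the column layout shown in this thread (index, permissions, size, date, time, UTC offset, name); the function name is hypothetical.

```python
import re

# A few entries copied from the listing in this thread.
LISTING = """\
7329 drwx 90112 May 9 2020 19:45:05 -05:00 tracelogs
18 -rw- 1083052 May 6 2020 12:42:07 -05:00 system-report_1_20200506-124204-CDT.tar.gz
20 -rw- 0 Jan 13 2019 23:10:59 -06:00 koops.dat
19 -rw- 2822637 May 7 2020 13:04:17 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_0-20200507-130409.tar.gz
22 -rw- 600326 May 9 2020 17:12:54 -05:00 STACK1-MDF-P1_1_RP_0_trace_archive_2-20200509-171248.tar.gz
"""

# Assumed column layout: index, perms, size, date, time, UTC offset, name.
ENTRY = re.compile(
    r"^\s*(?P<index>\d+)\s+(?P<perms>\S+)\s+(?P<size>\d+)\s+"
    r"(?P<date>\w{3}\s+\d{1,2}\s+\d{4})\s+(?P<time>\d{2}:\d{2}:\d{2})\s+"
    r"(?P<offset>[+-]\d{2}:\d{2})\s+(?P<name>\S+)\s*$"
)

def find_system_reports(listing_text):
    """Return (name, size_in_bytes, date, time) for each system-report archive."""
    reports = []
    for line in listing_text.splitlines():
        match = ENTRY.match(line)
        if match and match.group("name").startswith("system-report_"):
            reports.append(
                (match.group("name"), int(match.group("size")),
                 match.group("date"), match.group("time"))
            )
    return reports

system_reports = find_system_reports(LISTING)
for name, size, date, time in system_reports:
    print(f"{name}  {size} bytes  {date} {time}")
```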

07-30-2020 06:30 AM

Hi Oscar,

Did you ever get a fix for this? It looks like we have a similar problem on version 16.09.02.

At the moment I just plan to upgrade to the latest recommended version.

regards,

Chris.

07-31-2020 03:01 AM

Hi Oscar,

Thanks for the input. For now I have upgraded one of our switches to version 16.12.3a to see if it remains stable.

There are no stacks in my network, but the switches that go down are connected in a loop off a distribution switch rather than individually linked to it. I think this might be part of the cause, but I would expect that topology to work.

regards,

Chris.

05-09-2020 09:22 PM - edited 05-09-2020 09:25 PM

IMPORTANT:

- I do NOT work for Cisco. My access to the Bug ID is no different from that of an "outsider".

- Information found in the Bug ID needs to be taken with a grain of salt: Bug IDs are rarely updated and are therefore rarely accurate. If you have a valid Service Contract that lets you raise a TAC Case, use it: raise a TAC Case and the TAC agent can provide the latest update, based on the Cisco INTERNAL notes, which can always differ from (if not contradict) the information found in the Bug ID.

I just reviewed this Bug ID, and this bug is not fixed until "Fuji-16.9.4". If your stack is running "Fuji-16.9.3", what happens if you upgrade the stack to "Fuji-16.9.4"?

NOTE:

Cisco's IOS-XE naming convention is designed to be confusing. Let me explain:

- If the IOS-XE version is expressed only in numbers, like 17.1.1 or 16.9.1, then it means the version is "internal" or "development" (aka "beta").

- If the IOS-XE version is expressed with the names of mountains, like "Gibraltar-16.10.1", "Gibraltar-16.10.1a" or "Fuji-16.9.4", then it can potentially mean a version meant for "public" or "production".

Take note I said "it can potentially mean". There are times when a particular IOS-XE version is found in both "Known Affected Releases" and "Known Fixed Releases". This means that the bug was found in a production/public IOS-XE release, fixed and then re-published, while the version number remained unchanged.
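Since this thread mixes several spellings of release numbers (`16.9.3`, `16.09.02`, `Fuji-16.9.4`, `16.12.3a`), a small helper can normalize them before comparing against the release named as fixed. This is only a sketch: the parsing rule (optional name prefix, three numeric fields, optional letter suffix) is an assumption based on the version strings quoted in this thread, and `Fuji-16.9.4` as the fixed release is taken from the post above rather than verified against the Bug ID.

```python
import re

# Assumed format: optional "Name-" prefix, three numeric fields, optional letter.
VERSION = re.compile(r"^(?:[A-Za-z]+-)?(\d+)\.(\d+)\.(\d+)([a-z]?)$")

def parse_version(text):
    """Turn 'Fuji-16.9.4', '16.09.02' or '16.12.3a' into a comparable tuple."""
    match = VERSION.match(text.strip())
    if not match:
        raise ValueError(f"unrecognized version string: {text!r}")
    major, minor, patch, suffix = match.groups()
    return (int(major), int(minor), int(patch), suffix)

def is_before(version, fixed_in):
    """True if 'version' sorts earlier than the release listed as fixed."""
    return parse_version(version) < parse_version(fixed_in)

FIXED_IN = "Fuji-16.9.4"  # per the post above; confirm with the Bug ID or TAC

for running in ["16.09.02", "16.9.3", "16.9.5", "16.12.3a"]:
    status = "predates" if is_before(running, FIXED_IN) else "is at or after"
    print(f"{running} {status} {FIXED_IN}")
```

A release that sorts at or after the fixed one is not guaranteed to be free of the symptom: in this thread the reloads were also reported on 16.9.5.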
