ACI: Common migration issue / Overlapping VLAN pools

04-08-2018 04:26 PM - edited 03-01-2019 06:07 AM

- Document Objective

- Problem Description

- Common troubleshooting steps

- Validating the connectivity

- iPing test

- Verify Endpoint learning

- Reviewing EPM trace for endpoint learning across vPC leaf peer

- Understanding EPG vnid in ACI fabric

- Review the Physical domain association and VLAN pools

- Resolution

- Verification

Document Objective

This document discusses a common issue observed during workload migration to an ACI fabric.

Problem Description

VMware virtual machines are hosted on Cisco UCS B-Series blade servers (ESXi hosts). The UCS Fabric Interconnects are connected to leaf101 and leaf102 through vPCs (LACP active).

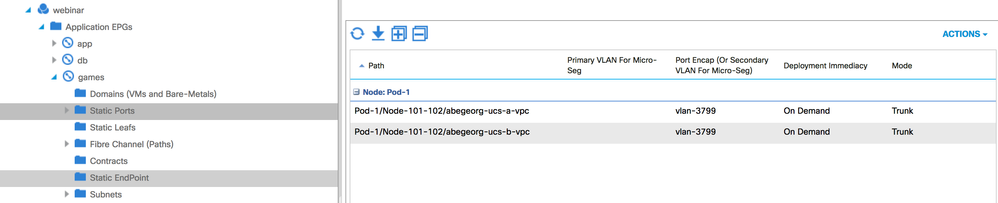

EPG "abegeorg:webinar:games" has a static binding to the vPC ports (FI-A and FI-B) with trunk encap vlan-3799.

Once the VM workload is migrated to the new server blades connected to the ACI fabric (the DVS is managed by vCenter), intermittent connectivity issues are observed with the newly migrated VM. Users experience slow access to services, and at times the services are not accessible at all.

The example VM used for this troubleshooting has IP 10.10.79.1/24. Its default gateway resides in the ACI fabric (10.10.79.254/24).

Common troubleshooting steps

This section covers common troubleshooting steps that can be used to isolate similar issues. You are encouraged to use these steps to troubleshoot such connectivity issues.

Validating the connectivity

First, let's check the connectivity state and validate how the ACI fabric is connected to the servers (in this case a UCS B-Series chassis). The CDP and port-channel output below indicates that FI-A and FI-B are connected to the ACI fabric leaf switches (leaf101 and leaf102) using vPC. The vPC ports are up.

leaf101# show cdp neighbors

<< snip >>

UCS-ACI-PODs-A(SSI182207R7) Eth1/37 171 S I s UCS-FI-6248UP Eth1/5

UCS-ACI-PODs-B(SSI1822054V) Eth1/38 127 S I s UCS-FI-6248UP Eth1/5

<< snip >>

leaf101#

leaf101# show vpc extended

<< snip >>

691 Po3 up success success 3799,3874-3875,3887 abegeorg-ucs-a-vpc

692 Po9 up success success 3799,3874-3875,3887 abegeorg-ucs-b-vpc

<< snip >>

leaf101#

leaf101# show port-channel extended

<< snip >>

3 Po3(SU) abegeorg-ucs-a-vpc LACP Eth1/37(P)

9 Po9(SU) abegeorg-ucs-b-vpc LACP Eth1/38(P)

10 Po10(SU) abegeorg-ucs-b-vpc LACP Eth1/38(P)

leaf101#

leaf102# show cdp neighbors

<< snip >>

UCS-ACI-PODs-A(SSI182207R7) Eth1/37 165 S I s UCS-FI-6248UP Eth1/6

UCS-ACI-PODs-B(SSI1822054V) Eth1/38 121 S I s UCS-FI-6248UP Eth1/6

<< snip >>

leaf102#

leaf102# show vpc extended

<< snip >>

691 Po3 up success success 3799,3874-3875,3887 abegeorg-ucs-a-vpc

692 Po2 up success success 3799,3874-3875,3887 abegeorg-ucs-b-vpc

<< snip >>

leaf102#

leaf102# show port-channel extended

<< snip >>

2 Po2(SU) abegeorg-ucs-b-vpc LACP Eth1/38(P)

3 Po3(SU) abegeorg-ucs-a-vpc LACP Eth1/37(P)

leaf102#

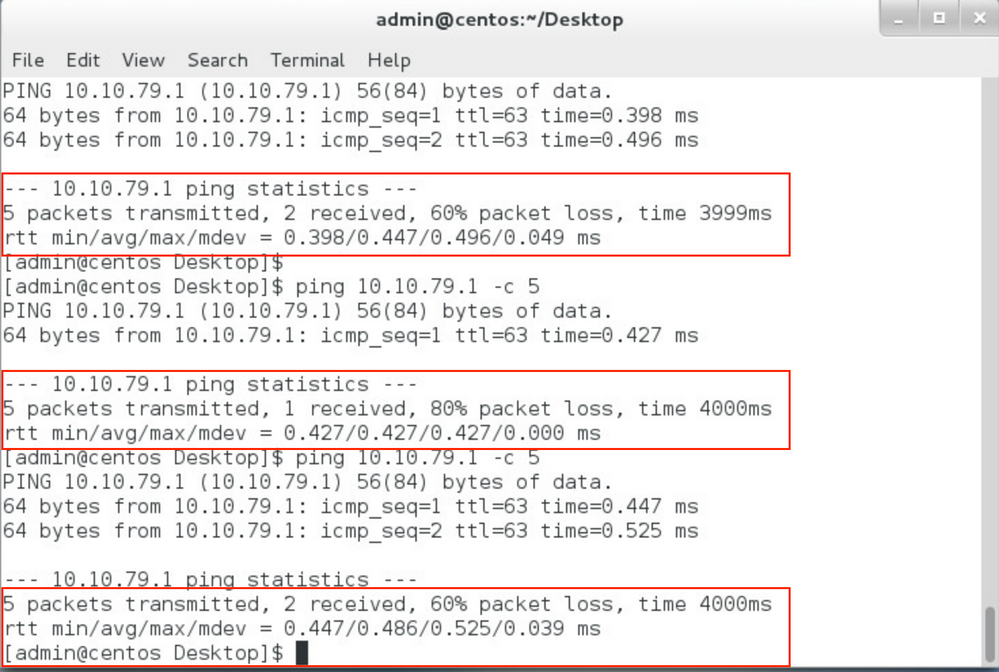

In this case, the default gateway of the VM workload is on the ACI fabric, so let's run a connectivity test from the fabric itself. The iping results show intermittent ping drops, so connectivity is impaired even from the ACI fabric.

iPing test

leaf101# iping -V abegeorg:webinar 10.10.79.1

PING 10.10.79.1 (10.10.79.1) from 10.10.79.254: 56 data bytes

Request 0 timed out

Request 1 timed out

64 bytes from 10.10.79.1: icmp_seq=2 ttl=64 time=0.398 ms

64 bytes from 10.10.79.1: icmp_seq=3 ttl=64 time=0.436 ms

64 bytes from 10.10.79.1: icmp_seq=4 ttl=64 time=0.405 ms

--- 10.10.79.1 ping statistics ---

5 packets transmitted, 3 packets received, 40.00% packet loss

round-trip min/avg/max = 0.398/0.413/0.436 ms

leaf101#

leaf102# iping -V abegeorg:webinar 10.10.79.1

PING 10.10.79.1 (10.10.79.1) from 10.10.79.254: 56 data bytes

64 bytes from 10.10.79.1: icmp_seq=0 ttl=64 time=0.622 ms

Request 1 timed out

64 bytes from 10.10.79.1: icmp_seq=2 ttl=64 time=0.601 ms

Request 3 timed out

64 bytes from 10.10.79.1: icmp_seq=4 ttl=64 time=0.662 ms

--- 10.10.79.1 ping statistics ---

5 packets transmitted, 3 packets received, 40.00% packet loss

round-trip min/avg/max = 0.601/0.628/0.662 ms

leaf102#

Verify Endpoint learning

Now, let's check the endpoint learning details using "show system internal epm endpoint ip 10.10.79.1". Comparing the fabric encap (VLAN vnid) for encap vlan-3799 on the two leaf switches shows that it differs. Ideally, the VLAN vnid for a given encap should be the same across the fabric. Here it differs between the two leaf switches of the vPC pair (13392 on leaf101, 13300 on leaf102), which causes the connectivity issue.

>>> @leaf101

leaf101# show system internal epm endpoint ip 10.10.79.1

MAC : 0050.5690.a99e ::: Num IPs : 1

IP# 0 : 10.10.79.1 ::: IP# 0 flags :

Vlan id : 34 ::: Vlan vnid : 13392 ::: VRF name : abegeorg:webinar

BD vnid : 15597462 ::: VRF vnid : 2129922

Phy If : 0x16000002 ::: Tunnel If : 0

Interface : port-channel3

Flags : 0x80004c05 ::: sclass : 10941 ::: Ref count : 5

EP Create Timestamp : 04/08/2018 19:38:36.866026

EP Update Timestamp : 04/08/2018 19:38:36.866026

EP Flags : local|vPC|IP|MAC|sclass|timer|

::::

leaf101# show vlan id 34 extended

VLAN Name Status Ports

---- -------------------------------- --------- -------------------------------

34 abegeorg:webinar:games active Eth1/37, Eth1/38, Po3, Po9

VLAN Type Vlan-mode Encap

---- ----- ---------- -------------------------------

34 enet CE vlan-3799

leaf101#

leaf101# vsh_lc

vsh_lc

module-1#

module-1# show system internal eltmc info vlan brief | egrep "Fabric_enc|3799"

VlanId HW_VlanId Type Access_enc Access_enc Fabric_enc Fabric_enc BDVlan

34 41 FD_VLAN 802.1q 3799 VXLAN 13392 33

module-1#

>>> @leaf102

leaf102# show system internal epm endpoint ip 10.10.79.1

MAC : 0050.5690.a99e ::: Num IPs : 1

IP# 0 : 10.10.79.1 ::: IP# 0 flags :

Vlan id : 50 ::: Vlan vnid : 13300 ::: VRF name : abegeorg:webinar

BD vnid : 15597462 ::: VRF vnid : 2129922

Phy If : 0x16000002 ::: Tunnel If : 0

Interface : port-channel3

Flags : 0x80004c05 ::: sclass : 10941 ::: Ref count : 5

EP Create Timestamp : 04/08/2018 19:38:46.927238

EP Update Timestamp : 04/08/2018 19:38:56.875307

EP Flags : local|vPC|IP|MAC|sclass|timer|

::::

leaf102#

leaf102# show vlan id 50 extended

VLAN Name Status Ports

---- -------------------------------- --------- -------------------------------

50 abegeorg:webinar:games active Eth1/37, Eth1/38, Po2, Po3

VLAN Type Vlan-mode Encap

---- ----- ---------- -------------------------------

50 enet CE vlan-3799

leaf102#

leaf102# vsh_lc

vsh_lc

module-1# show system internal eltmc info vlan brief | egrep "Fabric_enc|3799"

VlanId HW_VlanId Type Access_enc Access_enc Fabric_enc Fabric_enc BDVlan

50 35 FD_VLAN 802.1q 3799 VXLAN 13300 49

module-1#
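The comparison above can be sketched in Python. This is only a minimal illustration, assuming the text of "show system internal epm endpoint ip" has already been collected from each leaf; the helper name fabric_encap_vnid and the way the output is gathered are hypothetical and not part of any Cisco tooling.

```python
import re

# Matches the "Vlan vnid : <number>" field in the EPM endpoint output shown above.
VNID_RE = re.compile(r"Vlan vnid\s*:\s*(\d+)")


def fabric_encap_vnid(epm_output: str) -> int:
    """Hypothetical helper: return the Vlan vnid found in the CLI text."""
    match = VNID_RE.search(epm_output)
    if match is None:
        raise ValueError("no 'Vlan vnid' field found")
    return int(match.group(1))


# The relevant line from each leaf, copied from the output above.
leaf101_output = "Vlan id : 34 ::: Vlan vnid : 13392 ::: VRF name : abegeorg:webinar"
leaf102_output = "Vlan id : 50 ::: Vlan vnid : 13300 ::: VRF name : abegeorg:webinar"

vnids = {
    "leaf101": fabric_encap_vnid(leaf101_output),
    "leaf102": fabric_encap_vnid(leaf102_output),
}

# vPC peers must agree on the vnid for the same encap.
mismatch = len(set(vnids.values())) > 1
print(vnids, "MISMATCH" if mismatch else "consistent")
```

With the values captured in this case, the two leaf switches report different vnids for vlan-3799, so the check flags a mismatch.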

Reviewing EPM trace for endpoint learning across vPC leaf peer

The EPM trace indicates that leaf101 receives an endpoint update from its vPC peer (leaf102), but the received EPG vnid 13300 does not exist on leaf101. Hence, EPM removes the endpoint from its database. Similarly, leaf102 receives an endpoint update from its vPC peer (leaf101), but the received EPG vnid 13192 does not exist on leaf102, so EPM removes the endpoint from its database there as well.

>>> /var/log/dme/log/epm-trace.txt @ leaf101

[2018 Apr 8 19:25:53.653200154:1119:epm_debug_dump_epm_ep_req:359:t] log_collect_ep_event

req_src=EPM_SAP_PEER

optype = ADD; mac = 0050.5690.a99e; num_ips = 1

ip[0] = 10.10.79.1

vlan = 3799; epg_vnid = 13300; bd_vnid = 15597462; vrf_vnid = 2129922

ifindex = 0x7e0002b3; tep_ip_tun_ifindex = 0; sclass = 10941

flags = local|vPC|IP|MAC|sclass|

ep_req_ts = 04/08/2018 19:25:39.030373

[2018 Apr 8 19:25:53.653216494:1124:epm_mcec_pre_process_ep_req:808:E] Unknown FD vlan/vxlan 13300 bd_vnid 15597462 ... ignoring EP req; ep_flags local|vPC|IP|MAC|sclass|

[2018 Apr 8 19:25:53.653218130:1125:epm_send_ep_del_ack_to_peer:1204:t] EP req for EP for which FD/BD/VRF/Tun doesn't exist, deleting EP from EP Db, if it exists

[2018 Apr 8 19:25:53.653221839:1126:epm_pre_process_ep_req:4097:E] Failed to pre-process EP req, err : VLAN does not exist

[2018 Apr 8 19:25:53.653224190:1127:epm_process_ep_req:4464:E] EP req pre processing failed, err : VLAN does not exist

[2018 Apr 8 19:25:53.653225512:1128:epm_mcec_ep_req_handler:459:E] Error processing EP# 1 in EP request from peer EPM

[2018 Apr 8 19:25:53.653229434:1129:epm_debug_dump_epm_ep_req:359:t] log_collect_ep_event

req_src=EPM_SAP_PEER

optype = ADD; mac = 0050.5690.233a; num_ips = 1

ip[0] = 10.10.79.2

vlan = 3799; epg_vnid = 13300; bd_vnid = 15597462; vrf_vnid = 2129922

ifindex = 0x7e0002b3; tep_ip_tun_ifindex = 0; sclass = 10941

flags = local|vPC|IP|MAC|sclass|

ep_req_ts = 04/08/2018 19:25:29.986185

[2018 Apr 8 19:25:53.653244299:1134:epm_mcec_pre_process_ep_req:808:E] Unknown FD vlan/vxlan 13300 bd_vnid 15597462 ... ignoring EP req; ep_flags local|vPC|IP|MAC|sclass|

[2018 Apr 8 19:25:53.653245934:1135:epm_send_ep_del_ack_to_peer:1204:t] EP req for EP for which FD/BD/VRF/Tun doesn't exist, deleting EP from EP Db, if it exists

[2018 Apr 8 19:25:53.653249397:1136:epm_pre_process_ep_req:4097:E] Failed to pre-process EP req, err : VLAN does not exist

[2018 Apr 8 19:25:53.653251663:1137:epm_process_ep_req:4464:E] EP req pre processing failed, err : VLAN does not exist

[2018 Apr 8 19:25:53.653253034:1138:epm_mcec_ep_req_handler:459:E] Error processing EP# 2 in EP request from peer EPM

>>> /var/log/dme/log/epm-trace.txt @ leaf102

[2018 Apr 8 17:27:36.566066818:1021641:epm_debug_dump_epm_ep_req:359:t] log_collect_ep_event

req_src=EPM_SAP_PEER

optype = ADD; mac = 0050.5690.a99e; num_ips = 1

ip[0] = 10.10.79.1

vlan = 3799; epg_vnid = 13192; bd_vnid = 16646020; vrf_vnid = 2129922

ifindex = 0x7e0002b3; tep_ip_tun_ifindex = 0; sclass = 32772

flags = local|vPC|IP|MAC|sclass|

ep_req_ts = 04/08/2018 17:27:39.330522

[2018 Apr 8 17:27:36.566087404:1021646:epm_mcec_pre_process_ep_req:808:E] Unknown FD vlan/vxlan 13192 bd_vnid 16646020 ... ignoring EP req; ep_flags local|vPC|IP|MAC|sclass|

[2018 Apr 8 17:27:36.566089169:1021647:epm_send_ep_del_ack_to_peer:1204:t] EP req for EP for which FD/BD/VRF/Tun doesn't exist, deleting EP from EP Db, if it exists

[2018 Apr 8 17:27:36.566091529:1021648:epm_process_ep_del:2313:t] Delete req rcvd for EP:

[2018 Apr 8 17:27:36.566093844:1021649:epm_debug_dump_epm_ep:398:t] log_collect_ep_event

EP entry:

mac = 0050.5690.a99e; num_ips = 1

ip[0] = 10.10.79.1

vlan = 34; epg_vnid = 13300; bd_vnid = 16646020; vrf_vnid = 2129922

ifindex = 0x16000002; tun_ifindex = 0; vtep_tun_ifindex = 0

sclass = 32772; ref_cnt = 5

flags = local|vPC|IP|MAC|sclass|timer|

create_ts = 04/08/2018 17:27:38.827157

upd_ts = 04/08/2018 17:27:39.321509

[2018 Apr 8 17:27:36.566114551:1021655:epm_ep_notify_coop:1465:t] rec_ts: 1523179659:332728768

[2018 Apr 8 17:27:36.566118069:1021656:epm_process_ep_del:2401:t] Deleting EP from the DB

[2018 Apr 8 17:27:36.566233949:1021657:epm_add_del_epg_dyn_pol_obj:2429:t] Del dyn pol trig obj with DN sys/ctx-[vxlan-2129922]/bd-[vxlan-16646020]/vlan-[vlan-3799]/dynepgpolicytrig, vlan id 34 bd = 16646020; mac = 0050.5690.a99e Active EP count on if 0x16000002 is 1

[2018 Apr 8 17:27:36.566244842:1021660:epm_del_ip_ep_objs:2176:t] Deleting IP EP obj of (vrf 2129922, IP 10.10.79.1)

[2018 Apr 8 17:27:36.566265002:1021661:epm_del_mac_ep_obj:2200:t] Deleting MacEp object of (BD 16646020, MAC 0050.5690.a99e) vlan_id 34

[2018 Apr 8 17:27:36.566268373:1021662:epm_attach_to_dirty_vlan_list:4359:t] Adding vlan 34 to dirty list with version 17382

[2018 Apr 8 17:27:36.566281362:1021663:epm_pre_process_ep_req:4097:E] Failed to pre-process EP req, err : VLAN does not exist

[2018 Apr 8 17:27:36.566283518:1021664:epm_process_ep_req:4464:E] EP req pre processing failed, err : VLAN does not exist
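When the trace is long, it can help to pull out only the rejected vnids. The following is a rough sketch, assuming the trace text has been copied off the leaf; the function name unknown_fd_vnids is hypothetical, and the regular expression is based only on the message format visible in the excerpts above.

```python
import re

# Message format taken from the epm-trace excerpts above.
UNKNOWN_FD_RE = re.compile(r"Unknown FD vlan/vxlan (\d+) bd_vnid (\d+)")


def unknown_fd_vnids(trace_text: str) -> list:
    """Hypothetical helper: unique (epg_vnid, bd_vnid) pairs rejected as unknown."""
    pairs = {(int(epg), int(bd)) for epg, bd in UNKNOWN_FD_RE.findall(trace_text)}
    return sorted(pairs)


# Two lines copied from the leaf101 trace above.
sample = """
[2018 Apr 8 19:25:53.653216494:1124:epm_mcec_pre_process_ep_req:808:E] Unknown FD vlan/vxlan 13300 bd_vnid 15597462 ... ignoring EP req; ep_flags local|vPC|IP|MAC|sclass|
[2018 Apr 8 19:25:53.653244299:1134:epm_mcec_pre_process_ep_req:808:E] Unknown FD vlan/vxlan 13300 bd_vnid 15597462 ... ignoring EP req; ep_flags local|vPC|IP|MAC|sclass|
"""

rejected = unknown_fd_vnids(sample)
print(rejected)
```

Any vnid that shows up here is one the peer advertised but the local leaf does not have programmed, which is the symptom described above.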

Understanding EPG vnid in ACI fabric

Now, let's understand how the EPG vnid is derived in the ACI fabric. The EPG vnid is the base value of the VLAN block plus the position of the encap within that block (counting from 0).

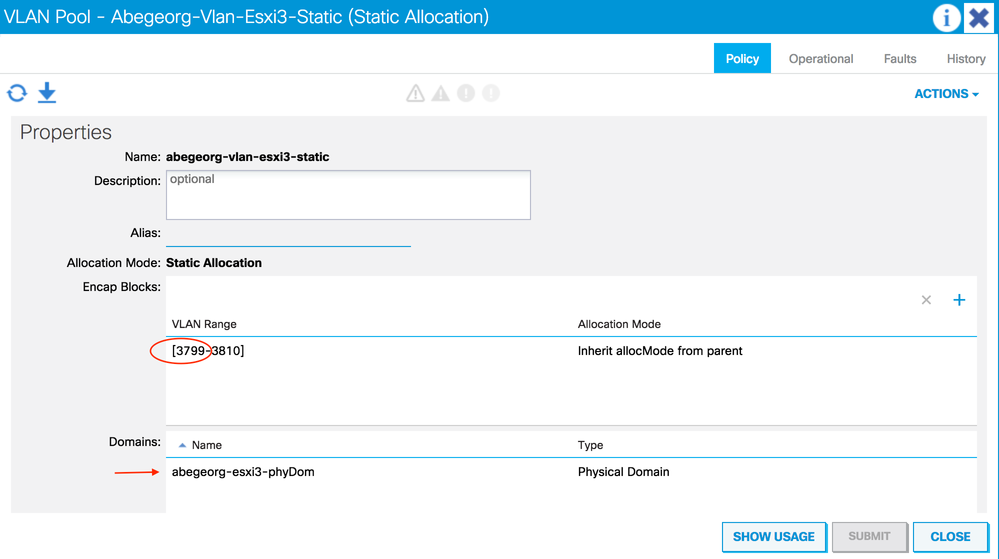

On leaf101, the EPG-vnid is 13392. It was pulled from the following VLAN block, which is associated with physical domain abegeorg-esxi3-phyDom. The EPG-vnid is derived as follows.

base : 13392

block : vlan-3799 to vlan-3810. Hence, index for vlan-3799 (starting at 0): 0

EPG-vnid: 13392 + 0 = 13392

# stp.AllocEncapBlkDef

encapBlk : uni/infra/vlanns-[abegeorg-vlan-esxi3-static]-static/from-[vlan-3799]-to-[vlan-3810]

base : 13392

childAction :

descr :

dn : allocencap-[uni/infra]/encapnsdef-[uni/infra/vlanns-[abegeorg-vlan-esxi3-static]-static]/allocencapblkdef-[uni/infra/vlanns-[abegeorg-vlan-esxi3-static]-static/from-[vlan-3799]-to-[vlan-3810]]

from : vlan-3799

lcOwn : resolveOnBehalf

modTs : 2018-04-08T19:25:48.615+08:00

monPolDn : uni/fabric/monfab-default

name :

nameAlias :

ownerKey :

ownerTag :

rn : allocencapblkdef-[uni/infra/vlanns-[abegeorg-vlan-esxi3-static]-static/from-[vlan-3799]-to-[vlan-3810]]

status :

to : vlan-3810

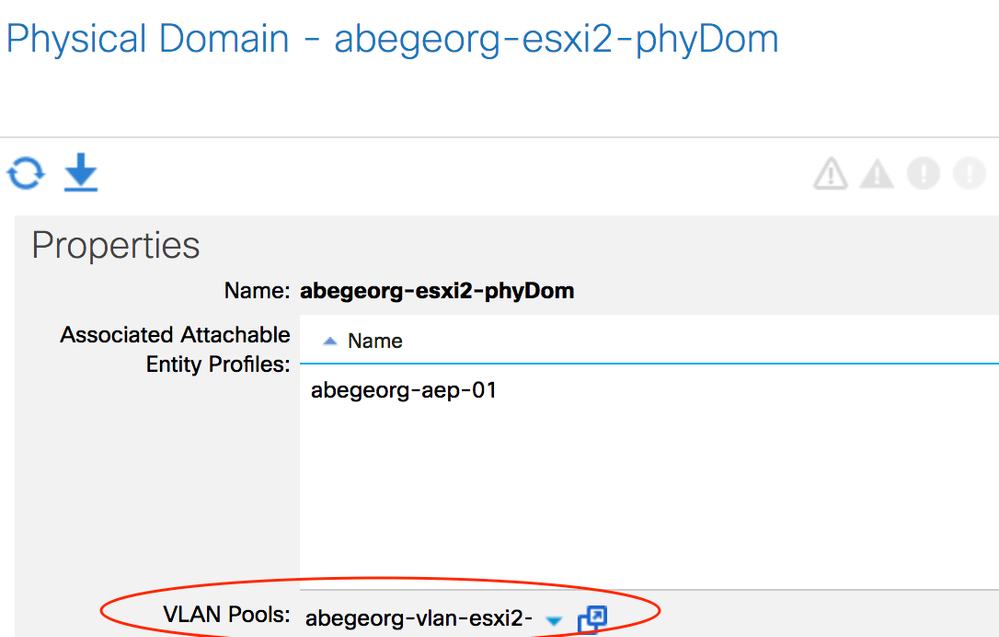

On leaf102, the EPG-vnid is 13300. It was pulled from the following VLAN block, which is associated with physical domain abegeorg-esxi2-phyDom. The EPG-vnid is derived as follows.

base : 13292

block : vlan-3791 to vlan-3799. Hence, index for vlan-3799 (starting at 0): 8

EPG-vnid: 13292 + 8 = 13300

# stp.AllocEncapBlkDef

encapBlk : uni/infra/vlanns-[abegeorg-vlan-esxi2-static]-static/from-[vlan-3791]-to-[vlan-3799]

base : 13292

childAction :

descr :

dn : allocencap-[uni/infra]/encapnsdef-[uni/infra/vlanns-[abegeorg-vlan-esxi2-static]-static]/allocencapblkdef-[uni/infra/vlanns-[abegeorg-vlan-esxi2-static]-static/from-[vlan-3791]-to-[vlan-3799]]

from : vlan-3791

lcOwn : resolveOnBehalf

modTs : 2018-04-08T19:25:48.615+08:00

monPolDn : uni/fabric/monfab-default

name :

nameAlias :

ownerKey :

ownerTag :

rn : allocencapblkdef-[uni/infra/vlanns-[abegeorg-vlan-esxi2-static]-static/from-[vlan-3791]-to-[vlan-3799]]

status :

to : vlan-3799

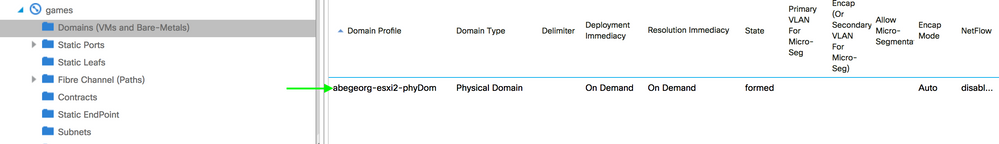

The EPG-vnid mismatch occurred because the VLAN pools overlap: vlan-3799 falls in both blocks, and each leaf derived the vnid from a different one.
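The derivation for both leaf switches can be reproduced with a few lines of Python. This is only a sketch of the base-plus-index rule described in this section; the function names are made up for illustration, and the base and block values are the ones shown in the stp.AllocEncapBlkDef output.

```python
def vlan_number(encap: str) -> int:
    """Turn an encap string such as 'vlan-3799' into the integer 3799."""
    return int(encap.split("-", 1)[1])


def derive_epg_vnid(base: int, block_from: str, block_to: str, encap: str) -> int:
    """EPG vnid = block base + zero-based position of the encap in the block."""
    first, last, vlan = (vlan_number(x) for x in (block_from, block_to, encap))
    if not first <= vlan <= last:
        raise ValueError(f"{encap} is outside {block_from}..{block_to}")
    return base + (vlan - first)


# leaf101 used the esxi3 block (base 13392, vlan-3799 to vlan-3810).
leaf101_vnid = derive_epg_vnid(13392, "vlan-3799", "vlan-3810", "vlan-3799")

# leaf102 used the esxi2 block (base 13292, vlan-3791 to vlan-3799).
leaf102_vnid = derive_epg_vnid(13292, "vlan-3791", "vlan-3799", "vlan-3799")

print(leaf101_vnid, leaf102_vnid)  # 13392 13300
```

The same encap, vlan-3799, yields two different vnids depending on which block it is resolved from.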

Review the Physical domain association and VLAN pools

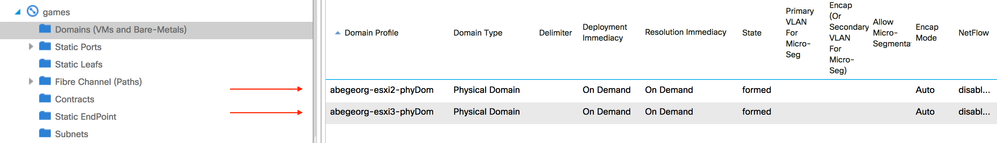

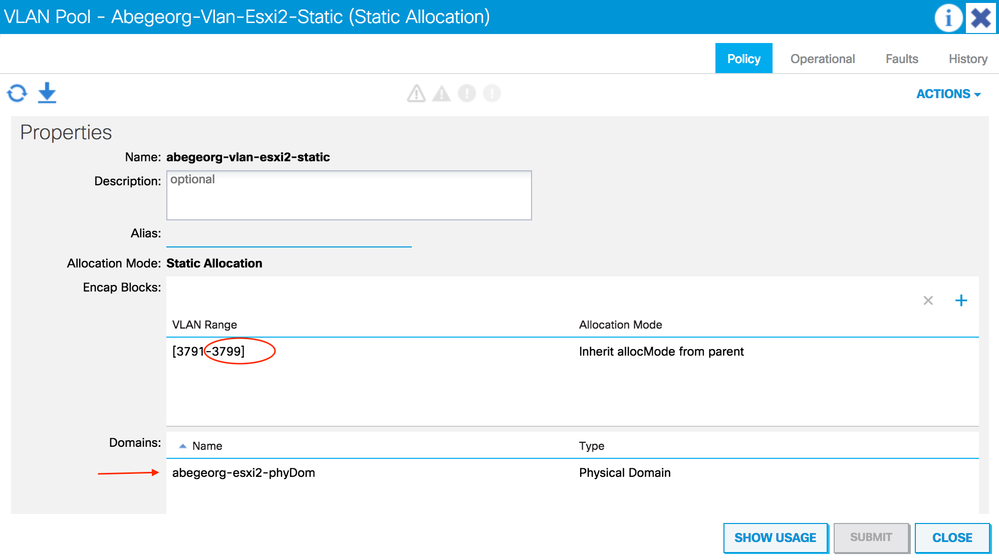

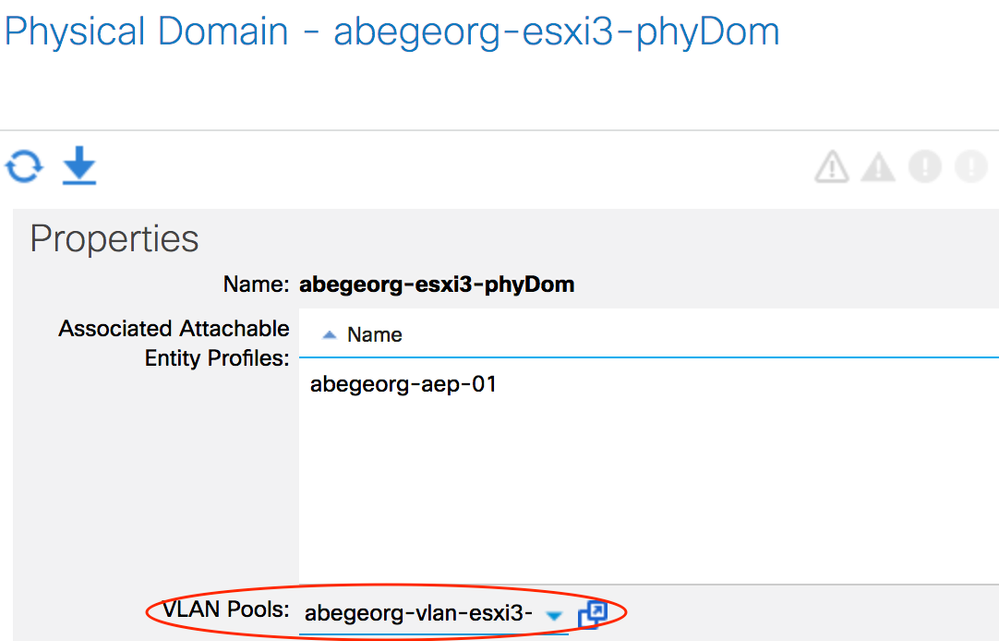

Two physical domains are associated with this EPG (abegeorg-esxi2-phyDom and abegeorg-esxi3-phyDom).

Let's review these domains and their associated VLAN pools.

The domain abegeorg-esxi2-phyDom uses vlan pool abegeorg-vlan-esxi2-static (vlan-3791 to vlan-3799)

The domain abegeorg-esxi3-phyDom uses vlan pool abegeorg-vlan-esxi3-static (vlan-3799 to vlan-3810)
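A quick way to spot this kind of overlap before a migration is to compare the VLAN ranges of every pool pairwise. The sketch below assumes the pool ranges have already been gathered into a dictionary by hand; it does not query the APIC, and the function name overlapping_vlans is hypothetical.

```python
from itertools import combinations

# Pool name -> (first VLAN, last VLAN), taken from the two pools above.
pools = {
    "abegeorg-vlan-esxi2-static": (3791, 3799),
    "abegeorg-vlan-esxi3-static": (3799, 3810),
}


def overlapping_vlans(a, b):
    """Return the VLAN IDs shared by two inclusive (first, last) ranges."""
    low, high = max(a[0], b[0]), min(a[1], b[1])
    return list(range(low, high + 1)) if low <= high else []


overlaps = {}
for (name_a, range_a), (name_b, range_b) in combinations(pools.items(), 2):
    shared = overlapping_vlans(range_a, range_b)
    if shared:
        overlaps[(name_a, name_b)] = shared

print(overlaps)
```

For the two pools in this example, the only shared VLAN is 3799, which is exactly the encap used by the affected EPG.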

Resolution

Manually resolve the VLAN overlap. Please note that this is a disruptive operation and will cause traffic drops. In this example, abegeorg-esxi3-phyDom was redundant, so I removed it. Please review your requirements and make the changes accordingly.

Verification

Now, let's verify the derived EPG-vnid. It is now the same on both leaf101 and leaf102: 13300.

leaf101# show system internal epm endpoint ip 10.10.79.1

MAC : 0050.5690.a99e ::: Num IPs : 1

IP# 0 : 10.10.79.1 ::: IP# 0 flags :

Vlan id : 34 ::: Vlan vnid : 13300 ::: VRF name : abegeorg:webinar

BD vnid : 15597462 ::: VRF vnid : 2129922

Phy If : 0x16000002 ::: Tunnel If : 0

Interface : port-channel3

Flags : 0x80004c05 ::: sclass : 10941 ::: Ref count : 5

EP Create Timestamp : 04/08/2018 19:48:09.626467

EP Update Timestamp : 04/08/2018 19:51:56.597519

EP Flags : local|vPC|IP|MAC|sclass|timer|

::::

leaf101#

leaf101# vsh_lc

vsh_lc

module-1#

module-1# show system internal eltmc info vlan brief | egrep "Fabric_enc|3799"

VlanId HW_VlanId Type Access_enc Access_enc Fabric_enc Fabric_enc BDVlan

34 41 FD_VLAN 802.1q 3799 VXLAN 13300 33

module-1#

leaf102# show system internal epm endpoint ip 10.10.79.1

MAC : 0050.5690.a99e ::: Num IPs : 1

IP# 0 : 10.10.79.1 ::: IP# 0 flags :

Vlan id : 50 ::: Vlan vnid : 13300 ::: VRF name : abegeorg:webinar

BD vnid : 15597462 ::: VRF vnid : 2129922

Phy If : 0x16000002 ::: Tunnel If : 0

Interface : port-channel3

Flags : 0x80004c05 ::: sclass : 10941 ::: Ref count : 5

EP Create Timestamp : 04/08/2018 19:48:09.626467

EP Update Timestamp : 04/08/2018 19:54:22.823590

EP Flags : local|vPC|IP|MAC|sclass|timer|

leaf102#

leaf102# vsh_lc

vsh_lc

module-1# show system internal eltmc info vlan brief | egrep "Fabric_enc|3799"

VlanId HW_VlanId Type Access_enc Access_enc Fabric_enc Fabric_enc BDVlan

50 35 FD_VLAN 802.1q 3799 VXLAN 13300 49

module-1#

This restores connectivity to a stable state.

Hey Abey,

Awesome post,

Can you give a tip? We are doing a migration to ACI, and when the legacy network sends a TCN to ACI, ACI flushes the MAC table. That is normal behavior, but in my case, after the flush, ACI takes about 1 minute to relearn the endpoints.

I saw something like this in the epm-trace:

mac = 00f2.8b2b.7d40; num_ips = 0

vlan = 100; epg_vnid = 13893; bd_vnid = 15237055; vrf_vnid = 2260992

ifindex = 0x16000007; tun_ifindex = 0; vtep_tun_ifindex = 0

sclass = 16417; ref_cnt = 4

flags = local|vPC|MAC|sclass|timer|

create_ts = 09/19/2018 21:37:09.484093

upd_ts = 09/19/2018 21:40:20.890612

[2018 Sep 20 04:42:55.057801333:1969235489:epm_process_ep_del:2401:t] Deleting EP from the DB

bd = 15237055; mac = 00f2.8b2b.7d40 Active EP count on if 0x16000007 is 0

[2018 Sep 20 04:42:55.057809697:1969235492:epm_del_mac_ep_obj:2182:t] Deleting MacEp object of (BD 15237055, MAC 00f2.8b2b.7d40) vlan_id 100

[2018 Sep 20 04:42:55.057816026:1969235493:epm_attach_to_dirty_vlan_list:4326:t] Adding vlan 100 to dirty list with version 6598

[2018 Sep 20 04:42:55.057817606:1969235494:epm_attach_to_dirty_vlan_list:4340:t] vlan 100 is already in dirty list with ver 6598

[2018 Sep 20 04:42:55.057819622:1969235495:epm_process_ep_del:2313:t] Delete req rcvd for EP:

[2018 Sep 20 04:42:55.057823264:1969235496:epm_debug_dump_epm_ep:398:t] log_collect_ep_event

[2018 Sep 20 04:42:56.022114100:1969258110:epm_pre_process_ep_req:4064:E] Failed to pre-process EP req, err : Endpoint request rejected as learning is disabled

[2018 Sep 20 04:42:56.022115620:1969258111:epm_process_ep_req:4431:E] EP req pre processing failed, err : Endpoint request rejected as learning is disabled

[2018 Sep 20 04:42:56.022116678:1969258112:epm_mcec_ep_req_handler:459:E] Error processing EP# 10 in EP request from peer EPM

[2018 Sep 20 04:42:56.022118793:1969258113:epm_debug_dump_epm_ep_req:359:t] log_collect_ep_event

req_src=EPM_SAP_PEER

optype = ADD; mac = 00f2.8b2b.7d40; num_ips = 0

vlan = 262; epg_vnid = 13893; bd_vnid = 15237055; vrf_vnid = 2260992

ifindex = 0x7e000002; tep_ip_tun_ifindex = 0; sclass = 16417

flags = local|vPC|MAC|sclass|

ep_req_ts = 09/19/2018 21:41:35.831981

[2018 Sep 20 04:42:56.022123851:1969258116:epm_mcec_pre_process_ep_req:772:t] Don't learn flag set, ignoring EP req

[2018 Sep 20 04:42:56.022125662:1969258117:epm_pre_process_ep_req:4064:E] Failed to pre-process EP req, err : Endpoint request rejected as learning is disabled

[2018 Sep 20 04:42:56.022127164:1969258118:epm_process_ep_req:4431:E] EP req pre processing failed, err : Endpoint request rejected as learning is disabled

[2018 Sep 20 04:42:56.022128211:1969258119:epm_mcec_ep_req_handler:459:E] Error processing EP# 11 in EP request from peer EPM

[2018 Sep 20 04:42:56.022130311:1969258120:epm_debug_dump_epm_ep_req:359:t] log_collect_ep_event
