- Unable to initially discover Switches on DCNM version 11.3(1)

06-03-2020 01:55 PM - edited 06-22-2020 02:14 AM

Introduction

Cisco Data Center Network Manager (DCNM) version 11.3(1) and earlier releases use the interface called Out-of-Band Network (eth1 in DCNM Linux shell) to communicate, push and sync configurations with the Switching fabric. To do this, DCNM first needs to discover the Switching Fabric by either manually specifying the pre-provisioned Switches' mgmt0 IP address or by auto-discovering these Switches using the POAP (Power On Auto Provisioning) process.

DCNM's Management Network interface (eth0 in the DCNM Linux shell), on the other hand, exposes the DCNM Web GUI and API that network administrators use to manage the DCNM controller.

Symptoms

During the setup of a new Switching fabric in DCNM, the latter will be unable to discover the Switches if their mgmt0 interfaces are not Layer 2 adjacent with DCNM's eth1 interface.

In other words, discovery fails when DCNM's eth1 is not in the same broadcast domain and IP subnet as the Switches' mgmt0.

As a reminder, on the Cisco Nexus family of Switches the mgmt0 interface always belongs to a dedicated VRF simply called "management". This isolates management traffic inside the Switch from production control-plane and data-plane traffic.

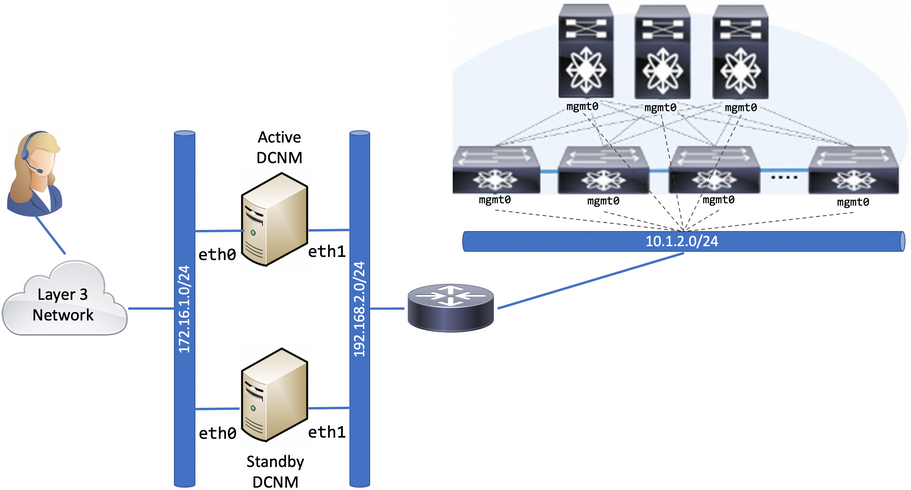

Let's take a look at the following network topology:

This specific setup consists of two DCNM instances in Native HA mode (Active/Standby). Native HA mode requires the eth0 and eth1 interfaces of the two DCNM instances to be Layer 2 adjacent. Therefore, each pair of interfaces is set up with IP addresses in the same subnet.

Regardless of the DCNM deployment mode (Standalone or Native HA), notice that DCNM's Out-of-Band Network (eth1), part of the 192.168.2.0/24 subnet, connects to a Router. The same Router also connects to a different subnet, 10.1.2.0/24, allocated for the Switches' mgmt0 IP addressing.

In this case, DCNM Out-of-Band Network is not in the same broadcast domain and IP subnet as the Switches' mgmt0 subnet.
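The subnet comparison above can be checked mechanically. The following is a minimal sketch, not part of DCNM: the helper names `ip_to_int` and `same_subnet` are hypothetical, and the addresses are the ones used in this example (192.168.2.101 is the eth1 address seen in the ping output later in this article).

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    # Split on dots inside a subshell so the caller's IFS stays untouched.
    (
        IFS=.
        set -- $1
        echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    )
}

# Succeed (exit 0) when both addresses fall inside the same /prefix network.
# Arguments: address A, address B, prefix length.
same_subnet() {
    local a b mask
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# DCNM eth1 (192.168.2.101) versus a switch mgmt0 address (10.1.2.200).
if same_subnet 192.168.2.101 10.1.2.200 24; then
    echo "same subnet: no static route needed"
else
    echo "different subnets: DCNM needs a route over eth1"
fi
```

Sharing a subnet is necessary but not sufficient for Layer 2 adjacency; the two interfaces must also sit in the same broadcast domain (VLAN).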

For DCNM to discover the Switching Fabric, a Fabric must first be created in the Web GUI under Control > Fabrics > Fabric Builder.

Once done, in the same Fabric Builder section, the new fabric can be selected.

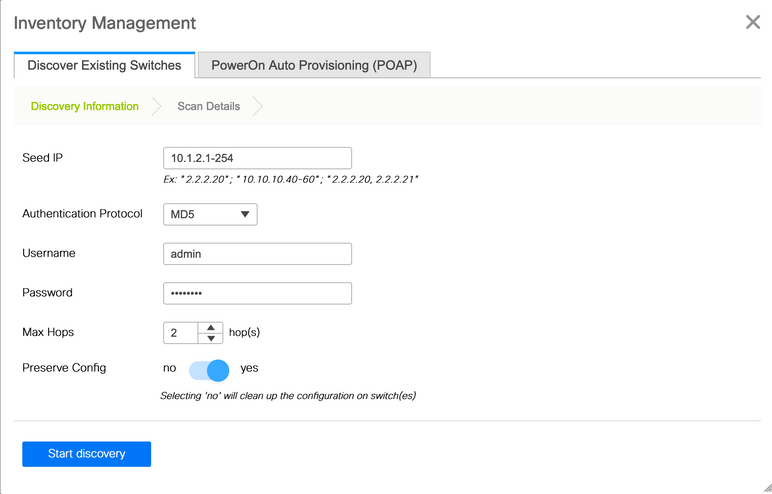

In this new fabric, clicking on Add switches opens the following window:

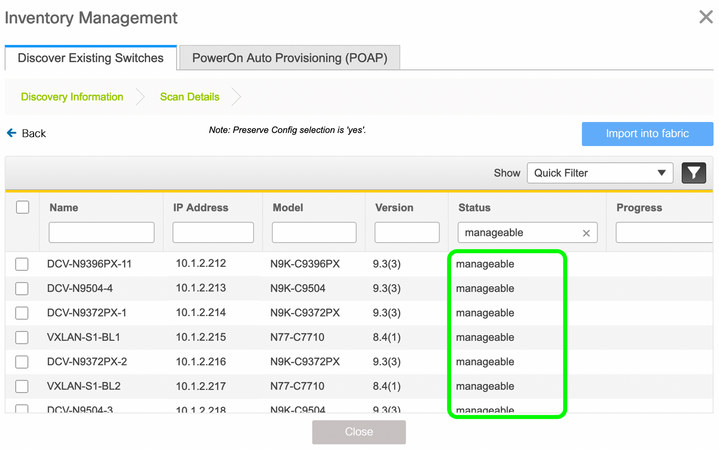

In this case we have selected the Discover Existing Switches tab. This implies that the Switches have already been configured with a proper IP address for their mgmt0 interface, a default route in the VRF called "management" and, last but not least, username and password credentials.

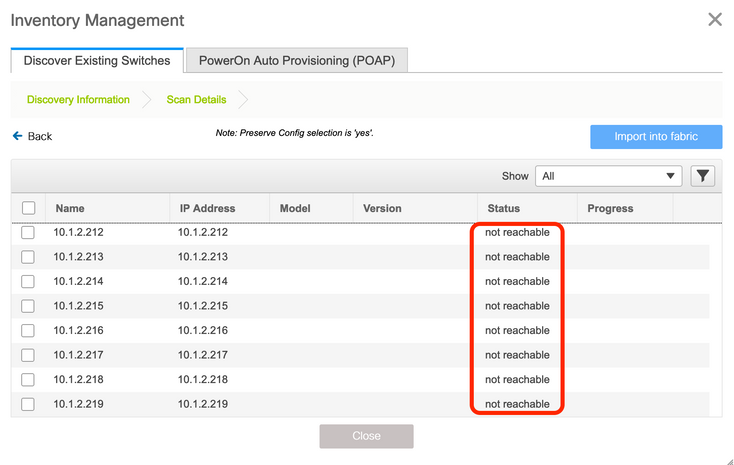

Clicking on Start discovery makes DCNM try to communicate with the Switches' mgmt0 IP addresses. A clear symptom that this process has failed is that, after no more than a couple of minutes, the screen shows these results:

Under the Status column, the not reachable state is seen for all the Switches' IP addresses.

Diagnosis

The first step to diagnose this issue is to SSH into the underlying Linux shell of DCNM via CLI.

On DCNM version 11.3(1) the username is sysadmin and the password is the one specified during the DCNM installation process.

On DCNM versions earlier than 11.3(1), such as 11.2(1), the username is root and the password is the one specified during the DCNM installation process.

Once in the Linux shell, try pinging the IP addresses of the Switches' mgmt0 or try with their default gateway which in this case is the Router IP 10.1.2.1. The expectation is to see the ping test failing.

Since my DCNM deployment is running 11.3(1), I am using sysadmin as the username for the SSH session.

    Laptop:~ hecserra$ ssh sysadmin@dcnm1
    sysadmin@dcnm1's password:
    [sysadmin@dcnm1 ~]$ ping 10.1.2.200
    PING 10.1.2.200 (10.1.2.200) 56(84) bytes of data.
    ^C
    --- 10.1.2.200 ping statistics ---
    6 packets transmitted, 0 received, 100% packet loss, time 4999ms

    [sysadmin@dcnm1 ~]$ ping 10.1.2.1
    PING 10.1.2.1 (10.1.2.1) 56(84) bytes of data.
    ^C
    --- 10.1.2.1 ping statistics ---
    7 packets transmitted, 0 received, 100% packet loss, time 6001ms

    [sysadmin@dcnm1 ~]$

The eth1 gateway, 192.168.2.1, does answer when pinged from eth1, so local connectivity on the Out-of-Band Network is fine:

    [sysadmin@dcnm1 ~]$ ping 192.168.2.1 -I eth1
    PING 192.168.2.1 (192.168.2.1) from 192.168.2.101 eth1: 56(84) bytes of data.
    64 bytes from 192.168.2.1: icmp_seq=1 ttl=255 time=0.407 ms
    64 bytes from 192.168.2.1: icmp_seq=2 ttl=255 time=0.370 ms
    64 bytes from 192.168.2.1: icmp_seq=3 ttl=255 time=0.437 ms
    64 bytes from 192.168.2.1: icmp_seq=4 ttl=255 time=0.450 ms
    64 bytes from 192.168.2.1: icmp_seq=5 ttl=255 time=0.449 ms
    ^C
    --- 192.168.2.1 ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 3999ms
    rtt min/avg/max/mdev = 0.370/0.422/0.450/0.037 ms
    [sysadmin@dcnm1 ~]$
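When several addresses need testing, the manual pings above can be wrapped in a small loop. This is only a sketch: `check_targets` is a hypothetical helper name, and the addresses in the usage comment are the ones from this example.

```shell
#!/usr/bin/env bash
# Probe each target with a short ping and print one status line per address.
# -c 2 sends two echo requests; -W 1 waits at most one second for each reply.
check_targets() {
    local target
    for target in "$@"; do
        if ping -c 2 -W 1 "$target" > /dev/null 2>&1; then
            echo "$target reachable"
        else
            echo "$target not reachable"
        fi
    done
}

# Example run on the DCNM shell (eth1 gateway, mgmt0 gateway, one switch):
#   check_targets 192.168.2.1 10.1.2.1 10.1.2.200
```

If the eth1 gateway answers while the mgmt0 addresses do not, the problem is most likely routing rather than cabling or addressing on eth1.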

Solution

By examining the Routing Table of DCNM in the Linux shell, we see that there is no route to reach the Switches' mgmt0 subnet, which is the 10.1.2.0/24. Remember, DCNM is not VRF aware and the only default route it contains in its Routing Table is for the eth0 -Management Network- interface.

    [sysadmin@dcnm1 ~]$ route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         172.16.1.1      0.0.0.0         UG    0      0        0 eth0
    192.168.2.0     0.0.0.0         255.255.255.0   U     0      0        0 eth1
    172.16.1.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
    172.17.0.0      0.0.0.0         255.255.252.0   U     0      0        0 docker0
    172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker_gwbridge
    [sysadmin@dcnm1 ~]$
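To see which entry of that table a given destination would actually use, the longest-prefix match the kernel performs can be imitated on the `route -n` output. The sketch below is illustrative only; `ip_to_int` and `lookup_route` are hypothetical helper names, and the parser assumes the eight-column `route -n` layout shown above.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    (
        IFS=.
        set -- $1
        echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    )
}

# Read `route -n` output on stdin and print "<iface> <gateway>" for the most
# specific (longest-mask) entry that matches the destination given in $1.
lookup_route() {
    local target best_mask best dest gw mask flags metric ref use iface d m
    target=$(ip_to_int "$1")
    best_mask=-1
    best="no route"
    while read -r dest gw mask flags metric ref use iface; do
        case "$dest" in
            [0-9]*.[0-9]*.[0-9]*.[0-9]*) ;;   # data row
            *) continue ;;                     # header or blank line
        esac
        d=$(ip_to_int "$dest")
        m=$(ip_to_int "$mask")
        # The entry matches when the masked target equals the destination.
        if [ $(( target & m )) -eq "$d" ] && [ "$m" -gt "$best_mask" ]; then
            best_mask=$m
            best="$iface $gw"
        fi
    done
    echo "$best"
}

# Example on the DCNM shell:
#   route -n | lookup_route 10.1.2.200
```

With the table shown above, the only matching entry for 10.1.2.200 is the default route, so the lookup prints `eth0 172.16.1.1`: the traffic leaves through the Management Network instead of eth1.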

The solution to this dilemma is just to manually add a route to the 10.1.2.0/24 subnet over eth1's gateway. To make changes persist across reloads, there is a script available in DCNM to aid in this task.

First, elevate your privileges in Linux to the root account using the sudo su Linux command. Linux will ask you for your sysadmin or root password one more time.

Once done, the script in question is /root/packaged-files/scripts/configureOob.sh

    [sysadmin@dcnm1 ~]$ sudo su
    Password:
    [root@dcnm1 sysadmin]# /root/packaged-files/scripts/configureOob.sh
    Usage: configureOob.sh [-n|--subnet subnet/masklen] [-g|--gateway gatewayip] [-i|--host host-ip/masklen]
    [root@dcnm1 sysadmin]#

If you configured a Gateway for eth1 when installing DCNM, simply invoke this script with the -n parameter, specifying the subnet you would like to add. In this case, it is 10.1.2.0/24.

    [root@dcnm1 sysadmin]# /root/packaged-files/scripts/configureOob.sh -n 10.1.2.0/24
    Validating subnet route input, if invalid, script exits
    Done configuring subnet route
    Done.
    [root@dcnm1 sysadmin]#

Also, note that if you were to add an entry for a /32 host route, you would need to run the script specifying the -i parameter instead.

[root@dcnm1 sysadmin]# /root/packaged-files/scripts/configureOob.sh -i 10.1.2.100/32
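When several prefixes have to be added, it can help to validate them and preview the commands before running anything as root. The sketch below only prints the `configureOob.sh` invocations; `is_ipv4_cidr` and `plan_oob_routes` are hypothetical helper names, and the choice of `-i` for /32 entries and `-n` otherwise follows the usage described above.

```shell
#!/usr/bin/env bash
# Succeed when $1 looks like a valid IPv4 prefix such as 10.1.2.0/24.
is_ipv4_cidr() {
    local addr len octet
    case "$1" in
        */*) ;;
        *) return 1 ;;
    esac
    addr=${1%/*}
    len=${1#*/}
    case "$len" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$len" -le 32 ] || return 1
    # Require exactly four dot-separated fields with no empty field.
    case "$addr" in
        *.*.*.*.*|*.|.*|*..*) return 1 ;;
        *.*.*.*) ;;
        *) return 1 ;;
    esac
    # Each field must be a number no larger than 255.
    (
        IFS=.
        for octet in $addr; do
            case "$octet" in
                ''|*[!0-9]*) exit 1 ;;
            esac
            [ "$octet" -le 255 ] || exit 1
        done
    )
}

# Print (rather than run) one configureOob.sh call per valid prefix.
plan_oob_routes() {
    local prefix flag
    for prefix in "$@"; do
        if is_ipv4_cidr "$prefix"; then
            case "$prefix" in
                */32) flag=-i ;;   # host route
                *)    flag=-n ;;   # subnet route
            esac
            echo "/root/packaged-files/scripts/configureOob.sh $flag $prefix"
        else
            echo "skipping invalid prefix: $prefix" >&2
        fi
    done
}

# Example: plan_oob_routes 10.1.2.0/24 10.1.2.100/32
```

Printing the commands first keeps the step reviewable; the output can be pasted into the root shell once it looks right.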

Examine DCNM Routing Table one more time. An entry for the 10.1.2.0/24 should be there now.

    [sysadmin@dcnm1 ~]$ route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         172.16.1.1      0.0.0.0         UG    0      0        0 eth0
    10.1.2.0        192.168.2.1     255.255.255.0   UG    0      0        0 eth1
    192.168.2.0     0.0.0.0         255.255.255.0   U     0      0        0 eth1
    172.16.1.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
    172.17.0.0      0.0.0.0         255.255.252.0   U     0      0        0 docker0
    172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker_gwbridge
    [sysadmin@dcnm1 ~]$

A ping test to the Switches' mgmt0 IP address is now successful.

    [root@dcnm1 sysadmin]# ping 10.1.2.200
    PING 10.1.2.200 (10.1.2.200) 56(84) bytes of data.
    64 bytes from 10.1.2.200: icmp_seq=1 ttl=63 time=5.54 ms
    64 bytes from 10.1.2.200: icmp_seq=2 ttl=63 time=0.567 ms
    64 bytes from 10.1.2.200: icmp_seq=3 ttl=63 time=0.646 ms
    ^C
    --- 10.1.2.200 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2002ms
    rtt min/avg/max/mdev = 0.567/2.251/5.541/2.326 ms
    [root@dcnm1 sysadmin]# ping 10.1.2.1
    PING 10.1.2.1 (10.1.2.1) 56(84) bytes of data.
    64 bytes from 10.1.2.1: icmp_seq=2 ttl=255 time=0.624 ms
    64 bytes from 10.1.2.1: icmp_seq=3 ttl=255 time=0.547 ms
    64 bytes from 10.1.2.1: icmp_seq=4 ttl=255 time=0.737 ms
    ^C
    --- 10.1.2.1 ping statistics ---
    4 packets transmitted, 3 received, 25% packet loss, time 2999ms
    rtt min/avg/max/mdev = 0.547/0.636/0.737/0.078 ms
    [root@dcnm1 sysadmin]#

You can take a quick look at /etc/sysconfig/network-scripts/route-eth1. Linux reads this file when it brings up eth1 during boot, so the static route we added should persist across reloads.

    [root@dcnm network-scripts]# cat /etc/sysconfig/network-scripts/route-eth1
    10.1.2.0/24 via 192.168.2.1 dev eth1
    [root@dcnm network-scripts]#
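A route can be present in the running table without being saved in this file, or the other way around. A minimal sketch for checking the persistent side is shown here; `route_is_persistent` is a hypothetical helper name, and it assumes one route per line with the prefix as the first field, as in the file above.

```shell
#!/usr/bin/env bash
# Succeed when the route file ($1) contains an entry whose first field is
# exactly the prefix given in $2.
route_is_persistent() {
    [ -r "$1" ] || return 1
    awk -v p="$2" '$1 == p { found = 1 } END { exit !found }' "$1"
}

# Example on the DCNM shell:
#   route_is_persistent /etc/sysconfig/network-scripts/route-eth1 10.1.2.0/24 \
#       && echo "saved for next boot"
# The running table can be checked separately with:
#   ip route show exact 10.1.2.0/24
```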

The same script can also update the Default Gateway of the eth1 interface when given the -g parameter.

    [root@dcnm1 sysadmin]# /root/packaged-files/scripts/configureOob.sh -g 192.168.2.3
    Validating Gateway IP ...
    {"ResponseType":0,"Response":"Refreshed"}
    [root@dcnm1 sysadmin]#

To validate the gateway change, query the status endpoint with the command below:

    [root@dcv-dcnm-vxlan-pod1-1 sysadmin]# curl localhost:35000/afw/server/status
    {"ResponseType":0,"Response":{"AfwServerEnabled":true,"AfwServerReady":true,"InbandSubnet":"0.0.0.0/0","InbandGateway":"0.0.0.0","InbandSubnetIPv6":"","InbandGatewayIPv6":"","OutbandSubnet":"192.168.2.0/24","OutbandGateway":"192.168.2.3"}}
    [root@dcv-dcnm-vxlan-pod1-1 sysadmin]#
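The gateway value can be pulled out of that single-line JSON response without extra tools. This is a rough sketch: `json_field` is a hypothetical helper, and it only handles flat, single-line responses with string values like the one shown above. A proper JSON parser such as `jq` would be more robust, if one happens to be installed.

```shell
#!/usr/bin/env bash
# Print the string value of key $1 from single-line JSON read on stdin.
# The key is matched together with its surrounding quotes, so asking for
# "InbandGateway" does not pick up "InbandGatewayIPv6".
json_field() {
    sed -n 's/.*"'"$1"'":"\([^"]*\)".*/\1/p'
}

# Example on the DCNM shell (-s hides the curl progress meter):
#   curl -s localhost:35000/afw/server/status | json_field OutbandGateway
```

For the response shown above, this prints `192.168.2.3`.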

Take into consideration that updating eth1's Gateway doesn't update the existing manually added routes in the Routing Table.

The script doesn't delete Static Routes. In such a situation, we need to delete the static route entries manually from the routing table and empty the /etc/sysconfig/network-scripts/route-eth1 file with the following commands:

    [root@dcnm1 sysadmin]# ip route delete 10.1.2.0/24 via 192.168.2.1
    [root@dcnm1 sysadmin]# cat /dev/null > /etc/sysconfig/network-scripts/route-eth1
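Emptying the file removes every persistent route on eth1. When other entries must be kept, a single line can be dropped instead. The sketch below is one possible approach, not something DCNM provides; `remove_persistent_route` is a hypothetical helper that assumes one route per line with the prefix as the first field.

```shell
#!/usr/bin/env bash
# Remove from the route file ($1) every line whose first field equals the
# prefix in $2, leaving all other entries in place.
remove_persistent_route() {
    local file prefix tmp status
    file=$1
    prefix=$2
    tmp=$(mktemp) || return 1
    if awk -v p="$prefix" '$1 != p' "$file" > "$tmp"; then
        # Write back through redirection so the original file keeps its
        # ownership and permissions.
        cat "$tmp" > "$file"
        status=$?
    else
        status=1
    fi
    rm -f "$tmp"
    return "$status"
}

# Example on the DCNM shell, as root:
#   remove_persistent_route /etc/sysconfig/network-scripts/route-eth1 10.1.2.0/24
```

The running routing table is unaffected by this; the `ip route delete` step is still needed for the active route.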

Conclusion

Since DCNM is now able to establish bidirectional communication with the Switches as verified with the ping test, the discovery process of pre-provisioned Switches is now successful in this case.

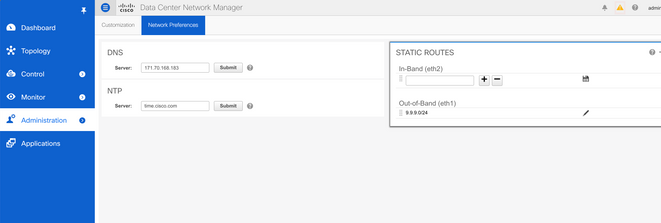

Thanks for writing this up, but from the DCNM 11.4 release onwards there is a better way to add static routes in DCNM.

Adding specific routes in DCNM must be done in one of two ways:

1] Preferred: via the GUI, where you can add specific routes over eth1 or eth2 as follows:

The advantage here is that the routes are synced across all nodes of the DCNM cluster including primary, secondary, and computes.

2] Using the appmgr update network-properties command. This needs to be applied individually on all DCNM/Compute nodes.

    appmgr update network-properties [-h|--help]
    appmgr update network-properties session {start|validate|apply|clear}
    appmgr update network-properties session show config [diffs|changes]
    appmgr update network-properties session show routes [diffs]
    appmgr update network-properties set ipv4 eth0 <ipv4 address> <netmask> [gateway]
    appmgr update network-properties set ipv4 eth1 <ipv4 address> <netmask> [gateway]
    appmgr update network-properties set ipv4 eth2 <ipv4 address> <netmask> <gateway>
    appmgr update network-properties set ipv6 eth0 <ipv6 address/prefix> [gateway]
    appmgr update network-properties set ipv6 eth1 <ipv6 address/prefix> [gateway]
    appmgr update network-properties set ipv6 eth2 <ipv6 address/prefix> <gateway>
    appmgr update network-properties set hostname local <fqdn>
    appmgr update network-properties add dns {<ipv4 address>|<ipv6 address>}
    appmgr update network-properties add ntp {<ipv4 address>|<ipv6 address>|<name>}
    appmgr update network-properties add route ipv4 eth1 <ipv4 network/prefix>
    appmgr update network-properties add route ipv4 eth2 <ipv4 network/prefix>
    appmgr update network-properties add route ipv6 eth1 <ipv6 network/prefix>
    appmgr update network-properties add route ipv6 eth2 <ipv6 network/prefix>
    appmgr update network-properties delete dns {<ipv4 address>|<ipv6 address>}
    appmgr update network-properties delete ntp {<ipv4 address>|<ipv6 address>|<name>}
    appmgr update network-properties delete route ipv4 eth1 <ipv4 network/prefix>
    appmgr update network-properties delete route ipv4 eth2 <ipv4 network/prefix>
    appmgr update network-properties delete route ipv6 eth1 <ipv6 network/prefix>
    appmgr update network-properties delete route ipv6 eth2 <ipv6 network/prefix>
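As an illustration of how these subcommands might fit together for the 10.1.2.0/24 route from the article, the sketch below prints a command sequence instead of executing it. The ordering (session start, add route, session validate, session apply) is an assumption inferred from the subcommand names in the usage listing, and `plan_appmgr_routes` is a hypothetical helper; check the DCNM documentation for your release before running the printed commands.

```shell
#!/usr/bin/env bash
# Print an appmgr command sequence that adds IPv4 routes over one interface.
# Arguments: interface name (eth1 or eth2), then one or more IPv4 prefixes.
plan_appmgr_routes() {
    local iface prefix
    iface=$1
    shift
    echo "appmgr update network-properties session start"
    for prefix in "$@"; do
        echo "appmgr update network-properties add route ipv4 $iface $prefix"
    done
    echo "appmgr update network-properties session validate"
    echo "appmgr update network-properties session apply"
}

# Example: plan_appmgr_routes eth1 10.1.2.0/24
```

Since this method applies per node, the same sequence would have to be repeated on every DCNM and Compute node.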

Great help!! Thank you

Have one more question:

1. What else can be checked if DCNM's eth1 and the switches' mgmt0 are on the same subnet but discovery still fails?
