
VMware ESXi 4.0 Installation on UCS Blade Server with UCS Manager KVM


Posted on 10-15-2009 01:27 PM; edited on 03-25-2019 01:23 PM by ciscomoderator


- Introduction

- Terminologies

- BMC - Baseboard Management Controller

- Using KVM Connection to Setup the Blade Server

Introduction

Although it is not required, readers are strongly encouraged to first become familiar with the concepts discussed in the following document:

Deploying UCS Blade Server with UCS Manager for Virtualization

Once the blade server has been provisioned with UCS Manager and the necessary configuration, such as service profile creation, is complete, the next step is to configure and deploy the blade server for its intended applications. Because blade servers are well suited to virtualization, this document covers VMware ESXi installation. The concepts discussed here also apply to non-VMware hypervisors and to installing other vendors' software.

During this setup, no physical keyboard, mouse, or monitor was attached to the blade server's physical KVM port. This document covers only installation through the software KVM built into UCS Manager, although there are other ways to install software on the blade server (for instance, from a USB DVD drive attached to the blade).

Terminologies

BMC - Baseboard Management Controller

The Baseboard Management Controller (BMC), also called the service processor, resides on each blade and provides OS-independent and pre-OS management. The BMC monitors and manages a single blade in the UCS chassis. It must be assigned an IP address so that the UCS Manager software KVM can connect to it. UCS Manager communicates with the BMC to configure the blade, and the BMC handles tasks such as BIOS upgrades and downgrades and the assignment of MAC addresses and WWNs.

Using KVM Connection to Setup the Blade Server

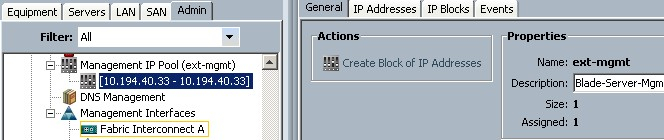

Each physical blade supports remote KVM and remote media access. This is made possible by assigning IP addresses to the cut-through interfaces that correspond to each blade's BMC. Typically, these addresses are configured on the same subnet as the UCS Manager management IP address. The pool is created from the Admin tab by selecting "Management IP Pool".

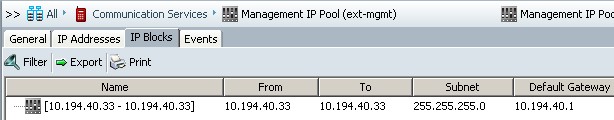

In our example, the IP address 10.194.40.208 is used to connect to UCS Manager, and 10.194.40.33 is assigned to the "BMC Management IP Pool" so that UCS Manager can connect to the blade server through the KVM. The same address is also used for remote media access.

Note that our example pool contains only one IP address because only one blade is being configured; one IP address is required per blade server.
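The pool logic described above amounts to handing out one address per blade from a contiguous block on the management subnet. The Python sketch below models that with the standard `ipaddress` module. It is only an illustration: UCS Manager performs the real allocation, the helper name `allocate_bmc_ips` is hypothetical, and the /24 prefix for 10.194.40.x is an assumption.

```python
from __future__ import annotations

import ipaddress


def allocate_bmc_ips(subnet: str, first_ip: str, blades: list[str],
                     reserved: set[str]) -> dict[str, str]:
    """Hypothetical helper: assign one BMC address per blade.

    Addresses are taken in order starting at first_ip, must stay inside the
    subnet's usable host range, and skip anything in reserved (for example
    the UCS Manager management IP).
    """
    net = ipaddress.ip_network(subnet)
    current = ipaddress.ip_address(first_ip)
    skip = {ipaddress.ip_address(r) for r in reserved}
    assignments: dict[str, str] = {}
    for blade in blades:
        # Step over addresses that are already in use.
        while current in skip:
            current += 1
        # Refuse to hand out addresses outside the usable host range.
        if current not in net or current in (net.network_address, net.broadcast_address):
            raise ValueError(f"no free address left for {blade}")
        assignments[blade] = str(current)
        current += 1
    return assignments


# Assumed /24 subnet; 10.194.40.208 (UCS Manager) is kept out of the pool.
print(allocate_bmc_ips("10.194.40.0/24", "10.194.40.33",
                       ["chassis-1/blade-1"], {"10.194.40.208"}))
# {'chassis-1/blade-1': '10.194.40.33'}
```

Adding a second blade to the list would simply take the next free address, which mirrors the one-IP-per-blade rule.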

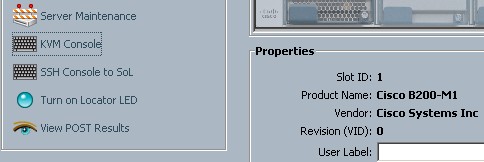

The following screen shows that chassis-1/blade-1 is assigned the IP address 10.194.40.33 for remote KVM and virtual media access.

The following screen shows the IP address block allocated for the BMC.

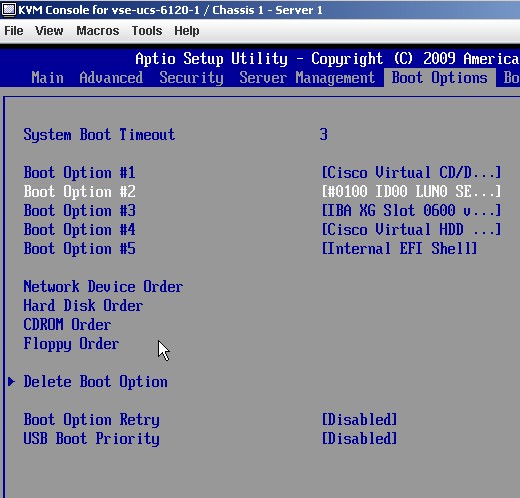

Now connect to the blade server's software-based KVM console:

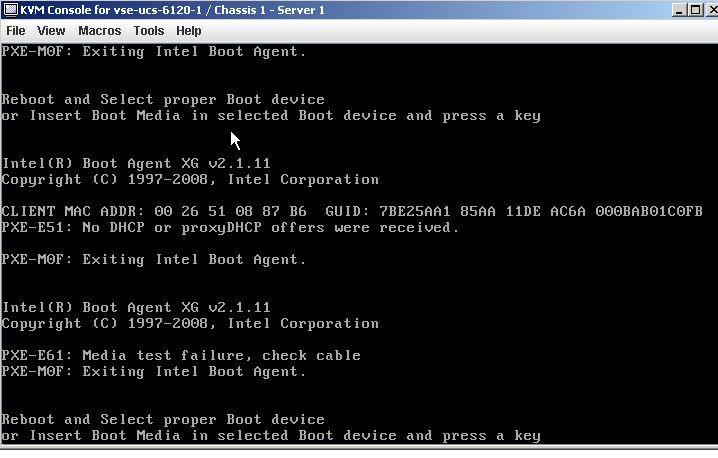

This should open a new dialog box that will show the console screen of the blade server.

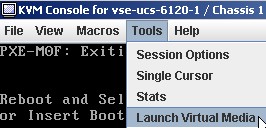

You can now launch virtual media from within the UCS Manager window and map the VMvisor (ESXi 4.0) ISO image as a virtual CD/DVD.

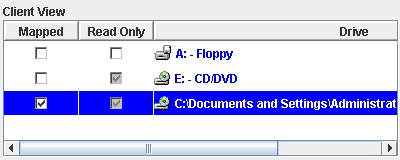

Macros are available for performing basic tasks, as shown below:

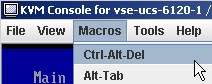

You may also set the boot order in the blade server's BIOS:
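Conceptually, the BIOS walks the boot order and hands control to the first device that holds bootable media, so the virtual CD/DVD needs to come ahead of any disk that already contains a bootable OS. The toy Python model below illustrates only that selection rule; `BootDevice`, `select_boot_device`, and the device names are hypothetical and are not UCS or BIOS identifiers.

```python
from dataclasses import dataclass


@dataclass
class BootDevice:
    name: str        # hypothetical label, e.g. "virtual-cdrom"
    has_media: bool  # True if the device currently holds bootable media


def select_boot_device(boot_order: list) -> str:
    """Toy model: return the first device in boot order with bootable media."""
    for device in boot_order:
        if device.has_media:
            return device.name
    raise RuntimeError("no bootable device found")


# During installation the ESXi ISO is mapped as a virtual CD/DVD
# and the local disk is still empty.
install_order = [BootDevice("virtual-cdrom", True), BootDevice("local-disk", False)]
print(select_boot_device(install_order))  # virtual-cdrom
```

After ESXi is installed the local disk becomes bootable, so listing it first (or unmapping the ISO) typically keeps the blade from booting back into the installer.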

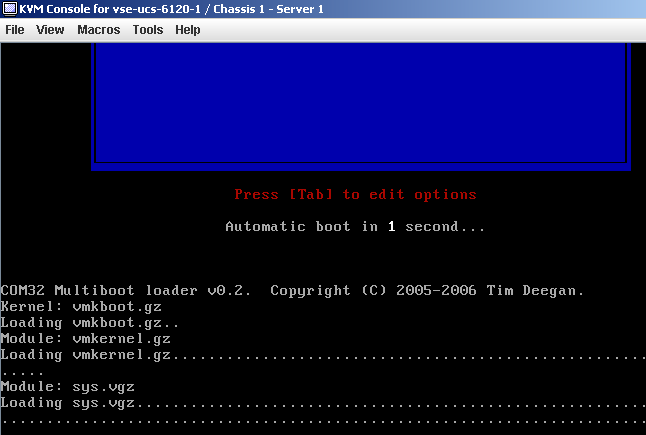

The blade should now detect the virtual CD/DVD and start booting from it.

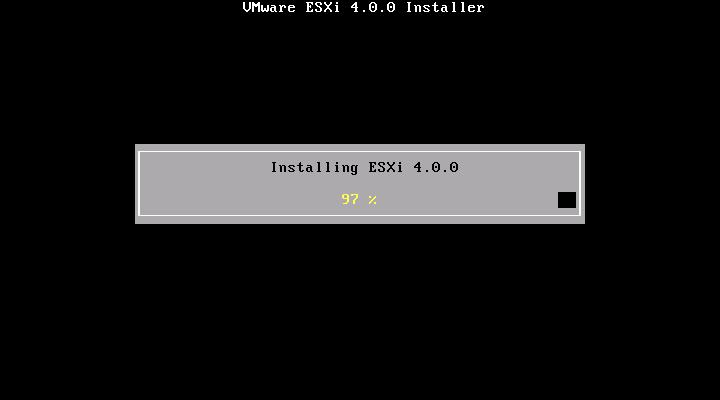

The ESXi 4.0 installation then proceeds:

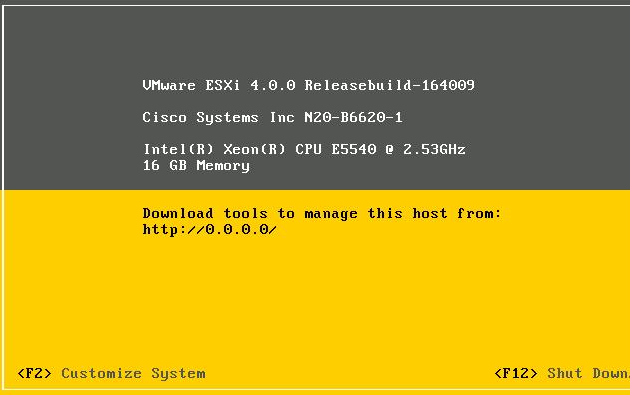

The installation is complete. The next step is to assign a management IP address to the ESXi server itself, so that you can access it directly or add it to your vCenter setup.
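Before entering the management address in the ESXi console, it can help to sanity-check that the host IP, prefix, and default gateway are consistent. The Python sketch below does that with the standard `ipaddress` module. The address 172.19.246.187 comes from this setup; the /24 prefix (read from the 172.19.246.x notation) and the gateway 172.19.246.1 are assumptions, and `check_mgmt_config` is a hypothetical helper.

```python
import ipaddress


def check_mgmt_config(ip_with_prefix: str, gateway: str) -> list:
    """Hypothetical helper: return problems with a host IP/prefix and gateway.

    An empty list means no obvious inconsistency was found.
    """
    problems = []
    iface = ipaddress.ip_interface(ip_with_prefix)
    gw = ipaddress.ip_address(gateway)
    net = iface.network
    if iface.ip in (net.network_address, net.broadcast_address):
        problems.append("host address is the network or broadcast address")
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    if gw == iface.ip:
        problems.append("gateway equals host address")
    return problems


# Assumed /24 prefix and gateway for the VMware management network.
print(check_mgmt_config("172.19.246.187/24", "172.19.246.1"))  # []
```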

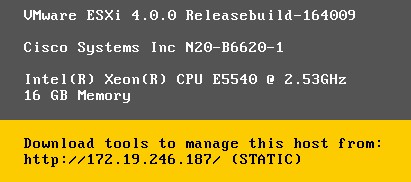

At this point, the VMware ESXi 4.0 hypervisor (VMware-VMvisor-Installer-4i.0.0-164009.x86_64.iso) is installed on the UCS blade server. You can now configure the hypervisor's IP address settings so that you can connect to it from vCenter and manage it with the VMware vCenter client. Note that no datastore has been created yet; once you log in to the ESXi server, you can create one using iSCSI, SAN, or NAS storage.

Once that is done, and provided your VLAN and network connectivity are in place, you should be able to reach (ping) the ESXi management address, 172.19.246.187.
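Sending ICMP pings from a script usually requires elevated privileges, so a portable alternative is to test a TCP connection to a port the ESXi host listens on. The Python sketch below assumes port 443 (HTTPS), which the VMware client uses to reach the host; `tcp_reachable` is a hypothetical helper, and the port may need adjusting for your environment.

```python
import socket


def tcp_reachable(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Connection refusals, timeouts, and routing errors all raise OSError,
    which is reported as unreachable.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_reachable("172.19.246.187")` should return True once the management address, VLAN, and uplinks are configured, and False (after the timeout) if something in the path is missing.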

Note that this setup uses two separate Ethernet networks:

UCS Management Network:

10.194.x.x network. In this setup it is used to connect to UCS Manager and for KVM over IP access to the blade. This is an out-of-band network that connects to the management port on the UCS 6120XP fabric interconnect. UCS Manager is the management application embedded in the UCS 6120XP fabric interconnect.

VMware Management Network:

172.19.246.x network. This in-band network is used to reach the VMware hypervisor so that it can be managed by vCenter. The same network can carry iSCSI traffic if needed, but this is not recommended. In our setup, ESXi 4 runs from the blade server's local hard drive (ESXi 4 can also boot from SAN, but that is not covered in this document). Logically, VMware management traffic passes through the UCS chassis fabric extender to the UCS 6120XP fabric interconnect, which in this setup is connected to a 10GE port on a CAT6K switch. The Ethernet uplink could also be a Nexus 5K or Nexus 7K switch.
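Keeping the out-of-band UCS management network and the in-band VMware management network distinct is easy to check programmatically. The Python sketch below reads the 10.194.x.x and 172.19.246.x notation as 10.194.0.0/16 and 172.19.246.0/24; those prefix lengths are an interpretation of the text, not confirmed values, and `classify` is a hypothetical helper.

```python
import ipaddress

# Assumed prefixes, interpreted from the x.x notation in this document.
NETWORKS = {
    "UCS management (out-of-band)": ipaddress.ip_network("10.194.0.0/16"),
    "VMware management (in-band)": ipaddress.ip_network("172.19.246.0/24"),
}


def classify(addr: str) -> str:
    """Return the label of the management network containing addr."""
    ip = ipaddress.ip_address(addr)
    for label, net in NETWORKS.items():
        if ip in net:
            return label
    return "unknown"


# The two management networks must not overlap.
ucs_net, vmw_net = NETWORKS.values()
if ucs_net.overlaps(vmw_net):
    raise ValueError("management networks overlap")

for addr in ("10.194.40.208", "10.194.40.33", "172.19.246.187"):
    print(addr, "->", classify(addr))
```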

Next Step: Understanding and Deploying UCS B-Series LAN Networking


Hey Shahzad, I can't see the pictures...


Hi Steve,

Thanks for pointing that out. I have opened a service request with the support forum team, and they are working to resolve it. I don't know why the images were not showing up, so I have re-attached them, and hopefully you can see them now.

Thanks,

Shahzad


Great article. Any tips or concerns with running ESXi 4 on UCS blades without local disks? I know ESXi 4 can boot from SAN but this is only experimental and not currently supported.

Is UCS able to provision a SAN LUN in the profile to use in BIOS? Since this mapping is from the hardware would ESXi just think this is no different from a local disk?

Thanks.


UCS can provision the FCoE adapter BIOS to support SAN boot like any traditional adapter. UCS Manager has a Boot Policy definition for SAN boot configurations that specifies the boot target and LUN number. Cisco IT has been running ESXi 4 SAN boot on UCS blades in a lab environment for several months and has not seen any specific issues with that configuration. ESXi sees the LUN just like any other disk.
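A SAN boot policy of the kind described above pairs an ordered boot list with a boot target (WWPN) and a LUN number. The Python model below is only an illustration of that structure: `SanBootTarget`, `BootPolicy`, the example WWPN, and the LUN value are all hypothetical and do not reflect the UCS Manager object model or API.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Eight colon-separated hex octets, e.g. 50:06:01:60:3b:a0:12:34
WWPN_RE = re.compile(r"(?:[0-9A-Fa-f]{2}:){7}[0-9A-Fa-f]{2}")


@dataclass
class SanBootTarget:
    wwpn: str  # WWPN of the storage array target port (hypothetical value below)
    lun: int   # LUN number the blade boots from

    def __post_init__(self) -> None:
        if not WWPN_RE.fullmatch(self.wwpn):
            raise ValueError(f"malformed WWPN: {self.wwpn}")
        if self.lun < 0:
            raise ValueError("LUN number must be non-negative")


@dataclass
class BootPolicy:
    name: str
    order: List[str] = field(default_factory=list)  # e.g. ["virtual-cdrom", "san"]
    san_target: Optional[SanBootTarget] = None

    def validate(self) -> None:
        """Reject a policy that lists SAN boot without defining a target."""
        if "san" in self.order and self.san_target is None:
            raise ValueError(f"policy {self.name!r}: SAN boot listed but no target defined")


# Hypothetical policy: boot ESXi from LUN 0 behind an illustrative target WWPN.
policy = BootPolicy("esxi-san-boot", ["san"], SanBootTarget("50:06:01:60:3b:a0:12:34", 0))
policy.validate()
```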


Hi,

I am not an expert on SAN and boot-from-SAN technologies, so I did some research and found that access to the SAN LUN for booting is handled by the boot BIOS on the HBA ASIC of the UCS CNA mezzanine adapters. The BIOS Initial Program Load (IPL) sequence hands off the boot procedure to this adapter BIOS code, just as it would to a NIC for a PXE boot. In any case, whatever you are booting (ESXi, in this case) must have the proper drivers for the storage device that communicates with the drives in order to fully boot, so there is no way of “disguising” the SAN controllers as a locally attached drive on the onboard array controller.

I hope this clarifies things a bit :-)

Shahzad